TRACKING SOLUTIONS OF TIME VARYING LINEAR INVERSE

PROBLEMS

Martin Kleinsteuber and Simon Hawe

Department of Electrical Engineering and Information Technology, Technische Universit

¨

at M

¨

unchen

Arcistraße 21, Munich, Germany

Keywords:

Linear inverse problems, Signal reconstruction, Time varying signal reconstruction, Discretized newton flow.

Abstract:

The reconstruction of a signal from only a few measurements, deconvolving, or denoising are only a few

interesting signal processing applications that can be formulated as linear inverse problems. Commonly, one

overcomes the ill-posedness of such problems by finding solutions which best match some prior assumptions.

These are often sparsity assumptions as in the theory of Compressive Sensing. In this paper, we propose

a method to track solutions of linear inverse problems. We assume that the corresponding solutions vary

smoothly over time. A discretized Newton flow allows to incorporate the time varying information for tracking

and predicting the subsequent solution. This prediction requires to solve a linear system of equation, which

is in general computationally cheaper than solving a new inverse problem. It may also serve as an additional

prior that takes the smooth variation of the solutions into account, or, as an initial guess for the preceding

reconstruction. We exemplify our approach with the reconstruction of a compressively sampled synthetic

video sequence.

1 INTRODUCTION

Linear inverse problems arise in various signal pro-

cessing applications like in signal deconvolution, de-

noising, interpolation, or signal reconstruction from

few measurements as in Compressive Sensing. Basi-

cally, they aim at computing or reconstructing a signal

s ∈ R

n

from a set of measurements y ∈ R

m

. Formally,

this measurement process can be written as

y = As +e, (1)

where the vector e ∈ R

m

models sampling errors and

noise, and A ∈ R

m×n

is the measurement matrix. In

most interesting cases, this problem is ill-posed be-

cause either the exact measurement process and hence

A is unknown as in image deblurring, or the number

of observations is much smaller than the dimension of

the signal, which is the case in Compressive Sensing.

In this paper, we restrict to the latter case where the

measurement matrix A is known.

Prior assumptions on the signal help to overcome

the ill-posedness of this problem and to stabilize the

solution. They are incorporated in the signal recov-

ery process by the minimization of a suitable function

g: R

n

→ R, leading to the optimization problem

minimize

s

?

∈R

n

g(s

?

)

subject to kAs

?

− yk

2

2

≤ ε, (2)

where ε is an estimated upper bound on the noise

power kek

2

2

. We refer to (Elad et al., 2007) for the

investigation of two conceptual different approaches,

the so called synthesis and the analysis approach.

One of the most commonly used priors which

we will use in our experiments, is based on the fact

that many interesting signals have a sparse or com-

pressible representation with respect to some (possi-

bly overcomplete) basis. This means that the entire

information about the signal is contained in only a few

transform coefficients. A common sparsity measure is

the `

p

-(pseudo-) norm

kvk

p

p

:=

∑

i

|v(i)|

p

, 0 ≤ p ≤ 1, (3)

where here and throughout the paper, v(i) denotes the

i

th

entry of the vector v. Furthermore, let D be a

linear transformation such that Ds is sparse. In that

case g(s

?

) = kDs

?

k

p

p

is a frequently used regulariza-

tion term for problem (2). For example, D may serve

as a basis transformation as in classical Compres-

sive Sensing, (Cand

`

es and Romberg, 2007; Donoho,

253

Kleinsteuber M. and Hawe S. (2012).

TRACKING SOLUTIONS OF TIME VARYING LINEAR INVERSE PROBLEMS.

In Proceedings of the 1st International Conference on Pattern Recognition Applications and Methods, pages 253-257

DOI: 10.5220/0003864102530257

Copyright

c

SciTePress

2006) or as a discretized form of the total variation

(Combettes and Pesquet, 2004; Rudin et al., 1992).

Many approaches to tackle problem (2) rely on

the convexity of g, like NESTA (Becker et al., 2009),

conjugate subgradient methods (Hawe et al., 2011) or

TwIST (Bioucas-Dias and Figueiredo, 2007), just to

mention a few. That is the reason why the `

1

-norm

is most commonly employed. Although this convex

relaxation leads to perfect signal recovery under cer-

tain assumptions, cf. (Donoho and Elad, 2003), it has

been shown in (Chartrand and Staneva, 2008) that in

some cases, the `

p

-norm for 0 ≤ p < 1 severely out-

performs its convex counterpart.

In this work, we do not assume convexity of g but

we require differentiability. For the `

p

-norm, this can

easily be achieved by a suitable smooth approxima-

tion. Here, we propose an approach based on mini-

mizing the unconstrained Lagrangian form of (2) that

is given by

minimize

s

?

∈R

n

f (s

?

) =

1

2

kAs

?

− yk

2

2

+ λg(s

?

). (4)

The Lagrange multiplier λ ∈ R

+

0

weighs between the

sparsity of the solution and its fidelity to the acquired

samples according to λ ∼ ε.

Consider now a sequence of linear inverse prob-

lems whose solutions vary smoothly over time. As

an example, one may think of the denoising of short

video sequences or the reconstruction of compres-

sively sensed magnetic resonance image sequences,

cf. (Lustig et al., 2007). In this work, we propose an

approach to track the solutions of time varying linear

inverse problems. We employ preceding solutions to

predict the current signal’s estimate. To the best of

the authors’ knowledge, this idea has not been pur-

sued so far in the literature. The crucial idea is to use

a discretized Newton flow to track solutions of a time

varying version of (4). We provide three practical up-

date formulas for the tracking problem and conclude

with a proof of concept by applying our approach to

a short synthetic video sequence, where each video

frame is recovered from compressively sampled mea-

surements.

2 TRACKING THE SOLUTIONS

2.1 Problem Statement

Let t 7→ s(t) ∈ R

n

be a C

1

-curve i.e. with continuous

first derivative that represents a time varying signal

s. Moreover, let y(t) = As(t) be the measurements

of s at time t. In this paper, we consider the prob-

lem of reconstructing a sequence of signals

s(t

k

)

k∈N

at consecutive instances of time. Instead of estimat-

ing s(t

k

) by solving the inverse problem based on the

measurements y(t

k

), we investigate in how far the pre-

viously recovered estimates s

?

i

of s(t

i

), i = 1,... , k can

be employed to predict s(t

k+1

) without acquiring new

measurements y(t

k+1

). This prediction step may serve

as an intermediate replacement for this reconstruction

step or it may be employed as an initialization for re-

construction at time t

k

. Note that in our approach, we

assume a fixed measurement matrix A.

Consider the time variant version of the uncon-

strained Lagrangian function

f (s

?

,t) =

1

2

kAs

?

− y(t)k

2

2

+ λg(s

?

). (5)

The minimum of (5) at time t necessarily yields the

gradient

F(s

?

,t) :=

∂

∂s

?

f (s

?

,t) (6)

to be zero. Consequently, we want to find the smooth

curve s

?

(t) such that

F(s

?

(t),t) = 0. (7)

In other words, we want to track the minima of (5)

over time. A discretized Newton flow, which is ex-

plained in the following subsection, will be used for

that purpose.

2.2 Discretized Newton Flow

Homotopy methods are a well-known approach for

solving problem (7). These methods are based on

an associated differential equation whose solutions

track the roots of F. To make the paper self con-

tained, we shortly rederive the discretized Newton

flow for our situation at hand based on (Baumann

et al., 2005). Specifically, we consider the implicit

differential equation

J

F

(s

?

,t)

˙

s

?

+

∂

∂t

F(s

?

,t) = −αF(s

?

,t), (8)

where α > 0 is a free parameter that stabilizes the dy-

namics around the desired solution. Here,

J

F

(s

?

,t) :=

∂

∂s

?

F(s

?

,t) (9)

is the (n × n)-matrix of partial derivatives of F with

respect to s

?

. Under suitable invertibility conditions

on J

F

, we rewrite (8) in explicit form as

˙

s

?

= −J

F

(s

?

,t)

−1

αF(s

?

,t) +

∂

∂t

F(s

?

,t)

. (10)

We discretize (10) at time instances t

k

, for k ∈ N and

assume without loss of generality a fixed stepsize h >

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

254

0. Depending on the stepsize we choose α :=

1

h

. With

the shorthand notation for s

?

k

:= s

?

(t

k

), the single-step

Euler discretization of the time-varying Newton flow

is therefore given as

s

?

k+1

= s

?

k

−

J

F

(s

?

k

,t

k

)

−1

F(s

?

k

,t

k

) + h

∂F

∂t

(s

?

k

,t

k

)

. (11)

We approximate the partial derivative

∂F

∂t

(s

?

k

,t

k

) by

an m

th

-order Taylor approximation H

m

(s

?

,t). For the

practically interesting case these are, cf. (Baumann

et al., 2005)

H

1

(s

?

,t) =

1

h

F(s

?

,t) − F(s

?

,t − h)

(12)

H

2

(s

?

,t) =

1

2h

3F(s

?

,t) − 4F(s

?

,t − h)

+ F(s

?

,t − 2h)

(13)

H

3

(s

?

,t) =

1

30h

37F(s

?

,t) − 45F(s

?

,t − h)

+ 9F(s

?

,t − 2h) − F(s

?

,t − 3h)

(14)

These approximations turn (11) into the update for-

mula

s

?

k+1

= s

?

k

−

J

F

(s

?

k

,t

k

)

−1

F(s

?

k

,t

k

) + hH

m

(s

?

k

,t

k

)

. (15)

2.3 Explicit Update Formulas

In this subsection we derive concrete update formulas

for tracking the solution of inverse problems as de-

fined in (5).

Often the inverse J

F

(s

?

k

,t

k

)

−1

is not accessible or

infeasible to calculate, in particular when dealing with

high dimensional data. Hence for computing the esti-

mate s

∗

k+1

as in equation (15), we solve

minimize

s∈R

n

kJ

F

(s

?

k

,t

k

)s − b

m

(s

?

k

,t

k

)k

2

2

, (16)

with

b

m

(s

?

k

,t

k

) := J

F

(s

?

k

,t

k

)s

?

k

−

F(s

?

k

,t

k

) + hH

m

(s

?

k

,t

k

)

. (17)

Typically, linear Conjugate Gradient methods ef-

ficiently solve this linear equation, (Nocedal and

Wright, 2006). Note, that this is less computational

expensive compared to solving an individual recon-

struction by minimizing (4).

We now derive three explicit update schemes for

the concrete problem of tracking solutions to inverse

problems based on the approximations (12)-(14).

Let ∇g denote the gradient of g. Then from (5) we

obtain

F(s

?

,t) = A

>

(As

?

− y(t)) + λ∇g(s

?

). (18)

The derivative of F with respect to s

?

is thus

J

F

(s

?

,t) = A

>

A +λH

g

(s

?

), (19)

where H

g

(s

?

) denotes the Hessian of g at s

?

. Combing

equation (18) with (12)-(14) yields

hH

1

(s

?

,t) = A

>

y(t −h) − y(t)

(20)

hH

2

(s

?

,t) =

1

2

A

>

4y(t −h) − 3y(t) − y(t − 2h)

(21)

hH

3

(s

?

,t) =

1

30

A

>

45y(t −h) − 37y(t)

− 9y(t −2h) + y(t − 3h)

. (22)

This results in the explicit formulas for b

1

,b

2

,b

3

b

1

(s

?

k

,t

k

) = λ

H

g

(s

?

k

)s

?

k

− ∇g(s

?

k

)

+ A

>

(2y(t

k

) + y(t

k−1

)

(23)

b

2

(s

?

k

,t

k

) = λ

H

g

(s

?

k

)s

?

k

− ∇g(s

?

k

)

+

1

2

A

>

5y(t

k

) − 4y(t

k−1

) + y(t

k−2

)

(24)

b

3

(s

?

k

,t

k

) = λ

H

g

(s

?

k

)s

?

k

− ∇g(s

?

k

)

+

1

30

A

>

67y(t

k

) − 45y(t

k−1

)

+ 9y(t

k−2

) − y(t

k−3

)

. (25)

The three different explicit update formulas follow

straightforwardly

s

?

k+1

= arg min

s∈R

n

kJ

F

(s

?

k

,t

k

)s − b

m

(s

?

k

,t

k

)k

2

2

. (26)

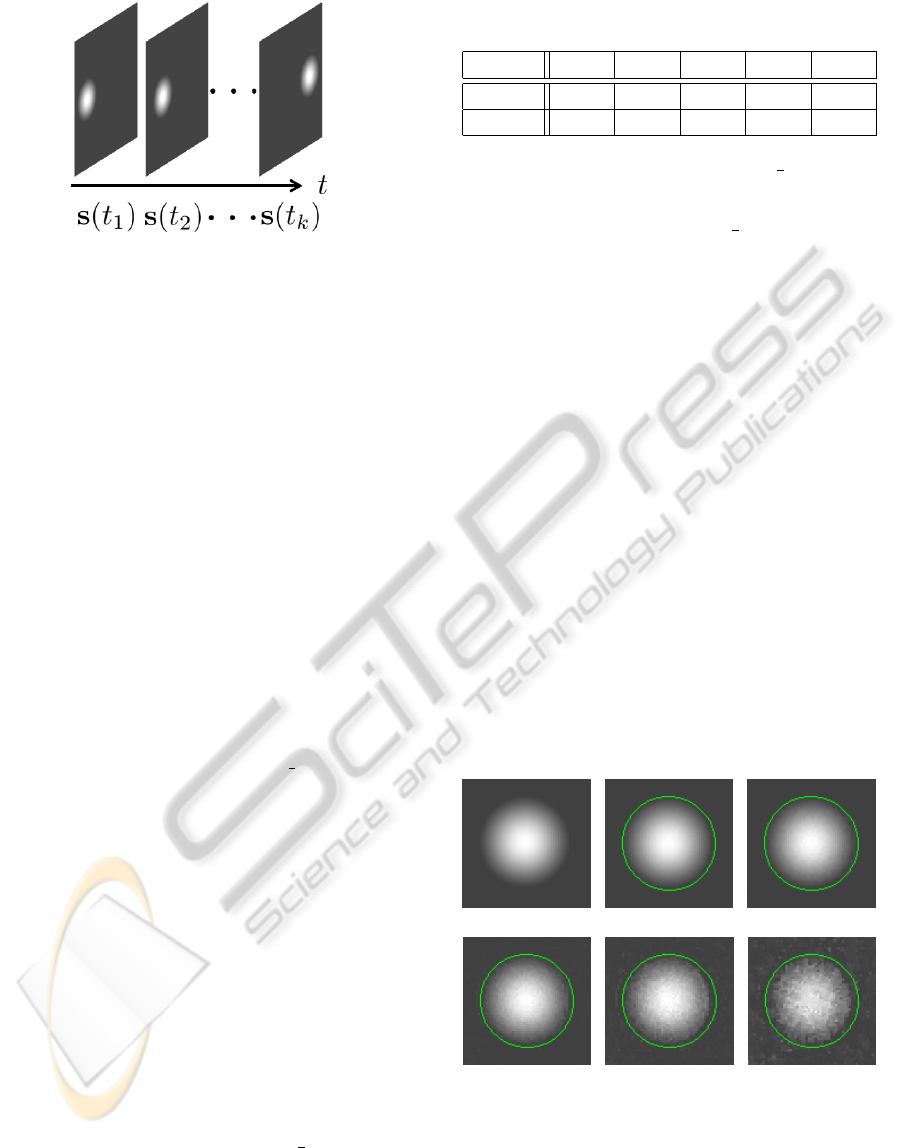

3 EXPERIMENTS

In this section we provide an example as a proof of

concept of our proposed algorithm. It consists of

tracking the reconstruction result of a series of com-

pressively sampled time varying images s(t

k

) ∈ R

n

.

The images are created synthetically and show a ball

moving with constant velocity, see Figure 1. To en-

hance legibility, all formulas are expressed in terms

of matrix vector products. However, regarding the

implementation, we want to emphasize that filtering

techniques are used to deal with the large image data.

Considering the measurement matrix A, we take

m n randomly selected coefficients of the Rudin-

Shapiro transformation (RST) (Benke, 1994). The

TRACKING SOLUTIONS OF TIME VARYING LINEAR INVERSE PROBLEMS

255

Figure 1: Time sequence of synthetic test image.

RST, also know as the real valued Dragon-Noiselet-

transformation, is used because of its efficient im-

plementation and due to its desirable properties for

image reconstruction (Romberg, 2008). We empiri-

cally set the number of measurements to m = 0.2n.

In our experiments we found that the number of mea-

surements does not severely affect the accuracy of the

tracking algorithm, but the speed of convergence. The

larger we chose m the faster the algorithm converges.

Regarding the regularization term g, we exploit

the fact that most images have a sparse gradient.

The simplest way of approximating the image gra-

dient is in terms of finite differences between neigh-

boring pixels in horizontal, and vertical direction re-

spectively. The computation of the finite differences

can be formulated as Ds with a suitable matrix D ∈

R

2n×n

.

To measure the sparsity of the gradient, we em-

ploy a smooth approximation of the `

p

-pseudo-norm

of Ds defined as

g(s) =

2n

∑

i=1

(e

i

Ds)

2

− µ

p

2

, (27)

with 0 < p ≤ 1 and a smoothing parameter µ ∈ R

+

.

The vector e

i

∈ R

2n

is a unit vector where the i

th

com-

ponent is equal to one and the others are zero. For

p = 1, equation (27) is a smooth approximation of the

well known anisotropic total-variation pseudo-norm.

We start our tracking algorithm by measuring RST

coefficients at consecutive instances of time y(t

k

) =

As(t

k

). From these consecutive measurements we

find s

?

k

by individually solving (5) using a Conju-

gate Gradient (CG) method with backtracking line-

search and Hestenes-Stiefel update rule (Nocedal and

Wright, 2006). Explicitly, the gradient of the pro-

posed regularizer (27) is

∇g(s) = D

>

2n

∑

i=1

p E

i

(e

>

i

Ds)

2

+ µ

p

2

−1

Ds, (28)

where E

i

:= e

i

e

>

i

. The Hessian of g is given by

Table 1: PSNR in dB and MSE between estimated signal

s

?

k+ j

for j = 1, . .. , 5 and original signals s(t

k+ j

) j = 1, . .. , 5.

s

?

k+1

s

?

k+2

s

?

k+3

s

?

k+4

s

?

k+5

PSNR 57.2 51.5 34.9 33.3 29.0

MSE 0.12 0.45 20.8 30.3 80.2

H

g

(s) = D

>

2n

∑

i=1

pE

i

(e

>

i

Ds)

2

+ µ

p

2

−1

+ (p − 2)

(e

>

i

Ds)

2

+ µ

p

2

−2

(e

>

i

Ds)

2

D

=: D

>

Q D. (29)

From this, we obtain s

?

k+1

by (26), using a linear

CG-method. Regarding the update formula for b

m

,

we found in our experiments that (24) yields a good

trade-off between prediction results and computa-

tional burden.

The tracking results for our example are presented

in Figure 2(b)-(f) for p = 0.7. We use the knowledge

of s(t

k

), s(t

k−1

) and s(t

k−2

) to iteratively estimate s

?

k+ j

for j = 1, . .., 5 only based on the update formula

(26). Clearly, the smaller j is, the better the estima-

tion. Note that the results shown in Figure 2(e)-(f)

are solely based on previously predicted images. The

green circle indicates the position of the ball in the

original images s(t

k+ j

), j = 1, . . . , 5. It can be seen

that although the quality of the images decreases, the

position of the circle is still captured adequately. As a

quantitative measure of the reconstruction quality, Ta-

ble 1 contains the peak signal to noise ratio (PSNR)

and the mean squared error (MSE) of the estimated

signals to the original signals.

(a) s(t

k

) (b) s

?

k+1

(c) s

?

k+2

(d) s

?

k+3

(e) s

?

k+4

(f) s

?

k+5

Figure 2: Excerpt of original image (a) and estimated im-

ages (b)-(f). The green circle indicates the position of the

ball in the original images.

A final word on the computational cost of the al-

gorithm. Considering the cost for applying the Hes-

sian operator as defined in (29), it mainly depends on

ICPRAM 2012 - International Conference on Pattern Recognition Applications and Methods

256

the cost of applying D and its transposed, as the ma-

trix Q is just a diagonal operator that can be applied in

O(n) flops. Furthermore, we want to mention that any

sparsifying transformation D that admits a fast imple-

mentation e.g. the Wavelet or Curvelet transformation

for images, can be easily used within this framework.

4 CONCLUSIONS

In this paper we present a concept for tracking the so-

lutions of inverse problems that vary smoothly over

time. The tracking is achieved by employing a dis-

cretized Newton flow on the gradient of the cost func-

tion. The approach allows us to predict the signal at

the next time instance from previous reconstruction

results without explicitly taking new measurements.

One advantage is that this prediction step is computa-

tionally less expensive than an individual reconstruc-

tion.

REFERENCES

Baumann, M., Helmke, U., and Manton, J. (2005). Reliable

tracking algorithms for principal and minor eigenvec-

tor computations. In 44th IEEE Conference on Deci-

sion and Control and European Control Conference,

pages 7258–7263.

Becker, S., Bobin, J., and Cand

`

es, E. J. (2009). Nesta: a fast

and accurate first-order method for sparse recovery.

SIAM Journal on Imaging Sciences, 4(1):1–39.

Benke, G. (1994). Generalized rudin-shapiro systems. Jour-

nal of Fourier Analysis and Applications, 1(1):87–

101.

Bioucas-Dias, J. and Figueiredo, M. (2007). A new twist:

Two-step iterative shrinkage/thresholding algorithms

for image restoration. Image Processing, IEEE Trans-

actions on, 16(12):2992 –3004.

Cand

`

es, E. J. and Romberg, J. (2007). Sparsity and inco-

herence in compressive sampling. Inverse Problems,

23(3):969–985.

Chartrand, R. and Staneva, V. (2008). Restricted isometry

properties and nonconvex compressive sensing. In-

verse Problems, 24(3):1–14.

Combettes, P. L. and Pesquet, J. C. (2004). Image restora-

tion subject to a total variation constraint. IEEE Trans-

actions on Image Processing, 13(9):1213–1222.

Donoho, D. L. (2006). Compressed sensing. IEEE Trans-

actions on Information Theory, 52(4):1289–1306.

Donoho, D. L. and Elad, M. (2003). Optimally sparse

representation in general (nonorthogonal) dictionar-

ies via `

1

minimization. Proceedings of the National

Academy of Sciences of the United States of America,

100(5):2197–2202.

Elad, M., Milanfar, P., and Rubinstein, R. (2007). Analysis

versus synthesis in signal priors. Inverse Problems,

3(3):947–968.

Hawe, S., Kleinsteuber, M., and Diepold, K. (2011). Dense

disparity maps from sparse disparity measurements.

In IEEE 13th International Conference on Computer

Vision.

Lustig, M., Donoho, D., and Pauly, J. M. (2007). Sparse

MRI: The application of compressed sensing for rapid

MR imaging. Magnetic Resonance in Medicine,

58(6):1182–1195.

Nocedal, J. and Wright, S. J. (2006). Numerical Optimiza-

tion, 2nd Ed. Springer, New York.

Romberg, J. (2008). Imaging via compressive sampling.

IEEE Signal Processing Magazine, 25(2):14–20.

Rudin, L. I., Osher, S., and Fatemi, E. (1992). Nonlinear

total variation based noise removal algorithms. Phys.

D, 60(1-4):259–268.

TRACKING SOLUTIONS OF TIME VARYING LINEAR INVERSE PROBLEMS

257