TEXTURE OVERLAY ONTO NON-RIGID SURFACE USING COMMODITY DEPTH CAMERA

Tomoki Hayashi, Francois de Sorbier and Hideo Saito

Graduate School of Science and Technology, Keio University, 3-14-1 Hiyoshi, Kohoku-ku, Yokohama, Japan

Keywords:

Deformable 3-D Registration, Principal Component Analysis, Depth Camera, Augmented Reality.

Abstract:

We present a method for overlaying a texture onto a non-rigid surface using a commodity depth camera. Depth cameras can capture 3-D data of a surface in real time and have several advantages over methods that use only standard color cameras. However, it is not easy to register a 3-D deformable mesh to a point cloud of a non-rigid surface while preserving its geometrical topology. To solve this problem, our method first learns many representative meshes to build surface deformation models. Then, while capturing 3-D data, we register a feasible 3-D mesh to the target surface and overlay a template texture onto the registered mesh. Even if the depth data are noisy or sparse, the learning-based method provides a smooth surface mesh. In addition, our method runs in real time. In our experiments, we show augmented reality results of texture overlay onto a non-textured T-shirt.

1 INTRODUCTION

Recent progress in computer vision has significantly extended the possibilities of augmented reality, a young and quickly growing field that can be applied to many domains such as entertainment and navigation (Azuma, 1997).

For the clothing retail industry, several virtual clothes fitting systems have been presented, in which users can try on clothes virtually. Such systems can be applied to tele-shopping over the internet, clothes design, etc.

To realize such virtual fitting with a monocular 2-D camera, many methods have been presented that register a deformable mesh onto the user's clothes and map the clothes texture onto the registered mesh (Ehara and Saito, 2006) (Pilet et al., 2007) (Hilsmann and Eisert, 2009). For deformable mesh registration, these methods require either rich textures on the clothes or a silhouette of the clothes that can be extracted.

In the last few years, a new kind of depth camera has been released at a reasonable price. Using the 3-D data captured by such depth cameras, some industrial virtual clothes fitting systems have been presented. However, those systems consider the shape of the clothes that a user wants to wear only roughly, or not at all. In contrast, some methods register a 3-D deformable mesh onto captured depth data of a target surface. Although those registration methods are very accurate, most of them require long processing times and are therefore not suitable for real-time applications.

In this paper, we present a real-time method that registers a 3-D mesh and overlays a template texture onto a non-rigid target surface such as a T-shirt. Our method consists of an off-line phase and an on-line phase. In the off-line phase, we generate a number of representative sample meshes by exploiting the inextensibility of each triangle edge. PCA (Principal Component Analysis) is then applied to reduce the dimensionality of the mesh. In the on-line phase, we quickly estimate a few parameters to generate the mesh according to the input depth data. The target region onto which the template texture should be overlaid is defined by a few color markers. Finally, the generated mesh is registered onto the target surface and the template texture is mapped onto the registered mesh.

Our research makes several contributions. First, we overlay a texture with a feasible shape onto a non-rigid surface captured by a commodity depth camera; even though the input depth data are noisy, our method generates a natural and smooth shape. Second, we do not need any texture on the surface to generate a mesh that fits its real shape. Finally, we achieve real-time processing by taking advantage of PCA, a simple method for reducing the dimensionality of meshes.

Hayashi T., de Sorbier F. and Saito H.

TEXTURE OVERLAY ONTO NON-RIGID SURFACE USING COMMODITY DEPTH CAMERA.

DOI: 10.5220/0003867200660071

In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2012), pages 66-71

ISBN: 978-989-8565-04-4

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

2 RELATED WORKS

Traditionally, methods that aim at overlaying a texture onto a non-rigid surface apply a two-dimensional or three-dimensional deformable model reconstructed from images of a commodity color camera.

2-D Deformable Model. Pilet et al. presented a fast feature-based method that detects and tracks deformable objects in monocular image sequences (Pilet et al., 2007). They applied a wide-baseline matching algorithm to find correspondences. Hilsmann and Eisert proposed a real-time system that tracks clothes and overlays a texture on them by estimating the elastic deformations of the cloth from a single camera in the 2-D image plane (Hilsmann and Eisert, 2009). Self-occlusions are addressed by a 2-D motion model that regularizes an optical flow field. Both methods require a richly textured target surface for tracking.

3-D Deformable Model. Several methods take advantage of a 3-D mesh model computed from a 2-D input image to augment a target surface. Shen et al. recovered the 3-D shape of an inextensible deformable surface from a monocular image sequence (Shen et al., 2010). Their iterative $L_2$-norm approximation process handles the non-convex objective function of the optimization, and noise is reduced by applying an $L_2$-norm to the re-projection errors. However, because of its iterative nature, the processing time is too long for a practical system.

Salzmann et al. generated a space of deformation modes by applying PCA to sample triangular meshes (Salzmann et al., 2007). A non-rigid shape is then expressed as a combination of these deformation modes. This step requires neither an estimate of the initial shape nor tracking. Later, they proposed linear local models for monocular reconstruction of deformable surfaces (Salzmann and Fua, 2011). This method reconstructs arbitrarily deformed shapes as long as a homogeneous surface has been learned beforehand.

Perriollat et al. presented a method for reconstructing an inextensible deformable surface without learning a deformable model (Perriollat et al., 2010). It achieves fast computation by exploiting the underlying distance constraints to recover the 3-D shape, which makes augmented reality applications possible. Note that most of these approaches require correspondences between a template image and an input image.

Depth Cameras. Recently, depth cameras have become popular, and many researchers have focused on deformable model registration using them (Li et al., 2008) (Kim et al., 2010) (Cai et al., 2010). A depth camera has a big advantage over a standard camera because it captures the 3-D shape of the target surface even when the surface has no texture.

Amberg et al. presented a method that extends the ICP (Iterative Closest Point) framework to non-rigid registration (Amberg et al., 2007). The optimal deformation can be determined accurately and efficiently by applying a locally affine regularization. A drawback of this method is its processing cost, which grows because of the iterative process. Papazov and Burschka proposed a method for deformable 3-D shape registration that computes shape transitions based on local similarity transforms (Papazov and Burschka, 2011). They formulated an ordinary differential equation that describes the transition of a source shape towards a target shape. Even though this approach does not require any iterative process, it still requires a lot of computation time.

In addition, most methods using a depth camera assume that the input depth data are ground truth. Therefore, they may produce an unnatural surface if the depth data are noisy.

3 TEXTURE OVERLAY ONTO NON-RIGID SURFACE

In this section, we describe our method for overlaying a texture onto a non-rigid surface. Fig. 1 illustrates the flow of our method. First, in the off-line phase, we generate deformation models by learning many representative meshes; these deformation models were proposed by Salzmann et al. (Salzmann et al., 2007). Because the mesh in the model has low dimensionality, we can quickly generate an arbitrarily deformed mesh that fits the target surface in the on-line phase. In addition, thanks to the models, we can generate a natural mesh with a smooth shape even when the input data are noisy.

In Salzmann's method, iterative processing is required because it is not easy to generate a 3-D mesh from a single 2-D color image. In our case, we can generate a 3-D mesh directly by taking advantage of the 3-D data from a depth camera.

3.1 Surface Deformation Model Generation

In the off-line phase, we generate the deformation models by learning several representative sample meshes. This part is based on Salzmann's method (Salzmann et al., 2007), which drastically reduces the number of degrees of freedom (dofs) of a mesh by assuming that the original length of each edge is constant and by using PCA.

Figure 1: Flow of our method. (Flowchart. Off-line boxes: angle parameters, sample mesh generation, dimensionality reduction, principal components, average mesh. On-line boxes: depth image, color image, point cloud sampling, normalization, stretch of average mesh, principal component scores computation, mesh projection, template texture, texture-overlaid image.)

In our approach, the target surface and the template texture are rectangular, so we introduce a rectangular surface mesh made of $m = M \times N$ vertices $V = \{v_1, \ldots, v_m\} \subset \mathbb{R}^3$.

3.1.1 Sample Mesh Generation

Thanks to Salzmann's work, we can generate variously deformed sample meshes by setting angle parameters. The number of these parameters is considerably smaller than $3m$, the original number of dofs of the mesh $V$.

We sampled the angle parameters randomly within the range $[-\pi/8, \pi/8]$ and discarded any generated mesh that still did not preserve the topology. Finally, all the sample meshes are aligned to a common pose so that the results of the PCA are consistent.
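To illustrate how a few angle parameters can produce many inextensible shapes, here is a minimal Python sketch of a simplified 2-D analogue, not Salzmann's triangulated-mesh construction: a polyline with unit-length edges whose bending angles are drawn uniformly from $[-\pi/8, \pi/8]$. The function name `sample_inextensible_polyline` is hypothetical, and the topology check described above is omitted.

```python
import math
import random


def sample_inextensible_polyline(num_edges, edge_length=1.0,
                                 max_angle=math.pi / 8, rng=None):
    """Simplified 2-D analogue of angle-parameterized sample generation.

    The first edge fixes the orientation; each later joint bends by a random
    angle in [-max_angle, max_angle]. Every edge keeps the same length, so the
    curve is inextensible by construction, and its shape is controlled by
    num_edges - 1 angles instead of 2 * (num_edges + 1) vertex coordinates.
    """
    rng = rng or random.Random()
    points = [(0.0, 0.0)]
    heading = 0.0
    for i in range(num_edges):
        if i > 0:
            heading += rng.uniform(-max_angle, max_angle)
        x, y = points[-1]
        points.append((x + edge_length * math.cos(heading),
                       y + edge_length * math.sin(heading)))
    return points


# 100 reproducible samples of a 20-edge strip.
samples = [sample_inextensible_polyline(20, rng=random.Random(seed))
           for seed in range(100)]
```

Every sample has exactly the same edge lengths, which is the property the PCA later exploits to keep the learned deformation space low-dimensional.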

3.1.2 Dimensionality Reduction

For a more compact representation of the mesh, Salzmann proposed reducing its dimensionality by running PCA on the sample meshes presented in Sec. 3.1.1. As a result, we obtain the average mesh $\bar{V} = \{y_1, \ldots, y_m\} \subset \mathbb{R}^3$ and $N_c$ principal components $P = \{P_1, \ldots, P_{N_c}\}$, each a vector of dimension $3m$, which represent deformation modes. An arbitrary mesh can then be expressed as follows:

$$V = \bar{V} + \sum_{k=1}^{N_c} \omega_k P_k \qquad (1)$$

where $V$ denotes the vertices of the target surface mesh that we want to generate, $\omega_k$ denotes the $k$-th principal component score (weight), and $P_k$ denotes the corresponding principal component (deformation mode). $N_c$ is the number of principal components, which is determined by looking at the contribution rate of the PCA. For example, setting $N_c$ to 40 principal components is enough to reconstruct over 98% of the original shape. Any mesh can then be expressed as a function of the vector $\Theta = \{\omega_1, \ldots, \omega_{N_c}\}$. Once $\Theta$ is known, the surface mesh can easily be reconstructed using Eq. 1.
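A minimal pure-Python sketch of the two steps above, assuming each mesh is flattened into a list of $3m$ coordinates and the PCA variances (eigenvalues) are already sorted in decreasing order. The function names are hypothetical: the first picks $N_c$ from the cumulative contribution rate, the second evaluates Eq. 1.

```python
def choose_num_components(eigenvalues, target_rate=0.98):
    """Smallest N_c whose cumulative contribution rate reaches target_rate.

    eigenvalues: PCA variances sorted in decreasing order.
    """
    total = sum(eigenvalues)
    cumulative = 0.0
    for n, value in enumerate(eigenvalues, start=1):
        cumulative += value
        if cumulative / total >= target_rate:
            return n
    return len(eigenvalues)


def reconstruct_mesh(mean_mesh, components, scores):
    """Eq. 1: V = mean + sum_k omega_k * P_k (meshes flattened to 3m floats)."""
    mesh = list(mean_mesh)
    for omega, component in zip(scores, components):
        for i, value in enumerate(component):
            mesh[i] += omega * value
    return mesh
```

For example, with the illustrative (made-up) variances `[5.0, 3.0, 1.5, 0.4, 0.1]`, the cumulative rates are 0.5, 0.8, 0.95 and 0.99, so `choose_num_components` returns 4.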

In the following section, we explain how we generate a 3-D mesh from an input depth image and overlay a texture onto a non-rigid surface using the surface deformation models.

3.2 Mesh Registration

The goal of the on-line phase is to overlay a template texture $S$ onto a target surface $T$ using a color image and its corresponding depth image. To create the mesh onto which the texture is mapped, we need to estimate the optimal principal component score vector $\Theta$. In general, the principal component score $\omega_k$ is given by:

$$\omega_k = (V - \bar{V}) \cdot P_k \qquad (2)$$

where $\bar{V}$, $P_k$ and $V$ are defined as in Eq. 1. Eq. 2 follows from Eq. 1 because the principal components are mutually orthonormal.

This equation means that $\omega_k$ will be higher if $(V - \bar{V})$ is similar to $P_k$. In that case, $P_k$ considerably affects the shape of the generated surface mesh, and vice versa. The generated surface mesh then receives the template texture and is overlaid onto the target surface.
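Eq. 2 is an orthogonal projection of the deviation $(V - \bar{V})$ onto each mode. A minimal sketch, assuming flattened meshes and orthonormal components; `pca_scores` and the toy 4-dimensional data are hypothetical. It recovers the weights used to build a mesh via Eq. 1, and shows that a deviation orthogonal to every mode receives zero scores.

```python
def pca_scores(mesh, mean_mesh, components):
    """Eq. 2: omega_k = (V - mean) . P_k, valid when the P_k are orthonormal."""
    diff = [v - m for v, m in zip(mesh, mean_mesh)]
    return [sum(d * p for d, p in zip(diff, component)) for component in components]


# Toy example in R^4 with two orthonormal "deformation modes".
mean_mesh = [1.0, 1.0, 1.0, 1.0]
components = [[0.6, 0.8, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
# A mesh built with weights 2 and -3 via Eq. 1 ...
mesh = [m + 2.0 * p1 - 3.0 * p2 for m, p1, p2 in zip(mean_mesh, *components)]
# ... is projected back to scores close to [2.0, -3.0].
scores = pca_scores(mesh, mean_mesh, components)
# A deviation orthogonal to every mode yields zero scores.
orthogonal = pca_scores([1.0, 1.0, 1.0, 6.0], mean_mesh, components)
```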

3.2.1 Point Cloud Sampling

Eq. 2 requires both vectors to have the same dimension. Although $V$ is unknown, the input point cloud of the target surface $T$ is a good candidate to replace $V$. The simplest idea is to set $V$ by finding corresponding points between the point cloud of $T$ and the vertex coordinates of $\bar{V}$. However, $T$ is a large, unordered data set, so such a correspondence search may take a long computational time.

Therefore, we sample the point cloud of $T$ so that its dimension matches that of $V$. The sampling is done on the input depth image using color markers. Eight points are defined by eight color markers on $T$, and we add a ninth point at their centroid. Based on their image coordinates, we sample the region covered by the color markers such that the number of sampled vertices equals the expected sampling resolution $N_D$. $N_D$ is set to $m$ so that the sampled data have the same dimension as $\bar{V}$. Each sampled coordinate is computed by linear interpolation of the image coordinates of the four adjacent points, as shown in Fig. 2.
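A minimal sketch of this sampling step, assuming (from our reading of Fig. 2) that the eight markers sit at the four corners and four edge midpoints of the region and are listed clockwise from the top-left. Together with their centroid they form a 3 × 3 control grid, and each sample is bilinearly interpolated from the four control points of its cell. The function names are hypothetical, and reading the depth value at each sampled pixel to obtain 3-D points is not shown.

```python
def build_control_grid(markers):
    """3x3 control grid from eight border markers plus their centroid.

    markers: eight (x, y) image coordinates listed clockwise from the top-left
    corner: TL, TM, TR, MR, BR, BM, BL, ML (assumed placement).
    """
    tl, tm, tr, mr, br, bm, bl, ml = markers
    cx = sum(p[0] for p in markers) / 8.0
    cy = sum(p[1] for p in markers) / 8.0
    return [[tl, tm, tr], [ml, (cx, cy), mr], [bl, bm, br]]


def sample_image_coords(control, rows, cols):
    """rows x cols image coordinates (row-major), each bilinearly
    interpolated from the four control points of the cell containing it."""
    samples = []
    for i in range(rows):
        v = 2.0 * i / (rows - 1)   # 0..2 in control-grid units
        r = min(int(v), 1)         # cell row (0 or 1)
        fv = v - r
        for j in range(cols):
            u = 2.0 * j / (cols - 1)
            c = min(int(u), 1)     # cell column (0 or 1)
            fu = u - c
            p00, p01 = control[r][c], control[r][c + 1]
            p10, p11 = control[r + 1][c], control[r + 1][c + 1]
            samples.append(tuple(
                (1 - fu) * (1 - fv) * p00[k] + fu * (1 - fv) * p01[k]
                + (1 - fu) * fv * p10[k] + fu * fv * p11[k]
                for k in range(2)))
    return samples
```

With `rows = cols = 21`, as in Sec. 4, this yields $m = 441$ pixel positions, matching the dimension of $\bar{V}$.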

VISAPP 2012 - International Conference on Computer Vision Theory and Applications


Figure 2: Markers and sampled coordinates. Green points on the surface border denote the markers, which are detected based on their color. The green point at the center is the average of the green points on the surface border. Yellow points are the sampled coordinates.

3.2.2 Normalization

Even though the sampled point cloud now has the same dimension as $\bar{V}$, it still cannot be used in Eq. 2 because its scale and orientation differ from those of $\bar{V}$ and $P_k$. To match them, we define a normalized coordinate system and a rigid transformation matrix $M$ that transforms the data from the world coordinate system to the normalized system.

$M$ is computed from the captured depth data. We first define a rigid transformation that makes all sampled points of $T$ fit the coordinates of the undeformed mesh. The undeformed mesh is aligned in the normalized system so that its four corner vertices correspond to the coordinates $\left(-\frac{W-1}{2}, -\frac{H-1}{2}, 0\right)$, $\left(\frac{W-1}{2}, -\frac{H-1}{2}, 0\right)$, $\left(-\frac{W-1}{2}, \frac{H-1}{2}, 0\right)$ and $\left(\frac{W-1}{2}, \frac{H-1}{2}, 0\right)$. Note that $W$ and $H$ respectively represent the width and the height of the undeformed mesh.
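A minimal sketch of the undeformed mesh in the normalized system, assuming the $M \times N$ vertices are evenly spaced between the four corners above and stored row-major with columns along the width; `undeformed_mesh` is a hypothetical name. If $W$ and $H$ are counted in edge-length units (e.g. $W = H = 21$ for the $21 \times 21$ mesh of Sec. 4), the spacing is exactly 1; this interpretation is our assumption.

```python
def undeformed_mesh(width, height, cols, rows):
    """Flat cols x rows grid in the normalized system, row-major.

    Corners sit at (+-(width - 1) / 2, +-(height - 1) / 2, 0) as described
    above; vertices are evenly spaced between them (assumed).
    """
    half_w = (width - 1) / 2.0
    half_h = (height - 1) / 2.0
    vertices = []
    for r in range(rows):
        y = -half_h + (height - 1) * r / (rows - 1)
        for c in range(cols):
            x = -half_w + (width - 1) * c / (cols - 1)
            vertices.append((x, y, 0.0))
    return vertices
```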

$M$ is estimated by least-squares fitting. Applying $M$ to the sampled point cloud of $T$ yields $V'$, the vertices of the normalized target surface $T_N$. The alignment of $\bar{V}$ and $P_k$ in the normalized system can be pre-computed during the stage presented in Sec. 3.1.1.
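The paper states only that $M$ is estimated by least-squares fitting. As one standard choice (our assumption, not necessarily the authors' solver), the sketch below uses Horn's closed-form quaternion method for the least-squares rigid transform between corresponding point sets. Here `source` would be the sampled 3-D points of $T$ in world coordinates and `target` the matching vertices of the undeformed mesh, with correspondence given by the sampling order, so that the returned $(R, t)$ plays the role of $M$. The function names are hypothetical, and the dominant eigenvector is found with a simple shifted power iteration rather than a full eigensolver.

```python
import math


def _centroid(points):
    n = float(len(points))
    return [sum(p[i] for p in points) / n for i in range(3)]


def fit_rigid(source, target, iterations=500):
    """Least-squares rigid transform (R, t) such that target ~= R @ source + t.

    Horn's closed-form quaternion method: the optimal rotation is the unit
    quaternion given by the eigenvector of the largest eigenvalue of a
    symmetric 4x4 matrix built from the cross-covariance of the point sets.
    """
    cs, ct = _centroid(source), _centroid(target)
    # S[a][b] = sum over points of (source_a - cs_a) * (target_b - ct_b)
    S = [[0.0] * 3 for _ in range(3)]
    for p, q in zip(source, target):
        a = [p[i] - cs[i] for i in range(3)]
        b = [q[i] - ct[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                S[i][j] += a[i] * b[j]
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    N = [
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ]
    # Shifting by the Frobenius norm makes every eigenvalue non-negative, so
    # power iteration converges to the eigenvector of N's largest eigenvalue.
    shift = math.sqrt(sum(v * v for row in N for v in row)) or 1.0
    q = [1.0, 0.1, 0.2, 0.3]  # arbitrary start, assumed not orthogonal to the answer
    for _ in range(iterations):
        nq = [sum(N[i][j] * q[j] for j in range(4)) + shift * q[i] for i in range(4)]
        norm = math.sqrt(sum(v * v for v in nq))
        q = [v / norm for v in nq]
    w, x, y, z = q
    R = [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]
    t = [ct[i] - sum(R[i][j] * cs[j] for j in range(3)) for i in range(3)]
    return R, t


def apply_rigid(R, t, points):
    """Apply x -> R x + t to every 3-D point."""
    return [tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
            for p in points]
```

A closed-form solver fits the paper's emphasis on non-iterative registration; the power iteration here is only a dependency-free way to extract one eigenvector and could be replaced by any symmetric eigensolver.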

3.2.3 Stretch of Average Mesh

When $T$ is deformed, the $x$ and $y$ coordinates of $V'$ do not match those of $\bar{V}$ in the normalized coordinate system. If their difference is too large, we cannot reconstruct the optimal $\omega_k$. Therefore, we stretch the shape of $\bar{V}$ to roughly match that of $V'$.

The stretch of $\bar{V}$ is applied along the XY plane of the normalized coordinate system. The vertices of $\bar{V}$ that correspond to the color markers are moved to the markers' $x$ and $y$ coordinates while keeping their $z$ coordinates. These stretching vectors are then also used for the other vertices of $\bar{V}$: each remaining vertex is displaced by a weighted combination of the previously computed vectors. Each weight is pre-computed from the squared distance between the vertex of $\bar{V}$ and each vertex of $\bar{V}$ corresponding to a marker. The resulting vertex coordinates are denoted by $\bar{V}'$. As a result of the stretching, the $x$ and $y$ coordinates of $V'$ and $\bar{V}'$ become similar.
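The paper does not give the exact weighting function, so the following minimal sketch assumes normalized inverse-squared-distance weights (Shepard interpolation) measured in the XY plane. Marker vertices follow their markers exactly, every other vertex receives a weighted blend of the marker displacements, and all $z$ coordinates are kept. The weights depend only on $\bar{V}$ and the marker indices, so they can be pre-computed off-line as the text describes. Function names are hypothetical.

```python
def stretch_weights(mean_vertices, marker_indices):
    """Normalized inverse-squared-distance weights (assumed weighting).

    Depends only on the average mesh, so it can be pre-computed off-line.
    Distances are measured in the XY plane of the normalized system.
    """
    weights = []
    for x, y, _ in mean_vertices:
        d2 = [(x - mean_vertices[k][0]) ** 2 + (y - mean_vertices[k][1]) ** 2
              for k in marker_indices]
        if 0.0 in d2:
            # A marker vertex follows its own marker exactly.
            w = [0.0] * len(d2)
            w[d2.index(0.0)] = 1.0
        else:
            inv = [1.0 / d for d in d2]
            total = sum(inv)
            w = [v / total for v in inv]
        weights.append(w)
    return weights


def stretch_average_mesh(mean_vertices, marker_indices, marker_xy, weights):
    """Return the stretched average mesh: XY displaced, z kept unchanged."""
    # Displacement that moves each marker vertex onto its detected marker.
    disp = [(mx - mean_vertices[k][0], my - mean_vertices[k][1])
            for k, (mx, my) in zip(marker_indices, marker_xy)]
    stretched = []
    for (x, y, z), w in zip(mean_vertices, weights):
        dx = sum(wi * d[0] for wi, d in zip(w, disp))
        dy = sum(wi * d[1] for wi, d in zip(w, disp))
        stretched.append((x + dx, y + dy, z))
    return stretched
```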

3.2.4 Principal Component Scores Computation

Thus, we can adapt Eq. 2 to:

$$\omega_k = (V' - \bar{V}') \cdot P_k \qquad (3)$$

Because each $\omega_k$ calculated in Eq. 3 applies to $\bar{V}'$, we generate the mesh using this new equation:

$$V = \bar{V}' + \sum_{k=1}^{N_c} \omega_k P_k \qquad (4)$$

We then obtain the mesh $V$ corresponding to $T_N$.

3.2.5 Mesh Projection

Once $V$ is generated, the last stage is to transform it to the world coordinate system. Because we already know the transformation $M$ from the world coordinate system to the normalized coordinate system, we can transform $V$ using $M^{-1}$.

For rendering, we define texture coordinates for each vertex of the surface mesh. The texture is then overlaid on the target surface given by $M^{-1}V$.
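A minimal sketch of this last stage, assuming $M$ is represented as a rotation $R$ and translation $t$ with $M(x) = Rx + t$, so that $M^{-1}(y) = R^{\top}(y - t)$. Texture coordinates are assigned on a regular row-major grid over $[0, 1]^2$, which is our assumption about the layout; both function names are hypothetical.

```python
def invert_rigid(R, t, points):
    """Apply M^{-1} for a rigid M(x) = R x + t, i.e. x = R^T (y - t)."""
    out = []
    for p in points:
        d = [p[i] - t[i] for i in range(3)]
        out.append(tuple(sum(R[j][i] * d[j] for j in range(3)) for i in range(3)))
    return out


def grid_texture_coords(rows, cols):
    """One (u, v) in [0, 1]^2 per mesh vertex, row-major (assumed layout)."""
    return [(c / (cols - 1), r / (rows - 1)) for r in range(rows) for c in range(cols)]
```

Because $R$ is orthonormal, its transpose is its inverse, so no general matrix inversion is needed per frame.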

4 EXPERIMENTAL RESULTS

All experiments were run on a computer with a 2.50 GHz Intel(R) Xeon(R) CPU and 2.00 GB of RAM. We use a Microsoft Kinect depth camera with an image resolution of 640 × 480 pixels and a frame rate of 30 Hz. The target surface is a region of a T-shirt without any texture, delimited by eight basic color markers. For the resolution of the rectangular mesh, we set both M and N to 21 vertices.

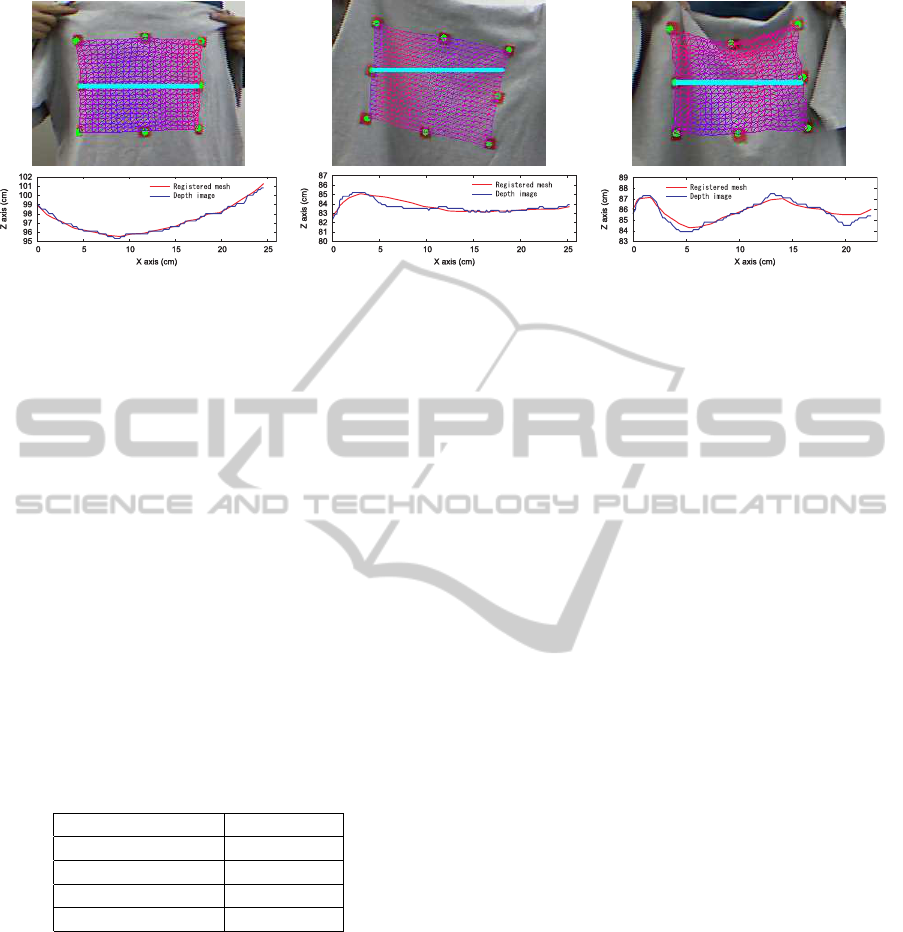

4.1 Depth Comparison

We evaluated our method by comparing the depth values of the registered mesh with those measured by the depth camera. Fig. 3 plots the depth data of the depth camera and of the registered mesh along the horizontal image axis.


Figure 3: Depth data of the depth camera and of the generated mesh. The blue points in the top-row images denote the positions used for plotting; the bottom-row images plot the depth data.

The registered mesh deforms to follow the depth data. Despite the discrete depth data from the depth camera, our method generates a similar and smooth shape.

4.2 Processing Time

We measured the computational time because our method is intended for real-time applications. The average computational time over 100 frames is shown in Table 1. Note that the computational time of the mesh registration, which includes the sampling, the normalization and the principal component scores computation, is quite small. Overall, the average processing speed was over 25 frames per second.

Table 1: Processing time.

| Task | Time (ms) |
|---|---|
| Capturing | 11 |
| Marker Detection | 11 |
| Mesh Registration | 7 |
| Image Rendering | 4 |
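As a rough consistency check, assuming the four stages in Table 1 run sequentially for every frame, the per-frame time and frame rate are approximately:

$$11 + 11 + 7 + 4 = 33\ \text{ms}, \qquad \frac{1000\ \text{ms}}{33\ \text{ms}} \approx 30\ \text{frames per second},$$

which agrees with the reported average of over 25 frames per second. The mesh registration itself accounts for only 7 of the 33 ms (about 21%).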

4.3 Visualization of Mesh Registration and Texture Overlay

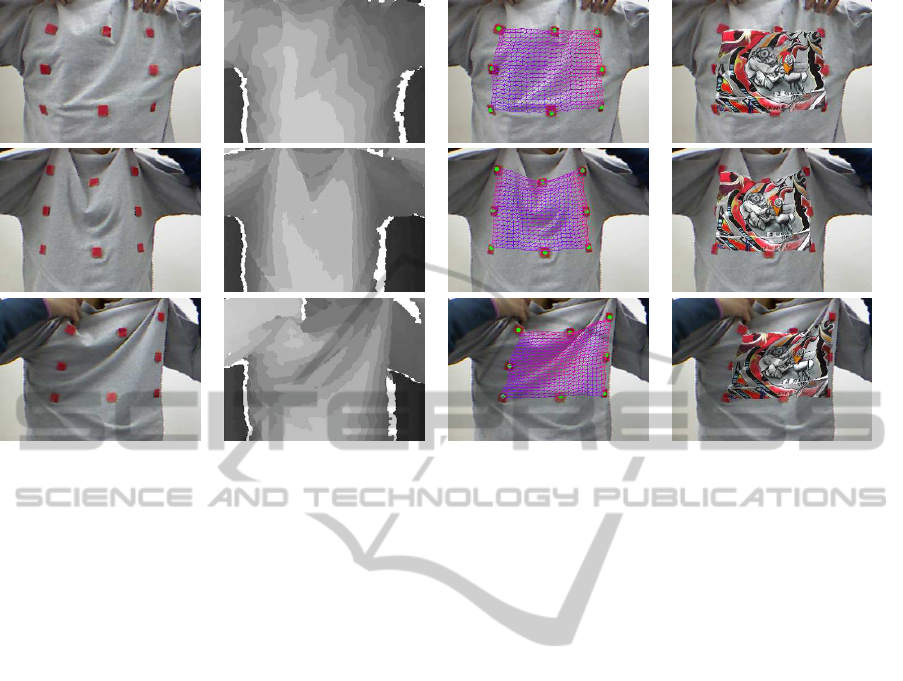

Finally, Fig. 4 shows visualization results of the texture overlay onto the non-rigid target surface of the T-shirt as augmented reality. Even though the target has no texture inside the target region, our mesh deforms to fit the surface according to the data obtained from the depth image.

5 DISCUSSION

Following the description of our method and the experimental results, we summarize its characteristics. Processing speed is the principal advantage of our method: we achieved a high processing speed thanks to the reduced dofs of the mesh and the non-iterative mesh registration. Since we also consider the mesh registration accuracy sufficient, our method is applicable to a practical virtual fitting system. Moreover, since the mesh registration is based on the deformation models, our method is robust to noise in the input depth image.

On the other hand, if the spatial period of the target surface's deformation is shorter than the sampling interval of the mesh, we cannot generate a mesh with an appropriate shape. Although this problem could be solved by shrinking the sampling interval, additional processing would be required because the average mesh would need to be sampled more densely as well.

Basically, the deformation models produce a broken mesh rather than an optimal one when the target surface is deformed more sharply than the learned angle range. To address this problem, it might be effective to learn a much wider variety of representative meshes.

6 CONCLUSIONS AND FUTURE WORK

We presented a method for registering a 3-D deformable mesh with a commodity depth camera in order to overlay a texture onto a non-rigid surface. Our method has several advantages. First, because it uses a depth camera, no rich texture needs to be attached to the target surface. Second, the PCA enables us to generate a mesh with a feasible shape even if the depth data are inaccurate or noisy. Furthermore, the PCA reduces the dofs of the mesh, which helps to achieve real-time processing.

Figure 4: Some visualization results. All images are cropped for visualization, and the shading is taken from the pixel values of the color image. (a): Input color images. (b): Input depth images. (c): Registered mesh. (d): Texture overlay onto the target T-shirt.

As future work, we will implement the sampling of the depth image using GPU shader programming, since it will provide denser sampling and hence more precise registration with faster processing. In addition, we expect to replace our template mesh with a T-shirt model in order to achieve global virtual T-shirt overlay and to remove the color markers.

ACKNOWLEDGEMENTS

This work was partially supported by Grant-in-Aid for Scientific Research (C) 21500178, JSPS.

REFERENCES

Amberg, B., Romdhani, S., and Vetter, T. (2007). Optimal Step Nonrigid ICP Algorithms for Surface Registration. In Proc. CVPR, pages 1–8. IEEE.

Azuma, R. (1997). A survey of augmented reality. PTVE, 6(4):355–385.

Cai, Q., Gallup, D., Zhang, C., and Zhang, Z. (2010). 3D Deformable Face Tracking with a Commodity Depth Camera. In Proc. ECCV, number 2.

Ehara, J. and Saito, H. (2006). Texture overlay for virtual clothing based on PCA of silhouettes. In Proc. ISMAR, pages 139–142. IEEE.

Hilsmann, A. and Eisert, P. (2009). Tracking and Retexturing Cloth for Real-Time Virtual Clothing Applications. In Proc. MIRAGE, pages 1–12.

Kim, Y. S., Lim, H., Kang, B., Choi, O., Lee, K., Kim, J. D. K., and Kim, C.-y. (2010). Realistic 3D face modeling using feature-preserving surface registration. In Proc. ICIP, pages 1821–1824.

Li, H., Sumner, R. W., and Pauly, M. (2008). Global Correspondence Optimization for Non-Rigid Registration of Depth Scans. Computer Graphics Forum, 27(5):1421–1430.

Papazov, C. and Burschka, D. (2011). Deformable 3D Shape Registration Based on Local Similarity Transforms. Computer Graphics Forum, 30(5):1493–1502.

Perriollat, M., Hartley, R., and Bartoli, A. (2010). Monocular Template-based Reconstruction of Inextensible Surfaces. IJCV, 95(2):124–137.

Pilet, J., Lepetit, V., and Fua, P. (2007). Fast Non-Rigid Surface Detection, Registration and Realistic Augmentation. IJCV, 76(2):109–122.

Salzmann, M. and Fua, P. (2011). Linear local models for monocular reconstruction of deformable surfaces. PAMI, 33(5):931–944.

Salzmann, M., Pilet, J., Ilic, S., and Fua, P. (2007). Surface deformation models for nonrigid 3D shape recovery. PAMI, 29(8):1481–1487.

Shen, S., Shi, W., and Liu, Y. (2010). Monocular 3-D tracking of inextensible deformable surfaces under L2-norm. Image Processing, 19(2):512–521.
