HAMAKE: A DATAFLOW APPROACH TO DATA PROCESSING IN HADOOP

Vadim Zaliva¹ and Vladimir Orlov²

¹ Codeminders, Saratoga, CA, U.S.A.

² Codeminders, Kiev, Ukraine

Keywords: Hadoop, Hamake, Dataflow.

Abstract: Most non-trivial data processing scenarios using Hadoop typically involve launching more than one MapReduce job. Usually, such processing is data-driven, with the data funneled through a sequence of jobs. The processing model could be expressed in terms of dataflow programming, represented as a directed graph with datasets as vertices. Using fuzzy timestamps as a way to detect which dataset needs to be updated, we can calculate a sequence in which Hadoop jobs should be launched to bring all datasets up to date. Incremental data processing and parallel job execution fit well into this approach. These ideas inspired the creation of the hamake utility. We attempted to emphasize data, allowing the developer to formulate the problem as a data flow, in contrast to the workflow approach commonly used. The hamake language uses just two data flow operators, fold and foreach, providing a clear processing model similar to MapReduce, but on a dataset level.

1 MOTIVATION AND BACKGROUND

Hadoop (Bialecki et al., 2012) is a popular open-source implementation of MapReduce, a data processing model introduced by Google (Dean and Ghemawat, 2008).

Hadoop is typically used to process large amounts of data through a series of relatively simple operations. Usually Hadoop jobs are I/O-bound (Weil, 2012; Gangadhar, 2012), and execution of even trivial operations on a large dataset could take significant system resources. This makes incremental processing especially important. Our initial inspiration was the Unix make utility. While applying some of the ideas implemented by make to Hadoop, we took the opportunity to generalize the processing model in terms of dataflow programming.

Hamake was developed to address the problem of incremental processing of large data sets in a collaborative filtering project.

We have striven to create an easy-to-use utility that developers can start using right away, without a complex installation or a steep learning curve.

Hamake is open source and is distributed under the Apache License v2.0. The project is hosted at Google Code at the following URL: http://code.google.com/p/hamake/.

2 PROCESSING MODEL

Hamake operates on files residing on a local or distributed file system accessible from the Hadoop job. Each file has a timestamp reflecting the date and time of its last modification. A file system directory or folder is also a file with its own timestamp. A Data Transformation Rule (DTR) defines an operation which takes files as input and produces other files as output.

If file A is listed as input of a DTR, and file B is listed as output of the same DTR, it is said that “B depends on A.” Hamake uses file timestamps for dependency up-to-date checks. DTR output is said to be up to date if the minimum timestamp on all outputs is greater than or equal to the maximum timestamp on all inputs. For the sake of convenience, a user could arrange groups of files and folders into a fileset which could later be referenced as the DTR’s input or output.

Hamake uses fuzzy timestamps¹ which can be compared, allowing for a slight margin of error. The “fuzziness” is controlled by a tolerance of σ. Timestamp a is considered to be older than timestamp b if (b − a) > σ. Setting σ = 0 gives us a non-fuzzy, strict timestamp comparison.
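The two rules above (the fuzzy "older than" comparison and the up-to-date check on a DTR) can be sketched together as follows. This is a minimal illustration and not hamake's actual code: the function names, the use of plain numbers as timestamps, and the handling of empty input or output lists are assumptions made for the example.

```python
def is_older(a, b, sigma=0.0):
    """Timestamp a is older than timestamp b if (b - a) > sigma."""
    return (b - a) > sigma


def is_up_to_date(input_times, output_times, sigma=0.0):
    """Outputs are current unless the oldest output is older than the newest input.

    With sigma = 0 this reduces to min(outputs) >= max(inputs).
    """
    if not output_times:
        return False  # assumption: a DTR with no existing output must run
    if not input_times:
        return True   # assumption: nothing to be out of date against
    return not is_older(min(output_times), max(input_times), sigma)
```

With σ = 0 an output written even one time unit before its newest input counts as stale; a larger σ tolerates small clock differences between machines.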

Hamake attempts to ensure that all outputs from a DTR are up to date.² To do so, it builds a dependency graph with DTRs as edges and individual files or filesets as vertices. Below, we show that this graph is guaranteed to be a Directed Acyclic Graph (DAG).

¹ The current stable version of hamake uses exact (non-fuzzy) timestamps.

Zaliva V. and Orlov V.
HAMAKE: A DATA FLOW APPROACH TO DATA PROCESSING IN HADOOP.
DOI: 10.5220/0003893804570461
In Proceedings of the 2nd International Conference on Cloud Computing and Services Science (CLOSER-2012), pages 457-461
ISBN: 978-989-8565-05-1
Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

After building a dependency graph, a graph reduc-

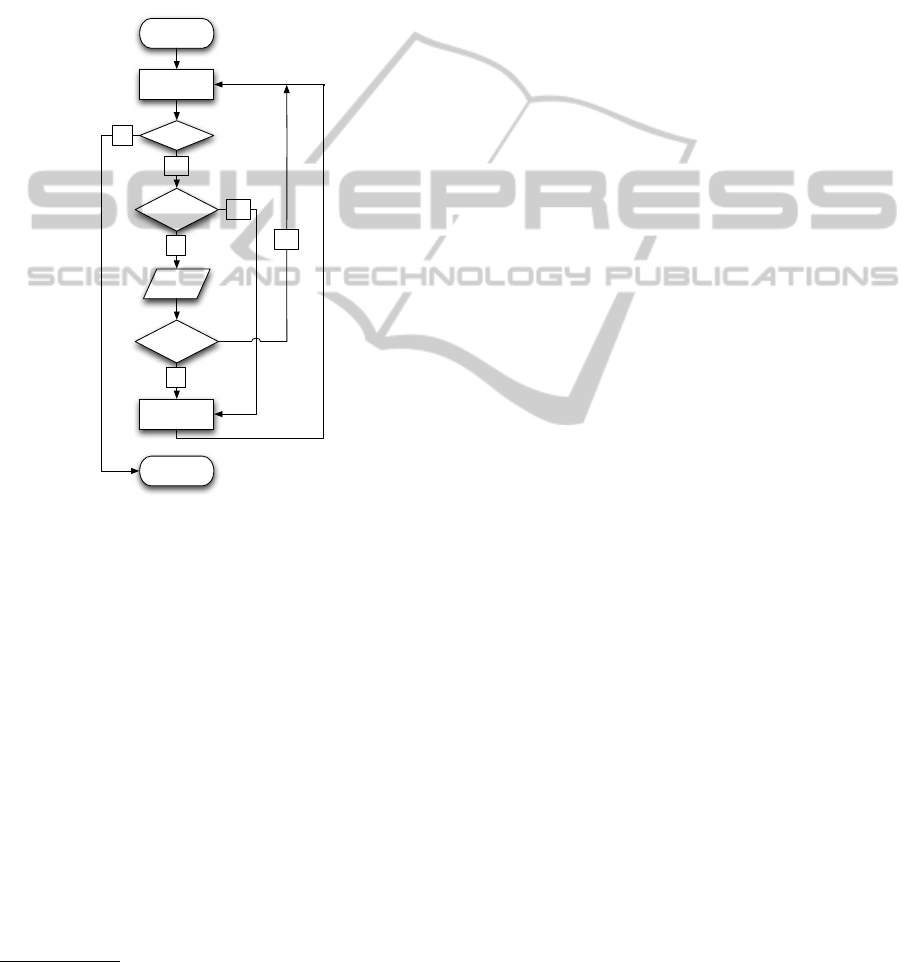

tion algorithm (shown in Figure 1) is executed. Step

1 uses Kahn’s algorithm (Kahn, 1962) of topological

ordering. In step 6, when the completed DTR is re-

moved from the dependency graph, all edges pointing

to it from other DTRs are also removed.

[Figure 1 flowchart nodes: Start; 1: Locate DTR w/o input dependencies; 2: Found?; 3: Inputs up to date?; 4: Run DTR; 5: Exec Error?; 6: Remove DTR from graph; Stop. Decision branches are labeled yes/no.]

Figure 1: Hamake dependency graph reduction algorithm.

The algorithm allows for parallelism. If more than one DTR without input dependencies is found during step 1, the subsequent steps 2-6 can be executed in parallel for each discovered DTR.

It should be noted that if DTR execution has failed, hamake can and will continue to process other DTRs which do not depend directly or indirectly on the results of this DTR. This permits the user to fix problems later and re-run hamake, without the need to re-process all data.
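The reduction loop and its failure behaviour can be sketched as below. This is an illustrative, sequential reading of the steps described above, not hamake's implementation: the function name, the callback parameters `is_up_to_date` and `run`, and the representation of the graph as a dictionary of DTR names are all assumptions. Step 3 is read here as "the DTR's outputs are already current".

```python
from collections import deque


def reduce_graph(deps, is_up_to_date, run):
    """Process DTRs in dependency order (Kahn-style).

    deps: dict mapping each DTR name to the set of DTR names it depends on
          (every name must appear as a key).
    is_up_to_date(name) -> bool; run(name) -> bool (True on success).
    Returns (executed, failed, blocked) as lists of names.
    """
    pending = {name: set(d) for name, d in deps.items()}
    dependents = {name: [] for name in deps}
    for name, d in deps.items():
        for parent in d:
            dependents[parent].append(name)

    ready = deque(sorted(n for n, d in pending.items() if not d))  # step 1
    executed, failed = [], []
    while ready:                                    # step 2
        name = ready.popleft()
        if not is_up_to_date(name):                 # step 3
            if not run(name):                       # steps 4 and 5
                failed.append(name)
                continue  # stays in the graph, so its dependents never become ready
            executed.append(name)
        del pending[name]                           # step 6
        for child in sorted(dependents[name]):
            pending[child].discard(name)
            if not pending[child]:
                ready.append(child)
    blocked = sorted(set(pending) - set(failed))
    return executed, failed, blocked
```

In this sketch a failed DTR is simply never removed from the graph, which is one way to keep unrelated branches running while everything downstream of the failure waits for a later re-run.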

Cyclic dependencies must be avoided, because a dataflow containing such dependencies is not guaranteed to terminate. Implicit checks are performed during the reading of DAG definitions and the building of the dependency graph. If a cycle is detected, it is reported as an error. Thus the dependency graph used by hamake is assured to be a directed acyclic graph.

² Because hamake has no way to update them, it does not attempt to ensure that files are up to date, unless they are listed as one of a DTR’s outputs.

However, hamake supports a limited scenario of iterative processing with a feature called generations. Each input or output file can be marked with a generation attribute. Any two files referencing the same path in the file system while having different generations are represented as two distinct vertices in the dependency graph. This permits resolution of cyclic dependencies within the context of a single hamake execution.
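To make the cycle check and the effect of generations concrete, the following sketch builds a file-level graph and searches it for a cycle with a depth-first traversal. It is only an illustration: hamake's own check is not described here in detail, and the `(path, generation)` tuple representation, `build_edges`, and `find_cycle` are hypothetical names chosen for the example.

```python
def build_edges(dtrs):
    """dtrs: list of (inputs, outputs); each file is a (path, generation) pair."""
    edges = {}
    for inputs, outputs in dtrs:
        for src in inputs:
            edges.setdefault(src, [])
            for dst in outputs:
                edges[src].append(dst)
                edges.setdefault(dst, [])
    return edges


def find_cycle(edges):
    """Return a list of vertices forming a cycle, or None if the graph is a DAG."""
    WHITE, GRAY, BLACK = 0, 1, 2
    color = {}
    stack = []

    def visit(v):
        color[v] = GRAY
        stack.append(v)
        for w in edges.get(v, ()):
            state = color.get(w, WHITE)
            if state == GRAY:  # back edge: w is on the current path
                return stack[stack.index(w):] + [w]
            if state == WHITE:
                found = visit(w)
                if found:
                    return found
        stack.pop()
        color[v] = BLACK
        return None

    for v in list(edges):
        if color.get(v, WHITE) == WHITE:
            found = visit(v)
            if found:
                return found
    return None
```

A DTR that reads and writes the same path forms a cycle when both references carry the same generation; giving the output a different generation turns the two references into distinct vertices, and the graph is acyclic again.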

One useful consequence of hamake dataflow being a DAG is that for each vertex we can calculate the list of vertices it depends on directly and indirectly using simple transitive closure. This allows us to easily estimate the part of a dataflow graph being affected by updating one or more files, which could be especially useful for datasets where the cost of re-calculation is potentially high due to data size or computational complexity.
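A minimal sketch of this transitive closure, assuming the graph is given as a dictionary from each vertex to the set of vertices it directly depends on (the name `depends_on` and this representation are illustrative, not part of hamake):

```python
def depends_on(direct, vertex):
    """Return every vertex that `vertex` depends on, directly or indirectly.

    direct: dict mapping a vertex to the set of vertices it directly depends on.
    Terminates because each vertex is expanded at most once.
    """
    seen = set()
    stack = list(direct.get(vertex, ()))
    while stack:
        v = stack.pop()
        if v not in seen:
            seen.add(v)
            stack.extend(direct.get(v, ()))
    return seen
```

The set of vertices affected by a change to some file `f` is then every vertex `v` for which `f` is in `depends_on(direct, v)`.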

Hamake is driven by dataflow description, expressed in a simple XML-based language. The full syntax is described in (Orlov and Bondar, 2012). The two main elements, fold and foreach, correspond to two types of DTRs. Each element has input, output, and processing instructions. The execution of processing instructions brings the DTR output up to date.

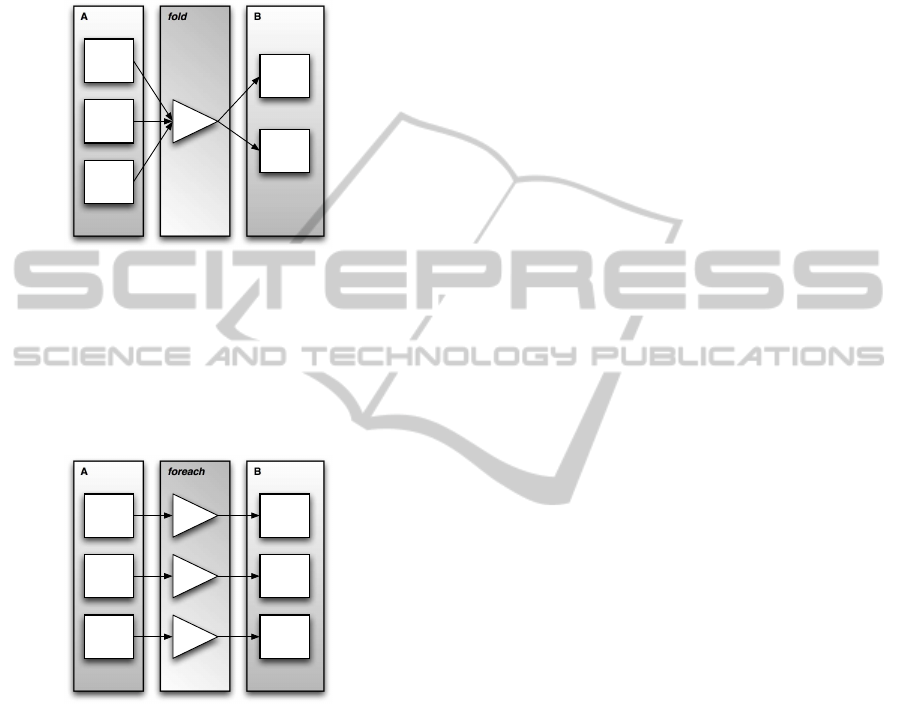

Fold implies a many-to-one dependency between input and output. In other words, the output depends on the entirety of the input, and if any of the inputs have been changed, the outputs need to be updated. Foreach implies a one-to-one dependency where for each file in an input set there is a corresponding file in an output set, each updated independently.

The hamake dataflow language has declarative semantics, making it easy to implement various dataflow analysis and optimization algorithms in the future. Examples of such algorithms include merging dataflows, further execution parallelization, and analysis and estimation of dataflow complexity.

3 PARALLEL EXECUTION

While determining the sequence and launching of Hadoop jobs required to bring all datasets up to date, hamake attempts to perform all required computations in the shortest possible time. To achieve this, hamake aims for maximal cluster utilization, running as many Hadoop jobs in parallel as cluster capacity permits.

There are three main factors that drive job scheduling logic: file timestamps, dependencies, and cluster computational capacity. On the highest level, DTR dependencies determine the sequence of jobs to be launched.


In the case of a fold DTR, a single Hadoop job, PIG script, or shell command may be launched, and hence there is no opportunity for parallel execution. In the example shown in Figure 2, since fileset B depends on all files in fileset A, a single job associated with the fold DTR will be executed.

[Figure 2 diagram: fold DTR between filesets A (files a₁, a₂, a₃) and B (files b₁, b₂), executed as a single job.]

Figure 2: Decomposition of fold DTR.

A foreach DTR works by mapping individual files in fileset A to files in fileset B. Assuming that fileset A consists of 3 files: a₁, a₂, a₃, the dependency graph could be represented as shown in Figure 3. In this case, we have an opportunity to execute the three jobs in parallel.

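[Figure 3 diagram: foreach DTR between filesets A and B, decomposed into one job per input file (a₁, a₂, a₃), each producing its own output file in B.]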

Figure 3: Decomposition of foreach DTR.
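The difference between the two decompositions in Figures 2 and 3 can be sketched as a small planning function. This is an illustration under assumptions: the name `plan_jobs`, the representation of a job as an `(input_files, output_path)` pair, and the rule that a foreach output keeps the input's file name are chosen for the example rather than taken from hamake's code.

```python
import posixpath


def plan_jobs(kind, inputs, output_dir):
    """Return the jobs a DTR decomposes into, as (input_files, output_path) pairs."""
    if kind == "fold":
        # Many-to-one: a single job sees the entire input set.
        return [(list(inputs), output_dir)]
    if kind == "foreach":
        # One-to-one: one independent job per input file.
        return [
            ([path], posixpath.join(output_dir, posixpath.basename(path)))
            for path in inputs
        ]
    raise ValueError("unknown DTR kind: " + kind)
```

For three input files, `fold` yields one job and `foreach` yields three jobs that can be scheduled independently.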

The Hadoop cluster capacity is defined in terms of the number of map slots and reduce slots. When a DTR launches a Hadoop job, either directly as defined by a mapreduce processing instruction or via a PIG script, a single job will spawn one or more mapper or reducer tasks, each taking one respective slot. The number of mappers and reducers launched depends on many factors, such as the size of the HDFS block, Hadoop cluster settings, and individual job settings. In general, hamake has neither visibility of nor control over most of these factors, so it does not currently deal with individual tasks. Thus hamake parallel execution logic is controlled by a command line option specifying how many jobs it may run in parallel.
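A job-count limit of this kind can be sketched with a bounded worker pool. The example below is an assumption-laden illustration rather than hamake's scheduler: `run_jobs` and `max_parallel` are hypothetical names, and each job is modeled as a zero-argument callable instead of a real Hadoop job submission.

```python
from concurrent.futures import ThreadPoolExecutor


def run_jobs(jobs, max_parallel):
    """Run zero-argument callables with at most max_parallel executing at once.

    Returns the job results in submission order.
    """
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        futures = [pool.submit(job) for job in jobs]
        return [future.result() for future in futures]
```

The limit applies to whole jobs only; how many mapper and reducer tasks each job occupies on the cluster remains outside the control of such a scheduler, as noted above.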

4 EXAMPLE

In a large online library, the hamake utility can be used to automate searches for duplicates within a corpus of millions of digital text documents. Documents with slight differences due to OCR errors, typos, differences in formatting, or added material such as a foreword or publisher’s note can be found and reported.

To illustrate hamake usage, consider the simple approach of using the Canopy clustering algorithm (McCallum et al., 2000) and a vector space model (Manning et al., 2008) based on word frequencies. The implementation could be split into a series of steps, each implemented as a MapReduce job:

ExtractText: Extract plain text from the native document format (e.g. PDF).

Tokenize: Split plain text into tokens which roughly correspond to words. Deal with hyphens, compound words, accents, and diacritics, as well as case-folding, stemming, or lemmatization, resulting in a list of normalized tokens.

FilterStopwords: Filter out stopwords, like a, the, and are.

CalculateTF: Calculate a feature vector of term frequencies for each document.

FindSimilar: Run the Canopy clustering algorithm to group similar documents into clusters, using cosine distance as a fast approximate distance metric.

OutputResult: Output the names of documents which are found in clusters with more than one element.

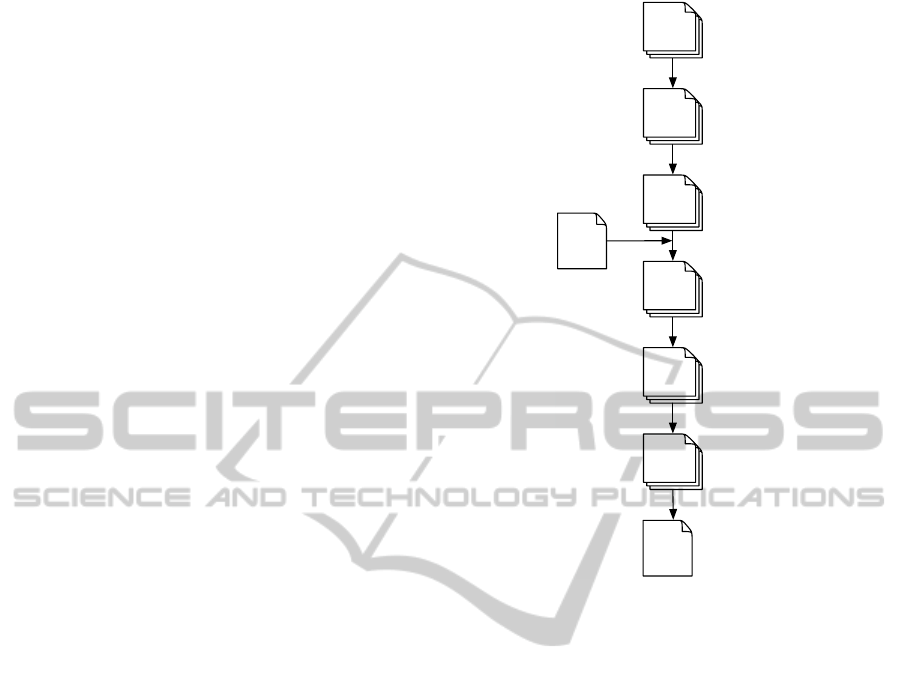

Each of the six MapReduce jobs produces an output file which depends on its input. For each document, these jobs must be invoked sequentially, as the output of one task is used as input of the next. Additionally, there is a configuration file containing a list of stop words, and some task outputs depend on this file’s content. These dependencies could be represented by a DAG, as shown in Figure 4, with vertices representing documents and jobs assigned to edges. The XML file describing this dataflow in the hamake language is shown as Listing 1.

Listing 1: hamakefile, describing process for detecting duplicate documents.

     1 <?xml version="1.0" encoding="UTF-8" ?>
     2 <project name="FindSimilarBooks">
     3
     4   <property name="lib" value="/lib/" />
     5
     6   <fileset id="input" path="/doc" mask="*.pdf" />
     7   <file id="output" path="/result.txt" />
     8
     9   <foreach name="ExtractText">
    10     <input>
    11       <include idref="input" />
    12     </input>
    13     <output>
    14       <file id="plainText" path="/txt/${foreach:filename}" />
    15     </output>
    16     <mapreduce jar="${lib}/hadoopJobs.job"
    17                main="TextExtractor">
    18       <parameter>
    19         <literal value="${foreach:path}" />
    20       </parameter>
    21       <parameter>
    22         <reference idref="plainText" />
    23       </parameter>
    24     </mapreduce>
    25   </foreach>
    26
    27   <foreach name="Tokenize">
    28     <input>
    29       <file id="plainText" path="/txt" />
    30     </input>
    31     <output>
    32       <file id="tokens" path="/tokens/${foreach:filename}" />
    33     </output>
    34     <mapreduce jar="${lib}/hadoopJobs.job"
    35                main="Tokenizer">
    36       ...
    37     </mapreduce>
    38   </foreach>
    39
    40   <foreach name="FilterStopWords">
    41     <input>
    42       <file id="stopWords" path="/stopwords.txt" />
    43       <file id="tokens" path="/tokens" />
    44     </input>
    45     <output>
    46       <file id="terms" path="/terms/${foreach:filename}" />
    47     </output>
    48     <mapreduce jar="${lib}/hadoopJobs.job"
    49                main="Tokenizer">
    50       ...
    51     </mapreduce>
    52   </foreach>
    53
    54   <foreach name="CalculateTF">
    55     <input>
    56       <file id="terms" path="/terms" />
    57     </input>
    58     <output>
    59       <file id="TFVector" path="/TF" />
    60     </output>
    61     <mapreduce jar="${dist}/hadoopJobs.job"
    62                main="CalculateTF">
    63       ...
    64     </mapreduce>
    65   </foreach>
    66
    67   <fold name="FindSimilar">
    68     <input>
    69       <file id="TFVector" path="/TF" />
    70     </input>
    71     <output>
    72       <include idref="clustersList" path="/clusters" />
    73     </output>
    74     <mapreduce jar="${lib}/hadoopJobs.job"
    75                main="Canopy">
    76       ...
    77     </mapreduce>
    78   </fold>
    79
    80   <fold name="OutputResult">
    81     <input>
    82       <file id="clustersList" path="/clusters" />
    83     </input>
    84     <output>
    85       <include idref="output" />
    86     </output>
    87     <mapreduce jar="${lib}/hadoopJobs.job"
    88                main="OutputSimilarBooks">
    89       ...
    90     </mapreduce>
    91   </fold>
    92 </project>

The first DTR (lines 9-25) converts a document from a native format such as PDF to plain text. The input of the DTR is a reference to the /doc folder, and the output is the /txt folder. The foreach DTR establishes one-to-one dependencies between files with identical names in these two folders. The Hadoop job which performs the actual text extraction is defined using the mapreduce element. It will be invoked by hamake for each unsatisfied dependency. The job takes two parameters, defined with parameter elements: a path to an original document as the input and a path to a file where the plain text version will be written. The remaining five DTRs are defined in a similar manner.

[Figure 4 diagram. Vertices: /doc, /txt, /tokens, stop words, /terms, /tf, /clusters, results. Edges (jobs): ExtractText, Tokenize, FilterStopWords, CalculateTF, FindSimilar, OutputResults.]

Figure 4: Directed acyclic graph of a data flow for duplicate document detection.

Hamake, when launched with this XML dataflow definition, will execute a graph reduction algorithm, as shown in Figure 1, and will find the first DTR to process. In our example, this is ExtractText. Hamake will first launch the corresponding Hadoop job and then execute the DTRs which depend on the output of this DTR, and so on until all output files are up to date. As a result of this data flow, a file named results.txt with a list of similar documents will be generated.

This data flow could be used for incremental processing. When new documents are added, hamake will refrain from running the following DTRs: ExtractText, Tokenize, FilterStopWords, and CalculateTF for previously processed documents. However, it will run those DTRs for newly added documents and then re-run FindSimilar and OutputResults.


If the list of stop words has been changed, hamake will re-run only FilterStopWords, CalculateTF, FindSimilar, and OutputResults.
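The stop-word scenario can be reproduced with a small propagation over the dataflow of Listing 1. The sketch below works at the fileset level only and is an illustration, not hamake's logic: the `DTRS` table is a hand-written summary of the listing (DTR names and paths as they appear there), and `dtrs_to_rerun` is a hypothetical helper.

```python
# (DTR name, input paths, output path), listed in topological order.
DTRS = [
    ("ExtractText", ["/doc"], "/txt"),
    ("Tokenize", ["/txt"], "/tokens"),
    ("FilterStopWords", ["/stopwords.txt", "/tokens"], "/terms"),
    ("CalculateTF", ["/terms"], "/TF"),
    ("FindSimilar", ["/TF"], "/clusters"),
    ("OutputResult", ["/clusters"], "/result.txt"),
]


def dtrs_to_rerun(changed_path):
    """Return the names of DTRs whose output is affected by a change to changed_path."""
    dirty = {changed_path}
    rerun = []
    for name, inputs, output in DTRS:
        if dirty.intersection(inputs):
            rerun.append(name)
            dirty.add(output)  # the change propagates downstream
    return rerun
```

Calling `dtrs_to_rerun("/stopwords.txt")` yields the last four DTRs and leaves ExtractText and Tokenize untouched. For foreach DTRs, the per-file timestamp check additionally limits the work to the files that actually changed, which this fileset-level sketch does not model.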

5 RELATED WORK

Several workflow engines exist for Hadoop, such as Oozie (Yahoo!, 2012), Azkaban (Linkedin, 2012), Cascading (Wensel, 2012), and Nova (Olston et al., 2011). Although all of these products could be used to solve similar problems, they differ significantly in design, philosophy, target user profile, and usage scenarios, limiting the usefulness of a simple, feature-wise comparison.

The most significant difference between these engines and hamake lies in the workflow vs. dataflow approach. All of them use the former, explicitly specifying dependencies between jobs. Hamake, in contrast, uses dependencies between datasets to derive workflow. Both approaches have their advantages, but for some problems, the dataflow representation as used by hamake is more natural.

Kangaroo (Zhang et al., 2011) uses a similar dataflow DAG processing model, but is not integrated with Hadoop.

6 FUTURE DIRECTIONS

One possible hamake improvement may be better integration with Hadoop schedulers. For example, if Capacity Scheduler or Fair Scheduler is used, it would be useful for hamake to take information about scheduler pools or queue capacity into account in its job scheduling algorithm.

More granular control over parallelism could be achieved if the hamake internal dependency graph for foreach DTRs contained individual files rather than just filesets. For example, consider a dataflow consisting of three filesets A, B, C, and two foreach DTRs: D₁, mapping A to B, and D₂, mapping B to C. File-level dependencies would allow some jobs from D₂ to run without waiting for all jobs in D₁ to complete.

Another potential area of future extension is the hamake dependency mechanism. The current implementation uses a fairly simple timestamp comparison to check whether a dependency is satisfied. This could be generalized, allowing the user to specify custom dependency check predicates, implemented either as plugins, scripts (in some embedded scripting languages), or external programs. This would allow for decisions based not only on file metadata, such as the timestamp, but also on its contents.
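As one hypothetical example of such a predicate, a content-based check could compare a digest of each input against the digest recorded when the DTR last ran. Nothing below exists in hamake; the function name, the in-memory dictionaries standing in for file contents and recorded digests, and the choice of SHA-256 are all assumptions made for illustration.

```python
import hashlib


def needs_rerun(contents, recorded_digests):
    """Content-based dependency check.

    contents: dict mapping input path -> current file bytes.
    recorded_digests: dict mapping input path -> SHA-256 hex digest seen at the last run.
    Returns True if any input is new or its content has changed.
    """
    for path, data in contents.items():
        if recorded_digests.get(path) != hashlib.sha256(data).hexdigest():
            return True
    return False
```

Unlike a timestamp comparison, such a predicate would ignore a file that was rewritten with identical content, at the cost of reading every input.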

Several hamake users have requested support for iterative computations with a termination condition. Possible use-cases include fixed-point computations and clustering or iterative regression algorithms. Presently, embedding this kind of algorithm into a hamake dataflow requires the use of the generations feature combined with external automation, which invokes hamake repeatedly until a certain exit condition is satisfied. Hamake users could certainly benefit from native support for this kind of dataflow.
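The external automation mentioned above amounts to a driver loop of roughly the following shape. This is a generic sketch: `run_once` stands in for whatever invokes hamake for one generation and `converged` for the user's exit condition; both, along with `iterate_until` and `max_iterations`, are hypothetical names, and no hamake command line is assumed.

```python
def iterate_until(run_once, converged, max_iterations=100):
    """Call run_once(i) for i = 1, 2, ... until converged(result) is true.

    Returns (iterations_used, last_result); raises if the limit is reached.
    """
    for i in range(1, max_iterations + 1):
        result = run_once(i)
        if converged(result):
            return i, result
    raise RuntimeError("no convergence after %d iterations" % max_iterations)
```

A fixed-point computation fits this pattern directly: each pass returns how much the result changed, and the loop stops once the change drops below a threshold.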

REFERENCES

Bialecki, A., Cafarella, M., Cutting, D., and O’Malley, O. (2005. Retrieved February 06, 2012). Hadoop: a framework for running applications on large clusters built of commodity hardware.

Dean, J. and Ghemawat, S. (2008). MapReduce: Simplified data processing on large clusters. Communications of the ACM, 51(1):107–114.

Gangadhar, M. (2010. Retrieved February 06, 2012). Benchmarking and optimizing hadoop. http://www.slideshare.net/ydn/hadoop-summit-2010-benchmarking-and-optimizing-hadoop.

Kahn, A. (1962). Topological sorting of large networks. Communications of the ACM, 5(11):558–562.

Linkedin (2010. Retrieved February 08, 2012). Azkaban: Simple hadoop workflow. http://sna-projects.com/azkaban/.

Manning, C., Raghavan, P., and Schütze, H. (2008). Introduction to information retrieval. Cambridge University Press.

McCallum, A., Nigam, K., and Ungar, L. H. (2000). Efficient Clustering of High-Dimensional Data Sets with Application to Reference Matching. KDD ’00.

Olston, C., Chiou, G., Chitnis, L., Liu, F., Han, Y., Larsson, M., Neumann, A., Rao, V., Sankarasubramanian, V., Seth, S., et al. (2011). Nova: continuous pig/hadoop workflows. In Proceedings of the 2011 international conference on Management of data, pages 1081–1090. ACM.

Orlov, V. and Bondar, A. (2011. Retrieved February 06, 2012). Hamake syntax reference. http://code.google.com/p/hamake/wiki/HamakeFileSyntaxReference.

Weil, K. (2010. Retrieved February 06, 2012). Hadoop at twitter. http://engineering.twitter.com/2010/04/hadoop-at-twitter.html.

Wensel, C. (2010. Retrieved February 08, 2012). Cascading. http://www.cascading.org/.

Yahoo! (2010. Retrieved February 08, 2012). Oozie: Workflow engine for hadoop. http://yahoo.github.com/oozie/.

Zhang, K., Chen, K., and Xue, W. (2011). Kangaroo: Reliable execution of scientific applications with dag programming model. In Parallel Processing Workshops (ICPPW), 2011 40th International Conference on, pages 327–334. IEEE.
