EVALUATION OF E-LEARNING TOOLS BASED ON A MULTI-CRITERIA DECISION MAKING

Eduardo Islas-Pérez, Yasmín Hernández-Pérez, Miguel Pérez-Ramírez, Carlos F. García-Hernández and Guillermo Rodriguez-Ortiz

Instituto de Investigaciones Eléctricas, Reforma 113 Col. Palmira, Cuernavaca, Morelos 62490, México

Keywords: E-learning, Evaluation Methodology, Learning Management Systems, Multi-Criteria Decision Making.

Abstract: This paper presents a benchmark of e-learning tools based on a set of criteria that capture useful and desirable characteristics of learning management systems. The evaluation follows a methodology that combines this set of criteria with several Multi-Criteria Decision Making methods to assess modern technologies applied in training and e-learning systems, and the final results show the evaluation from different perspectives. The criteria are grouped in a three-dimensional model according to their use and application in training processes. The proposed model organises the criteria along three axes according to their functional scope: the Management, the Technological and the Instructional axes. Applying this methodology, we evaluated different learning technologies and then compared them from different points of view. The objective of this work is to help e-learning users and developers decide which tools have the best features for developing a system for the management of resources, courses and learning objects.

1 INTRODUCTION

The aim of this work is to present the outcomes of a benchmark of e-learning technologies based on a proposed evaluation methodology and a three-dimensional (3D) model of criteria. The results, the evaluation methodology and the 3D model of criteria might help e-learning users and developers decide which tool has, or should have, the best features when choosing or developing a management system for instructional resources such as courses and learning objects. The 3D model and the methodology proposed in this paper are helpful not only to evaluate the applicability of each learning tool from a global point of view, but also to establish the ranking of each learning tool in every dimension (axis): Management (M), Technological (T) and Instructional (I), in every plane (MT, MI, TI) and in the three-dimensional space (MTI). These viewpoints help to determine whether or not a tool fulfils the requirements from a Management, Technological or Instructional point of view.

Although the literature offers many articles, books, internet services and guides for evaluating Learning Management System (LMS) packages (Brandon, 2006; Edutools, 2007), they do not use the approach presented in this paper, and where there is some similarity, the method is not described in as much detail as it is here. The evaluation methodology described here is easy to implement using office tools, and it can be adapted to evaluate other software products such as database management systems and virtual reality development environments (Islas et al., 2004).

A large number of LMS packages are available; more than 100 are mentioned in (Brandon Hall Research, 2009). The proposed methodology was used to evaluate only three commercial platforms (Blackboard, IBM Lotus and PeopleSoft) and five open source tools (Docebo, Dokeos, Joomla, Moodle and Sakai), since these LMSs are extensively used. We believe that this evaluation might help companies decide which tool fulfils their requirements for their e-learning and e-training activities (Horton and Norton, 2003).

Islas-Pérez E., Hernández-Pérez Y., Pérez-Ramírez M., García-Hernández C. F. and Rodriguez-Ortiz G.

EVALUATION OF E-LEARNING TOOLS BASED ON A MULTI-CRITERIA DECISION MAKING.

DOI: 10.5220/0003914003090312

In Proceedings of the 4th International Conference on Computer Supported Education (CSEDU-2012), pages 309-312

ISBN: 978-989-8565-06-8

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

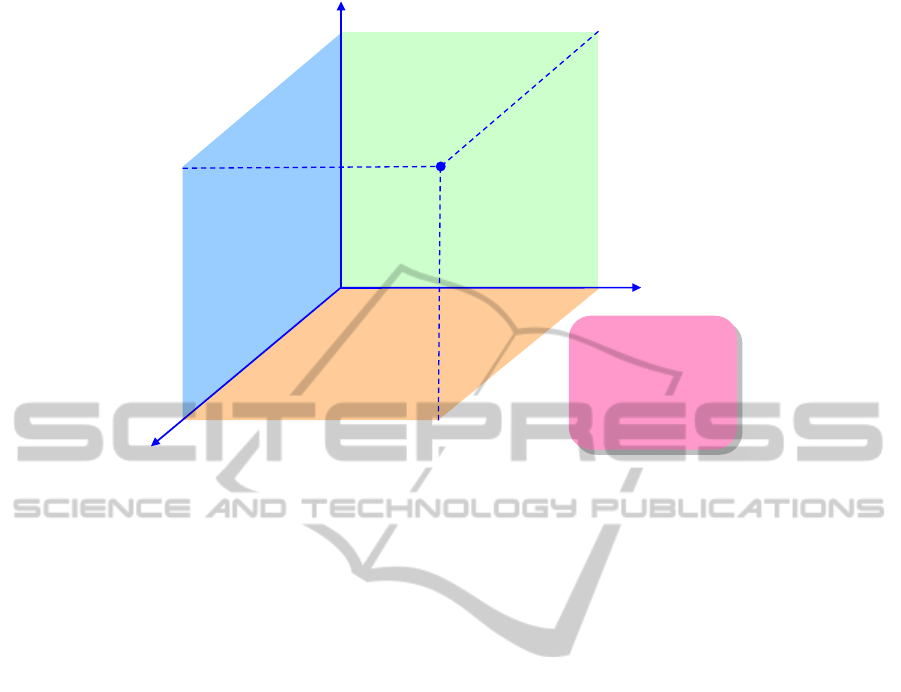

Figure 1: Three-dimensional model to evaluate modern learning and training systems.

2 EVALUATION METHODOLOGY

2.1 Three-Dimensional Model

The model proposed in this work is being used in our research group to analyze modern learning and training systems. It relates the three most important aspects involved in personnel training, which constitute the three axes of the 3D model: Management, Technological and Instructional. Different analyses, evaluations or studies can be made with the proposed 3D model. Every point in the model (see Fig 1) falls on one of the axes, in a plane or in the space of the 3D coordinate system, and represents an eligible capacity to be evaluated, observed or monitored.
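One way to make the regions of the 3D model concrete is to label each criterion with the set of axes it touches. The following is a minimal sketch under that assumption; the function name `region` and the naming scheme for regions are illustrative and do not come from the paper.

```python
from itertools import combinations

AXES = ("M", "T", "I")  # Management, Technological, Instructional

def region(axes):
    """Name the region of the 3D model touched by a set of axis letters."""
    key = "".join(a for a in AXES if a in axes)  # canonical M, T, I order
    if not key or len(key) != len(set(axes)):
        raise ValueError(f"unknown axes: {axes!r}")
    kind = {1: "axis", 2: "plane", 3: "space"}[len(key)]
    return f"{key} {kind}"

# The seven viewpoints: three axes, three planes and the full space.
VIEWPOINTS = [region(c) for r in (1, 2, 3) for c in combinations(AXES, r)]
print(VIEWPOINTS)
```

With such a labelling, the scores of a tool could be aggregated separately for each of the seven viewpoints as well as globally.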

2.2 Criteria and Weight Definition

The methodology is based on 50 criteria used to evaluate different technologies applied in modern training and learning systems. This evaluation methodology, customized with an appropriate set of criteria, was applied earlier to evaluate software and hardware tools for the development of virtual reality systems (Pérez et al., 2003). The criteria for e-learning tools are grouped in the 3D model described above according to their use and application in training and learning processes.

2.3 Evaluation Methods Definition

The objective of applying three Multi-Criteria Decision Making (MCDM) amalgamation methods is to compare the weighting methods and value functions in terms of their ease of use, appropriateness and validity (Bell et al., 1998; Chankong and Haimes, 1983; Hobbs and Meier, 1994; Stewart, 1992).

MCDM 1. Additive value function and non-hierarchical weight assessment.

$$\text{MAX}\; V(A_j) = \sum_{i=1}^{n} W_i \, V_i(X_{ij}) \qquad (1)$$

where:

$X_{ij}$ is the value of criterion $x_i$ for alternative $A_j$.

$V_i(X_{ij})$ is a single-criterion value function that converts the criterion into a measure of value or worth. These are often scaled from 0 to 1, with 1 being better. In this first method these values were not scaled.

$W_i$ is the weight for criterion $x_i$, representing its relative importance. These are often normalized so that $\sum_{i=1}^{n} W_i = 1$. In this first method the weights were not normalized; instead they were all assigned the same value of 1.

$n$ is the number of criteria.
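The first method can be illustrated with a minimal sketch. The tool names, the three criteria chosen and the 0 to 5 rating scale below are hypothetical and are not the data used in the evaluation; only the unit weights follow the description above.

```python
def additive_value(ratings, weights=None):
    """Equation (1): sum of W_i * V_i(X_ij) over all criteria for one tool."""
    if weights is None:
        weights = {c: 1 for c in ratings}  # method 1: every weight is 1
    return sum(weights[c] * v for c, v in ratings.items())

# Hypothetical ratings on an unscaled 0-5 scale (not the paper's data).
tools = {
    "Tool A": {"Student tracking": 5, "Open source": 0, "Wiki": 3},
    "Tool B": {"Student tracking": 3, "Open source": 5, "Wiki": 4},
}
scores = {name: additive_value(r) for name, r in tools.items()}
best = max(scores, key=scores.get)  # MAX: the higher total wins
print(scores, best)
```

Because every weight equals 1, the score of a tool is simply the sum of its ratings, so the method is easy to reproduce in a spreadsheet.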

Criteria shown in Figure 1, by region of the 3D model:

Management axis: Student tracking; Statistics; Massive load of users; Curriculum management; Orientation/help.

Technological axis: Import/export XML data; Enable/disable information; Required browser; Server software; Database requirements; Open source; Software version; Accessibility compliance; Operation on mobile devices; Integration with other tools; Integration with applications; Wiki.

Instructional axis: there are no criteria for the instructional axis.

MT plane: Offline courses/synchronization; Calendar/progress review; Cost of licences; Hosted services; Remote laboratory; Online journal/notes; Student portfolio; Company profile; Registration; File exchange; Real-time chat; Video services; Whiteboard; Teamwork; Communities; E-mail; Authentication.

MI plane: Course management.

TI plane: Instructor helpdesk; Course templates; Compliance with standards; Online search; Online grading tools; Discussion forums; Bookmarks; Self-evaluation; Virtual library.

MTI space: Automated testing and scoring; Instructional design tools; Customized look and feel; Course authorization; Content sharing/reuse; Alerts; Optional extras.

MCDM 2. Additive value function and hierarchical weight assessment.

$$\text{MAX}\; V(A_j) = \sum_{i=1}^{n} W_i \, V_i(X_{ij}) \qquad (2)$$

where:

$V_i(X_{ij})$: in this second method these values were scaled from 0 to 1 using the following expression: $V_i(X_{ij}) = 1 + \ldots$

$W_i$: in this second method the hierarchical weight assessment was used.

The MAX in (1) and (2) indicates that higher values are better.
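The second method can be sketched as follows. Two assumptions are made here and should be read as such: the scaling is a plain min-max mapping onto [0, 1], since the paper's own scaling expression is not reproduced, and the hierarchical weight assessment is taken to mean that each criterion's final weight is its weight within a group multiplied by the weight of that group. The hierarchy, weights and ratings are hypothetical.

```python
def scale(x, lo, hi):
    """Map a raw rating onto [0, 1]; min-max scaling is an assumption here."""
    return (x - lo) / (hi - lo)

def hierarchical_weights(group_weights, criteria_weights):
    """Multiply each criterion's within-group weight by its group's weight."""
    return {
        crit: group_weights[group] * w
        for group, members in criteria_weights.items()
        for crit, w in members.items()
    }

# Hypothetical two-level hierarchy; the weights at each level sum to 1.
group_weights = {"Management": 0.5, "Technological": 0.5}
criteria_weights = {
    "Management": {"Student tracking": 0.75, "Statistics": 0.25},
    "Technological": {"Open source": 1.0},
}
weights = hierarchical_weights(group_weights, criteria_weights)

raw = {"Student tracking": 4, "Statistics": 2, "Open source": 5}  # 0-5 scale
score = sum(weights[c] * scale(x, 0, 5) for c, x in raw.items())
print(weights, score)
```

Assigning weights level by level keeps each judgement small: the evaluator compares a handful of groups, then a handful of criteria inside each group, instead of weighing all criteria against each other at once.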

MCDM 3. Goal programming and hierarchical weight assessment.

Goal programming focuses on the achievement of goals, as opposed to additive value functions, which emphasize trading off criteria.

$$\text{MIN}\; D(A_j) = \sum_{i=1}^{n} \left| W_i \left( G_i - V_i(X_{ij}) \right) \right|^{p} \qquad (3)$$

where:

$V_i(X_{ij})$: in this third method these values were scaled from 0 to 1.

$W_i$: in this third method the hierarchical weight assessment was also used.

$G_i$ is the goal for criterion $x_i$, defined as acceptable, desirable or ideal. In goal programming, the $V_i$ are usually linear functions of $X_{ij}$.

$p$ is the exponent applied to the absolute value of the weighted difference between the goal and the actual value. In this third method $p = 1$ was used, which is often called the "city block" metric.

MIN in (3) indicates that smaller values are better.
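The third method can be sketched in the same style. The weights, goals and scaled values below are hypothetical, and setting every goal to the ideal value 1.0 is an assumption made only for the illustration.

```python
def goal_distance(values, weights, goals, p=1):
    """Equation (3): sum of |W_i * (G_i - V_i(X_ij))| ** p over all criteria."""
    return sum(abs(weights[c] * (goals[c] - v)) ** p for c, v in values.items())

# Hypothetical scaled values in [0, 1]; every goal is set to the ideal 1.0.
weights = {"Student tracking": 0.5, "Open source": 0.5}
goals = {"Student tracking": 1.0, "Open source": 1.0}
tools = {
    "Tool A": {"Student tracking": 1.0, "Open source": 0.5},
    "Tool B": {"Student tracking": 0.5, "Open source": 0.0},
}
distances = {name: goal_distance(v, weights, goals) for name, v in tools.items()}
best = min(distances, key=distances.get)  # MIN: the smaller distance wins
print(distances, best)
```

With p = 1 the distance is a weighted sum of shortfalls from the goals, so a tool that meets every goal scores 0.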

3.3 LMSs Evaluation Results

This section shows the results obtained by applying the three amalgamation methods described above. The systems evaluated were Blackboard v9.0, Docebo v4.0, Dokeos v2.0, IBM Lotus v8.5.3, Joomla v2.5, Moodle v1.9.9+, PeopleSoft v9.0 and Sakai v2.8.

3.3.1 Results Obtained

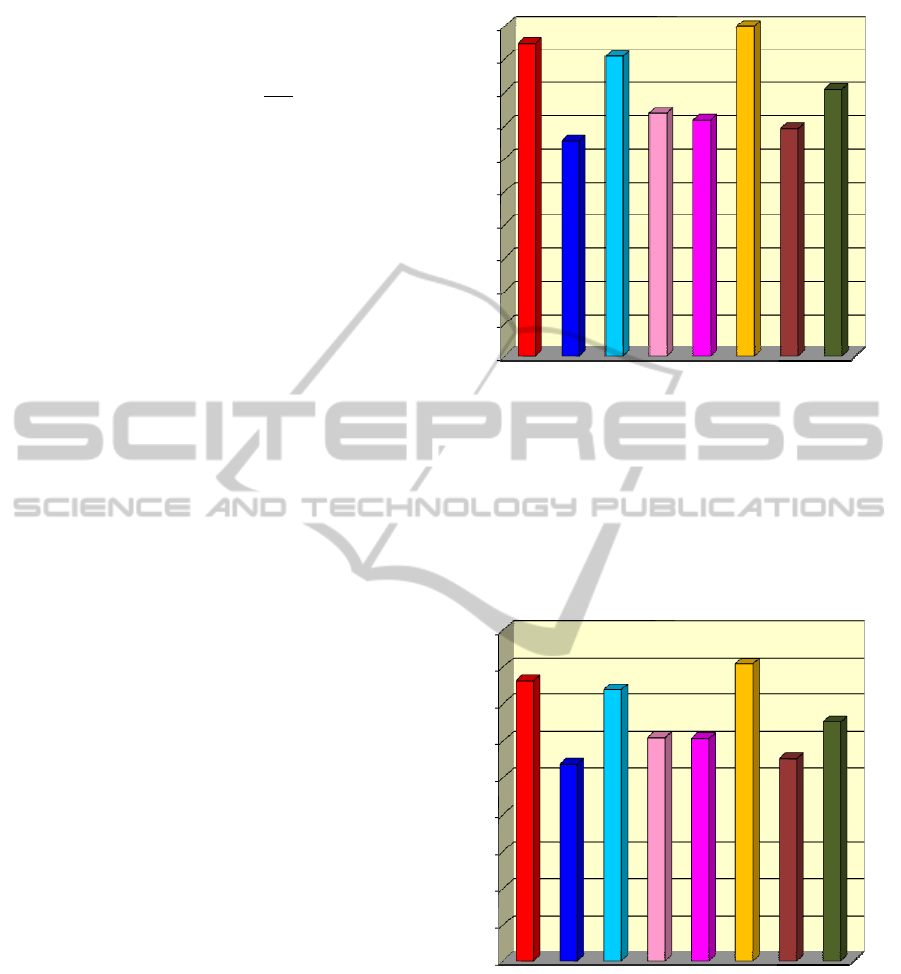

The results for the first MCDM method are depicted

in Fig 2, which shows the ranking and global results

for each software tool. These global results include

all the criteria considered applying the additive value

function without scaling the value function Vi(Xij)

and using non-hierarchical weight assessment. In

this method, the best evaluated tool was Moodle

followed by Blackboard.

In Fig 3 the results obtained for the second

Figure 2: Total results obtained applying the first MCDM

method for the systems evaluated.

MCDM method are shown applying the additive

value function with the scaling of the value

functions from 0 to 1 and using hierarchical weight

assessment. In the first and second method greater

values mean better e-learning tools. In this case as in

the former the best tools considering all the

characteristics was Moodle and Blackboard.

Figure 3: Total results obtained applying the second

MCDM method.

Finally in Fig 4 the results obtained for the third

MCDM method are shown, in this third

amalgamation method we applied goal programming

and hierarchical weight assessment, in this method

smaller values mean which e-learning tools are

better. Also in this evaluation, Moodle and

Blackboard were selected as the best LMSs.

Values plotted in Figures 2 and 3 (greater values are better):

| Tool | Fig 2, first MCDM method | Fig 3, second MCDM method |
|---|---|---|
| Blackboard | 237 | 76.4 |
| Docebo | 163 | 53.8 |
| Dokeos | 227 | 74 |
| IBM Lotus | 184 | 61 |
| Joomla | 179 | 60.8 |
| Moodle | 253 | 81 |
| PeopleSoft | 173 | 55.2 |
| Sakai | 202 | 65.2 |

Figure 4: Total results obtained applying the third MCDM method.

4 CONCLUSIONS

When MCDM methods are applied to support a decision, Hobbs and Meier (1994) recommend applying more than one approach, because different methods offer different results to compare. In this case, goal programming and additive value functions are suggested; in addition, the results must be shown to decision makers, who can mull over the differences or confirm the similarities. In evaluating the results of different methods, the potential for biases should be kept in mind. The extra effort is not large, and the potential benefits, in terms of enhanced confidence and a more reliable evaluation process, are worth it. However, the results shown in this paper display the same ranking of choices regardless of the method used, in contrast to the findings of Hobbs and Meier (1994).
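The agreement between the three rankings can be checked directly from the values plotted in Figures 2, 3 and 4. The sketch below copies those values; the helper name `ranking` is illustrative.

```python
TOOLS = ["Blackboard", "Docebo", "Dokeos", "IBM Lotus",
         "Joomla", "Moodle", "PeopleSoft", "Sakai"]
METHOD_1 = [237, 163, 227, 184, 179, 253, 173, 202]       # Fig 2, higher is better
METHOD_2 = [76.4, 53.8, 74, 61, 60.8, 81, 55.2, 65.2]     # Fig 3, higher is better
METHOD_3 = [5.1, 27.7, 7.5, 20.5, 20.7, 0.5, 26.3, 16.3]  # Fig 4, lower is better

def ranking(scores, higher_is_better=True):
    """Return the tool names ordered from best to worst."""
    pairs = sorted(zip(scores, TOOLS), reverse=higher_is_better)
    return [name for _, name in pairs]

r1 = ranking(METHOD_1)
r2 = ranking(METHOD_2)
r3 = ranking(METHOD_3, higher_is_better=False)
print(r1)
print(r1 == r2 == r3)
```

All three orderings coincide, with Moodle first and Blackboard second. In the reported figures, each tool's scores for the second and third methods add up to 81.5, which would be expected if every goal were set to the same ideal value; that reading is an inference from the numbers, not something stated in the paper.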

The model can be used to analyze a broad variety of e-learning technologies. This paper addresses synchronous and asynchronous web-based environments in which learning content or courseware is served from a web server and delivered on demand to the learner's workstation. Learners can thus make progress by themselves. The courseware may comprise any combination of text, images, animation, sounds and movies. It is interactive and is often combined with some type of assessment.

One of the main benefits of evaluating several e-learning tools, both from a general perspective and from different points of view, is that the personnel involved in evaluating and selecting an appropriate e-learning tool are now informed about this type of technology. The decision can be made taking into account management, technological and instructional characteristics. Furthermore, they can draw up an action plan and choose the best path to follow in order to integrate this technology into their learning and training processes.

REFERENCES

Bell M. L., Hobbs B. F., Elliott E. M., Ellis H. and Robinson Z., 1998. An Evaluation of Multicriteria Decision Making Methods in Integrated Assessment of Climate Policy, in Lecture Notes in Economics and Mathematical Systems. Research and Practice in Multiple Criteria Decision Making, Virginia USA, pp. 229-237.

Brandon B., 2006. 282 Tips on the Selection of an LMS or LCMS, The eLearning Guild.

Brandon Hall Research, 2009. Learning Technology Products.

Chankong V. and Haimes Y., 1983. Multiobjective Decision Making: Theory and Methods, North Holland, Amsterdam.

Edutools, Jan 2007. CMS Home, Edutools, [Online]. Available: http://www.edutools.info/course/

Hobbs B. F. and Meier P. M., November 1994. Multicriteria Methods for Resource Planning: An experimental comparison, IEEE Transactions on Power Systems, vol. 9 (4), pp. 1811-1817.

Horton W. and Norton K., 2003. E-learning tools and technologies, Wiley Publishing, Inc., Indianapolis USA.

Islas E., Zabre E. and Pérez M., Apr-Jun 2004. Evaluación de herramientas de hardware y software para el desarrollo de aplicaciones de realidad virtual. Boletín IIE, vol. 28, pp. 61-67.

Pérez M., Zabre E. and Islas E., 2003. Prospectiva y ruta tecnológica para el uso de la tecnología de realidad virtual en los procesos de la CFE, Instituto de Investigaciones Eléctricas, Cuernavaca México, Technical Report IIE/GSI/022/2003.

Stewart T. J., 1992. A Critical Survey on the Status of Multiple Criteria Decision Making Theory and Practice, OMEGA The International Journal of Management Science, vol. 20 (5/6), pp. 569-586.

Values plotted in Figure 4 (third MCDM method; smaller values are better):

| Tool | Fig 4, third MCDM method |
|---|---|
| Blackboard | 5.1 |
| Docebo | 27.7 |
| Dokeos | 7.5 |
| IBM Lotus | 20.5 |
| Joomla | 20.7 |
| Moodle | 0.5 |
| PeopleSoft | 26.3 |
| Sakai | 16.3 |