EXPERIENCES WITH WEB-BASED PEER ASSESSMENT OF COURSEWORK

Hans Hüttel and Kurt Nørmark

Department of Computer Science, Aalborg University, Selma Lagerlöfs Vej 300, Aalborg, Denmark

Keywords:

Peer Assessment.

Abstract:

We describe experiments with a web-based system for peer assessment in a course on automata theory and program semantics. The paper describes the web-based assessment system and our experiences from its first round of use in 2011. We find a correlation between a high level of activity in the peer assessment system and a high grade at the written exam.

1 INTRODUCTION

In this paper we describe an experimental system whose aim is to address some of the challenges that we have encountered when teaching undergraduate courses.

1.1 Challenges to University Teaching

The proportion of school leavers who enter higher education is steadily increasing, and this has led to the emergence of a larger group of students with different attitudes towards learning.

Biggs and Tang (Biggs and Tang, 2007) distinguish between the dedicated student, ‘Susan’, and the less dedicated student, ‘Robert’. ‘Susan’ is interested in her subject and what she learns is important to her, while ‘Robert’ is less interested in his subject, puts in notably less effort and focuses on qualifying for a job. Traditionally, university teaching has focused exclusively on ‘Susan’; an important challenge is now to cater to the needs of ‘Robert’ as well, while keeping the focus on the needs of the subject and of learning.

Observations suggest that much of what is taught in a programme is forgotten by students relatively soon. A survey by Anderson et al. (Anderson et al., 1998) documents that a majority of the undergraduate mathematics students surveyed were able to recall little or none of what they had been taught in their first-year courses.

Our own experience is similar. We come across students at master's level asking us for help because they need to brush up their knowledge of central topics from courses that they passed earlier.

Another challenge is that, because of the increasing student intake, lecturers need to spend more time evaluating students but do not have more resources at their disposal.

Lauvås and Jakobsen (Lauvås and Jakobsen, 2002) point out both of these problems and argue that they arise because ‘Robert’ chooses an instrumentalist approach to learning whose primary goal is to pass the exam. ‘Robert’ does not prepare for course sessions but tries to cram the material during the last few days before the exam. This is a hindrance to deep learning.

The challenge, then, is to teach in such a way that some ‘Robert’ students end up becoming ‘Susans’, that learning is improved and deep learning is encouraged, and that the teacher does not need more resources in the process.

1.2 Formative Evaluation through Peer Assessment

Peer assessment is a strategy that has received a great deal of attention in education research over the past 15 years. For a recent overview, see the survey paper by Topping (Topping, 2009). It has often been argued that peer assessment can improve the reflective processes of learning since, through the assessment process, students have to reflect on how their fellow students are approaching the task of learning.

One often distinguishes between

• summative evaluation, whose aim is to determine whether the learning goals of a teaching activity have been reached, and

• formative evaluation, whose aim is to guide students so that they know how their learning is proceeding and what they should do to improve it. It is through formative evaluation that reflection can happen.

Hüttel H. and Nørmark K. EXPERIENCES WITH WEB-BASED PEER ASSESSMENT OF COURSEWORK.

DOI: 10.5220/0003918401130118

In Proceedings of the 4th International Conference on Computer Supported Education (CSEDU-2012), pages 113-118

ISBN: 978-989-8565-07-5

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

Summative evaluation often takes the form of a formal examination, whereas formative evaluation is part of the learning process. One can therefore seek to incorporate formative evaluation into teaching activities, and this is where peer assessment comes in.

Lauvås and Jakobsen (Lauvås and Jakobsen, 2002) claim that if formative evaluation is thorough, and if one insists that the students make a substantial effort throughout the course, the students will learn much more and will remember the material much better and for a longer period of time. The exam itself then becomes a different experience for students, since they are examined on material with which they are already well acquainted and which they feel that they master. Lauvås uses the analogy of athletes who practise regularly and are told by their coach how to improve their performance.

Lauvås and Maugesten have experimented with methods for restructuring teaching via peer assessment (Maugesten and Lauvås, 2004). The activities were laid out so that all students would explicitly need to adopt a ‘Susan’-style learning strategy.

1.3 Existing Solutions

There has already been a fair amount of work on automating the administrative aspect of the peer assessment strategy.

Michael de Raadt has created a peer review module (de Raadt, n.d.) that can be used with Moodle (Moodle, n.d.). One limitation of this module is that peer reviews take the form of a series of multiple-choice questions for which boxes are to be ticked; other forms of assessment are not possible.

In a series of papers, Joy and Sitthiworachart have focused on how to use web-based peer assessment to assist deep learning in programming classes (Sitthiworachart and Joy, 2004; Ward et al., 2004). Among other things, this work asks students to comment on specific aspects of solutions to programming exercises. Their results indicate that web-based peer assessment can indeed assist in the process of deep learning.

1.4 Our Setting

We have developed an experimental system for peer assessment and used it in the course Syntax and Semantics at our university. Our goal has been to gain experience in how to increase student effort and improve learning through web-based methods for peer assessment. We have based the design of our system on previous, largely paper-based strategies for peer assessment.

2 PREVIOUS EXPERIENCE

From 2004 to 2007, the first author experimented with methods of peer assessment in another theory course, on computability and complexity theory.

A questionnaire-based survey showed that, in the past, a student would spend only 30 minutes preparing for a course session. While peer assessment dramatically increased student effort, the solution was entirely paper-based, which required a significant effort from everyone involved. The incentive for students was that participating students would have a reduced syllabus at the oral exam.

The assessment expected of students consisted of reviewing the solutions to textbook-style problems solved by their fellow students. The problem here turned out to be greatly varying levels of understanding: students who had difficulty solving a problem were often unable to provide meaningful reviews. Quite often, our conversations with students during commenting sessions revealed that they had not read the relevant pages of the course textbook.

Finally, some ‘Robert’ students consistently handed in ‘non-solutions’ in which they simply wrote that they could not solve the problems. These same students would also write very brief reviews of solutions. As they had handed in solutions and participated in the commenting process, thereby following the formal procedures, it was hard to argue that they should not have a reduced exam syllabus, even though they had done little actual work.

3 THE CURRENT COURSE

The course Syntax and Semantics is taught in the 4th semester of the undergraduate programmes in Computer Science and in Software Technology. The course covers formal language theory and programming language theory.

The course consists of 15 four-hour sessions, each divided into a 90-minute lecture and a 2-hour practical session. In the same semester, there were three other courses of 3 ECTS each and an 18 ECTS student project. As the students collaborate on projects in groups of 5 to 7 students and are assigned a group room, it is common practice for them also to work on course practicals in these project groups.

For the Computer Science students, the exam in Syntax and Semantics is a 3-hour written exam. Students following the Software Technology programme did not take the written exam. These students had the course assessed as part of their semester project, which involves several other topics unrelated to the course syllabus.

4 THE APPROACH

The course consisted of a variety of teaching activities, including lectures and problem solving. In this section, however, we concentrate on the new development, namely the approach that we have followed for peer assessment and how it led to the design of our system. First, the overall approach to questioning, answering, and reviewing is described, including the incentives provided by the approach. Next, the workflow of a single session, called an answering session, is explained. Finally, we describe the implementation.

4.1 The Peer Assessment Approach

Our peer assessment strategy is based on the following principles.

• Students should read the course text and reflect on its content, so the peer assessment exercises asked a series of questions directly related to the text.

• Each student who answers the questions becomes a reviewer of another student. As a peer, the student reviewer should amend and correct the answers to the text-related questions instead of only trying to figure out how the answer is incorrect.

• Students should be encouraged to participate. The incentive was that their answers to text questions would be available for them to use at the written exam. No other textual aids would be allowed at the exam. The collection of answers was kept in the form of a portfolio for each student. The exam questions were the same for all students, regardless of whether they had participated in peer assessment.

• Students should use LaTeX for their answers to text-related questions, as this is a system that they are familiar with and that is well suited for technical writing. A further advantage has been that the web-based implementation could easily handle the text files used by LaTeX. To this end, the students were supplied with a standard preamble that they had to use.

Some examples of text-related questions (translated from Danish) can be found in Figure 1.

(From the session about regular expressions) Let R be a regular expression. Is it always the case that the regular expression R ∪ ε denotes the same language as R? If the answer is yes, then explain why. If the answer is no, then provide a counterexample.

(From the session about scope rules described using structural operational semantics) Here is a big-step transition rule. Explain which kind of scope rules are captured by this particular transition rule.

(CALL-1)

$$\frac{env_V,\ env'_P \vdash \langle S, sto \rangle \to sto'}{env_V,\ env_P \vdash \langle \mathbf{call}\ p, sto \rangle \to sto'} \qquad \text{where } env_P\ p = (S, env'_P)$$

Figure 1: Examples of text-related questions used (translated from Danish).

4.2 The Workflow of an Answering Session

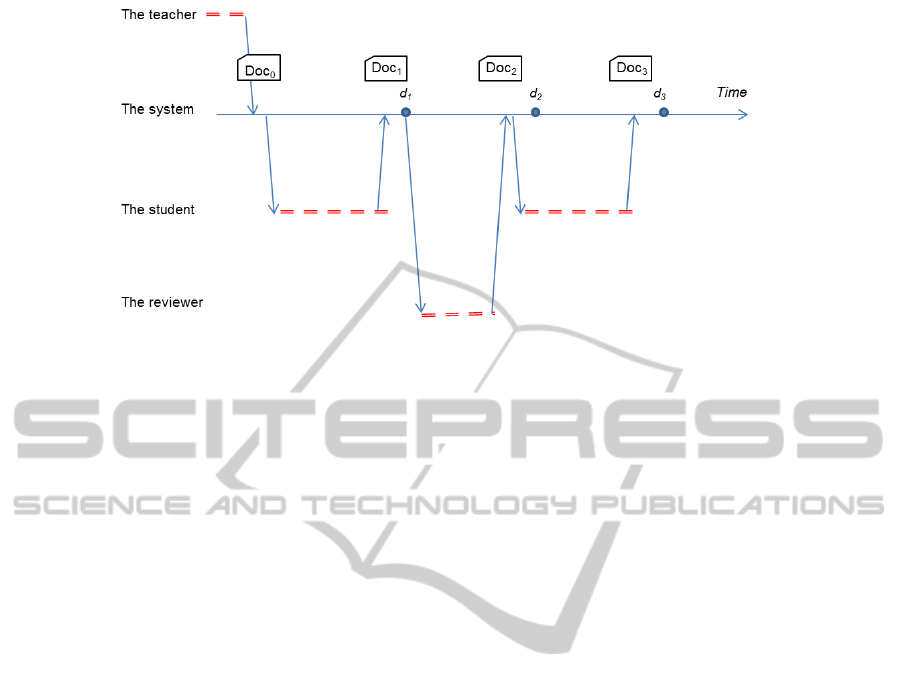

Each of the 15 answering sessions of the course had the following workflow:

1. After the lecture, the teacher would publish a LaTeX file Doc₀ with questions about the associated part of the text on the course webpage.

2. No later than the morning of the session, at deadline d₁, the student would answer the questions found in Doc₀ and hand in the answers in the form of Doc₁, an amended version of Doc₀.

3. Just after deadline d₁, the Doc₁ files would be distributed among the participating students for commenting and reviewing. Each reviewer compiles the Doc₁ assigned to him or her, reads it and amends or modifies the answers. This leads to a new version of the LaTeX file, Doc₂.

4. Finally, the students would get back Doc₂ and could either use it as their own final version or revert to the one that they originally wrote. The final outcome of the session is a file Doc₃, which is added to the portfolio of the student.

This workflow is summarized in Figure 2.


Figure 2: The workflow supported by the system, showing the documents that flow between the teacher, author, and reviewer together with the deadlines that apply.
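As a rough illustration of the timing rules in this workflow, the following Python sketch (not the authors' Scheme code) computes which uploads a student may make at a given moment. The function name, the deadline d₃ for the final version, and the condition that Doc₃ follows a received review are assumptions made for the sketch; the text itself names only d₁ and d₂.

```python
from datetime import datetime


def allowed_uploads(now: datetime, d1: datetime, d2: datetime, d3: datetime,
                    review_received: bool) -> set[str]:
    """Return the documents a student may upload at time `now` in one session.

    Doc1 (own answers) is accepted until d1, Doc2 (review of a peer's Doc1)
    between d1 and d2, and Doc3 (final version) after d1 and until d3, but
    only once a review of the student's Doc1 has arrived. The deadline d3 is
    a hypothetical addition; the paper names only d1 and d2.
    """
    if not d1 < d2 < d3:
        raise ValueError("deadlines must satisfy d1 < d2 < d3")
    allowed: set[str] = set()
    if now <= d1:
        allowed.add("Doc1")
    elif now <= d2:
        allowed.add("Doc2")
    if d1 < now <= d3 and review_received:
        allowed.add("Doc3")
    return allowed
```

With this model, a student whose answers have already been reviewed may, shortly after d₁, upload both a review of a peer and a final version, while nothing at all is accepted after d₃.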

4.3 The Implementation

The role of the system was to support the workflow described above and to make it easy to manage the portfolio of each student.

The system allows each student to log into a status page for each lecture in the course using a valid username and password. Until deadline d₁, the status page allows the student to upload the LaTeX file Doc₁. After the deadline, the system appoints a reviewer for each student who has submitted a Doc₁ in the current round of questions. Reviewers are allocated by computing a permutation of the students who have submitted a Doc₁ for this session. If possible, the permutation is formed so that the reviewer assigned to a given student belongs to a different project group from the original author.
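One way to realize this allocation is a cyclic shift over the submitters, ordered so that members of the same project group are contiguous. The Python sketch below illustrates that idea under stated assumptions; it is not necessarily how the Scheme implementation computes its permutation, and `assign_reviewers` and its input format are hypothetical. Shifting by the size of the largest group guarantees that no author is reviewed by a group member, provided that group holds at most half of the submitters; otherwise the sketch falls back to a shift of one, which only rules out self-review.

```python
import random
from collections import defaultdict


def assign_reviewers(group_of: dict[str, int], seed=None) -> dict[str, str]:
    """Return a mapping author -> reviewer that is a permutation of the submitters.

    `group_of` maps each student who submitted Doc1 in the session to his or
    her project group (a hypothetical input format).
    """
    rng = random.Random(seed)
    # Collect the members of each group; shuffle both the group order and the
    # members so that different sessions yield different pairings.
    groups: dict[int, list[str]] = defaultdict(list)
    for student, group in group_of.items():
        groups[group].append(student)
    group_ids = list(groups)
    rng.shuffle(group_ids)
    order: list[str] = []
    for g in group_ids:
        members = groups[g]
        rng.shuffle(members)
        order.extend(members)

    n = len(order)
    if n < 2:
        return {}  # a lone submitter cannot be reviewed by anyone
    largest = max(len(members) for members in groups.values())
    # Each group occupies a contiguous block of at most `largest` positions,
    # so positions i and (i + largest) % n fall in different groups whenever
    # 2 * largest <= n. Otherwise fall back to a shift of 1 (no self-review).
    shift = largest if 2 * largest <= n else 1
    return {order[i]: order[(i + shift) % n] for i in range(n)}
```

Because a cyclic shift by `shift` (with 0 < `shift` < n) is a bijection on the submitters, every submitting student both receives exactly one review and writes exactly one.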

Once the reviewers have been appointed, the status page of student S shows the reviewer of S, R(S), and the student whom S will review. In addition, R(S) gets access to the material to be reviewed via his or her status page, together with a new deadline d₂. When R(S) submits a revised Doc₂, the original author S receives an email from the system, and the review is made available on the status page of S. If and when a review is received, S can submit a final version Doc₃, which allows S to elaborate on the original contribution and/or the changes made by the reviewer R(S).

The contribution to the portfolio of student S in the current round becomes Doc₃ if it exists, else Doc₂ if it exists, else Doc₁ if it exists, else the empty document.

The system provides the course teacher with an overview page: a table that shows the status of every student in every answering session so far. From the overview page, the teacher can trigger the appointment of reviewers (the construction of the permutation mentioned above). The overview page also gives the teacher access to the status page of each student and to the individual LaTeX documents submitted by authors and reviewers.

At any time, a student can construct an aggregated portfolio of answers to all questions. Behind the scenes, the web system processes a LaTeX file assembled from the relevant pieces submitted by the author and by the reviewers of the author. If processing fails (due to errors in the LaTeX document), the student is expected to resolve the problem using the LaTeX log file, which is also made available.
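The aggregation step can be pictured as follows. The Python sketch assumes, which the paper does not state, that every contribution is a complete LaTeX file built on the standard preamble and that the portfolio places the bodies of all contributions under a single copy of that preamble; `aggregate_portfolio`, `document_body` and the per-session headings are hypothetical. Compiling the result and exposing the LaTeX log file is a separate step that is not shown.

```python
BEGIN = r"\begin{document}"
END = r"\end{document}"


def document_body(source: str) -> str:
    """Return the text between \\begin{document} and \\end{document}."""
    start = source.find(BEGIN)
    end = source.rfind(END)
    if start == -1 or end == -1 or end < start:
        raise ValueError("not a complete LaTeX document")
    return source[start + len(BEGIN):end].strip()


def aggregate_portfolio(preamble: str, contributions: list[str]) -> str:
    """Build one LaTeX file from the per-session contributions.

    `preamble` is the standard preamble handed out to the students; empty
    contributions (sessions without any document) are skipped.
    """
    parts = [preamble.rstrip(), BEGIN]
    for session, source in enumerate(contributions, start=1):
        if not source:
            continue
        parts.append(f"\\section*{{Session {session}}}")
        parts.append(document_body(source))
    parts.append(END)
    return "\n".join(parts) + "\n"
```

A design of this kind only works if students leave the shared preamble untouched, since any macro a student defines in a private preamble is lost when the bodies are merged.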

The administrative setup of the system was mainly done programmatically, via Lisp text files. The system needs information about all participating students (full name, email address, encrypted password, and group number). In addition, the system needs information about the deadlines for each lecture, as illustrated in Figure 2. The system relies on a list of all d₁ deadlines (cf. Figure 2); the other deadlines are calculated using fixed offsets. In a future version of the system, it would be attractive to have a web interface for both student administration and the setup of deadlines.
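A sketch of this deadline computation, in Python rather than the Lisp configuration files that the system actually reads. The offset values are invented for illustration, since the paper only says that the later deadlines are fixed offsets from d₁; the final-version deadline d₃ is likewise an assumption.

```python
from datetime import datetime, timedelta

# Hypothetical offsets: the paper states only that the deadlines after d1
# are derived from d1 by fixed offsets, not what those offsets are.
REVIEW_OFFSET = timedelta(days=2)  # d2 = d1 + REVIEW_OFFSET
FINAL_OFFSET = timedelta(days=4)   # d3 = d1 + FINAL_OFFSET


def session_deadlines(d1_list: list[datetime]) -> list[tuple[datetime, datetime, datetime]]:
    """Expand the configured d1 deadlines into (d1, d2, d3) for each session."""
    return [(d1, d1 + REVIEW_OFFSET, d1 + FINAL_OFFSET) for d1 in d1_list]
```

Keeping only d₁ in the configuration means that moving a lecture requires a single change, and the later deadlines follow automatically.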

The current system is implemented (by the second author) in R5RS Scheme (Kelsey et al., 1998), using LAML (Nørmark, 2005) for CGI programming. Administrative data are stored in files, one file per student per answering session.

5 EXPERIENCES

In this section we assess our experiences of using the system in our teaching.


Session outcome                              Number of sessions
No answer at all                                    136
Only first answer                                   413
First answer and review                             213
First and second answer, no review                   67
First answer, review, and second answer             164
Only second answer                                   27
Total                                              1020

Figure 3: Distribution of the answering sessions.

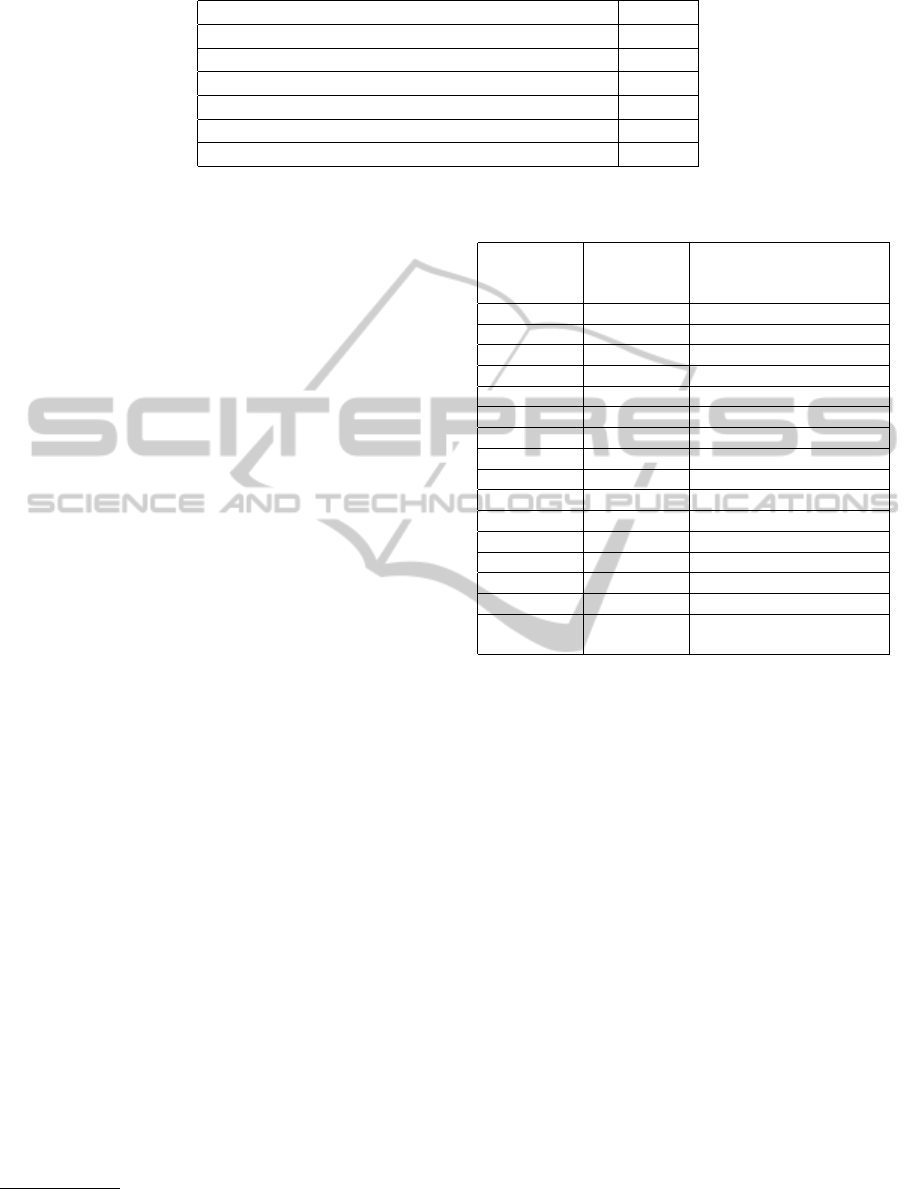

5.1 A Quantitative Analysis

We have performed a quantitative analysis that juxtaposes the data recorded by the web system with the grades obtained by the students who attended the written exam. 68 students participated in 15 rounds of answering questions, giving a total of 68 × 15 = 1020 potential answering sessions, each of which follows the workflow shown in Figure 2. Figure 3 shows a classification of these 1020 sessions.

Of the 68 students, 26 were Computer Science students and therefore took the written exam, where they received a grade on the Danish 7-step scale.¹ Figure 4 lists, for each number of answers (ranging from 0 to 15), the number of students who submitted that many answers. In addition, it lists the grades obtained by the Computer Science students, who were required to take the exam. For instance, 15 students submitted 14 answers; 6 of these took the exam and obtained the grades -3, -3, 4, 4, 7, and 10. One immediately notices that the majority of the students who attended the written exam submitted answers in almost all of the 15 rounds. This is less pronounced for the Software Technology students, who did not take the exam.
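The paper does not state which statistic or test lies behind the correlation reported in the conclusions. Purely as an illustration of how the Figure 4 data can be used for such an analysis, the Python sketch below computes a Pearson coefficient between the number of answers and the grade for the 26 exam participants. Treating the 7-step grades as numbers is itself a simplifying assumption, and no significance test is performed, so the result should not be read as a reproduction of the authors' analysis.

```python
from math import sqrt

# Figure 4, Computer Science students only: number of answers submitted
# mapped to the grades obtained (00 and 02 written as 0 and 2).
GRADES_BY_ANSWERS = {
    0: [0],
    7: [0],
    10: [4],
    11: [7],
    14: [-3, -3, 4, 4, 7, 10],
    15: [0, 0, 2, 4, 4, 4, 7, 7, 7, 7, 7, 10, 10, 10, 10, 12],
}


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# One (answers, grade) pair per exam participant, in matching order.
answers = [a for a, gs in GRADES_BY_ANSWERS.items() for _ in gs]
grades = [g for gs in GRADES_BY_ANSWERS.values() for g in gs]
r = pearson(answers, grades)
```

A rank-based coefficient such as Spearman's would respect the ordinal nature of the grading scale better; Pearson is used here only because it is the simplest to write out.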

The activity of each student can be measured by the total number of answers provided (0 to 15) or, in a more fine-grained way, by the number of answers (0, 1, or 2) and reviews (0 or 1) submitted in each answering session.

On the other hand, Figure 3 reveals that as many as 57% of the answers provided were not followed by a review: of the 1020 − 136 = 884 sessions with at least one answer, 413 + 67 + 27 = 507 involved no review. This indicates that the students did not see peer assessment as a necessary component of the teaching activity.
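The fine-grained activity measure and the 57% figure can both be checked against Figure 3. In the Python sketch below, each session is encoded as three flags (first answer, review, second answer); this encoding and the helper `classify` are ours, chosen to mirror the row labels of Figure 3. A session counts as answered if it has a first or a second answer.

```python
def classify(first: bool, review: bool, second: bool) -> str:
    """Map one session (one student, one round) to its Figure 3 row."""
    if not first:
        return "Only second answer" if second else "No answer at all"
    if second:
        return ("First answer, review, and second answer" if review
                else "First and second answer, no review")
    return "First answer and review" if review else "Only first answer"


# Figure 3 counts keyed by (first answer, review, second answer).
FIGURE_3 = {
    (False, False, False): 136,
    (True, False, False): 413,
    (True, True, False): 213,
    (True, False, True): 67,
    (True, True, True): 164,
    (False, False, True): 27,
}

# Sessions with at least one answer, and those among them without a review.
answered = sum(n for (first, _, second), n in FIGURE_3.items() if first or second)
unreviewed = sum(n for (first, review, second), n in FIGURE_3.items()
                 if (first or second) and not review)
```

Here `unreviewed / answered` is 507 / 884, or about 0.574, which is the 57% quoted above.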

¹ The Danish 7-step grading scale is compatible with the ECTS scale: 12 = A, 10 = B, 7 = C, 4 = D, 02 = E, 00 = Fx, -3 = F. The grade 02 is the lowest passing grade.

Number of   Number of   Grades obtained
answers     students    (Computer Science students only)
 0           1          00
 1           0          none
 2           1          none
 3           0          none
 4           0          none
 5           1          none
 6           0          none
 7           3          00
 8           1          none
 9           2          none
10           2          4
11           3          7
12           4          none
13           3          none
14          15          -3 -3 4 4 7 10
15          32          00 00 02 4 4 4 7 7 7 7 7 10 10 10 10 12

Figure 4: Exam results.

5.2 A Qualitative Analysis

By using the system and interacting with the students, we have made some observations of a more qualitative nature.

Unfortunately, it turned out that, once again, some students spent more effort trying to circumvent the system than engaging in the learning process. As already noted, some students uploaded answers but did not comment on the answers provided by others, which caused a considerable amount of frustration. Moreover, a couple of students discovered that the implementation accidentally allowed them to submit Doc₃ without submitting Doc₁ (this is the cause of the row ‘Only second answer’ in Figure 3). This meant that they could avoid the first deadline and also avoid being involved in the commenting round.
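A guard of the following kind would have closed this loophole. The Python sketch is hypothetical (the class and exception names are ours) and shows only the check itself: a final version Doc₃ is refused unless the same student submitted a Doc₁ in that session, which also means that the student took part in the reviewer allocation.

```python
from dataclasses import dataclass
from typing import Optional


class SubmissionError(Exception):
    """Raised when an upload does not fit the workflow of a session."""


@dataclass
class SessionRecord:
    """Documents of one student in one answering session (hypothetical model)."""
    doc1: Optional[str] = None
    doc3: Optional[str] = None

    def submit_final(self, source: str) -> None:
        # The check missing from the 2011 system: a final version (Doc3) is
        # only accepted from a student who handed in a first answer (Doc1).
        if self.doc1 is None:
            raise SubmissionError("Doc3 requires an earlier Doc1 in this session")
        self.doc3 = source
```

Performing the check at upload time, rather than when portfolios are generated, lets the student see the refusal immediately on the status page.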

Other evidence suggests that students were not always careful in producing their answers. Before the exam, all portfolios were generated and compiled by the system. In some cases, students had systematically modified the standard preamble, making it incompatible with the script for generating portfolios. In several other cases, LaTeX was unable to generate a portfolio because of simple LaTeX errors, suggesting that the author had never bothered to compile the LaTeX file before submitting it.
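Part of this could be caught at upload time. The hypothetical Python check below compares the beginning of a submitted file with the standard preamble, ignoring blank lines and trailing spaces. It catches modified preambles only; detecting the compilation errors mentioned above would additionally require running LaTeX on the file, which the sketch does not attempt.

```python
def normalized_lines(text: str) -> list[str]:
    """Lines with trailing whitespace removed and blank lines dropped."""
    return [line.rstrip() for line in text.splitlines() if line.strip()]


def uses_standard_preamble(source: str, preamble: str) -> bool:
    """Check that a submitted LaTeX file begins with the standard preamble.

    Blank lines and trailing whitespace are ignored; any other change to the
    preamble lines makes the check fail.
    """
    expected = normalized_lines(preamble)
    actual = normalized_lines(source)
    return actual[:len(expected)] == expected
```

The check is deliberately strict: lines added after the standard preamble are tolerated, but any edit to the shared lines themselves is rejected.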

Strict adherence to all deadlines turned out to be difficult to achieve. Illness and other unforeseen circumstances led us to introduce a period during which students could submit missing contributions. 8% of all contributions were received during that period; the remaining 92% were received on schedule, relative to the deadlines of the answering sessions.

Feedback from some of the students pointed out that the many deadlines that had to be met were a considerable burden. The work thereby imposed on the students took time and effort away from other courses and from the group project. Students also said that they wanted to be able to revise their contributions even after the last deadline of an answering session. Taken together, the students' impression was that the approach was demanding.

6 CONCLUSIONS AND IDEAS FOR FURTHER WORK

We have described a web-based system for peer assessment. Our analysis of the exam results indicates that there is a statistically significant correlation between activity in the course and the result obtained at the examination.

The connection between coursework and the exam that follows is a powerful incentive for the students to answer the questions and to build up a portfolio that helps them during the exam. On the other hand, the fact that more than half of the submitted answers were not reviewed indicates that an important aspect of the approach, namely peer assessment, did not work out as planned. To improve the students' motivation to participate in the peer review work, we are considering changing the rules for the next round of use. Our suggestion is that a student can only have a set of answers to text questions added to the portfolio if the student has also submitted a review in the same session.
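Under the proposed rule, the selection of a session's contribution gains one extra condition. The Python sketch below keeps the Doc₃/Doc₂/Doc₁ precedence of Section 4.3 and returns the empty document when the student wrote no review in that session; the function and parameter names are hypothetical.

```python
from typing import Optional


def gated_contribution(doc1: Optional[str], doc2: Optional[str],
                       doc3: Optional[str], wrote_review: bool) -> str:
    """Portfolio contribution under the rule proposed for the next round.

    The answers count only if the student also submitted a review in the
    same session; otherwise the session adds the empty document.
    """
    if not wrote_review:
        return ""
    for doc in (doc3, doc2, doc1):
        if doc is not None:
            return doc
    return ""
```

Tying the incentive to the review, rather than only to the answer, makes skipping the commenting round directly cost the student part of the exam portfolio.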

Developing the administrative side of our system is a topic for further work. Our system was simple in that respect: unforeseen events at the student level were dealt with on an ad hoc basis. More importantly, the submitted files were never checked for LaTeX errors. In a future version of the system, day-to-day administrative tasks and LaTeX issues should be supported directly by the web-based system. One approach is to integrate our ideas into an existing web-based system for handling teaching activities. As Aalborg University is currently switching its web-based course administration interface to Moodle (Moodle, n.d.), the ideas of our system will be integrated into a plugin for Moodle.

REFERENCES

Anderson, J., Austin, K., Barnard, T., and Jagger, J. (1998). Do third-year mathematics undergraduates know what they are supposed to know? International Journal of Mathematical Education in Science and Technology, 29(3):401–420.

Biggs, J. and Tang, C. (2007). Teaching for Quality Learning at University (Society for Research Into Higher Education). Open University Press, 3rd edition.

de Raadt, M. (n.d.). Michael de Raadt's Peer Review Assignment Type. http://www.sci.usq.edu.au/staff/deraadt/peerReview.html.

Kelsey, R., Clinger, W., and Rees, J. (1998). Revised⁵ report on the algorithmic language Scheme. Higher-Order and Symbolic Computation, 11(1):7–105.

Lauvås, P. and Jakobsen, A. (2002). Exit eksamen – eller? Cappelen. In Norwegian.

Maugesten, M. and Lauvås, P. (2004). Bedre læring av matematikk ved enkle midler? Rapport fra et utviklingsprosjekt. Technical Report 6, Høgskolen i Østfold. In Norwegian.

Moodle (n.d.). Moodle. http://moodle.org.

Nørmark, K. (2005). Web programming in Scheme with LAML. Journal of Functional Programming, 15(1):53–65.

Sitthiworachart, J. and Joy, M. (2004). Effective peer assessment for learning computer programming. In 9th Annual Conference on the Innovation and Technology in Computer Science Education (ITiCSE 2004), pages 122–126.

Topping, K. J. (2009). Peer assessment. Theory Into Practice, 48(1):20–27.

Ward, A., Sitthiworachart, J., and Joy, M. (2004). Aspects of web-based peer assessment systems for teaching and learning computer programming. In 3rd IASTED International Conference on Web-based Education (WBE 2004), pages 292–297.
