ANALYSIS OF THE BENEFITS OF COLLECTIVE LEARNING THROUGH QUESTION ANSWERING

Iasmina-Leila Ermalai¹, Josep Lluís de la Rosa² and Araceli Moreno³

¹ Faculty of Electronics and Telecommunications, “Politehnica” University of Timisoara, Timisoara, Romania

² Agents Research LAB, Institute of Informatics and Applications, University of Girona, E17071 Girona, Spain

³ Universidad Autónoma de Tamaulipas, Facultad de Ingeniería, Campus Tampico - Madero, Victoria, Tamaulipas, México

Keywords: Q&A Learning, Peer-to-peer Learning, Collaborative Learning, Social Learning, Intelligent Agents.

Abstract: Online collaborative learning between peers seems a viable complement to traditional learning, since the input no longer comes from only one person, the tutor, but from a number of people with different levels of competence. Furthermore, an increasing number of people nowadays turn to social networks when they need to find answers, for reasons such as trust, response time and effort. Thus, social networks at times behave similarly to online collaborative learning networks. This paper presents a model of Questions & Answers (Q&A) learning in which students both ask and answer questions, as a method to increase and reinforce knowledge.

1 INTRODUCTION

Recent surveys, notably Chi (2009), show that people turn to social networks when they need information or seek answers to subjective or open questions. The main enablers are trust (Q&A sites provide answers from strangers, while social networks provide answers from people you know), response time (social networks are faster than Q&A sites), effort, personalization, and social awareness (Morris et al., 2010). People often resort to social networks because they find it easier to formulate a full question than to repeatedly search for the right keywords, especially when the answers come from friends whose trustworthiness and expertise the questioner already knows.

We claim that Questions & Answers (Q&A) is a suitable model for collaborative learning (CL). Glasser (1986) argued that students remember more information when they are actively engaged in learning as a social act: we learn more by collaborating and communicating within a group. According to Glasser’s figures, we remember 10% of what we read, 20% of what we hear, 30% of what we see, 50% of what we both hear and see, 70% of what we discuss with others, 80% of what we experience personally and 95% of what we teach others.

An interesting new approach, Self Organized Learning Environments (SOLE) (Mitra and Dangwal, 2010), can be empowered by online social networks, mobile applications and social currencies. It builds on social constructivism for computer-supported collaborative learning (CSCL). Mitra’s earlier hole-in-the-wall experiments (Mitra, 2003) showed that groups of children given shared digital resources seem able to learn without adult supervision.

Students learn best when they actively construct their own understanding through social interaction with peers. The instructor’s responsibility is to facilitate the students’ learning process around particular content. This method of learning from peers is also known as Reciprocal Peer Tutoring (RPT) (Allen and Boraks, 1978) or adaptive collaborative learning support (ACLS) (Walker et al., 2009).

2 SIMULATIONS OF COLLABORATIVE LEARNING

The model of Walker et al. (2009) encourages students to solve their own problems by offering hints, but it also gives them the alternative of asking a peer tutor. This general context led us to propose a peer Q&A simulation of CSCL and to compare it with a traditional tutor Q&A system. In peer Q&A systems, students answer each other’s questions, a process supervised and facilitated by tutors. For the initial model we drew inspiration from Moreno et al. (2009): completing Frequently Asked Questions (FAQ) as a sort of Wikipedian approach to collaborative learning on a subject.

Ermalai I., Lluís de la Rosa J. and Moreno A. ANALYSIS OF THE BENEFITS OF COLLECTIVE LEARNING THROUGH QUESTION ANSWERING.
DOI: 10.5220/0003919503310335
In Proceedings of the 4th International Conference on Computer Supported Education (CSEDU-2012), pages 331-335
ISBN: 978-989-8565-06-8
Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

Each student initially has all his classmates in his contact list. Later on, he can add whomever he chooses, from parents to friends outside school. The model is similar to Facebook (or any social network) in the sense that a user can post a question on his wall or send an email to a list/group of friends (by means of the extinct Quora). Such a question is visible to his network friends, who can answer it if they have the knowledge and the availability to do so. We chose these approaches to avoid spamming everybody in the network list. If a friend does not know the answer but is willing to help, he can share the question on his own wall so that his friends can see it. The difference from Facebook is that, if a friend of a friend responds to the question, both the initial asker and the helping friend receive the answer. This method also helps to increase the knowledge of the mediator, the friend.
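The posting and sharing rule above can be illustrated with a small in-memory sketch. It is only an illustration under our own assumptions: the class `Network`, its methods and the inbox structure are hypothetical names, not part of any existing platform API or of the simulation used in this paper. It also assumes that every friend who shared a question receives the answer, which generalises the single-mediator case described in the text.

```python
from collections import defaultdict


class Network:
    """Hypothetical in-memory model of the wall/share mechanism."""

    def __init__(self):
        self.friends = defaultdict(set)    # user -> direct contacts
        self.visible = defaultdict(set)    # question id -> users who can see it
        self.mediators = defaultdict(set)  # question id -> friends who shared it
        self.askers = {}                   # question id -> original asker
        self.inbox = defaultdict(list)     # user -> answers delivered to him

    def befriend(self, a, b):
        self.friends[a].add(b)
        self.friends[b].add(a)

    def post(self, qid, asker):
        # Posting on one's own wall reaches direct friends only (no spamming).
        self.askers[qid] = asker
        self.visible[qid] |= self.friends[asker]

    def share(self, qid, friend):
        # A friend who cannot answer re-posts the question on his own wall.
        if friend not in self.visible[qid]:
            raise ValueError("cannot share a question you cannot see")
        self.mediators[qid].add(friend)
        self.visible[qid] |= self.friends[friend]

    def answer(self, qid, answerer, text):
        if answerer not in self.visible[qid]:
            raise ValueError("question not visible to this user")
        # Unlike Facebook, the answer reaches the asker and every friend who
        # shared the question, so the mediators learn as well.
        recipients = {self.askers[qid]} | self.mediators[qid]
        for user in recipients:
            self.inbox[user].append((qid, answerer, text))
        return recipients


net = Network()
net.befriend("ana", "bob")
net.befriend("bob", "carla")
net.post("q1", "ana")    # only bob sees q1
net.share("q1", "bob")   # now carla, a friend of a friend, sees it too
delivered_to = net.answer("q1", "carla", "an answer")
```

Here `delivered_to` is `{"ana", "bob"}`: the asker and the mediating friend both receive Carla's answer, while nobody outside the two walls was contacted.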

Every student entering the system has an LCV (Learner Competences Vector), which covers various domains of the user’s knowledge (referred to below as subjects) together with a level of competence for each of them. To rate the answerer’s competence in each subject, we drew inspiration from Bloom’s taxonomy of educational goals (Bloom and Krathwohl, 1956): competences range between 1 and 6, namely 1 - knowledge, 2 - comprehension, 3 - application, 4 - analysis, 5 - synthesis and 6 - evaluation. We introduced the value 0 to represent forgetting (the user knew something about that domain, but no longer does). The LCV initially contains no competences (Ø), and competence values are built up from the user’s answers. For example, after a few answers about subjects a, f and h, the LCV could be L = {a4, f2, h3}, meaning competence of analysis in subject a, of comprehension in subject f and of application in subject h. After a period of time in which a user does not answer any question in subject a, that competence decreases by a factor. In our simulations, the initial LCV values (subjects and competences) were randomly generated.
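A minimal sketch of the LCV as a mapping from subjects to Bloom levels, reproducing the example L = {a4, f2, h3}. The function names are hypothetical, and the integer decay step with a floor at 0 is our illustrative assumption; the text only says that competence "decreases by a factor".

```python
# Bloom's levels as used for the LCV; 0 marks a forgotten competence.
BLOOM_LEVELS = {
    0: "forgotten",
    1: "knowledge",
    2: "comprehension",
    3: "application",
    4: "analysis",
    5: "synthesis",
    6: "evaluation",
}


def describe(lcv: dict[str, int]) -> list[str]:
    """Turn an LCV such as {'a': 4} into readable competences."""
    return [f"{BLOOM_LEVELS[level]} in subject {subject}"
            for subject, level in sorted(lcv.items())]


def decay(lcv: dict[str, int], subject: str, step: int = 1) -> dict[str, int]:
    """Lower one subject's competence after a period without activity.

    The integer step and the floor at 0 are illustrative assumptions.
    """
    lcv[subject] = max(0, lcv[subject] - step)
    return lcv


lcv: dict[str, int] = {}               # the LCV starts empty (Ø)
lcv.update({"a": 4, "f": 2, "h": 3})   # L = {a4, f2, h3}
print(describe(lcv))
# ['analysis in subject a', 'comprehension in subject f', 'application in subject h']
```

A dictionary keeps subjects the student has never touched out of the vector entirely, which matches an LCV that starts empty and grows with answers.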

2.1 Assumptions and Measures

Drawing inspiration from Pous et al. (1997), we consider that a set of pedagogical inputs can change the state of the students, namely their LCV. Besides the traditional input system, in which the teacher provides the input that is supposed to change the pedagogical value of each student, our model introduces a student/peer input system, where:

• A = {A_i} is the set of students in a class.
• K = {a, b, ..., z} is the set of subjects k_j.
• c ∈ {0, 1, ..., 6} are the competence levels.
• L_i is the LCV of each student A_i.
• C is the curriculum, i.e. the pedagogical goals in terms of LCV for a class of students; for example, C = {a6, b5, c6, d4} means that subject ‘a’ should be achieved at level 6, subject ‘b’ at the lower level 5, etc.
• q_i is the increase/reinforcement factor. It is calculated from the Q&A of each student A_i and should increase his competence level c in a subject k_j.
• α is the forgetting factor. When the competence in a subject drops to 0, the student still recognises the subject: he is aware he knew something about it, but no longer remembers it. For every n weeks in which a pupil neither asks nor answers any question about a subject, his competence decreases by a factor d_f.

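The goal is to bring the average L_i as close as possible to the curriculum C (completeness) after a time frame of 12-15 weeks (a semester):

$$\lim_{t \to T} \operatorname{avg}_{\forall A_i \in A} \big( L_i(t) \big) = C \qquad (1)$$

where T denotes the end of the semester.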
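The completeness goal (1), bringing the class average LCV close to the curriculum C, can be checked numerically with a short sketch. It assumes, as our own interpretation, that the average is taken subject by subject over all students, that a subject missing from a student's LCV counts as level 0, and that completeness holds when no subject falls short of its target; the function names are hypothetical.

```python
def class_average(lcvs: list[dict[str, float]]) -> dict[str, float]:
    """Average each subject's competence over all students (missing = 0)."""
    subjects = {s for lcv in lcvs for s in lcv}
    n = len(lcvs)
    return {s: sum(lcv.get(s, 0) for lcv in lcvs) / n for s in subjects}


def completeness_gap(lcvs: list[dict[str, float]],
                     curriculum: dict[str, float]) -> dict[str, float]:
    """Per-subject shortfall of the class average w.r.t. the curriculum C."""
    avg = class_average(lcvs)
    return {s: max(0.0, target - avg.get(s, 0.0))
            for s, target in curriculum.items()}


C = {"a": 6, "b": 5, "c": 6, "d": 4}          # curriculum example from the text
students = [{"a": 6, "b": 5, "c": 6, "d": 4},  # hypothetical class of two
            {"a": 5, "b": 5, "c": 6, "d": 4}]
gap = completeness_gap(students, C)
complete = all(v == 0 for v in gap.values())
```

For this hypothetical class, `gap` is `{'a': 0.5, 'b': 0.0, 'c': 0.0, 'd': 0.0}`, so completeness is not yet reached because of subject a.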

If the model behaves as that of Moreno et al. (2009) did, it should show that CL reaches completeness (1) faster than the traditional tutor-based system. The premises are that learning can be achieved by receiving answers to questions and reinforced by answering questions. Every time a student asks or answers a question, his competence level increases by a factor q_i. However, we consider that he learns only when receiving answers from a peer with a higher competence level. We also assume that a large difference between the asker’s and the answerer’s competence is not good for learning, as the answer could be too complex, thus eliminating the peer tutoring advantage.

The model’s mechanism seeks to avoid spamming network friends. This is achieved by posting the question on the wall (as in Facebook) or by sending an email to a close group of friends. Communication by email could be implemented with the Asknext protocol (Trias et al., 2010). The teacher stimulates the community of students by suggesting appropriate questions. He inspects the unanswered questions and decides whether to answer them himself or to invite other students to answer. In the future he will also be able to correct answers; this requires upgrading the model so that it can distinguish between good and wrong answers. Friends will also be able to rate the answers, which will in turn be used to update the social network.

2.2 Experiments

Let us consider a class of 8 students who, together with the teacher, take part in question answering. The questions relate to one subject of the curriculum. The time frame for reaching the goals set in the curriculum is 12 weeks (one semester).

The student’s learning curve describes the amount of knowledge he acquires and the speed with which he acquires it. It depends on his native abilities and also on the effort he puts in. Native abilities would be captured by a stochastic model; in our approach, which is deterministic, we only took effort into consideration. Effort is the frequency with which a student answers and asks questions. Asking questions is not viewed as a sign of laziness (not wanting to learn or to solve problems by himself), but as a sign of conscious, sustained and constant work. Accordingly, each student has a questions’ vector (the questions asked over the 12 weeks), an answers’ matrix (the answers given each week) and an evolution vector (L_i). Bandwidth is the number of questions one can answer. In this model the professor’s bandwidth is considered to be infinite.
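The per-student data described above can be held in a small record. This is a sketch under our own assumptions: the class and field names are hypothetical, the answers' matrix is modelled as one list of answered-question ids per week, and effort is reduced to a simple count of questions asked plus answers given, which is one possible reading of "frequency".

```python
from dataclasses import dataclass, field

WEEKS = 12  # one semester, as in the experiments


@dataclass
class StudentRecord:
    """Hypothetical container for the per-student simulation data."""
    name: str
    # questions' vector: number of questions asked in each week
    questions: list[int] = field(default_factory=lambda: [0] * WEEKS)
    # answers' matrix: answers[w] holds the ids of questions answered in week w
    answers: list[list[str]] = field(
        default_factory=lambda: [[] for _ in range(WEEKS)])
    # evolution vector L_i: competence at the end of each simulated week
    evolution: list[float] = field(default_factory=list)

    def effort(self) -> int:
        """Effort as a count of questions asked plus answers given."""
        return sum(self.questions) + sum(len(week) for week in self.answers)


# The professor can answer any number of questions in this model.
TEACHER_BANDWIDTH = float("inf")

s = StudentRecord("A1")
s.questions[0] = 2          # two questions asked in week 1
s.answers[0].append("q7")   # one answer given in week 1
```

Using `default_factory` gives every student his own lists, so filling one student's matrix never leaks into another's.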

The social aspect (reorganizing the network’s hierarchy according to whom one trusts most, based on the answers received) is left for future improvements.

The students’ evolution was simulated over a period of 12 weeks, during which the following two activities were rewarded with points:
• receiving answers to asked questions;
• answering questions asked by different agents.

The answer factor q_i of student A_i is:

$$q_i = f(I_c, t, \gamma) \qquad (2)$$

where:
• I_c = C_c - a_c is the competence index; C_c is the curriculum target competence and a_c is the initial average competence of the class;
• t is the number of weeks allocated to reach C_c from a_c;
• γ is the number of questions a pupil has to answer each week (established by the tutor).

We calculated q_i with the following formula:

$$q_i = \frac{I_c}{t \cdot \gamma} \qquad (3)$$
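Equation (3) spreads the competence gap I_c evenly over the t weeks and the γ answers required per week. The sketch below evaluates it; the target level 6 and initial class average 1.2 are hypothetical values, chosen so that with t = 12 and γ = 4 the result matches the 0.1 points per answer used in the experiments.

```python
def answer_factor(target: float, initial_avg: float,
                  weeks: int, per_week: int) -> float:
    """q_i = I_c / (t * gamma), where I_c = C_c - a_c."""
    if weeks <= 0 or per_week <= 0:
        raise ValueError("t and gamma must be positive")
    competence_index = target - initial_avg   # I_c
    return competence_index / (weeks * per_week)


# Hypothetical class: target level 6, initial class average 1.2,
# t = 12 weeks and gamma = 4 answers per week.
q = answer_factor(6, 1.2, 12, 4)
print(round(q, 3))  # 0.1
```

The result is rounded for display because binary floating point gives a value a hair below 0.1.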

Each pupil has to answer at least 4 questions per week; every time a pupil answers a question asked by a peer, he earns 0.1 competence points, so if he reaches the established target of 4 answers, he gains 0.4 points per week. If he gives no answer in a week, his competence is decreased by the same weekly amount, called the forgetting factor (d_f = 0.4). For each answer received, the student is considered to acquire knowledge worth 0.1 points.
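The weekly scoring rule above can be written as a single update function. This is a sketch, not the authors' simulator: the names are hypothetical, and flooring competence at 0 (the "forgotten" level) is our assumption, since the text does not say what happens below zero.

```python
ANSWER_GAIN = 0.1    # points per answer given to a peer
RECEIVED_GAIN = 0.1  # points per answer received
FORGETTING = 0.4     # d_f: weekly loss when no answer is given


def weekly_update(competence: float, answers_given: int,
                  answers_received: int) -> float:
    """Apply one week of the collaborative-learning scoring rule."""
    if answers_given == 0:
        competence -= FORGETTING
    else:
        competence += ANSWER_GAIN * answers_given
    competence += RECEIVED_GAIN * answers_received
    return max(0.0, competence)  # flooring at 0 ("forgotten") is an assumption


# A hypothetical semester: the weekly target of 4 answers is met every week
# except week 6, and one answer is received per week.
given = [4, 4, 4, 4, 4, 0, 4, 4, 4, 4, 4, 4]
level = 0.5
for week_answers in given:
    level = weekly_update(level, week_answers, 1)
```

Eleven active weeks add 0.5 points each, and the idle week costs 0.4 while still gaining 0.1 from the received answer, so `level` ends at about 5.7.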

The classical approach implies professors

consistently answering to pupils’ questions. The

forgetting factor in the classical approach is not

taken into account as students are supposed to learn

based on the traditional input system – the tutor.

Considering the ideal case that students would ask

one question/week and that every answer would

change their knowledge level, a simple simulation

for 8 students, generated the evolution of the class’s

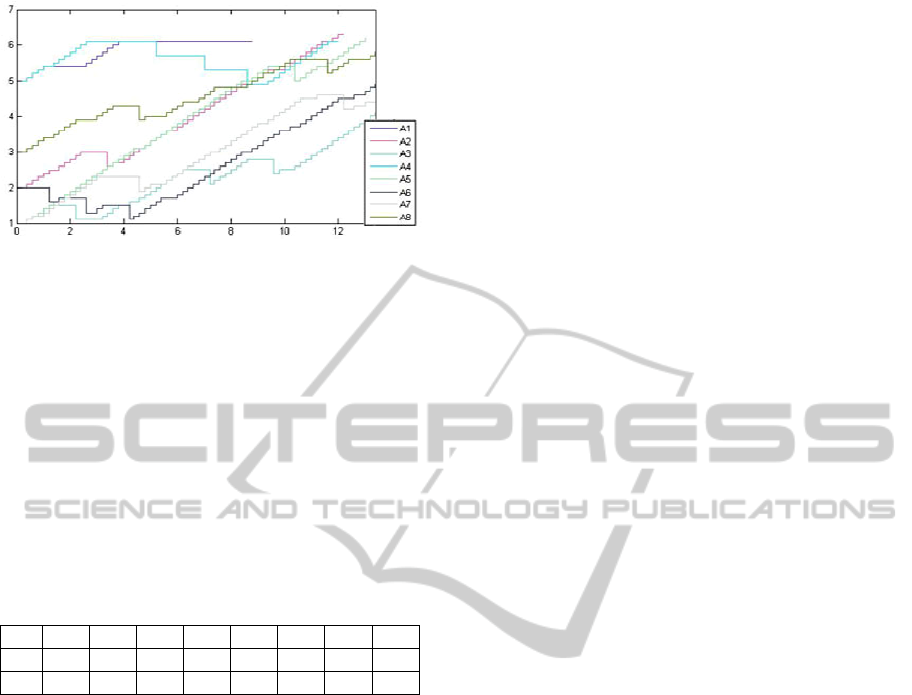

competences, as presented in Figure 1.
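The ideal classical setting can be sketched as follows. The tutor's bandwidth is infinite, so every question gets an answer, and no forgetting is applied. Reusing the 0.1 points per received answer from the collaborative model is our assumption for illustration; the function name is hypothetical.

```python
def classical_semester(start: float, questions_per_week: list[int],
                       gain_per_answer: float = 0.1) -> list[float]:
    """Evolution vector for the classical model: the tutor answers everything.

    Infinite tutor bandwidth means every question asked is answered,
    and no forgetting factor is applied.
    """
    evolution = []
    competence = start
    for asked in questions_per_week:
        competence += gain_per_answer * asked
        evolution.append(competence)
    return evolution


ideal = classical_semester(1.0, [1] * 12)  # one question per week, 12 weeks
```

In the ideal case a student gains 1.2 points over the semester, regardless of how little he answers himself.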

Let us now see how students evolve with collaborative learning through question answering. Pupils gain knowledge by answering questions asked by peers, and the rating mechanism is described by equation (3). A few more conditions were taken into account when measuring the evolution of the students’ competences: answers were accepted only from agents with higher competence in the subject, and only if the difference between the competences was lower than 4. For example, an a1 student (who knows content a at competence level 1) should receive answers from a2, a3 and a4 students, but not from a5 or a6 students, as that would no longer be peer tutoring. Results obtained after multiple simulations are summarised in Figure 2.
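The acceptance condition above, a higher competence but a gap lower than 4, is easy to state as a predicate. The function names and the peer dictionary are hypothetical; the example reproduces the a1 case from the text.

```python
MAX_GAP = 4  # answers from peers 4 or more levels above are not peer tutoring


def accepts_answer(asker_level: int, answerer_level: int) -> bool:
    """An answer counts only if the answerer is more competent by fewer than 4 levels."""
    gap = answerer_level - asker_level
    return 0 < gap < MAX_GAP


def eligible_answerers(asker_level: int, peers: dict[str, int]) -> list[str]:
    """Return the peers (name -> level in the subject) whose answers count."""
    return sorted(name for name, level in peers.items()
                  if accepts_answer(asker_level, level))


peers = {"p1": 1, "p2": 2, "p3": 3, "p4": 4, "p5": 5, "p6": 6}
print(eligible_answerers(1, peers))  # ['p2', 'p3', 'p4']
```

Keeping the window as a single constant makes it easy to test other definitions of "peer" in further simulations.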

Figure 1: Classical model of learning, where students are asking and tutors are answering.

Figure 2: Q&A model of Collaborative Learning.

To generate Figures 1 and 2 we used 8 agents with the same initial competences and questions’ vector. In the first model (where only the tutors’ answers contribute to the students’ competence increase), a slight improvement can be observed over the established period of time, whereas in the second, peer-to-peer interaction appears to be the more effective way to boost competence.

Even in the worst-case scenario, a week without any activity, the overall results at the end of the 12-week semester were encouraging.

Table 1 presents an example of the increase in competence (points gained) during the 12 weeks for both models above, for one subject and one set of simulations.

Table 1: Competences gained during 12 weeks in the classical (C) and in the collaborative learning (CL) model.

Model   A1    A2    A3    A4    A5    A6    A7    A8
C       0.8   0.5   1.6   0.7   0.8   0.7   0.7   0.8
CL      1.1   4.3   3.1   1.1   5.0   3.5   3.6   5.1
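A quick arithmetic summary of Table 1 makes the comparison concrete. These averages are our own computation on the listed values, not figures reported by the simulations, and they describe this single set of simulations only.

```python
from statistics import mean

# Points gained by students A1..A8 (Table 1).
classical = [0.8, 0.5, 1.6, 0.7, 0.8, 0.7, 0.7, 0.8]
collaborative = [1.1, 4.3, 3.1, 1.1, 5.0, 3.5, 3.6, 5.1]

mean_c = mean(classical)        # about 0.825 points
mean_cl = mean(collaborative)   # about 3.35 points
ratio = mean_cl / mean_c        # roughly 4
every_student_gained_more = all(
    cl > c for c, cl in zip(classical, collaborative))
```

On average the collaborative model yields about four times the gain of the classical one, and every one of the eight students gains more under it.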

3 FINAL COMMENTS

In recent years, studies have repeatedly pointed to the impact that collaborative learning has on the way students accumulate knowledge. Peer-to-peer learning appears to succeed where classic tutor input fails or faces resistance. The Q&A model proposed in this paper seeks to increase knowledge by emphasizing questions and answers among peers in online social networks, a process sustained and enhanced by tutors.

The simulations compared two models: the classic model, where students ask and teachers answer, and the proposed one, where students ask and peers answer. They showed a larger increase in knowledge in the latter case.

Expanding the network to include friends from different classes, and even from outside school, is expected to increase Q&A activity, bringing more questions and faster answers and thus reducing the need for tutors to intervene to stimulate the answering process.

Furthermore, agents will be used to speed up question answering, both by automatically answering questions for which they hold answers in a special Q&A list (questions and answers given or approved by tutors) and by suggesting contacts (other agents) who could answer questions in a given field, using the Learner Competences Vector.
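The two agent functions announced above are future work, so the following is only a sketch of how they might be combined. Everything here is an assumption: the function name `agent_reply` is hypothetical, the tutor-approved list is matched by exact question text (a real agent would need fuzzier matching), and contacts are filtered with the same peer-tutoring window used in the experiments (higher competence, gap lower than 4) before being ranked by their LCV level.

```python
def agent_reply(question: str, subject: str, approved_qa: dict[str, str],
                contacts: dict[str, dict[str, int]], asker_level: int,
                top_n: int = 3) -> dict:
    """Hypothetical helper agent for one incoming question."""
    # 1. Answer directly if a tutor-approved answer exists.
    if question in approved_qa:
        return {"answer": approved_qa[question], "suggestions": []}
    # 2. Otherwise suggest contacts whose LCV makes them suitable peer tutors:
    #    more competent than the asker, but by fewer than 4 levels.
    candidates = [
        (lcv.get(subject, 0), name)
        for name, lcv in contacts.items()
        if 0 < lcv.get(subject, 0) - asker_level < 4
    ]
    candidates.sort(reverse=True)  # most competent eligible contacts first
    return {"answer": None,
            "suggestions": [name for _, name in candidates[:top_n]]}


approved = {"What does level 4 mean?": "Analysis, in Bloom's taxonomy."}
contacts = {"bob": {"a": 3}, "eva": {"a": 6}, "ion": {"a": 2}, "max": {"f": 5}}
direct = agent_reply("What does level 4 mean?", "a", approved, contacts, 1)
routed = agent_reply("How do I solve exercise 3?", "a", approved, contacts, 1)
```

The first question is answered from the approved list; the second is routed to `bob` and `ion`, while `eva` is skipped as too far above the asker and `max` has no competence in subject a.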

ACKNOWLEDGEMENTS

This paper was supported by the project "Development and support of multidisciplinary postdoctoral programmes in major technical areas of national strategy of Research - Development - Innovation" 4D-POSTDOC, contract no. POSDRU/89/1.5/S/52603, a project co-funded by the European Social Fund through the Sectorial Operational Programme Human Resources Development 2007-2013, and by the Spanish MICINN contract IPT-430000-2010-13, project Social powered Agents for Knowledge search Engines (SAKE).

REFERENCES

Allen, A. R., Boraks, N., 1978. Peer Tutoring - Putting it to Test, Reading Teacher, vol. 32, no. 3, pp. 274-278.

Bloom, B. S., Krathwohl, D. R., 1956. Taxonomy of Educational Objectives: The Classification of Educational Goals. Handbook I: Cognitive Domain, Longmans.

Chi, E. H., 2009. Information seeking can be social, Computer, vol. 42, pp. 42-46.

Glasser, W., 1986. Control Theory in the Classroom, Perennial Library, New York.

Morris, M. R., Teevan, J., Panovich, K., 2010. What do people ask their social networks, and why?: a survey of status message Q&A behavior, Proc. 28th Intl. Conf. on Human Factors in Computing Systems.

Mitra, S., 2003. Minimally invasive education: a progress report on the “hole-in-the-wall” experiments, British J. of Educational Tech., vol. 34, no. 3, pp. 367-371.

Mitra, S., Dangwal, M., 2010. Limits to self-organising systems of learning: the Kalikuppam experiment, British J. of Educational Technology, vol. 41, no. 5, pp. 672-688.

Moreno, A., de la Rosa, J. L., Szymanski, B. K., Barcenas, J. M., 2009. Reward System for Completing FAQs, Artificial Int. Research and Dev., vol. 202, pp. 361-370.

Pous, C., de la Rosa, J. L., Vehi, J., Melendez, J., Colomer, J., 1997. Anàlisi de Plans d'Estudi amb Enginyeria de Sistemes, Innovació en educació, pp. 149-182.

Trias, A., de la Rosa, J. L., Galitsky, B., Dobrocsi, G., 2010. Automation of social networks with QA agents, Proc. of the 9th International Conference on Autonomous Agents and Multiagent Systems, vol. 1.

Walker, E., Rummel, N., Koedinger, K. R., 2009. Modeling Helping Behavior in an Intelligent Tutor for Peer Tutoring, in Artificial Intelligence in Education, vol. 200, pp. 341-348.