LARGE-SCALE LINKED DATA PROCESSING

Cloud Computing to the Rescue?

Michael Hausenblas

1

, Robert Grossman

2

, Andreas Harth

3

and Philippe Cudr´e-Mauroux

4

1

Digital Enterprise Research Institute, NUI Galway, Galway, Ireland

2

University of Chicago & Open Cloud Consortium, Chicago, U.S.A.

3

Institute AIFB, Karlsruhe Institute of Technology, Karlsruhe, Germany

4

eXascale Infolab, Department of Informatics, University of Fribourg, Fribourg, Switzerland

Keywords:

Linked Data.

Abstract:

Processing large volumes of Linked Data requires sophisticated methods and tools. In the recent years we have

mainly focused on systems based on relational databases and bespoke systems for Linked Data processing.

Cloud computing offerings such as SimpleDB or BigQuery, and cloud-enabled NoSQL systems including

Cassandra or CouchDB as well as frameworks such as Hadoop offer appealing alternatives along with great

promises concerning performance, scalability and elasticity. In this paper we state a number of Linked Data-

specific requirements and review existing cloud computing offerings as well as NoSQL systems that may be

used in a cloud computing setup, in terms of their applicability and usefulness for processing datasets on a

large-scale.

1 MOTIVATION

Although datasets are increasingly made available over

the Web, it is still relatively rare that a dataset is linked

to another one. An important trend over the past decade

has been the growing awareness of the importance of

“light-weight” approaches (Franklin et al., 2005) to in-

tegrate data. With these approaches the goal is to

create loosely integrated “dataspaces” instead of com-

pletely integrated databases or distributed databases.

Early approaches for lightweight data integration in-

clude (Grossman and Mazzucco, 2002), which advo-

cated using Universal Keys—columns of data identified

by a Uniform Resource Identifier (URI)—and Web pro-

tocols to link columns of data in one table to another

table.

The most successful effort for light-weight Web data

integration is based upon Tim Berners-Lee’s Linked

Data principles (Berners-Lee, 2006): 1. Use URIs to

identify data elements, 2. Using HTTP URIs allows

looking up a data elements identified through the URI,

3. When someone looks up a URI, provide useful in-

formation using standards, such as RDF, and 4. Include

links to URIs in other datasets to enable the discovery of

more data elements. In a nutshell, Linked Data is about

applying the general architecture of the WWW to the

task of sharing structured data on a global scale. Tech-

nically, Linked Data is about employing URIs, the Hy-

As of September 2010

Music

Brainz

(zitgist)

P20

YAGO

World

Fact-

book

(FUB)

WordNet

(W3C)

WordNet

(VUA)

VIVO UF

VIVO

Indiana

VIVO

Cornell

VIAF

URI

Burner

Sussex

Reading

Lists

Plymouth

Reading

Lists

UMBEL

UK Post-

codes

legislation

.gov.uk

Uberblic

UB

Mann-

heim

TWC LOGD

Twarql

transport

data.gov

.uk

totl.net

Tele-

graphis

TCM

Gene

DIT

Taxon

Concept

The Open

Library

(Talis)

t4gm

Surge

Radio

STW

RAMEAU

SH

statistics

data.gov

.uk

St.

Andrews

Resource

Lists

ECS

South-

ampton

EPrints

Semantic

Crunch

Base

semantic

web.org

Semantic

XBRL

SW

Dog

Food

rdfabout

US SEC

Wiki

UN/

LOCODE

Ulm

ECS

(RKB

Explorer)

Roma

RISKS

RESEX

RAE2001

Pisa

OS

OAI

NSF

New-

castle

LAAS

KISTI

JISC

IRIT

IEEE

IBM

Eurécom

ERA

ePrints

dotAC

DEPLOY

DBLP

(RKB

Explorer)

Course-

ware

CORDIS

CiteSeer

Budapest

ACM

riese

Revyu

research

data.gov

.uk

reference

data.gov

.uk

Recht-

spraak.

nl

RDF

ohloh

Last.FM

(rdfize)

RDF

Book

Mashup

PSH

Product

DB

PBAC

Poké-

pédia

Ord-

nance

Survey

Openly

Local

The Open

Library

Open

Cyc

Open

Calais

OpenEI

New

York

Times

NTU

Resource

Lists

NDL

subjects

MARC

Codes

List

Man-

chester

Reading

Lists

Lotico

The

London

Gazette

LOIUS

lobid

Resources

lobid

Organi-

sations

Linked

MDB

Linked

LCCN

Linked

GeoData

Linked

CT

Linked

Open

Numbers

lingvoj

LIBRIS

Lexvo

LCSH

DBLP

(L3S)

Linked

Sensor Data

(Kno.e.sis)

Good-

win

Family

Jamendo

iServe

NSZL

Catalog

GovTrack

GESIS

Geo

Species

Geo

Names

Geo

Linked

Data

(es)

GTAA

STITCH

SIDER

Project

Guten-

berg

(FUB)

Medi

Care

Euro-

stat

(FUB)

Drug

Bank

Disea-

some

DBLP

(FU

Berlin)

Daily

Med

Freebase

flickr

wrappr

Fishes

of Texas

FanHubz

Event-

Media

EUTC

Produc-

tions

Eurostat

EUNIS

ESD

stan-

dards

Popula-

tion (En-

AKTing)

NHS

(EnAKTing)

Mortality

(En-

AKTing)

Energy

(En-

AKTing)

CO2

(En-

AKTing)

education

data.gov

.uk

ECS

South-

ampton

Gem.

Norm-

datei

data

dcs

MySpace

(DBTune)

Music

Brainz

(DBTune)

Magna-

tune

John

Peel

(DB

Tune)

classical

(DB

Tune)

Audio-

scrobbler

(DBTune)

Last.fm

Artists

(DBTune)

DB

Tropes

dbpedia

lite

DBpedia

Pokedex

Airports

NASA

(Data

Incu-

bator)

Music

Brainz

(Data

Incubator)

Moseley

Folk

Discogs

(Data In-

cubator)

Climbing

Linked Data

for Intervals

Cornetto

Chronic-

ling

America

Chem2

Bio2RDF

biz.

data.

gov.uk

UniSTS

UniRef

Uni

Path-

way

UniParc

Taxo-

nomy

UniProt

SGD

Reactome

PubMed

Pub

Chem

PRO-

SITE

ProDom

Pfam

PDB

OMIM

OBO

MGI

KEGG

Reaction

KEGG

Pathway

KEGG

Glycan

KEGG

Enzyme

KEGG

Drug

KEGG

Cpd

InterPro

Homolo

Gene

HGNC

Gene

Ontology

GeneID

Gen

Bank

ChEBI

CAS

Affy-

metrix

BibBase

BBC

Wildlife

Finder

BBC

Program

mes

BBC

Music

rdfabout

US Census

Media

Geographic

Publications

Government

Cross-domain

Life sciences

User-generated content

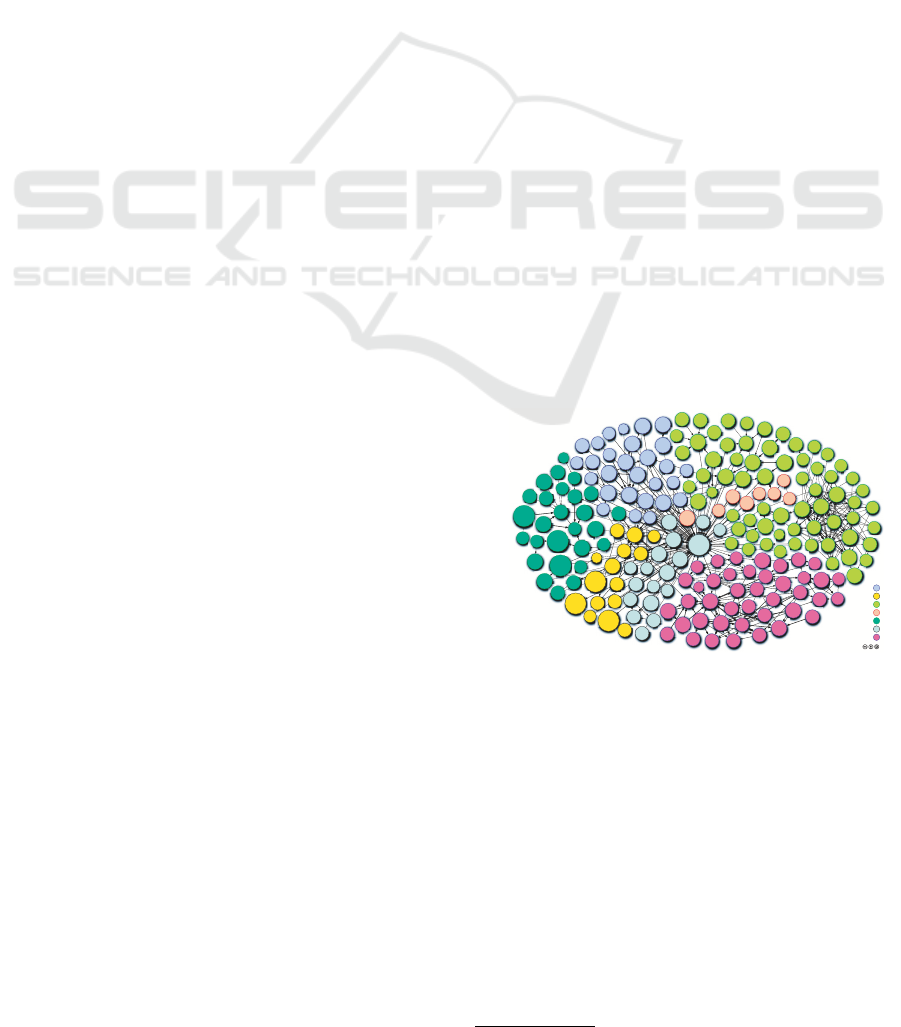

Figure 1: The Linked Open Data cloud in late 2011.

pertext Transfer Protocol (HTTP) and the Resource De-

scription Framework (RDF) to publish and access struc-

tured data on the Web and to connect related data that

is distributed across multiple data sources into a sin-

gle global data space (Bizer et al., 2009), enabling a

new class of applications (Hausenblas, 2009) where the

data integration effort is shared between data publish-

ers, third-party services and data consumers. Increasing

numbers of data providers have begun to adopt Linked

Data; the most prominent example of the Linked Data

principles applied to open data sources is the Linked

Open Data (LOD) cloud

1

depicted in Figure 1.

It currently contains over 300 datasets that con-

1

http://lod-cloud.net/

246

Hausenblas M., Grossman R., Harth A. and Cudré-Mauroux P..

LARGE-SCALE LINKED DATA PROCESSING - Cloud Computing to the Rescue?.

DOI: 10.5220/0003928702460251

In Proceedings of the 2nd International Conference on Cloud Computing and Services Science (CLOSER-2012), pages 246-251

ISBN: 978-989-8565-05-1

Copyright

c

2012 SCITEPRESS (Science and Technology Publications, Lda.)

tribute around 35+ billion RDF triples and over 500 mil-

lion cross-data set links (Bizer et al., 2010). In the visu-

alisation of the LOD cloud in Figure 1, each node rep-

resents a distinct dataset and arcs indicate the existence

of links between data elements in the two data sets.

Currently, there exist three options to process Linked

Data in a central location:

• Dedicated triple stores

2

, such as 4store, Allegro-

Graph, BigData, BigOWLIM, Virtuoso or YARS2,

as well as triple stores in the cloud like the Talis plat-

form

3

or Dydra

4

.

• Relational databases along with i) built-in RDF sup-

port, for example Oracle 11g

5

, or ii) RDB2RDF

mappings, currently under W3C standardisation

6

.

• NoSQL offerings.

In this paper, we focus on the last category. We em-

phasise that this paper is concerned with the question

to what extent NoSQL systems can be used to pro-

cess Linked Data in a cloud computing setup; we con-

sider the more general question of the appropriate data

management infrastructure for distributed data as out of

scope for this work. Sometimes the term cloud comput-

ing is used instead of NoSQL, since in practice, many

cloud computing offerings (Armbrust et al., 2010) are

NoSQL solutions and many NoSQL solutions are cloud-

enabled.

A good starting point for Linked Data processing

with the cloud is Arto Bendiken’s write-up on “How

RDF Databases Differ from Other NoSQL Solutions”

7

as well as Sandro Hawke’s “RDF meets NoSQL”

8

.

The remainder of the paper is structured as follows:

in Section 2 we state requirements concerning Linked

Data processing, then, in Section 3 we review systems

in terms of their Linked Data processing capabilities and

in Section 4 we compare the systems against the require-

ments stated earlier as well as conclude our survey and

report on next steps.

2 REQUIREMENTS

Based on the interactions with researchers and practi-

tioners in the realm of various projects as well as draw-

ing from own experience in the field of Linked Data pro-

cessing in the past four years, we have identified a num-

ber of requirements in addition to the “hard” require-

2

http://www.w3.org/wiki/LargeTripleStores

3

http://www.talis.com/platform/

4

http://dydra.com/

5

http://www.oracle.com/technetwork/database/options/

semantic-tech/

6

http://www.w3.org/2001/sw/rdb2rdf/

7

http://blog.datagraph.org/2010/04/rdf-nosql-diff

8

http://decentralyze.com/2010/03/09/rdf-meets-nosql/

ments performance, throughput, scalability and elastic-

ity (Armbrust et al., 2010; Cooper et al., 2010; Dory

et al., 2011):

URIs as Identifiers

Supporting URIs as primary keys. The first Linked

Data principle (see above) suggests the use of URIs

to name entities. The processing platforms must

thus be able to use URIs as identifiers natively, or

to map URIs to their own internal identifiers effi-

ciently.

RDF Support

Importing and Exporting RDF datasets. The ability

to import RDF data both in small chunks (for ex-

ample, as RDF/XML files) and as large data dumps

(for example, bulk loading of large N-Triples or N-

Quads files) is essential, since in the LOD cloud data

is typically exposed in an RDF serialisation.

Interface

The ability to serve information as HTTP, which

is often used when browsing Linked Data sets and

dereferencing URIs to get additional information

about arbitrary data elements.

Partitioning

Support for logical partitions, for example via

Named Graphs

9

(also sometimes referred to as

“context”) for managing the dataspace.

Update

Providing update facilities, for example via HTTP

PUT/POST over an HTTP interface or via SPARQL

update

10

to perform arbitrary inserts and updates on

data.

Indexing

Support for a modular indexing sub-system that al-

lows to use specialised indexing services, such as

text indexing via the Semantic Information Retrieval

Engine (SIREn)

11

. The ability to offer those in-

dexes is important in many LOD applications, for

example to support full-text search interfaces and

co-reference services such as SameAs.org

12

.

Inferencing

Support for reasoning, for example taking into ac-

count equivalence statements via

owl:sameAs

ax-

ioms as well as other logical constructs provided by

RDFS and OWL (e.g.,

subclasses

,

transitive

properties

, etc.).

Rich Data Processing

Providing query facilities which can range, depend-

ing on the functionality and scalability require-

ments of the application, from simple Linked Data

9

http://www.w3.org/2011/rdf-wg/wiki/TF-Graphs

10

http://www.w3.org/TR/sparql11-update/

11

http://siren.sindice.com/

12

http://sameas.org/

LARGE-SCALELINKEDDATAPROCESSING-CloudComputingtotheRescue?

247

look-ups over triple pattern look-ups to conjunctive

queries and finally full-fledged general SPARQL

query

13

facilities (joins, aggregates, property paths,

etc.) in order to perform rich, structured queries.

Efficient Graph Processing

Efficient support for path or transitive closure

queries. As entities are interlinked on the LOD

cloud, it is often necessary to follow series of links

iteratively to resolve a given query. Such graph

queries are very common in our context, however

can be extremely expensive on some platforms, for

example, on relational platforms where they often

boil down to multi-joins.

In Section 4 we discuss the above listed, Linked Data-

specific requirements along with the findings of this pa-

per.

3 DATA PROCESSING SYSTEMS

REVIEW

Following Cattel’s terminology (Cattell, 2011) we un-

derstand data stores to include cloud computing as well

as NoSQL offerings. In the following, we review sev-

eral data stores, in alphabetic order, in terms of their

capabilities to perform large-scale processing of Linked

Data processing perspective. A number of runner-ups

are discussed as well in the following.

3.1 BigQuery

BigQuery

14

is a cloud computing offering by Google,

supposed to complement MapReduce jobs in terms of

interactive query processing, introduced together with

Google Storage and the Google Prediction API in early

2010. In late 2010 we looked into utilising BigQuery

for Linked Data processing by developing the BigQuery

Endpoint (Hausenblas, 2010a), an application deployed

on Google App Engine that allows to load RDF/N-

Triples content into Google Storage as well as exposing

an endpoint allowing to query the data.

3.2 Cassandra

Apache Cassandra is a second-generation distributed

database, bringing together Dynamo’s (DeCandia et al.,

2007) fully distributed design and Bigtable’s column-

family-based data model (Chang et al., 2006). There

is a Cassandra storage adaptor for RDF.rb (Bendiken,

2010b) available, developed by Arto Bendiken and we

developed CumulusRDF (Ladwig and Harth, 2010),

which uses Apache Cassandra as a storage back-end.

13

http://www.w3.org/TR/sparql11-query/

14

https://code.google.com/apis/bigquery/

Brisk

15

is a Hadoop-style data processing framework

built on top of the Apache Cassandra data store.

3.3 CouchDB

Apache CouchDB is a distributed, document-oriented

database written in the Erlang; it can be queried and in-

dexed in a MapReduce fashion. It manages the data as

a collection of JSON documents and is used by Ubuntu,

Couchbase and many more. Greg Lappen has provided

a CouchDB storage adaptor for RDF.rb (Lappen, 2011).

CouchDBs native language is JSON, hence it seems

that efforts like JavaScript Object Notation for Linked

Data (JSON-LD)

16

are a good fit for the data represen-

tation part. Only recently, a discussion took place on

the CouchDB users list regarding “CouchDB vs. RDF

databases” (Nunes, 2011).

3.4 Hadoop/Pig

Apache Hadoop is a software framework written in Java

that supports reliable, scalable, distributed computing.

Apache Pig

17

is a high-level data analysis language on

top of Hadoop’s MapReduce framework. The com-

munity discusses (Castagna, 2010) best practices for

processing RDF data with MapReduce/Hadoop. Mika

et.al. experimented with a system using Hadoop and Pig

for SPARQL query processing (Mika and Tummarello,

2008). Tanimura et. al. (Tanimura et al., 2010) have re-

ported on an extensions to the Pig data processing plat-

form for scalable RDF data processing using Hadoop,

somewhat related to what Sridhar et. al. (Sridhar et al.,

2009) have suggested in their RAPID system. Arto

Bendiken has developed RDFgrid (Bendiken, 2010a), a

frameworkfor batch-processing RDF data with Hadoop,

as well as Amazon’s Elastic Map Reduce

18

.

3.5 HBase

Apache HBase is a distributed, versioned, column-

oriented store modelled after Google’ Bigtable, writ-

ten in Java. A couple of institutions like Mendeley,

Facebook and Adobe are using HBase. Gabriel Ma-

teescu has provided an article (Mateescu, 2009) on how

to process RDF data using HBase and Paolo Castagna

has developed an experimental HBase-RDF implemen-

tation (Castagna, 2011). Sun and Jin (Sun and Jin, 2010)

have proposed a scalable RDF store based on HBase.

15

http://www.datastax.com/products/brisk

16

http://json-ld.org/

17

http://pig.apache.org/

18

http://aws.amazon.com/elasticmapreduce/

CLOSER2012-2ndInternationalConferenceonCloudComputingandServicesScience

248

3.6 MongoDB

MongoDB is a schema-free, JSON-document-oriented

database written in C++. It is used by an array of

sites and providers including SourceForge, CERN, and

Foursquare. Rob Vesse has reported (Vesse, 2010) on

experiments he conducted with MongoDB as an RDF

store and William Waites has provided a write-up on

“Mongo as an RDF store” (Waites, 2010). Further, An-

toine Imbert has developed

MongoDB::RDF

for Perl (Im-

bert, 2010).

3.7 Pregel

Pregel is a system for graph processing developed at

Google (Malewicz et al., 2010). Similar to Hadoop,

Pregel uses a set of nodes in a cluster to distribute work

which is executed in parallel, with defined synchroniza-

tion points to allow for exchange of intermediate results

between the parallel processes. Unlike the MapReduce

framework, for which an open source implementation

is available in Apache Hadoop, Pregel is currently not

available outside Google.

3.8 SimpleDB

Amazon SimpleDB is a distributed database/web-

service written in Erlang. It is often used together with

other Amazon Web Services (AWS) offerings such as

the Simple Storage Service (S3), for example by Alexa,

Livemocha or Netflix. Stein and Zacharias have sum-

marised (Stein and Zacharias, 2010) their experiences

with RDF processing in SimpleDB in their open source

project Stratustore

19

.

3.9 Riak

Riak is a Dynamo-inspired key-value store with a dis-

tributed database network platform and built-in MapRe-

duce support. It supports high availability and is used

in production by institutions such as Comcast, Wikia

or Opscode. Andrew McKnight has shared (McKnight,

2010) his thoughts concerning SPARQL query process-

ing on the Riak platform and we had a look into stor-

ing an RDF graph in Riak using HTTP Link head-

ers (Hausenblas, 2010b) allowing for graph traversing.

3.10 Other Systems

There are a number of systems that would be capable

of processing Linked Data in the cloud, however we are

not aware of a cloud deployment or the features are not

yet available, publically; for sake of completeness, we

list these systems in the following:

19

http://code.google.com/p/stratustore/

3.10.1 Distributed Graph Databases

• Neo4j is a graph database implemented in Java that

has built-in RDF processing support, including in-

dexing. Further, Gremlin is a graph traversal lan-

guage that works over graph databases implement-

ing the Blueprints interface

20

, such as Neo4j or Ori-

entDB

21

and Graphbase

22

is an implementation of

the Blueprints interface on top of HBase.

• Microsoft’s Trinity (Microsoft, 2011) is a graph

database over distributed memory cloud, providing

computations on large scale graphs; it can report-

edly be deployed on hundreds of machines. Fur-

ther, Microsoft is building a graph library

23

on top of

their cloud computing framework Orleans that tar-

gets hosting very large graphs with billions of nodes

and edges.

• GoldenOrb

24

is a cloud-based open source project

for massive-scale graph analysis, building upon

Hadoop, modelled after Googles Pregel architec-

ture.

3.10.2 Hybrid Systems

• MonetDB

25

has support for RDF processing in the

queue.

• Sindice (Oren et al., 2008), a semantic indexer, uses

Hadoop and Lucence/SIREn to processes billions of

triples.

• The Large Knowledge Collider project is work-

ing on a Web-scale Parallel Inference Engine

26

, a

MapReduce-based, distributed RDFS/OWL infer-

ence engine.

• Hizalev reported (Hizalev, 2011) on a Redis-based

triple database.

• Seaborne reported (Seaborne, 2009) running TDB, a

native persistent storage layer for the RDF process-

ing framework Jena, on a cloud storage system.

• SARQ

27

is an open source text indexing system for

SPARQL using a remote Solr server.

4 DISCUSSION

Table 1 lists our Linked Data-specific requirements in-

troduced earlier against the identified systems from

20

http://tinkerpop.com/

21

http://www.orientechnologies.com/orient-db

22

https://github.com/dgreco/graphbase

23

http://research.microsoft.com/en-us/projects/ldg/

24

http://www.goldenorbos.org/

25

http://www.monetdb.org/Home

26

http://www.few.vu.nl/∼jui200/webpie.html

27

https://github.com/castagna/SARQ

LARGE-SCALELINKEDDATAPROCESSING-CloudComputingtotheRescue?

249

Table 1: Coverage of Linked Data processing capabilities.

System Backend Identifiers Interface Partition Update Index Query Inference

(Hausenblas, 2010a) BigQuery URIs Linked

Data

quads + fixed custom -

(Ladwig and Harth,

2010)

Cassandra URIs Linked

Data

quads + multiple Linked

Data

lookups

-

(Tanimura et al., 2010) Pig/Hadoop URIs custom triples - fixed SPARQL rules

(Sridhar et al., 2009) Pig/Hadoop URIs custom triples - fixed RAPID -

(Mika and Tummarello,

2008)

Pig/Hadoop URIs custom triples - fixed SPARQL forward-

chaining

rules

(Huang et al., 2011) RDF-

3X/Hadoop

URIs custom triples - fixed SPARQL -

(Sun and Jin, 2010) HBase URIs custom triples - fixed SPARQL -

(Vesse, 2010) MongoDB URIs custom triples - multiple SPARQL -

(Stein and Zacharias,

2010)

SimpleDB URIs custom triples + multiple SPARQL -

(Hausenblas, 2010b) Riak URIs HTTP triples - fixed custom -

Sec. 3. The practical applicability of the systems sur-

veyed varies: some systems represent first steps in map-

ping the RDF triple structure into a K/V-based storage

layout, while others focus on optimising join processing

capabilities. Similarly, while some systems provide de-

fined interfaces for insert, update and query, other sys-

tems are still in the prototype status which custom inter-

faces, often resembling those of the underlying process-

ing system.

As becomes apparent from the plethora of systems

surveyed and listed in Table 1, the burgeoning field of

cloud-based Linked Data management is still fractured.

Community-built benchmarks can serve as catalysts and

help to unify a field. While the Wisconsin Bench-

mark (DeWitt, 1993) can be considered as the prototyp-

ical benchmark for parallel databases, it is rather out-

dated. (Pavlo et al., 2009) compared MapReduce with

parallel databases, providing useful insights and guid-

ance on what are important metrics. Most relevantly,

Cooper et. al. (Cooper et al., 2010) introduced the Ya-

hoo! Cloud Serving Benchmark (YCSB) and only re-

cently Dory et. al. (Dory et al., 2011) reported on elas-

ticity and scalability measurements of cloud databases.

We currently establish a benchmark for Linked Data

processing with cloud computing offerings

28

as we be-

lieve that a common, benchmark could help to further

identify and organise requirements, and in the process

unite a fractured field towards a common goal.

REFERENCES

Armbrust, M., Fox, A., Griffith, R., Joseph, A. D., Katz, R.,

Konwinski, A., Lee, G., Patterson, D., Rabkin, A., Sto-

28

https://github.com/mhausenblas/nosql4lod

ica, I., and Zaharia, M. (2010). A view of cloud comput-

ing. Commun. ACM, 53:50–58.

Bendiken, A. (2010a). RDFgrid. https://github.com/

datagraph/rdfgrid.

Bendiken, A. (2010b). RDF.rb storage adapter for Apache

Cassandra. https://github.com/bendiken/rdf-cassandra.

Berners-Lee, T. (2006). Linked Data, Design Issues.

Bizer, C., Heath, T., and Berners-Lee, T. (2009). Linked

Data—The Story So Far. Special Issue on Linked Data,

International Journal on Semantic Web and Information

Systems (IJSWIS), 5(3):1–22.

Bizer, C., Jentzsch, A., and Cyganiak, R. (2010). State of the

LOD Cloud. http://www4.wiwiss.fu-berlin.de/lodcloud/

state/.

Castagna, P. (2010). Best practices for processing RDF

data using MapReduce. http://j.mp/processing-rdf-data-

using-mapreduce-via-hadoop.

Castagna, P. (2011). HBase-RDF. https://github.com/

castagna/hbase-rdf.

Cattell, R. (2011). Scalable SQL and NoSQL data stores. SIG-

MOD Rec., 39:12–27.

Chang, F., Dean, J., Ghemawat, S., Hsieh, W. C., Wallach,

D. A., Burrows, M., Chandra, T., Fikes, A., and Gru-

ber, R. E. (2006). Bigtable: a distributed storage system

for structured data. In Proc. of the 7th USENIX Sympo-

sium on Operating Systems Design and Implementation

- Volume 7, OSDI ’06, pages 15–15, Berkeley, CA, USA.

USENIX Association.

Cooper, B. F., Silberstein, A., Tam, E., Ramakrishnan, R.,

and Sears, R. (2010). Benchmarking cloud serving sys-

tems with YCSB. In Proc. of the 1st ACM symposium

on Cloud Computing, SoCC ’10, pages 143–154, New

York, NY, USA. ACM.

DeCandia, G., Hastorun, D., Jampani, M., Kakulapati,

G., Lakshman, A., Pilchin, A., Sivasubramanian, S.,

Vosshall, P., and Vogels, W. (2007). Dynamo: amazon’s

highly available key-value store. SIGOPS Oper. Syst.

Rev., 41:205–220.

DeWitt, D. J. (1993). The Wisconsin Benchmark: Past,

CLOSER2012-2ndInternationalConferenceonCloudComputingandServicesScience

250

Present, and Future. In Gray, J., editor, The Benchmark

Handbook. Morgan Kaufmann.

Dory, T., Mejias, B., Roy, P. V., and Tran, N.-L. (2011). Com-

parative elasticity and scalability measurements of cloud

databases. In Proc. of the 2nd ACM Symposium on Cloud

Computing, SoCC ’11, New York, NY, USA. ACM.

Franklin, M. J., Halevy, A. Y., and Maier, D. (2005). From

databases to dataspaces: a new abstraction for informa-

tion management. SIGMOD Record, 34(4):27–33.

Grossman, R. and Mazzucco, M. (July/August, 2002). Datas-

pace - a web infrastructure for the exploratory analysis

and mining of data. IEEE Computing in Science and

Engineering, pages 44–51.

Hausenblas, M. (2009). Exploiting Linked Data to Build Web

Applications. IEEE Internet Computing, 13(4):68–73.

Hausenblas, M. (2010a). BigQuery Endpoint. http://

code.google.com/p/bigquery-linkeddata/.

Hausenblas, M. (2010b). Toying around with Riak for Linked

Data. http://webofdata.wordpress.com/2010/10/14/riak-

for-linked-data/.

Hizalev, P. (2011). Redis based triple database. http://petrohi.

me/post/6114314450/redis-based-triple-database.

Huang, J., Abadi, D., and Ren, K. (2011). Scalable sparql

querying of large rdf graphs. In Proc. of the 37st Inter-

national Conference on Very Large Data Bases.

Imbert, A. (2010). MongoDB-RDF.

https://github.com/ant0ine/MongoDB-RDF.

Ladwig, G. and Harth, A. (2010). An RDF Storage Scheme on

Key-Value Stores for Linked Data Publishing. Technical

Report, KIT.

Lappen, G. (2011). RDF.rb storage adapter for CouchDB.

https://github.com/ipublic/rdf-couchdb.

Malewicz, G., Austern, M. H., Bik, A. J., Dehnert, J. C., Horn,

I., Leiser, N., and Czajkowski, G. (2010). Pregel: a sys-

tem for large-scale graph processing. In Proc. of the

2010 international conference on management of data,

SIGMOD ’10, pages 135–146, New York, NY, USA.

ACM.

Mateescu, G. (2009). Finding the way through the semantic

Web with HBase. developerWorks, IBM.

McKnight, A. (2010). SPARQL on Riak. http://andrew

mcknight.blogspot.com/2010/12/sparql-on-riak.html.

Microsoft (2011). Trinity. http://research.microsoft.com/en-

us/projects/trinity/.

Mika, P. and Tummarello, G. (2008). Web semantics in the

clouds. IEEE Intelligent Systems, 23:82–87.

Nunes, D. (2011). CouchDB x RDF databases comparison.

http://comments.gmane.org/gmane. comp. db. couchdb.

user/2334.

Oren, E., Delbru, R., Catasta, M., Cyganiak, R., Sten-

zhorn, H., and Tummarello, G. (2008). Sindice.com:

A document-oriented lookup index for open linked data.

IJMSO, 3(1).

Pavlo, A., Paulson, E., Rasin, A., Abadi, D. J., DeWitt, D. J.,

Madden, S., and Stonebraker, M. (2009). A compari-

son of approaches to large-scale data analysis. In Proc.

of SIGMOD conference on management of data, pages

165–178, New York, NY, USA. ACM.

Seaborne, A. (2009). Running TDB on a cloud storage system.

http://j.mp/running-tdb-on-cloud-storage-system.

Sridhar, R., Ravindra, P., and Anyanwu, K. (2009). RAPID:

Enabling Scalable Ad-Hoc Analytics on the Semantic

Web. In Proc. of the 8th International Semantic Web

Conference, ISWC ’09, pages 715–730, Berlin, Heidel-

berg. Springer-Verlag.

Stein, R. and Zacharias, V. (2010). RDF on Cloud Number

Nine. In Workshop on New Forms of Reasoning for the

Semantic Web (NeFoRS).

Sun, J. and Jin, Q. (2010). Scalable RDF store based on HBase

and MapReduce. In Advanced Computer Theory and En-

gineering (ICACTE), 2010 3rd International Conference

on, volume 1, pages V1–633–V1–636.

Tanimura, Y., Matono, A., Lynden, S., and Kojima, I. (2010).

Extensions to the Pig data processing platform for scal-

able RDF data processing using Hadoop. In Data Engi-

neering Workshops (ICDEW), 2010 IEEE 26th Interna-

tional Conference on, pages 251 –256.

Vesse, R. (2010). Experimenting with MongoDB as an RDF

Store . Blog post, University of Southampton.

Waites, W. (2010). Mongo as an RDF store. http://wwaites.

posterous.com/mongo-as-an-rdf-store.

LARGE-SCALELINKEDDATAPROCESSING-CloudComputingtotheRescue?

251