LGMD based Neural Network for Automatic Collision Detection

Ana Silva, Jorge Silva and Cristina Santos

Industrial Electronic department, University of Minho, Campus of Azurem, Guimarães, Portugal

Keywords: Bio-inspired Model, Lobula Giant Movement Detector Neuron, Artificial Neural Networks, Collision

Avoidance.

Abstract: Real-time collision detection in dynamic scenarios is a hard task if the algorithms used are based on

conventional techniques of computer vision, since these are computationally complex and, consequently,

time-consuming. On the other hand, bio-inspired visual sensors are suitable candidates for mobile robot

navigation in unknown environments, due to their computational simplicity. The Lobula Giant Movement

Detector (LGMD) neuron, located in the locust optic lobe, responds selectively to approaching objects. This

neuron has been used to develop bio-inspired neural networks for collision avoidance. In this work, we

propose a new LGMD model based on two previous models, in order to improve over them by

incorporating other algorithms. To assess the real-time properties of the proposed model, it was applied to a

real robot. Results shown that the LGMD neuron model can robustly support collision avoidance in

complex visual scenarios.

1 INTRODUCTION

Many animals extract salient information from

complex, dynamic visual scenes to drive behaviours

necessary for survival. Insects are particularly

challenging for robotic systems: they achieve their

performance with a nervous system that has less

than a million neurons and weighs only about 0.1

mg. By this reason, some of these insects provide

ideal biological models that can be emulated in

artificial systems. These models have the potential to

reproduce complex behaviours with low

computational overhead by using visual information

to detect imminent collisions caused either by a

rapidly approaching object or self-motion towards

an obstacle.

In locusts, the Lobula Giant Movement Detector

(LGMD) is a bilaterally paired motion sensitive

neuron that integrates inputs from the visual system,

responding robustly to images of objects

approaching on a collision course (Gray, John R , et

al., 2001) (Rind, 1987) (Gabbiani, et al., 1999)

(Gray, et al., 2010). This neuron is responsible for

triggering escape and collision avoidance behaviours

in locusts. The first physiological and anatomical

bio-inspired model for the LGMD neuron was

developed by Bramwell in (Rind and Bramwell,

1996). The model continued to evolve (Blanchard, et

al., 2000) (Yue and Rind, 2006) (Stafford, et al.,

2007) (Meng, et al., 2010) and it was used in mobile

robots and deployed in automobiles for collision

detection. These connectionist models have shown

that the integration of on and off channels and feed-

forward inhibition can account for aspects of the

LGMD neuron looming sensitivity and selectivity

when stimulated with approaching, translating and

receding objects.

However, further work is needed to develop

more robust models that can account for complex

aspects of visual motion (Guest and Gray, 2006). In

this article, we are interested in understanding the

LGMD models previously proposed by (Yue and

Rind, 2006) as well as the achieved properties of the

model described at (Meng, et al., 2010). Thereby, we

are interested in integrate the two previous LGMD

models, (Yue and Rind, 2006) and (Meng, et al.,

2010), in order to take the advantage of noise

immunity proposed in (Yue and Rind, 2006) and

direction sensitivity proposed in (Meng, et al.,

2010).

In a previous study, we implemented the models

from (Yue and Rind, 2006) and (Meng, et al., 2010)

and submitted them to relevant simulated visual data

sets. This step enabled us to understand some of the

literature models limitations in relation to obstacle

detection and avoidance.

132

Silva A., Silva J. and Santos C..

LGMD based Neural Network for Automatic Collision Detection.

DOI: 10.5220/0004044201320140

In Proceedings of the 9th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2012), pages 132-140

ISBN: 978-989-8565-22-8

Copyright

c

2012 SCITEPRESS (Science and Technology Publications, Lda.)

With this knowledge, we propose a new model

to cope with the limitations showed by the models

implemented ((Yue and Rind, 2006) and (Meng, et

al., 2010)). The proposed model was validated over

a set of different visual scenarios. In order to the

LGMD network be used as a robust collision

detector for real robotic applications, and based on

(Yue and Rind, 2006), it was proposed a mechanism

to enhance the features of colliding objects. The

model from (Yue and Rind, 2006) favours grouped

excitation, which normally indicates the presence of

an obstacle, and ignores isolated excitation, which

can be the result of noise present in the captured

image, with selective passing coefficients. The

model (Yue and Rind, 2006) has the capability to

filter out the isolated excitations through the

excitation gathering mechanism, allowing that only

parts of the captured image with bigger excitatory

spatial areas can contribute to the excitation of the

LGMD cell. Besides this extraordinary capability of

noise reduction, when computationally

implemented, the neural network based on (Yue and

Rind, 2006) generated false collision alarms when

stimulated with receding objects. Based on (Meng,

et al., 2010), we have modified the LGMD model, so

that it could distinguish approaching from receding

movements.

On the other side, the LGMD model proposed by

(Meng, et al., 2010) is not immune to the presence of

noise levels in the captured image, which can leads

it to produce false collision alerts in the presence of

noise. However, as it was said before, this model is

able to detect the direction of movement in depth.

Taking the advantages of each model (Yue and

Rind, 2006) (Meng, et al., 2010), we decided to

propose a new LGMD model that is more robust in

collision detection. The model here proposed was

tested on simulated and non-simulated environments

and, through the obtained results, it can be

concluded that it works very efficiently in both

scenarios. In relation to the real performance of the

proposed method, a collision avoidance is judge by

the evaluation of a real robot moving around in a

real environment and avoiding real obstacles (of

different shapes, sizes and colours) and processing

captured images (containing real noise, blur,

reflections, etc). In our perspective, the new

proposed method increases the precision of obstacle

detection, in a way that this model is robust to the

presence/absence of high noise levels in the captured

image, as well as is able to detect the movement

direction of the visual stimulus. Besides that, when

tested in a real environment, the results were very

satisfactory. For a better understanding of the work

developed, the paper was organized in the following

way: in section 2, we make a detailed description of

the proposed LGMD neural network model. In

section 3 are presented some experimental results on

simulated and recorded video data. In this section we

also present the experiments carried out with a robot

DRK8000 to test the stability of this model in

relation to collision detection in real scenarios.

Finally, in section 4 we make the conclusions of the

work here described.

2 THE PROPOSED NEURAL

NETWORK MODEL FOR

LGMD

The biological inspired neural network here

proposed is based on previous models described on

(Blanchard, et al., 2000) (Yue and Rind, 2006)

(Stafford, et al., 2007) (Meng, et al., 2010). The

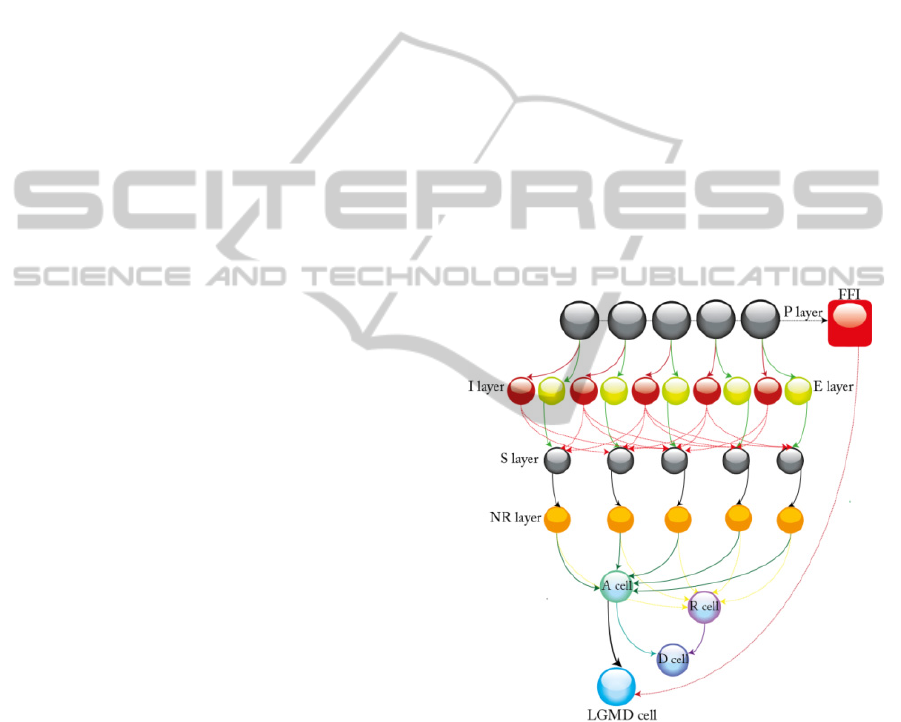

modified neural network is shown on Figure 1.

Figure 1: Schematic illustration of the proposed LGMD

model. There are five groups of cells and five single cells:

P layer: photoreceptor cells; E layer: excitatory cells; I

layer: inhibitory cells; S layer: summing cells; NR layer:

noise-reduction cells; A cell: approaching cell; R cell:

receding cell; D cell: direction cell; FFI cell: feed-forward

inhibition cell. LGMD cell: represents the LGMD

biological neuron.

The LGMD neural network here proposed is

composed by five groups of cells: photoreceptor

cells (P layer), excitatory cells (E layer), inhibitory

cells (I layer), summing cells (S layer) and noise

LGMDbasedNeuralNetworkforAutomaticCollisionDetection

133

reduction cells (NR layer). Besides that, it is

composed by five single cells: the direction sensitive

system, composed by the approaching cell (A cell),

the receding cell (R cell) and the direction cell (D

cell), the feed-forward inhibition cell (FFI cell) and

the LGMD cell.

A grayscale image of the camera current field of

view, represented has a matrix of values (from 0 to

255), is the input to a matrix of photoreceptor units

(P layer). This layer calculates the absolute

difference between the luminance of the current and

the previous input image, mathematical represented

by the following equation:

(

,

)

=

(

,

)

−

(

,

)

(1)

Where P

f

is the output of the P layer at frame f,

L

f

and L

f-1

are the captured luminance at frames f and

f-1, respectively. The output of the P layer is the

input of two layers: the excitatory (E) and the

inhibitory (I) layers. To the excitatory cells of the E

layer, the excitation that comes from the P layer is

passed directly to the retinotopic counterpart. The

inhibition layer (or I layer) receives the output of the

P layer and applies a blur effect on it, using:

(

,

)

=

1

9

δ

(

x+i,

y

+j

)

(2)

δ

(

x,

y

)

=

(

,

)

(3)

Where I

f

is the output of the I layer at frame f,

and P

f-1

is the output of the P layer at frames f-1.

Then, the output of the I layer passes to the summing

layer, in a retinotopic mode.

The summing layer (or S layer) receives the

output from the E and I layers and performs the

followin operation:

(

,

)

=

(

,

)

−I

∙

(

,

)

,

(

,

)

=

(

,

)

and

(

,

)

≥0

(4)

Where P

f

is the output of the P layer at frame f, I

f

the output of the I layer at frame f and I

str

(a scalar,

set to be 0.35) represents the inhibition strength.

Based on (Yue and Rind, 2006), it was added a

new mechanism for the LGMD neural network to

filter the background noise. This mechanism,

implemented in the NR layer, takes clusters of

excitation in the S units to calculate the input to the

LGMD membrane potential. These clusters provide

higher individual inputs then the ones of isolated S

units. The excitation that comes from the S layer is

then multiplied by a passing coefficient Ce

f

, which

value depends on the surrounding neighbours of

each pixel, calculated as follows:

(

,

)

=

1

9

δ

(

x+i,

y

+j

)

(5)

δ

(

x,

y

)

=

(

,

)

(6)

The final excitation level of each cell in the NR

layer, at frame f (NR

f

), is given by:

(

,

)

=

(

,

)

∙

(

,

)

∙w

(7)

w=∆c+max

(

[

]

)

∙C

(8)

Cw is set to 4, and Δc is a small number (0.01),

to prevent w from being zero, and max (|[Ce]

f

|) is the

largest element in matrix |[Ce]

f

|.

Within the NR layer, a threshold filters the

decayed excitations (isolated excitations), as:

(

,

)

=

(

,

)

,

(

,

)

∙

≥

0,

(

,

)

∙

<

(9)

Where C

de

∈ [0, 1] is the decay coefficient and

T

de

is the decay threshold (set to 20). The decay

threshold here used was experimentally determined.

The NR layer is able to filter out the background

detail that may cause excitation. Hence, only the

main object in the captured scene will cause

excitation.

The LGMD potential membrane K

f

, at frame f, is

summed after the NR layer, as described in the

following equation:

=

=(

(

,

)

)

(10)

Where n is the number of rows and m is the

number of columns of the matrix representing the

captured image.

The A and R cells (adapted from (Meng, et al.,

2010)) are two grouping cells for depth movement

direction recognition. The A cell holds the mean of

three samples of the LGMD cell:

=

(

+

+

)

3

(11)

The R cell shares the same structure as the A cell

but with a temporal difference, having one frame

delay from A.

=

(12)

Analyzing the equations above described, it can

be concluded that if the object is approaching A

f

> R

f

and if the object is receding, R

f

> A

f

.

The D cell is used to calculate the direction of

movement. This can be represented by the following

equation:

ICINCO2012-9thInternationalConferenceonInformaticsinControl,AutomationandRobotics

134

=(

)−(

)

(13)

This cell exploits the movement direction in

depth. It is based on the fact that a looming object

(approaching) gets larger whereas a receding object

gets smaller. In a way to distinguish the movement

direction detected by the D cell, it was added a

threshold mechanism, T

D

(set to

0.05×n×m), where n is the number of rows and m is

the number of columns of the captured image),

which was experimentally determined.

=

1,

≥

0,

>

>−

−1,

≤−

(14)

The LGMD membrane potential K

f

is then

transformed to a spiking output using a sigmoid

transformation,

=(1+

)

(15)

Where n

cell

is the total number of cells in the NR

layer and k

f

∈ [0.5, 1]. The collision alarm is decided

by the spiking of the LGMD cell.

However, the spiking output k

f

(from equation

(15), representative of the LGMD cell output) is not

the final output of the neural network. It was

implemented a spiking mechanism using an

adaptable threshold. This threshold starts with a

value experimentally determined, T

s

(0.88) and it is

updated at each frame, through the following

process,

Where [T

l

, T

u

] defines the lower and upper limits

for adaptation (T

l

is 0.180 and T

u

is 0.90) , Δt is the

increasing step (0.01), ℿ (0.72) is a threshold that

limits the averaged spiking output s

av

,between frame

f-n to frame f-k (n is 5 and k is 2),

=

1

−+1

(17)

If the sigmoid membrane potential k

f

exceeds the

thresholdT

s

a spike is produced, as follows:

=

1,

≥

0,ℎ

(18)

Finally, a collision is detected when there are n

sp

spikes in n

ts

time steps (n

sp

≤ n

ts

), where n

sp

is 4 for

the simulated experiments and 3 to real experiments

(since captured images in real experiments present

higher variations) and n

ts

is 5 for all the experiments

(values experimentally determined).

=

1,

≥

0,ℎ

(19)

The robot escape behavior is initialized when a

collision is detected. Additionally, the spikes can be

suppressed by the FFI cell when occurs an intense

field movement. When the robot is turning, sudden

changes in the visual scenario occur which can lead

the network to produce spikes and even false

collision alerts.

The feed-forward inhibition cell (FFI cell) is

very similar to the LGMD cell but the FFI cell

receives the output from the P layer (and not from

the NR layer), being represented by:

=

∑∑

(

,

)

(20)

Where FFI

f

is the output of the FFI cell at frame

f and P

f-1

is the output of the P layer at frame f-1. If

FFI

f

exceeds a threshold T

FFI

, the spikes produced

by the LGMD cell are inhibited. The threshold T

FFI

was experimentally determined (set to 25).

As described in this section, the proposed neural

network for the LGMD neuron only involves low

level image processing. So, the proposed neural

network model is able to work in real time and,

besides that, is independent of object classification.

3 EXPERIMENTAL RESULTS ON

THE PROPOSED MODEL

In a way to test the efficiency of the LGMD neural

network here proposed, two different data sets were

used. The first experiment was made on a simulated

data set and, after that, it was used a recorded video

to prove the capacity of the LGMD neural network

here proposed to work in a real environment. In the

second experiment, we implemented the LGMD

neural network in a real robot, DRK8000, located

within a real arena. All the parameters were kept the

same during all the experiments.

3.1 Simulated Environment

We develop a simulation environment in Matlab

(MATLAB, 2011) that enables us to assess the

effectiveness of the proposed LGMD neural

=

+∆,

>ℿ

(

+∆

)

∈[

,

]

−∆,

<ℿ

(

−∆

)

∈[

,

]

,ℎ

(16)

LGMDbasedNeuralNetworkforAutomaticCollisionDetection

135

network. Objects were simulated according to their

movement and the corresponding data was acquired

by a simulated camera and processed by the LGMD

neural network. Image sequences were generated by

a simulated camera with a field of view of 60º in

both x and y axis and a sampling frequency of 100

Hz. The simulated environment enabled us to adjust

several parameters, such as: image matrix

dimensions, the camera rate of acquisition, the

image noise level, the object shape, the object

texture, as well as other parameters.

The computer used in the experiments here

described was a Laptop (Toshiba Portegé R830-

10R) with 4 GHz CPUs and Windows 7 operating

system. Relative to the parameters used by the

LGMD neural network, they were determined before

the experiments.

3.2 Results on Simulated Data Set and

on Real Recorded Data

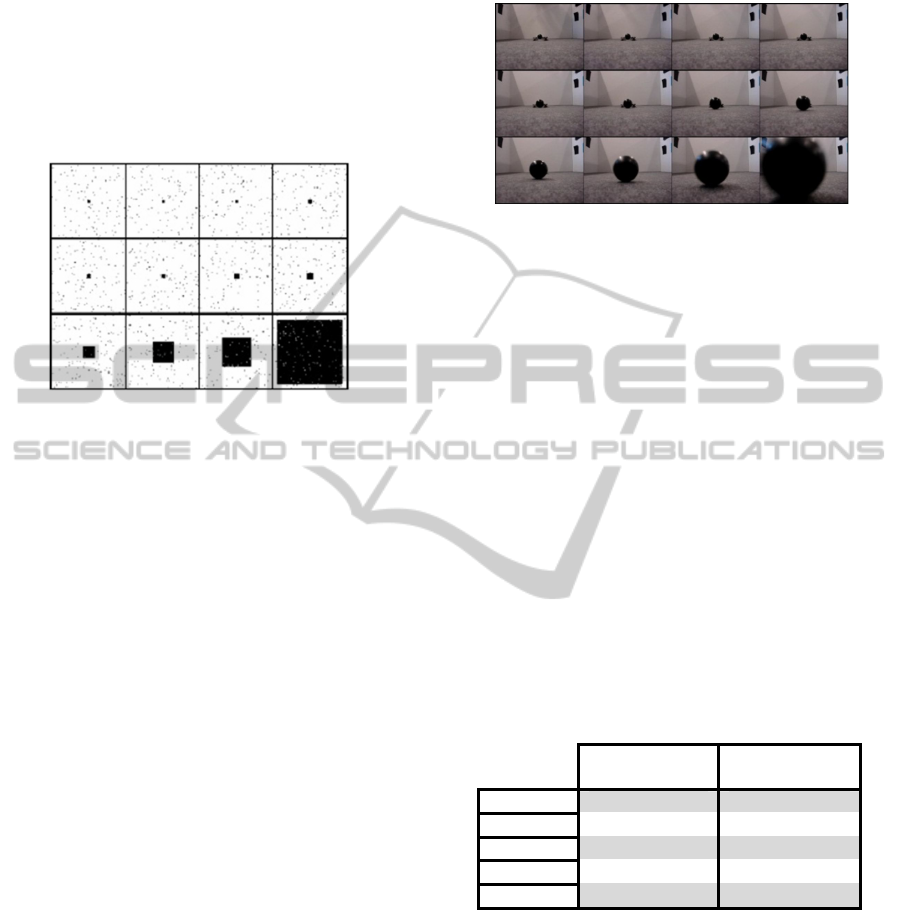

Previous to the stimulation of the LGMD model here

proposed, several experiments have been made in

order to verify and analyse how the image of a black

squared object grows when it is approaching to a

simulated camera. For that, we used synthesized

black (0) and white (255) images, with 100

(horizontal) by 100 (vertical) pixels of resolution.

The object being observed was a square black filled

rectangle (figure 2), whose properties as acquisition

frequency, velocity, trajectory, shape, texture, noise

level or object size could be changed.

The obtained results enabled us to conclude that

the image growing depends on several factors,

including the camera acquisition frequency and the

object velocity, among other characteristics.

However, the curve that approximates this growing

is always an exponential curve, whose slope depends

on these aforementioned factors.

As a second step, and in the context of this study,

we made an exhaustive analysis of the response of

our LGMD model to a set of standard LGMD

stimulation protocols, which allowed us to validate

our model with respect to the biological system

(Gabbiani, et al., 2001). In our first experiment we

evaluated the proposed LGMD model, by using a

looming stimulus consisting of a solid square with

10 repetitions to each size/velocity=l/|v| pair (where

l stands for the half length of the square object and v

for its linear velocity). With these experiments, we

wanted to prove that our model respects the

properties verified by Gabbiani et al. (Gabbiani, et

al., 1999) (Gabbiani, et al., 2001) as well as by

Badia (Badia, et al., 2010). These properties,

founded in the locust visual system, include a linear

relation between the time of the peak firing rate of

the LGMD neuron and the ratio that correlated the

stimulus object size (l) and the stimulus linear

velocity (v) (Gabbiani, et al., 2002).

As a first step, we analysed the LGMD model

here proposed using, for that, a looming stimulus in

the form of a black square. We repeated this

procedure to ten different l/|v| ratios, from 5 to 95

milliseconds. Through the obtained results it was

observed that the fit of the TTC (time-to-collision)

of the peak firing rate, obtained through the LGMD

neural network, versus the l/|v| ratios, is consistent

with the biological results, showing a correlation

coefficient (r) superior to 0.99.

In literature, it was also reported that the LGMD

neuron responses are largely independent of the

stimulus texture, shape and approaching angle

(Badia, et al., 2010) (Gabbiani, et al., 2001). The

results obtained when we subjected our model to

stimulus with different textures, different shapes and

different approaching angles (as shown on Figure 2)

to different l/|v| ratios (also from 5 to 95ms) showed

us that the proposed model still has a linear

relationship between the TTC of the peak firing rate

versus the l/|v| ratios, not being affected by the

change on the stimulus characteristics, as reported

for the biological system.

Figure 2: Artificial visual stimuli, developed in Matlab

(MATLAB, 2011).

In the first experiment made, to test the LGMD

model invariance to textured objects, the correlation

coefficient between the LGMD model responses and

the regression line was bigger than 0.99 (r>0.99),

meaning that the LGMD model is not sensitive to

the texture of the objects. Relatively to the second

experiment, which allowed us to test the LGMD

model invariance to object shape, the correlation

coefficient between the model responses and the

regression line was 0.97, approximately (r=0.9734).

And, for the last one, in order to test the LGMD

model invariance to different approaching angles of

the looming stimulus, we aligned the camera at

different angular orientations relatively to the

projection screen. After the analysis of the obtained

results we could conclude that, as the camera angle

deviates from the center (0º), the correlation

coefficient decays (for a camera angle of 33º of

deviation, r=0.995; for a camera angle of 55º of

ICINCO2012-9thInternationalConferenceonInformaticsinControl,AutomationandRobotics

136

deviation, r=0.9192 and, finally, for a camera angle

of 75º of deviation, r=0.874). Through this

validation, we could conclude that the LGMD model

here proposed respects the biological principles.

After the model validation, we fed the LGMD

neural networks proposed by (Yue and Rind, 2006)

(Meng, et al., 2010) and the one proposed by us,

with simulated image sequences (a representation

can be seen on Figure

3).

Figure 3: Selected frames from the simulated image

sequence. The square object changes its size from small

(10 by 10cm, l=5cm) to big, and moves at 100 cm/s

(v=100cm/s). The relation l/|v| is 50 milliseconds. The

noise level in all the image sequence is, approximately,

500 pixels. The frame rate was 100 Hz.

In this point, we used four different simulated visual

stimuli:

Stimulus 1: composed by a black approaching

square, over a white background, with l/|v| equal to

50 milliseconds, acquired with a frame rate of 100

Hz, without noise added to the image sequence.

Stimulus 2: composed by a black receding

square, over a white background, with l/|v| equal to

50 milliseconds, acquired with a frame rate of 100

Hz, without noise added to the image sequence.

Stimulus 3: composed by a black approaching

square, over a white background, with l/|v| equal to

50 milliseconds, acquired with a frame rate of 100

Hz, with 500 pixels of noise added to the image

sequence.

Stimulus 4: composed by a black receding

square, over a white background, with l/|v| equal to

50 milliseconds, acquired with a frame rate of 100

Hz, with 500 pixels of noise added to the image

sequence.

In addition to these four simulated visual stimuli,

and in order to test the LGMD models in a real

environment, we recorded a real video sequence,

using a Sony Cyber shot digital camera 7.2

megapixels to obtain the video clip. The resolution

of the video images was 640 by 480 pixels, with an

acquisition frequency of 30 frames per second. In

Figure 4 it is represented some selected frames

captured by the camera, showing a real approaching

black ball.

Figure 4: Selected frames from the recorded image

sequence used in the experiment. The recorded video is

composed by 44 frames, showing a black approaching

ball.

After the computational implementation of the

LGMD models proposed in (Yue and Rind, 2006)

and (Meng, et al., 2010), and after subject those to

all the stimuli previously described, we verify that

the collisions were detected, by the different LGMD

models, at different time instants and, consequently,

at different distances of the object (simulated or real)

relatively to the camera. For a better understanding

and organization of the results, we decided to call

“LGMD model 1” to the model proposed by (Yue

and Rind, 2006) and “LGMD model 2” to the model

proposed by (Meng, et al., 2010).

The results obtained are resumed in the

following table.

Table 1: Distances at which collision detection alarms

were generated by the LGMD model 1 and LGMD model

2, in five different situations tested.

LGMD model 1 LGMD model 2

Stimulus 1 26 cm 14 cm

Stimulus 2 35 cm --

Stimulus 3 26 cm 20 cm

Stimulus 4 35 cm 11 cm

Real video 24 cm 14 cm

As we can observe on Table 1, in the

approaching situations (stimulus 1, 3 and real video),

the LGMD model 1 detected a collision when the

object was located at, approximately, 24-26 cm

relatively to the camera. This model showed its

immunity to the noise presence since it detected a

collision exactly at the same distance when

stimulated with stimulus 1(absence of noise) and 3

(presence of high noise levels). However, if we

observe the obtained results for the LGMD model 1

when stimulated with receding objects (stimulus 2

and 4) it detected a false collision when the object

LGMDbasedNeuralNetworkforAutomaticCollisionDetection

137

was located at 35 cm relatively to the camera, in

both situations tested. Through these last results one

can conclude that the LGMD model 1 is not able to

distinguish between approaching and receding

objects, generating false collision alerts in the

presence of receding objects. But we can also

conclude that this model has high immunity to the

noise presence in the captured images.

Relatively to the LGMD model 2 and observing

Table 1, for the stimulus 1 and 3, this model did not

detect collisions for the same distance. When

stimulated with stimulus 1, it detected a collision

when the object was located at 14 cm relatively to

the camera and when stimulated with stimulus 3, a

collision was detected sooner, when the object was

at 20 cm relatively to the camera. This happened due

to the fact that the LGMD model 2 is not immune to

the noise presence and the noise pixels, which were

not eliminated by this model, composed an extra

excitation to the LGMD neural network.

In the presence of a receding object, the LGMD

model 2 was able to not produce false collision alerts

when stimulated with stimulus 2. However, when we

feed the LGMD model 2 with the stimulus 4, it

detected a false collision when the object was

located at 11 cm relatively to the camera. This

happened also due to the non-immunity of the

LGMD model 2 to the noise presence, which works

as an extra excitation, leading to the generation of

false collision alerts.

After this analysis, relative to the behaviour of

the LGMD model 1 and LGMD model 2 in different

situations, we could extract some particular

characteristics of both models. These results leaded

us to produce a mixed LGMD model, combining the

advantages of the LGMD model 1 and LGMD

model 2. Thus, the LGMD model here proposed

provides noise immunity, as well as a directionally

sensitive system.

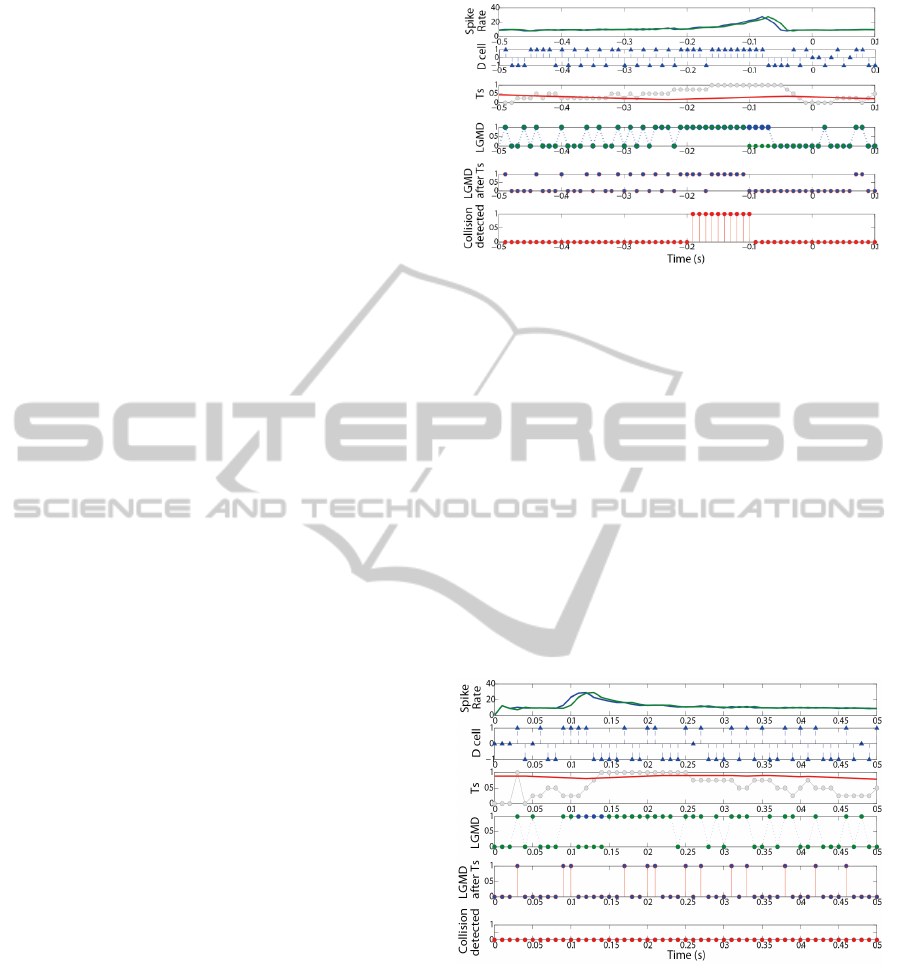

Figure 5 shows the output from the LGMD

model here proposed. In this figure, at each time step

we can observe the result of different mathematical

processing (described on section 2), corresponding

to the layers of the proposed model, executed

sequentially, necessary to detect, with the maximum

precision, an imminent collision.

The analysis of these results showed, on Figure

5, that the LGMD neural network detected a

collision at time -0.19 seconds, i.e., when the object

was located at 19 cm relatively to the camera.

In relation to the receding object, represented on

Figure 6, as expected no collisions were detected.

The results previously described showed the

efficacy of the LGMD neural network proposed by

Figure 5: LGMD model response to an approaching object

which l/|v| set at 50 milliseconds. Spike Rate: blue graph:

is obtained by the ratio of the A cell value and the total

number of cells in the NR layer. Green graph: is obtained

by the ratio of the R cell value and the total number of

cells in the NR layer. D cell: output of the direction cell: 1:

approaching, 0: no significant movement, -1: receding. Ts:

adaptative threshold represented by the red line; the gray

points represent the s

av

output. LGMD: Blue graph: output

of the LGMD cell (mathematically represented by the k

f

value). Green points: output of the LGMD cell after the

Feed-forward inhibition. LGMD after T

s

: represents the

output of the LGMD cell after the application of the

threshold T

s

and being in account the output of the D cell.

Collision detected: the output of this graph is one when it

is detected four successive spikes in five successive time-

steps. In all these graphs, the zero value corresponds to the

time of collision.

Figure 6: LGMD model response to a receding object

which l/|v| was equal to 50 milliseconds. The legend of

this figure is similar to the one described on the figure 5.

us. On Figure 5 and Figure 6, it is shown the LGMD

model immunity to high noise levels, as well as the

capability of this model in distinguish the direction

of movement between successive frames. Then, to

test the capability of the proposed LGMD model in a

more realistic environment, we subjected it to the

real video sequence, represented on Figure 4. In this

situation, the model produced a collision alert when

ICINCO2012-9thInternationalConferenceonInformaticsinControl,AutomationandRobotics

138

the object was located at 28 cm relatively to the

camera.

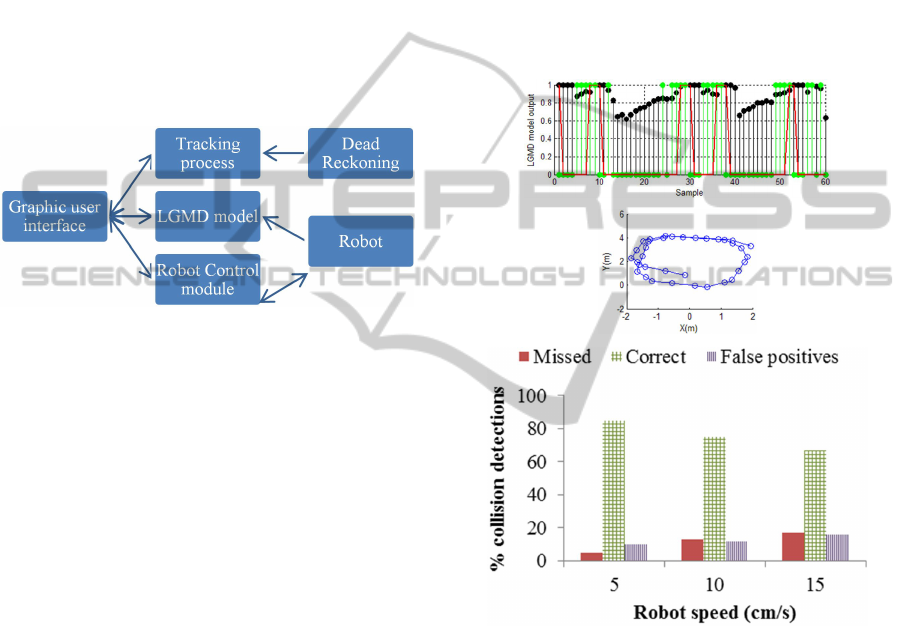

3.3 Results on a Real Robot

In order to assess the capability of the LGMD model

here proposed in a real environment, we used a

DRK8000 mobile robot, with a 8-bit CIF (352 by

288 pixels) colour CMOS camera, working at 10Hz,

having a field-of-view of 70 degrees, approximately.

The robot was located within an arena, surrounded

by four walls with attached objects with different

colours, shapes, textures and sizes. The arena has 16

m

2

. We used the dead reckoning process in order to

predict the position of the robot at each time instant.

Figure 7: Integrated simulation processes used in the real

experiment.

As Figure 7 shows, the simulation system used

comprises four processes: The LGMD model

module, the robot control module, the tracking

module and the graphical user interface. The

experiment ran in real-world time, with 10 time

steps per second. The LGMD model module was

composed by the different layers observed on Figure

1, and the final output of this model comprises two

different states: “collision detected” or “non-

collision detected”.

The second module, the robot control module,

consists in the reactive control structure, capable of

controlling the robot, using only the output of the

LGMD model module. The behaviours comprised

by this module, can be divided in two: 1- basic

exploratory activity; 2- collision avoidance of

obstacles, triggered by the response of the LGMD

module. If the robot detects an imminent collision, it

stops, rotates and, then, continues the movement in a

straight line. The turning speed is 1/3 of the robot

speed for the left wheel and -1/3 of the robot speed

to the right wheel. The robot was set to rotate during

1 second. Finally, in relation to the tracking process,

we used dead reckoning in order to determine the

position of the robot at each time step and, then, use

this information to infer about the distance at which

the robot deviates of a potential collision/obstacle.

In the experiment, three long robot movement

periods (120 seconds, speed at 5, 10 and 15 cm/s)

were conducted to test and show the mechanism of

the collision detector in a real environment.

After the experiment, and through the analysis of

the dead reckoning relative to the robot movement

during all the running time, we could extract, as well

as characterize, the collision detections. Collision

detections between 20cm and 100cm away from the

wall were classified as correct, those detected closer

than 20cm from the wall were classified as missed,

and collisions detected at a distance over 100cm as

false positives (see Figure 8).

Figure 8: Top graph: LGMD model output, running at real

time, for different LGMD layers, during the experiment

with the DRK8000 robot, for a robot speed of 5 cm/s.

Midle graph: Dead reckoning of the robot during the

initial time steps of the experiment, for a robot speed of 5

cm/s. Categorization of the collision detections as missed,

correct and false positives, for three different robot

velocities tested: 5, 10 and 15 cm/s.

As represented on Figure 8, as the velocity of the

robot increases, the percentage of collision

detections classified as correct decreases, as well as

the percentage of missed and false positives

detections increases. The increase of missed

collisions to higher speeds was due to the simple

collision avoidance mechanism adopted in this

article: the robot always turns to the same side

LGMDbasedNeuralNetworkforAutomaticCollisionDetection

139

regardless the relative position of the nearby objects.

The increase in the number of false positives to

higher velocities is based on the fact that, at higher

velocities, the difference between successive frames

is higher, leading to the production of high

excitation levels and, consequently, a bigger number

of collision detection alarms.

Although the difference verified in relation to

correct collision detections between different

velocities, the results obtained are very satisfactory, as

the number of correct detections are always higher

than the sum of missed and false positive detections.

4 CONCLUSIONS

In this paper, we propose a modified LGMD model

based on the identified LGMD neuron of the locust

brain. The model proved to be a robust collision

detector for autonomous robots. This model has a

mechanism that favours grouped excitation, as well as

two cells with a particular behaviour that provide

additional information on the depth direction of

movement.

For applications as collision detectors in

robotics, the model proposed is able to remove the

noise captured by the camera, as well as enhance its

ability to recognize the direction of the object

movement and, by this way, remove the false

collision alarms produced by the previous models

when a nearby object is moving away.

Experiments with a DRK8000 robot showed that

with these two new

procedures, the robot was able to

travel autonomously in real time and within a real

arena.

The results illustrate the benefits of the LGMD

based neural network here proposed, and, in the

future, we will continue to use and enhance this

approach, using, for that, a combination of

physiological and anatomical studies of the locust

visual system, in order to improve our understanding

about the relation between the LGMD neuron output

and the locust muscles related to the avoidance

manoeuvres.

ACKNOWLEDGEMENTS

Work supported by the Portuguese Science

Foundation (grant PTDC/EEA-CRO/100655/2008).

Ana Silva is supported by PhD Grant

SFRH/BD/70396/2010, granted by the Portuguese

Science Foundation.

REFERENCES

Badia, S. B. i., Bernardet, U. & Verschure, P. F. M. J.,

2010. Non-Linear Neuronal Responses as an Emergent

Property of Afferent Networks: A Case Study of the

Locust Lobula Giant Movement Detector. PLoS

Comput Biol, 6(3), p. e1000701.

Blanchard, M., Rind, F. C. & Verschure, P. F. M. J., 2000.

Collision avoidance using a model of the locust

LGMD neuron. Robotics and Autonomous Systems,

30(1), pp. 17-37.

Gabbiani, F., Krapp, H. G., Koch, C. & Laurent, G., 2002.

Multiplicative computation in a visual neuron sensitive

to looming. Nature, Volume 420, pp. 320-324.

Gabbiani, F., Krapp, H. & Laurent, G., 1999. Computation

of object approach by a wide-field motion-sensitive

neuron. J. Neurosci., Volume 19, 1122-1141.

Gabbiani, F., Mo , C. & Laurent, G., 2001. Invariance of

Angular Threshold Computation in a Wide-Field

Looming-Sensitive Neuron. The Journal of

Neuroscience, 21(1), p. 314–329.

Gray, John R , J. R., Lee, J. K. & Robertson, R., 2001.

Activity of descending contralateral movement detector

neurons and collision avoidance behaviour in response

to head-on visual stimuli in locusts. Journal of

Comparative Physiology A, Volume 187, pp. 115-129.

Gray, J. R., Blincow, E. & Robertson, R., 2010. A pair of

motion-sensitive neurons in the locust encode approaches

of a looming object. Journal of Comparative Physiology

A: Neuroethology, Sensory, Neural, and Behavioral

Physiology, 196(12), pp. 927-938.

Guest, B. B. & Gray, J. R., 2006. Responses of a looming-

sensitive neuron to compound and paired object

approaches. Journal of Neurophysiology, 95(3), pp.

1428-1441.

MATLAB, 2011. version 7.12.0 (R2011a). Natick,

Massachusetts: The MathWorks Inc..

Meng, H., Yue, S., Hunter, A. Appiah, K., Hobden, M.

Priestley, N., Hobden, P. & Pettit, C., 2009. A

modified neural network model for the Lobula Giant

Movement Detector with additional depth movement

feature. Atlanta, Georgia: Proceedings of International

Joint Conference on Neural Networks, 14-19 June.

Rind, F., 1987. Non-directional, movement sensitive neurones

of the locust optic lobe. Journal of Comparative

Physiology A: Neuroethology, Sensory, Neural, and

Behavioral Physiology, Volume 161, pp. 477-494.

Rind, F. C. & Bramwell, D. I., 1996. Neural Network

Based on the Input Organization of an Identified

Neuron Signaling Impeding Collision. Journal of

Neurophysiology, 75(3), pp. 967-985.

Stafford, R., Santer, R. D. & Rind, F. C., 2007. A bio-

inspired visual collision detection mechanism for cars:

combining insect inspired neurons to create a robust

system. BioSystems, Volume 87, pp. 164-171.

Yue, S. & Rind, F.C., 2006. Collision detection in

complex dynamic scenes using an LGMD-based

visual neural network with feature enhancement. IEEE

transactions on neural networks. 17 (3), 705-716.

ISSN 1045-9227.

ICINCO2012-9thInternationalConferenceonInformaticsinControl,AutomationandRobotics

140