Spaxels, Pixels in Space

A Novel Mode of Spatial Display

Horst Hörtner, Matthew Gardiner, Roland Haring, Christopher Lindinger and Florian Berger

Ars Electronica Futurelab, Ars Electronica Strasse 1, A-4040 Linz, Austria

Keywords: Space Pixel, Spaxel, Voxel, Pixel, Media Display Technology, Spatial Imaging, 3D Visualization.

Abstract: We introduce a novel visual display paradigm based on controllable, moving, visible objects in physical space. The term Spaxels is a blend of "space" and "pixels". It takes the notion of a pixel and frees it from the confines of the static two-dimensional matrix of a screen or projection surface, allowing it to move three-dimensionally in space. Spaxels extend the notion of Voxels (volumetric pixels) in that a Spaxel can physically move, as well as transition in colour and shape. Our current applied research is based on the control of a swarm of unmanned aerial vehicles, equipped with RGB lighting and a positioning system, that can be coordinated in three dimensions to create a morphing floating display. This paper introduces Spaxels as a novel concept and paradigm for a new kind of spatial display.

1 INTRODUCTION

Visual media have undergone countless evolutionary transformations through innovations in technology: from the first primitive though enduring method of aboriginal cave painting, to oil painting, still photography, celluloid film, video and holography, to present-day large LED displays, transparent displays and stereoscopic displays. Common factors that define these modes of display are two-dimensional matrices of image elements, fixed planes of display, and the static nature of the display elements, such as fixed-position pixels or chemical compounds like paint or silver gelatine. The break from the fixed two-dimensional image plane has been a long-anticipated leap. Hiroshi Ishii of the MIT Media Lab predicts the next phase of human-computer interaction with his notion of Radical Atoms (Ishii et al., 2012).

Though the vision of Radical Atoms is more concerned with the physicality of computational media at a smaller, atomic scale, the notion of Spaxels falls neatly into this idea of a transformable, kinetic, computable environment in which visual media can be expressed. In such an environment, each visual element can be positioned at a required location and relocated as required, allowing the image to expand to fill a field of view and allowing changes in Spaxel density/luminosity, rotation, and scale in space.

2 RELATED WORK

A significant exception to the 2D confinement of the image plane is True 3D (Kimura et al., 2011), a system by Burton Inc. and Keio University that employs laser-induced plasma to generate dots of plasma, like pixels, in mid-air. Their 2011 paper reported an increase from 300 points/s in their 2006 model to 50,000 points/s, in a volume of 20 × 20 × 20 cm. The researchers anticipate that their next version will include red, green and blue plasma, overcoming the limitation of the monochromatic image. The excitation of air or water particles is an important leap in 3D imaging. Kinetic Sculpture (Sauter et al., 2011), an artwork by Art+Com for BMW, uses 715 metal spheres suspended from a kinetic vertical cable system. The cables position the spheres vertically in space to create images of BMW vehicles and waves. Ocean of Light ("ocean of light : home," n.d.) by Squidsoup is a volumetric array of RGB LEDs arranged vertically on hanging strips; it also generates wave forms as light sculpture in response to audience interactions. Several other works, such as The Source at the London Stock Exchange, have addressed the idea of kinetic sculpture as a mode of transformable image display.

Additionally the use of unmanned aerial vehicles

for various manipulations in physical space recently

became a prospering research field. Among many

other research labs the GRASP Lab at the

19

Hörtner H., Gardiner M., Haring R., Lindinger C. and Berger F..

Spaxels, Pixels in Space - A Novel Mode of Spatial Display.

DOI: 10.5220/0004126400190024

In Proceedings of the International Conference on Signal Processing and Multimedia Applications and Wireless Information Networks and Systems

(SIGMAP-2012), pages 19-24

ISBN: 978-989-8565-25-9

Copyright

c

2012 SCITEPRESS (Science and Technology Publications, Lda.)

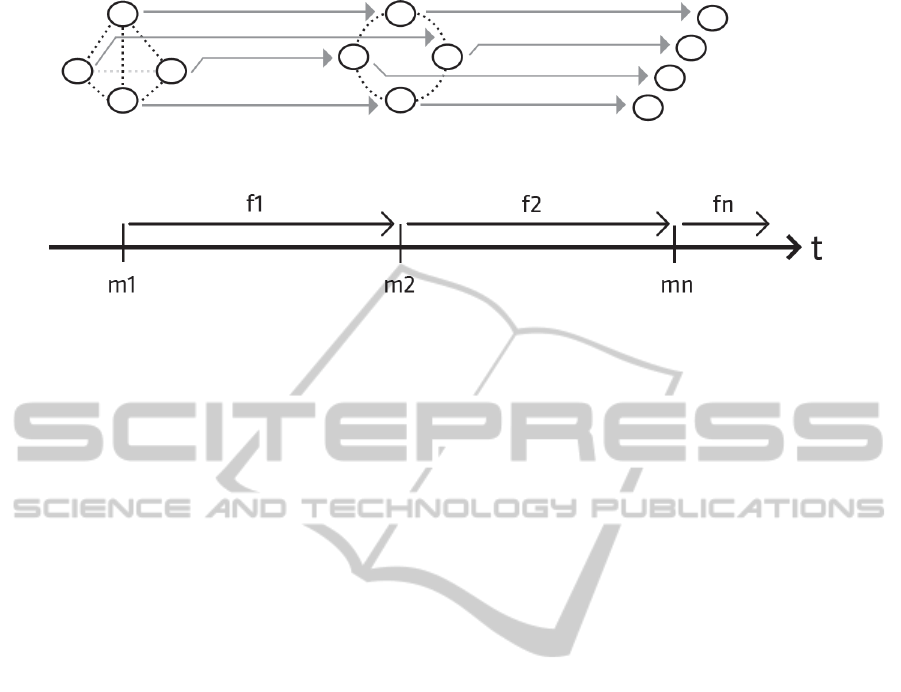

Figure 1: Spaxels moments (m

1

, m

2

, m

n

) in relation to Spaxels flow (f

1

, f

2

, f

n

).

Pennsylvania University (Michael et al., 2010) and

the Flying Machine Arena of the ETH Zurich

(Schoellig et al., 2010) received a lot of acclamation

for their ground-braking experimental work.

Our concept of Spaxels extends previous work in the field by proposing a controllable swarm of lit objects in 3D space and time that is not connected to a cabled system. Instead, Spaxels are kept aloft independently by aerial vehicles. Our vision is of a transformable display of pixels in space, hence the coining of the term Spaxels: Space Pixels.

3 NATURE OF SPAXELS

To illustrate the concept of Spaxels, two aspects have to be considered: the nature of a single Spaxel and the nature of a collective of Spaxels that form a Spaxel Sculptural Image (SSI).

3.1 Nature of Singular Spaxel

In a general sense, Spaxels are analogous to volumetric pixels (Voxels) with the addition of the property of movement. A Spaxel has the following defining properties: colour, position in physical 3D space, and a trajectory vector. Orientation and shape are optional properties that extend Spaxel functions.
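The properties above can be captured in a small record type. The following Python sketch is illustrative only: the class and field names, the units (metres, metres per second) and the reading of the trajectory vector as a velocity are our assumptions, not part of a Spaxel specification.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Spaxel:
    """A single Spaxel: three defining properties plus two optional ones."""
    colour: Tuple[int, int, int]        # RGB, 0-255 per channel
    position: Vec3                      # metres in a local 3D frame
    trajectory: Vec3                    # assumption: velocity vector in metres per second
    orientation: Optional[Vec3] = None  # optional, e.g. roll/pitch/yaw in radians
    shape: Optional[str] = None         # optional, hypothetical shape label

    def advance(self, dt: float) -> "Spaxel":
        """Return a copy moved along its trajectory for dt seconds."""
        new_pos = tuple(p + v * dt for p, v in zip(self.position, self.trajectory))
        return replace(self, position=new_pos)
```

Making the record immutable means a Spaxel moment can hold a consistent snapshot while the next state is computed separately.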

3.2 Nature of Spaxel Sculptural Image

When free-floating physical objects are used to generate sculptural images, two overall aspects need to be considered: the first is the sculptural image itself, and the second is the transition towards this sculptural image. This can be compared to classical animation strategies such as "key frames" and "in-betweening". In the following, we call the discrete state "Spaxel Moment" and the transitional state "Spaxel Flow". Both need aesthetic consideration when constructing visual representations with Spaxels.
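In animation terms, a Spaxel moment plays the role of a key frame and the Spaxel flow that of in-betweening. A minimal Python sketch of this analogy, assuming straight-line (linear) interpolation of position and colour and a fixed one-to-one correspondence between the Spaxels of consecutive moments; neither assumption is prescribed by the concept, and linear paths ignore collisions entirely.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
RGB = Tuple[float, float, float]
# A Spaxel moment: for each Spaxel index, its position and colour.
Moment = List[Tuple[Vec3, RGB]]


def lerp(a: tuple, b: tuple, t: float) -> tuple:
    """Component-wise linear interpolation between tuples a and b."""
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))


def flow(start: Moment, end: Moment, steps: int) -> List[Moment]:
    """In-between states from start to end, both key frames included.

    Assumes Spaxel i in start is the same physical unit as Spaxel i in end.
    """
    if len(start) != len(end):
        raise ValueError("both moments must contain the same Spaxels")
    if steps < 1:
        raise ValueError("steps must be at least 1")
    frames = []
    for k in range(steps + 1):
        t = k / steps
        frames.append([(lerp(p0, p1, t), lerp(c0, c1, t))
                       for (p0, c0), (p1, c1) in zip(start, end)])
    return frames
```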

3.2.1 Spaxels Moment

Spaxel moments describe a specific state of the sculptural display at a discrete moment in time. They can be compared to frames in traditional display technologies. There are several conceivable ways in which such a frame, or Spaxel moment, can be defined in the course of a specific visualization. Spaxels can be controlled by a global instance that synchronizes their properties, transmits commands and thereby guarantees the overall image. This instance can either be integrated into one specific Spaxel (leader of the swarm) or be located outside, at a remote location (remote control). Additionally, Spaxels can self-organize based on predefined models and algorithms and reach distinct layouts in time and space based on local data and decisions.

3.2.2 Spaxels Flow

When designing an authority that computes and defines discrete states of the Spaxel display, it is necessary to consider the transitions between these Spaxel moments. This integrated capability is a mandatory property for maintaining a coherent overall visualization. We refer to it as the flow of the Spaxels (see Figure 1). Since the main aspects of the flow of Spaxels, besides aesthetic considerations, are collision avoidance and overall responsiveness, this capability can imply complex algorithmic requirements. Simple implementations such as shortest-path algorithms might be replaced by more complex swarm-like behavior (Reynolds, 1987) or by generative and autonomous algorithmic strategies (Bürkle et al., 2011), depending on the resolution and Spaxel density of the display.
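For the simplest flow strategy mentioned above, where each Spaxel travels along a straight line during a transition, collisions can be detected with a closest-point-of-approach test. The Python sketch below is our illustration, not the method used in the Spaxels system: it assumes constant velocities during one flow segment and a hypothetical safety radius around each Spaxel; a full check would apply it to every pair of Spaxels.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def min_separation(pa: Vec3, va: Vec3, pb: Vec3, vb: Vec3, duration: float) -> float:
    """Smallest distance between two Spaxels moving at constant velocity over [0, duration]."""
    d = tuple(b - a for a, b in zip(pa, pb))  # relative position
    w = tuple(b - a for a, b in zip(va, vb))  # relative velocity
    ww = dot(w, w)
    # Time of closest approach, clamped to the flow segment.
    t = 0.0 if ww == 0.0 else max(0.0, min(duration, -dot(d, w) / ww))
    closest = tuple(di + wi * t for di, wi in zip(d, w))
    return math.sqrt(dot(closest, closest))


def flow_is_safe(pa: Vec3, va: Vec3, pb: Vec3, vb: Vec3,
                 duration: float, safety_radius: float) -> bool:
    """True if the pair never comes closer than the (hypothetical) safety radius."""
    return min_separation(pa, va, pb, vb, duration) >= safety_radius
```

If a pair fails the test, the flow for that segment would have to be replanned, for instance with one of the swarm-like strategies cited above.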

Figure 2: A single Spaxel represented by a quadrocopter, an unmanned aerial vehicle with preliminary LED lighting.

4 PROOF OF CONCEPT AND RESEARCH SETTING

A set of unmanned aerial vehicles (see Figure 2) was chosen as the research environment. The research group is currently working on a setup of 50 of these vehicles to conduct the proof of concept.

4.1 Embodiment of a Single Spaxel

Each Spaxel used in the current research is represented by an Ascending Technologies Hummingbird quadrocopter ("AscTec Hummingbird AutoPilot | Ascending Technologies," n.d.). These unmanned aerial vehicles (UAVs) provide a GPS-based autopilot for autonomous outdoor waypoint navigation. A dedicated high-level processor, which can be programmed by the researchers, controls the flight maneuvers of the quadrocopters. The maximum flight time is at least 20 minutes, and the maximum speed of the vehicle is more than 50 km/h. The UAV is controlled by a base station that transmits commands over distances of up to 600 meters, using a proprietary radio technology in the 2.4 GHz band. The quadrocopter has a high-level and a low-level processor. The high-level processor is used for communication and LED control. The low-level processor performs the actual flight control based on the GPS waypoints received. Each unmanned aerial vehicle is equipped with an array of RGB LEDs, which can be altered in hue and brightness through corresponding remote commands. Figure 3 shows the basic scheme of the radio communication. The proprietary LairdTech radio chipset uses channel hopping to maximize and stabilize the transmission; therefore, only one physical channel is available. Within this channel, the communication follows a time-synchronized, request-reply-based 1:n broadcast scheme.

Figure 3: Basic layout of the broadcast communication scheme.

Table 1 gives an overview of the available commands.

Table 1: Available control commands.

| From | To | Command |
|------|----------|---------|
| SU | SP, G, A | LED |
| SU | SP | WP |
| SU | SP, G, A | L |
| SU | SP, G, A | P |
| SU | SP | R |
| SP | SU | U |

The server unit (SU) sends broadcast packets to all Spaxel units. Each network packet uses a distinct addressing scheme at the application level: individual Spaxels (SP), predefined groups of Spaxels (G) or all Spaxels (A) can be addressed. The in-flight commands update the waypoints (WP) the Spaxel should use for its next movement path, change the RGB value of the LED lighting (LED), send the Spaxel to a predefined parking position (P), make it land (L), or request it to transmit current flight data such as speed, position, altitude and charge (R). Whenever a Spaxel receives a flight data request, it has to reply within a predefined timeframe; otherwise, the Spaxel is sent to its parking position and ultimately requested to land.
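The addressing rules of Table 1 can be expressed compactly. The following Python sketch is hypothetical: the class names, the integer identifiers for Spaxels and groups and the validation step are our own illustration and do not describe the actual packet format of the LairdTech radio link.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet


class Command(Enum):
    LED = "LED"  # change RGB value of the LED lighting
    WP = "WP"    # update waypoints for the next movement path
    L = "L"      # land
    P = "P"      # fly to predefined parking position
    R = "R"      # request current flight data
    U = "U"      # flight data reply, Spaxel to server (never broadcast by SU)


class Scope(Enum):
    SPAXEL = "SP"  # one individual Spaxel
    GROUP = "G"    # a predefined group of Spaxels
    ALL = "A"      # every Spaxel


# Scopes permitted for server-to-Spaxel commands, following Table 1.
ALLOWED_SCOPES = {
    Command.LED: {Scope.SPAXEL, Scope.GROUP, Scope.ALL},
    Command.WP: {Scope.SPAXEL},
    Command.L: {Scope.SPAXEL, Scope.GROUP, Scope.ALL},
    Command.P: {Scope.SPAXEL, Scope.GROUP, Scope.ALL},
    Command.R: {Scope.SPAXEL},
}


@dataclass(frozen=True)
class Packet:
    command: Command
    scope: Scope
    target: int = 0  # hypothetical Spaxel or group id; ignored for Scope.ALL

    def __post_init__(self) -> None:
        if self.scope not in ALLOWED_SCOPES.get(self.command, set()):
            raise ValueError(f"{self.command.name} cannot be sent to scope {self.scope.value}")


def accepts(packet: Packet, spaxel_id: int, group_ids: FrozenSet[int]) -> bool:
    """Every Spaxel hears each broadcast and keeps only packets addressed to it."""
    if packet.scope is Scope.ALL:
        return True
    if packet.scope is Scope.GROUP:
        return packet.target in group_ids
    return packet.target == spaxel_id
```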

Whenever a Spaxel no longer receives commands from the server, it will also go to its parking position. If no GPS signal is available, the Spaxel will stop and hover in place. Shortly before its battery is depleted, it will perform an emergency landing, using the barometer for approximate height measurements.
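The failsafe behaviour described in this subsection can be summarised as two small decision functions, one on board and one on the server. This Python sketch is illustrative: the function names, the priority order among the conditions, the boolean inputs and the two-second command timeout are assumptions chosen for the example, not values given for the Spaxels system.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    CONTINUE = "follow server commands"
    HOVER = "stand still and wait in air"
    PARK = "fly to parking position"
    EMERGENCY_LAND = "emergency landing using barometric height"


def onboard_failsafe(battery_low: bool, gps_ok: bool,
                     seconds_since_last_command: float,
                     command_timeout: float = 2.0) -> Action:
    """Onboard reaction of a Spaxel; the priority order and timeout are assumptions."""
    if battery_low:  # battery nearly empty: land regardless of other conditions
        return Action.EMERGENCY_LAND
    if not gps_ok:   # no GPS: cannot navigate, so hold position
        return Action.HOVER
    if seconds_since_last_command > command_timeout:
        return Action.PARK  # contact with the server has been lost
    return Action.CONTINUE


def server_reaction(reply_missed: bool, at_parking: bool) -> Optional[str]:
    """Server side: a Spaxel that misses its reply deadline is parked, then landed."""
    if not reply_missed:
        return None
    return "L" if at_parking else "P"  # command codes from Table 1
```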


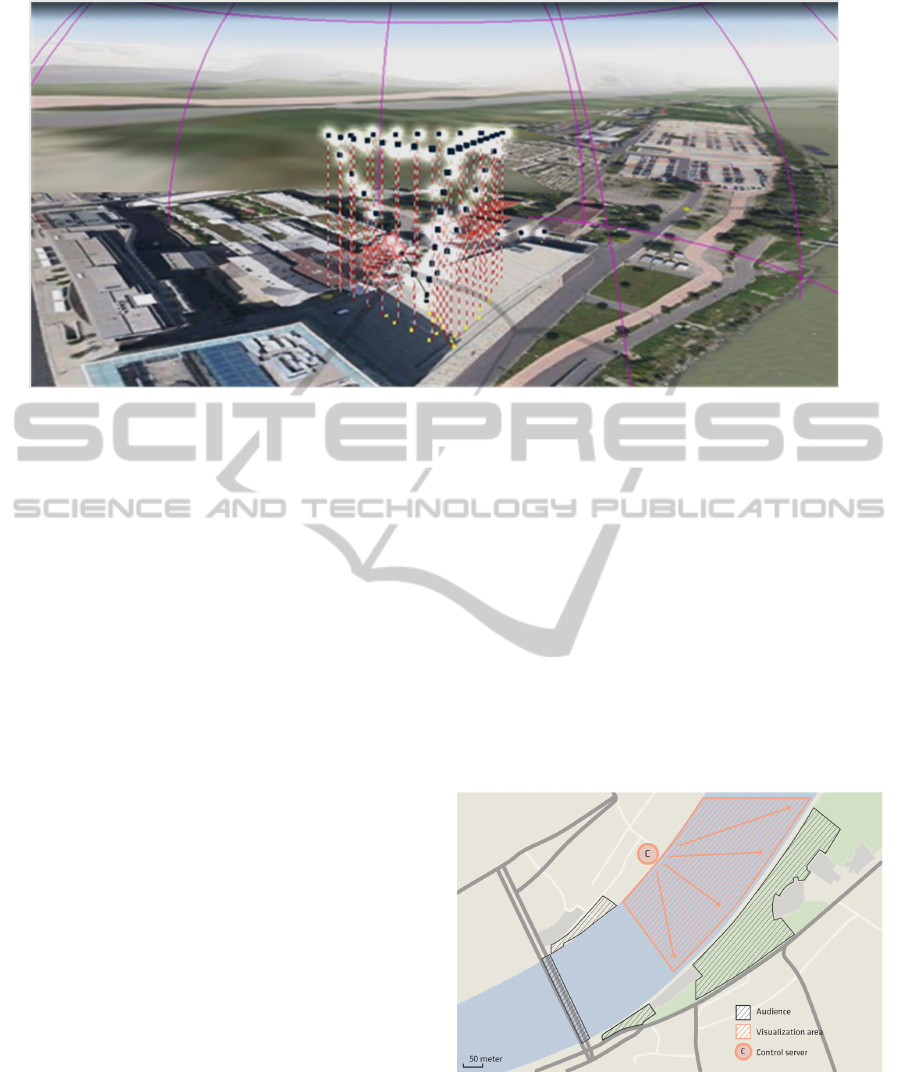

Figure 4: Screenshot of the current development state of the Spaxels simulator and control server.

4.2 Embodiment of a Sculptural Image

The first implementation of a Spaxel display will consist of a swarm of 50 individual UAVs. Each of these Spaxels is remotely controlled by a central ground-based server. The server runs a virtual simulation tool that calculates patterns in space and trajectories for each Spaxel. Furthermore, the visual properties of the LEDs are determined based on generative patterns. From this data, discrete Spaxel moments are computed. They are transmitted to the UAVs using eight parallel physical radio channels. The UAVs interpret them as waypoints and compute the transitions as Spaxel flow locally. In addition, the UAVs transmit their actual position and speed to the server to update the simulation. The current data transmission and overall swarm update frequency varies between 3 and 5 frames per second. Figure 4 shows a screenshot of the current development state of the simulation and control server. Orthographic images are used as floor texture, as a reference for the swarm operator. The current position, flight path and illumination state of each Spaxel are visualized. Furthermore, a hemisphere restricts the possible movement of the Spaxels to a predefined maximum distance from the server unit.
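The hemispherical boundary can be enforced with a simple geometric test before waypoints are transmitted. In this Python sketch we assume that the hemisphere is centred on the server unit with its flat side at ground level, that coordinates are local and metric with z pointing up, and, hypothetically, that an out-of-bounds waypoint is replaced by the nearest allowed point.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def inside_hemisphere(p: Vec3, centre: Vec3, radius: float) -> bool:
    """True if p is not below the centre's height and lies within radius of the centre."""
    dx, dy, dz = (p[0] - centre[0], p[1] - centre[1], p[2] - centre[2])
    return dz >= 0.0 and dx * dx + dy * dy + dz * dz <= radius * radius


def clamp_to_hemisphere(p: Vec3, centre: Vec3, radius: float) -> Vec3:
    """Return the nearest point to p inside the allowed hemisphere."""
    dx, dy, dz = (p[0] - centre[0], p[1] - centre[1], p[2] - centre[2])
    dz = max(dz, 0.0)  # points below the base plane are lifted onto it
    dist = math.sqrt(dx * dx + dy * dy + dz * dz)
    if dist > radius:  # distant points are pulled back radially onto the dome
        s = radius / dist
        dx, dy, dz = dx * s, dy * s, dz * s
    return (centre[0] + dx, centre[1] + dy, centre[2] + dz)
```

Because the base plane passes through the centre, lifting a point onto the plane and then scaling it radially yields the true nearest point of the half-ball.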

The main tasks of the server unit are to synchronize and control the movement of the Spaxels, avoid collisions and alter their visual properties. Furthermore, the server automatically triggers emergency routines in case control over a Spaxel unit can no longer be maintained.

4.3 Proof of Concept Setting

The concept of Spaxels does not determine the overall scale of the visualization per se. Applications can range from indoor micro-Spaxel visualizations to large outdoor scenarios. The scope of our current research is to realize a large-scale Spaxel display for an audience of several thousand viewers.

The overall visualization space spans several hundred meters. The different possible viewpoints of the audience need to be considered to achieve a coherent visualization. Figure 5 shows the spatial setup we are working on, with overall dimensions of roughly 500 by 500 meters.

Figure 5: Proposed audience and visualization area.

5 RESEARCH CONSIDERATIONS

Spaxels open up a number of new areas for consideration, such as density/luminosity, immersion, rotation, field of view, scale, shape, and point cloud rendering. In general, it is important first to identify properties that are analogous to properties of conventional display technology, and second to identify conceptually new capabilities that go beyond them.

5.1 Density of Image

The notions of scale and resolution within an SSI become a matter of the luminosity and density of image elements. In the case of a Spaxel display, density can be optimized to best suit the display of a given image or object, given the resolution, or number of Spaxels. For example, a Spaxel image of a human with arms by the sides, being taller than it is wide, would be shown from a topologically optimal point of view as a more or less tall and blobby cylinder, whereas the image of a human head would be seen as a group of Spaxels arranged in a more or less blobby sphere. The space-time configuration of Spaxels can adjust algorithmically to the content. Density can be increased or decreased depending on the field of view and is measured in Spaxels per cubic meter.
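Since density is measured in Spaxels per cubic meter, the trade-off between the two example shapes can be worked through numerically. The radii and heights below are hypothetical figures chosen for illustration, using the 50-unit swarm of the first implementation; the mean-spacing estimate (the inverse cube root of density) is a standard rough approximation that we add for intuition.

```python
import math


def sphere_volume(radius_m: float) -> float:
    return 4.0 / 3.0 * math.pi * radius_m ** 3


def cylinder_volume(radius_m: float, height_m: float) -> float:
    return math.pi * radius_m ** 2 * height_m


def density(n_spaxels: int, volume_m3: float) -> float:
    """Spaxel density in Spaxels per cubic meter."""
    return n_spaxels / volume_m3


def mean_spacing(density_per_m3: float) -> float:
    """Rough typical distance between neighbouring Spaxels, in meters."""
    return density_per_m3 ** (-1.0 / 3.0)


# Hypothetical shapes for a 50-unit swarm.
head = density(50, sphere_volume(5.0))          # about 0.095 Spaxels per m^3
body = density(50, cylinder_volume(3.0, 20.0))  # about 0.088 Spaxels per m^3
print(f"head: {head:.3f}/m^3, spacing {mean_spacing(head):.2f} m")
print(f"body: {body:.3f}/m^3, spacing {mean_spacing(body):.2f} m")
```

At these sizes, neighbouring Spaxels would be a little over two meters apart in either shape.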

5.2 Transformations

Rotation and translation (affine transformations) of the SSI around and past the audience's field of view are possible. The entire SSI can be transformed in the airspace surrounding the audience. The audience can be surrounded by, and immersed in, the SSI. Research already undertaken in virtual reality could be extended to larger and more physical contexts.
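Because an SSI is ultimately a set of Spaxel positions, rotating and translating the whole image reduces to applying the same rigid transformation to every position. A Python sketch, assuming rotation about a vertical axis only (yaw) and local metric coordinates with z pointing up; the function name and pivot parameter are our own, and the transformed positions would form the next Spaxel moment, with the transition handled as Spaxel flow.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def transform_ssi(points: List[Vec3], yaw_rad: float, offset: Vec3,
                  pivot: Vec3 = (0.0, 0.0, 0.0)) -> List[Vec3]:
    """Rotate every Spaxel position about a vertical axis through pivot, then translate."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    moved = []
    for x, y, z in points:
        rx, ry = x - pivot[0], y - pivot[1]  # horizontal offset from the pivot
        moved.append((pivot[0] + c * rx - s * ry + offset[0],
                      pivot[1] + s * rx + c * ry + offset[1],
                      z + offset[2]))        # height is unchanged by a yaw rotation
    return moved
```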

5.3 Field of View

Another research consideration is a focused field of view, memorably explored in cinema by Lucasfilm in the Star Wars saga through the assorted holographic projections that serve as centrepiece displays for communication and visualisation. The holographic projection of Princess Leia appears as a monochrome cinematic feed with a bluish tinge, projected into the air and showing only a limited field of view. The key research issue is the separation of the object of focus from the background. When an entire scene of 3D data is considered, a Spaxel may need to move from a position as part of a mountain hundreds of miles away to a closer position as part of an object in the foreground, a change that film accomplishes within a single frame. Lucasfilm's approach, it seems, was to imagine a zone of recording; objects outside this zone, such as backgrounds, are completely ignored. The same occurs in Kinect recognition software: information beyond a certain distance threshold is ignored.
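The zone-of-recording idea amounts to cropping the 3D data before it is mapped onto Spaxels. A minimal Python sketch, assuming points in sensor coordinates with the viewing direction along +z and hypothetical near and far limits in meters; only points inside the zone would remain candidates for Spaxel positions.

```python
from typing import List, Tuple

# x, y, z in meters (sensor frame, +z = viewing direction), then r, g, b
Point = Tuple[float, float, float, int, int, int]


def crop_to_zone(points: List[Point], near: float, far: float) -> List[Point]:
    """Keep only points whose depth lies inside the zone of recording [near, far]."""
    if near > far:
        raise ValueError("near limit must not exceed far limit")
    return [p for p in points if near <= p[2] <= far]
```

Discarding the background this way avoids the long foreground-to-background flights described above, at the cost of never displaying the distant scene.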

5.4 Point Clouds and Spaxels

3D scans from consumer devices such as the Kinect produce a data set containing X, Y, Z and RGB values relative to the device's lenses. These data sets are expressed as point clouds that, when viewed collectively and at a high enough density, form a recognisable image. Point clouds are a suitable way of capturing the three-dimensional world around us; for most purposes, however, they merely form the starting point for an algorithm that calculates a 3D mesh. But the question remains: once we have this information, how do we get it back into the real world? We can make a 3D print or a static sculptural object. Spaxels offer a method to express dynamic point clouds in real time, in real space.
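Mapping a captured point cloud onto a swarm requires reducing thousands of points to as many targets as there are Spaxels. One possible approach, sketched in Python below, is voxel-grid downsampling: a standard point-cloud technique that we swap in for illustration, not a method described in this paper. The cell size and the rule of keeping the most populated cells are assumptions; the output has the form of a Spaxel moment, one position and colour per Spaxel.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float, int, int, int]  # x, y, z, r, g, b
Target = Tuple[Tuple[float, float, float], Tuple[int, int, int]]


def point_cloud_to_moment(points: List[Point], n_spaxels: int,
                          cell_size: float) -> List[Target]:
    """Reduce a coloured point cloud to at most n_spaxels (position, colour) targets."""
    cells: Dict[tuple, List[Point]] = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / cell_size) for c in p[:3])
        cells[key].append(p)
    # Keep the most populated cells, i.e. the most strongly represented regions.
    ranked = sorted(cells.values(), key=len, reverse=True)[:n_spaxels]
    moment = []
    for members in ranked:
        k = len(members)
        mean = [sum(m[i] for m in members) / k for i in range(6)]  # average of each field
        moment.append(((mean[0], mean[1], mean[2]),
                       (round(mean[3]), round(mean[4]), round(mean[5]))))
    return moment
```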

5.5 State Switching vs Transformations

We have identified two methods for animating images: state switching and transformations (Figure 6). State switching involves a matrix of Spaxels that alternately switch on and off, whereas a transformation involves physically moving individual Spaxels. The transition time of a transformation compared with the speed of switching will become an artistic decision, though contributing factors such as Spaxel density will influence the decision computationally.

Figure 6: State switching (A) vs Transformations (B).
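The timing trade-off between the two methods can be made concrete. The Python sketch below assumes that state switching completes within one display update and that a transformation is limited by the Spaxel with the longest straight-line path flying at constant top speed; both are simplifications we introduce, and the example numbers merely reuse the 3 to 5 frames per second and 50 km/h figures from Section 4.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def switching_time(update_rate_hz: float) -> float:
    """State switching: the new image appears after one display update."""
    return 1.0 / update_rate_hz


def transformation_time(start: List[Vec3], end: List[Vec3], max_speed_mps: float) -> float:
    """Transformation: the Spaxel with the longest straight path sets the duration."""
    longest = max(math.dist(a, b) for a, b in zip(start, end))
    return longest / max_speed_mps


# Example: a 4 Hz update rate versus moving Spaxels up to 100 m at 50 km/h.
print(switching_time(4.0))                                       # 0.25 s
print(transformation_time([(0, 0, 0), (5, 0, 0)],
                          [(0, 0, 100), (5, 0, 50)], 50 / 3.6))  # about 7.2 s
```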

5.6 Shape

The shape of an individual Spaxel, including its scale and geometry, can be morphed through robotic transformation using a method similar to our research in robotic origami systems (Wang-Iverson et al., 2011). Such transformations could increase or decrease the local image density by changing the size and shape of a light diffuser.


5.7 Future Work

Our current research is focused on the first realization of a Spaxel display that fulfills the requirements described in this paper. In its first implementation, the display will work with 50 individual Spaxel units. The core research question is to derive requirements for aesthetic, music-based visualization for a large crowd audience. This will include precise and responsive control in the air, synchronization among the Spaxel units and overall aesthetic considerations. Future research will include scaling up the number of Spaxels, as well as developing concepts for making their shape dynamically transformable. We expect first results within the coming months.

In our current work, Spaxels are controlled by a central server unit. One direction for future work would be to decentralize control of the Spaxel swarm by using rule-based agents. Rule-based agent systems for visualizations have a long history in computer graphics. Reynolds (1987) presented a model that simulated the behavior of a flock of birds using a few relatively simple, local rules. This effective steering scheme consists of rules for group cohesion, alignment, and separation to avoid collisions with other swarm entities. Building on these results, a next step would be to calculate the Spaxel flow locally, distributed across the Spaxel units themselves. This could reduce the need for synchronization and therefore lead to a higher overall responsiveness of the display.
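A minimal Python sketch of one update step under Reynolds' three rules, as a possible starting point for computing the flow locally. The function name, neighbourhood radius, rule weights, separation distance and speed cap are hypothetical tuning values (the cap merely echoes the 50 km/h top speed, roughly 13.9 m/s). For simplicity the sketch updates the whole swarm in one place; an onboard version would run the per-Spaxel body on each unit using received neighbour positions.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _scale(a: Vec3, k: float) -> Vec3:
    return (a[0] * k, a[1] * k, a[2] * k)


def _mean(vs: List[Vec3]) -> Vec3:
    n = len(vs)
    return (sum(v[0] for v in vs) / n, sum(v[1] for v in vs) / n, sum(v[2] for v in vs) / n)


def boids_step(pos: List[Vec3], vel: List[Vec3], dt: float,
               radius: float = 10.0, sep_dist: float = 3.0,
               w_coh: float = 0.01, w_ali: float = 0.05, w_sep: float = 0.5,
               max_speed: float = 13.9) -> Tuple[List[Vec3], List[Vec3]]:
    """One synchronous update using cohesion, alignment and separation."""
    new_pos, new_vel = [], []
    for i, (p, v) in enumerate(zip(pos, vel)):
        # Each Spaxel only perceives neighbours within its local radius.
        nbrs = [j for j in range(len(pos)) if j != i and math.dist(p, pos[j]) < radius]
        steer = (0.0, 0.0, 0.0)
        if nbrs:
            centre = _mean([pos[j] for j in nbrs])
            avg_v = _mean([vel[j] for j in nbrs])
            steer = _add(steer, _scale(_sub(centre, p), w_coh))  # cohesion: towards local centre
            steer = _add(steer, _scale(_sub(avg_v, v), w_ali))   # alignment: match neighbours
            for j in nbrs:
                d = math.dist(p, pos[j])
                if 0.0 < d < sep_dist:  # separation: push away, stronger when closer
                    steer = _add(steer, _scale(_sub(p, pos[j]), w_sep / (d * d)))
        v_new = _add(v, steer)
        speed = math.hypot(*v_new)
        if speed > max_speed:  # respect the vehicle's top speed
            v_new = _scale(v_new, max_speed / speed)
        new_vel.append(v_new)
        new_pos.append(_add(p, _scale(v_new, dt)))
    return new_pos, new_vel
```

All neighbour information is read from the previous state, so the result does not depend on the order in which Spaxels are processed.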

6 CONCLUSIONS

In this paper, we introduced a new paradigm for spatial displays. Spaxels extend the concept of Voxels from a discretely ordered matrix to a continuous space. Furthermore, we introduced the work on the first embodiment of this concept, based on unmanned aerial vehicles. This research opens up a whole new field of visualizations in physical space. Two core concepts for these sculptural displays are the notion of the moment as the representation of a frame and the flow as the transition in between. Both need to be redefined based on the novel technological constraints. The kinetic and dimensional potential of Spaxels significantly extends the scale and mode of visual expression.

ACKNOWLEDGEMENTS

To the team's fearless quadrocopter test pilots and programmers, Michael Mayr, Andreas Jalsovec and Ben Olsen, and to Ascending Technologies for their outstanding support.

REFERENCES

AscTec Hummingbird AutoPilot | Ascending Technologies. (n.d.). Retrieved April 25, 2012, from http://www.asctec.de/asctec-hummingbird-autopilot-5/

Bürkle, A., Segor, F., & Kollmann, M. (2011). Towards Autonomous Micro UAV Swarms. J. Intell. Robotics Syst., 61(1-4), 339–353.

Ishii, H., Lakatos, D., Bonanni, L., & Labrune, J.-B. (2012). Radical atoms: beyond tangible bits, toward transformable materials. Interactions, 19(1), 38–51.

Kimura, H., Asano, A., Fujishiro, I., Nakatani, A., & Watanabe, H. (2011). True 3D display. In ACM SIGGRAPH 2011 Emerging Technologies, SIGGRAPH '11 (pp. 20:1–20:1). New York, NY, USA: ACM.

Michael, N., Mellinger, D., Lindsey, Q., & Kumar, V. (2010). The GRASP Multiple Micro-UAV Testbed. IEEE Robotics & Automation Magazine, 17(3), 56–65.

Ocean of light : home. (n.d.). Retrieved April 25, 2012, from http://oceanoflight.net/

Reynolds, C. W. (1987). Flocks, herds and schools: A distributed behavioral model. SIGGRAPH Comput. Graph., 21(4), 25–34. doi:10.1145/37402.37406

Sauter, J., Jaschko, S., & Ängeslevä, J. (2011). Art+Com: Media Spaces and Installations. Gestalten.

Schoellig, A., Augugliaro, F., & D'Andrea, R. (2010). A Platform for Dance Performances with Multiple Quadrocopters. Presented at the IEEE/RSJ International Conference on Intelligent Robots and Systems.

Wang-Iverson, P., Lang, R. J., & Yim, M. (Eds.). (2011). Origami 5: Fifth International Meeting of Origami Science, Mathematics, and Education. CRC Press.
