A Proposed Framework for Analysing Security Ceremonies

Marcelo Carlomagno Carlos¹∗, Jean Everson Martina²†, Geraint Price¹ and Ricardo Felipe Custódio²

¹ Royal Holloway University of London, Information Security Group, Egham, Surrey, TW20 0EX, U.K.

² Universidade Federal de Santa Catarina, Laboratório de Segurança em Computação, Florianópolis, SC, Brazil

Keywords:

Security Ceremonies, Security Protocols, Formal Methods, Cognitive Human Formalisation.

Abstract:

The concept of a ceremony as an extension of network and security protocols was introduced by Ellison. There are currently no methods or tools available to check the correctness of properties in such ceremonies. The potential applications of security ceremonies are vast, and they fill gaps left by strong assumptions in security protocols, such as the provision of cryptographic keys and correct human interaction. Moreover, no tools are available to check how knowledge is distributed among human peers, nor how they interact with other humans and computers in these scenarios. The key component of this position paper is the formalisation of human knowledge distribution in security ceremonies. By explicitly describing human expectations and interactions in security protocols, we can minimise the ill-described assumptions that we usually see failing. Taking such issues into account when designing or verifying protocols can help us better understand where protocols are more prone to break due to human constraints.

1 INTRODUCTION

Protocols have been analysed since Needham and Schroeder (Needham and Schroeder, 1978) first introduced the idea, and methods to prove protocols' claims have been researched ever since. There has been a great deal of research in this field, particularly in developing formal methods and logics to check and verify such claims. The principal initiatives include the belief logic of Burrows et al. (Burrows et al., 1989), Abadi's spi-calculus (Abadi and Gordon, 1997), the work of Ryan (Ryan and Schneider, 2000), Lowe (Lowe, 1996) and Meadows (Meadows, 1996) on state enumeration and model checking, and the inductive method of Paulson and Bella (Paulson, 1998; Bella, 2007). A number of tools have also been created to verify and check security protocols automatically. These techniques and tools have evolved to the point where we can now check and analyse complex and extensive protocols.

Meadows (Meadows, 2003) and Bella et al. (Bella et al., 2003), in their surveys of the area, give broad coverage of the maturity of protocol verification. They also point to the trends followed by the methods, pinpointing their strong and weak features, and propose research directions ranging from open-ended protocols and composability to new threat models, the last of which has changed very little since Dolev and Yao's proposal (Dolev and Yao, 1983). These problems seem well covered, and current research is, in general, aimed at optimising existing methods in speed and coverage. Extending protocol verification and description to include fine-grained assumptions and derivations, however, is an unexplored research path.

∗ Supported by CNPq/Brazil

† Supported by CNPq/FINEP Brazil

Nevertheless, recent research (Dhamija et al., 2006; Gajek, 2005; Jakobsson, 2007) shows that even the most widely deployed, tested and analysed protocols can have security problems. This usually happens when a user acts in an unexpected but plausible way. Since protocols operate at the computer level, we tend to verify them only for computer interaction. However, protocols are built to accomplish human tasks, so we should take human processes into account and design and verify protocols (in this case, ceremonies) against human interaction as well. Corroborating the idea that the verification of security protocols should include environmental assumptions, Bella et al. (Bella et al., 2003) state that “it is unwise to claim that a protocol is verified unless the environmental assumptions are clearly specified. Even then, we can be sure that somebody will publish an attack against this protocol”.

Ceremonies and their analysis were introduced by Ellison (Ellison, 2007). He states that “ceremonies extend the concept of protocols by also including human beings, user interfaces, key provisioning and all instances of the workflow”. This idea broadens the protocol point of view, extending what can be analysed and verified by protocol techniques. Ellison gives an overview and establishes the basic building blocks for ceremony description. Carlos and Price (Carlos and Price, 2012) further analysed the problems of human-protocol interaction, proposing a taxonomy of overlooked components in this interaction and elaborating a set of design recommendations for security ceremonies. Although Ellison suggests that the formal methods used for security protocol analysis could also be applied to ceremonies, no major work in the formal analysis of ceremonies exists today. This creates a weak spot and leads to empirical analysis, which, as the history of protocol analysis shows, can be difficult and error-prone.

Carlomagno Carlos M., Everson Martina J., Price G. and Felipe Custódio R.

A Proposed Framework for Analysing Security Ceremonies.

DOI: 10.5220/0004129704400445

In Proceedings of the International Conference on Security and Cryptography (SECRYPT-2012), pages 440-445

ISBN: 978-989-8565-24-2

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

An important advance in reasoning about ceremonies was introduced by Rukšėnas et al. (Ruksenas et al., 2008). They developed a cognitive model of human error, initially applied to interaction with interfaces. They show that security breaches commonly stem from mistakes made when modelling interfaces without taking into account the cognitive processes and expectations of the human beings behind the computer screen. They successfully verify problems in an authentication interface and a cash-point interface, showing that the usual lack of consideration of human peers' cognitive processes is one of the weakest factors in these systems. Their proposal comes with a powerful implementation using a model checker.

Our approach is different. We do not focus on a specific, hard-to-describe limitation of human beings, but on giving protocol and ceremony designers a better way to define human expectations and interactions. By making the assumptions more explicit and requiring a description of the ceremony's security, we enable designers to experiment with different ceremony techniques. By stating fine-grained assumptions and analysing the effect of their absence, we can gain insight into potential break points of security ceremonies. This is the conceptual extension we propose: verifying security ceremonies using established techniques based on formal methods.

To achieve the complex task of verifying security ceremonies, we first need to understand the major differences and features of ceremonies when compared to security protocols (Section 2). We briefly discuss a real-world example in Section 3. We then describe our proposal for the formalisation of human knowledge distribution in security ceremonies in Section 4. The future direction of our work and our next steps are presented in Section 5. Finally, we conclude with some thoughts on what is achievable and the limitations we are likely to encounter.

2 CEREMONY ANALYSIS VERSUS PROTOCOL ANALYSIS

Security ceremonies are a superset of security protocols. They can be seen as an extension of security protocols that includes additional node types, communication channels and operations which were previously considered out-of-band. These operations are normally assumptions we make when trying to check or analyse claims for protocols. Among other things, they include a safe key distribution scheme for symmetric-key protocols, the assurance that the computer executing the protocol is trustworthy, and the expectation that users will behave as intended. We usually make these assumptions, but we rarely describe them explicitly.

The inclusion of human interaction and, consequently, of human behaviour and cognitive processes is a distinguishing characteristic of ceremonies, since human peers are outside the scope of protocol verification. Humans are normally the most error-prone peer in any process, and their inclusion can enrich the detail and coverage of the analyses done so far.

Protocol descriptions tend to be easier to transcribe into mathematical notation because of their intrinsically computational character, which largely stems from their being targeted at computers. Ceremony modelling is much more subtle, since the possibilities involved in modelling human behaviour are immense. However, by adding new components to the specification, such as new node types (humans) and communication mediums (user interfaces, speech, etc.), we will be able to describe the assumptions related to these components more precisely. Consequently, a more detailed analysis of the ceremony's security properties will be possible.

3 AN EXAMPLE CEREMONY

SSL/TLS are a set of cryptographic protocols that provide privacy and data integrity for communication over networks. A practical application of these protocols can be seen when we connect to a website and a padlock appears in the browser window. The padlock indicates to the user that the connection between client and server is encrypted and that the server has been authenticated to the client (the client can also be authenticated to the server, but this is an optional feature).

These protocols are widely used and have been the object of many studies and analyses (Paulson, 1999; Mitchell et al., 1998). The results of those studies show that the SSL/TLS protocols are well designed and secure. Additionally, these protocols are designed to prevent man-in-the-middle (MITM) attacks. However, there are specific situations in which a MITM attack can be mounted by exploiting assumptions that are difficult to satisfy in practice. As an example, we chose the assumption that users are capable of making an accurate decision on whether or not to accept a certificate.

Current analyses of the protocol assume that there is a trusted Certification Authority (CA) and that all parties involved possess the CA's public key. Nevertheless, this assumption does not cover some real-world scenarios. When there is no valid certification path between the server's certificate and the client's trusted CAs, most implementations allow the user to accept and add new trusted certificates dynamically (in real time). In this case, the initial assumption is weakened, and the verification becomes less comprehensive.
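The fallback described above can be illustrated with a toy model. This is a minimal Python sketch under stated assumptions, not the authors' formalisation: certificates are plain strings, `issuers` is a hypothetical map from a certificate to its issuer (no signatures are checked), and `user_decision` stands in for the human. It shows that, once no certification path exists, the trust decision leaves the computer medium and travels through Displays and Inputs events, which a protocol-only analysis does not model.

```python
from typing import Callable, Dict, List, Set, Tuple

Event = Tuple[str, ...]  # hypothetical event encoding; traces are newest event first


def has_path(cert: str, issuers: Dict[str, str], trusted: Set[str], max_depth: int = 10) -> bool:
    """Follow issuer links until a trusted CA is reached (toy path validation, no signatures)."""
    current = cert
    for _ in range(max_depth):
        if current in trusted:
            return True
        if current not in issuers:
            return False
        current = issuers[current]
    return False


def accept_certificate(cert: str, issuers: Dict[str, str], trusted: Set[str],
                       user_decision: Callable[[str], str], trace: List[Event]) -> bool:
    """Accept a server certificate, falling back to the human when no path exists."""
    if has_path(cert, issuers, trusted):
        return True  # the case covered by the usual protocol-analysis assumption
    # No certification path: the decision moves to the human-computer medium.
    trace.insert(0, ("Displays", "Browser", "User", "warning:" + cert))
    answer = user_decision(cert)
    trace.insert(0, ("Inputs", "User", "Browser", answer))
    if answer == "accept":
        trusted.add(cert)  # dynamic (real-time) addition of a new trusted certificate
        return True
    return False
```

With a careless `user_decision`, an attacker's self-signed certificate ends up in the trust store, which is precisely the weakened assumption discussed above.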

The dynamic acceptance of new certificates (and consequently of new servers' public keys) is currently not analysed. This is because these messages are sent through another medium, the computer-human medium, which is outside the scope of protocol analysis. This leaves such implementations susceptible to failure: the weakened assumptions make the protocol's goals harder to achieve. Additionally, implementations such as those found in web browsers force users to authenticate digital objects (certificates), which, according to some research findings, is not feasible (Carlos and Price, 2012).

We have seen some attempts to include specific human interactions in protocol specifications. Gajek et al. (Gajek et al., ) developed a protocol that includes a human node in its specification. This simplified approach is the first attempt we are aware of to formally verify protocols that include human interactions.

By applying formal methods to ceremony analysis, we will be able to break broad assumptions, such as those used in the SSL/TLS analyses, into smaller and more plausible ones. Consequently, designers will gain better insight into protocols' weak spots, such as those discussed above. In the future, with further development of the ceremony analysis field, we will be able to model even more complex aspects, such as the composability of security protocols and, consequently, of security ceremonies.

4 A PROPOSED METHOD

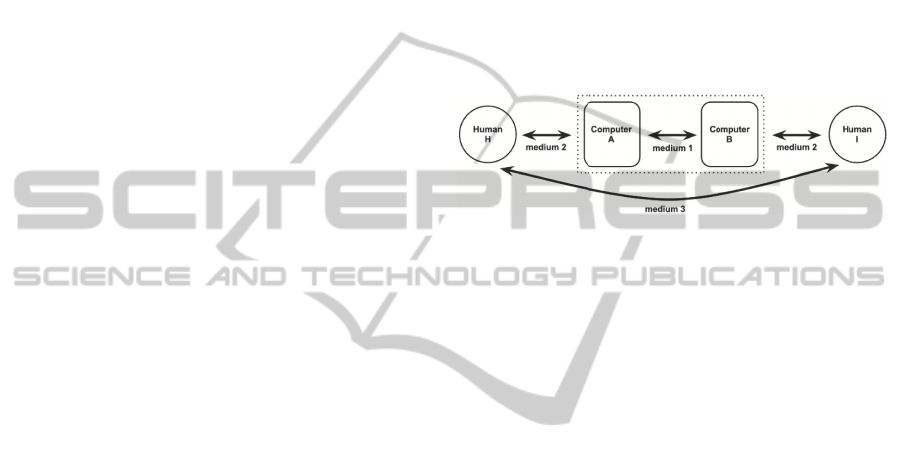

In traditional protocol specifications there is only one communication medium. In ceremonies we include humans in the specification, so we have to define two new communication mediums: one to represent human-computer interaction (user interfaces) and another to represent human-human interaction. Figure 1 gives an overview of the communication mediums involved. The area bounded by the dotted line represents the traditional protocol point of view, while the complete figure represents the ceremony point of view.

Figure 1: Ceremony communication mediums.

As Figure 1 shows, in a protocol specification the human-protocol and human-human interactions are assumed to happen out-of-band and become part of the design assumptions. When the protocol is implemented, these assumptions are replaced by dynamic user interactions. When the assumptions are too strong, it becomes difficult to implement a protocol that provides the expected security properties (Carlos and Price, 2012). By adding new components to the specification, such as users and different communication mediums, we can start to describe these assumptions within the ceremony and, consequently, perform a more detailed analysis of them and of their impact on the ceremony's security properties. The lack of a precise description of assumptions is a weak spot in protocol design.

We decided to implement our description and verification model for security ceremonies based on Paulson's Inductive Method (Paulson, 1998). Paulson introduced the inductive method of protocol verification, in which protocols are formalised in typed higher-order logic as an inductively defined set of all possible execution traces. An execution trace is a list of the events in one possible history of the network, possibly interleaving many protocol runs. Events are actions such as sending or receiving messages, as well as noting knowledge gathered outside the protocol. The attacker is specified following Dolev and Yao's propositions, and his knowledge is derived and extended by two operators called synth and analz. The operator analz represents all the individual terms that the attacker is able to learn using the capabilities defined by the threat model within the protocol model, while synth represents all the messages he can compose from the knowledge he possesses.
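To make the two operators concrete, here is a minimal Python sketch. It assumes a hypothetical tuple encoding of messages (`("Nonce", n)`, `("Key", k)`, `("MPair", X, Y)`, `("Crypt", k, X)`) and symmetric keys only, so a ciphertext opens with the same key that produced it. `analz` is computed as a closure over a finite set; because `synth` of a set is infinite, the sketch tests membership instead. It mirrors the shape of Paulson's rules but is not his Isabelle theory.

```python
from typing import FrozenSet, Set, Tuple

Msg = Tuple  # hypothetical encoding: ("Agent", a), ("Number", n), ("Nonce", n), ("Key", k), ("MPair", X, Y), ("Crypt", k, X)


def analz(h: Set[Msg]) -> FrozenSet[Msg]:
    """Closure of h under splitting pairs and decrypting with keys already in the set."""
    known = set(h)
    changed = True
    while changed:
        changed = False
        for m in list(known):
            parts = []
            if m[0] == "MPair":
                parts = [m[1], m[2]]
            elif m[0] == "Crypt" and ("Key", m[1]) in known:  # symmetric-key assumption
                parts = [m[2]]
            for p in parts:
                if p not in known:
                    known.add(p)
                    changed = True
    return frozenset(known)


def in_synth(x: Msg, h: Set[Msg]) -> bool:
    """Membership test for synth h: messages the attacker can compose from h."""
    if x in h:
        return True
    if x[0] in ("Agent", "Number"):  # public components are always available
        return True
    if x[0] == "MPair":
        return in_synth(x[1], h) and in_synth(x[2], h)
    if x[0] == "Crypt":
        return ("Key", x[1]) in h and in_synth(x[2], h)  # the key must be known, not composed
    return False
```

As in the inductive method, the messages the attacker can actually send are those in synth(analz H): first break down what was observed, then build new messages from the parts.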


Protocols are defined as inductive sets constructed over abstract definitions of the network (computer-to-computer media) and cryptographic primitives. Properties of a protocol are stated as lemmas over the set of all admissible traces and are typically proven by induction. Because the framework is built on induction, the model and its verifications cover a potentially unbounded number of traces and protocol runs, which gives us broad coverage and flexibility. This approach has already been used to prove a series of classical protocols (Paulson, 1998) as well as some well-known industry-grade protocols, such as the SET online payment protocol, Kerberos and SSL/TLS (Bella et al., 2002; Bella, 2007).
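The inductive style of definition can be illustrated with a small sketch. This Python code is a hypothetical toy, not an Isabelle theory: it builds traces newest-event-first (mirroring `ev # evs`) from a Nil case and two rules, a protocol rule in which A sends a nonce to B and a reception rule allowing any sent message to be received. Unlike an inductive proof, which covers traces of every length, it only enumerates traces up to a fixed depth and checks a property on each, so it is closer to bounded model checking than to the inductive method itself.

```python
from typing import Iterator, Set, Tuple

Event = Tuple  # ("Says", sender, receiver, msg) or ("Gets", receiver, msg)
Trace = Tuple[Event, ...]  # newest event first, as in ev # evs


def rule_send(evs: Trace) -> Iterator[Trace]:
    """Hypothetical protocol rule: A sends B a nonce not used before in this trace."""
    yield (("Says", "A", "B", ("Nonce", len(evs))),) + evs


def rule_reception(evs: Trace) -> Iterator[Trace]:
    """Reception rule: any message said to an agent may later be received by it."""
    for ev in evs:
        if ev[0] == "Says":
            yield (("Gets", ev[2], ev[3]),) + evs


RULES = [rule_send, rule_reception]


def traces_up_to(depth: int) -> Set[Trace]:
    """Bounded approximation of the inductive set: Nil, then rules applied up to depth times."""
    level: Set[Trace] = {()}
    all_traces = set(level)
    for _ in range(depth):
        level = {new for evs in level for rule in RULES for new in rule(evs)}
        all_traces |= level
    return all_traces


def gets_preceded_by_says(evs: Trace) -> bool:
    """Property: every Gets B X has an older Says _ B X in the same trace."""
    for i, ev in enumerate(evs):
        if ev[0] == "Gets":
            older = evs[i + 1:]
            if not any(o[0] == "Says" and o[2] == ev[1] and o[3] == ev[2] for o in older):
                return False
    return True
```

In the inductive method, the same property would be a lemma proven once by induction over the rules, rather than checked trace by trace.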

Based on the current model for protocols set by Paulson (Paulson, 1998) and extended by Bella (Bella, 2007), we include a new agent type called Human. We also add a set of operators, messages and events to set up our human peer and enable it to work under human capabilities and constraints. This new agent type is capable of storing knowledge and sending messages over the mediums it can operate. It is also capable of using knowledge conversion functions to operate its devices. Humans are related to the devices they operate or own, and some of the physical constraints that exist in the real world are also present in this relation.

Our specification of the Human peer is similar to the one for a computer Agent. It is created as shown in Definition 1 to enable the type restrictions provided by the inductive method as implemented in Isabelle/HOL.

Definition 1. Human datatype definition

datatype human = Friend nat

To represent the different mediums described above, we extended the datatype event as shown in Definition 2. On the protocol side, the events are kept unchanged: Says, Gets and Notes. For the new human-computer medium we have the events Displays, which takes an agent, a human and a message, and Inputs, which takes a human, an agent and a message. For the human-human medium we have the events Tells, Hears and Keeps, which are similar to the protocol events but take a human instead of an agent as a parameter. This construction allows us to control the flow of information between devices and humans, as well as between human peers.

Definition 2. Event datatype definition

datatype
  event = Says     agent agent msg
        | Gets     agent msg
        | Notes    agent msg
        | Displays agent human msg
        | Inputs   human agent msg
        | Tells    human human msg
        | Hears    human msg
        | Keeps    human msg
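For readers less familiar with Isabelle/HOL, the datatypes in Definitions 1 and 2 can be mirrored in Python as a sketch. The class names follow the Isabelle constructors; representing agents as plain strings and messages as arbitrary objects are simplifying assumptions. The `medium` helper is hypothetical, not part of the authors' theory; it only records the grouping described above: Says, Gets and Notes on the protocol side, Displays and Inputs on the human-computer medium, and Tells, Hears and Keeps on the human-human medium.

```python
from dataclasses import dataclass
from typing import Any, Union

Agent = str  # simplification: computer agents identified by name, e.g. "Spy"


@dataclass(frozen=True)
class Friend:
    """Mirrors `datatype human = Friend nat`."""
    n: int


@dataclass(frozen=True)
class Says:
    sender: Agent
    receiver: Agent
    msg: Any


@dataclass(frozen=True)
class Gets:
    receiver: Agent
    msg: Any


@dataclass(frozen=True)
class Notes:
    agent: Agent
    msg: Any


@dataclass(frozen=True)
class Displays:
    agent: Agent
    human: Friend
    msg: Any


@dataclass(frozen=True)
class Inputs:
    human: Friend
    agent: Agent
    msg: Any


@dataclass(frozen=True)
class Tells:
    speaker: Friend
    listener: Friend
    msg: Any


@dataclass(frozen=True)
class Hears:
    human: Friend
    msg: Any


@dataclass(frozen=True)
class Keeps:
    human: Friend
    msg: Any


Event = Union[Says, Gets, Notes, Displays, Inputs, Tells, Hears, Keeps]


def medium(ev: Event) -> str:
    """Classify an event by the medium it belongs to."""
    if isinstance(ev, (Says, Gets, Notes)):
        return "protocol"
    if isinstance(ev, (Displays, Inputs)):
        return "human-computer"
    if isinstance(ev, (Tells, Hears, Keeps)):
        return "human-human"
    raise TypeError(f"not an event: {ev!r}")
```

Frozen dataclasses are used so that events are hashable and compare by value, like constructor terms in HOL.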

After modelling the human agent and its messages, we adapted the two functions that deal with knowledge distribution and control the freshness of components appearing in different protocol runs. The function that deals with knowledge distribution is called knows, and its extended definition is shown in Definition 3. One peculiarity of our implementation so far is that knows deals only with the knowledge flow on the computer medium and with the cross-medium flow in the agent direction. We still lack a function to distribute knowledge over the human medium and across mediums in the human direction. The function for freshness is called used and is very similar to knows in construction; it is not shown here due to space constraints.

Definition 3. Function knows definition

primrec knows :: "agent => event list => msg set"
where
  knows_Nil: "knows A [] = initState A"
| knows_Cons:
    "knows A (ev # evs) =
       (if A = Spy then
          (case ev of
             Says A' B X => insert X (knows Spy evs)
           | Displays A' H X =>
               if A' ∈ bad then insert X (knows Spy evs) else knows Spy evs
           | Inputs H A' X =>
               if A' ∈ bad then insert X (knows Spy evs) else knows Spy evs
           | Gets A' X => knows Spy evs
           | Tells C D X => knows Spy evs
           | Hears C X => knows Spy evs
           | Keeps C X => knows Spy evs
           | Notes A' X =>
               if A' ∈ bad then insert X (knows Spy evs) else knows Spy evs)
        else
          (case ev of
             Says A' B X =>
               if A' = A then insert X (knows A evs) else knows A evs
           | Displays A' H X =>
               if A' = A then insert X (knows A evs) else knows A evs
           | Inputs H A' X =>
               if A' = A then insert X (knows A evs) else knows A evs
           | Tells C D X => knows A evs
           | Hears C X => knows A evs
           | Keeps C X => knows A evs
           | Gets A' X =>
               if A' = A then insert X (knows A evs) else knows A evs
           | Notes A' X =>
               if A' = A then insert X (knows A evs) else knows A evs))"
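The case analysis in Definition 3 can be read as an ordinary fold over the trace. The following Python sketch reproduces it branch by branch under some assumptions: events are tuples in the argument order of Definition 2, `init_state` is a hypothetical dictionary standing in for initState, and `bad` is the set of compromised agents. Because insertion into a set is order-independent, folding from oldest to newest event gives the same result as the recursion on `ev # evs`.

```python
from typing import Dict, Iterable, Set, Tuple

Event = Tuple  # ("Says", A, B, X), ("Gets", A, X), ("Notes", A, X), ("Displays", A, H, X),
# ("Inputs", H, A, X), ("Tells", C, D, X), ("Hears", C, X), ("Keeps", C, X)


def knows(a: str, evs: Iterable[Event], init_state: Dict[str, Set], bad: Set[str]) -> Set:
    """Knowledge of computer agent `a` after trace `evs` (newest event first)."""
    known = set(init_state.get(a, set()))
    for ev in reversed(list(evs)):  # oldest event first; order does not affect the result
        tag = ev[0]
        if a == "Spy":
            if tag == "Says":
                known.add(ev[3])  # the Spy sees all network traffic
            elif tag in ("Displays", "Notes") and ev[1] in bad:
                known.add(ev[-1])  # screens and notes of compromised agents
            elif tag == "Inputs" and ev[2] in bad:
                known.add(ev[3])  # input typed into a compromised agent
            # Gets, Tells, Hears and Keeps add nothing for the Spy
        else:
            if tag in ("Says", "Gets", "Notes", "Displays") and ev[1] == a:
                known.add(ev[-1])  # the agent knows what it sends, receives, notes or displays
            elif tag == "Inputs" and ev[2] == a:
                known.add(ev[3])  # the agent knows what a human typed into it
            # Tells, Hears and Keeps are human-human events: no agent learns from them
    return known
```

For instance, a Tells event between two humans leaves every agent's knowledge unchanged, including the Spy's, which reflects the missing human-medium distribution function mentioned above.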


We also define a new set of functions to represent the information that flows through, and is processed in, the two new communication mediums. For the human-computer medium, we created three functions called Reads, Recognises and Writes. Reads represents a human reading a message (e.g., text displayed on the screen), which adds information to that human's knowledge. Recognises accounts for a human recognising something that he or she already knows and that is being presented to him or her; in other words, something the human is reading that matches something previously known. Finally, Writes, shown in Definition 4, is analogous to Paulson's synth: it enables humans to combine their knowledge in a monotonic way to create new possible inputs. For now we do not consider inherent human constraints.

Definition 4. Writes function definition

inductive_set writes :: "msg set => msg set"
  for H :: "msg set" where
    Inj [intro]: "X ∈ H ==> X ∈ writes H"
  | Agent [intro]: "Agent agt ∈ writes H"
  | Human [intro]: "Human hum ∈ writes H"
  | Secret [intro]: "Secret n ∈ writes H"
  | Number [intro]: "Number n ∈ writes H"
  | MPair [intro]: "[|X ∈ writes H; Y ∈ writes H|] ==> {|X, Y|} ∈ writes H"
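Because `writes H` is infinite, a sketch can only test membership. The Python function below follows the six rules of Definition 4 one-to-one, using a hypothetical tuple encoding of messages for convenience. Note what it omits relative to Paulson's synth: there is no rule for Crypt, so in this model a human can pair up what he or she knows but cannot produce ciphertext by hand.

```python
from typing import Set, Tuple

Msg = Tuple  # hypothetical encoding: ("Agent", a), ("Human", h), ("Secret", n), ("Number", n), ("MPair", X, Y), ...


def in_writes(x: Msg, h: Set[Msg]) -> bool:
    """Membership test for writes h, one branch per rule of Definition 4."""
    if x in h:  # Inj
        return True
    if x[0] in ("Agent", "Human", "Secret", "Number"):  # Agent, Human, Secret, Number rules
        return True
    if x[0] == "MPair":  # MPair: both components must be writable
        return in_writes(x[1], h) and in_writes(x[2], h)
    return False  # anything else (e.g. Nonce, Key, Crypt) only via Inj
```

As in Definition 4, Secret terms are writable unconditionally, whereas a nonce or key must already be in the human's knowledge.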

In addition to the functions described above, two new events, Displays and Inputs, are available. Displays represents a computer displaying a message to a human (e.g., via a user interface). Inputs describes a human sending a command or data to the computer (e.g., typing text into a text box). Together they give us an abstract representation of the complex human-computer medium. We believe that more details describing inherent factors of human-computer interaction can be plugged into this cross-medium space.
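Reads and Recognises are described here only informally, so the sketch below is one possible reading, with hypothetical names and events encoded as tuples in the argument order of Definition 2. Walking the trace from oldest to newest, every message displayed to a given human is read and added to that human's knowledge, and it counts as recognised when it was already known before being displayed.

```python
from typing import Iterable, List, Set, Tuple

Event = Tuple  # ("Displays", agent, human, msg); other events are ignored here


def reads_and_recognises(human: str, evs: Iterable[Event], prior: Set) -> Tuple[Set, List, List]:
    """Return (knowledge after reading, messages read, messages recognised) for `human`."""
    knowledge = set(prior)
    read: List = []
    recognised: List = []
    for ev in reversed(list(evs)):  # traces are newest first, so reverse to go forwards in time
        if ev[0] == "Displays" and ev[2] == human:
            msg = ev[3]
            if msg in knowledge:
                recognised.append(msg)  # Recognises: presented and already known
            read.append(msg)  # Reads: the displayed message is read...
            knowledge.add(msg)  # ...and joins the human's knowledge
    return knowledge, read, recognised
```

The recognition check happens before the message is added to the human's knowledge; otherwise every displayed message would trivially be recognised.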

For the human-human medium, we created three functions called Listens, Understands and Voices. Listens represents a human listening to a message sent by another human. Understands accounts for a human understanding something that has been said and that matches previously known information. This information may have been gathered beforehand, which creates a paradox within the ceremony concept, or acquired during a previous or the current run of the ceremony. Finally, Voices is the equivalent of a human saying something to another human, passing on his or her knowledge via the human-human medium. These constructions enable us to explore a different threat model for the human media, as we briefly discuss in Section 5.

Finally, we define three new events for human-human interaction, as mentioned above: Tells, Hears and Keeps. A human sending a message to another human is represented by the event Tells. Hears is the complementary event, describing a human receiving a message from another. Keeps is equivalent to the Notes event that already exists for protocols: just as Notes lets an agent obtain something out-of-band of the protocol, Keeps lets a human obtain something out-of-band of the ceremony. This construction is present because we believe there is always a limit to what can be described.

With the infrastructure described above implemented, we already have a partially working framework. Alongside the definitions, we have proven the supporting lemmas that reasoning about them in Isabelle/HOL requires. We have already proven more than 80 technical lemmas regarding monotonicity, idempotence, transitivity and set operations for the inductive sets and recursive definitions we specified. We have also proven lemmas relating the functions we introduced to those already existing in the method.

5 FUTURE WORK

Protocols are, by design, implemented to meet human demands. The method we propose addresses real-world concerns at the design level. It is impossible to represent all possible human characteristics in a limited set of operations, but by including the human node in the specification, we can thoroughly study interactions and factors that were previously relegated to the set of assumptions of each protocol.

Our next step is to verify simple ceremonies using our model and then to further develop and refine it. In addition, we will apply the proposed model to check whether a specified ceremony overlooks human-protocol interaction components, such as those described in (Carlos and Price, 2012). We also plan to use the model to verify whether some of the design recommendations proposed by Carlos and Price (Carlos and Price, 2012) (e.g., the use of forcing functions to prevent inappropriate user interaction) are correctly implemented.

The contextual coverage that ceremonies bring to security protocols is another property worth verifying, as it can give us better insight into the problem of protocol composability. Composability problems normally arise from clashes among the environmental assumptions embedded in protocols. Without being able to model the environment, we cannot predict what will happen when two protocols, each designed around its own environment, are put to work together.

Another point worth mentioning in the ceremony verification area is the lack of a tailored threat model. We need a model that encompasses active threats, as in protocols, as well as passive threats such as unreliable behaviour and memory. The work of Roscoe et al. (Roscoe et al., 2003) on human-centric security can serve as a basis. However, our initial experiments already show that the threat model described by Dolev and Yao is not realistic for our human-to-human interaction media: the presence of an omnipotent and omnipresent being in human interactions is highly debatable.

6 FINAL CONSIDERATIONS

The idea of modelling ceremonies and applying formal methods to them seems promising. The knowledge acquired by the protocol analysis community can be used to boost the ceremony analysis area. Such analysis can help us detect scenarios in which protocols are more prone to failure. By better understanding these issues, we will be able to design more user-centric protocols that are less likely to fail.

We do not want to change the way we analyse protocols today, since the available formal methods are mature and powerful for their intended purposes. Instead, we want to approach the problem from an extended point of view, which is why we chose to build on a mature and powerful method such as Paulson's inductive method. Our objective with this model is to extend the coverage of verification from security protocols to ceremonies. Human behaviour is indeed unpredictable, but by including humans in formal models we can at least begin to detect some previously undetectable flaws due to human interaction.

REFERENCES

Abadi, M. and Gordon, A. D. (1997). Reasoning about cryptographic protocols in the spi calculus. In Proc. of the 8th Int. Conf. on Concurrency Theory, pages 59–73. Springer-Verlag.

Bella, G. (2007). Formal Correctness of Security Protocols, volume XX of Information Security and Cryptography. Springer Verlag.

Bella, G., Longo, C., and Paulson, L. C. (2003). Is the verification problem for cryptographic protocols solved? In Security Protocols Works., volume 3364 of LNCS, pages 183–189. Springer.

Bella, G., Massacci, F., and Paulson, L. C. (2002). The verification of an industrial payment protocol: the SET purchase phase. In Proc. of the 9th ACM CCS, pages 12–20, Washington, DC, USA. ACM Press.

Burrows, M., Abadi, M., and Needham, R. (1989). A logic of authentication. In Proc. 12th ACM Symposium on Operating Systems Principles, Litchfield Park, AZ.

Carlos, M. C. and Price, G. (2012). Understanding the weaknesses of human-protocol interaction. In Works. on Usable Security at 16th Int. Conference on Financial Cryptography and Data Security.

Dhamija, R., Tygar, J. D., and Hearst, M. (2006). Why phishing works. In Proc. of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’06, pages 581–590, New York, NY, USA. ACM.

Dolev, D. and Yao, A. (1983). On the security of public key protocols. IEEE Transactions on Information Theory, 29(2):198–208.

Ellison, C. (2007). Ceremony design and analysis. Cryptology ePrint Archive, Report 2007/399. http://eprint.iacr.org/.

Gajek, S. (2005). Effective protection against phishing and web spoofing. In Proc. of the 9th IFIP Conf. on Comm. and Multimedia Sec., LNCS 3677, pages 32–41.

Gajek, S., Manulis, M., Sadeghi, A.-R., and Schwenk, J. Provably secure browser-based user-aware mutual authentication over TLS. In Proc. of the 2008 ACM Symposium on Information, Computer and Communications Security.

Jakobsson, M. (2007). The human factor in phishing. In Privacy & Security of Consumer Information ’07.

Lowe, G. (1996). Breaking and fixing the Needham-Schroeder public-key protocol using FDR. In Proc. of the 2nd Int. Works. on Tools and Algorithms for Construction and Analysis of Systems, pages 147–166.

Meadows, C. (1996). Language generation and verification in the NRL protocol analyzer. In Proc. of the 9th IEEE CSF, page 48, Washington, DC. IEEE Comp. Soc.

Meadows, C. (2003). Formal methods for cryptographic protocol analysis: Emerging issues and trends. IEEE Journal on Selected Areas in Communications, 21.

Mitchell, J. C., Shmatikov, V., and Stern, U. (1998). Finite-state analysis of SSL 3.0. In Proc. of the 7th Conference on USENIX Security Symposium, volume 7, page 16, San Antonio, Texas. USENIX.

Needham, R. M. and Schroeder, M. D. (1978). Using encryption for authentication in large networks of computers. Commun. ACM, 21(12):993–999.

Paulson, L. C. (1998). The inductive approach to verifying cryptographic protocols. Journal of Computer Security, 6(1-2):85–128.

Paulson, L. C. (1999). Inductive analysis of the Internet protocol TLS. ACM Transactions on Information and System Security, 2(3):332–351.

Roscoe, A. W., Goldsmith, M., Creese, S. J., and Zakiuddin, I. (2003). The Attacker in Ubiquitous Computing Environments: Formalising the Threat Model. In Proc. of 1st Int. Works. on Form. Asp. in Security and Trust.

Ruksenas, R., Curzon, P., and Blandford, A. (2008). Modelling and analysing cognitive causes of security breaches. Innovations in Systems and Software Engineering, 4(2):143–160.

Ryan, P. and Schneider, S. (2000). The modelling and analysis of security protocols: the CSP approach. Addison-Wesley Professional.
