Comparison the Performance of Hybrid HMM/MLP and RBF/LVQ ANN Models

Application for Speech and Medical Pattern Classification

Lilia Lazli (1), Abdennasser Chebira (2), Mounir Boukadoum (3) and Kurosh Madani (2)

(1) Laboratory of Research in Computer Science (LRI/GRIA), Badji Mokhtar University, B.P.12 Sidi Amar 23000 Annaba, Algeria

(2) Images, Signals and Intelligent Systems Laboratory (LISSI / EA 3956), PARIS XII University, Senart-Fontainebleau Institute of Technology, Bat. A, Av. Pierre Point, F-77127 Lieusaint, France

(3) Université du Québec à Montréal (UQAM), Montreal, Québec, Canada

Keywords: Speech Recognition, Medical Diagnosis, Hybrid RBF/LVQ Model, Hybrid HMM/MLP Model.

Abstract: In recent years, hybrid models have become increasingly popular. We use a multi-network RBF/LVQ structure and a hybrid HMM/MLP model for speech recognition and medical diagnosis.

1 INTRODUCTION

The main difficulty in the classification of speech and biomedical signals is related, on the one hand, to the large variety of such signals for the same diagnosis result (for example, the variation range of the corresponding medical signals can be very large) and, on the other hand, to the close resemblance between such signals for two different classification results. We propose two hybrid approaches for the classification of electrical signals.

First, we have developed a serial multi-neural network approach that involves both Learning Vector Quantization (LVQ) and Radial Basis Function (RBF) Artificial Neural Networks (ANN). It is accepted that techniques based on a single neural network show a number of attractive features for solving problems for which classical solutions have been limited; it is also accepted that a flat neural structure does not represent the most appropriate way to approach "intelligent behavior".

Second, we propose a hybrid HMM/MLP model that combines the discriminating capacities and noise resistance of the MLP (Multi-Layer Perceptron) with the flexibility of HMMs (Hidden Markov Models) in order to obtain better performance than traditional HMMs.

2 DATABASES CONSTRUCTION

Three speech databases (DB) and one medical DB have been used in this work:

2.1 Speech Databases

1) The first one, referred to as DB1, is an isolated digits task with 13 words in the vocabulary (1, 2, 3, 4, 5, 6, 7, 8, 9, zero, oh, yes and no) and a total of 3900 utterances.

2) The second, DB2, contains about 50 speakers saying their last and first names and their cities of birth and residence.

3) The third, DB3, contains 13 control words (e.g. view/new, save/save as/save all); the training set consists of 3900 sounds spoken by 30 speakers.

2.2 Biomedical Database

The objective is the classification of an electrical signal coming from a medical test; the signals used are called Auditory Evoked Potentials (PEA) (Dujardin, 2006; Lazli, 2007).

We chose 3 categories of patients (3 classes) according to the type of their disorder. We selected 213 signals, each containing 128 parameters: 92 belong to the Normal (N) class, 83 to the Endocochlear (E) class and 38 to the Retrocochlear (R) class. The training base contains 24 signals, of which 11 correspond to the R class, 6 to the E class and 7 to the N class.

Lazli L., Boukadoum M., Chebira A. and Madani K.

Comparison the Performance of Hybrid HMM/MLP and RBF/LVQ ANN Models - Application for Speech and Medical Pattern Classification.

DOI: 10.5220/0004133706590662

In Proceedings of the 9th International Conference on Informatics in Control, Automation and Robotics (ANNIIP-2012), pages 659-662

ISBN: 978-989-8565-21-1

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

3 MULTI-NEURAL NETWORK BASED APPROACH

The approach we propose to solve the problem is based on the Multi-Neural Network (MNN) concept. An MNN can be seen as a neural structure including a set of similar neural networks (homogeneous MNN architecture) or a set of different neural networks (heterogeneous MNN architecture).

A serial homogeneous MNN is equivalent to a single neural network with a greater number of layers and different neuron activation functions, so a homogeneous MNN with a serial organization is of no real interest here. In the parallel homogeneous MNN configuration, each neural network operates as an "expert". The interest of a parallel homogeneous MNN therefore appears when a decision stage, which processes the results produced by the set of "experts", is associated with it. The MNN structure then becomes a serial/parallel MNN, which needs an optimization procedure to determine the number of neural networks to be used (Dujardin, 2006).

We propose an intermediary solution: a two

stage serial heterogeneous MNN structure

combining a RBF based classifier (operating as the

first processing stage) with a LVQ based decision-

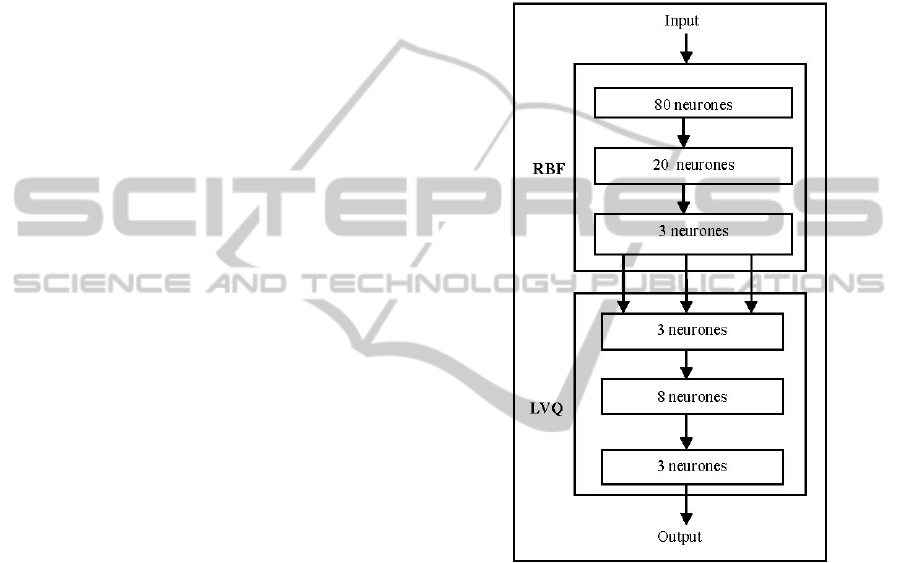

classification stage see figure 1.

The RBF model we use is a weighted RBF model, but otherwise a standard one, and so it performs the feature space mapping that associates a set of "categories" (in our case a category corresponds to a possible pathological class, for example for the medical DB) with a set of "areas" of the feature space. The LVQ neural model belongs to the class of competitive neural network structures. It includes one hidden layer, called the competitive layer. Even though the LVQ model has essentially been used for classification tasks, the competitive nature of its learning strategy (based on a winner-takes-all strategy) makes it usable as a decision-classification operator. On the other hand, the weighted nature of the transfer functions between the input layer and the hidden one, and between the hidden layer and the output one, in the RBF model allows non-linear approximation capability, making such a neural network a function "approximation operator".

Taking the above analysis into account, the proposed serial MNN structure can be seen as associating a neural decision operator with a neural classifier. Moreover, the proposed structure can also be seen as a global neural structure with two hidden layers. The association of the two neural models thus improves the global order of the non-linear approximation capability of the global neural operator, compared with each single neural structure constituting the MNN system. This technique makes it possible to fill in the gap induced by the RBF ANN and thus to refine the classification.

Figure 1: Serial Multi-Neural Network based structure.
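The two-stage serial structure described in this section can be illustrated with a minimal sketch. It assumes Gaussian basis functions, a linear output layer for the RBF stage and a plain LVQ1 update rule for the decision stage; none of these details are given in the paper, and all function names and toy values are hypothetical.

```python
import numpy as np

def rbf_layer(x, centers, width):
    """Gaussian RBF activations of one input vector against all centers (assumed kernel)."""
    d2 = np.sum((centers - x) ** 2, axis=1)
    return np.exp(-d2 / (2.0 * width ** 2))

def rbf_stage(x, centers, width, out_weights):
    """First stage: weighted sum of RBF activations, one score per category."""
    return out_weights @ rbf_layer(x, centers, width)

def lvq_decide(scores, prototypes, proto_labels):
    """Second stage: winner-takes-all over LVQ prototypes in the RBF score space."""
    winner = np.argmin(np.sum((prototypes - scores) ** 2, axis=1))
    return proto_labels[winner]

def lvq1_update(scores, label, prototypes, proto_labels, lr=0.05):
    """One LVQ1 step: pull the winner toward the sample if labels agree, else push it away."""
    winner = np.argmin(np.sum((prototypes - scores) ** 2, axis=1))
    sign = 1.0 if proto_labels[winner] == label else -1.0
    prototypes[winner] += sign * lr * (scores - prototypes[winner])  # in-place update
    return winner

# Toy usage: 3 categories (N, E, R), 2-D inputs, one RBF center per category.
centers = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 0.0]])
prototypes = np.eye(3)
labels = np.array(["N", "E", "R"])
scores = rbf_stage(np.array([0.9, 1.1]), centers, 0.5, np.eye(3))
decision = lvq_decide(scores, prototypes, labels)
```

In this reading, the LVQ stage never sees the raw signal: it only refines the decision taken on the category scores produced by the RBF stage.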

4 HYBRID HMM-ANN MODELS

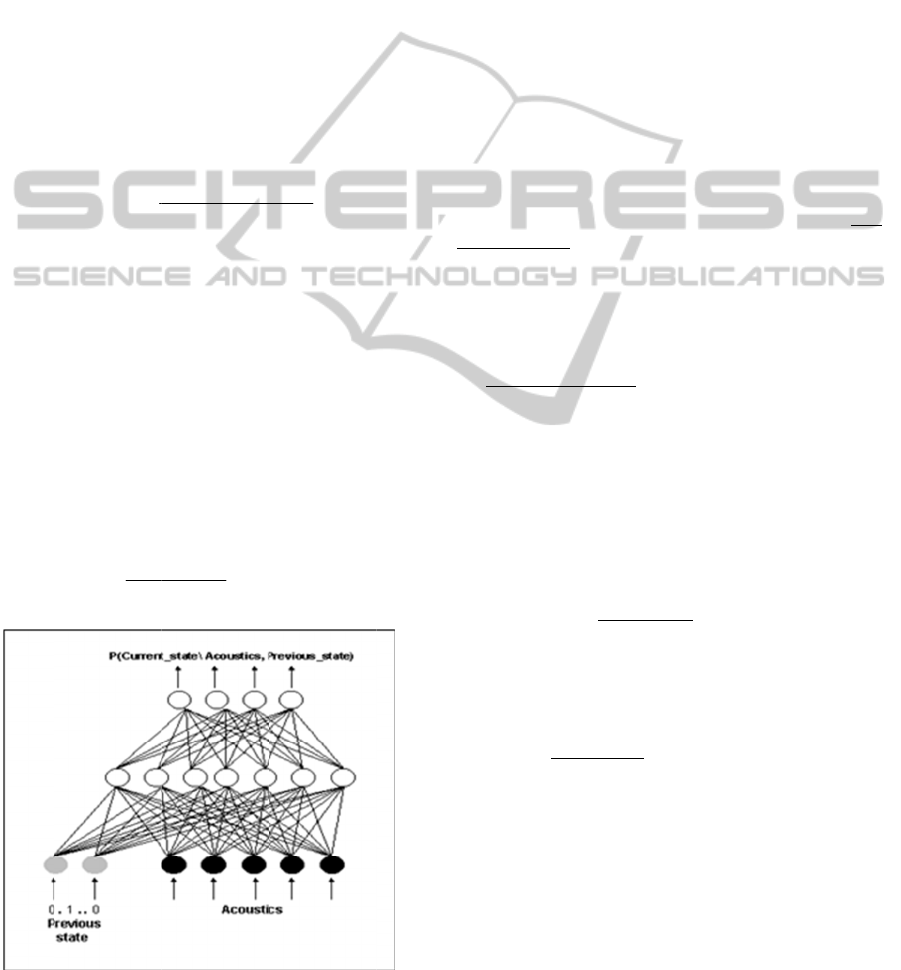

We describe the theoretical formulation of our hybrid HMM/ANN model, an approach for the training and estimation of posterior probabilities using a recursive algorithm that is reminiscent of the EM (Expectation Maximization) algorithm for the estimation of data likelihoods. The method is developed in the context of a statistical model for transition-based electrical signal recognition using an ANN to generate probabilities for the HMM. In the new approach, we use local conditional posterior probabilities of transitions to estimate global posterior probabilities of instance sequences given acoustic data.


4.1 Estimating HMM Likelihoods with ANN

ANN can be used to classify pattern classes (Lazli and Sellami, 2003). For statistical recognition systems, the role of the local estimator is to approximate probabilities or Probability Density Functions (PDF). Practically, given the basic HMM equations, we would like to estimate something like p(x_n|q_k), the value of the PDF of the observed data vector given the hypothesized HMM state. The ANN can be trained to produce the posterior probability p(q_k|x_n) of the HMM state given the acoustic data (Figure 2). This can be converted to emission PDF values using Bayes' rule. Since the network outputs approximate Bayesian probabilities, g_k(x_n, Θ) is an estimate of:

$$p(q_k \mid x_n) = \frac{p(x_n \mid q_k)\, p(q_k)}{p(x_n)} \qquad (1)$$

which implicitly contains the a priori class probability p(q_k). It is thus possible to vary the class priors during classification without retraining, since these probabilities occur only as multiplicative terms in producing the network outputs. As a result, class probabilities can be adjusted during use of a classifier to compensate for training data with class probabilities that are not representative of actual use or test conditions.

Thus, scaled likelihoods p(x_n|q_k) for use as emission probabilities in standard HMM can be obtained by dividing the network outputs g_k(x_n) by the class priors of the training set, which gives us an estimate of:

$$\frac{p(x_n \mid q_k)}{p(x_n)} \qquad (2)$$

Figure 2: An MLP that estimates local conditional transition probabilities.
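The conversion from network posteriors to scaled likelihoods can be sketched in a few lines. This is a minimal illustration under the assumption that the priors p(q_k) are estimated as relative state frequencies in the training labels; the function names and the small floor that guards against division by zero are hypothetical and not taken from the paper.

```python
import numpy as np

def class_priors(state_labels, n_states):
    """Estimate p(q_k) as the relative frequency of each state in the training labels."""
    counts = np.bincount(state_labels, minlength=n_states).astype(float)
    return counts / counts.sum()

def scaled_likelihoods(posteriors, priors, floor=1e-8):
    """Divide posteriors p(q_k|x_n) by priors p(q_k) to get p(x_n|q_k) / p(x_n)."""
    return posteriors / np.maximum(priors, floor)

# Toy usage: 3 states, 4 labelled training frames, 2 frames to score.
priors = class_priors(np.array([0, 0, 1, 2]), n_states=3)
posteriors = np.array([[0.5, 0.25, 0.25],
                       [0.2, 0.4, 0.4]])
emissions = scaled_likelihoods(posteriors, priors)
```

A frame whose posteriors equal the priors yields a scaled likelihood of 1 for every state, which means that the frame carries no evidence for any particular state.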

During recognition, the scaling factor p(x_n) is a constant for all classes and will not change the classification. It could be argued that, when dividing by the priors, we are using a scaled likelihood, which is no longer a discriminant criterion. However, since the discriminant training has affected the parametric optimization for the system that is used during recognition, this permits use of the standard HMM formalism while taking advantage of ANN characteristics.

5 CASE STUDY AND EXPERIMENTAL RESULTS

5.1 Comparison Study with Single RBF and LVQ Approaches

The learning DB has successfully been learnt. The RBF network correctly classifies 62.3% of the full DB, with rates of correct classification of 61% for the R class, 58% for the E class and 68% for the N class.

For the three speech DBs, the rates of correct classification are as follows: 51% for DB1, 52% for DB2, 63% for DB3.

The LVQ network correctly classifies 62% of the full medical DB, with rates of correct classification of 72% for the R class, 57% for the E class and 57% for the N class. For the three speech DBs, the rates of correct classification are as follows: 65% for DB1, 61% for DB2, 68% for DB3.

5.2 Results Relative to RBF/LVQ based Multi-neural Network Approach

Concerning the RBF ANN, for example for the biomedical DB, the number of input neurons (88) corresponds to the number of components of the input vectors, and the output layer contains 3 neurons. The number of neurons in the hidden layer is 20.

For the LVQ ANN, the number of input cells is equal to the number of output cells of the RBF ANN. The output layer of the LVQ ANN contains as many neurons as classes (3). The number of neurons in the hidden layer, 8, was determined by considering the number of subclasses that can be counted within the 3 classes.

The learning DB has successfully been learnt. All of the learnt vectors are well classified in the generalization phase. We can see that this network correctly classifies 65% of the full DB, with rates of correct classification of 71% for the R class, 55% for the E class and 69% for the N class.

For the speech DBs, the rates of correct classification are as follows: 79% for DB1, 86% for DB2, 72% for DB3.

Compared with the two single approaches, our proposed MNN technique gives globally better results than the single RBF or LVQ ANN approach.

5.3 Results Relative to Discrete HMM and Hybrid HMM/MLP Approach

We further assume that, for each class in the vocabulary, we have a training set of k occurrences (instances), where each instance constitutes an observation sequence.

a. Discrete HMM

For the speech DBs, 10-state, strictly left-to-right, discrete HMMs were used to model each basic unit (word). In this case, the acoustic features were quantized into 4 independent codebooks according to the K-means (KM) algorithm: 128 clusters for the J-RASTA-PLP coefficients, 128 for the Δ J-RASTA-PLP coefficients, 32 clusters for Δ energy and 32 clusters for ΔΔ energy.

For the PEA signals, 5-state, strictly left-to-right, discrete HMMs were used. Table 1 gives the results of this experiment.

Table 1: Discrete HMM results.

| DB | BDB | SDB1 | SDB2 | SDB3 |
|---|---|---|---|---|
| Rate (%) | 84 | 87 | 90 | 76 |
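The scoring step of a strictly left-to-right discrete HMM can be sketched as follows. This is a simplified illustration, not the authors' implementation: it assumes a single stream of codebook indices (the paper uses four independent codebooks), a fixed self-loop probability, and hypothetical function names and toy emission tables.

```python
import numpy as np

def left_to_right_transitions(n_states, stay=0.5):
    """Strictly left-to-right: each state may only loop or move to its successor."""
    A = np.zeros((n_states, n_states))
    for i in range(n_states - 1):
        A[i, i] = stay
        A[i, i + 1] = 1.0 - stay
    A[-1, -1] = 1.0
    return A

def forward_log_likelihood(obs, A, B):
    """Scaled forward algorithm; obs holds codebook indices, B[state, symbol] emissions."""
    alpha = np.zeros(A.shape[0])
    alpha[0] = B[0, obs[0]]  # a left-to-right model starts in its first state
    log_like = 0.0
    for t in range(len(obs)):
        if t > 0:
            alpha = (alpha @ A) * B[:, obs[t]]
        scale = alpha.sum()
        log_like += np.log(scale)
        alpha = alpha / scale  # rescale to avoid numerical underflow
    return log_like

def classify(obs, models):
    """Pick the word whose HMM gives the highest log-likelihood."""
    return max(models, key=lambda w: forward_log_likelihood(obs, *models[w]))

# Toy usage: two 2-state word models over a 2-symbol codebook.
A = left_to_right_transitions(2)
models = {
    "yes": (A, np.array([[0.9, 0.1], [0.1, 0.9]])),
    "no": (A, np.array([[0.1, 0.9], [0.9, 0.1]])),
}
word = classify([0, 0, 1, 1], models)
```

With one model per vocabulary word, recognition reduces to evaluating every model on the quantized sequence and keeping the best score.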

b. Discrete MLP with entries provided by the FCM Algorithm

We use a hybrid HMM/MLP model in which the ANN input is an acoustic vector composed of real values obtained by applying the fuzzy c-means (FCM) algorithm (Lazli and Sellami, 2003), with 2880 real components corresponding to the membership degrees of the acoustic vectors to the classes of the "code-book". We report the values used for SDB2.
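The membership degrees that feed the MLP can be sketched with the standard fuzzy c-means membership formula. The sketch assumes Euclidean distances, a fuzzifier m = 2 and already trained codebook centers; these choices and the function names are hypothetical. Note that 128 + 128 + 32 + 32 = 320 codebook classes and 9 × 320 = 2880, which is consistent with the input size quoted above, although the paper does not spell out this decomposition.

```python
import numpy as np

def fcm_memberships(x, centers, m=2.0, eps=1e-12):
    """Membership degrees of one vector to every codebook center (they sum to 1)."""
    d = np.linalg.norm(centers - x, axis=1) + eps  # eps avoids division by zero
    inv = d ** (-2.0 / (m - 1.0))
    return inv / inv.sum()

def mlp_input(frames, centers, m=2.0):
    """Concatenate the membership vectors of consecutive frames into one MLP input."""
    return np.concatenate([fcm_memberships(f, centers, m) for f in frames])

# Toy usage: 9 frames of 3-D features against an assumed 320-class codebook.
rng = np.random.default_rng(0)
frames = rng.normal(size=(9, 3))
codebook = rng.normal(size=(320, 3))
vector = mlp_input(frames, codebook)  # 9 * 320 = 2880 components
```

Unlike hard vector quantization, each frame contributes a full vector of graded memberships, so the MLP input keeps information about how close a frame is to every codebook class.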

Table 2: Hybrid HMM/MLP results.

| DB | BDB | SDB1 | SDB2 | SDB3 |
|---|---|---|---|---|
| Rate (%) | 94 | 97 | 97 | 83 |

For the speech DBs, we used 10-state, strictly left-to-right, word HMMs with emission probabilities computed from an MLP with 9 frames of quantized acoustic vectors at the input. Thus, an MLP with a single hidden layer was trained, with 2880 input neurons, 30 hidden neurons and 10 output neurons.

For the PEA signals, an MLP with 64 input neurons, 18 hidden neurons and 5 output neurons was trained. Table 2 gives the results.
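To make the two network sizes concrete, the following sketch builds single-hidden-layer MLPs with the layer sizes quoted above and counts their trainable parameters. Sigmoid hidden units, softmax outputs (so that the outputs can be read as state posteriors) and the random initialization are assumptions, and all names are hypothetical.

```python
import numpy as np

def init_mlp(n_in, n_hidden, n_out, seed=0):
    """Small random weights and zero biases for a one-hidden-layer MLP."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.01, (n_hidden, n_in)), "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.01, (n_out, n_hidden)), "b2": np.zeros(n_out),
    }

def forward(params, x):
    """Sigmoid hidden layer followed by a softmax output layer (assumed activations)."""
    h = 1.0 / (1.0 + np.exp(-(params["W1"] @ x + params["b1"])))
    z = params["W2"] @ h + params["b2"]
    e = np.exp(z - z.max())  # subtract the maximum for numerical stability
    return e / e.sum()

def n_parameters(params):
    """Total number of weights and biases."""
    return sum(p.size for p in params.values())

speech_mlp = init_mlp(2880, 30, 10)  # sizes quoted for the speech DBs
pea_mlp = init_mlp(64, 18, 5)        # sizes quoted for the PEA signals
posteriors = forward(pea_mlp, np.ones(64))
```

Under these assumptions the speech network has 86,740 parameters and the PEA network 1,265, and each output vector sums to 1, one value per HMM state.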

6 CONCLUSIONS

In this paper, the association of the RBF and LVQ neural models improves the global order of the non-linear approximation capability of the global neural operator, compared with each single neural structure constituting the MNN system.

For the second approach, the hybrid HMM/MLP model, the recognition tasks show an increase in the estimates of the posterior probabilities of the correct class after training.

In terms of effectiveness, the hybrid HMM/MLP model appears to be more powerful than the multi-network RBF/LVQ structure.

REFERENCES

L. Lazli, M. Sellami. "Connectionist Probability Estimators in HMM Speech Recognition using Fuzzy Logic". MLDM 2003: the 3rd International Conference on Machine Learning & Data Mining in Pattern Recognition, LNAI 2734, Springer-Verlag, pp. 379-388, July 5-7, Leipzig, Germany, 2003.

A-S. Dujardin. "Pertinence d'une approche hybride multi-neuronale dans la résolution de problèmes liés au diagnostic industriel ou médical". Internal report, I2S laboratory, IUT of "Sénart Fontainebleau", University of Paris XII, Avenue Pierre Point, 77127 Lieusaint, France, 2006.

L. Lazli, A-N. Chebira, K. Madani. "Hidden Markov Models for Complex Pattern Classification". Ninth International Conference on Pattern Recognition and Information Processing, PRIP'07, May 22-24, Minsk, Belarus, 2007. http://uiip.basnet.by/conf/prip2007/prip2007.php-id=200.htm
