Component-based Gender Classification based on Hair and Facial Geometry Features

Wen-Shiung Chen¹, Wen-Jui Chang¹, Lili Hsieh² and Zong-Yi Lin¹

¹ Dept. of Electrical Engineering, National Chi Nan University, Puli, Nantou, Taiwan

² Dept. of Information Management, Hsiuping University of Science and Technology, Taichung, Taiwan

Keywords: Biometrics, Face Recognition, Gender Classification, Face Detection, Hair Detection, ASM.

Abstract: In this paper, a component-based gender classification method based on hair and facial geometry features is presented. After preprocessing, hair and facial geometry features are extracted automatically from the face images. We compare hair detection methods based on color and texture features, and also analyze several geometric features proposed in the literature. The best gender classification rate, 87.15%, is achieved by combining the most significant hair and geometric features, which is better than some previously reported methods.

1 INTRODUCTION

Gender classification is a branch of face recognition that can be used as a preprocessing step or combined with other identification methods to improve recognition results. Face recognition technology can be used not only for identity recognition but also for image-related and multimedia interaction applications. From a biological standpoint, humans can distinguish gender by looking at facial regions. There are two main approaches to face gender classification: appearance-based and feature-based (Makinen and Raisamo, 2008). The appearance-based approach uses the full image, so every pixel contributes to the analysis. The feature-based approach relies on characteristics of facial organs or specific regions, such as area, length and width, distance, position, and relative position. Both approaches are discussed in several articles. Since the appearance-based approach must process every pixel of a given image, it lowers recognition performance. Therefore, in this paper we focus on the component-based method. The hair and facial geometry feature detection methods drawn from the literature are introduced below.

2 THE PROPOSED GENDER CLASSIFICATION

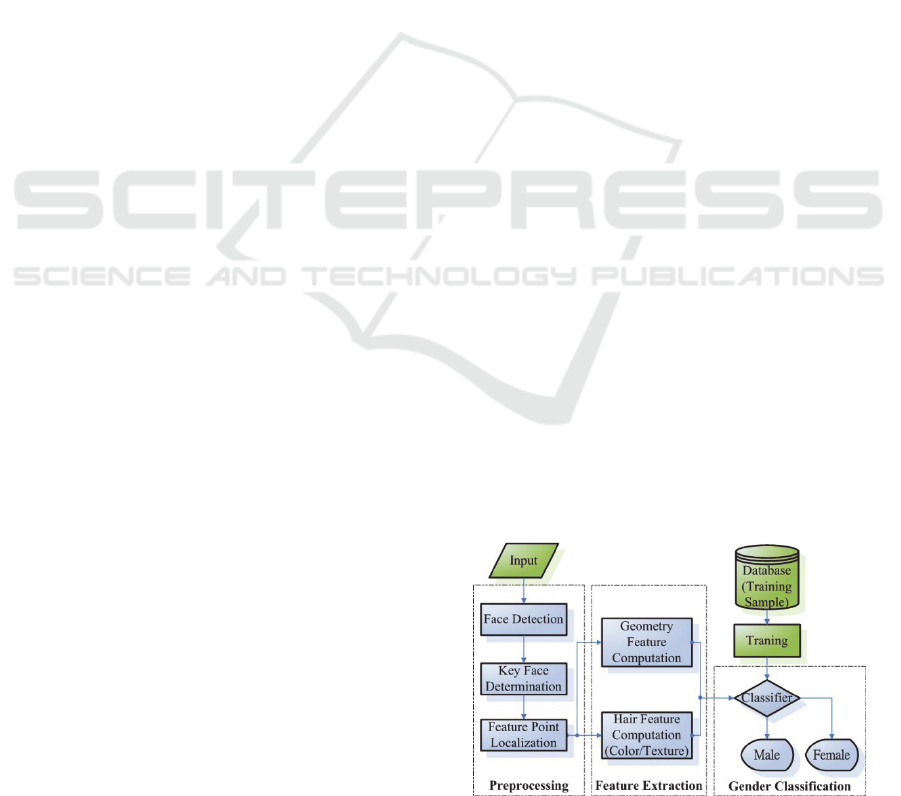

The architecture of the gender classification system is presented in Fig. 1. The system can be roughly divided into three modules: a preprocessing module, a feature extraction module, and a classification/recognition module. Each module is described in turn below.

Figure 1: Architecture of gender classification system.
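The three-module architecture can be sketched as a composition of stages. This is a minimal illustration assuming each module is a plain function; the class name `GenderPipeline` and its fields are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Sequence


@dataclass
class GenderPipeline:
    """Hypothetical wiring of the three modules in Fig. 1."""
    preprocess: Callable[[Any], Dict[str, Any]]        # key face + ASM landmarks/regions
    extract: Callable[[Dict[str, Any]], Sequence[float]]  # hair + geometry features
    classify: Callable[[Sequence[float]], str]         # e.g. a trained SVM

    def run(self, image: Any) -> str:
        regions = self.preprocess(image)   # preprocessing module
        features = self.extract(regions)   # feature extraction module
        return self.classify(features)     # classification/recognition module
```

Each stage can then be swapped independently, for example to compare SVM against the boosting classifiers with the same features.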

2.1 Pre-processing

We can count the weights of each face in an image

based on the formula, then follow the weight size to

recognize the key face in the image to get its

information. Then, for the purpose of feature-based

computation, many feature regions are computed

626

Chen W., Chang W., Hsieh L. and Lin Z..

Component-based Gender Classification based on Hair and Facial Geometry Features.

DOI: 10.5220/0004154806260630

In Proceedings of the 4th International Joint Conference on Computational Intelligence (NCTA-2012), pages 626-630

ISBN: 978-989-8565-33-4

Copyright

c

2012 SCITEPRESS (Science and Technology Publications, Lda.)

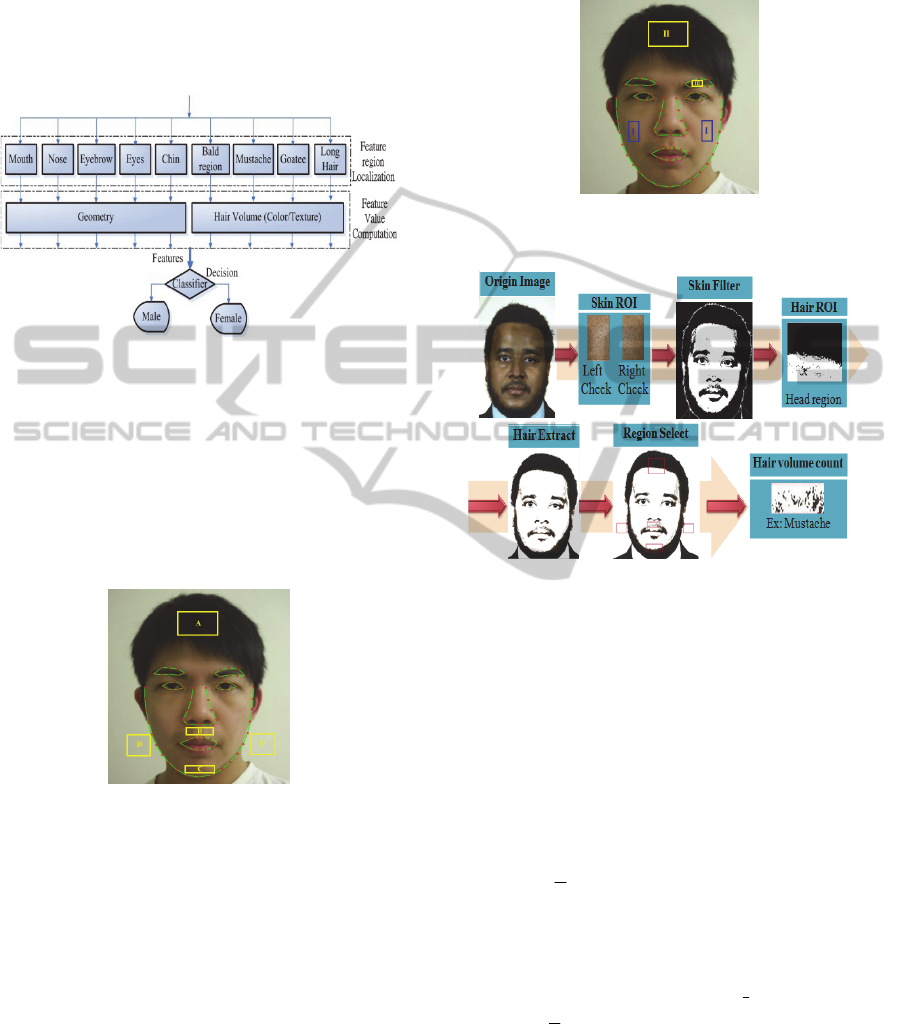

individually. The computed regions and the

remained steps are shown in Fig. 2. The most

important issue to be solved in this premise is to find

out the position precisely of face organ characters.

So this work uses ASM (Active Shape Model)

(Lanitis et al., 1995) to locate the feature points and

to find the special region’s characteristics.

Figure 2: Flowchart of face localization, feature extraction and recognition.
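A minimal sketch of the key-face selection, assuming each detected face is given as a bounding box. Because the weighting formula is not spelled out here, the box area is used as a hypothetical weight; `face_weight` and `key_face` are illustrative names.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) of a detected face


def face_weight(box: Box) -> float:
    """Hypothetical weight: bounding-box area (a stand-in for the paper's formula)."""
    _, _, w, h = box
    return float(w * h)


def key_face(boxes: List[Box]) -> Box:
    """Return the face with the largest weight; it is the one passed on to ASM."""
    if not boxes:
        raise ValueError("no faces detected")
    return max(boxes, key=face_weight)
```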

2.2 Hair Detection

Two hair detection methods are described in the following subsections. We use both methods to compute the hair volume in specific regions as a gender-discriminative cue. The effective zones of the hair features are shown in Fig. 3.

Figure 3: (a) Bald region, (b) mustache, (c) goatee, and (d) long hair.
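One way to turn the zones of Fig. 3 into numbers is to measure, for each zone, the fraction of its pixels labelled as hair. This definition of "hair volume" and the zone names are assumptions for illustration; the binary hair mask could come from either detector described below.

```python
import numpy as np

# Hypothetical names for the four zones of Fig. 3.
REGIONS = ("bald", "mustache", "goatee", "long_hair")


def hair_volume(hair_mask, region_masks):
    """Fraction of hair pixels inside each zone (assumed definition of 'volume')."""
    feats = {}
    for name in REGIONS:
        region = region_masks[name].astype(bool)
        area = region.sum()
        # Zones that are empty in this image contribute 0 instead of dividing by zero.
        feats[name] = float(hair_mask[region].sum() / area) if area else 0.0
    return feats
```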

2.2.1 Adaptive Skin and Hair Color Detection

The adaptive detection technique derives its detection thresholds from regions of the test image whose colors are similar to the target color areas. This reduces the influence of ethnicity on skin and hair color.

As shown in Fig. 4, to obtain the hair feature, we first sample the skin (cheek) color from an area free of glasses and hair, and remove all skin-colored pixels from the image. Then we use the hair color region, which contains no skin color, to extract the hair from the image. This is the basis of hair region detection. If the system determines that the forehead region is completely bald, the hair color is sampled from the right eyebrow instead. Details of the hair extraction process are shown in Fig. 5.
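The procedure can be sketched as follows, assuming boxes are given as (x, y, width, height) and masks as arrays. The helper names are hypothetical, and `default_box` stands for whichever hair-color sampling region (regions II and III in Fig. 4) is used when the forehead is not bald.

```python
import numpy as np


def hair_sample_box(forehead_is_bald, default_box, right_eyebrow_box):
    """Choose where to sample hair color: fall back to the right eyebrow on a bald forehead."""
    return right_eyebrow_box if forehead_is_bald else default_box


def crop(image, box):
    """Cut an (x, y, width, height) box out of a row-major image array."""
    x, y, w, h = box
    return image[y:y + h, x:x + w]


def remove_skin(hair_color_mask, skin_mask):
    """Keep hair-colored pixels that were not already classified as skin."""
    return np.logical_and(hair_color_mask.astype(bool), ~skin_mask.astype(bool))
```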

Figure 4: Region (I): Skin color extraction. Regions (II) and (III): Hair color extraction.

Figure 5: The procedure of hair color extraction.

The skin color distribution is modeled in the YCbCr color space by computing the mean and standard deviation of the sampled pixels, as in Eqs. (1) and (2). Upper and lower skin color thresholds are then set from the mean and standard deviation, as in Eqs. (3) and (4), and Eq. (5) classifies each pixel as skin color or not. Hair color extraction uses the same algorithm to obtain the hair region volume. The computation is as follows.

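$$\mu_{(Y,Cb,Cr)} = \frac{1}{n}\sum_{P_{i,j}\in ROI_1} P_{i,j} \qquad (1)$$

where $P = (Y, Cb, Cr)$ and $\mu_{(Y,Cb,Cr)} = (\mu_Y, \mu_{Cb}, \mu_{Cr})$.

$$\sigma_{(Y,Cb,Cr)} = \left[\frac{1}{n}\sum_{P_{i,j}\in ROI_1}\left(P_{i,j} - \mu_{(Y,Cb,Cr)}\right)^2\right]^{1/2} \qquad (2)$$

where $P = (Y, Cb, Cr)$ and $\sigma_{(Y,Cb,Cr)} = (\sigma_Y, \sigma_{Cb}, \sigma_{Cr})$.

$$H_U(Y,Cb,Cr) = \mu_{(Y,Cb,Cr)} + 3\,\sigma_{(Y,Cb,Cr)} \qquad (3)$$

$$H_L(Y,Cb,Cr) = \mu_{(Y,Cb,Cr)} - 3\,\sigma_{(Y,Cb,Cr)} \qquad (4)$$

$$\mathrm{mask}(x,y) = \begin{cases} 1, & H_L \le I_{x,y} \le H_U \\ 0, & \text{otherwise} \end{cases} \qquad (5)$$

$ROI_1$ is the skin color sampling area and $ROI_2$ is the whole face including the hair. $P_{i,j}$ denotes the pixels of $ROI_1$, with $i = 1, 2, \ldots, N_1$ and $j = 1, 2, \ldots, M_1$; $n = N_1 \times M_1$ is the total number of pixels in $ROI_1$. $I_{x,y}$ denotes the pixels of $ROI_2$, with $x = 1, 2, \ldots, N_2$ and $y = 1, 2, \ldots, M_2$.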
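Eqs. (1) to (5) map directly onto a few array operations. The sketch below assumes NumPy, an RGB input converted with the full-range BT.601 (JPEG/JFIF) YCbCr formula (the paper does not state which variant it uses), a threshold width of k = 3 standard deviations, and that a pixel must lie inside [H_L, H_U] on all three channels to be accepted. The same functions serve for hair color by sampling a hair region instead of the cheek.

```python
import numpy as np


def rgb_to_ycbcr(rgb):
    """Full-range BT.601 (JFIF) RGB -> YCbCr; an assumed choice of conversion."""
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return np.stack([y, cb, cr], axis=-1)


def color_model(roi1_ycbcr):
    """Eqs. (1)-(2): per-channel mean and 1/n standard deviation over ROI_1."""
    pixels = np.asarray(roi1_ycbcr, dtype=np.float64).reshape(-1, 3)  # n = N1 * M1 rows
    return pixels.mean(axis=0), pixels.std(axis=0)  # std uses ddof=0, i.e. 1/n


def thresholds(mu, sigma, k=3.0):
    """Eqs. (4) and (3): lower H_L = mu - k*sigma, upper H_U = mu + k*sigma."""
    return mu - k * sigma, mu + k * sigma


def color_mask(roi2_ycbcr, h_low, h_up):
    """Eq. (5): 1 where every channel of I(x, y) lies in [H_L, H_U], else 0."""
    inside = (roi2_ycbcr >= h_low) & (roi2_ycbcr <= h_up)  # broadcasts over channels
    return inside.all(axis=-1).astype(np.uint8)
```

Because the thresholds are recomputed from each image's own cheek sample, the accepted color range adapts to the subject rather than being fixed in advance.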

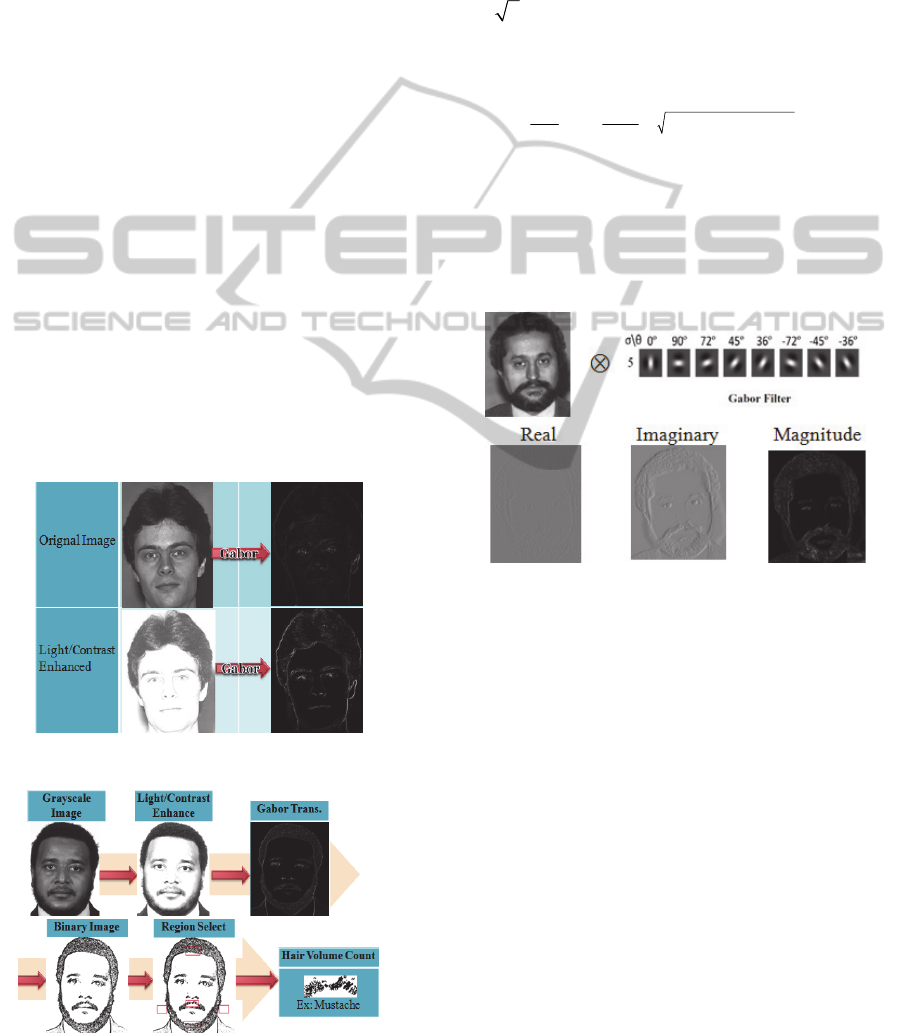

2.2.2 Gabor Transform for Hair Texture Detection

Among the many texture detection methods, the Gabor transform performs very well (Manjunath and Ma, 1996), so we use Gabor wavelets to convert an image into a magnitude response. As illustrated in Fig. 6, changing the lighting of an image can make texture information yield better detection results (Maenpaa, 2004), so we enhance the brightness and contrast before the transform. Details of the hair texture extraction process are shown in Fig. 7.

Figure 6: Environment lighting example.

Figure 7: The procedure of hair texture extraction.
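The brightness and contrast enhancement method is not named in the paper. As an assumed stand-in, a percentile-based linear contrast stretch is sketched below; the function name and percentile defaults are hypothetical.

```python
import numpy as np


def stretch_contrast(gray, low_pct=1.0, high_pct=99.0):
    """Linearly map the [low_pct, high_pct] percentile range to [0, 1], clipping outliers."""
    gray = np.asarray(gray, dtype=np.float64)
    lo, hi = np.percentile(gray, [low_pct, high_pct])
    if hi <= lo:  # flat image: nothing to stretch
        return np.zeros_like(gray)
    return np.clip((gray - lo) / (hi - lo), 0.0, 1.0)
```

Using percentiles rather than the raw minimum and maximum keeps a few very bright or dark pixels from dominating the stretch.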

The general idea of the Gabor transform is shown in Fig. 8. From Eq. (6), the Gabor filter can be separated into real, imaginary and magnitude responses, and we use only the magnitude, Eq. (7), for texture detection. The Gabor magnitude transform convolves the filter with the image f, and the change of texture is then observed from the result, Eq. (8). The parameters of the filter used here are 20 and 0.2π.

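$$G_{\omega,\theta}(x,y) = g(x,y)\exp\left[2\pi j\omega\,(x\cos\theta + y\sin\theta)\right] = G_R(x,y) + jG_I(x,y) \qquad (6)$$

$$g(x,y) = \frac{1}{2\pi\sigma^2}\exp\left(-\frac{x^2+y^2}{2\sigma^2}\right) = \sqrt{G_R^2(x,y) + G_I^2(x,y)} \qquad (7)$$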

$$\tilde{G}(x,y) = \sum_i\sum_j f(x-i,\,y-j)\,g(i,j) \qquad (8)$$

$G_{\omega,\theta}(x,y)$ is the Gabor filter, with $\omega$ the frequency, $\theta$ the orientation and $\sigma$ the bandwidth. $G_R(x,y)$, $G_I(x,y)$ and $g(x,y)$ are the real, imaginary, and magnitude responses of the Gabor filter.

Figure 8: Gabor transform.
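A NumPy sketch of a Gabor magnitude response. It takes the modulus of the image convolved with the complex filter of Eq. (6), which is the common Gabor-magnitude formulation; the default frequency, orientation, bandwidth and kernel size are illustrative assumptions, not the paper's settings, and the helper names are hypothetical.

```python
import numpy as np


def gabor_kernel(omega, theta, sigma, half_size):
    """Complex Gabor filter of Eq. (6) sampled on a (2*half_size+1)^2 grid."""
    r = np.arange(-half_size, half_size + 1)
    x, y = np.meshgrid(r, r)  # x varies along columns, y along rows
    envelope = np.exp(-(x**2 + y**2) / (2.0 * sigma**2)) / (2.0 * np.pi * sigma**2)  # g(x, y)
    carrier = np.exp(2j * np.pi * omega * (x * np.cos(theta) + y * np.sin(theta)))
    return envelope * carrier  # G_R + j*G_I


def convolve_same(image, kernel):
    """2-D convolution with an odd-sized kernel; output matches `image`, edges replicated."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(np.asarray(image, dtype=np.float64), ((ph, ph), (pw, pw)), mode="edge")
    flipped = kernel[::-1, ::-1]  # convolution = correlation with the flipped kernel
    h, w = np.shape(image)
    out = np.zeros((h, w), dtype=np.result_type(padded, kernel))
    for dy in range(kh):
        for dx in range(kw):
            out += flipped[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def gabor_magnitude(image, omega=0.1, theta=0.0, sigma=4.0, half_size=12):
    """|f * G|: modulus of the complex filter response (illustrative parameters)."""
    return np.abs(convolve_same(image, gabor_kernel(omega, theta, sigma, half_size)))
```

For example, vertical stripes at the filter frequency give a magnitude near 0.5 in the image interior, while a flat image gives only a small residual response, which is why textured hair stands out against smooth skin.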

2.3 Computation of Geometrical Features

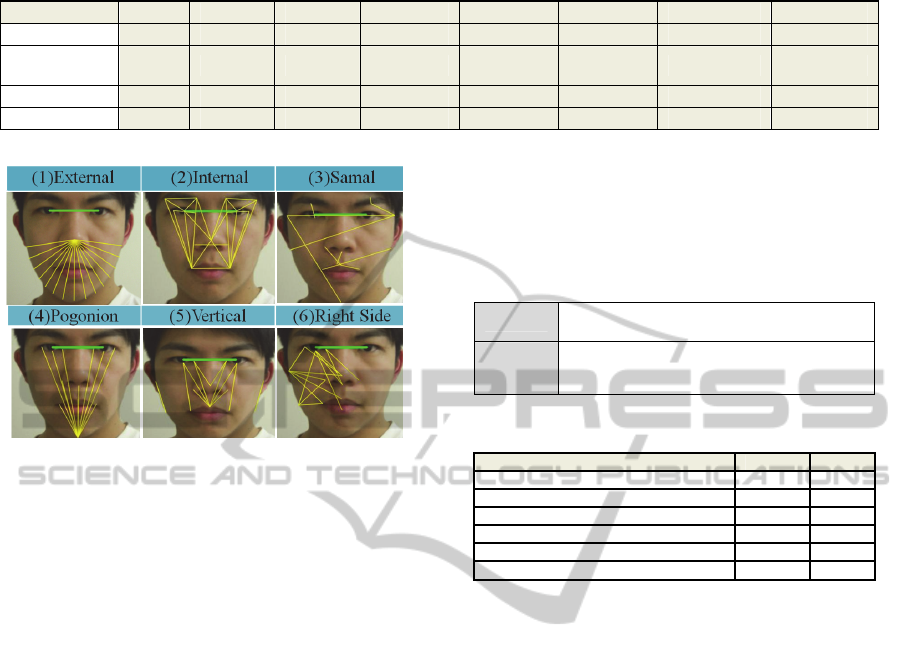

For the geometric length measurements, we calculate the Euclidean distances between the selected points on the face shown in Fig. 9. We compare the geometric feature sets proposed in the literature, individually and in cross-combination, to find the most discriminative geometric lengths (the feature lengths of A. Samal et al. are labelled Samal). To reduce the variation caused by the distance between the camera and the subject, and to avoid the complexity of normalizing the full image, each thin-line length is normalized by the thick-line distance between the two eyes.
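The normalization step can be sketched as follows, assuming the landmarks are available as named (x, y) points, for example from the ASM fit. The landmark names and the pair list are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def normalized_lengths(landmarks: Dict[str, Point],
                       pairs: List[Tuple[str, str]],
                       left_eye: str = "left_eye",
                       right_eye: str = "right_eye") -> List[float]:
    """Euclidean length of each landmark pair divided by the inter-eye distance."""
    eye_dist = math.dist(landmarks[left_eye], landmarks[right_eye])  # the thick line
    if eye_dist == 0:
        raise ValueError("eye landmarks coincide")
    return [math.dist(landmarks[a], landmarks[b]) / eye_dist for a, b in pairs]
```

Dividing by the inter-eye distance makes every length scale-free, so a face photographed closer to the camera yields the same feature values.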

IJCCI2012-InternationalJointConferenceonComputationalIntelligence


Table 1: Comparison of individual features and classifiers.

Classifier | Samal | Internal | External | Pogonion | Vertical | Right Side | Hair texture | Hair color

SVM | 67.85% | 65.05% | 66.70% | 65.85% | 62.85% | 65.40% | 80.10% | 72.65%

Modest AdaBoost | 65.30% | 62.10% | 61.35% | 65.15% | 62.00% | 64.65% | 79.25% | 70.05%

LogitBoost | 64.60% | 60.70% | 60.65% | 64.75% | 61.75% | 64.30% | 78.30% | 66.35%

Real AdaBoost | 64.65% | 60.30% | 59.55% | 65.00% | 61.45% | 64.10% | 78.55% | 66.85%

Figure 9: Geometrical feature extraction (thick line for normalization).

3 EXPERIMENTAL RESULTS

3.1 Database

The FERET database is widely used in face recognition research. There are 14,126 pictures in the database, including 1,199 persons and about 2,722 images. We chose 2,000 color face images (1,000 male and 1,000 female) for testing.
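A sketch of drawing a gender-balanced test set, assuming random sampling without replacement. The paper does not say how the 2,000 images were chosen, so the sampling rule, seed and function name are hypothetical.

```python
import random
from typing import Dict, List


def balanced_subset(images_by_gender: Dict[str, List[str]],
                    per_class: int = 1000,
                    seed: int = 0) -> Dict[str, List[str]]:
    """Draw `per_class` distinct images for each gender with a reproducible RNG."""
    rng = random.Random(seed)
    subset = {}
    for gender, images in images_by_gender.items():
        if len(images) < per_class:
            raise ValueError(f"not enough {gender} images: {len(images)} < {per_class}")
        subset[gender] = rng.sample(images, per_class)  # without replacement
    return subset
```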

3.2 Experimental Results

First, we compare all the classifiers and individual features, as shown in Table 1. Among the listed classifiers, SVM performs best for every feature. Among the individual features, the hair features achieve higher correct classification rates than the geometry features, so we regard them as strong features. Nevertheless, individual features still leave room for improvement.

Moreover, to obtain a higher correct classification rate, we tested many feature combinations; some of the best-performing results are listed in Table 2. For classification with facial geometry alone, the combination of the Samal and External features performs best, which shows that selecting more geometry features does not always yield better performance. Furthermore, adding the strong hair texture feature raises the overall correct classification rate to 87.15%.
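Assuming the features are fused by simple concatenation (the paper names the combined groups but not the fusion rule), the feature counts in the combination table add up: 25 Samal + External lengths plus 4 hair values give 29 features, and adding both hair features gives 33, which would be consistent with one hair value per zone of Fig. 3. A sketch of this fusion and of the correct classification rate, with hypothetical function names:

```python
import numpy as np


def fuse(*groups):
    """Feature-level fusion by concatenation (assumed fusion rule)."""
    return np.concatenate([np.asarray(g, dtype=np.float64).ravel() for g in groups])


def classification_rate(predicted, actual):
    """Correct classification rate: fraction of predictions matching the labels."""
    predicted, actual = np.asarray(predicted), np.asarray(actual)
    if predicted.shape != actual.shape:
        raise ValueError("predicted and actual must have the same shape")
    return float((predicted == actual).mean())
```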

Table 2: Performance of feature combination.

Feature Combination | Features | Rate

Internal + External | 39 | 69.00%

Samal + External | 25 | 70.10%

Pogonion + External | 29 | 69.85%

Samal + External + Hair color | 29 | 79.55%

Samal + External + Hair texture | 29 | 87.15%

Samal + External + Hair texture and color | 33 | 86.55%

Table 3: Influence factors of color and texture features.

Feature | Influence factors

Texture | (1) complex background, (2) wrinkles and pores, (3) susceptibility to light

Color | (1) dark background, (2) region selection, (3) same color as skin, (4) susceptibility to light

3.3 Discussion

Color features are affected by more varying factors than texture features, which explains their poorer results. The common factors influencing color and texture features are listed in Table 3. Even though texture features achieve the best recognition rate in our experiments, they are not always better than color features for long hair detection. The reason is that many FERET pictures have simple backgrounds, so mainly the lighting varies. In practice, the feature combination should be chosen according to how much the background varies, or the background should be removed.

4 CONCLUSIONS

In this paper, we construct a fast, low-complexity gender classification system. Our experimental results show the importance of hair texture and of choosing the most appropriate geometric characteristics for gender classification. Texture features are particularly important because color introduces more unpredictable factors. Finally, the proposed system achieves its best performance, a gender classification rate of 87.15%, by combining hair and geometry features.

REFERENCES

E. Makinen and R. Raisamo, “An experimental comparison of gender classification methods,” Pattern Recognition Letters, vol. 29, no. 10, pp. 1544-1556, Jul. 2008.

A. Lanitis, C. J. Taylor and T. F. Cootes, “An automatic face identification system using flexible appearance models,” 5th British Machine Vision Conference on Image and Vision Computing, vol. 13, no. 5, pp. 393-401, 1995.

B. S. Manjunath and W. Y. Ma, “Texture features for browsing and retrieval of image data,” IEEE Trans. on Pattern Analysis and Machine Intelligence, vol. 18, no. 8, pp. 837-842, Aug. 1996.

T. Maenpaa, “Classification with color and texture: jointly or separately?” Pattern Recognition, vol. 37, no. 8, pp. 1629-1640, 2004.
