EEG Beta Range Dynamics and Emotional Judgments of Face and Voices

K. Hiyoshi-Taniguchi¹,², M. Kawasaki³, T. Yokota¹, H. Bakardjian⁴,¹, H. Fukuyama², F. B. Vialatte⁵,¹ and A. Cichocki¹

¹ Laboratory for Advanced Brain Signal Processing, RIKEN Brain Science Institute, Wakō, Japan

² Human Brain Research Centres, Kyoto University Graduate School of Medicine, Kyoto, Japan

³ RIKEN BSI-TOYOTA Collaboration Center, RIKEN, Wakō, Japan

⁴ IM2A, Groupe Hospitalier Pitié-Salpêtrière, Paris, France

⁵ Laboratoire SIGMA, ESPCI ParisTech, Paris, France

Keywords: Emotion, Multi-modal, EEG.

Abstract: The purpose of this study is to clarify multi-modal brain processing related to human emotional judgment.

This study aimed to induce a controlled perturbation in the emotional system of the brain by multi-modal

stimuli, and to investigate whether such emotional stimuli could induce reproducible and consistent changes

in the brain dynamics. As we were especially interested in the temporal dynamics of the brain responses, we

studied EEG signals. We exposed twelve subjects to auditory, visual, or combined audio-visual stimuli.

Audio stimuli consisted of voice recordings of the Japanese word ‘arigato’ (thank you) pronounced with

three different intonations (Angry - A, Happy - H or Neutral - N). Visual stimuli consisted of faces of

women expressing the same emotional valences (A, H or N). Audio-visual stimuli were composed using

either congruent combinations of faces and voices (e.g. H x H) or non-congruent (e.g. A x H). The data was

collected with a 32-channel Biosemi EEG system. We report here significant changes in EEG power and

topographies between those conditions. The obtained results demonstrate that EEG could be used as a tool

to investigate emotional valence and discriminate various emotions.

1 INTRODUCTION

Judgment is the operation of the mind by which

knowledge of the values and relations of things is

obtained. Judgment is important for decision

making, and involves both cognitive and infra-

cognitive processes. In social cognition, judging the

emotion of another human being is important to

interpret communications. For instance, patients

with emotional judgment disorders, such as patients

suffering from major depression (Grimm et al.,

2008), can have serious social impairments. Our

purpose is to investigate the neurodynamics of

human emotional judgments.

Human communication is based both on face and

voice perception, therefore facial expression and

tone of voice are important to understand emotions.

Such multi-modal brain processes are difficult to

investigate. Anatomically, a large body of literature

emphasizes the role of sub-cortical areas in emotion

processing (see e.g. LeDoux, 2000). This explains

the preponderance of fMRI studies in brain science

literature, as this imaging technique provides

information about sub-cortical activities. However,

these sub-cortical areas do not work independently

one from another, and consequently emotion

processing necessarily involves large-scale networks

of neural assemblies, in cortico-subcortical transient

interactions, where the time evolution of the network

is a key factor (Tsuchiya and Adolphs, 2007).

Therefore, EEG could provide crucial information

about emotional processes. For this reason, in a

previous pilot study, we investigated the effects of

voice and face emotional judgments on EEG signals,

on two subjects (Hiyoshi-Taniguchi, et al., 2011).

Here we extend those results with 12 subjects.

The purpose of our study was to induce a

controlled perturbation in the emotional system of

the brain by multi-modal stimuli, and to test whether

such stimuli could induce reproducible changes in

the EEG signal. We used a combination of photos and

voices with congruent or non-congruent emotional

valence. Through the investigation of this

‘abnormal’ perceptual condition, we intend to reveal

the mechanisms of normal emotional judgment (how

one can distinguish the valence of emotions in a

given stimulus). Responses to stimuli of three

different valences (neutral, angry, happy) are compared.

Hiyoshi-Taniguchi K., Kawasaki M., Yokota T., Bakardjian H., Fukuyama H., Vialatte F. B. and Cichocki A.

EEG Beta Range Dynamics and Emotional Judgments of Face and Voices.

DOI: 10.5220/0004184307380740

In Proceedings of the 4th International Joint Conference on Computational Intelligence (SSCN-2012), pages 738-740

ISBN: 978-989-8565-33-4

Copyright © 2012 SCITEPRESS (Science and Technology Publications, Lda.)

2 METHOD

We recruited 12 subjects for this study. All subjects

were young (age = 21.9 ± 0.31 years) healthy adults,

without prior history of any neurological or

psychiatric disorders. All subjects were screened to

be right-handed using the Edinburgh handedness

test. Ten subjects were female and two were male.

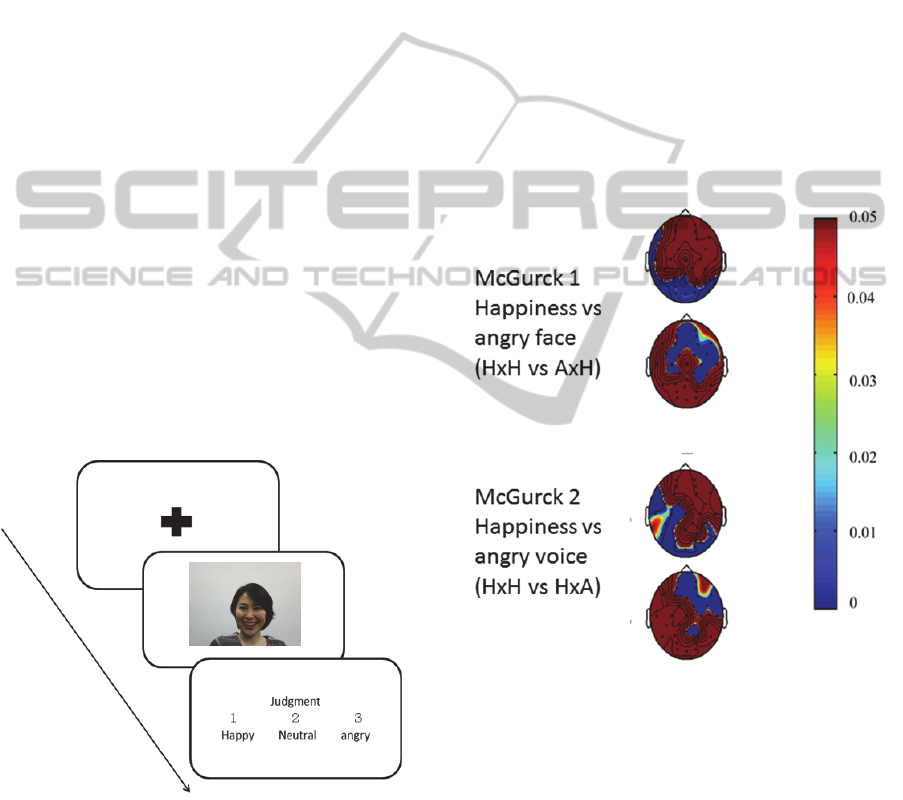

We exposed these subjects to auditory, visual, or

combined audio-visual stimuli. Stimuli were

presented for 2 sec; the subjects were asked to answer

afterwards within a 3 sec window, and then had 5

sec of rest (one trial = 10 sec). Audio stimuli

consisted of voice recordings of the word ‘arigato’

(thank you) pronounced with three different

intonations (Angry - A, Happy - H or Neutral - N).

Visual stimuli consisted of faces of women

expressing the same emotional valences (A, H or N).

Audio-visual stimuli were composed using either

congruent combinations of faces and voices (e.g.

HxH) or non-congruent (e.g. AxH).
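The stimulus set and trial timing described above can be sketched as a small enumeration. This is only an illustration under stated assumptions: the paper names the HxH, AxA, NxN, AxH and HxA combinations explicitly, so the full 3 x 3 crossing of faces and voices, the `build_conditions` and `trial_duration_s` helpers, and the `voice-`/`face-` labels are hypothetical. The face x voice ordering of the labels is inferred from the Results section, where AxH is described as a visual difference from HxH.

```python
from itertools import product

# Emotion labels used in the paper: Angry, Happy, Neutral.
EMOTIONS = ("A", "H", "N")

# Trial timing in seconds, taken from the Method section.
STIMULUS_S, RESPONSE_S, REST_S = 2, 3, 5


def build_conditions():
    """Enumerate unimodal and audio-visual conditions.

    Audio-visual labels are written face x voice (e.g. "AxH" is an angry
    face with a happy voice). Assumption: every face/voice pairing is used.
    """
    conditions = []
    for emotion in EMOTIONS:
        conditions.append({"label": f"voice-{emotion}", "modality": "audio",
                           "face": None, "voice": emotion, "congruent": None})
        conditions.append({"label": f"face-{emotion}", "modality": "visual",
                           "face": emotion, "voice": None, "congruent": None})
    for face, voice in product(EMOTIONS, repeat=2):
        conditions.append({"label": f"{face}x{voice}", "modality": "audio-visual",
                           "face": face, "voice": voice,
                           "congruent": face == voice})
    return conditions


def trial_duration_s():
    """Total length of one trial: stimulus, response window, then rest."""
    return STIMULUS_S + RESPONSE_S + REST_S


if __name__ == "__main__":
    for condition in build_conditions():
        print(condition["label"], condition["modality"], condition["congruent"])
    print("trial duration (s):", trial_duration_s())
```

Under these assumptions the audio-visual part of the design has three congruent and six non-congruent pairings, and each trial lasts 10 s as stated in the text.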

3 RESULTS

For multimodal stimuli, a common pattern

between emotional conditions (both in HxH vs. NxN

and in AxA vs. NxN) is observed: a general

increase of the EEG power in peripheral areas in the

beta range.

In the non-congruent condition, specific effects are

observed (Figure 1):

- when comparing a congruent stimulus with a

non-congruent stimulus with a visual difference

(HxH vs. AxH), one can observe a distinct pattern: a

longitudinal shift of power in the alpha range

(increased in the frontal area, decreased in the

occipital area). The same shift is obtained in the beta

range, but only for the HxH vs. AxH condition.

- when comparing a congruent stimulus with a

non-congruent stimulus with an auditory difference

(HxH vs. HxA), another distinct pattern is visible: a

longitudinal shift of power in the beta range

(increased in the frontal area, decreased in the

occipital area).
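The longitudinal shift described in the two cases above can be expressed as a pair of regional power changes. The following is a minimal sketch, not the authors' analysis: the channel groupings, the `longitudinal_shift` and `is_front_to_back_shift` helpers, and the power values are all hypothetical, and the paper does not state which condition serves as the baseline of each comparison.

```python
from statistics import mean

# Hypothetical channel groupings with standard 10-20 labels; the paper does
# not list which electrodes it treated as frontal or occipital.
FRONTAL = ("Fp1", "Fp2", "F3", "Fz", "F4")
OCCIPITAL = ("O1", "Oz", "O2")


def longitudinal_shift(power_a, power_b):
    """Return (frontal_change, occipital_change) of condition B relative to A.

    Each argument maps a channel name to band power in arbitrary units.
    """
    def region_change(channels):
        return mean(power_b[ch] - power_a[ch] for ch in channels)

    return region_change(FRONTAL), region_change(OCCIPITAL)


def is_front_to_back_shift(power_a, power_b):
    """True when power rises frontally and falls occipitally from A to B."""
    frontal, occipital = longitudinal_shift(power_a, power_b)
    return frontal > 0 and occipital < 0


# Synthetic numbers for illustration only, not measured data.
condition_a = {"Fp1": 1.0, "Fp2": 1.0, "F3": 1.0, "Fz": 1.0, "F4": 1.0,
               "O1": 2.0, "Oz": 2.0, "O2": 2.0}
condition_b = {"Fp1": 1.5, "Fp2": 1.5, "F3": 1.5, "Fz": 1.5, "F4": 1.5,
               "O1": 1.5, "Oz": 1.5, "O2": 1.5}

if __name__ == "__main__":
    print(longitudinal_shift(condition_a, condition_b))
    print(is_front_to_back_shift(condition_a, condition_b))
```

Averaging within each region keeps the index robust to a single noisy electrode; a statistical test across subjects would still be needed before calling any shift significant.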

Finally, in all the non-congruent conditions, an

increase of activity is observed in the theta range, in

a right centro-temporal location (C4, CP4).

Figure 1: Illustration of the differences between the HxH

condition and the AxH and HxA conditions in the beta (12-25 Hz) range.
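The results above are stated in terms of EEG power within frequency ranges. The paper does not describe its spectral estimation method, so the following is only a sketch of one simple way to obtain band power for a single channel: a plain discrete Fourier transform summed over the bins inside a band. The beta range comes from the Figure 1 caption; the theta range, the sampling rate, and the `band_power` and `synthetic_epoch` helpers are assumptions made for illustration.

```python
import cmath
import math

BETA_BAND_HZ = (12.0, 25.0)   # range quoted in the Figure 1 caption
THETA_BAND_HZ = (4.0, 8.0)    # assumption: conventional range, not given in the paper
FS_HZ = 128.0                 # assumption: hypothetical sampling rate


def band_power(signal, fs, band):
    """Sum one-sided DFT power over bins with low <= frequency < high.

    DC and the Nyquist bin are skipped, so doubling each bin accounts for
    its negative-frequency mirror.
    """
    n = len(signal)
    low, high = band
    power = 0.0
    for k in range(1, (n + 1) // 2):
        freq = k * fs / n
        if low <= freq < high:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(signal))
            power += 2.0 * abs(coeff) ** 2 / n ** 2
    return power


def synthetic_epoch(duration_s=2.0, fs=FS_HZ):
    """A 20 Hz sine (amplitude 1) plus a 6 Hz sine (amplitude 0.5)."""
    n = int(duration_s * fs)
    return [math.sin(2 * math.pi * 20.0 * t / fs)
            + 0.5 * math.sin(2 * math.pi * 6.0 * t / fs)
            for t in range(n)]


if __name__ == "__main__":
    epoch = synthetic_epoch()
    print("beta power:", band_power(epoch, FS_HZ, BETA_BAND_HZ))
    print("theta power:", band_power(epoch, FS_HZ, THETA_BAND_HZ))
```

For the synthetic 2 s epoch, the beta value should be close to 0.5 and the theta value close to 0.125, the mean power of each sinusoid. On real recordings an averaged estimator such as Welch's method would usually be preferred over a single raw periodogram.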

4 DISCUSSION

We first analyzed congruent emotional

judgment. Emotional judgment is known to be

associated with neural correlates in the left and right

dorso-lateral prefrontal cortex (Nakamura, et al.,

1999; Ochsner, et al., 2002; Lange, et al., 2003;

Keightley, et al., 2003; Northoff, et al., 2004;

Grimm, et al., 2006). We indeed observed in the

congruent condition strong activations in the

prefrontal channels, especially in the alpha and beta

ranges. From our results, we observe an angry-visual

and happy-auditory preferential association. This

effect might be due to a well-known reaction of

preparation to danger (the Colavita visual

dominance effect, see e.g. van Damme, et al., 2009):

perception of angry emotion means a potential

danger, which would place the subject in a

preferential visual dominance mode. Threatening

facial expression also induces avoidance behaviour,

visible in eye-tracking (Rigoulot and Pell, 2012).

These effects might be correlates of the alpha range

increase in EEG power over the occipital area.

REFERENCES

Grimm, S., Schmidt, C. F., Bermpohl, F., Heinzel, A.,

Dahlem, Y., Wyss, M., 2006, Segregated neural

representation of distinct emotion dimensions in the

prefrontal cortex—an fMRI study. NeuroImage

30:325–340.

Hiyoshi-Taniguchi, K., Vialatte, F. B., Kawasaki, M.,

Fukuyama, H., Cichocki, A., 2011, Neurodynamics of

Emotional Judgments in the Human Brain. 4th

International Conference on Neural Computation

Theory and Applications (NCTA 2011), Barcelona,

Spain.

Keightley, M. L., Winocur, G., Graham, S. J., Mayberg,

H. S., Hevenor, S. J., Grady, C. L., 2003, An fMRI

study investigating cognitive modulation of brain

regions associated with emotional processing of visual

stimuli. Neuropsychologia 41:585–596.

Lange, K., Williams, L. M., Young, A. W., Bullmore, E.

T., Brammer, M. J., Williams, S. C. R., 2003, Task

instructions modulate neural responses to fearful facial

expressions. Biol Psychiatry 53:226–232.

LeDoux, J. E., 2000, Emotion circuits in the brain. Annu.

Rev. Neurosci., 23:155-184.

McGurk, H., MacDonald, J., 1976, Hearing lips and

seeing voices. Nature, 264(5588):746–748.

Nakamura, K., Kawashima, R., Ito, K., Sugiura, M., Kato,

T., Nakamura, A., 1999, Activation of the right inferior

frontal cortex during assessment of facial emotion. J

Neurophysiol 82:1610–1614.

Northoff, G., Heinzel, A., Bermpohl, F., Niese, R.,

Pfennig, A., Pascual-Leone, A., Schlaug, G., 2004,

Reciprocal modulation and attenuation in the

prefrontal cortex: An fMRI study on emotional–

cognitive interaction. Hum. Brain Mapp. 21:202–212.

Ochsner, K. N., Bunge, S. A., Gross, J. J., Gabrieli, J. D.,

2002, Rethinking feelings: An fMRI study of the

cognitive regulation of emotion. J Cogn Neurosci

14:1215–1229.

Rigoulot, S., Pell, M. D., 2012, Seeing emotion with your

ears: emotional prosody implicitly guides visual

attention to faces. PLoS One, 7(1):e30740.

Tsuchiya, N., Adolphs, R., 2007, Emotion and

consciousness. Trends Cogn Sci. 11(4):158-167.

Van den Stock, J., Grèzes, J., de Gelder, B., 2008,

Human and animal sounds influence recognition of

body language. Brain Research, 1242(25):185-190.

Van Damme, S., Crombez, G., Spence, C., 2009, Is visual

dominance modulated by the threat value of visual and

auditory stimuli? Exp. Brain Res., 193(2):197-204.
