Drowsiness Detection based on Video Analysis Approach

Belhassen Akrout, Walid Mahdi and Abdelmajid Ben Hamadou

Laboratory MIRACL, Institute of Computer Science and Multimedia of Sfax, Sfax University, Sfax, Tunisia

Keywords: Drowsiness Detection, Multi-scale Analysis, Circular Hough Transform, Haar Features, Wavelet Decomposition, Geometric Features.

Abstract: Lack of concentration due to driver fatigue is a major cause of the high number of accidents. This article describes a new approach that automatically detects reduced alertness with a system based on video analysis, in order to warn the driver and thereby reduce the number of accidents. Our approach is based on the temporal analysis of the opening and closing state of the eyes. Unlike many other works, our approach relies only on the analysis of geometric features captured from face video sequences and does not need any element attached to the driver.

1 INTRODUCTION

Many efforts have been made to detect driver drowsiness. Drowsiness is an intermediate state between waking and sleeping in which a person risks falling asleep for a moment, with the eyes alternately closed and open. This state is involuntary and is accompanied by reduced alertness. According to a study by the SNCF (Guy et al., 2008), the characteristic signs of drowsiness are behavioral signals such as yawning, decreased reflexes, heavy eyelids, itchy eyes, a desire to close the eyes for a moment, a need to stretch, a desire to change position frequently, phases of "micro-sleep" (about 2-5 seconds), a lack of memory of the last stops and trouble keeping the head up. In the literature, many systems based on video analysis have been proposed for drowsiness detection. Special attention is given to measures related to the speed of eye closure. Indeed, analyzing the visible surface of the iris, which changes with the state of the eye in the video, allows eye closure to be determined (Rajinda et al., 2011); (Horng et al., 2004). Other work measures the distance between the upper and lower eyelids in order to locate eye blinks. This distance decreases when the eyes close and increases when they open (Tnkehiro et al., 2002) (Masayuki et al., 1999) (Hongbiao et al., 2008) (Yong et al., 2008). These so-called single-variable approaches can warn the driver of reduced alertness in the case of prolonged eye closure. The duration of eye closure used as an indicator varies from one work to another. For Horng (Horng et al., 2004), the driver is considered to be dozing if the eyes stay closed for 5 consecutive images. Hongbiao (Hongbiao et al., 2008) considers alertness to be reduced if the distance between the eyelids is less than 60% for a period of 6.66 seconds. Yong (Yong et al., 2008) divides the state of eye opening into three categories (open, half open, closed). With this division, the driver is judged drowsy if the eyes stay closed for more than four consecutive images or move from the half-open state to the closed state over eight successive images. The reported fatigue detection rates also vary in the literature. Yong reached an average correct rate of 91.16% for recognizing the state of the eyes. Horng reports that the average accuracy of fatigue detection can reach 88.9%. Wenhui (Wenhui et al., 2005) achieved a correct detection rate of 100%. Most of these works compute their results from studies on two to ten subjects (four individuals for Horng (Horng et al., 2004) and only two for Yong (Yong et al., 2008)).
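As an illustration of the single-variable rules surveyed above, the following minimal sketch flags dozing when the eyes remain closed for a given number of consecutive images, as in the 5-image criterion attributed to Horng. The function names and the boolean per-frame input are assumptions made for this example; the cited works do not specify an implementation.

```python
from typing import Sequence


def longest_closed_run(closed: Sequence[bool]) -> int:
    """Length of the longest run of consecutive closed-eye frames."""
    longest = current = 0
    for is_closed in closed:
        # Extend the current run on a closed frame, reset it otherwise.
        current = current + 1 if is_closed else 0
        longest = max(longest, current)
    return longest


def is_dozing(closed: Sequence[bool], min_frames: int = 5) -> bool:
    """True when the eyes stay closed for at least min_frames consecutive frames."""
    return longest_closed_run(closed) >= min_frames


frames = [False, True, True, False, True, True, True, True, True]
print(is_dozing(frames))  # True: the last run contains 5 closed frames
```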

Akrout B., Mahdi W. and Ben Hamadou A.
Drowsiness Detection based on Video Analysis Approach.
DOI: 10.5220/0004210004130416
In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2013), pages 413-416
ISBN: 978-989-8565-47-1
Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

The second type of approach is called multi-variable. In this context, the maximum speed reached by the eyelid when the eye closes (velocity) and the amplitude of the blink, measured from the beginning of the blink to its maximum, are two indicators studied by Murray (Murray et al., 2005). The latter shows that the amplitude-velocity ratio (A/PCV) can be used to warn the driver one minute in advance. Picot presents a synthesis of different measures, such as the duration at 50%, the PERCLOS 80%, the blink frequency and the amplitude-velocity ratio. Picot (Picot et al., 2009) shows that these criteria are more relevant to the detection of drowsiness. These variables are calculated every second over a sliding window of 20 seconds. They are fused by fuzzy logic to improve the reliability of the decision. This study reports 80% good detections and 22% false alarms.
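The sliding-window computation mentioned above can be sketched for one of the variables, PERCLOS 80%, understood here as the fraction of time in the window during which the eye is at least 80% closed. This is only an illustrative sketch: the per-frame openness signal normalized to [0, 1], the function name and the parameter names are assumptions, and the fuzzy-logic fusion step is not covered.

```python
import numpy as np


def perclos_per_second(openness, fps, window_s=20.0, closed_fraction=0.8):
    """PERCLOS evaluated once per second over a trailing window.

    openness: per-frame eye opening in [0, 1], 1.0 meaning fully open
              (assumed normalization, not taken from the cited work).
    A frame counts as closed when the eye is at least closed_fraction closed.
    """
    openness = np.asarray(openness, dtype=float)
    closed = openness <= (1.0 - closed_fraction)
    window = int(round(window_s * fps))  # frames per window
    step = int(round(fps))               # one evaluation per second
    values = []
    for end in range(window, len(closed) + 1, step):
        values.append(closed[end - window:end].mean())
    return np.array(values)


# 30 s of video at 10 frames per second with a 5 s closure starting at t = 10 s.
signal = np.ones(300)
signal[100:150] = 0.1
print(perclos_per_second(signal, fps=10))
```

Each returned value is the share of closed frames in the preceding 20 seconds, so a sustained rise of this value over time would indicate increasingly long closures.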

The single-variable approach makes it possible to detect drowsiness, but only at a very advanced stage. We therefore focus on characteristics that allow the driver to be warned of fatigue before falling asleep, by analyzing the speed of eye closure. Among the multi-variable approaches, some methods are based on the analysis of the EOG signal. This kind of analysis requires technical cooperation between the hardware and the driver. Moreover, these methods use a wide range of parameters, which calls for more training data. Video-based approaches, on the other hand, rely on the segmentation of the iris in order to extract the features for the subsequent steps. When infrared cameras are used, the iris segmentation is computed from image differences. The drawback of such a method is its sensitivity to luminance noise. In this context, the Circular Hough Transform is used to localize the iris. This method is robust with respect to the desired shape, adapts even to poor-quality or noisy images, and identifies all directions thanks to its polar description. Based on the aforementioned remarks, we propose in this paper an approach that determines the state of drowsiness by analyzing the behavior of the driver's eyes from a video.

2 PROPOSED APPROACH

Figure 1: Detection scheme of drowsiness.

This paper presents an approach for detecting the drowsiness of a driver by studying the behavior of the driver's eyes in real time with an RGB camera (Figure 1). This approach relies on a critical preliminary step: first the automatic detection of the face, then the detection of the box that encompasses both eyes.

2.1 Face and Eyes Location

In order to delimit the face and the eyes, our approach uses the object detector of Viola and Jones, a learning technique based on Haar features. This method (Viola and Jones, 2001) combines three concepts: the rapid extraction of features using an integral image, a classifier based on AdaBoost and a cascade structure.
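The first of these concepts, the integral image, can be illustrated with a short sketch. It shows why a rectangular sum, and therefore a Haar-like feature, costs only a few table lookups regardless of the rectangle size. The function names and the choice of a two-rectangle (upper minus lower) feature are assumptions for illustration; the boosted classifier and the cascade are not reproduced here.

```python
import numpy as np


def integral_image(gray):
    """Summed-area table padded with a zero first row and column."""
    gray = np.asarray(gray, dtype=np.int64)
    ii = np.zeros((gray.shape[0] + 1, gray.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = gray.cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels inside a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom, right] - ii[top, right] - ii[bottom, left] + ii[top, left]


def two_rect_feature(ii, top, left, height, width):
    """Two-rectangle Haar-like feature: upper half minus lower half."""
    half = height // 2
    upper = rect_sum(ii, top, left, half, width)
    lower = rect_sum(ii, top + half, left, half, width)
    return upper - lower


image = np.zeros((4, 4), dtype=np.uint8)
image[:2, :] = 10  # bright band above a dark band
print(two_rect_feature(integral_image(image), 0, 0, 4, 4))  # 80
```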

2.2 Iris and Both Eyelids Detection

Observing the eye, we note that it is characterized by horizontal contours, representing the eyelids and the wrinkles, and by vertical contours, such as those of the iris. Applying a two-scale Haar wavelet makes it possible to extract the vertical, horizontal and diagonal contours. The vertical contours are then used to localize the iris by applying the Circular Hough Transform. The wavelet lets us emphasize the contours that we want to detect. In our case, the second scale enhances the contours of the iris and of the two eyelids that are to be detected.

2.2.1 Edge Extraction based on 2D Haar Wavelet

Applying the Haar wavelet allows us to decompose the image and obtain the vertical and horizontal details used for the detection of the iris and both eyelids. The wavelet transform is characterized by its multi-resolution analysis. It is a very effective tool for noise reduction in digital images. Multi-resolution analysis also allows us to ignore certain contours and keep only the most representative ones.
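The two-scale decomposition can be sketched with plain array operations. The sketch below uses the averaging variant of the 2D Haar transform on 2x2 blocks, which is an assumption since the normalization is not specified in the text; the function names are likewise illustrative. Here the horizontal detail responds to horizontal edges (changes between rows) and the vertical detail to vertical edges (changes between columns).

```python
import numpy as np


def haar_level(image):
    """One level of the 2D Haar transform (averaging variant), even-sized input.

    Returns (approximation, horizontal, vertical, diagonal) sub-bands,
    each half the size of the input in both directions.
    """
    a = np.asarray(image, dtype=float)
    tl, tr = a[0::2, 0::2], a[0::2, 1::2]  # top-left, top-right of each 2x2 block
    bl, br = a[1::2, 0::2], a[1::2, 1::2]  # bottom-left, bottom-right
    approx = (tl + tr + bl + br) / 4.0
    horizontal = (tl + tr - bl - br) / 4.0  # top minus bottom: horizontal edges
    vertical = (tl - tr + bl - br) / 4.0    # left minus right: vertical edges
    diagonal = (tl - tr - bl + br) / 4.0
    return approx, horizontal, vertical, diagonal


def haar_two_scales(image):
    """Detail sub-bands of the first and second scale, plus the final approximation."""
    approx1, *details1 = haar_level(image)
    approx2, *details2 = haar_level(approx1)
    return details1, details2, approx2


# A synthetic "eyelid": dark above row 3, bright below (a horizontal edge).
eye = np.zeros((8, 8))
eye[3:, :] = 1.0
(h1, v1, d1), (h2, v2, d2), _ = haar_two_scales(eye)
print(np.abs(h1).max(), np.abs(v1).max())  # 0.5 0.0
```

In this example only the horizontal sub-bands respond, at both scales, which is the behavior exploited to separate eyelid contours from iris contours.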

2.2.2 Iris and the Two Eyelids Detection based on Circular Hough Transform

In general, the Hough transform (Cauchie et al., 2008) works with two spaces: the XY image space and a parameter space that varies according to the object to be detected. Our approach detects the iris by applying the Hough transform to the vertical details of the eye. Both eyelids are located by applying the Circular Hough Transform to the image of horizontal details of the Haar wavelet decomposition.
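The voting principle of the Circular Hough Transform can be sketched as follows: every edge pixel votes for all centers lying at a candidate radius from it, and the most voted cell of the parameter space gives the circle. This is a minimal sketch rather than the implementation used in our system; the function name, the binary edge mask (for instance a thresholded detail sub-band) and the list of candidate radii are assumptions.

```python
import numpy as np


def hough_circle(edge_mask, radii, n_angles=90):
    """Return (row, col, radius) of the circle that collects the most votes."""
    edge_mask = np.asarray(edge_mask, dtype=bool)
    rows, cols = edge_mask.shape
    ys, xs = np.nonzero(edge_mask)
    thetas = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    best = (0, 0, 0, -1)  # row, col, radius, votes
    for r in radii:
        acc = np.zeros((rows, cols), dtype=np.int32)  # accumulator for this radius
        # Candidate centers lie on a circle of radius r around each edge pixel.
        cy = np.rint(ys[:, None] - r * np.sin(thetas)[None, :]).astype(int).ravel()
        cx = np.rint(xs[:, None] - r * np.cos(thetas)[None, :]).astype(int).ravel()
        inside = (cy >= 0) & (cy < rows) & (cx >= 0) & (cx < cols)
        np.add.at(acc, (cy[inside], cx[inside]), 1)  # unbuffered add keeps repeated votes
        peak = np.unravel_index(np.argmax(acc), acc.shape)
        if acc[peak] > best[3]:
            best = (int(peak[0]), int(peak[1]), int(r), int(acc[peak]))
    return best[:3]


# Synthetic iris contour: a circle of radius 8 centered at row 20, column 25.
mask = np.zeros((40, 50), dtype=bool)
angles = np.linspace(0.0, 2.0 * np.pi, 200)
mask[np.rint(20 + 8 * np.sin(angles)).astype(int),
     np.rint(25 + 8 * np.cos(angles)).astype(int)] = True
print(hough_circle(mask, radii=[6, 8, 10]))
```

Because of pixel rounding, the detected center may differ from the true one by about a pixel; restricting the candidate radii to the range expected for an iris at the working resolution keeps the accumulator small.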

2.3 Geometric Features Extraction

Once the iris, the upper eyelid and the lower eyelid are detected, we can extract geometric features that characterize the state of drowsiness of a driver.

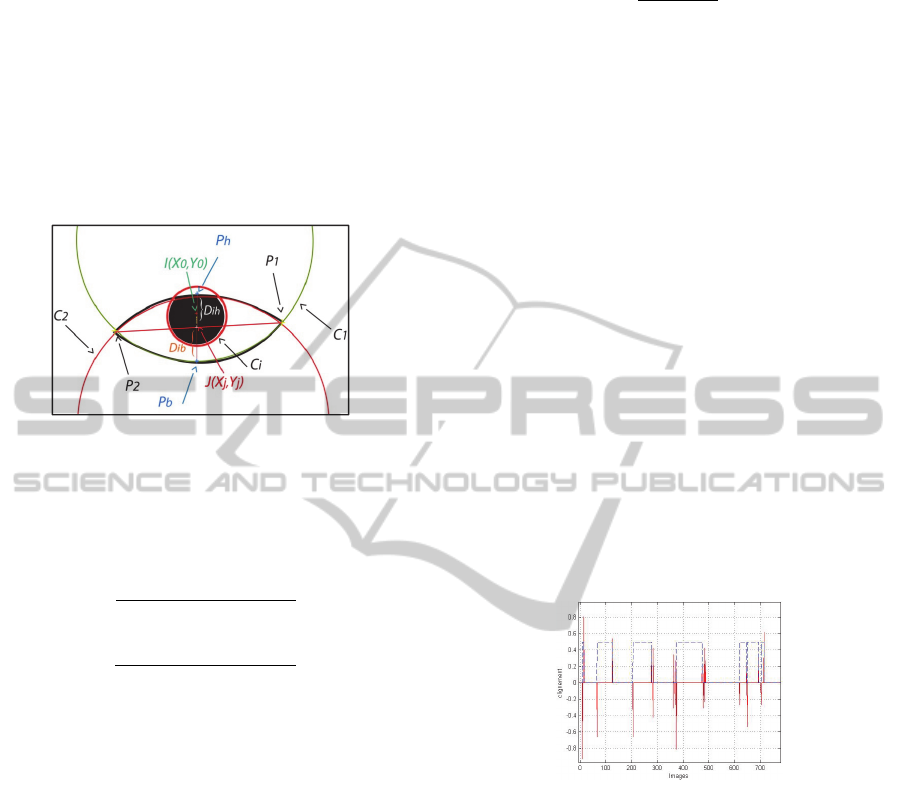

Figure 2: Representation of features from the figure of the eye.

We propose two geometric features (Figure 2). These features represent the distances between the point J and, respectively, two further points of the eye marked in Figure 2 (Equation 1).

(1)

Using the point J as the reference for the calculation, rather than the point I at the center of the iris, allows us to avoid calculation uncertainties. These uncertainties arise when the point I lies above the upper eyelid, which results in miscalculations of the feature D.

2.4 Experimental Study, Analysis of Eye Closure and Result of Blink Detection

In this section, we describe the experimental studies we conducted to validate the two features proposed previously. In order to produce realistic data, a human subject is placed in front of our system to simulate different possible movements of the head and the eyelids and different positions of the iris, as may occur in different states of fatigue. The first experiment studies the temporal variation of both features, normalized by the initial state of the eye (Equation 2).

(2)

The initial value V is calculated at the beginning of the algorithm, when the eyes are 75% open. The first analysis consists of determining the changes of state, namely the beginning of eye closure and the beginning of eye opening. The first derivative of the function reveals these variations, which are characterized by an abrupt negative change at the beginning of eye closure and by a sudden positive change at the beginning of eye opening. Experimentally, after analyzing the video recordings, we found that the derivative of the signal requires a low-pass filter to eliminate noise. A period is selected as a blink when the derivative falls below (closure) and then rises above (opening) a well-defined threshold. Sharabaty (Sharabaty et al., 2008) showed that the maximum duration of a normal blink is 0.5 seconds, whereas anything above this value is considered a prolonged closure. In our case, a normal or long blink is validated if it satisfies the two previous conditions and its duration exceeds 0.15 seconds. Figure 3 shows validated blinks, marked with dashed lines, and a non-validated one (between frames 479 and 490) on the derivative signal.

Figure 3: Validated blinks.
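The blink selection described in this section can be sketched as follows, assuming a normalized feature signal sampled at a known frame rate. The moving-average filter stands in for the low-pass filter, whose exact form is not specified, and the symmetric threshold, the function name and the parameter names are assumptions made for this illustration. The duration is measured here from the onset of closure to the end of the opening phase.

```python
import numpy as np


def detect_blinks(signal, fps, threshold, min_duration_s=0.15, smooth=3):
    """Return (start_frame, end_frame) pairs for the validated blinks."""
    signal = np.asarray(signal, dtype=float)
    deriv = np.diff(signal) * fps                      # first derivative per second
    kernel = np.ones(smooth) / smooth
    deriv = np.convolve(deriv, kernel, mode="same")    # moving-average low-pass filter
    blinks = []
    start = None
    for i, d in enumerate(deriv):
        if start is None and d < -threshold:
            start = i                                  # abrupt negative change: closure begins
        elif start is not None and d > threshold:
            end = i                                    # positive change: opening begins
            while end + 1 < len(deriv) and deriv[end + 1] > threshold:
                end += 1                               # follow the opening phase to its end
            if (end - start + 1) / fps > min_duration_s:
                blinks.append((start, end))            # long enough to be validated
            start = None
    return blinks


# Synthetic signal at 30 frames per second: one real blink and one short flicker.
sig = np.concatenate([np.ones(30), np.linspace(1.0, 0.2, 4)[1:], np.full(6, 0.2),
                      np.linspace(0.2, 1.0, 4)[1:], np.ones(30)])
sig[60] = 0.7  # single-frame dip, too short to be a blink
print(detect_blinks(sig, fps=30, threshold=2.0))  # [(28, 41)]
```

The single-frame dip crosses both thresholds but lasts less than 0.15 seconds, so it is rejected in the same way as the non-validated event of Figure 3.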

The purpose of the second experiment is to study the difference in eye-closing speed between a person in a normal state and a person in a drowsy state. Figure 9 shows the average eye-closing time of a normal person and of a sleepy one. Generally, for a tired individual, eye closure is slower than for a vigilant person. This measure can be used as a factor to determine the level of fatigue. The cumulative change (Equation 3) allows us to calculate the period during which the individual's eyes are closed.

′

(3)

Fatigue states are characterized by a continuous segment because the derivative varies very little. This time is lower than 0.5 seconds (Sharabaty et al., 2008) for a normal blink and greater than two seconds (Dinges et al., 1998) for a blink of an individual in a state of drowsiness. In brief, the three conducted experiments show the difference between an alert individual and a sleepy one by analyzing the variation of the two features D. Generally, states of drowsiness are detected by first locating the blinks, then studying the speed of blinking and finally calculating the duration of eye closure. The driver is considered to be in a state of drowsiness if the eye-closing time exceeds 1 second or the duration of eye closure is greater than 2 seconds.
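The final decision rule can be written as a short sketch using the thresholds quoted in this section (0.5 seconds for a normal blink, 1 second for the closing movement, 2 seconds for the closure). The function names, argument names and category labels are assumptions made for illustration.

```python
def classify_closure(closure_duration_s: float) -> str:
    """Label a closure using the 0.5 s and 2 s durations quoted in the text."""
    if closure_duration_s <= 0.5:
        return "normal blink"
    if closure_duration_s <= 2.0:
        return "prolonged closure"
    return "drowsy closure"


def is_drowsy(closing_time_s: float, closure_duration_s: float,
              max_closing_time_s: float = 1.0, max_closure_s: float = 2.0) -> bool:
    """Drowsy if the eyes close too slowly or stay closed too long."""
    return closing_time_s > max_closing_time_s or closure_duration_s > max_closure_s


print(is_drowsy(closing_time_s=0.2, closure_duration_s=0.3))  # False
print(is_drowsy(closing_time_s=1.4, closure_duration_s=0.8))  # True
```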

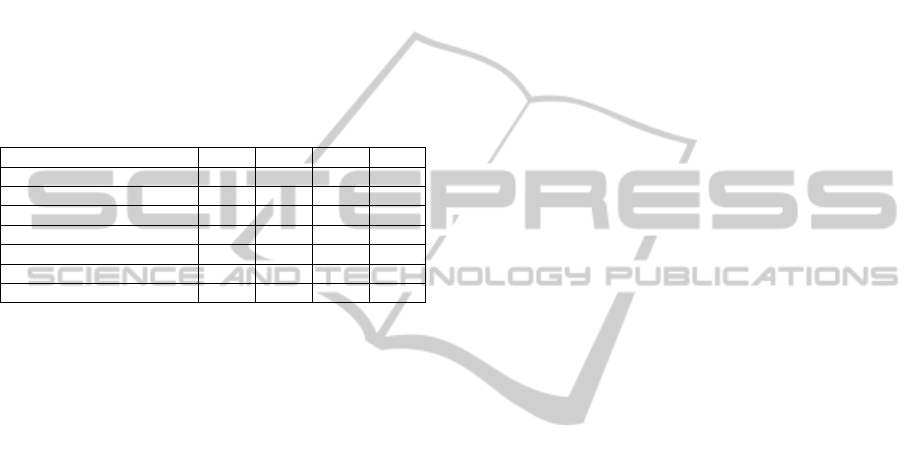

Table 1: Result of drowsiness detection.

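| Videos | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Real drowsiness | 9 | 7 | 4 | 8 |
| Generated alarm | 8 | 7 | 4 | 8 |
| False negative | 1 | 0 | 0 | 0 |
| False positive | 0 | 0 | 0 | 0 |
| Correct alarm | 8 | 7 | 4 | 7 |
| Correct rate of fatigue | 88% | 100% | 100% | 87% |
| Accuracy rate of fatigue | 100% | 100% | 100% | 100% |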

Table 1 shows an example of drowsiness detection results obtained on the four test videos. The opinion of an expert is essential at this step to determine the actual drowsiness of the driver. Our approach does not generate false alarms for the detection of fatigue in any of the videos. On the other hand, there are alarm errors for some states of fatigue, due to false detections of the iris that affect the detection of both eyelids and subsequently the values of the features.
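As a worked check of Table 1, the sketch below recomputes the "Correct rate of fatigue" row under the assumption that it is the number of correct alarms divided by the number of real drowsiness events, truncated to a whole percentage. This definition is not stated explicitly in the text; it is adopted here because it reproduces the reported values.

```python
real_drowsiness = [9, 7, 4, 8]  # "Real drowsiness" row of Table 1
correct_alarms = [8, 7, 4, 7]   # "Correct alarm" row of Table 1


def correct_rate_percent(correct: int, real: int) -> int:
    """Correct alarms as a truncated percentage of real events (assumed definition)."""
    return 100 * correct // real


rates = [correct_rate_percent(c, r) for c, r in zip(correct_alarms, real_drowsiness)]
print(rates)  # [88, 100, 100, 87]
```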

3 CONCLUSIONS

This paper presents an approach to the detection of reduced alertness based on video analysis. Our system studies the eyes by analyzing videos of several subjects. Drowsiness detection begins by locating the driver's face and eyes using Haar features. The Circular Hough Transform then detects the center of the iris and the intersection points of both eyelids (Figure 2) in order to capture two geometric features. Blink detection, blink frequency and the duration of eye closure are major factors in determining the fatigue of an individual. The orientation of the face, other facial features such as yawning, and the tracking and 3D pose estimation of the face are also indicators of the state of vigilance of an individual. These data are the subject of our future work in order to improve the obtained results.

REFERENCES

Cauchie, J., Fiolet, V., Villers, D. (2008). Optimization of an Hough transform algorithm for the search of a center. Pattern Recognition. USA.

Dinges, D., Mallis, M., Maislin, G., Powell, J. (1998). Evaluation of techniques for ocular measurement as an index of fatigue and the basis for alertness management. Department of Transportation Highway Safety. USA.

Hongbiao, M., Zehong, Y., Yixu, S., Peifa, J. (2008). A Fast Method for Monitoring Driver Fatigue Using Monocular Camera. Proceedings of the 11th Joint Conference on Information Sciences. China.

Horng, W., Chen, C., Chang, Y. (2004). Driver fatigue detection based on eye tracking and dynamic template matching. IEEE International Conference on Networking, Sensing & Control. USA.

Masayuki, K., Hideo, O., Tsutomu, N. (1999). Adaptability to ambient light changes for drowsy driving detection using image processing. UC Berkeley Transportation Library.

Murray, J., Andrew, T., Robert, C. (2005). A new method for monitoring the drowsiness of drivers. International Conference on Fatigue Management In Transportation Operations. USA.

Picot, A., Caplier, A., Charbonnier, S. (2009). Comparison between EOG and high frame rate camera for drowsiness detection. IEEE Workshop on Applications of Computer Vision. USA.

Rajinda, S., Budi, J., Sara, L., Arthur, H., Saman, H., Peter, F. (2011). Comparing two video-based techniques for driver fatigue detection: classification versus optical flow approach. Machine Vision and Applications. USA.

Tchernonog, G., Pelle, B., Larbi, M. (2008). Expertise CHSCT Unité de production de Brétigny conduite. France.

Viola, P., Jones, M. (2001). Rapid object detection using a boosted cascade of simple features. CVPR. Kauai. USA.

Wenhui, D., Xiuojuan, W. (2005). Driver Fatigue Detection Based On The Distance Of Eyelid. IEEE Workshop VLSI Design & Video Tech. China.

Yong, D., Peijun, M., Xiaohong, S., Yingjun, Z. (2008). Driver Fatigue Detection based on Eye State Analysis. Proceedings of the 11th Joint Conference on Information Sciences. China.
