Dynamic Scenario Adaptation Balancing Control, Coherence and Emergence

Camille Barot, Domitile Lourdeaux and Dominique Lenne

Heudiasyc - UMR CNRS 7253, Université de Technologie de Compiègne, 60200 Compiègne, France

Keywords:

Planning, Virtual Environments for Training.

Abstract:

As the industrial world grows more complex, virtual environments have proven to be useful tools for training workers in procedures and work situations. To ensure learning and motivation, pedagogical control of these environments is needed. However, existing systems either do not provide control over running simulations, limit user agency, require authors to specify all possible scenarios a priori, or allow incoherent behaviours from the simulated technical system or the virtual characters. In this paper, we propose a model for dynamic and indirect control of the events of a virtual environment. Our model aims to ensure the control, coherence, and emergence of situations in virtual environments designed for training in highly complex work situations.

1 INTRODUCTION

With the growing complexity of work situations and procedures, training has become a key issue in the industrial world. In the past decade, there has been an increasing interest in the use of virtual reality environments for training. Realistic simulation of work situations allows for efficient training, especially when cost, accessibility, or danger prevents learners from being put in genuine work situations. However, the framed simulations that are used to train workers in technical gestures and standard procedures are no longer sufficient to answer all training needs, especially when moving beyond initial training to address the continuous training of experienced workers, or when critical domains such as risk management are concerned. In such cases, the virtual environment scenario has to be controlled dynamically according to pedagogical rules, in order to provide interesting and relevant situations adapted to the learner’s profile and needs. Yet controlling such complex environments is far from trivial, and existing systems often have to make trade-offs on either the control, the coherence (i.e. perceived consistency in characters’ motivations and technical system reactions), or the emergence of new situations. We believe that these three aspects need to be respected so that the virtual environments can be used for real training sessions. Therefore, we propose a scenario adaptation process that allows our system to control the unfolding of events in highly complex simulations containing autonomous virtual characters, while keeping the global scenario and individual behaviours coherent. Moreover, this process is dynamic, so that the adaptation can happen during the course of the simulation, to cope with learners’ agency and fit their evolving training needs. After presenting the limits of existing systems, we present our model for dynamic scenario adaptation. We then illustrate our proposal with a scenario example and discuss its limitations. Finally, we conclude and outline the perspectives we foresee for this work.

2 RELATED WORK

Most virtual environments designed for training or educational purposes rely on a pedagogical scenario, which is a sequence of learning activities the user has to perform, whether a predetermined sequence of scenes (Magerko et al., 2005) or a prescribed procedure the user has to execute (Mollet and Arnaldi, 2006). Yet, in order to stay within the boundaries of these predefined paths, these virtual environments offer strong guidance to the trainee, often stopping them whenever they make a mistake or perform an action that does not belong to the training scenario. By limiting the user’s freedom of action, these systems prevent trial-and-error approaches. Moreover, scenario definition requires a large amount of work when the training addresses long procedures or complex situations.

Barot C., Lourdeaux D. and Lenne D.

Dynamic Scenario Adaptation Balancing Control, Coherence and Emergence.

DOI: 10.5220/0004213802320237

In Proceedings of the 5th International Conference on Agents and Artificial Intelligence (ICAART-2013), pages 232-237

ISBN: 978-989-8565-39-6

Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

On the other hand, some environments focus on the simulation part by opting for the “sandbox” approach. They let the user act freely as the simulation evolves and reacts to their actions (Shawver, 1997). In these environments, the only pedagogical control is that of the initial state of the world. However, without any real-time pedagogical control, the efficiency of the training is not guaranteed. The simulation could go in any direction, yet we would want it to be relevant to the profile and current state of the trainee.

One approach for ensuring both user agency and pedagogical control is to define a multilinear graph of all possible scenarios. In (Delmas et al., 2007), the set of possible plots is thus explicitly modelled as a Petri net. However, when the complexity of the situations scales up, it becomes difficult to predict all possible courses of action. Especially when the training targets difficult coactivity situations, the decision-making processes and emotions expressed by the virtual characters have to be believable, and are therefore often based on complex psychological models. In this case, it becomes impossible to foresee all possible combinations, and the virtual characters have to be given some autonomy in order for the scenarios to emerge from their actions.

Combining autonomous characters with global scenario control is, however, fundamentally problematic: the controlling entity cannot influence the behaviour of autonomous characters unless they provide specific “hooks”. Indeed, most of the environments that include complex, emotional characters provide only semi-autonomous characters, as in Scenario Adaptor (Niehaus and Riedl, 2009). These characters can be given orders, either at the behavioural level or at a higher, motivational level. The main weakness of this approach is that nothing ensures that the global behaviour of the characters will stay coherent. Yet maintaining coherence is essential, especially in training environments, to ensure that the user understands what is going on, as shown by (Si et al., 2010).

Few systems combine global control of the simulation with the possibility for new situations to emerge from user actions or characters’ autonomous behaviour, all the while ensuring their coherence. An attempt to unite these different aspects has been made in Thespian (Si et al., 2009), by computing the characters’ motivations at the start of the simulation so that the events unfold according to a human-authored plot. However, this system does not allow dynamic scenario adaptation: rather than a predefined plot, we would like a plot that changes in real time according to which learning situations are considered relevant given the user’s activity.

3 PROPOSITION

3.1 Approach

As we aim to train for complex work situations with a notable human-factors component, we adopt a character-based approach, using autonomous cognitive characters in order for such situations to emerge from both their interactions and those of the learner. We propose a scenario adaptation module called SELDON (ScEnario and Learning situations adaptation through Dynamic OrchestratioN) that aims to ensure pedagogical control over a complex simulation, without restraining the emergence of new situations or disturbing the coherence of objects’ or characters’ behaviours. Our model lets the user act freely, and indirectly adjusts the unfolding of events. The scenario adaptation occurs not only at the start of the simulation but also during its course, by dynamically generating learning situations that are relevant to the learner’s profile and activity traces, then altering the scenario in real time to guide the learner towards these situations.

SELDON is composed of two modules: TAILOR

and DIRECTOR. TAILOR produces learning situa-

tions and constraints over the global scenario based

on the current state of the learner (Carpentier et al.,

2013). This paper focuses on DIRECTOR, which is

in charge of generating a scenario respecting these

constraints. The global scenario adaptation process

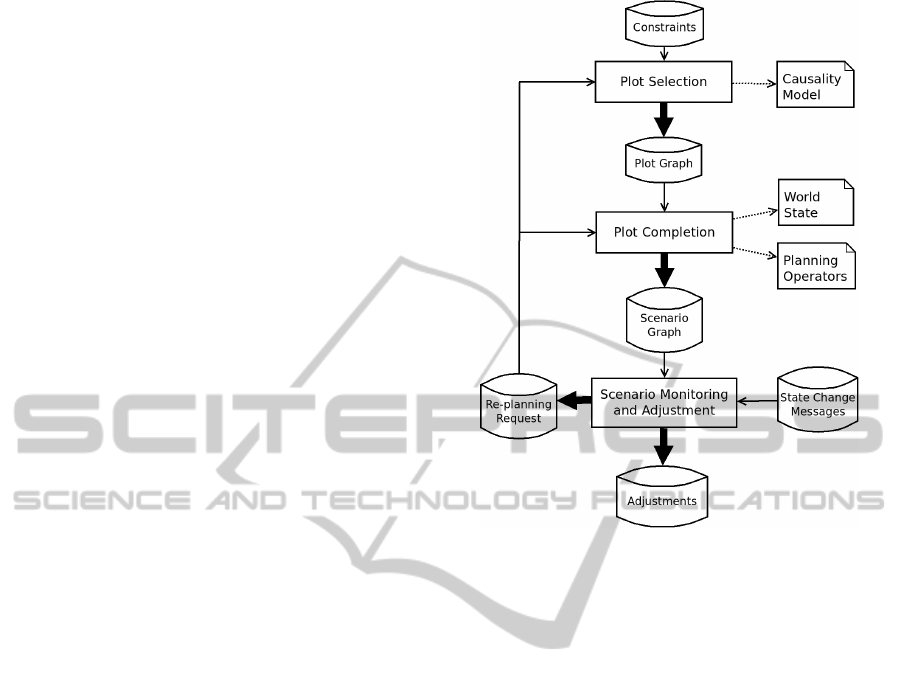

is described in Figure 1, here shown whithin the HU-

MANS platform (Carpentier et al., 2013).

Figure 1: System architecture.

3.2 Scenario Adaptation Process

The evolution of object states and the actions of virtual characters in the HUMANS platform are deterministic and ruled by a domain model and an activity model, both described in (Carpentier et al., 2013). As DIRECTOR uses the same set of models, it can predict the unfolding of events (i.e. state changes) from a given initial situation (i.e. a set of states), and thus control the scenario by changing this initial situation.

However, such adjustments could only happen at the start of the simulation, whereas some of the states might not have to be initialized right away. For instance, the broken state of a spring object is crucial in determining whether a leak occurs. However, until this leak happens, this state remains unknown to the learner, as it is not associated with a graphical representation. The late commitment (Swartjes, 2010) of such particular parameters can thus be used to direct the scenario dynamically. The system is then able to adapt to changes in the pedagogical objectives, and to cope with the user’s deviation from what had initially been planned.
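The late-commitment idea can be pictured as a world state in which some values stay open until the scenario needs them. The following is a minimal sketch under stated assumptions: the class `WorldState`, its methods and the state names `spring.broken` and `valve.open` are hypothetical, introduced for illustration only, and are not taken from the HUMANS platform.

```python
class LateCommitmentError(Exception):
    """Raised when a state cannot be committed or read."""


class WorldState:
    """World state in which some states stay undefined until committed."""

    def __init__(self, initial, late_committable):
        self._values = dict(initial)         # states fixed at start-up
        self._open = set(late_committable)   # states still undecided

    def is_open(self, state):
        return state in self._open

    def commit(self, state, value):
        # A state can be committed only once, and only if it was declared
        # late-committable (hypothetical rule of this sketch).
        if state not in self._open:
            raise LateCommitmentError(f"{state!r} is not open for commitment")
        self._open.remove(state)
        self._values[state] = value

    def get(self, state):
        if state in self._open:
            raise LateCommitmentError(f"{state!r} has not been committed yet")
        return self._values[state]


world = WorldState(initial={"valve.open": False},
                   late_committable={"spring.broken"})
world.commit("spring.broken", True)   # decided only when a leak is wanted
```

Because the learner cannot observe the spring before the leak, committing its value late does not contradict anything already shown in the simulation.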

The DIRECTOR module is in charge of producing a set of adjustments that adapt the scenario according to the constraints given by TAILOR. This process is presented in Figure 2: first, DIRECTOR selects a set of partially ordered plot points; then, these plot points are instantiated and used as landmarks to plan a scenario graph consisting of both the actions and behaviours of the simulation’s agents that are wanted in the scenario, and the adjustments needed for them to occur; finally, it tracks the changes in the environment in order to check that the events unfold according to the scenario, and triggers the adjustments when they are needed.

3.2.1 Input: Constraints

DIRECTOR takes as input two types of constraints:

1. Situation Constraints: the situations that the user should encounter, or that should be avoided. One of these situations is tagged as the goal situation. Each constrained situation s is associated with a desirability value des ∈ [−1, 1].

2. Metric Constraints: global constraints on the scenario, such as its complexity or believability (see Table 1). Each one is associated with an acceptable interval I ⊂ R and a strength str ∈ [−1, 1].
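The two kinds of constraints can be represented as small validated records. This is only a sketch: the class and field names are assumptions, and the desirability of the goal situation and the strength value used in the example are placeholders, since the text does not give them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SituationConstraint:
    situation: str            # name of a situation in the causality model
    desirability: float       # des in [-1, 1]; negative means "avoid"
    is_goal: bool = False

    def __post_init__(self):
        if not -1.0 <= self.desirability <= 1.0:
            raise ValueError("desirability must lie in [-1, 1]")


@dataclass(frozen=True)
class MetricConstraint:
    metric: str               # e.g. "complexity", "gravity", "believability"
    low: float                # acceptable interval I = [low, high]
    high: float
    strength: float = 1.0     # str in [-1, 1]; default is a placeholder

    def __post_init__(self):
        if self.low > self.high:
            raise ValueError("interval is empty")
        if not -1.0 <= self.strength <= 1.0:
            raise ValueError("strength must lie in [-1, 1]")

    def accepts(self, value: float) -> bool:
        return self.low <= value <= self.high


# Constraint set shaped like the beginner example of Section 4.
# The goal's desirability (1.0) is an assumed value.
beginner = [
    SituationConstraint("Fire", 1.0, is_goal=True),
    SituationConstraint("DomeLeak", -0.3),
    MetricConstraint("believability", 0.01, 0.03),
]
```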

3.2.2 Output: Adjustments

Figure 2: DIRECTOR scenario adjustment process.

To influence the simulation without modifying object states or giving orders to the virtual characters, DIRECTOR can request three types of adjustments:

1. Late Commitments: several states are marked as late-committable during the domain definition, and their initial values can be specified during the simulation. Those states can be object states (e.g. amount of liquid in a container) or character states (e.g. experience level of an agent);

2. Happenings: exogenous events (e.g. storm, phone call) can be triggered, as long as they do not have to be explained by the domain model;

3. Occurrence Constraints: depending on the granularity of the domain modeling, some behaviours can have uncertain effects that rely on a random draw (the spattering linked to a leak, for instance); DIRECTOR can constrain the occurrence or non-occurrence of such effects.
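An occurrence constraint can be pictured as an override of the random draw behind an uncertain effect. The function below is a hypothetical sketch, not the platform's mechanism. In particular, refusing to force an outcome whose probability is zero is an assumption made here to keep forced outcomes plausible.

```python
import random
from typing import Optional


def uncertain_effect(probability: float,
                     rng: random.Random,
                     constraint: Optional[bool] = None) -> bool:
    """Decide whether an uncertain effect occurs.

    constraint=None  -> ordinary random draw
    constraint=True  -> occurrence is forced
    constraint=False -> non-occurrence is forced
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    if constraint is None:
        return rng.random() < probability
    # Assumption of this sketch: only outcomes that the draw could have
    # produced on its own may be forced.
    if constraint and probability == 0.0:
        raise ValueError("cannot force an impossible effect")
    if not constraint and probability == 1.0:
        raise ValueError("cannot prevent a certain effect")
    return constraint


rng = random.Random(0)
spatter = uncertain_effect(0.2, rng, constraint=True)   # forced spattering
```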

3.2.3 Plot Selection

To reduce the computational cost, the scenario generation process is split into two phases: first, a plot is selected from a predefined causality model. Then, this plot is instantiated and completed through a planning process.

A plot is a partially ordered graph composed of

two types of plot points: events and situations. These

plot points are non-instanciated, referring to objects

types and not instances. DIRECTOR selects the plots

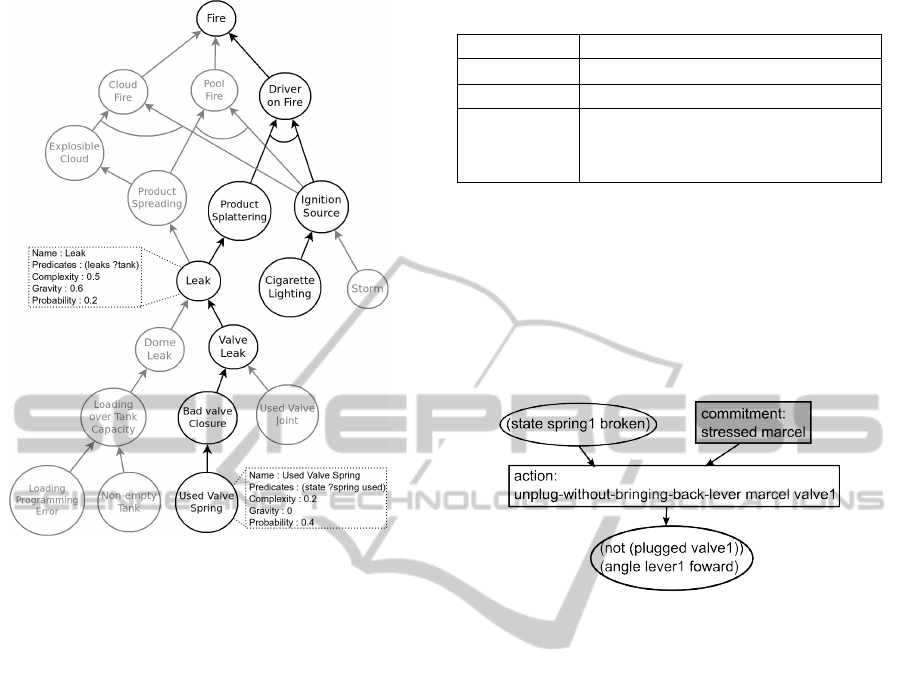

from a causality model (Figure 3) — an AND/OR

ICAART2013-InternationalConferenceonAgentsandArtificialIntelligence

234

Figure 3: Extract of a causality model and a selected plot.

graph created by risk-analysis experts, which con-

tains causal relationships between possible acciden-

tal events and unwanted situations, tagged with their

respective complexity, gravity, and relative probabili-

ties. Possible plot graphs are generated by expanding

the portion of the causal graph from the goal situa-

tion, splitting at every OR gate. These plot graphs

are evaluated regarding the situation constraints (de-

sirability of the situations appearing in the graph) and

the metric constraints given by TAILOR. One plot

is randomly selected between the plots with the best

evaluation.
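The expansion of the causality model into candidate plots can be sketched as a recursive enumeration: an OR gate yields one alternative per cause, and an AND gate combines one alternative for each of its causes. The encoding of the model as a dictionary, the function name `expand` and all node names other than Fire, DomeLeak and ValveLeak are assumptions of this sketch, which also assumes the graph is acyclic.

```python
from itertools import product

# Hypothetical causality model: node -> (gate, causes).
# Nodes absent from the dictionary are leaves.
MODEL = {
    "Fire": ("AND", ["Leak", "IgnitionSource"]),
    "Leak": ("OR", ["DomeLeak", "ValveLeak"]),
    "IgnitionSource": ("OR", ["Spark", "Cigarette"]),
}


def expand(node, model):
    """Return every plot rooted at `node` as a (nodes, arcs) pair.

    Arcs are (cause, effect) pairs.
    """
    gate, causes = model.get(node, ("LEAF", []))
    if not causes:
        return [(frozenset([node]), frozenset())]
    if gate == "OR":
        # Split: each cause gives rise to separate plots.
        return [(nodes | {node}, arcs | {(cause, node)})
                for cause in causes
                for nodes, arcs in expand(cause, model)]
    # AND: pick one sub-plot per cause and merge them.
    plots = []
    for combo in product(*(expand(cause, model) for cause in causes)):
        nodes = frozenset([node]).union(*(n for n, _ in combo))
        arcs = frozenset((cause, node) for cause in causes).union(
            *(a for _, a in combo))
        plots.append((nodes, arcs))
    return plots


plots = expand("Fire", MODEL)   # two OR gates with two causes each: 4 plots
```

The number of plots grows multiplicatively with the OR gates, which is one reason for selecting a plot before running the more expensive planning phase.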

Given the following representation of a plot:

$p \in P = \langle N_p, A_p \rangle$ is a plot, i.e. a set of partially ordered plot points

$N_p$ is a set of nodes (situations or events) $n$

$A_p$ is a set of causal arcs $a$

the evaluation functions of the currently implemented metric constraints are presented in Table 1.
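The three evaluation functions of Table 1 translate directly into code once a plot is held as a set of nodes and a set of arcs. In the sketch below, the orientation of arcs as (cause, effect) pairs, the reading of leaf nodes as nodes that are the effect of no arc, and all numeric tags are assumptions. The way the metrics are combined into a single evaluation is not specified in the text, so `select_plot` takes an arbitrary scoring function.

```python
import math
import random


def complexity(nodes, arcs, node_complexity):
    # |A_p| * sum of complexity(n) over all nodes of the plot
    return len(arcs) * sum(node_complexity[n] for n in nodes)


def gravity(nodes, node_gravity):
    # max of gravity(n) over all nodes of the plot
    return max(node_gravity[n] for n in nodes)


def believability(nodes, arcs, frequency):
    # product of F(n) over the leaf nodes of the plot
    effects = {effect for _, effect in arcs}
    return math.prod(frequency[n] for n in nodes if n not in effects)


def select_plot(plots, score, rng):
    # random choice among the plots with the best evaluation
    scores = [score(p) for p in plots]
    best = max(scores)
    return rng.choice([p for p, s in zip(plots, scores) if s == best])


# Hypothetical plot and tags, for illustration only.
NODES = {"Fire", "Leak", "DomeLeak", "IgnitionSource", "Spark"}
ARCS = {("Leak", "Fire"), ("IgnitionSource", "Fire"),
        ("DomeLeak", "Leak"), ("Spark", "IgnitionSource")}
COMPLEXITY = {"Fire": 3, "Leak": 2, "DomeLeak": 1,
              "IgnitionSource": 1, "Spark": 1}
GRAVITY = {"Fire": 0.9, "Leak": 0.4, "DomeLeak": 0.3,
           "IgnitionSource": 0.1, "Spark": 0.1}
FREQUENCY = {"DomeLeak": 0.1, "Spark": 0.2}

c = complexity(NODES, ARCS, COMPLEXITY)      # 4 arcs * (3 + 2 + 1 + 1 + 1)
g = gravity(NODES, GRAVITY)
b = believability(NODES, ARCS, FREQUENCY)    # F(DomeLeak) * F(Spark)
```

With these made-up tags, the believability is about 0.02, which would fall inside an acceptable interval such as [0.01, 0.03].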

3.2.4 Plot Completion

Once the plot is selected, the concepts it refers to are instantiated with regard to existing objects and agents in the current state of the world, so that each plot point contains a set of predicates that can be used as a goal by a planning system, in our case as landmarks (Porteous et al., 2010). However, unlike most planning-based systems, the resulting plan is not prescriptive, but serves as a prediction of the simulation behaviour. The aim of the completion phase is thus not to generate the optimal plan between two plot points A and B, but rather to compute the adjustments needed to bring the simulation from situation A to situation B, and to predict the autonomous agents’ actions from situation A given these adjustments.

Table 1: Metric Constraints.

| Type | Evaluation function |
|---|---|
| complexity | $\lvert A_p \rvert \ast \sum_{n \in N_p} \mathrm{complexity}(n)$ |
| gravity | $\max_{n \in N_p} \mathrm{gravity}(n)$ |
| believability | $\prod_{n \in N'_p} F(n)$, where $N'_p$ are the leaf nodes of $p$ and $F$ the statistical frequency of $n$ |

Figure 4: Scenario plan between two plot points.

Using actions as planning operators to compute a plan between A and B would offer no guarantee that the agents would actually follow this plan. On the other hand, using only adjustments would require computing a whole scenario prediction each time an operator is tried, to check whether this prediction contains situation B. Therefore, two types of operators are used:

1. Prediction Operators: they represent the actions and behaviours that will happen in the simulation. The action operators are generated from the activity model, and framed by preconditions so that, in a given situation, only one operator is applicable for a given agent (the one that the virtual character would select in the simulation). The simulation behaviours are generated from the domain model.

2. Adjustment Operators: they correspond to the commitments, happenings and occurrence constraints.

The scenario generation module iterates over the arcs between pairs of plot points to replace each one with a plan composed of these five types of operators (actions, behaviours, commitments, happenings and occurrence constraints). The goal situation, the current world states and the operators are fed to an external planner (here we use FF (Hoffmann, 2001)).
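The interplay between the two kinds of operators can be illustrated with a toy search. This is not the FF-based implementation: it swaps the external planner for a brute-force breadth-first search over adjustments, and it represents states as sets of facts. All operator names and facts are hypothetical. Prediction operators are applied deterministically until nothing changes, and adjustments are the only choice points.

```python
from collections import deque

# (name, preconditions, added facts); hypothetical operators
PREDICTIONS = [
    ("open_valve", {"loading_started"}, {"valve_open"}),
    ("leak_starts", {"valve_open", "spring_broken"}, {"leak"}),
]
# (name, added facts); hypothetical adjustments
ADJUSTMENTS = [
    ("commit_spring_broken", {"spring_broken"}),
    ("happening_phone_call", {"phone_ringing"}),
]


def predict(state):
    """Apply prediction operators until no new fact appears."""
    state, trace = set(state), []
    changed = True
    while changed:
        changed = False
        for name, pre, add in PREDICTIONS:
            if pre <= state and not add <= state:
                state |= add
                trace.append(name)
                changed = True
    return frozenset(state), trace


def plan(initial, goal):
    """Return a list of operator names reaching `goal`, or None.

    Breadth-first search, so the plan uses as few adjustments as possible.
    """
    start, trace = predict(initial)
    queue, seen = deque([(start, trace)]), {start}
    while queue:
        state, steps = queue.popleft()
        if goal <= state:
            return steps
        for name, add in ADJUSTMENTS:
            if add <= state:
                continue
            nxt, trace = predict(state | add)
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, steps + [name] + trace))
    return None


scenario = plan({"loading_started"}, {"leak"})
```

In this toy domain, the resulting plan interleaves a predicted action, one commitment and a predicted behaviour, and the irrelevant phone-call happening is left out.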

3.2.5 Scenario Monitoring and Adjustment

The generated scenario plan is translated into a directed acyclic graph, which makes it possible to monitor the execution of actions and behaviours and to trigger the commitments, happenings and occurrence constraints. Indeed, adjustments are not triggered when the scenario is generated, but when they are needed, so that the situation stays open as long as possible.
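A monitor over such a graph can be sketched as follows. The class, its methods and the node names are hypothetical, and "when they are needed" is read here as "once every predecessor of the adjustment in the graph has occurred", which is an assumption. An observed event that does not fit the graph raises an exception, standing in for whatever replanning the system would do.

```python
class ScenarioDeviation(Exception):
    """Raised when an observed event does not fit the scenario graph."""


class ScenarioMonitor:
    def __init__(self, predecessors, adjustments):
        self.predecessors = predecessors      # node -> set of required nodes
        self.adjustments = set(adjustments)   # nodes triggered by the monitor
        self.done = set()

    def _ready(self, node):
        return node not in self.done and self.predecessors[node] <= self.done

    def pending_adjustments(self):
        """Trigger, and return, the adjustments whose predecessors are done."""
        fired = []
        while True:
            ready = sorted(n for n in self.adjustments if self._ready(n))
            if not ready:
                return fired
            self.done.update(ready)
            fired.extend(ready)

    def observe(self, event):
        """Record an event seen in the simulation, then fire due adjustments."""
        expected = (event in self.predecessors
                    and event not in self.adjustments
                    and self._ready(event))
        if not expected:
            raise ScenarioDeviation(event)
        self.done.add(event)
        return self.pending_adjustments()


# Hypothetical scenario graph: open_valve -> commit_spring_broken -> leak_starts
PLAN = {
    "open_valve": set(),
    "commit_spring_broken": {"open_valve"},
    "leak_starts": {"commit_spring_broken"},
}
monitor = ScenarioMonitor(PLAN, adjustments={"commit_spring_broken"})
fired = monitor.observe("open_valve")   # the commitment becomes due only now
```

Until `open_valve` is observed, the spring state is left uncommitted, so a change of pedagogical objective before that point would not have to undo anything.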

4 EXAMPLES

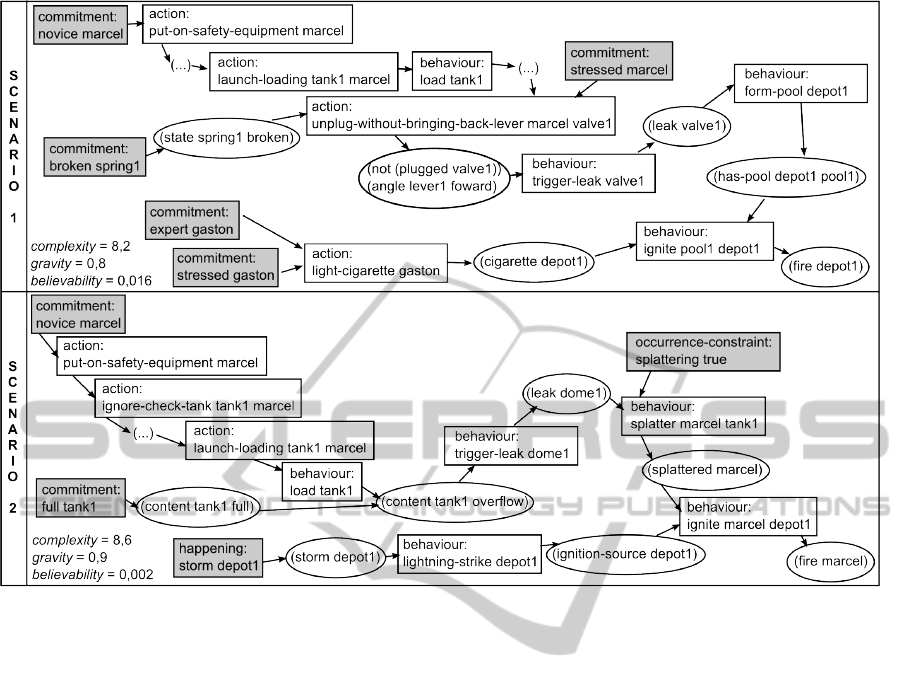

Figure 5 presents two examples of scenario generation for a hazardous matter loading training application (Barot et al., 2011), with two virtual characters, Marcel and Gaston. In the top example, the learner is a beginner; therefore, the set of constraints that DIRECTOR receives as input is:

• goal = Fire

• desirability(DomeLeak) = −0.3

• believability ∈ [0.01, 0.03]

DIRECTOR first selects a plot, represented by the elliptic nodes in the graph. This plot is instantiated with regard to the environment, and then completed with the planning operators, represented by the rectangular nodes in the graph. The white ones correspond to prediction operators, while the grey ones correspond to adjustment operators. The unfolding of events in the simulation will be monitored by DIRECTOR, and the planned commitments will be triggered. The second set of constraints is made for a more experienced learner:

• goal = Fire

• desirability(ValveLeak) = −0.8

• gravity ∈ [0.9, 1]

The generated scenario contains the three types of adjustments: commitments, happenings and occurrence constraints.

5 DISCUSSION

We proposed a model of a scenario adaptation process to control complex character-based simulations without limiting user agency or forcing incoherent character or object behaviours. Our aim was to balance control, emergence and coherence, and indeed:

• the scenario can be controlled by setting different

constraints over situations or global metrics;

• scenarios emerge from the domain, activity and causality models without having to be described explicitly, and different scenarios can emerge from different sets of constraints;

• the coherence of behaviours is ensured by the in-

direct adjustments.

For now, TAILOR can only provide DIRECTOR with situation constraints on situations that appear in the causality model, because the situation constraint filtering is done before the planning phase. Therefore, if an unwanted situation appeared between two selected plot points, the DIRECTOR module would allow it. Moreover, the linear scenario generation process does not make it possible to use the number of commitments needed to realize the scenario as a selection criterion. Yet this number should be minimized: the fewer commitments are made, the more possibilities are left for later adaptation. An iterative process mixing the plot selection and planning phases might solve these problems.

Another prospect concerns the prediction of learner actions. They are currently planned the same way as virtual characters’ actions, by considering the learner to be a virtual character with an “average” profile. It would be interesting to link the planning process with the learner monitoring module so that the prediction of user activity would be more accurate.

6 CONCLUSIONS

In this paper, we proposed a model to dynamically adapt the scenario of a virtual environment in a character-based, emergent approach. This model uses late commitment, exogenous events and occurrence constraints on uncertain consequences to influence characters’ and system behaviour without spoiling their coherence. Combinatorial explosion is dealt with by splitting the scenario generation process into two parts: first, the selection of a plot in a predefined plot graph, then the instantiation and completion of this plot according to the current world state.

A first prototype has been implemented inside the HUMANS software platform and seems to give satisfying results in generating coherent scenarios. However, only the scenario generation part is functional, and the execution and monitoring remain to be tested. For now, the relevance and diversity of the generated scenarios are limited by the linearity of the selection, instantiation and planning processes. The next version of the SELDON model will have to rely on a more iterative generation process in order to improve on this point.

Figure 5: Examples of scenario generation from two sets of constraints.

ACKNOWLEDGEMENTS

The authors would like to thank the PICARDIE region and the European Regional Development Fund (ERDF) for the funding of this work. We also thank Fabrice CAMUS for his work on the field analyses.

REFERENCES

Barot, C., Burkhardt, J.-M., Lourdeaux, D., and Lenne, D. (2011). V3S, a virtual environment for risk management training. In JVRC11: Joint Virtual Reality Conference of EGVE - EuroVR, pages 95–102.

Carpentier, K., Lourdeaux, D., and Mouttapa-Thouvenin, I. (2013). Dynamic selection of learning situations in virtual environment. In ICAART 2013.

Delmas, G., Champagnat, R., and Augeraud, M. (2007). Plot monitoring for interactive narrative games. In International conference on Advances in computer entertainment technology - ACE ’07, page 17.

Hoffmann, J. (2001). FF: the Fast-Forward planning system.

Magerko, B., Wray, R. E., Holt, L. S., and Stensrud, B. (2005). Improving interactive training through individualized content and increased engagement. In The Interservice/Industry Training, Simulation & Education Conference (I/ITSEC), volume 2005.

Mollet, N. and Arnaldi, B. (2006). Storytelling in virtual reality for training. In Technologies for E-Learning and Digital Entertainment, volume 3942, pages 334–347.

Niehaus, J. and Riedl, M. (2009). Scenario adaptation: An approach to customizing Computer-Based training games and simulations. In AIED 2009: 14th International Conference on Artificial Intelligence in Education Workshops Proceedings, page 89.

Porteous, J., Cavazza, M., and Charles, F. (2010). Applying planning to interactive storytelling: Narrative control using state constraints. ACM Trans. Intell. Syst. Technol., 1(2):10:1–10:21.

Shawver, D. M. (1997). Virtual actors and avatars in a flexible user-determined-scenario environment. In IEEE Virtual Reality Annual International Symposium, pages 170–171.

Si, M., Marsella, S., and Pynadath, D. (2009). Directorial control in a decision-theoretic framework for interactive narrative. Interactive Storytelling, pages 221–233.

Si, M., Marsella, S., and Pynadath, D. (2010). Importance of well-motivated characters in interactive narratives: an empirical evaluation. In Third joint conference on Interactive digital storytelling, pages 16–25.

Swartjes, I. (2010). Whose Story Is It Anyway. PhD thesis, University of Twente.
