Use of Biological Motion based Cues and Ecological Sounds in the Neurorehabilitation of Apraxia

Marta Bieńkiewicz¹, Georg Goldenberg², José M. Cogollor³, Manuel Ferre³, Charmayne Hughes¹ and Joachim Hermsdörfer¹

¹ Technische Universität München, Lehrstuhl für Bewegungswissenschaft, Uptown München-Campus D, Georg-Brauchle-Ring 60/62, D-80992 München, Germany

² Städtisches Klinikum München, Klinik für Neuropsychologie, Englschalkinger Straße 77, Munich 81925, Germany

³ Centro de Automática y Robótica, Universidad Politécnica de Madrid, ETSI Industriales, Madrid, Spain

Keywords: Apraxia, Dynamic Cues, Biological Motion, Ecological Sounds.

Abstract: Technological progress in the area of informatics and human interface platforms creates a window of opportunity for the neurorehabilitation of patients with motor impairments. The CogWatch project (www.cogwatch.eu) aims to create an intelligent assistance system to improve motor planning and execution in patients with apraxia during their daily activities. Due to brain damage caused by a cardiovascular incident, these patients suffer from impairments in the ability to use tools and to sequence actions during daily tasks (such as making breakfast). Based on the common coding theory (Hommel et al., 2001) and mirror neuron primate research (Rizzolatti et al., 2001), we aim to explore the use of cues that incorporate aspects of biological motion from healthy adults performing everyday tasks requiring tool use, and ecological sounds linked to the action goal. We hypothesize that patients with apraxia will benefit from supplementary sensory information relevant to the task, which will reinforce the selection of the appropriate motor plan. Findings from this study will determine the type of sensory guidance in the CogWatch interface. The rationale for the experimental design is presented and the relevant literature is discussed.

1 INTRODUCTION

Smart prompting technologies play an increasing

role in providing daily assistance to people suffering

from compromised cognitive functioning (Seelye et

al., 2011). The aim of the proposed study is to

investigate the application of dynamic cues based on

biological motion and ecological sounds to improve

daily activities in a group of apraxic stroke

survivors. In its simplest form, apraxia can be

defined as a loss of the ability to use tools or

perform hand gestures, and is typically caused by

brain tissue loss in the parietal and frontal lobe areas

of the left hemisphere (Goldenberg et al., 2007).

Recent research conducted in the UK suggests that approximately 24% of stroke survivors have persistent signs of apraxia (Bickerton et al., 2012).

Another group of patients that have difficulties with

daily activities are those with action disorganisation

syndrome (ADS). ADS patients typically suffer from

bilateral frontal brain damage and have similar

deficits to apraxic patients when performing daily

activities (Cooper et al., 2005). Increasing the

independence of apraxia and ADS patients during

everyday tasks (such as making tea, grooming, and

eating) is a matter of priority for patients, their

caregivers, and clinicians (Hazell, 2012).

2 BACKGROUND

2.1 Apraxia as a Stroke Consequence

Apraxia is a neurological sign of brain damage,

behaviourally observed as the inability to perform

skilled, well-learned motor acts. Those acts might be

transitive (i.e. involving tools or multi-step actions,

such as sawing a piece of wood or teeth brushing) or

intransitive (e.g., pantomime of gestures and

imitation tool use) (Gross and Grossman, 2008).

These two subtypes of apraxia are classified separately, as conceptual apraxia and ideomotor apraxia respectively, but they may co-occur. An important feature of apraxia is that it is independent of other sensory or motor problems (such as paresis or spasticity) that might occur as a consequence of stroke damage. Due to apraxia, patients are prone to conceptual, spatial and temporal errors during daily activities that can lead to potential health and safety issues (e.g., grasping a knife by the sharp end, or pouring boiling water onto the kitchen worktop). Common errors include problems with sequencing in multistep actions (e.g., action addition, omission, anticipation and perseveration errors) along with conceptual errors (e.g., misuse of objects, object substitution, hesitation, toying and mislocation) (Petreska et al., 2007). The difficulty with the use of objects is a source of frustration for patients, making them rely on caregivers for help in everyday life. This loss of independence compounds the problems associated with stroke, and makes the consequences of apraxia more debilitating (Hanna-Pladdy et al., 2003).

Bieńkiewicz M., Goldenberg G., Cogollor J. M., Ferre M., Hughes C. and Hermsdörfer J.
Use of Biological Motion based Cues and Ecological Sounds in the Neurorehabilitation of Apraxia.
DOI: 10.5220/0004237902210227
In Proceedings of the International Conference on Health Informatics (HEALTHINF-2013), pages 221-227
ISBN: 978-989-8565-37-2
Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

Although clinicians have established a set of

well-developed assessment tools to trigger apraxic

behaviour, the underlying mechanisms of error

production are still not well understood (Goldenberg et al., 1996). The cognitive aspect of apraxia (i.e., the loss of knowledge or memory of how the action is performed) is often accompanied by changes in the kinematic pattern of the movement in the unimpaired hand. In the latter case, features such as grip aperture, time to peak velocity, and deceleration phase have been identified as possible kinematic markers of apraxia (Laimgruber et al., 2005). These

difficulties, along with the loss of conceptual

knowledge, create a void that could be filled by

intelligent assistive technology that could facilitate

patients’ motor performance during everyday

activities.

Finding optimal cues (prospective information)

that could be implemented in the assistive system for

patients is one of the priorities of the CogWatch

project. In this paper we explore and compare static

and dynamic cues that could aid the daily

functioning of patients. Of particular interest is to

validate whether cues can provide information ahead

of time to facilitate the motor execution in patients

who exhibit impaired kinematic performance.

Dynamic cues can incorporate both spatial and

temporal aspects of the movement, or as in the case

of prompts, provide verbal instruction about the next

step of the action. In this study, we will investigate

the use of cues that account for both conceptual and

kinematic deficits in apraxia. This will be presented

in the following section.

2.2 The Aim of the CogWatch Project

The aim of the CogWatch project is to provide an

online prompting system that can be implemented in

the home setting (Giachristis and Randall,

submitted). This system comprises three technological modules: instrumented tools that provide feedback to the system indicating how an object is being manipulated, a CogWatch wrist-worn device that provides feedback about errors and prompting instructions to the patient, and a Virtual Task Execution (VTE) screen that provides prospective sensory guidance about the appropriate object and tool action and execution.

Additionally, the feedback system will be based on two types of cues: semantic feedback (i.e., information that an error was made, delivered via visual, sensorimotor and auditory channels) and dynamic cues that provide prospective information as to how the next step of the action should be performed (based on motion capture recordings of healthy individuals performing an action with the same objects and task scenario).

2.3 Prospective Cues

Cues are widely embedded in our everyday

environment, for example in traffic lights that signal

when it is a safe time to cross a road. The term ‘cue’

can be defined as external information relevant to

the movement execution (Horstink et al., 1993). In

general, cues are divided into sources of spatial and

temporal information. Gibson (1950) proposed that

the environment is built of structured arrays of

sensory information that we can perceive through

different sensory modalities. Spatial cues can

provide information about where to aim a movement

(e.g., target space on an object) whilst temporal cues

can provide information about when to execute the

movement (e.g. a metronome that triggers a “move

now” response). For example, if the goal of the

action is to grasp a moving object, successful

interception with the target requires the action to be

coupled to the motion path of the object. An object in

motion can provide continuous information on both

the spatial and temporal dimensions that directly

guides our motor planning. By comparison, a

stationary object can only provide spatial cues about

the current placement of the object without

additional temporal information. Using sensory

information to guide goal-directed actions relies on

the indirect sensorimotor pathways in the basal ganglia circuitry that are involved in motor learning

and interaction with the environment (Redgrave et

al., 2010).

Cues can be presented in all sensory modalities:

visual, acoustic, haptic, somatosensory (Sveistrup,

2004), and can be static or dynamic (Amblard et al.,

1985). So far, the cueing paradigm has been most effectively applied to the rehabilitation of motor impairments in Parkinson’s disease, such as gait (Nieuwboer et al., 2007), and of arm movements in hemiparesis following stroke (Thaut et al., 2002).

Since apraxia is a multifaceted syndrome, an

effective cueing method needs to prevent patients

from committing both conceptual and dynamic

errors during their task performance. We propose that cues based on biological motion recordings of healthy adults performing transitive and intransitive movements are a promising candidate for further exploration, as they have the potential to encapsulate both the motor concept and an efficient motor programme.

2.4 Two Lines of Exploration: Cues based on Biological Motion vs. Ecological Sounds

The aim of this study is to verify which cues are best

tailored to the needs of patients with apraxia

syndrome, based on the plethora of research

dedicated to action perception coupling. To do so,

we propose two paths of exploration. First, to test

the cues based on the biological motion of a healthy

adult performing the action (transitive and intransitive), incorporated in a simulation of a moving

avatar on the VTE screen. Second, to test the use of

ecological sounds linked to achieving the goal of the

action (e.g., the sound of the tooth brushing) alone

and incorporated in the animations.

2.4.1 Incorporating Biological Motion into Avatar Movement

The idea that the observation of another person’s

movement can activate motor representation stems

from the research on primate subjects conducted by

the Parmesan group of Rizzolatti (Rizzolatti and

Craighero, 2004); (Prinz, 1997). Researchers have

identified a class of neurons, referred in the literature

as ‘mirror neurons’ that are activated when one

performs a motor action, and when observing

another individual performing this action (primate

and human studies). Perception of the action of

others not only discharges neurons involved in

motor representation, but also consequently

facilitates acts that are congruent to the displayed

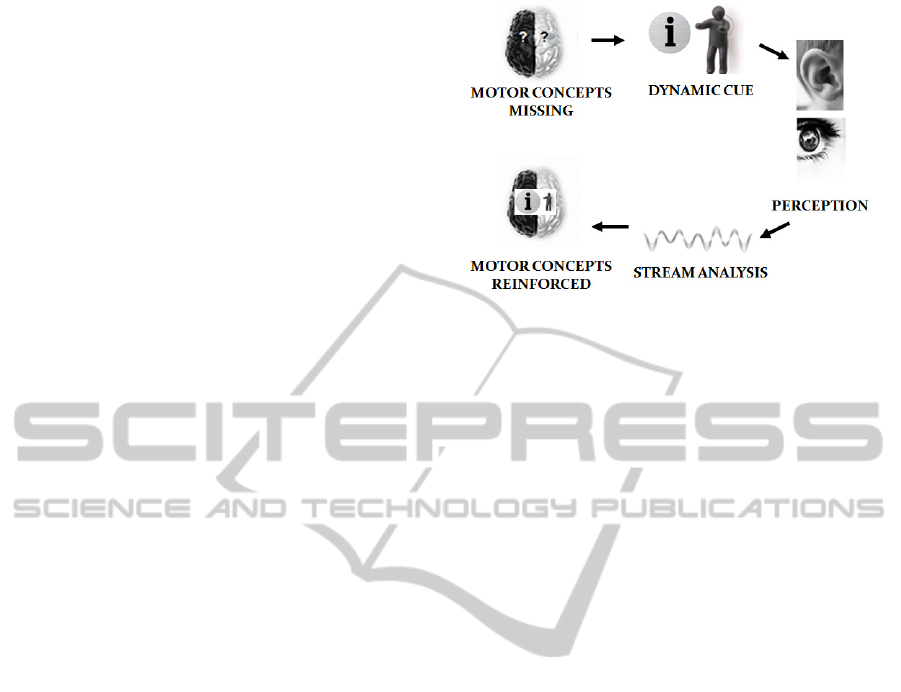

Figure 1: Illustration of the cueing paradigm for apraxia

patients and how relevant information about the motor

concept could be extracted from the dynamic cues of

different sensory modalities. Information perceived (for

example via visual channel) is analysed in terms of

temporal and spatial features and its relevance (meaning)

which feeds into missing motor concepts and movement

planning.

action performance and inhibits actions that are not

congruent with the observed motion (Christensen et

al., 2011). In primate research, the discharge of

mirror neurons was demonstrated to be linked to the availability of the goal of action, that is, it occurred for transitive actions only (Rizzolatti et al., 2001). In humans, however, the activity of the mirror neuron network is not determined by the goal of action, as intransitive acts can also elicit discharge of those neurons (Jackson et al., 2006; Fadiga et al., 1995; Tanaka and Inui, 2002). Interestingly, intransitive actions are

the first actions that are copied by human newborns

(Meltzoff and Moore, 1977). Gallese and Goldman

(1998) suggested that the mirror neuron network

plays a crucial part in motor learning in humans, as

it facilitates the acquisition of motor skills (such as

tool use) through imitation. In summary, there is a body of research suggesting that sensory information linked to the action in the environment is mapped onto the motor representation of this action (Rizzolatti et al., 2001). Usually, action observation imposes a 3rd person perspective (see Figure 2). However, Jackson et al. (2006) found a subtle difference (in terms of brain activation patterns) between observation and imitation of motor acts in humans, depending on the perspective of the person perceiving a motor event. Their work, based on fMRI investigation, suggests tighter links between the 1st person perspective and the sensorimotor system, compared to the 3rd person perspective, which requires additional transformation of the visuospatial perspective. In line with their findings, observing an action from a 1st person perspective does not require additional mental operations, and therefore might be better suited to imitation learning. Indeed, limited evidence from clinical apraxia research suggests that patients with apraxia make fewer motor errors in pantomime when an experimenter demonstrates the action while seated next to them, rather than vis-à-vis (Jason, 1983).

Figure 2: Illustration of the 1st and 3rd person perspective on the example of tooth brushing. The photo on the left illustrates the 1st person perspective (left hand), the photo on the right the 3rd person view (left hand).

The novel aspect of this study is to use biological

motion displays that provide temporal characteristics

of the movement that can be incorporated into motor

planning (see Figure 1). From the mirror neuron

perspective, the observation of an avatar performing

an action (e.g., tooth brushing) has the potential to

facilitate action performance in apraxia patients.

Limited research on the use of cues in apraxia

suggests that the addition of somaesthetic cues may

improve certain aspects of apraxic movement (de

Renzi et al., 1982). The supplementary information

prescribed by the cues might promote the selection

of an adequate motor program (Hermsdörfer et al.,

2006).

2.4.2 Ecological Sounds

Vision is the most intuitive sensory modality that allows us to interact with the environment and to regulate our movements (Goodale and Humphrey, 1998). However, recent scientific evidence suggests that vision, audition, and haptic modalities are partially interchangeable (Zahariev and MacKenzie,

2007). Humans are capable of identifying both the

size and shape of an object dropped onto a surface

using auditory feedback of the event, without any

visual information or previous knowledge of the

object (Lakatos et al., 1997; Grassi, 2005; Houben et al., 2005). The common coding approach

suggests that motor representations can be accessed

through different sensory modalities, as the sensory

representations are shared in the brain (Hommel et

al., 2001). Importantly, the previously mentioned research on mirror neurons also shows that these neurons discharge when action-related sounds are made available without the action being visible (Kohler et al., 2002; Keysers et al., 2003). Another

recent investigation has demonstrated that mirror

neurons can respond to newly acquired associations

between sounds (not relevant to action) and actions

via learning (Ticini et al., 2012). This suggests that

the human brain operates on a high-order sensory-

motor representation level, which is independent

from the afferent input and directed at the goal of

actions. In line with ideomotor theory, some authors

speculate that action goals are tangled with the

expected sensory feedback (Prinz, 1997).

Ecological sounds (i.e. sounds that are linked to

the goal of the production, such as the sound of a

nail hit by a hammer, a passing helicopter, or a

bouncing ball) contain spatio-temporal

characteristics that allow humans to successfully interact with the environment, for example to avoid colliding with a moving object (e.g., a passing car) or to intercept one (e.g., catching a ball). In

addition, ecological sounds have been demonstrated

to boost motor performance in Parkinson’s disease.

Young et al. (2012) used the sound of walking on

gravel, with different gait characteristics (e.g., stride

amplitude, cadence) to facilitate walking in people

with moderate to advanced Parkinson’s. In those

patients, an improvement in gait pattern was

observed when their steps were mapped to the sound

of walking, delivered via headphones.

In this study, we propose using sounds that are

associated with everyday actions – the sound of

tooth brushing, sawing and hammering. These cues

will be compared to verbal commands, avatar displays in the 1st and 3rd person (see Figure 2), and still pictures.

2.5 Experimental Design

In the pilot study phase, cues will be validated in a

group of five neurologically healthy adults. Further,

a group of 10 patients with recognised apraxia

features will be tested, along with 10 age-matched

controls to create baseline performance for the

patient group. Patients will be recruited from the

Klinik für Neuropsychologie in Städtisches

Klinikum München (STKM), Germany. Ethical

approval was granted for the study by a local

committee.

Control and patient groups will be tested under

three conditions:

A. Actual action execution

B. Pantomime with action object visible

C. Pantomime with action object not visible


Three daily tasks will be introduced:

Tooth brushing

Sawing a piece of wood

Hammering

The set of cues will comprise:

I. No cues, instruction of the task given before the task starts

II. Verbal prompts, step by step

III. Simulation, 1st person perspective

IV. Simulation, 3rd person perspective

V. Simulation, 1st person perspective plus sounds

VI. Simulation, 3rd person perspective plus sounds

VII. Sounds only

VIII. Still pictures sequence

IX. Still pictures sequence plus sounds
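The three lists above (conditions, tasks and cue sets) imply a fully crossed design. As a minimal sketch, assuming every task is performed under every condition with every cue set, the resulting blocks could be enumerated as follows. The variable and function names are illustrative and not part of the CogWatch software, and the order in which blocks are presented to a participant is not specified here.

```python
from itertools import product

# Labels taken from the lists above; the identifiers are hypothetical.
TASKS = ["tooth brushing", "sawing a piece of wood", "hammering"]
CONDITIONS = [
    "actual action execution",
    "pantomime with action object visible",
    "pantomime with action object not visible",
]
CUES = [
    "no cues (instruction given before the task)",
    "verbal prompts, step by step",
    "simulation, 1st person perspective",
    "simulation, 3rd person perspective",
    "simulation, 1st person perspective plus sounds",
    "simulation, 3rd person perspective plus sounds",
    "sounds only",
    "still pictures sequence",
    "still pictures sequence plus sounds",
]


def build_blocks():
    """Return one record per task x condition x cue combination."""
    return [
        {"task": task, "condition": condition, "cue": cue}
        for task, condition, cue in product(TASKS, CONDITIONS, CUES)
    ]


blocks = build_blocks()
print(len(blocks))  # 3 tasks x 3 conditions x 9 cue sets = 81
```

Under this assumption each participant would complete 81 blocks, which gives a sense of the session length that would need to be planned for.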

Participants will perform each of the tasks under the three conditions, with the set of nine cueing blocks for each condition. Motor performance will be recorded using high frequency video cameras and an ultrasonic motion capture system (Zebris). Pantomime and tool use will be assessed using the Goldenberg & Hagmann (1998) 2-point scale. The

following kinematic variables noted in the literature

as motor features of apraxia (Laimgruber et al.,

2005) will be analysed: movement time (MT), peak

velocity (PV), deceleration phase (DP) and grip

aperture (GA). In addition, errors will be categorized

according to the error classification proposed by

Schwartz et al. (1995). To observe how presentation

of cues can moderate motor planning in patients,

error corrections will be subdivided into two

categories: pre-error correction (e.g., hesitation

before performing a movement in a wrong spatial

position) and post-error correction (e.g., changing

the spatial position after the movement proved to be

ineffective). The number of errors committed and the kinematic features of the movement will be compared across conditions for each patient, and between the patient group and the age-matched controls.
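To make the kinematic variables concrete, the sketch below shows one possible way of deriving MT, PV, DP and GA from sampled marker positions. It is an illustration under stated assumptions, not the analysis pipeline of the study: the speed threshold for movement onset, the definition of DP as the proportion of MT spent after peak velocity, and all function names are assumptions made for this example.

```python
import math


def kinematic_summary(times, positions, onset_threshold=0.05):
    """Summarise one movement from sampled wrist positions.

    times: sample times in seconds (strictly increasing)
    positions: (x, y, z) wrist positions in metres, one per sample
    onset_threshold: speed in m/s above which the hand counts as moving
                     (assumed value, chosen for illustration only)
    """
    # Tangential speed over each interval between consecutive samples.
    speeds = []
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        speeds.append(math.dist(positions[i], positions[i - 1]) / dt)

    moving = [i for i, s in enumerate(speeds) if s > onset_threshold]
    if not moving:
        raise ValueError("no movement detected above threshold")
    start, end = moving[0], moving[-1]

    # speeds[i] covers the interval from times[i] to times[i + 1].
    t_start, t_end = times[start], times[end + 1]
    peak = max(range(start, end + 1), key=lambda i: speeds[i])
    t_peak = 0.5 * (times[peak] + times[peak + 1])

    movement_time = t_end - t_start
    return {
        "MT": movement_time,                       # movement time (s)
        "PV": speeds[peak],                        # peak velocity (m/s)
        "DP": (t_end - t_peak) / movement_time,    # share of MT after the peak
    }


def max_grip_aperture(thumb, index):
    """Largest thumb-to-index marker distance (m) over the movement."""
    return max(math.dist(t, i) for t, i in zip(thumb, index))
```

A relatively long deceleration phase or an altered grip aperture, compared with age-matched controls, would be the kind of difference such a summary is meant to expose.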

3 RESEARCH AIMS

The purpose of this research is to explore how

patients with apraxia can benefit from the

availability of artificial environmental sensory

information that can be harnessed for motor

planning. The rationale behind the study is based on

the assumption that patients will be able to extract

this information to aid their own cognitive and

kinematic deficits of tool use and gesture

production. The critical question is to define which

cues have the greatest potential to be utilised by

patients to prospectively guide their movements and

effectively be implemented in the CogWatch

interface.

In addition, the study aims to explore how perception of biological motion displays moderates behaviour in apraxia patients, depending on the perspective of perception (1st person versus 3rd person). To the best knowledge of the authors, this

study is the first to address this issue in individuals

with apraxia. We hypothesize that task performance

will improve in terms of the decreased number of

conceptual and motor errors committed when

dynamic cues are made available (the ones based on

the biological motion and ecological sounds), in

comparison to task performance when no cues are

available, or they are static and do not contain

biological movement patterns.

4 CONCLUSIONS

The work on this project is still ongoing and requires

detailed experimentation with selected stroke

survivors that show persistent signs of apraxia. On

the basis of the data analysis from the proposed

study, the most effective method of cueing action

use and pantomime will be implemented in the

CogWatch interface.

The current development of the CogWatch

system aims to provide a user-friendly, home-based

solution for stroke survivors that will improve their

degree of independence during activities of daily

living. In addition, the user-experience will be

enhanced by providing a customised interface for

each patient. The interface will be tailored to the

personal preferences and requirements, to increase

the comfort of interaction, ecological validity and

efficacy of the system. For example, the avatar used in the simulation will be personalised to resemble the patient and their home environment. The cueing

method will also be adjusted to the capabilities of

the patients, for example, whether the person is able

to move both arms or just one (hemiparesis). This

non-immersive system will allow the transfer of

information that is necessary to successfully

accomplish daily activities such as preparation of

food.

Importantly, the CogWatch system is designed to

be an entirely non-invasive and affordable solution

for the majority of patients. The technology implemented in the project so far (Kinect™ system

and VTE monitor) is relatively low cost and is under

continuous development by the manufacturers.

Furthermore, it offers flexibility in adjusting and

updating the interface to the changing needs of

patients (for example, partial recovery). Finally, the

CogWatch system is targeted at home-based

rehabilitation of the patients in a chronic phase of

the disease, when often they do not have further

access to rehabilitation from their medical providers.

The CogWatch system will deliver affordable and

easy access to therapeutic intervention at patients’

homes, which is the most comfortable and natural

setting, without a need for supervision.

An additional benefit for the patient is that the CogWatch system will be able to provide an online and objective assessment of the patient’s progress that can partially replace clinic visits (Giachristis and Randall, submitted). In sum, the CogWatch system will be driven by readily available and cost-efficient technology that can be customised to patient requirements in order to heighten user-friendliness.

ACKNOWLEDGEMENTS

This work was funded by the EU STREP Project

CogWatch (FP7-ICT-288912).

REFERENCES

Amblard, B., Cremieux, J., Marchand, A. R., & Carblanc,

A. (1985). Lateral orientation and stabilization of

human stance: Static versus dynamic visual cues.

Experimental Brain Research, 61(1), 21-37.

Bickerton, W., Riddoch, J., Samson, D., Balani, A., Mistry,

B., & Humphreys, G., (2012). Systematic assessment

of apraxia and functional predictions from the

Birmingham Cognitive Screen, Journal of neurology,

neurosurgery & psychiatry with practical neurology,

83 (5), 513-52.

Christensen, A., Ilg, W., & Giese, M. A. (2011).

Spatiotemporal tuning of the facilitation of biological

motion perception by concurrent motor execution. The

Journal of Neuroscience, 31(9), 3493-3499.

Cooper, R. P.; Schwartz, M.; Yule, P. & Shallice, T.

(2005). The simulation of action disorganisation in

complex activities of daily living. Cognitive

Neuropsychology 22 (8) 959-1004.

De Renzi, E., Faglioni, P., & Sorgato, P. (1982) Modality

specific and supramodal mechanisms of apraxia. Brain,

105, 301–312.

Fadiga, L., Fogassi, L., Pavesi, G., & Rizzolatti G. (1995)

Motor facilitation during action observation: a

magnetic stimulation study, Journal of

Neurophysiology, 73, 2608–2611.

Gallese, V., & Goldman, A. (1998). Mirror neurons and

the simulation theory of mind-reading, Trends in

Cognitive Sciences, 2(12), 493-501.

Giachristis, C., & Randall, G. (submitted) CogWatch:

Cognitive Rehabilitation for Apraxia and Action

Disorganization Syndrome Patients. 6th International

Conference of Health Informatics.

Gibson, J. J. (1950). Perception of the visual world.

Boston: Houghton Mifflin.

Goldenberg, G., Hermsdörfer, J., Glindemann, R., Rorden,

C., & Karnath, H. O. (2007). Pantomime of tool use

depends on integrity of left inferior frontal cortex.

Cerebral Cortex, 17, 2769-2776.

Goldenberg, G., & Hagmann, S. (1998) Therapy of

activities of daily living in patients with apraxia.

Neuropsychological Rehabilitation, 8(2), 123–41.

Goldenberg, G., Hermsdörfer, J., & Spatt, J. (1996).

Ideomotor apraxia and cerebral dominance for motor

control. Cognitive Brain Research, 3, 95-100.

Goodale, M. A., & Keith Humphrey, G. (1998). The

objects of action and perception. Cognition, 67(1-2),

181-207.

Grassi, M. (2005). Do we hear size or sound? balls

dropped on plates. Attention, Perception, &

Psychophysics, 67(2), 274-284.

Gross, R., & Grossman, M. (2008). Update on apraxia.

Current neurology and neuroscience reports, 8 (6),

490-496.

Hazell, A. (2012) CogWatch – Cognitive Rehabilitation of

Apraxia and Action Disorganisation Syndrome:

Assessing the requirements of Healthcare

Professionals, Stroke Survivors and Carers. UK Stroke

Forum Conference 2012 Proceedings.

Hanna-Pladdy, B., Heilman, K.M., & Foundas, A.L.

(2003) Ecological implications of ideomotor apraxia:

evidence from physical activities of daily living.

Neurology, 60, 487–490.

Hermsdörfer, J., Hentze, S., & Goldenberg, G. (2006)

Spatial and kinematic features of apraxic movement

depend on the mode of execution. Neuropsychologia,

44, 1642–1652.

Hommel, B., Müsseler, J., Aschersleben, G., & Prinz, W.

(2001). The theory of event coding (TEC): A

framework for perception and action planning.

Behavioral and Brain Sciences, 24(05), 849.

Horstink, M., De Swart, B., Wolters, E., & Berger, H.

(1993). Paradoxical behavior in Parkinson's disease.

In: E. C Wolters, & P. Scheltens (Eds). Mental

dysfunction in Parkinson's disease; proceedings of the

European congress on mental dysfunction in

Parkinson's disease. Amsterdam: Vrije Universiteit,

1993. Proceedings of the European Congress on

Mental Dysfunction in Parkinson's Disease.

Houben, M., Kohlrausch, A., & Hermes, D. (2005). The

contribution of spectral and temporal cues to the

auditory perception of size and speed of rolling balls.

Acta Acustica United with Acustica, 91(6), 1007.


Jackson, P., Meltzoff, A., & Decety, J. (2006) Neural

circuits involved in imitation and perspective-taking.

Neuroimage, 15 (31), 429-439.

Jason, G. W. (1983). Hemispheric asymmetries in motor

function: Left hemisphere specialization for memory

but not performance. Neuropsychologia, 21 (1), 35-45.

Keysers, C., Kohler, E., Umiltà, M.A., Nanetti, L.,

Fogassi, L., & Gallese, V. (2003). Audiovisual mirror

neurons and action recognition, Experimental Brain

Research, 153 (4), 628-636.

Kohler, E., Keysers, C., Umilta, M., Fogassi, L., Gallese,

V., & Rizzolatti, G. (2002). Hearing sounds,

understanding actions: action representation in mirror

neurons. Science, 297, 846–848.

Laimgruber, K., Goldenberg, G., & Hermsdörfer, J. (2005)

Manual and hemispheric asymmetries in the execution

of actual and pantomimed prehension,

Neuropsychologia, 43(5), 682-692.

Lakatos, S., McAdams, S., & Caussé, R. (1997). The

representation of auditory source characteristics:

Simple geometric form. Attention, Perception, &

Psychophysics, 59(8), 1180-1190.

Meltzoff, A. N., & Moore, K. (1977) Imitation of Facial

and Manual Gestures by Human Neonates, Science

198 (4312), 75-78.

Nieuwboer, A., Kwakkel, G., Rochester, L., Jones, D., van

Wegen, E., Willems, A. M. et al. (2007). Cueing

training in the home improves gait-related mobility in

Parkinson’s disease: The RESCUE trial. Journal of

Neurology, Neurosurgery & Psychiatry, 78(2), 134-

140.

Petreska, B., Adriani, M., Blanke, O. & Billard,

A. (2007) Apraxia: a review. In C. von Hofsten (Ed.).

From Action to Cognition. Progress in Brain Research.

Elsevier. Amsterdam. Vol. 164, pp. 61-83

Prinz, W. (1997). Perception and action planning.

European Journal of Cognitive Psychology, 9 (2),

129-154.

Redgrave, P., Rodriguez, M., Smith, Y., Rodriguez-Oroz,

M., Lehericy, S., Bergman, H., et al. (2010). Goal-

directed and habitual control in the basal ganglia:

Implications for Parkinson's disease. Nature Reviews.

Neuroscience, 11(11), 760-772.

Rizzolatti, G., Fogassi, L., & Gallese, V. (2001)

Neurophysiological mechanisms underlying the

understanding and imitation of action. Nature

Reviews. Neuroscience, 2(9), 661-670.

Rizzolatti, G., & Craighero, L. (2004). The mirror-neuron

system. Annual Review of Neuroscience, 27(1), 169-

192.

Schwartz, M. F., Montgomery, M. W., Fitzpatrick-DeSalme, E. J., Ochipa, C., Coslett, H. B., & Mayer, N.

H. (1995). Analysis of a disorder of everyday action.

Cognitive Neuropsychology, 12, 863-892.

Seelye, A., Schmitter-Edgecombe, M., Das, B., & Cook,

D. (2011). Application of Cognitive Rehabilitation

Theory to the Development of Smart Prompting

Technologies, Biomedical Engineering, IEEE

Reviews, 99, pp.1, 0.

Sveistrup, H. (2004). Motor rehabilitation using virtual

reality. Journal of NeuroEngineering and

Rehabilitation, 1(1), 10.

Tanaka, S., & Inui, T. (2002) Cortical involvement for

action imitation of hand/arm postures versus finger

configuration : An fMRI study, NeuroReport 13,

1599-1602.

Thaut, M. H., Kenyon, G. P., Hurt, C. P., McIntosh, G. C.,

& Hoemberg, V. (2002). Kinematic optimization of

spatiotemporal patterns in paretic arm training with

stroke patients. Neuropsychologia, 40(7), 1073-1081.

Ticini, L.F., Schutz-Bosbach, S., Weiss, C., Casile, A., &

Waszak, F. (2012). When sounds become actions:

higher-order representation of newly learned action

sounds in the human motor system. Journal of

Cognitive Neuroscience, 24(2), 464-474.

Young, W.R., Rodger, M.W.M., & Craig, C.M. (2012)

Using ecological event-based acoustic guides to cue

gait in Parkinson's disease patients [abstract].

Movement Disorders, 27 Suppl 1 :119.

Zahariev, M., & MacKenzie, C. (2007). Grasping at ‘thin

air’: Multimodal contact cues for reaching and

grasping. Experimental Brain Research, 180(1), 69-

84.
