A Tensor-based Clustering Approach for Multiple Document Classifications

Salvatore Romeo¹, Andrea Tagarelli¹, Francesco Gullo² and Sergio Greco¹

¹ DIMES Dept., University of Calabria, Cosenza, Italy

² Yahoo! Research, Barcelona, Spain

Keywords:

Document Clustering, Itemset Mining, Tensor Modeling and Decomposition.

Abstract:

We propose a novel approach to the problem of document clustering when multiple organizations are provided

for the documents in input. Besides considering the information on the text-based content of the documents,

our approach exploits frequent associations of the documents in the groups across the existing classifications,

in order to capture how documents tend to be grouped together orthogonally to different views. A third-order

tensor for the document collection is built over both the space of terms and the space of the discovered fre-

quent document-associations, and then it is decomposed to finally establish a unique encompassing clustering

of documents. Preliminary experiments conducted on a document clustering benchmark have shown the po-

tential of the approach to capture the multi-view structure of existing organizations for a given collection of

documents.

1 INTRODUCTION

Real-world data often presents inherent character-

istics that raise challenging issues to their effec-

tive analysis, namely high dimensionality and multi-

faceted nature. Tensor representation and decompo-

sitions (Cichocki et al., 2009; Kolda and Bader, 2009)

are natural approaches for handling large amounts of

such data. They are indeed considered as a multi-

linear generalization of matrix factorizations, since

all dimensions or modes are retained thanks to multi-

linear models which can produce unique and mean-

ingful components.

There exists a large variety of application domains

in which tensor representation and decompositions

are being increasingly applied, ranging from chemo-

metrics and psychometrics to signal processing, from

computer vision to neuroscience and image recogni-

tion (Kolda and Bader, 2009). The applicability of

tensor models has also attracted growing attention in

pattern recognition, information retrieval, and data

mining related fields to solve problems such as dimensionality reduction (Liu et al., 2005), link analysis (Kolda and Bader, 2006), and document clustering (Liu et al., 2011; Kutty et al., 2011). Focusing on the clustering task, advanced methods can go beyond the usual approach that yields a partition of the input dataset and produce overlapping, fuzzy, or probabilistic clusterings; however, many real-world clustering-based applications increasingly need to take into account some knowledge about the multi-faceted nature of a document collection, for which multiple predefined organizations might be available.

In this paper we are interested in extending the

task of document clustering, which is traditionally

performed according only to the textual content infor-

mation of the documents, to the case in which a sin-

gle clustering is desired starting from multiple orga-

nizations of the documents. Such existing document

organizations can be seen as multiple views over a

document collection which might correspond to user-

provided, possibly alternative organizations, or to the

results separately obtained by one or more document

clustering algorithms or supervised text classifiers.

For example, news articles can be clustered based on

the topics they discuss, or to reflect some existing cat-

egorization of major themes or meta-information they

are related to.

The underlying assumption of our approach is that, when the documents can be naturally grouped in multiple ways, a single new clustering encompassing all existing document organizations can be obtained by integrating the textual content information with knowledge on the available groupings of the documents. However, since no information about class labels of the available groupings is assumed to be available, our key idea to accomplish the task relies on the identification of frequent co-occurrences of documents in the groups across the existing organizations, in order to capture how documents tend to be grouped together orthogonally to the different views. Based on the discovered frequent associations of the documents as well as on the usual term-document representation of the text contents, a novel tensor model is built and decomposed to finally establish a unique clustering of documents that might be suited to reflect the multi-dimensional structure of the initial document organizations.

Romeo S., Tagarelli A., Gullo F. and Greco S. (2013). A Tensor-based Clustering Approach for Multiple Document Classifications. In Proceedings of the 2nd International Conference on Pattern Recognition Applications and Methods, pages 200-205. DOI: 10.5220/0004269102000205. Copyright © SciTePress.

To the best of our knowledge, no other existing

tensor-based approach for document clustering is con-

ceived to handle the availability of multiple organi-

zations for the documents in input. We would like

also to point out that, while the problem of extract-

ing a single clustering from multiple existing ones is

actually not novel—a large corpus of research in ad-

vanced data clustering has been developed to address

the problem of ensemble clustering (see (Ghosh and

Acharya, 2011) for an overview)—in this work we

face the problem from a different perspective, which

relies on a tensorial representation of a set of clus-

terings and also relaxes a main assumption in ensem-

ble clustering methods, whereby the feature relevance

values are assumed to be unavailable.

2 PROPOSED APPROACH

We are given a collection $\mathcal{D} = \{D_1, \ldots, D_{|\mathcal{D}|}\}$ of documents, which are represented over a set $\mathcal{V} = \{w_1, \ldots, w_{|\mathcal{V}|}\}$ of terms. We are also given a set of organizations of the documents in $\mathcal{D}$, denoted as $\mathcal{CS} = \{\mathcal{C}_1, \ldots, \mathcal{C}_H\}$, such that each $\mathcal{C} \in \mathcal{CS}$ represents a set of homogeneous groups of documents. We hereinafter generically refer to each of the document organizations as a document clustering and to each of the homogeneous groups of documents as a document cluster.

In the following we describe in detail the pro-

posed framework, broken down into four main steps,

namely extraction of closed document-sets from mul-

tiple document organizations, construction of the ten-

sor model, decomposition of the tensor, and induction

of a document clustering.

2.1 Extracting Closed Frequent Document-sets

In our setting, an item corresponds to a document in

D, hence an itemset is a document-set, while a trans-

action corresponds to a cluster that belongs to any

clustering in C S . As a transactional dataset is a multi-

set of transactions, there will be as many transactions

as the number of clusters over all document cluster-

ings in C S .

We extract document-sets that frequently oc-

cur (given a user-specified minimum-support thresh-

old) over all available clusters, specifically frequent

document-sets that are closed, since we aim to mini-

mize the size of the set of patterns discovered while

ensuring its completeness. However, in contrast to

typical scenarios of transactional data, a peculiarity of

our context is that the size of the transactional dataset

(i.e., the number of document clusters) is much lower

than the size of the item domain (i.e., the number of

documents). Thus, in order to extract (closed) fre-

quent document-sets, a traditional (closed) frequent

itemset mining approach could be prohibitive, as it

would require a cost which is exponential with the

number of documents.

Given a transactional dataset T , we denote with

t a transaction, with T ⊆ T a set of transactions

(transaction-set), and with I

T

=

∩

t∈T

t the itemset

shared by the transactions (i.e., clusters) in T . A

transaction-set containing d transactions is said a d-

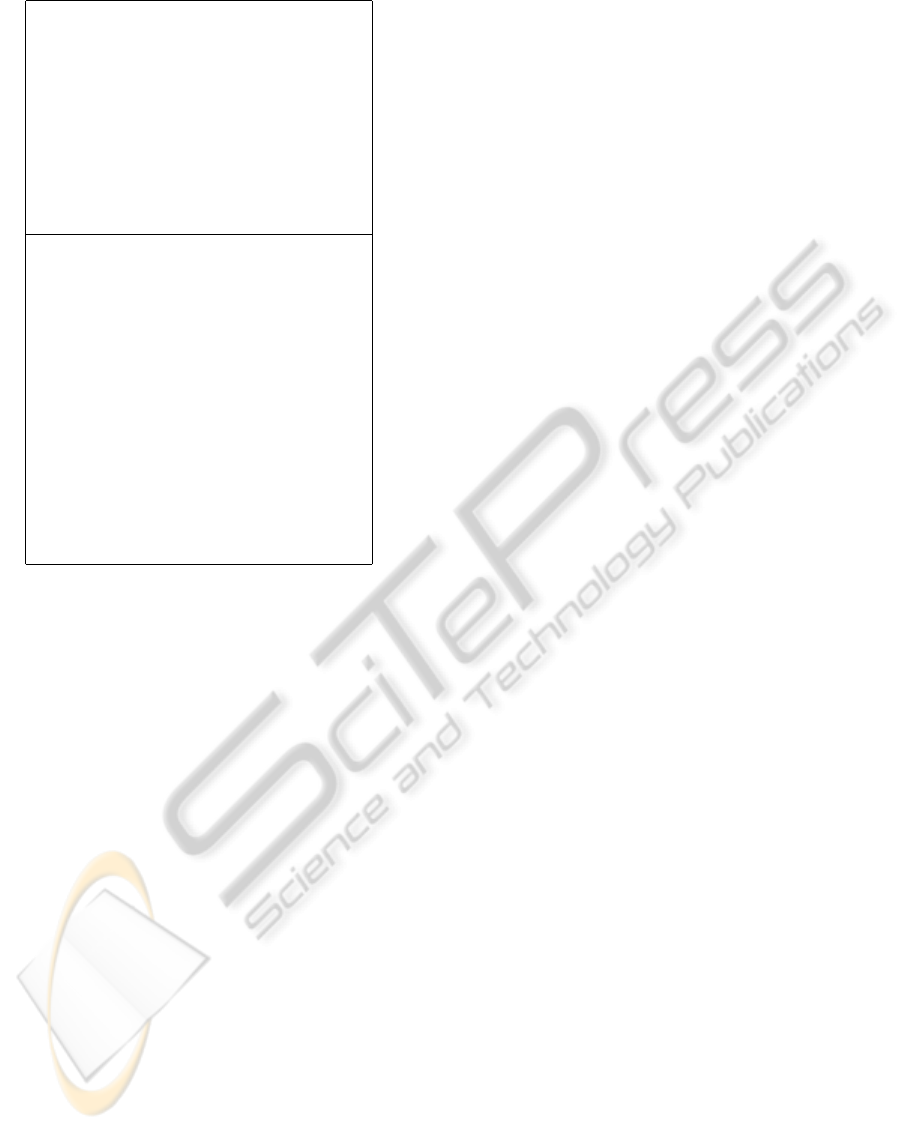

transaction-set, with 1 ≤ d ≤ |T |. Figure 1 shows

the proposed closed frequent itemset miner, which

uses a level-wise search where d-transaction-sets are

used to explore (d + 1)-transaction-sets. To perform

the search, an enumeration tree is incrementally built

such that each node represents a pair of the form

(T,I

T

). The initial set of 1-transaction-sets (Line 2)

is used to compute (1 + it)-transaction-sets, at each

iteration it of the search procedure; this procedure

terminates after at most |T | levels along each branch

of the enumeration tree. To avoid redundant unions

among transaction-sets (hence, intersections among

their itemsets), the ordering between the first trans-

actions of any two transaction-sets is involved at each

iteration (Lines 8 and 10). Note that, as the search

space is being explored, the support of the item-

sets obtained by the intersection of a growing num-

ber of transactions is monotonically non-decreasing.

Therefore, every candidate closed itemset (Line 11) is

checked to be a frequent itemset (Line 13). The merge

function (Line 5) searches for all pairs that have the

same common itemset and yields a single pair con-

taining the union of the transaction-sets. Finally, the

set CI of all closed frequent itemsets is created from

the set of I

T

elements in C

IT-P

(function extractIT,

Line 6).


Input:
  A transactional dataset $\mathcal{T}$,
  a minimum support threshold $minsup$
Output:
  A set $CI$ of closed frequent itemsets
begin
1. $C_{IT\text{-}P} \leftarrow \emptyset$
2. $P \leftarrow \{(\{t\}, t) \mid t \in \mathcal{T}\}$
3. $P_0 \leftarrow P$
4. search($P_0$, $P$, $C_{IT\text{-}P}$)
5. $C_{IT\text{-}P} \leftarrow$ merge($C_{IT\text{-}P}$)
6. $CI \leftarrow$ extractIT($C_{IT\text{-}P}$)
end
procedure search($P'$, $P$, $C_{IT\text{-}P}$)
7. for all $(T, I_T) \in P'$ do
8.   let $t$ be the first transaction in $T$
9.   $P'' \leftarrow \emptyset$
10.   for all $(\{t_i\}, t_i) \in P$, $t < t_i$ do
11.     $T_j \leftarrow T \cup \{t_i\}$, $I_{T_j} \leftarrow I_T \cap t_i$
12.     $P'' \leftarrow P'' \cup \{(T_j, I_{T_j})\}$
13.     if support($I_{T_j}$) $\ge minsup$ then
14.       remove from $C_{IT\text{-}P}$ all $(T_k, I_{T_k})$ such that
15.         $T_j \supseteq T_k$ and $I_{T_k} = I_{T_j}$
16.       if $I_{T_j}$ is a closed itemset for the itemsets
17.         in $C_{IT\text{-}P}$ then
18.         $C_{IT\text{-}P} \leftarrow C_{IT\text{-}P} \cup \{(T_j, I_{T_j})\}$
19.       endIf
20.     endIf
21.   endFor
22.   search($P''$, $P$, $C_{IT\text{-}P}$)
23. endFor

Figure 1: Intersection-based closed frequent itemset mining algorithm.
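The intersection-based idea can be illustrated with a short Python sketch. It is a simplified variant, not the procedure of Figure 1: it relies on the fact that the intersection of any set of transactions is always a closed itemset, and it replaces the merge and closure-check steps with a dictionary keyed by itemset. The function name `closed_frequent_docsets` and the toy document identifiers are hypothetical.

```python
from typing import Dict, FrozenSet, Hashable, Iterable, List


def closed_frequent_docsets(
    clusters: Iterable[Iterable[Hashable]], minsup: int
) -> Dict[FrozenSet[Hashable], int]:
    """Return {closed document-set: support} for sets with support >= minsup."""
    transactions: List[FrozenSet[Hashable]] = [frozenset(c) for c in clusters]

    def support(itemset: FrozenSet[Hashable]) -> int:
        # Number of transactions (clusters) containing every item of the set.
        return sum(1 for t in transactions if itemset <= t)

    closed: Dict[FrozenSet[Hashable], int] = {}
    # Level 1: each transaction on its own. The stored value is the smallest
    # index of the last transaction merged in, used as the ordering constraint.
    frontier: Dict[FrozenSet[Hashable], int] = {}
    for idx, t in enumerate(transactions):
        if t and t not in frontier:
            frontier[t] = idx

    while frontier:
        next_frontier: Dict[FrozenSet[Hashable], int] = {}
        for itemset, last in frontier.items():
            sup = support(itemset)
            if sup >= minsup:
                closed[itemset] = sup
            # Extend only with later transactions to avoid redundant unions.
            for j in range(last + 1, len(transactions)):
                shared = itemset & transactions[j]
                if shared and (
                    shared not in next_frontier or j < next_frontier[shared]
                ):
                    next_frontier[shared] = j
        frontier = next_frontier
    return closed


if __name__ == "__main__":
    toy_clusters = [
        {"d1", "d2", "d3"},
        {"d1", "d2", "d4"},
        {"d1", "d2", "d3", "d4"},
        {"d5"},
    ]
    for docset, sup in closed_frequent_docsets(toy_clusters, minsup=2).items():
        print(sorted(docset), sup)
```

The cost of this sketch grows with the number of transaction combinations rather than with the number of documents, which matches the setting described above where clusters are few and documents are many.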

2.2 Building a Tensor for Multiple Document Organizations

To model a set of documents contextually to multiple

available organizations of the documents, we define

a third-order tensor such that the first mode corre-

sponds to the closed frequent document-sets extracted

from the set of document organizations, the second

mode to the terms representing the document con-

tents, and the third mode to the documents.

Formally, if we denote with $\mathcal{CDS} = \{CDS_1, \ldots, CDS_{|\mathcal{CDS}|}\}$ the set of closed frequent document-sets extracted from $\mathcal{CS}$, we define a tensor $\mathcal{X} \in \mathbb{R}^{I_1 \times I_2 \times I_3}$, where $I_1 = |\mathcal{CDS}|$, $I_2 = |\mathcal{V}|$, and $I_3 = |\mathcal{D}|$. Hence, the $i_3$-th slice of the tensor refers to document $D_{i_3}$ and is represented by a matrix of size $I_1 \times I_2$, where the $(i_1, i_2)$-th entry will be computed to determine the relevance of term $w_{i_2}$ in document $D_{i_3}$ contextually to the document-set $CDS_{i_1}$.

Given a document D, a term w, and a frequent

document-set CDS (we omit here the subscripts for

the sake of readability of the following formulas), our

aim is to incorporate the following aspects in the term

relevance weight: (1) the popularity of the term in the

document, (2) the rarity of the term over the collec-

tion of documents, (3) the rarity of the term locally to

the frequent document-set, and (4) the support of the

frequent document-set.

Aspects 1 and 2 refer to the notions of term frequency and inverse document frequency in the classic tf.idf term relevance weighting function. Formally, the frequency of term $w$ in document $D$, denoted as $tf(w, D)$, is equal to the number of occurrences of $w$ in $D$. The inverse document frequency of term $w$ in the document collection is defined as $idf(w) = \log(|\mathcal{D}| / N(w))$, where $N(w)$ is the number of documents in $\mathcal{D}$ that contain $w$.

To account for aspect 3, we introduce an inverse document-set frequency factor: $idsf(w, CDS) = \log(1 + |CDS| / N(w, CDS))$, where $|CDS|$ is the number of documents belonging to the frequent document-set $CDS$, and $N(w, CDS)$ denotes the number of documents in $CDS$ that contain $w$. Moreover, the $idsf$ weight is defined to be equal to zero if term $w$ is absent in all documents of $CDS$; otherwise, note that the $idsf$ weight is always a positive value even in case of maximum popularity of the term in the frequent document-set.

As for aspect 4, we exploit the support of the frequent document-set: $s(CDS) = \exp\left( supp(CDS) / \max_{CDS' \in \mathcal{CDS}} supp(CDS') \right)$, where $supp(CDS)$ is the support of $CDS$, i.e., the number of clusters, over all clusterings $\mathcal{C} \in \mathcal{CS}$, that contain $CDS$. Note that the support of a document-set is bounded by $|\mathcal{CS}|$ in case of non-overlapping clusters in each $\mathcal{C}$.

Finally, by combining all four factors, the overall term relevance weighting function has the form: $weight(CDS, w, D) = tf(w, D) \cdot idf(w) \cdot idsf(w, CDS) \cdot s(CDS)$.
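As an illustration, the four factors can be computed directly from their definitions. The sketch below assumes documents are given as token lists keyed by a document identifier and that supports are given as a dictionary keyed by document-set; the function names and the toy data are hypothetical. Returning zero for $idf$ when a term occurs in no document is an assumption of this sketch, since the text does not cover that case.

```python
import math
from typing import Dict, FrozenSet, List

Docs = Dict[str, List[str]]
Supports = Dict[FrozenSet[str], int]


def tf(term: str, doc_id: str, docs: Docs) -> int:
    # Number of occurrences of the term in the document.
    return docs[doc_id].count(term)


def idf(term: str, docs: Docs) -> float:
    n_w = sum(1 for tokens in docs.values() if term in tokens)
    # Assumption: a term occurring nowhere gets weight 0 (case not in the text).
    return math.log(len(docs) / n_w) if n_w else 0.0


def idsf(term: str, docset: FrozenSet[str], docs: Docs) -> float:
    n_w = sum(1 for d in docset if term in docs[d])
    # Defined as zero when the term is absent from all documents of the set.
    return math.log(1 + len(docset) / n_w) if n_w else 0.0


def s(docset: FrozenSet[str], supports: Supports) -> float:
    # Exponential of the support normalized by the maximum support.
    return math.exp(supports[docset] / max(supports.values()))


def weight(docset: FrozenSet[str], term: str, doc_id: str,
           docs: Docs, supports: Supports) -> float:
    return (tf(term, doc_id, docs) * idf(term, docs)
            * idsf(term, docset, docs) * s(docset, supports))


if __name__ == "__main__":
    docs = {"D1": ["a", "a", "b"], "D2": ["a", "c"], "D3": ["b", "c"]}
    cds = frozenset({"D1", "D2"})
    supports = {cds: 2, frozenset({"D3"}): 4}
    print(weight(cds, "a", "D1", docs, supports))
```

In this toy case, $tf = 2$, $idf = \log(3/2)$, $idsf = \log(1 + 2/2) = \log 2$, and $s = \exp(2/4)$, so the weight is the product of these four values.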

2.3 Tensor Decomposition

According to the tensor decomposition notations in (Cichocki et al., 2009), we will use symbols $\otimes$, $\circledast$, and $\times_n$ to denote the Kronecker product, the element-wise product, and the mode-$n$ product (with $n \in \{1, 2, 3\}$), respectively. Moreover, if we denote with $\mathcal{G}$ the core tensor and with $A$ its factor matrices, symbols $\hat{\mathcal{X}} = \mathcal{G} \times \{A\}$, $A^{\otimes_{-n}}$, $\mathcal{G} \times_{-n} \{A\}$ and $X_{(n)}$ denote the product $\mathcal{G} \times_1 A^{(1)} \times_2 A^{(2)} \times_3 A^{(3)}$, the Kronecker product between all factor matrices except $A^{(n)}$, the mode-$n$ product between $\mathcal{G}$ and all factor matrices except $A^{(n)}$, and the matricization along mode $n$ of tensor $\mathcal{X}$, respectively.
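These operations can be made concrete with a small NumPy sketch. The helper names (`unfold`, `mode_n_product`, `tucker_reconstruct`) are hypothetical, and the matricization here orders the remaining modes in NumPy's row-major (C) order, which is an assumption of this sketch; the tensor literature often uses a column-major ordering, in which case the Kronecker factors appear in the opposite order.

```python
import numpy as np


def unfold(tensor: np.ndarray, mode: int) -> np.ndarray:
    """Mode-n matricization, remaining modes flattened in C order."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)


def mode_n_product(tensor: np.ndarray, matrix: np.ndarray, mode: int) -> np.ndarray:
    """Multiply `tensor` by `matrix` (shape: new_dim x old_dim) along `mode`."""
    moved = np.moveaxis(tensor, mode, -1)   # bring the mode to the last axis
    result = moved @ matrix.T               # contract it with the matrix
    return np.moveaxis(result, -1, mode)    # put the new axis back in place


def tucker_reconstruct(core: np.ndarray, factors: list) -> np.ndarray:
    """Compute G x_1 A1 x_2 A2 x_3 A3 by successive mode-n products."""
    approx = core
    for mode, factor in enumerate(factors):
        approx = mode_n_product(approx, factor, mode)
    return approx


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    G = rng.random((2, 3, 4))
    A1, A2, A3 = rng.random((5, 2)), rng.random((6, 3)), rng.random((7, 4))
    X_hat = tucker_reconstruct(G, [A1, A2, A3])
    # With C-order unfolding: X_(1) = A1 G_(1) (A2 kron A3)^T
    lhs = unfold(X_hat, 0)
    rhs = A1 @ unfold(G, 0) @ np.kron(A2, A3).T
    print(X_hat.shape, np.allclose(lhs, rhs))
```

The final check shows how the Kronecker product of all factor matrices except the first relates the mode-1 matricizations of the approximation and of the core tensor.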

Nonnegative Tucker Decomposition (NTD) is the

state-of-the-art in tensor decomposition, which allows

for taking into account all interactions between the

tensor modes. Particularly, we refer to the fast Be-

ICPRAM2013-InternationalConferenceonPatternRecognitionApplicationsandMethods

202

Input:

X : input data of size I

1

× I

2

× I

3

,

J

1

,J

2

,J

3

: number of basis for each factor,

β: divergence parameter (default: 2).

Output:

core tensor G ∈ R

J

1

×J

2

×J

3

factor matrices A

(1)

∈ R

I

1

×J

1

+

, A

(2)

∈ R

I

2

×J

2

+

,

A

(3)

∈ R

I

3

×J

3

+

begin

1. Nonnegative ALS initialization for all A

(n)

and G

2. repeat

3.

ˆ

X ’ = G ×

1

A

(1)

×

2

A

(2)

4. X ” = computeStep1(X ,

ˆ

X ’,A

(3)

,n,β)

5. for n = 1 to 3 do

6. A

(n)

← A

(n)

~ computeStep2(X ”,A,G,n) ⊘

computeStep3(

ˆ

X ’,A,G,β,n)

7. a

(n)

j

n

← a

(n)

j

n

/ ∥ a

(n)

j

n

∥

p

8. endFor

9. G ← G ~

X ” × {A

T

}

⊘

ˆ

X

.[β]

× {A

T

}

10. until a stopping criterion is met

end

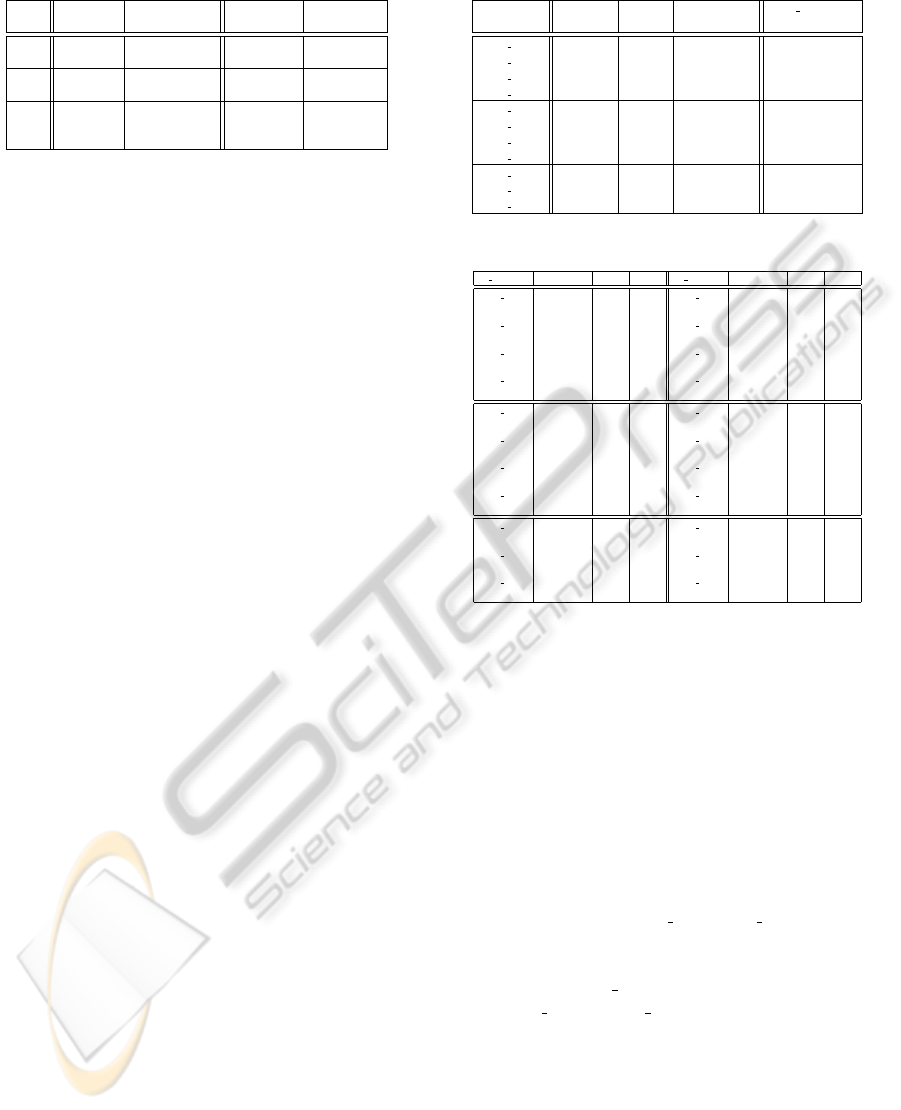

Figure 2: Modified fast BetaNTD algorithm.

taNTD algorithm (Cichocki et al., 2009) that relies

on beta divergences (Basu et al., 1998), which have

been successfully applied for robust PCA and clus-

tering. The fast BetaNTD algorithm has multiplica-

tive update rules defined in function of the tensor X

and its current approximation

ˆ

X . Unfortunately,

ˆ

X

is a large yet dense tensor and hence it cannot be

easily kept in primary memory. We decompose the

tensor following the lead of the approach proposed

in (Kolda and Sun, 2008), and our resulting algo-

rithm is shown in Figure 2. To avoid storing the

entire tensor

ˆ

X , we keep in memory only an inter-

mediate result

ˆ

X ’ = G ×

1

A

(1)

×

2

A

(2)

(Line 3), and

then partially compute the final approximated tensor

as

ˆ

X =

ˆ

X ’ ×

3

A

(3)

only for a limited number of en-

tries at time, for each mode.

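Let us consider the update rule for any $A^{(n)}$:

$$A^{(n)} \leftarrow A^{(n)} \circledast \left[ \left( X_{(n)} \circledast \hat{X}_{(n)}^{.[\beta-1]} \right) A^{\otimes_{-n}} G_{(n)}^{T} \right] \oslash \left[ \hat{X}_{(n)}^{.[\beta]} A^{\otimes_{-n}} G_{(n)}^{T} \right]$$

In the above rule, the most expensive operations are $X_{(n)} \circledast \hat{X}_{(n)}^{.[\beta-1]}$, $A^{\otimes_{-n}} G_{(n)}^{T}$, and $\hat{X}_{(n)}^{.[\beta]} A^{\otimes_{-n}} G_{(n)}^{T}$, thus we decompose the problem into three smaller steps: (1) computation of $\mathcal{X} \circledast \hat{\mathcal{X}}^{.[\beta-1]}$ (which considers only the nonzero entries of $\mathcal{X}$), (2) block-wise computation of $\left( X_{(n)} \circledast \hat{X}_{(n)}^{.[\beta-1]} \right) A^{\otimes_{-n}} G_{(n)}^{T}$, and (3) block-wise computation of $\hat{X}_{(n)}^{.[\beta]} A^{\otimes_{-n}} G_{(n)}^{T}$.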
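For intuition, the factor update can be written naively in NumPy on small dense arrays. This sketch ignores the memory-saving decomposition into three steps and is not the modified algorithm of Figure 2; the function names, the `eps` safeguard against division by zero, and the row-major ordering of the matricization are assumptions of the sketch. Setting $\beta = 1$ in the rule as written makes the power terms trivial, so the update reduces to the ratio $X_{(n)} B \oslash \hat{X}_{(n)} B$ with $B = A^{\otimes_{-n}} G_{(n)}^{T}$.

```python
import numpy as np


def unfold(tensor: np.ndarray, mode: int) -> np.ndarray:
    """Mode-n matricization, remaining modes flattened in C order."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)


def kron_except(factors: list, skip: int) -> np.ndarray:
    """Kronecker product of all factor matrices except the one at `skip`."""
    out = np.ones((1, 1))
    for mode, factor in enumerate(factors):
        if mode != skip:
            out = np.kron(out, factor)
    return out


def update_factor(X: np.ndarray, core: np.ndarray, factors: list,
                  n: int, beta: float, eps: float = 1e-12) -> np.ndarray:
    """One multiplicative update of factors[n]; returns the new matrix."""
    B = kron_except(factors, n) @ unfold(core, n).T    # A^{kron -n} G_(n)^T
    X_n = unfold(X, n)
    Xhat_n = np.maximum(factors[n] @ B.T, eps)         # current approximation
    numer = (X_n * Xhat_n ** (beta - 1)) @ B
    denom = (Xhat_n ** beta) @ B + eps                 # eps avoids 0-division
    return factors[n] * numer / denom


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.random((4, 5, 6))
    core = rng.random((2, 3, 2))
    factors = [rng.random((4, 2)), rng.random((5, 3)), rng.random((6, 2))]
    factors[0] = update_factor(X, core, factors, n=0, beta=1.0)
    print(factors[0].shape)
```

Because every quantity in the rule is nonnegative, the update keeps the factor matrix nonnegative, which is the property the multiplicative form is designed to preserve.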

Step 1. Product $\mathcal{X} \circledast \hat{\mathcal{X}}^{.[\beta-1]}$ is computed once to obtain the matricizations given by $X_{(n)} \circledast \hat{X}_{(n)}^{.[\beta-1]}$. Moreover, the product $\mathcal{X} \circledast \hat{\mathcal{X}}^{.[\beta-1]}$ is computed starting from the intermediate result $\hat{\mathcal{X}}'$ and, since $\mathcal{X} \circledast \hat{\mathcal{X}}^{.[\beta-1]}$ is the element-wise product of a sparse tensor with a dense one, the resulting tensor will also be a sparse tensor whose nonzero entries are in the same positions as those within $\mathcal{X}$, and only these entries need to be computed.
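A minimal NumPy sketch of this step follows, assuming the sparse tensor is stored in coordinate form (an array of index triples plus an array of values). The function name `step1_sparse`, the coordinate layout, and the `eps` clipping are assumptions of the sketch. Each needed entry of the approximation is obtained as $\hat{x}_{ijk} = \sum_c \hat{x}'_{ijc} \, a^{(3)}_{kc}$, so the full dense tensor is never formed.

```python
import numpy as np


def step1_sparse(coords: np.ndarray, values: np.ndarray,
                 Xhat_prime: np.ndarray, A3: np.ndarray,
                 beta: float, eps: float = 1e-12) -> np.ndarray:
    """Values of X * Xhat^(beta-1) at the nonzero positions of X.

    coords:     (nnz, 3) integer indices (i, j, k) of the nonzeros of X
    values:     (nnz,) nonzero values of X
    Xhat_prime: (I1, I2, J3) intermediate result G x_1 A1 x_2 A2
    A3:         (I3, J3) third factor matrix
    """
    i, j, k = coords[:, 0], coords[:, 1], coords[:, 2]
    # Row-wise dot products give Xhat only at the nonzero positions.
    xhat_at_nnz = np.einsum("nc,nc->n", Xhat_prime[i, j, :], A3[k, :])
    return values * np.maximum(xhat_at_nnz, eps) ** (beta - 1)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.random((3, 4, 5))
    X[X < 0.7] = 0.0                       # make the toy tensor sparse
    coords = np.argwhere(X)
    values = X[tuple(coords.T)]
    G = rng.random((2, 2, 3))
    A1, A2, A3 = rng.random((3, 2)), rng.random((4, 2)), rng.random((5, 3))
    Xhat_prime = np.einsum("abc,ia,jb->ijc", G, A1, A2)
    print(step1_sparse(coords, values, Xhat_prime, A3, beta=2.0).shape)
```

The output has one value per nonzero of the input, so it can reuse the same coordinate array as the sparse result.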

Step 2. Since $A^{\otimes_{-n}} G_{(n)}^{T}$ is the transpose of the matricization along mode $n$ of the tensor resulting from $\mathcal{G} \times_{-n} \{A\}$, which has the same number of columns as $X_{(n)} \circledast \hat{X}_{(n)}^{.[\beta-1]}$, it can be noted that $\left( X_{(n)} \circledast \hat{X}_{(n)}^{.[\beta-1]} \right) A^{\otimes_{-n}} G_{(n)}^{T}$ is the sum of a certain number of matrix products; for instance, for $n = 1$, $\left( X_{(n)} \circledast \hat{X}_{(n)}^{.[\beta-1]} \right) A^{\otimes_{-n}} G_{(n)}^{T}$ will be the result of the sum of $I_3$ matrix products.
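One way to obtain such a sum for $n = 1$ is to loop over the mode-3 slices, as in the NumPy sketch below. This is an illustrative decomposition under our own assumptions, not necessarily the block scheme used by the authors: the function name `step2_mode1` is hypothetical, the input `Y` stands for $\mathcal{X} \circledast \hat{\mathcal{X}}^{.[\beta-1]}$, and it is kept dense here for brevity although each slice would be sparse in practice.

```python
import numpy as np


def step2_mode1(Y: np.ndarray, core: np.ndarray,
                A2: np.ndarray, A3: np.ndarray) -> np.ndarray:
    """Compute Y_(1) (A2 kron A3) G_(1)^T as a sum of I3 matrix products.

    Y:    (I1, I2, I3) tensor, core: (J1, J2, J3),
    A2:   (I2, J2),            A3:   (I3, J3);  result: (I1, J1).
    """
    I1, _, I3 = Y.shape
    out = np.zeros((I1, core.shape[0]))
    for k in range(I3):
        # Contract the core with row k of A3: W_k[b, a] = sum_c G[a,b,c] A3[k,c]
        W_k = np.tensordot(core, A3[k], axes=([2], [0])).T   # (J2, J1)
        # One matrix product per mode-3 slice of Y.
        out += Y[:, :, k] @ (A2 @ W_k)
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    Y = rng.random((3, 4, 5))
    G = rng.random((2, 3, 2))
    A2, A3 = rng.random((4, 3)), rng.random((5, 2))
    print(step2_mode1(Y, G, A2, A3).shape)
```

Only one slice of `Y` and one small `(I2, J1)` matrix are needed at a time, so the large Kronecker product is never materialized.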

Step 3. $\hat{X}_{(n)}^{.[\beta]} A^{\otimes_{-n}} G_{(n)}^{T}$ (Line 6) is computed analogously to $\left( X_{(n)} \circledast \hat{X}_{(n)}^{.[\beta-1]} \right) A^{\otimes_{-n}} G_{(n)}^{T}$. Finally, for the core tensor update rule (Line 9), we compute a normal mode-$n$ product and each entry of $\hat{\mathcal{X}}^{.[\beta]}$ is computed starting from the intermediate result $\hat{\mathcal{X}}'$.

2.4 Document Clustering Induction

We consider different ways of inducing a document clustering solution from the decomposed tensor. One simple way is to derive a monothetic clustering from the third factor matrix ($A^{(3)}$) by assigning each document to the component (cluster) corresponding to the highest relevance value stored in the matrix. A direct way is to feed $A^{(3)}$ as input to a standard document clustering algorithm. An alternative way, which does not explicitly involve $A^{(3)}$, is to consider a clustering solution obtained by applying a document clustering algorithm to the projection of the matrix of the term frequencies (over the original document collection) onto $A^{(2)}$. The rationale here is to project the original document vectors of term frequencies along the mode-2 components, which express discriminative information for the term grouping, hence deriving a clustering of the documents that are mapped to a lower dimensional space. We hereinafter refer to the different ways as monothetic, direct, and tf-projected document clustering, respectively.
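Two of these induction schemes reduce to a few lines of NumPy. In the sketch below, the monothetic assignment is a row-wise argmax over $A^{(3)}$, and the tf-projection is read as the matrix product of the document-by-term frequency matrix with $A^{(2)}$; that reading of "projection", the function names, and the toy matrices are assumptions. The projected matrix would then be passed to any standard clustering algorithm, which is omitted here.

```python
import numpy as np


def monothetic_clustering(A3: np.ndarray) -> np.ndarray:
    """Assign each document (row of A3) to its highest-valued component."""
    return np.argmax(A3, axis=1)


def tf_projection(tf_matrix: np.ndarray, A2: np.ndarray) -> np.ndarray:
    """Map documents from term space to the mode-2 component space.

    tf_matrix: (num_docs, num_terms) term frequencies
    A2:        (num_terms, J2) second factor matrix
    """
    return tf_matrix @ A2


if __name__ == "__main__":
    A3 = np.array([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]])
    print(monothetic_clustering(A3))

    tf_matrix = np.array([[2, 0, 1, 0], [0, 1, 0, 3], [1, 1, 0, 0]])
    A2 = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.0, 2.0]])
    print(tf_projection(tf_matrix, A2))
```

The projected documents have $J_2$ features instead of $|\mathcal{V}|$, which is the dimensionality reduction the text refers to.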

3 EVALUATION AND RESULTS

Reuters Corpus Volume 1 (RCV1) (Lewis et al., 2004) is a major benchmark for text classification/clustering research. RCV1 is particularly suited for our study since every news story, besides its plain-text fields (i.e., body and headlines), is originally provided with alternative categorizations according to three different category fields (metadata): TOPICS (i.e., major subjects), INDUSTRIES (i.e., types of businesses discussed), and REGIONS (i.e., geographic locations and economic/political side information). After filtering out very short news (i.e., documents with size less than 6KB) and any news that did not have at least one value for each of the three category fields, we selected the news labeled with one of the Top-5 categories for each of the three category fields. This resulted in a dataset of 3081 news stories. From the text of the news, we discarded strings of digits, retained alphanumerical terms, and performed stop-word removal and word stemming.

Table 1: Document classification sets.

| | news fields | text proc. params | clustering params | size (no. of clusters) |
|---|---|---|---|---|
| CS1 | headline | lf = 0 | k ∈ [5..20] | 4 (50) |
| | body | lf = {0, 1, 5} | k ∈ [5..20] | 12 (150) |
| CS2 | headline + body | lf = 5 | k ∈ [5..43] | 20 (480) |
| CS3 | headline | lf = 0 | k ∈ [5..20] | 4 (50) |
| | body | lf = 0 | k ∈ [5..20] | 4 (50) |
| | metadata | – | – | 3 (19) |

We generated various sets of classifications obtained over the RCV1 dataset, according to the textual content information as well as to the Topics/Industries/Regions metadata. For the purpose of generating the text-based classifications, we used the bisecting k-means algorithm implemented in the well-known CLUTO toolkit (Karypis, 2007) to produce clustering solutions of the documents represented over the space of the terms contained in the body and/or headlines. Table 1 summarizes the main characteristics of the three sets of document classifications used in our evaluation. Columns text proc. params and clustering params refer to the lower document-frequency cut threshold (lf, percent) used to select the terms for the document representation, and to the number of clusters (k, with increment of 5 in CS1 and CS3, and 2 in CS2) taken as input to CLUTO to generate the text-based classifications. Moreover, column size reports the number of classifications and the related number of clusters of documents (within brackets) that rely on the same type of information (i.e., body, headline, metadata).

For each of the three document classification sets,

we derived different tensors according to various set-

tings of the closed frequent document-set extraction.

Table 2 contains details about the tensors built upon

the selected configurations. Note that, in each of the

tensors, mode-2 corresponded to the space of terms

extracted from the body and headline of the news

(2692 terms) and mode-3 to the average number of

clusters in the corresponding classification sets (i.e.,

13 for CS1, 24 for CS2, and 11 for CS3).

Table 2: Tensors and their decompositions.

| | min length of CDS | no. of CDS | avg % of CDS per doc. | TD-S size |
|---|---|---|---|---|
| CS1 Ten1 | 50 | 17443 | 3.29% | 174 × 27 × 13 |
| CS1 Ten2 | 100 | 5871 | 5.25% | 58 × 27 × 13 |
| CS1 Ten3 | 150 | 2454 | 7.12% | 24 × 27 × 13 |
| CS1 Ten4 | 200 | 1265 | 8.53% | 12 × 27 × 13 |
| CS2 Ten1 | 50 | 12964 | 3.78% | 129 × 27 × 24 |
| CS2 Ten2 | 100 | 7137 | 4.87% | 71 × 27 × 24 |
| CS2 Ten3 | 150 | 3129 | 5.89% | 31 × 27 × 24 |
| CS2 Ten4 | 180 | 918 | 7.53% | 9 × 27 × 24 |
| CS3 Ten1 | 50 | 2806 | 3.09% | 28 × 27 × 11 |
| CS3 Ten2 | 100 | 843 | 5.15% | 8 × 27 × 11 |
| CS3 Ten3 | 150 | 326 | 7.15% | 3 × 27 × 11 |

Table 3: Summary of results.

| TD-S | clustering | F | Q | TD-L | clustering | F | Q |
|---|---|---|---|---|---|---|---|
| CS1 Ten1 | monoth. | 0.509 | 0.603 | CS1 Ten1 | direct | 0.556 | 0.601 |
| | tf-proj. | 0.610 | 0.838 | | tf-proj. | 0.665 | 0.881 |
| CS1 Ten2 | monoth. | 0.534 | 0.599 | CS1 Ten2 | direct | 0.570 | 0.603 |
| | tf-proj. | 0.625 | 0.838 | | tf-proj. | 0.688 | 0.884 |
| CS1 Ten3 | monoth. | 0.542 | 0.598 | CS1 Ten3 | direct | 0.586 | 0.601 |
| | tf-proj. | 0.624 | 0.835 | | tf-proj. | 0.689 | 0.889 |
| CS1 Ten4 | monoth. | 0.533 | 0.598 | CS1 Ten4 | direct | 0.579 | 0.605 |
| | tf-proj. | 0.624 | 0.838 | | tf-proj. | 0.687 | 0.837 |
| CS2 Ten1 | monoth. | 0.494 | 0.603 | CS2 Ten1 | direct | 0.599 | 0.604 |
| | tf-proj. | 0.569 | 0.847 | | tf-proj. | 0.625 | 0.893 |
| CS2 Ten2 | monoth. | 0.496 | 0.603 | CS2 Ten2 | direct | 0.556 | 0.601 |
| | tf-proj. | 0.561 | 0.843 | | tf-proj. | 0.629 | 0.889 |
| CS2 Ten3 | monoth. | 0.495 | 0.603 | CS2 Ten3 | direct | 0.560 | 0.604 |
| | tf-proj. | 0.570 | 0.846 | | tf-proj. | 0.635 | 0.895 |
| CS2 Ten4 | monoth. | 0.497 | 0.604 | CS2 Ten4 | direct | 0.555 | 0.602 |
| | tf-proj. | 0.577 | 0.848 | | tf-proj. | 0.639 | 0.890 |
| CS3 Ten1 | monoth. | 0.556 | 0.597 | CS3 Ten1 | direct | 0.619 | 0.600 |
| | tf-proj. | 0.617 | 0.837 | | tf-proj. | 0.677 | 0.888 |
| CS3 Ten2 | monoth. | 0.556 | 0.597 | CS3 Ten2 | direct | 0.619 | 0.599 |
| | tf-proj. | 0.620 | 0.837 | | tf-proj. | 0.686 | 0.839 |
| CS3 Ten3 | monoth. | 0.553 | 0.597 | CS3 Ten3 | direct | 0.610 | 0.596 |
| | tf-proj. | 0.620 | 0.837 | | tf-proj. | 0.680 | 0.887 |

For each of the tensors constructed, we ran the algorithm in Figure 2 with different settings to obtain two decompositions: the first one led to a core tensor with a number of components on mode-3 equal to the average number of clusters in the original classification set, whereas the other two modes were set equal to the number of closed document-sets and number of terms, respectively, scaled by a factor of 0.01; the second decomposition was devised to obtain a larger core tensor, with the number of components of each mode increased by a multiplicative factor of 10 w.r.t. the corresponding mode in the core tensor obtained by the first decomposition. We use suffixes TD-S and TD-L to denote the first (smaller) and second (larger) decompositions of a tensor, respectively; the last column in Table 2 contains details about TD-S decompositions. From the result of a TD-S (resp. TD-L) decomposition, we derived a monothetic (resp. direct) or, alternatively, a tf-projected clustering solution, with number of clusters equal to the number of mode-3 components.

All clustering solutions were evaluated in terms of average F-measure (Steinbach et al., 2000) (F) between a clustering solution derived from the tensor model and each of the input document classifications. By using the original tf.idf representation of the documents (based on the text of body plus headline fields), we also computed the centroid-based intra-cluster similarity and inter-cluster similarity and then used their difference to obtain an overall quality score (Q).
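The external criterion can be sketched in Python as follows, assuming the commonly used clustering F-measure in which each reference class is matched to its best cluster and the per-class scores are weighted by class size. Whether this matches every detail of the evaluation above is an assumption; the function names are hypothetical, and the sketch assumes each document has exactly one label per classification.

```python
from collections import Counter
from typing import Hashable, Sequence


def f_measure(reference: Sequence[Hashable], clustering: Sequence[Hashable]) -> float:
    """Size-weighted best-match F-measure of `clustering` w.r.t. `reference`."""
    n = len(reference)
    class_sizes = Counter(reference)
    cluster_sizes = Counter(clustering)
    overlap = Counter(zip(reference, clustering))
    total = 0.0
    for cls, n_i in class_sizes.items():
        best = 0.0
        for clu, n_j in cluster_sizes.items():
            n_ij = overlap[(cls, clu)]
            if n_ij:
                precision = n_ij / n_j
                recall = n_ij / n_i
                best = max(best, 2 * precision * recall / (precision + recall))
        total += (n_i / n) * best
    return total


def average_f_measure(references: Sequence[Sequence[Hashable]],
                      clustering: Sequence[Hashable]) -> float:
    """Average the F-measure over all input classifications."""
    return sum(f_measure(ref, clustering) for ref in references) / len(references)


if __name__ == "__main__":
    reference = [0, 0, 1, 1]
    induced = [0, 0, 0, 1]
    print(round(f_measure(reference, induced), 4))
```

For the toy labels, class 0 best matches cluster 0 with F = 0.8 and class 1 best matches cluster 1 with F = 2/3, giving a weighted score of about 0.7333.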

Table 3 shows our main experimental results. Comparing the performance of the different types of induced clustering, the tf-projected solutions achieved higher quality than the monothetic clusterings (for the case TD-S) and the direct clusterings (for the case TD-L), which was particularly evident in terms of internal quality. Looking at the tensors of each classification set, we observed that a lower average percentage of closed document-sets generally led to slightly better performance for classification sets characterized by conceptually different views (i.e., CS1 and CS3), whereas an inverse tendency occurred for a more homogeneous classification set (CS2). However, we also observed no significant differences in the overall average performance obtained by varying the number of components in mode-1, which would indicate a relatively small sensitivity of the tensor approximation to mode-1 (i.e., the space of the mined closed document-sets). Also, the F-measure scores for the CS3 tensors were comparable to or even better than those for the other tensors, which would suggest the ability of our tensor model to handle document classification sets which express possibly alternative views (i.e., different content-based views along with metadata-based views).

4 CONCLUSIONS

We proposed a novel document clustering framework

that copes with the knowledge about multiple exist-

ing classifications of the documents. The main nov-

elty of our study is the definition of a third-order ten-

sor model that takes into account both the document

content information and the ways the documents are

grouped together across the available classifications.

Further work might be focused on a thorough investi-

gation of the impact of the frequent document-sets on

the sparsity of the tensor, and on a comparison with

clustering ensemble methods.

REFERENCES

Basu, A., Harris, I. R., Hjort, N. L., and Jones, M. C. (1998). Robust and efficient estimation by minimising a density power divergence. Biometrika, 85(3):549-559.

Cichocki, A., Phan, A. H., and Zdunek, R. (2009). Nonneg-

ative Matrix and Tensor Factorizations: Applications

to Exploratory Multi-way Data Analysis and Blind

Source Separation. Wiley, Chichester.

Ghosh, J. and Acharya, A. (2011). Cluster ensembles. Wiley Interdisc. Rev.: Data Mining and Knowledge Discovery, 1(4):305-315.

Karypis, G. (2002/2007). CLUTO - Software for Clustering High-Dimensional Datasets. http://www.cs.umn.edu/~cluto.

Kolda, T. and Bader, B. (2006). The TOPHITS model for

higher-order web link analysis. In Proc. SIAM Work-

shop on Link Analysis, Counterterrorism and Secu-

rity.

Kolda, T. G. and Bader, B. W. (2009). Tensor decomposi-

tions and applications. SIAM Review, 51(3):455-500.

Kolda, T. G. and Sun, J. (2008). Scalable tensor decompo-

sitions for multi-aspect data mining. In Proc. IEEE

ICDM Conf., pages 363-372.

Kutty, S., Nayak, R., and Li, Y. (2011). XML Documents

Clustering Using a Tensor Space Model. In Proc.

PAKDD Conf., pages 488-499.

Lewis, D. D., Yang, Y., Rose, T., and Li, F. (2004). RCV1:

A New Benchmark Collection for Text Categorization

Research. Journal of Machine Learning Research,

5:361-397.

Liu, N., Zhang, B., Yan, J., Chen, Z., Liu, W., Bai, F., and

Chien, L. (2005). Text Representation: From Vector

to Tensor. In Proc. IEEE ICDM Conf., pages 725-728.

Liu, X., Glänzel, W., and Moor, B. D. (2011). Hybrid clustering of multi-view data via Tucker-2 model and its application. Scientometrics, 88(3):819-839.

Steinbach, M., Karypis, G., and Kumar, V. (2000). A Com-

parison of Document Clustering Techniques. In Proc.

KDD Text Mining Workshop.
