Pupil Localization by a Template Matching Method

Donatello Conte¹, Rosario Di Lascio², Pasquale Foggia¹, Gennaro Percannella¹ and Mario Vento¹

¹ University of Salerno, Via Ponte Don Melillo 1, 84084, Fisciano (SA), Italy

² A.I. Tech srl, Via E. Capozzi 62, Avellino, Italy

Keywords:

Pupil Localization, Pupil Tracking, Template Matching.

Abstract:

In this paper, a new algorithm for pupil localization is proposed. The algorithm is based on a template matching

approach; the original contribution is that the model of the pupil that is used is not fixed, but it is automatically

constructed on the first frame of the video sequence to be examined. Therefore the model is adaptively tuned

to each subject, in order to improve the robustness and the accuracy of the detection. The results show the

effectiveness of the proposed algorithm.

1 INTRODUCTION

Among the different applications of biomedical im-

age processing, e.g. Mitotic HEp-2 cells recogni-

tion (Percannella et al., 2011) or Blood Vessel Seg-

mentation (Fraz et al., 2012), Eye Tracking plays an

important role from both the research and commercial

points of view. In fact, eye tracking is an important

component in many applications, including human-computer

interaction, virtual reality, and the diagnosis

of some health problems. Abnormal eye movements

can be an indication of diseases such as balance

disorders, strabismus, etc.

Several technologies have been used for eye track-

ing, such as electrooculography (EOG) or flying-spot

lasers, but video-based eye tracking systems proved to

be the most effective. In particular, head mounted eye

tracking systems are more accurate than other kinds

of video-based systems.

Detecting the pupil is the most frequently used

method to track the horizontal and vertical eye po-

sition in video-based systems. If the detection can

be done accurately and robustly, then the eye orien-

tation can be determined from the pupil center coor-

dinates. Unfortunately, significant errors in the pupil

center computation may arise due to artifacts caused

by eyelids, eyelashes, corneal reflections, make-up,

etc.

Some attempts have been previously made to at-

tain a fast and accurate pupil center detection; in the

next section a brief survey on the subject is presented.

A common limitation of these approaches is that they

do not take into account the specificity of each ob-

served eye, and so may fail when they encounter

an eye with unusual characteristics (e.g. particularly

large or small pupil, shape not exactly elliptical, etc.).

In this paper we propose a new, model-based, algo-

rithm for pupil center localization, which adapts auto-

matically to the characteristics of the observed pupil.

The remainder of the paper is organized as fol-

lows. In Section 2, we present some related works,

while in Section 3 we describe the proposed pupil

localization method. Section 4 shows the results of the

experimental phase, and some conclusions are drawn

in Section 5.

2 RELATED WORKS

In the literature on pupil detection, a first group of

works (Boumbarov et al., 2009; Krishnamoorthy and

Indradevi, 2010) is based on the technique of active

contours. These methods assume that a parametric

model of the shape of the contour of the pupil is

known (usually an ellipse is used); then they employ

different algorithms for the iterative learning of the

chosen model parameters. Boumbarov et al. (Boum-

barov et al., 2009) propose the use of a Particle Filter;

the other approach works by minimizing an energy

function based on the gradient (Krishnamoorthy and

Indradevi, 2010).

A second group of works (Benletaief et al., 2010;

Kinsman and Pelz, 2011) starts from a characteriza-

tion of the pixels belonging to the pupil, and tries

to reconstruct the pupil region from a seed point,

by clustering adjacent pixels (Benletaief et al., 2010)

Conte D., Di Lascio R., Foggia P., Percannella G. and Vento M..

Pupil Localization by a Template Matching Method.

DOI: 10.5220/0004287907790782

In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2013), pages 779-782

ISBN: 978-989-8565-47-1

Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

or by using a probabilistic mixture model together

with the Expectation-Maximization algorithm (Kins-

man and Pelz, 2011).

Two other papers (S. Kim and Lee, 2011; Mohammadi

and Raie, 2011) work with an RGB camera;

they first seek the iris contour, and then recognize

the boundary of the pupil, either through geometrical

properties (Mohammadi and Raie, 2011) or through

the use of artificial neural networks (S. Kim and Lee,

2011).

Finally, a large group of papers (Kolodko et al.,

2005; Yan et al., 2009) relies on the fact that the pupil

localization systems typically use infrared (IR) cam-

eras. In an IR image of the eye, the pupil is the darkest

region in the image. Therefore these works binarize

the initial image and perform a fitting of an ellipse

(with a minimum and maximum size) on the bina-

rized image contours. A variation of this scheme is

presented in some papers, e.g. (Kocejko et al., 2008),

which assume that the unique convex region result-

ing from binarization is the one corresponding to the

pupil; then they identify the center of the pupil as the

center of mass of the resulting region.

The methods of the first and second groups as-

sume that a seed point belonging to the pupil can be

easily and reliably found. The works that are based

on image binarization make the hypothesis that there

are no other elliptical regions in the image except the

one corresponding to the pupil.

These assumptions may fail in many cases, due to

the noise in the image, or because of the presence of

other dark elements similar to the pupil color (such

as the make-up), or due to non-perfect centering of

the eye in front of the camera. Furthermore, all these

methods cannot always recognize when the pupil is

not present (closed eye) or if it is partially visible be-

cause of the eyelids.

The basic idea of our proposal, which overcomes

the limits described above, is to build an appearance

model of the pupil, which is specific to the eye under

examination, and to search the portion of the image

most similar to the model. The model is constructed

(automatically) on the first frame of the sequence.

The method makes no assumptions on the position of

the pupil and therefore it is not based on an initial so-

lution. Furthermore, by performing a global search,

it is not deceived by regions that are circular but do

not correspond to the pupil. Finally, by including a

threshold on the similarity between model and image,

it can recognize when the pupil is not present in the

image.

3 THE PROPOSED METHOD

The main idea of the algorithm is to construct a model

for the pupil on the first frame of the sequence, and

then, for each successive frame, to correlate the model

with the image in order to find the image region that

best matches the model.

Given a template image of the pupil, for each

frame, the steps of the algorithm are described in the

following:

1. the image is thresholded with a fixed value (the

illumination system is such that the pupil pixels

are always the darkest pixels in the

image);

2. a template matching (Brunelli, 2009) is applied

between the pupil model and the image in order

to find the position of maximum correlation;

3. starting from this position, the algorithm performs

a stabilization procedure in order to reduce the lo-

calization errors due to the noise in the image.
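As an illustration of step 1, the fixed-value thresholding can be sketched as follows. This is a minimal sketch rather than the implementation used in the paper: the function name `binarize_dark_pixels` and the threshold value 40 are assumptions, since the actual threshold is not reported.

```python
import numpy as np

def binarize_dark_pixels(gray, threshold=40):
    """Return a 0/1 mask that is 1 where a pixel is darker than `threshold`.

    The value 40 is a hypothetical choice; it depends on the illumination
    system and on the camera.
    """
    gray = np.asarray(gray)
    return (gray < threshold).astype(np.uint8)

# Toy frame: bright background with a dark blob standing in for the pupil.
frame = np.full((6, 6), 200, dtype=np.uint8)
frame[2:4, 2:5] = 10
mask = binarize_dark_pixels(frame)
```

In the resulting mask the candidate pupil pixels are 1 and everything else is 0, which is the convention assumed when the mask is later correlated with a template.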

The correlation function used in the template

matching is:

$$R(x, y) = \frac{\sum_{x', y'} T(x', y') \cdot I(x + x', y + y')}{\sqrt{\sum_{x', y'} T(x', y')^2 \cdot \sum_{x', y'} I(x + x', y + y')^2}}$$

where T denotes the template and I denotes the image; the summation is done over template pixels $(x', y') \in T$.
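The correlation function above can be evaluated directly with NumPy. The sketch below is a brute-force illustration, not an optimized or authoritative implementation; the names `normalized_correlation` and `best_match`, and the similarity threshold `min_score=0.5` used to report that no pupil is present, are assumptions introduced for the example.

```python
import numpy as np

def normalized_correlation(image, template):
    """Compute R(x, y) for every placement of the template inside the image."""
    image = np.asarray(image, dtype=np.float64)
    template = np.asarray(template, dtype=np.float64)
    th, tw = template.shape
    ih, iw = image.shape
    t_energy = np.sum(template ** 2)
    scores = np.zeros((ih - th + 1, iw - tw + 1))
    for y in range(scores.shape[0]):
        for x in range(scores.shape[1]):
            window = image[y:y + th, x:x + tw]
            denom = np.sqrt(t_energy * np.sum(window ** 2))
            if denom > 0:  # an all-zero window keeps a score of 0
                scores[y, x] = np.sum(template * window) / denom
    return scores

def best_match(image, template, min_score=0.5):
    """Return (x, y, score) of the best placement, or None if below min_score."""
    scores = normalized_correlation(image, template)
    y, x = np.unravel_index(np.argmax(scores), scores.shape)
    if scores[y, x] < min_score:
        return None  # interpreted here as "no pupil in the frame"
    return int(x), int(y), float(scores[y, x])

# Toy example: a cross-shaped template hidden in an otherwise empty mask.
template = np.array([[0, 1, 0], [1, 1, 1], [0, 1, 0]])
image = np.zeros((8, 8))
image[2:5, 4:7] = template
match = best_match(image, template)
```

The returned coordinates refer to the top-left corner of the best-matching window; adding half the template size gives a first estimate of the pupil center.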

Because of the noise and of the distortions along

the edge of the region representing the pupil, the point

of maximum correlation does not coincide exactly

with the center of the pupil. For this reason a further

step is performed to find a more stable pupil center.

Starting from the maximum correlation position,

a contour finding algorithm is performed. Only

the largest contour is considered, assuming that the

smaller ones are due to noise. The found contour will

include part of the contour of the pupil, but it may

also include other borders (e.g. if the pupil is partially

covered by the eyelid). Thus, an ellipse is fitted to

the contour. The fitting is performed by calculating

the ellipse that fits (in a least-squares sense) the set of

2D contour points. Finally, the center of the ellipse is

taken as the pupil center.
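The least-squares ellipse fit can be illustrated with a general conic fit. The sketch below assumes the contour points have already been extracted; the function name `fit_ellipse_center` and the specific algebraic formulation (fitting a conic a·x² + b·xy + c·y² + d·x + e·y = 1 in coordinates centered on the point mean) are choices made for this example, and the paper does not state which fitting variant was used.

```python
import numpy as np

def fit_ellipse_center(points):
    """Fit a conic to 2D points by least squares and return its center (x, y)."""
    pts = np.asarray(points, dtype=np.float64)
    mean = pts.mean(axis=0)
    x, y = (pts - mean).T  # center the data for numerical stability
    design = np.column_stack([x * x, x * y, y * y, x, y])
    a, b, c, d, e = np.linalg.lstsq(design, np.ones(len(pts)), rcond=None)[0]
    if b * b - 4 * a * c >= 0:
        raise ValueError("fitted conic is not an ellipse")
    # The center is the point where the gradient of the conic vanishes.
    center = np.linalg.solve([[2 * a, b], [b, 2 * c]], [-d, -e])
    return center + mean

# Partial arc of an ellipse, as when the eyelid hides part of the pupil.
t = np.linspace(0.0, 1.3 * np.pi, 40)
arc = np.column_stack([30 + 10 * np.cos(t), 20 + 6 * np.sin(t)])
cx, cy = fit_ellipse_center(arc)
```

Because the fit uses the whole conic rather than the centroid of the points, the center is recovered even when only part of the pupil boundary is visible, which is the situation the stabilization step is meant to handle.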

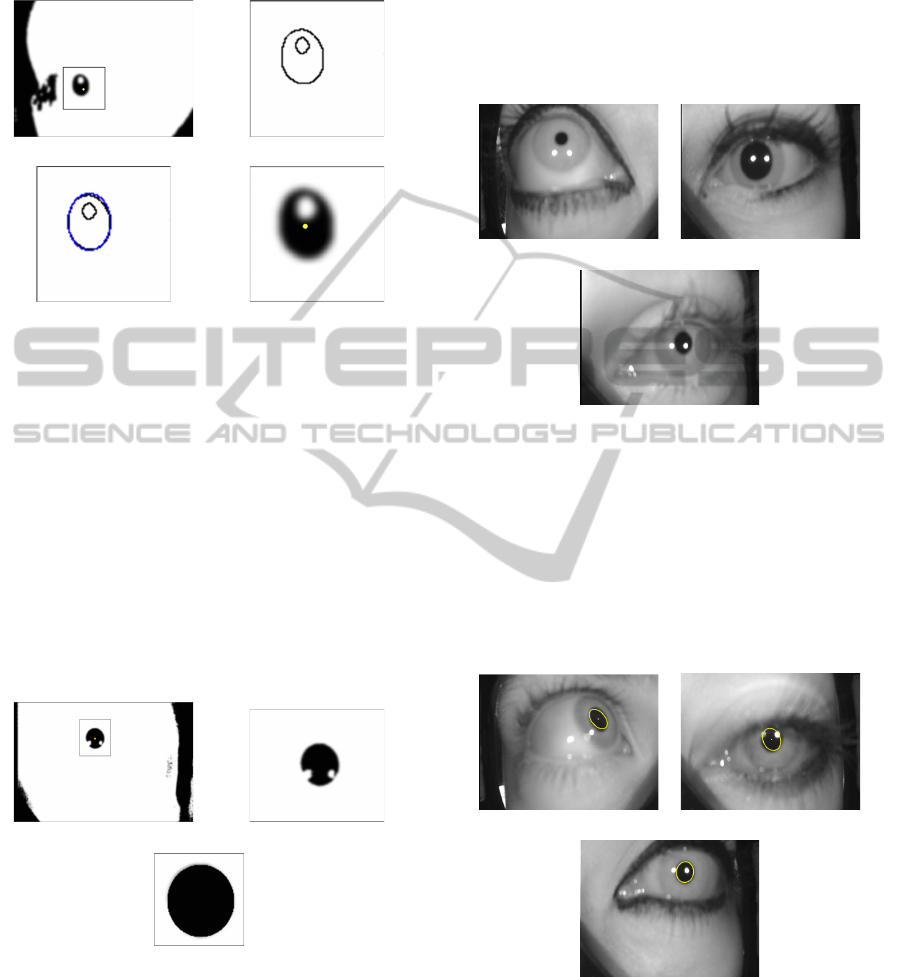

Fig. 1 illustrates the steps described above: the

template matching finds a first, not necessarily opti-

mal, solution (Fig. 1a). Performing a search of the

contours in the considered region, the largest contour

corresponds to the pupil (Fig. 1b). Fig. 1c shows the

ellipse found after the execution of an ellipse fitting

algorithm, and Fig. 1d shows the new pupil center that

significantly improves the solution found by the tem-

plate matching alone.

Figure 1: a) First solution after the application of template matching. b) Contour finding on the considered region. c) Ellipse fitting. d) Final solution.

The construction of the model of the pupil for

the considered video sequence is carried out on the

first frame, again with a method based on Template

Matching. However, in this case we use a set of pre-

defined templates. Starting from the point of maxi-

mum correlation, the actual region of the pupil is re-

constructed through a Region Growing Process (Pratt,

2007) on the binarized image, by using as a seed point

the solution resulting from Template Matching (See

Fig. 2 for an example). Once this region is found, it is

used as a template for subsequent frames.
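The region growing step used to build the pupil model can be sketched as a breadth-first flood fill on the binarized image. This is an illustrative sketch under stated assumptions: 4-connectivity, a 0/1 mask in which pupil pixels are 1, and the helper names `grow_region` and `crop_to_region` are all choices made for the example.

```python
from collections import deque

import numpy as np

def grow_region(binary, seed):
    """Grow a 4-connected region of nonzero pixels from seed = (row, col)."""
    binary = np.asarray(binary)
    h, w = binary.shape
    region = np.zeros((h, w), dtype=np.uint8)
    if not binary[seed]:
        return region  # the seed is not on a foreground pixel
    region[seed] = 1
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < h and 0 <= nc < w and binary[nr, nc] and not region[nr, nc]:
                region[nr, nc] = 1
                queue.append((nr, nc))
    return region

def crop_to_region(region):
    """Crop the mask to the bounding box of the grown region (the new template)."""
    rows, cols = np.nonzero(region)
    if rows.size == 0:
        raise ValueError("empty region")
    return region[rows.min():rows.max() + 1, cols.min():cols.max() + 1]

# Toy mask: a 3x3 blob (the pupil) plus one isolated noise pixel.
mask = np.zeros((7, 7), dtype=np.uint8)
mask[1:4, 2:5] = 1
mask[6, 6] = 1
region = grow_region(mask, (2, 3))
model = crop_to_region(region)
```

Only pixels connected to the seed are kept, so dark areas elsewhere in the frame do not end up in the subject-specific template.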

Figure 2: a) Template Matching Solution. b) Region Growing Result. c) Final Pupil Model.

4 RESULTS

A set of 28 videos (from 14 different subjects) was

recorded to analyze the performance of the algorithm;

the videos were acquired using an IR camera mounted

on a mask worn by the subject. The average video

length was 450 frames; therefore about 13000 frames

were used in our experiments.

Of the 14 subjects, five were women, and four of

them had make-up (eye-liner). In Fig. 3 some sample

frames are shown.

Figure 3: Sample frames (a, b, c) from the acquired dataset.

The ground truth (exact pupil position) for each frame of the video set was manually annotated and recorded in a file. The frames in which the eye was closed were also noted, in order to evaluate the ability of the algorithm to detect the absence of a pupil in the image (because of the closing of the eyelid).

Figure 4: Sample frames (a, b, c) in which the algorithm succeeds in detecting the pupil center.

Pupil detection outcomes can be broadly classified

into the following four possibilities:

• True Positives. The pupil has been detected cor-

rectly.

Table 1: Obtained results of the proposed algorithm.

| # frames (opened eyes) | # frames (closed eyes) | TP | TN | FP | FN |
|---|---|---|---|---|---|
| 13677 | 222 | 13062 | 152 | 568 | 130 |

• True Negatives. The algorithm identifies that

there was no pupil to be detected, e.g. because the eye

is closed.

• False Positives. The algorithm detects a pupil

where there is none in the image, or the detected

center is too distant (over a chosen threshold)

from the real one.

• False Negatives. The algorithm detects that there

is no pupil even though there is a clearly visible

pupil in the image.
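The four outcomes listed above can be expressed as a small decision function. This is a sketch of the classification rule only: the function name `classify_frame` and the distance threshold of 10 pixels are hypothetical, since the threshold chosen in the experiments is not reported.

```python
import math

def classify_frame(truth, detected, max_distance=10.0):
    """Classify one frame as "TP", "TN", "FP" or "FN".

    `truth` and `detected` are (x, y) pupil centers, or None when no pupil
    is present (ground truth) or none was reported (algorithm output).
    `max_distance` is a hypothetical tolerance in pixels.
    """
    if truth is None:
        return "TN" if detected is None else "FP"
    if detected is None:
        return "FN"
    distance = math.hypot(detected[0] - truth[0], detected[1] - truth[1])
    # A detection too far from the real center counts as a false positive.
    return "TP" if distance <= max_distance else "FP"

outcomes = [
    classify_frame((50, 40), (52, 41)),  # close to the true center
    classify_frame(None, None),          # closed eye, nothing reported
    classify_frame(None, (10, 10)),      # closed eye, pupil reported
    classify_frame((50, 40), (90, 40)),  # pupil reported too far away
    classify_frame((50, 40), None),      # visible pupil missed
]
```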

Figure 5: Sample frames (a, b) in which the algorithm fails to detect the pupil center.

Table 1 shows the incidence of such cases over the

frames of the collected dataset. In 95% of the frames,

the algorithm succeeds in detecting the pupil center or

in detecting a closed eye.
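The 95% figure follows from the counts in Table 1, taking a frame as correctly handled when it is a true positive or a true negative. The short computation below only restates that arithmetic; the variable names are chosen for the example.

```python
# Counts copied from Table 1.
counts = {"TP": 13062, "TN": 152, "FP": 568, "FN": 130}

correct = counts["TP"] + counts["TN"]  # frames handled correctly
total = sum(counts.values())           # all classified frames
accuracy = correct / total

print(f"{correct} / {total} = {accuracy:.1%}")  # prints "13214 / 13912 = 95.0%"
```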

In Fig. 4 it is possible to see three examples in

which the algorithm succeeds in the detection of the

pupil center. The cases are particularly difficult due

to the large rotation of the eye (Fig. 4a) or because

of very long eyebrows (Fig. 4b) or very pronounced

makeup (Fig. 4c). Note also that because of the non

perfect adherence of the mask to the face, dark and

thick regions are present on the image borders: this

causes, in many algorithms, a failure to find the cor-

rect position of the pupil.

In Fig. 5 there are two cases in which the algo-

rithm fails: in the first case, due to the make-up, the

algorithm fails to recognize the closed eye, while in

the second image, which is very noisy, the shape of the

pupil is very distorted.

5 CONCLUSIONS

In this paper a new model-based algorithm for pupil

localization is presented. The algorithm overcomes

some common problems affecting other approaches

by constructing a model that is specific for the ob-

served subject. The experimental results show the ef-

fectiveness of the proposed algorithm.

Future work will be oriented to the use of a more

refined appearance model, incorporating probabilistic

elements, in order to improve the detection accuracy

for very noisy images and for the case in which the

pupil is largely occluded by the eyelid.

REFERENCES

Benletaief, N., Benazza-Benyahia, A., and Derrode, S.

(2010). Pupil localization and tracking for video-

based iris biometrics. In 10th Int. Conf. on Infor-

mation Science, Signal Processing and their Applica-

tions.

Boumbarov, O., Panev, S., Sokolov, S., and Kanchev, V.

(2009). IR based pupil tracking using optimized particle filter. In IEEE International Workshop on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications.

Brunelli, R. (2009). Template Matching Techniques in Com-

puter Vision: Theory and Practice. Wiley.

Fraz, M., Remagnino, P., Hoppe, A., Uyyanonvara, B.,

Rudnicka, A., Owen, C., and Barman, S. (2012).

Blood vessel segmentation methodologies in retinal

images - a survey. Computer Methods and Programs

in Biomedicine, 108(1):407–433.

Kinsman, T. B. and Pelz, J. B. (2011). Towards a subject-

independent adaptive pupil tracker for automatic eye

tracking calibration using a mixture model. In IVMSP

Workshop: Perception and Visual Signal Analysis.

Kocejko, T., Bujnowski, A., and Wtorek, J. (2008). Eye

mouse for disabled. In Conf. on Human System Inter-

actions.

Kolodko, J., Suzuki, S., and Harashima, F. (2005). Eye-gaze

tracking: An approach to pupil tracking targeted to

FPGAs. In Int. Conf. on Intelligent Robots and Systems.

Krishnamoorthy, R. and Indradevi, D. (2010). New snake

model for pupil localization using orthogonal polyno-

mials transform. In Int. Conf. on Machine Vision.

Mohammadi, M. R. and Raie, A. (2011). A novel tech-

nique for pupil center localization based on projective

geometry. In 7th Iranian Machine Vision and Image

Processing.

Percannella, G., Soda, P., and Vento, M. (2011). Mitotic

HEp-2 cells recognition under class skew. Lecture

Notes in Computer Science, 6979:353–362.

Pratt, W. K. (2007). Digital Image Processing. John Wiley

& Sons.

S. Kim, B. H. and Lee, M. (2011). Gaze tracking based on

pupil estimation using multilayer perception. In Pro-

ceedings of International Joint Conference on Neural

Networks.

Yan, B., Zhang, X., and Gao, L. (2009). Improvement on

pupil positioning algorithm in eye tracking technique.

In International Conference on Information Engineer-

ing and Computer Science.
