Guiding Techniques for Collaborative Exploration in Multi-scale Shared Virtual Environments

Thi Thuong Huyen Nguyen¹, Thierry Duval² and Cédric Fleury³

¹ INRIA Rennes Bretagne-Atlantique, IRISA, UMR CNRS 6074, 35042 Rennes, France

² Université de Rennes 1, UEB, IRISA, UMR CNRS 6074, 35042 Rennes, France

³ INSA de Rennes, UEB, IRISA, UMR CNRS 6074, 35042 Rennes, France

Keywords: Virtual Reality, 3D Navigation, Collaborative Virtual Environments.

Abstract: Exploration of large-scale 3D Virtual Environments (VEs) is often difficult because of a lack of familiarity with complex virtual worlds, a lack of spatial information that can be offered to users and a lack of sensory (visual, auditory, locomotive) details compared to the exploration of real environments. To address this problem, we present a set of metaphors that assist users in collaborative navigation when they perform common exploration tasks in shared collaborative virtual environments. Our proposal consists of three guiding techniques in the form of navigation aids that enable one or several users (called helping users) to help one main user (called the exploring user) to explore the VE efficiently. These three techniques consist of drawing directional arrows, lighting up the path to follow, and orienting a compass to show a direction to the exploring user. All three techniques are generic, so they can be used for any kind of 3D VE, and they do not affect the main structure of the VE, so its integrity is guaranteed. To compare the efficiency of these three guiding techniques, we conducted an experimental study of a collaborative task whose aim was to find hidden target objects in a complex and multi-scale shared 3D VE. Our results show that although the directional arrows and the compass outperformed the light source for the navigation task, all three techniques are appropriate for guiding a user in complex 3D VEs.

1 INTRODUCTION

Navigation is a fundamental and important task for all

VE applications as it is in the real world, even if it is

not the main objective of a user in a VE (Burigat and

Chittaro, 2007). Navigation includes two main tasks:

travel and wayfinding. Travel tasks enable the user

to control the position and orientation of his view-

point (Darken and Peterson, 2001; Bowman et al.,

2004). Wayfinding tasks enable the user to build a

cognitive map in which he can determine where he is,

where everything else is and how to get to particular

objects or places (Jul and Furnas, 1997; Darken and

Peterson, 2001).

In the literature, many different techniques have

been proposed for travel in VEs (Zanbaka et al., 2004;

Suma et al., 2010). By evaluating their effect on

cognition, they suggest that for applications where

problem solving is important, or where opportunity

to train is minimal, then having a large tracked space,

in which the user can physically walk around the vir-

tual environment, provides benefits over common vir-

tual travel techniques (Zanbaka et al., 2004). Indeed,

physical walking is the most natural technique that

supports intuitive travel and it can help the user to

have more spare cognitive capacity to process and

encode stimuli (Suma et al., 2010). However, the

size of a virtual environment is usually larger than

the amount of available walking space, even with big

CAVE-like systems. As a result, alternative travel

techniques have been developed to overcome this lim-

itation such as walking-in-place, devices simulating

walking, gaze-directed steering, pointing, or torso-directed steering. In the context of this paper, to get an efficient and simple way of traveling and to improve the sense of presence in the VE, we combine two techniques: physical walking, which gives the exploring user travel that is as intuitive as possible in a big CAVE-like system with head tracking for position and orientation, and virtual travel, which controls the exploring user’s position in the VE through a flystick device.

Wayfinding tasks rely on the exploring user’s cognitive map because he must find his way using this map. If he lacks accurate spatial knowledge about the environment, navigation performance is reduced (Elmqvist et al., 2007). In large-scale VEs, this problem becomes more serious. In addition, as with navigation in a real environment, the exploring user has to navigate the VE many times before he can build a complete cognitive map of this environment, and he may not always want to spend so much effort and time on this task (Burigat and Chittaro, 2007). To deal with these problems, many solutions have been proposed, such as navigation aids and guidelines that help the user to explore and gain spatial knowledge about the VE, e.g., (Vinson, 1999; Chittaro and Burigat, 2004). Nevertheless, in 3D immersive environments, it is also difficult to give additional navigation aids without interfering with the immersion of the exploring user.

Nguyen T., Duval T. and Fleury C.

Guiding Techniques for Collaborative Exploration in Multi-scale Shared Virtual Environments.

DOI: 10.5220/0004290403270336

In Proceedings of the International Conference on Computer Graphics Theory and Applications and International Conference on Information Visualization Theory and Applications (GRAPP-2013), pages 327-336

ISBN: 978-989-8565-46-4

Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

Although collaborative exploration of complex

and large-scale VEs is not usually considered the

main task to achieve in a collaborative VE, the

wayfinding time of the exploring user can be consid-

erably reduced by having the assistance from helping

users who can have a global and complete view of the

VE such as a bird’s eye view. By proposing and eval-

uating new metaphors dedicated to 3D collaborative

interactions, including collaborative exploration, the

collaboration between distant users who are sharing a

virtual environment can be improved.

In order to facilitate the collaboration between the

exploring user and the helping users, even when they

are on distant sites, we propose a set of three guiding

techniques in the form of navigation aids (drawing di-

rectional arrows, lighting up the path to follow, and

orienting a compass to show the direction) used by

the helping users to guide the exploring user to target

places. We want to provide some guiding techniques

that should be simple, intuitive, efficient and easy to

use. In addition, we do not use verbal or textual communication between users because users who work together remotely often speak different languages, which may cause misunderstanding or delay in collaboration. We also want to build general

guiding techniques that do not require developers to

create specific maps for each new 3D VE, to modify

system or interface for the new VE model, or to add

many objects such as guidelines into it. By satisfying

these conditions, these techniques can be integrated

in many kinds of 3D complex, large-scale VEs while

the integrity of these environments is ensured.

Therefore, collaborative exploration can be used in different applications: in exploring visualizations of scientific data to find points of interest; in exploring complex large-scale environments for which building a map or defining landmarks takes too much time; or in exploring unstable environments with so many dynamic elements that it is difficult to build a representative map at every moment, such as training simulators for firefighters or soldiers (Backlund et al., 2009).

Figure 1: The 3D virtual building from the bird’s eye view of the helping user.

To evaluate our propositions, we conducted a

study to compare the three guiding techniques in a

collaborative application that aimed at finding hidden

target objects in a large and complex virtual building

(see Figure 1). Without help from another user, the

exploring user could not easily find these target ob-

jects in a short time.

This paper is structured as follows: section 2

presents a state of the art about navigation aids and

collaborative navigation for VEs. Section 3 presents

the three considered guiding techniques used to help

users to explore VEs. Section 4 describes the context

of our experimental study and its results while sec-

tion 5 discusses these results. Finally, section 6 con-

cludes and section 7 discusses possible future work.

2 RELATED WORK

2.1 Navigation Aids in Virtual Environments

The development of different forms of navigation aids

aims to enable the exploring user of a virtual environ-

ment to find his way to target objects or places with-

out previous training. In order to overcome wayfind-

ing difficulties in VEs, two principal approaches have

been considered: designing VEs to facilitate wayfind-

ing behavior, and proposing wayfinding aids.

The design of VEs often draws on the environmental design principles that urban architects apply in the real world. Darken and Sibert (Darken and Sibert, 1996) suggest three organizational principles to provide a structure by which an observer can organize a VE into

a spatial hierarchy capable of supporting wayfinding

tasks: dividing a large world into distinct small parts,

organizing the small parts under logical spatial order-

ing, and providing frequent directional cues for orien-

tation. When these principles are applied to structured

and architectural environments (e.g., urban landscape,


buildings), they make it easier for users to construct

cognitive maps efficiently (Vinson, 1999; Darken and

Peterson, 2001). However, in other applications, such

as scientific visualization applications (Yang and Ol-

son, 2002), or in other kinds of environment, such as

open ocean environments or forests, it is difficult but

still necessary to organize objects in the environment

in an understandable way and to build semantic con-

nections between them.

Many kinds of wayfinding aids have been proposed. The map is the most useful and “classic” wayfinding aid. By using two kinds of map (i.e., an egocentric map with “forward-up” orientation and a geocentric map with “north-up” orientation (Darken and Peterson, 2001)), users can access a large amount of information about the environment. However, the map

scaling problem of a very large VE and the align-

ment with this environment can cause high cogni-

tive load for users (Bowman et al., 2004). Environ-

ment maps can be found as 2D or 3D maps (Chittaro

and Venkataraman, 2001). The Worlds-In-Miniature

(WIM) metaphor is a technique that augments an im-

mersive display with a hand-held miniature copy of

the virtual environment just like a 3D map (Stoakley

et al., 1995). It is possible to navigate directly on this

WIM map by using it to determine where to go in

the VE. Nevertheless, because the environment cannot be seen during this interaction, it limits the spatial

knowledge that users can gain for navigation.

Landmarks are also a very powerful cue to rec-

ognize a position in the environment and to acquire

spatial knowledge. Landmarks are usually statically

implemented a priori in the environment but they can

also be used as tools. For example, Kim et al. (Kim

et al., 2005) propose a topic map that contains a se-

mantic link map between landmarks, which are fa-

mous regional points in the VE. This topic map can

be applied to the navigation of the VE as an ontol-

ogy of subject knowledge, which represents subjects

of the environment (e.g., buildings, its metadata, land-

marks), and spatial knowledge, which represents the

environment structure. However, it is also limited by

the subject and spatial knowledge that designers can

describe about the environment in the ontology. The

more complex and abstract the environment is, the

more difficult the description of the ontology is.

Additionally, there is another way for users to dis-

cover the environment progressively by retracing their

steps (Ruddle, 2005). This is called the trail technique, and it describes the path that users have previously followed.

Ruddle notes that trails are useful for first time navi-

gation in a VE, but that trail pollution impedes their

utility during subsequent navigation. Accordingly,

this approach is only appropriate for a repeated ex-

ploration and search task for a given set of locations.

Furthermore, a set of direction indications as

wayfinding aids has also been developed in the litera-

ture for VEs: compass (Darken and Peterson, 2001),

directional arrows (Bacim et al., 2012; Nguyen et al.,

2012), virtual sun (Darken and Peterson, 2001). They

are familiar tools for orientation-pointing in VE be-

cause of their intuitiveness and efficiency.

2.2 Collaborative Navigation

As mentioned above, collaboration can provide a

powerful technique to support the exploring user to

deal with lack of spatial knowledge in complex and

large-scale VEs. Although Collaborative Virtual En-

vironments (CVEs) have been developed to provide

a framework of information sharing and communi-

cation (Macedonia et al., 1994; Dumas et al., 1999;

Churchill et al., 2001), collaborative navigation in such environments has not been widely explored, and only limited attention has been devoted to evaluating its efficiency for navigation in VEs.

Navigation in a CVE must support communication between users because it is vital for each user to understand what the others are referring to. Many developers have used verbal conversation as the means of communication to accomplish a given common task (Hindmarsh et al., 1998; Yang and Olson, 2002). However, if the users are located at distinct physical sites, even in different countries, language difficulties become an obstacle to collaboration toward a common goal. So the communication technique for collaboration, especially for navigation in CVEs, should

be simple, intuitive, efficient and non-verbal. Based

upon these points, our primary motive is to develop

and to evaluate guiding techniques enabling helping

users to guide an exploring user toward target places

in complex large-scale CVEs.

We share this objective with the organizers of the 3DUI Contest 2012 (http://conferences.computer.org/3dui/3dui2012/) and its participants. As navigation aids, some techniques have been proposed such as “anchors” and a string of blue arrows that connects them, or directional arrows (Bacim et al., 2012; Nguyen et al., 2012), point light sources (Cabral et al., 2012) or beacons (Notelaers et al., 2012; Nguyen et al., 2012; Wang et al., 2012). Although they are powerful navigation aids, it is usually difficult to apply them to navigation in many kinds of environment. The environment of (Bacim et al., 2012) is not flexible: it is difficult to modify the helping user’s interface because his view and navigation aids are specified once and for all. If the VE changes, the interface of the helping user cannot be used any more and a new one has to be designed. The point-to-point guidance proposed in (Nguyen et al., 2012), which remotely controls the position of the exploring user, is not very appropriate as a navigation aid because it can disorient him and teaches him nothing about the spatial layout of the environment. In addition, there is little feedback from the exploring user to let the helping user know about the exploring user’s current situation.

Figure 2: Directional arrows in the exploring user’s and the helping user’s views.

Figure 3: Light source in the exploring user’s and the helping user’s views.

To the best of our knowledge, there is no complete and evaluated solution to improve the performance, the flexibility, and the ease of use of collaborative navigation in such complex, large-scale CVEs.

3 GUIDING TECHNIQUES

In this paper, we propose to evaluate and compare

three guiding techniques in the form of navigation

aids (arrows, light source and compass) that would

enable one or several helping user(s) to guide an ex-

ploring user who is traveling in an unfamiliar 3D VE

efficiently. The task of the exploring user would be to find target objects or places without any spatial knowledge of this 3D VE.

3.1 Arrows

The first guiding technique is based on directional

arrows (see Figure 2) that are drawn by the helping

users to indicate the direction or the path that the ex-

ploring user has to follow. The helping users can draw

as many directional arrows of different sizes as they

want. However, too many directional arrows within the environment, or arrows that are too big, may affect the immersion of the exploring user. As a result, the helping users have to determine when, how and where to put directional arrows to guide the exploring user efficiently. These arrows disappear after a while, so the helping users are advised to draw directional arrows within the exploring user’s visibility zone. A dedicated 3D cursor for drawing in the helping users’ view improves ease of use and makes it possible to draw arrows at any height and in any 3D direction, which facilitates the exploration of multi-floor virtual buildings.

To draw these arrows, a helping user simply has

to make a kind of 3D drag’n drop gesture. First he

must place the 3D cursor at a position that will be the

origin of the arrow, then he has to activate the cursor

to create the arrow, and the next moves of the 3D cur-

sor will change the length of the arrow, stretching the

arrow between the origin of the arrow and the current

position of the 3D cursor. When he estimates that the

arrow has a good shape, he can signify to the 3D cur-

sor that the stretching of the arrow is finished. This

kind of gesture can be driven by any device that can

provide a 3D position and can send events to the 3D

cursor, for example an ART Flystick or simply a 2D

mouse (with the wheel providing depth values).
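The drag'n drop gesture described above can be sketched as a small state machine. This is only an illustrative sketch, not the implementation used in the paper: the names `Arrow`, `ArrowCursor` and `mouse_to_cursor`, as well as the `depth_per_step` parameter that maps mouse-wheel steps to depth, are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Arrow:
    origin: Vec3
    tip: Vec3

    def length(self) -> float:
        # Euclidean distance between the origin and the tip of the arrow.
        return sum((t - o) ** 2 for o, t in zip(self.origin, self.tip)) ** 0.5


@dataclass
class ArrowCursor:
    """Hypothetical 3D cursor that stretches an arrow between two positions."""
    position: Vec3 = (0.0, 0.0, 0.0)
    arrows: List[Arrow] = field(default_factory=list)
    _current: Optional[Arrow] = None

    def move(self, position: Vec3) -> None:
        # Every cursor move stretches the arrow being drawn, if any.
        self.position = position
        if self._current is not None:
            self._current.tip = position

    def activate(self) -> None:
        # The current cursor position becomes the origin of a new arrow.
        self._current = Arrow(origin=self.position, tip=self.position)

    def release(self) -> Optional[Arrow]:
        # End of the gesture: keep the arrow only if it has a non-zero length.
        arrow, self._current = self._current, None
        if arrow is not None and arrow.length() > 0.0:
            self.arrows.append(arrow)
            return arrow
        return None


def mouse_to_cursor(x: float, y: float, wheel_steps: int,
                    depth_per_step: float = 0.25) -> Vec3:
    # Assumption: a 2D mouse supplies x and y, and the wheel supplies depth.
    return (x, y, wheel_steps * depth_per_step)
```

Any device that reports a 3D position and sends activation events could drive `move`, `activate` and `release` in the same way.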

From a technical point of view, this 3D cursor able

to draw arrows can be brought to a CVE by a helping

user when he joins the CVE, so there is nothing to

change in the main structure of this CVE and its in-

tegrity is guaranteed.

3.2 Light Source

The second guiding technique is based on a light

source used to light up a path to each target object

(see Figure 3). The exploring user cannot see the light

source itself but only its effect on objects within the

environment. This technique thus depends a lot on the

rendering and illumination quality of the exploring

user’s immersive view. The light source is attached

to a support object that can only be seen by a helping

user. This helping user controls the light source by moving its support with a 3D cursor and thereby shows the exploring user the path he must follow.

It is important to note that when the helping user is using the light source to guide, the existing light sources of the building are turned off and the exploring user has a virtual lamp attached to his head to light up the environment around him. There are then just two light sources: one attached to the exploring user’s head and one used to guide him.

Here again, from a technical point of view, this 3D cursor, the light source attached to the head of the exploring user, and the light source used to guide him can be brought to the CVE by a helping user when he joins the CVE, so there are very few things to change in the main structure of the CVE: we just need to be able to turn the lights of the CVE off.

Figure 4: Compass in the exploring user’s and the helping user’s views.

3.3 Compass

The third guiding technique is based on a compass, a typical tool for navigating in VEs, attached to the position of the exploring user with an offset (see Figure 4). The compass does not point directly to the target object location, but to the location of another virtual object that plays the role of the “north” of this compass and that cannot be seen by the exploring user. A helping user can control this “north” by moving it with a 3D cursor, to show the exploring user the path he must follow. So by

moving the “north” of the compass, a helping user

can guide the exploring user to pass across hallways,

rooms, doors, etc. before reaching the target position.

It is thus a simple and powerful tool to guide the ex-

ploring user in any VE.
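As an illustration of how such a compass could be oriented, the sketch below computes the angle of the needle relative to the exploring user's forward direction. It is a minimal sketch under stated assumptions: positions are reduced to 2D floor-plane coordinates, yaw is measured counterclockwise from the +x axis, and the function name `compass_needle_angle` is hypothetical.

```python
import math


def compass_needle_angle(user_pos, user_yaw, north_pos):
    """Signed angle (radians, wrapped to [-pi, pi]) from the user's forward
    direction to the direction of the movable "north" object.

    Assumptions: 2D floor-plane coordinates, yaw measured counterclockwise
    from the +x axis, so a positive result means "turn counterclockwise".
    """
    dx = north_pos[0] - user_pos[0]
    dy = north_pos[1] - user_pos[1]
    bearing = math.atan2(dy, dx)   # absolute direction of the "north" object
    diff = bearing - user_yaw      # direction relative to where the user faces
    # Wrap the difference so the needle always takes the shortest rotation.
    return math.atan2(math.sin(diff), math.cos(diff))
```

When the helping user moves the "north" object, only `north_pos` changes, so the needle can be updated every frame without touching the rest of the scene.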

Here again, from a technical point of view, this

3D cursor, the compass attached to the position of the

exploring user, and the virtual object serving as the

“north” of the compass can be brought to the CVE

by a helping user when he joins the CVE, so, as for

the arrow-based guiding technique, there is nothing to

change in the main structure of the CVE.

To place the compass at the best possible position

relative to the exploring user, it is possible to allow

the exploring user to adjust its offset, simply by mov-

ing the compass through a 3D interaction. However,

this possibility was not offered to our exploring users

during the experiment that is presented in this paper.

3.4 The Guiding Viewpoints

To be able to use these three guiding techniques in an efficient way, we built two principal kinds of views for our helping user: a bird’s eye view (see Figure 1) and a first-person perspective obtained by “looking over the exploring user’s shoulder”, just like a camera attached to the shoulder of the exploring user (see Figure 5). The bird’s eye view can be considered as a 3D map or a World-In-Miniature (Stoakley et al., 1995). These views were obtained by choosing particular points of view: the “looking over the exploring user’s shoulder” view was attached to the point of view of the exploring user, and the bird’s eye view was obtained by increasing the helping user’s scale. Both views were built without any changes to the main structure of the VE, with the same concerns: to guarantee the integrity of the VE and to remain usable for any kind of VE.

Figure 5: A “looking over the exploring user’s shoulder” view of the helping user.

4 EVALUATION

4.1 Context

The Virtual Environment

In order to test these three different navigation aids, we have built a complex, large virtual building (about 2500 m²) with hallways and many rooms of different

sizes filled with furniture objects (e.g., tables, chairs,

shelves). These objects were repeatedly used to fill

these rooms. It means that each object itself could not

be taken as a landmark, and only the way that each

room was arranged made it distinct from the others

in the building. Besides, the position of objects did

not change during the experiment. We used this en-

vironment to conduct all the studies described in this

paper, with different views from several positions in

the VE for a helping user to observe all activities of

an exploring user in the immersive system.

The Exploring User

The exploring user was immersed in the VE with a first-person perspective (see Figure 6). He controlled a flystick to travel in the virtual world and, as his head was tracked in a CAVE-like system, he was able to move physically to observe objects in the environment more carefully. He was also able to move forward or backward, and to turn right or left, by using the joystick of the flystick. Joystick movement followed the direction in which he was looking. He used specific buttons of the flystick to pick up target objects or to return to the starting position.

Figure 6: First-person perspective of the exploring user in a CAVE-like system.
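The gaze-directed travel described above, where joystick movement follows the viewing direction, can be sketched as follows. This is a simplified illustration, not the system's actual code: it covers only forward and backward motion on the floor plane, and the function name `travel_step` and the parameters `speed` and `dt` are assumptions.

```python
import math


def travel_step(position, head_yaw, joystick_forward, speed, dt):
    """Advance a 2D floor-plane position along the tracked head direction.

    Assumptions: head_yaw is in radians, measured counterclockwise from the
    +x axis; joystick_forward lies in [-1, 1], negative meaning backward.
    """
    amount = max(-1.0, min(1.0, joystick_forward))  # clamp the joystick input
    dx = math.cos(head_yaw) * amount * speed * dt
    dy = math.sin(head_yaw) * amount * speed * dt
    return (position[0] + dx, position[1] + dy)
```

Turning left or right with the joystick would add a rotation of the virtual viewpoint, which this sketch leaves out.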

The Helping User

Our system would have made it possible for several

helping users to collaborate at the same time with the

exploring user. However, in order to simplify the eval-

uation, there was only one helping user during this ex-

periment. Moreover, this helping user was the same

for all the exploring users of the experiment: he had

a good knowledge of the order of appearance and the positions of the targets, and he was in charge of providing

the navigation aids always in the same way for each

exploring user. He was the designer of the guiding

techniques and was strongly involved in their imple-

mentation, their deployment and their testing. So his

performance was stable when guiding each exploring

user, as he had already improved his skills during the

tuning of the experimental setup.

For interaction, the helping user had a 3D cursor

to manipulate objects within the VE, to add naviga-

tion aids such as directional arrows, or to control the

light source or the “north” of the compass. The help-

ing user was also able to control the position and ori-

entation of his own viewpoint as well as to change his

own scale in the view. It means that he was able to be-

come bigger to have an overall view of the building,

or smaller to take a look inside each room to locate

the target (but he was not allowed to pick up the target by himself). He was also able to see where the exploring user was at every moment. The interface of the helping user was purely 3D, although in our experiment he was using a desktop environment. Nevertheless, it would be possible and perfectly adequate for the helping user to use an immersive display system.

In order to locate the next target that the explor-

ing user had to find, the helping user was allowed to

move a 3D clipping plane to make a 3D scan of the

VE. This scanning tool was also brought into the VE

by the helping user. It was generic and, like the three guiding techniques that are evaluated in this paper, it guaranteed the integrity of the VE.

Explicit Communications between Users

The helping user was able to send signals (in our ex-

periment, they were color signals) to the exploring

user to inform him about his situation. When the help-

ing user was searching the target object on the map

and the exploring user had to wait until the helping

user found it, the helping user could send an orange

signal. When the exploring user was entering the right

room or was following the right way, the helping user

could send a green signal. Last, when the exploring

user was taking the wrong way, the helping user could

send a red signal. These signals could become a com-

munication channel between the users performing a

collaborative task.
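The three color signals amount to a very small protocol. The sketch below shows one possible encoding; the names `Signal` and `choose_signal` and the two boolean inputs are hypothetical and only mirror the situations described in the text.

```python
from enum import Enum


class Signal(Enum):
    ORANGE = "wait: the helping user is still locating the target"
    GREEN = "right way or right room"
    RED = "wrong way"


def choose_signal(helper_searching: bool, on_right_way: bool) -> Signal:
    # While the helping user is still searching, the exploring user must wait.
    if helper_searching:
        return Signal.ORANGE
    # Otherwise the signal only reflects whether the current path is correct.
    return Signal.GREEN if on_right_way else Signal.RED
```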

4.2 Task

Task to Achieve

As mentioned above, each exploring user of this experiment had to find 12 different positions of target objects represented by small glowing cubes. When the exploring user picked up the target object, the target disappeared and a color signal appeared to tell both users that the target had been reached and that the system had stopped measuring time. Then the exploring user was invited to go back to the starting position for the search of the next target. By pressing a dedicated button of his flystick, he was teleported back to this starting position. When both the exploring user and the helping user were ready, the target object reappeared at another position in the environment. During the experiment, each guiding technique was used 4 times in succession to find 4 target positions, for a total of 12 different positions over the three guiding techniques. The 12 targets always appeared in the same order, and the order of the guiding techniques (A: Arrows, L: Light, C: Compass) changed after each user, following one of these 6 configurations: A-L-C, A-C-L, L-A-C, L-C-A, C-A-L, C-L-A. We therefore needed a number of participants that was a multiple of 6 so that each of these 6 configurations was used the same number of times.
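The counterbalancing scheme above can be made concrete with a short sketch. The six orders come from the text; assigning them by simple rotation over the participant index is an assumption, since the paper only states that the order changed after each user, and the function names are hypothetical.

```python
from collections import Counter
from itertools import permutations

TECHNIQUES = ("A", "L", "C")  # Arrows, Light, Compass
ORDERS = ["-".join(p) for p in permutations(TECHNIQUES)]


def order_for(participant_index: int) -> str:
    # Assumption: configurations are assigned by rotating through the list.
    return ORDERS[participant_index % len(ORDERS)]


def trial_plan(participant_index: int, targets_per_technique: int = 4):
    # Targets 1..12 keep a fixed order; only the technique blocks are permuted.
    order = order_for(participant_index).split("-")
    total = targets_per_technique * len(order)
    return [(target, order[(target - 1) // targets_per_technique])
            for target in range(1, total + 1)]


def usage_counts(participants: int) -> Counter:
    # How often each configuration is used for a given number of participants.
    return Counter(order_for(i) for i in range(participants))
```

With the 24 exploring users reported in Section 4.4, `usage_counts(24)` assigns each configuration to the same number of participants, which is the reason a multiple of 6 was required.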


Measures

In order to evaluate how the three guiding techniques

influenced the efficiency of navigation, we did not count the time it took the helping user to find where the target position was on the map. We just

considered the time it took the exploring user to com-

plete the target search task. It included two separate

but continuous tasks: a navigation task and a search

task. The navigation task was based on the naviga-

tion aids added in the environment to find a path from

the starting position to the target position. The start-

ing position was always the same for all the target ob-

jects and for all the participants of the experiment. So,

for each target, the exploring user moved always from

the same starting point and the system measured the

time taken to reach the target object. This time was thus split into the navigation time and the search

time. The navigation time was the time taken to nav-

igate from the starting position to the area of 2.5 me-

ters around the target and the search time was the time

to search and pick up the target in this area. We used

this approach to calculate the time because sometimes

the target object was well hidden in the environment,

so the exploring user was not able to find it at first

glance, and we wanted to make a clear difference be-

tween the time taken for the navigation (coming not

farther than 2.5 meters from the target) and the time

taken for the precise searching and finding of the tar-

get. Once the exploring user had entered this zone,

the search time was recorded. However, the naviga-

tion time was specifically taken into consideration be-

cause it was directly representing the performance of

navigation aids. The search time was also recorded

in order to obtain preliminary data for further stud-

ies about efficient and appropriate metaphors for the

searching task.
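The split between navigation time and search time can be sketched from a log of timestamped positions. This is an illustrative reconstruction, not the measurement code of the experiment: the log format, the function name `split_times`, and the choice of the first entry into the 2.5-meter zone as the boundary are assumptions.

```python
import math

ZONE_RADIUS_M = 2.5  # radius of the zone around the target, from the text


def split_times(samples, target, radius=ZONE_RADIUS_M):
    """Return (navigation_time, search_time) in seconds.

    samples: list of (time_in_seconds, (x, y, z)) from departure to pick-up,
    where the last sample is the moment the target is picked up.
    Assumption: the search phase starts at the first sample inside the zone.
    """
    start_time = samples[0][0]
    end_time = samples[-1][0]
    for time, position in samples:
        if math.dist(position, target) <= radius:
            return time - start_time, end_time - time
    raise ValueError("the exploring user never entered the target zone")
```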

4.3 Experimental Setup

The hardware setup of the experiment consisted of a

big CAVE-like system in the shape of an “L” whose

size was 9.60 meters long, 3.10 meters high and 2.88

meters deep. This visual system immersed exploring

users in a high-quality visual world and they were us-

ing a pair of active shutter glasses. We also used a

tracking system to locate the position and the orienta-

tion of the exploring user’s head. To enable exploring

users to manipulate objects in such an environment,

we used a tracked flystick as an input device. The

helping user worked with a desktop workstation and

used a mouse to drive a 3D cursor.

The software setup used for the experiment in-

cluded Java to write the CVE, Java3D to develop the

helping user’s views on desktop, jReality to develop

the immersive view of the exploring user, and Blender

to model the virtual environment.

4.4 Participants

In this study, the designer of the virtual environment

played the role of the helping user. Additionally, there were 18 male and 6 female subjects who served as exploring users. Their ages ranged from 21 to 61, with an average of 30.5. Thirteen of them (8 males and 5 females)

had no experience at all in immersive navigation in

3D virtual environments.

4.5 Procedure

Before beginning the training phase of the experiment, each participant was verbally instructed about the experiment procedure, the virtual environment and the control devices. The goal of the experiment was explained to him: to search for a target object at different positions by following the navigation aids added to the environment. He was also instructed to search carefully once he reached the narrow zone around the target, because the target was not always easy to find at first glance.

In the training phase, the participant was first invited to navigate freely in the virtual building. Once he felt at ease with the environment and the control devices, the training task began. The participant was given a simple task to complete: he was asked to find his way from a starting point (the entrance of the building) to some target positions with our three different guiding techniques.

In the evaluation phase, the participant was asked to search for 12 target positions in the environment, relying on the three different guiding techniques.

In the final phase, the participant filled out a short subjective questionnaire concerning his experience of navigating in immersive virtual environments, his opinion about the guiding in general, and his ratings of each guiding technique in terms of perturbation, stress, fatigue, intuitiveness, and efficiency.

4.6 Results

Navigation Performance

We focused on the efficiency of the three different

guiding techniques when we applied them in the nav-

igation task. So the navigation time was consid-

ered as an important measure in this statistical anal-

ysis. P values of average navigation time of the three

techniques were calculated using repeated measures

GuidingTechniquesforCollaborativeExplorationinMulti-scaleSharedVirtualEnvironments

333

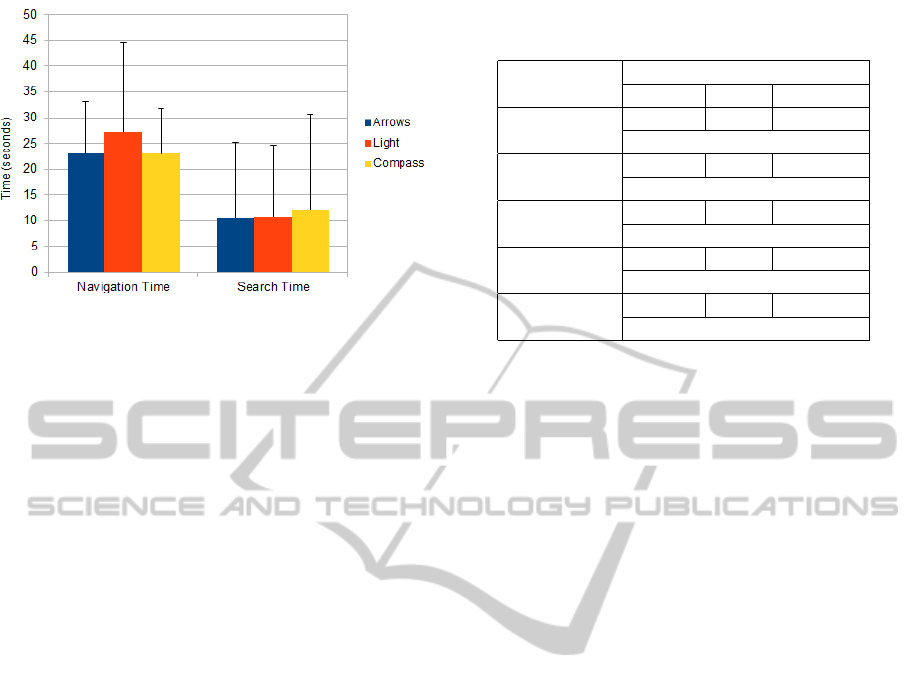

Figure 7: Means and standard deviations of navigation and

search time (in seconds) for three guiding techniques.

ANOVA and post hoc multiple pairwise comparison

(Tukey-Kramer post hoc analysis).

The average navigation time, the average search time and their standard deviations are presented in Figure 7. For the recorded navigation time, the results revealed a statistically significant difference among the three navigation aids (F(2,285) = 3.67, p = 0.026). In addition, the Tukey-Kramer post hoc analysis indicated that navigation time in the Light condition (mean = 27.26) was significantly higher than navigation time in the Arrows condition (mean = 22.99) (p = 0.05) and in the Compass condition (mean = 22.97) (p = 0.05), while there was no significant difference between the Arrows and Compass conditions (p = 0.99).
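To make the reported F statistic easier to read, the following is a minimal sketch of how an F value and its degrees of freedom are obtained in a plain one-way layout. It is not the authors' analysis script: the function name `one_way_anova` and the toy timings are hypothetical, and the sketch treats every trial as an independent observation rather than fitting a full repeated-measures model. Under the assumption that each of the 24 participants contributes 12 trials, the degrees of freedom come out as (2, 285), which is consistent with the values reported above.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of samples, one per condition."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    group_means = [mean(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total

    # Variation explained by the condition (between groups)
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Residual variation inside each condition (within groups)
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Degrees of freedom, assuming 24 participants x 12 targets = 288 trials
# spread over 3 guiding techniques (assumption, see the lead-in).
participants, targets, techniques = 24, 12, 3
n_trials = participants * targets
print(techniques - 1, n_trials - techniques)

# Hypothetical toy timings in seconds, NOT the experimental data.
toy = [[22.0, 24.0, 23.0],   # "arrows"
       [27.0, 29.0, 28.0],   # "light"
       [22.0, 23.0, 24.0]]   # "compass"
f_stat, df_b, df_w = one_way_anova(toy)
print(f_stat, df_b, df_w)
```

A larger F means that the differences between condition means are large relative to the spread inside each condition; the p-value is then read from the F distribution with these two degrees of freedom.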

However, based on the preliminary results for search time, we did not find any significant effect of the guiding techniques on the recorded search time, either across the three guiding techniques (F(2,285) = 0.29, p = 0.74) or for each condition. These results indicated that the effect of the guiding techniques on search time was not statistically significant, but this must be confirmed by further studies.

Subjective Estimation

Each user was asked to fill in a questionnaire with subjective ratings (using a 7-point Likert scale) for the three techniques according to the following criteria: perturbation, stress, fatigue, intuitiveness, and efficiency. A Friedman test was performed on the questionnaire ratings, and the p-values are shown in Table 1. Dunn post hoc analysis showed that the light was rated as significantly more perturbing, more tiring, less intuitive and less efficient than the arrows and the compass guiding techniques. Moreover, no significant differences were found between the arrows and the compass guiding techniques on these five subjective ratings. Regarding the subjects’ general preference, we found that most exploring users preferred to be guided by arrows or by the compass.

Table 1: Average scores and p-values for five qualitative measures with significant differences shown in bold.

| Question | Arrows | Light | Compass | p-value |
|---|---|---|---|---|
| Perturbation | 6.17 | 4.58 | 5.67 | 0.00054 |
| Stress | 6.29 | 5.46 | 6.54 | 0.01063 |
| Fatigue | 6.08 | 4.87 | 6.41 | 0.00011 |
| Intuitiveness | 6.17 | 4.54 | 6.20 | 0.00010 |
| Efficiency | 5.87 | 4.46 | 6.16 | 0.00002 |
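As an illustration of the kind of test used on the questionnaire, here is a minimal sketch of the Friedman statistic for ratings given by several subjects to the same set of techniques. The helper names `average_ranks` and `friedman_statistic` and the sample ratings are hypothetical, and the sketch omits the correction for tied ratings that a full analysis of Likert data would normally include.

```python
def average_ranks(values):
    """Rank values from 1 (smallest) upward, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        i = j + 1
    return ranks


def friedman_statistic(ratings):
    """Friedman chi-square (no tie correction).

    ratings: one row per subject, one column per technique.
    """
    n = len(ratings)       # number of subjects
    k = len(ratings[0])    # number of techniques
    rank_sums = [0.0] * k
    for row in ratings:
        for col, rank in enumerate(average_ranks(row)):
            rank_sums[col] += rank
    return (12.0 / (n * k * (k + 1))) * sum(r * r for r in rank_sums) \
        - 3.0 * n * (k + 1)


# Hypothetical ratings (arrows, light, compass) for three subjects,
# NOT the questionnaire data from the experiment.
sample = [[6, 4, 5],
          [7, 5, 6],
          [6, 3, 5]]
q = friedman_statistic(sample)
print(q)
```

The statistic is compared against a chi-square distribution with k - 1 degrees of freedom (here 2) to obtain a p-value; ranking within each subject is what makes the test suitable for ordinal Likert ratings.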

5 DISCUSSION

The results of the navigation performance study showed that the directional arrows and the compass outperformed the light source in the navigation task. The low performance of the light source came from the lack of accuracy of the light effect on the environment. It might also come from the confusion between the guiding light source and the light source that the exploring user carried with him, which arose when he approached the guiding light source. The light source was also too sensitive to elements of the environment, such as the quality of the 3D model or the rendering and illumination quality of the immersive view, as mentioned above. However, we found that the confusion between the guiding light source and the exploring user’s own light source affected the search task rather than the exploration task, because this confusion usually happened in a small space, such as a room where the exploring user was surrounded by many different objects.

There were no significant differences among the three guiding techniques in the search task. This can be explained by the fact that some of the targets were very easy to find (the exploring user was able to see them as soon as he entered the room where the target was hidden) while others were very difficult to find (hidden within some furniture in a room). The final physical approach to the target therefore did not really depend on the navigation aids but rather on the ability of the exploring user to move physically in his surrounding workspace. Further experiments will be needed to better evaluate these guiding techniques for the precise search for a target.

The subjective results supported the results of the navigation performance study in evaluating the efficiency of the arrows and compass aids in collaborative navigation. Most of the participants found them more intuitive, easier to follow, and more efficient at indicating direction than the light source. However, some exploring users found the light source more natural than the other guiding techniques, especially when they were in a big hall or in a long hallway.

Sometimes, in small rooms, the exploring users were confused not only by the light source but also by the compass or the directional arrows, because these aids were occluded by the VE (for example, by walls). For the search task, one exploring user in our experiment found that the compass was a little annoying and confusing when it was near the target because its “north” was unstable. Factors such as the quality of the 3D rendering, the structure of the virtual building, and the size of the navigation aids could therefore have a strong impact on navigation and search performance. We need to take them into consideration to improve the performance of collaborative exploration.
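One geometric reason why a compass “north” can appear unstable near its target is that the pointing angle changes much faster with small user movements when the target is close. The sketch below illustrates this with a compass that simply points from the user toward a target in the horizontal plane. The coordinate convention (x to the side, z forward), the function names and the distances are assumptions made for illustration, not a description of the compass implemented in the experiment.

```python
import math


def compass_heading(user_xz, target_xz):
    """Heading in degrees from the +z axis toward the target, in the horizontal plane."""
    dx = target_xz[0] - user_xz[0]
    dz = target_xz[1] - user_xz[1]
    return math.degrees(math.atan2(dx, dz))


def heading_change(user_xz, target_xz, side_step):
    """Absolute heading change (degrees) after the user steps sideways along x."""
    before = compass_heading(user_xz, target_xz)
    moved = (user_xz[0] + side_step, user_xz[1])
    after = compass_heading(moved, target_xz)
    # Wrap the difference into [-180, 180) before taking its magnitude
    diff = (after - before + 180.0) % 360.0 - 180.0
    return abs(diff)


# Same 0.5 m sideways step, target 10 m away versus 0.5 m away
# (hypothetical distances).
far = heading_change((0.0, 0.0), (0.0, 10.0), 0.5)
near = heading_change((0.0, 0.0), (0.0, 0.5), 0.5)
print(far, near)
```

With these values the same small step turns the needle by a few degrees when the target is far and by tens of degrees when it is close, which is one plausible source of the perceived instability.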

The activity of the helping user could also explain some differences between the guiding techniques. Indeed, to guide an exploring user with directional arrows, the helping user simply had to use about 4 or 5 arrows to draw the direction toward each target. With the compass, he just had to put the support object that controlled the compass “north” at the entrance of the hallway or the room that he wanted the exploring user to enter, and then put it near the target when the exploring user approached it. Guiding was more complicated with the light source because of the confusion between the two light sources. The helping user had to move the light source or make it flicker to get the attention of the exploring user. He also had to choose where to put the light source so that its effect was clearly distinguishable from that of the exploring user’s own light source in the environment.

Our VR framework enables a helping user to use these guiding techniques on many different platforms: he can be immersed in a CAVE-like system with a tracking system or simply sit in front of a desktop computer with a mouse. This makes collaborative exploration more flexible for distant users who have different working conditions.

6 CONCLUSIONS

In this paper we have presented a set of three collaborative guiding techniques (directional arrows, light source and compass) that enable one or several helping users to guide an exploring user in a complex 3D CVE. These collaborative guiding techniques can be used in many kinds of 3D CVEs because they do not modify the structure of the environment. Indeed, all the guiding aids are dynamically provided by the helping users through the creation or the manipulation of a few dedicated 3D objects that the helping users can bring with them when they join the CVE. The helping users can also bring with them a generic 3D clipping plane to make a 3D scan of the VE in order to locate the targets or the places to reach.

An experimental study was conducted to evalu-

ate these three types of guiding techniques for navi-

gation and search in a complex, large-scale building.

The results of our experiment showed that these three

guiding techniques could reduce wasted time in the

wayfinding task because of their simplicity, intuitive-

ness and efficiency in navigation. Additionally, al-

though the directional arrows and the compass outper-

formed the light source for the navigation task, several

exploring users found the light source guiding tech-

nique very natural, and it can probably be combined

with the two other guiding techniques.

7 FUTURE WORK

In the future, these guiding techniques should be improved to overcome some of their limitations, such as the occlusion of the arrows and the compass (by enabling the exploring user to change their size or their position dynamically), the instability of the compass, or the confusion between light sources (by enabling the exploring user or the helping users to dynamically change properties of these light sources such as color, intensity, attenuation, or the visibility of their beacon). It would also be very interesting to study the best way to combine these guiding techniques or to switch dynamically between them in order to optimize the overall guiding for navigation and search of targets.

We will have to conduct further experiments to evaluate the efficiency of these guiding techniques for the precise search for objects, or to propose other appropriate metaphors for this kind of task.

Our work will also be extended by evaluating the ease of use, the simplicity and the efficiency of these guiding techniques from the helping user’s point of view, both when he is immersed in the environment with a 3D interface and when he is not immersed and uses a 2D interface with a 3D cursor. The efficiency of these guiding techniques when provided by the helping users could also be compared with that of the same guiding techniques when generated automatically by a computer.


ACKNOWLEDGEMENTS

We wish to thank Foundation Rennes 1 Progress, In-

novation, Entrepreneurship for its support.


GRAPP 2013 - International Conference on Computer Graphics Theory and Applications