External Cameras and a Mobile Robot for Enhanced Multi-person Tracking

A. A. Mekonnen$^{1,2}$, F. Lerasle$^{1,2}$ and A. Herbulot$^{1,2}$

$^1$ CNRS, LAAS, 7 avenue du Colonel Roche, F-31400 Toulouse, France

$^2$ Univ de Toulouse, UPS, LAAS, F-31400 Toulouse, France

Keywords:

Multi-target Tracking, Multi-sensor Fusion, Automated Human Detection, Cooperative Perception Systems.

Abstract:

In this paper, we present a cooperative multi-person tracking system between external fixed-view wall mounted

cameras and a mobile robot. The proposed system fuses visual detections from the external cameras and laser

based detections from a mobile robot, in a centralized manner, employing a “tracking-by-detection” approach

within a Particle Filtering scheme. The enhanced multi-person tracker’s ability to track targets in the surveilled

area distinctively is demonstrated through quantitative experiments.

1 INTRODUCTION

Automated multi-person detection and tracking are

indispensable in video-surveillance, robotic and sim-

ilar systems. Unfortunately, automated multi-person

perception is very challenging due to variations in hu-

man appearance. These challenges are further am-

plified in robotic platforms due to mobility, limited

Field-Of-View (FOV) of on-board sensors, and lim-

ited on-board computational resources. Relatively

successful multi-person perception systems have been

reported in classical video-surveillance frameworks

that rely on visual sensors fixed in the environ-

ment (Hu et al., 2004). Even though these systems

benefit from global perception from wall-mounted

cameras, they are still susceptible to occlusions and

dead-spots. To circumvent these shortcomings, we

propose a cooperative multi-person perception system

consisting of a mobile robot and two wall-mounted

fixed-view cameras. This system benefits from the

global perception of the wall-mounted cameras and

additionally, from the mobile platform which pro-

vides local perception, a means for action, and as it

can move around, the ability to cover dead spots and

possibly alleviate occlusions resulting in enhanced

perception capabilities. Similar systems have been

proposed in (Chia et al., 2009) and (Chakravarty

and Jarvis, 2009). Contrary to both works, our pro-

posal fuses cooperative information in a centralized

manner. The proposed system has the ability to

complement local perception with global perception

and vice-versa, enhancing each individual approach

through cooperation. To the best of our knowledge

this cooperative framework has not been addressed in

the literature.

[Figure 1 labels. Robot: ELO touchscreen, RF antennas, 2D SICK laser, FireWire camera on PTU. Fixed setup: laptop, Camera 1 (Flea RGB), Camera 2 (Flea RGB), FireWire hub.]

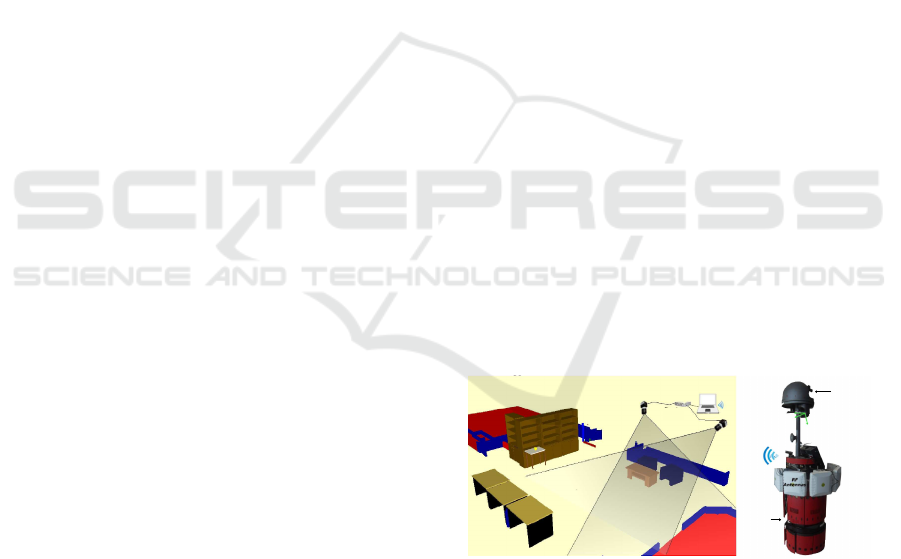

Figure 1: Perceptual platform; static cameras (with rough

positions and fields of view) and Rackham.

This paper is structured as follows: architecture of

the cooperative system is presented in section 2. Sec-

tion 3 describes the different detection modalities that

drive the multi-person tracker (presented in section 4).

Evaluations and results are presented in section 5 fol-

lowed by concluding remarks in section 6.

2 ARCHITECTURE

Our cooperative framework is made up of a mobile

robot and two fixed view wall-mounted RGB flea2

cameras (figure 1). The cameras have a maximum resolution of 640x480 pixels and are connected to a dual-core Intel Centrino laptop via a FireWire cable. The robot, called Rackham, is an iRobot B21r mobile platform. It has various sensors, of which its SICK Laser Range Finder (LRF) is utilized in this work. Communication between the mobile robot and the computer hosting the cameras is accomplished through a Wi-Fi connection.

Mekonnen A., Lerasle F. and Herbulot A. External Cameras and a Mobile Robot for Enhanced Multi-person Tracking. DOI: 10.5220/0004294904110415. In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2013), pages 411-415. ISBN: 978-989-8565-48-8. Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

[Figure 2 blocks. DETECTION: leg detection from the 2D SICK laser (on robot); foreground segmentation (detection) and HOG based person detection from the Flea2 RGB cameras (wall mounted). TRACKING: multi-person tracking.]

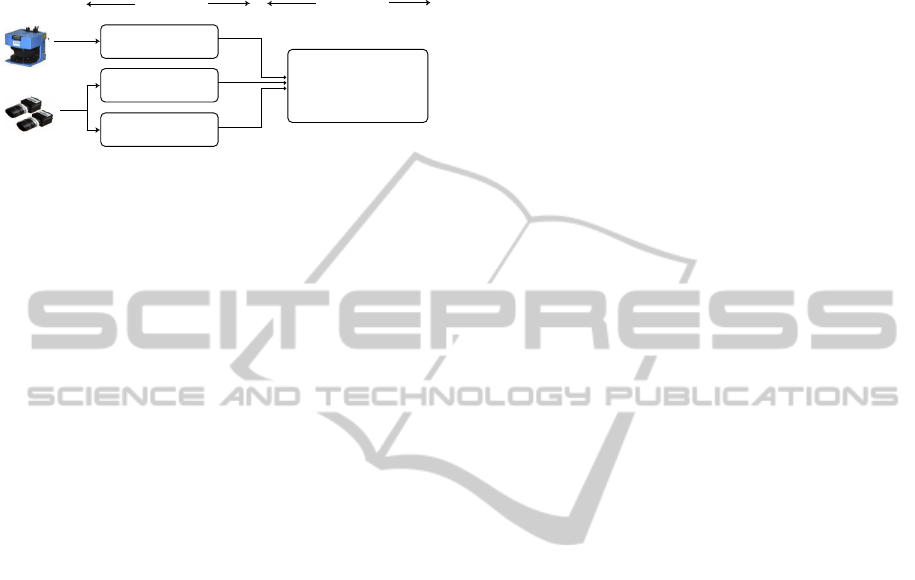

Figure 2: Multi-person detection and tracking system block

diagram.

Figure 2 shows the block diagram of the envisaged multi-person perceptual system. It has two main parts. The first part deals with automated multi-person detection. The second part is dedicated to multi-person tracking: it takes all detections as input and fuses them in a Particle Filtering framework. Each of these parts is discussed in detail in subsequent sections. It is worth mentioning here that the entire system is calibrated with respect to a global reference frame. Both the intrinsic and extrinsic parameters of the fixed cameras are known and, in addition, the mobile robot has a localization module that localizes its pose with respect to the reference frame using laser scan segments.

3 MULTI-PERSON DETECTION

The perceptual functionalities of the entire system are

based on various detections. The detection modules

are responsible for automatically detecting persons in

the area. Different person detection modalities are utilized depending on the data provided by each sensor.

Leg Detection with LRF: the LRF provides horizontal depth scans with a 180° FOV and 0.5° resolution at a height of 38 cm above the ground. Person detection, hence, follows by segmenting leg patterns within the scan. In our implementation, a set of geometric properties characteristic of human legs, outlined in (Xavier et al., 2005), is used.
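The leg segmentation step can be illustrated with a minimal sketch. It is not the rule set of (Xavier et al., 2005): it only splits a scan into clusters at range discontinuities and keeps clusters whose width is plausible for a leg. The function names, the jump threshold and the width bounds are assumptions chosen for illustration.

```python
import math

def segment_scan(ranges, fov_deg=180.0, jump=0.15):
    """Split a planar laser scan into clusters of consecutive points.

    ranges: distances in metres, evenly spaced over fov_deg.
    jump:   assumed threshold (m); a larger gap between two consecutive
            points starts a new cluster.
    """
    step = math.radians(fov_deg) / (len(ranges) - 1)
    points = [(r * math.cos(i * step), r * math.sin(i * step))
              for i, r in enumerate(ranges)]
    clusters, current = [], [points[0]]
    for prev, pt in zip(points, points[1:]):
        if math.dist(prev, pt) > jump:
            clusters.append(current)
            current = []
        current.append(pt)
    clusters.append(current)
    return clusters

def leg_candidates(clusters, min_width=0.05, max_width=0.25):
    """Return centroids of clusters whose end-to-end width fits a leg.

    The width bounds (m) are illustrative assumptions, not paper values.
    """
    legs = []
    for c in clusters:
        if len(c) < 3:
            continue  # too few points to be a reliable pattern
        width = math.dist(c[0], c[-1])
        if min_width <= width <= max_width:
            cx = sum(p[0] for p in c) / len(c)
            cy = sum(p[1] for p in c) / len(c)
            legs.append((cx, cy))
    return legs
```

With a 180° FOV and 0.5° resolution a scan holds 361 ranges; the returned centroids are in the robot frame and would still have to be transformed to the global reference frame using the robot pose.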

Person Detection from Wall Mounted Cameras:

to detect persons using the wall mounted cameras,

two different modes are used. First, a foreground

segmentation using a simple Σ-∆ background subtrac-

tion technique (Manzanera, 2007) is used. The mo-

bile robot is masked out of the foreground images us-

ing its position from its localization module. Second,

Histogram of Oriented Gradients (HOG) based per-

son detection (Dalal and Triggs, 2005) is used. This

method makes no assumption of any sort about the

scene or the state of the camera (mobile or static). It

detects persons in each frame using HOG features.

Both detections are projected to yield ground positions, $(x, y)_G$, with associated color appearance information in the form of HSV histograms (Pérez et al., 2002), of individuals in the area.
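For readers unfamiliar with Σ-∆ background subtraction, the per-pixel update can be sketched as follows. This is a simplified rendering of the basic scheme, operating on a flattened list of grey levels; the amplification factor `n` and the variance bounds are assumed values, and the function names are hypothetical.

```python
def sign(v):
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def sigma_delta_step(frame, mean, var, n=2, v_min=1, v_max=255):
    """One Sigma-Delta update over a flattened grey-level image.

    frame: current pixel values; mean, var: running background and
    variance estimates. n, v_min and v_max are assumed parameters.
    Returns (new_mean, new_var, foreground_mask).
    """
    new_mean, new_var, mask = [], [], []
    for i_t, m, v in zip(frame, mean, var):
        # Background estimate moves one grey level towards the frame.
        m += sign(i_t - m)
        # Absolute difference between frame and background.
        delta = abs(i_t - m)
        # Variance estimate moves one level towards n * delta,
        # only where the difference is non-zero.
        if delta != 0:
            v += sign(n * delta - v)
        v = max(v_min, min(v_max, v))
        new_mean.append(m)
        new_var.append(v)
        # A pixel is foreground when its difference reaches the variance.
        mask.append(delta >= v)
    return new_mean, new_var, mask
```

The resulting mask would then be cleaned and grouped into blobs before the robot region is masked out; those steps are not shown here.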

4 MULTI-PERSON TRACKING

Multi-person tracking, in our context, is concerned with the problem of tracking a variable number of persons, possibly interacting, in the ground plane. The literature on multi-target tracking contains different approaches, but when it comes to tracking multiple interacting targets of varying number, (Khan et al., 2005) has shown that Reversible Jump Markov Chain Monte Carlo - Particle Filters (RJMCMC-PFs) are more appealing when performance and computational requirements are taken into consideration. Inspired by this, we have used an RJMCMC-PF, adapted to our cooperative perceptual strategy, for multi-person tracking driven by the various heterogeneous detectors. The actual detectors are: the LRF based person detector, and the foreground segmentation (detection) and HOG based detections from each wall mounted camera. Implementation choices crucial to any RJMCMC-PF are briefly discussed below.

State Space: the state vector of a person $i$ in hypothesis $n$ at time $t$ is a vector encapsulating the id and $(x, y)$ position of an individual on the ground plane with respect to a defined coordinate base, $\mathbf{x}^n_{t,i} = \{Id_i, x^n_{t,i}, y^n_{t,i}\}$.

Proposal Moves: RJMCMC-PF accounts for the variability of the tracked targets by defining a variable dimension state space. Proposal moves propose a specific move on each iteration to guide this variable state space exploration. In our implementation, four sets of proposal moves, $m = \{\text{Add}, \text{Update}, \text{Remove}, \text{Swap}\}$, are used. The choice of the proposal privileged in each iteration is determined by $q_m$, the jump move distribution. These values are determined empirically and are set to $\{0.15, 0.8, 0.02, 0.03\}$ respectively. Equation 1 shows computation of the acceptance ratio, $\beta$, of a proposal $X^*$ at the $n^{th}$ iteration. It makes use of the jump move distribution, $q_m$; the proposal move distribution, $Q_m()$, associated with each move; the observation likelihood, $\pi(X^n_t)$; and the interaction model, $\Psi(X^n_t)$.


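$$\beta = \min\left(1, \frac{\pi(X^*)\, Q_{m^*}(X^{n-1}_t \mid X^*)\, q_{m^*}\, \Psi(X^*)}{\pi(X^{n-1}_t)\, Q_m(X^* \mid X^{n-1}_t)\, q_m\, \Psi(X^{n-1}_t)}\right) \qquad (1)$$

where $m \in \{\text{Add}, \text{Update}, \text{Remove}, \text{Swap}\}$ and $m^*$ denotes the reverse operation. Update and Swap moves are self reversible.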
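The way the jump move distribution and the acceptance ratio interact in one iteration can be sketched as below. The $q_m$ values are those reported in the text; the function names, the argument layout and the handling of a zero denominator are assumptions made for illustration only.

```python
import random

# Jump move distribution q_m, values as reported in the text.
Q_M = {"Add": 0.15, "Update": 0.8, "Remove": 0.02, "Swap": 0.03}
# Reverse move m* of each move; Update and Swap are self reversible.
REVERSE = {"Add": "Remove", "Remove": "Add",
           "Update": "Update", "Swap": "Swap"}

def sample_move(rng=random):
    """Draw one proposal move according to q_m."""
    moves = list(Q_M)
    return rng.choices(moves, weights=[Q_M[m] for m in moves], k=1)[0]

def acceptance_ratio(move, pi_new, pi_old, q_rev, q_fwd, psi_new, psi_old):
    """Acceptance ratio of equation 1.

    pi_new, pi_old   : observation likelihood of proposal / current state
    q_rev            : Q_{m*}(X^{n-1} | X*), reverse proposal density
    q_fwd            : Q_m(X* | X^{n-1}), forward proposal density
    psi_new, psi_old : interaction model of proposal / current state
    """
    num = pi_new * q_rev * Q_M[REVERSE[move]] * psi_new
    den = pi_old * q_fwd * Q_M[move] * psi_old
    if den == 0.0:
        # Assumed convention: always leave a zero-probability state.
        return 1.0 if num > 0.0 else 0.0
    return min(1.0, num / den)

def accept(beta, rng=random):
    """Accept the proposal with probability beta."""
    return rng.random() < beta
```

An iteration would call `sample_move()`, build the proposal $X^*$ for that move, evaluate the densities, and keep $X^*$ if `accept(beta)` returns true, otherwise keep $X^{n-1}_t$.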

Add: the add move randomly selects a detected person, $x_p$, from the pool of provided detections and appends its state vector to $X^{n-1}_t$, resulting in a proposal state $X^*$. The proposal density driving the Add proposal, $Q_{Add}(X^* \mid X^{n-1}_t)$, is then computed according to equation 2.

$$Q_{Add}(X^* \mid X^{n-1}_t) = \left(\sum_{d} k_d \sum_{j=1}^{N_d} \mathcal{N}(x_p; z^d_{t,j}, \Sigma)\right) \cdot \left(1 - k_m \sum_{j=1}^{N_t} \mathcal{N}(x_p; \hat{X}_{t-1,j}, \Sigma)\right) \qquad (2)$$

where $d$ represents the set of detectors, namely: from laser ($l$), fixed camera 1 ($c_1$), and fixed camera 2 ($c_2$); $d \in \{l, c_1, c_2\}$, $N_d$ is the total number of detections in each detector, $k_d$ is a weighting term for each detector such that $\sum_d k_d = 1$, $N_t$ is the number of targets in the MAP, and $k_m$ is a normalization constant. When a new person is added, its appearance is cross-checked with the appearance of persons that have been tracked for re-identification.

Remove: this move randomly selects a tracked person $x_p$ from the particle being considered, $X^{n-1}_t$, and removes it, proposing a new state $X^*$. Contrary to the add move, the proposal density used when computing the acceptance ratio, $Q_{Remove}(X^* \mid X^{n-1}_t)$ (equation 3), is given by the distribution map from the tracked persons masked by a map derived from the detected passers-by.

$$Q_{Remove}(X^* \mid X^{n-1}_t) = \left(1 - \sum_{d} k_d \sum_{j=1}^{N_d} \mathcal{N}(x_p; z^d_{t,j}, \Sigma)\right) \cdot \left(k_m \sum_{j=1}^{N_t} \mathcal{N}(x_p; \hat{X}_{t-1,j}, \Sigma)\right) \qquad (3)$$
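The complementary structure of equations 2 and 3 is easier to see in code. The sketch below assumes an isotropic covariance $\Sigma = \sigma^2 I$ and assumes that the weights and $k_m$ keep both Gaussian mixtures below 1 so that the $(1 - \cdot)$ factors stay non-negative; all names and numeric values are illustrative, not taken from the paper.

```python
import math

def gauss2d(x, mean, sigma):
    """Isotropic 2-D normal density N(x; mean, sigma^2 I)."""
    d2 = (x[0] - mean[0]) ** 2 + (x[1] - mean[1]) ** 2
    return math.exp(-0.5 * d2 / sigma ** 2) / (2.0 * math.pi * sigma ** 2)

def detection_map(x_p, detections, k_d, sigma):
    """sum_d k_d * sum_j N(x_p; z^d_{t,j}, Sigma)."""
    return sum(k_d[d] * sum(gauss2d(x_p, z, sigma) for z in zs)
               for d, zs in detections.items())

def track_map(x_p, tracked, k_m, sigma):
    """k_m * sum_j N(x_p; X_hat_{t-1,j}, Sigma)."""
    return k_m * sum(gauss2d(x_p, x, sigma) for x in tracked)

def q_add(x_p, detections, tracked, k_d, k_m, sigma):
    """Equation 2: high near detections, low near existing tracks."""
    return (detection_map(x_p, detections, k_d, sigma)
            * (1.0 - track_map(x_p, tracked, k_m, sigma)))

def q_remove(x_p, detections, tracked, k_d, k_m, sigma):
    """Equation 3: high near existing tracks, low near detections."""
    return ((1.0 - detection_map(x_p, detections, k_d, sigma))
            * track_map(x_p, tracked, k_m, sigma))
```

A position supported by detections but far from any tracked target scores high under `q_add`, whereas a tracked position with no nearby detection scores high under `q_remove`.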

Update: here, the state vector of a randomly chosen passer-by is perturbed by a zero mean normal distribution. The update proposal density, $Q_{Update}(X^* \mid X^{n-1}_t)$, is a normal distribution with the position of the newly updated target as mean.

Swap: the swap move handles the possibility of id switches amongst near or interacting targets. When this move is selected, the ids of the two nearest tracked persons are swapped and a new hypothesis $X^*$ is proposed. The acceptance ratio is computed similarly to the Update move.
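The Update and Swap moves are simple enough to sketch directly. In this illustration a particle is a list of `(id, x, y)` tuples, mirroring the state vector defined earlier; the perturbation standard deviation and the function names are assumptions.

```python
import math
import random
from itertools import combinations

def update_move(state, sigma=0.1, rng=random):
    """Perturb one randomly chosen target with zero-mean Gaussian noise.

    state: list of (id, x, y) tuples; sigma (m) is an assumed value.
    """
    proposal = list(state)
    i = rng.randrange(len(proposal))
    ident, x, y = proposal[i]
    proposal[i] = (ident,
                   x + rng.gauss(0.0, sigma),
                   y + rng.gauss(0.0, sigma))
    return proposal

def swap_move(state):
    """Swap the ids of the two nearest targets; positions stay in place."""
    if len(state) < 2:
        return list(state)
    a, b = min(combinations(range(len(state)), 2),
               key=lambda ij: math.dist(state[ij[0]][1:], state[ij[1]][1:]))
    proposal = list(state)
    proposal[a] = (state[b][0],) + tuple(state[a][1:])
    proposal[b] = (state[a][0],) + tuple(state[b][1:])
    return proposal
```

Both functions return a new list and leave the current particle untouched, so a rejected proposal can simply be discarded.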

Interaction model (Ψ(.)): is used to maintain

tracked person identity and penalize fitting of two

trackers on the same object during interaction. A

Markov Random Field (MRF), similar to (Khan et al.,

2005), is adopted to address this.

Observation Likelihood ($\pi(.)$): the observation likelihood, in equation 1, is derived from all detector outputs except the laser, for which blobs formed from the raw laser range data, denoted as $l_b$, are considered. If the specific proposal move is an Update or Swap move, a Bhattacharyya likelihood measure is also incorporated. Each detection is represented as a Gaussian, $\mathcal{N}(.)$, centered on the detection. Representing the measurement information at time $t$ as $z_t$, the observation likelihood of the $n^{th}$ particle $X^n_t$ at time $t$ is computed as shown in equation 4.

$$
\begin{aligned}
\pi(X^n_t) &= \pi_B(X^n_t) \cdot \pi_D(X^n_t) \\
\pi_B(X^n_t) &= \begin{cases} \prod_{i=1}^{M} \prod_{c=1}^{2} e^{-\lambda B_{i,c}^2}, & \text{if } m = \text{Update or Swap} \\ 1, & \text{otherwise} \end{cases} \\
\pi_D(X^n_t) &= \frac{1}{M} \sum_{i=1}^{M} \left( \sum_{d} k_d \cdot \pi(x_i \mid z^d_t) \right), \quad \sum_{d} k_d = 1 \\
\pi(x_i \mid z^d_t) &= \frac{1}{N_d} \sum_{j=1}^{N_d} \mathcal{N}(x_i; z^d_{t,j}, \Sigma)
\end{aligned}
\qquad (4)
$$

Above, $B_{i,c}$ represents the Bhattacharyya distance computed between the appearance histogram of a proposed target $i$ in particle $X^n_t$ and the target model in each camera $c$. $M$ represents the number of targets in the particle, and $N_d$ the total number of detections in each detection modality $d$, $d \in \{l_b, c_1, c_2\}$, in this case including the measures from the laser blobs. $k_d$ is a weight assigned to each detection modality taking their respective accuracy into consideration and $x_i$ represents the position of target $i$ in the ground plane.
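Equation 4 can be sketched in a few lines. The code below assumes an isotropic covariance $\Sigma = \sigma^2 I$, normalized histograms, and that a modality with no detections contributes nothing; the value of $\lambda$ and all function names are illustrative assumptions.

```python
import math

def gauss2d(x, mean, sigma):
    """Isotropic 2-D normal density N(x; mean, sigma^2 I)."""
    d2 = (x[0] - mean[0]) ** 2 + (x[1] - mean[1]) ** 2
    return math.exp(-0.5 * d2 / sigma ** 2) / (2.0 * math.pi * sigma ** 2)

def bhattacharyya(h1, h2):
    """Bhattacharyya distance between two normalized histograms."""
    coeff = sum(math.sqrt(p * q) for p, q in zip(h1, h2))
    return math.sqrt(max(0.0, 1.0 - coeff))

def pi_appearance(bhatt, move, lam=20.0):
    """pi_B: product of exp(-lam * B_{i,c}^2) for Update/Swap, else 1.

    bhatt: per-target lists of distances, one entry per camera.
    lam:   assumed value of lambda.
    """
    if move not in ("Update", "Swap"):
        return 1.0
    return math.exp(-lam * sum(b * b for row in bhatt for b in row))

def pi_detection(targets, detections, k_d, sigma):
    """pi_D: mean over targets of sum_d k_d * pi(x_i | z^d_t)."""
    total = 0.0
    for x_i in targets:
        for d, zs in detections.items():
            if zs:  # assumed: an empty modality contributes nothing
                total += (k_d[d]
                          * sum(gauss2d(x_i, z, sigma) for z in zs)
                          / len(zs))
    return total / len(targets)

def observation_likelihood(targets, detections, k_d, sigma, bhatt, move):
    """pi(X) = pi_B(X) * pi_D(X), following equation 4."""
    return (pi_appearance(bhatt, move)
            * pi_detection(targets, detections, k_d, sigma))
```

Here `detections` is keyed by modality, for example `{"lb": [...], "c1": [...], "c2": [...]}`, with ground plane positions as values.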

5 EVALUATIONS AND RESULTS

To evaluate the performance of our RJMCMC-PF multi-person tracker, three sequences acquired using Rackham and the wall mounted cameras are used. Each sequence contains a laser scan and video stream from both cameras. Sequences I and II contain 200 frames each and consist of two and three targets respectively. Sequence III is 186 frames long and contains four targets moving in the vicinity of the robot. The evaluation is carried out using the CLEAR MOT metrics (Bernardin and Stiefelhagen, 2008): Multiple Object Tracking Accuracy (MOTA) and Precision (MOTP). To clearly observe the advantages of each sensor modality, the evaluation is carried out by doing the tracking using (1) laser-only information, (2) vision-only data from the two wall mounted cameras, and finally (3) laser and the two cameras cooperatively. A hand labeled ground truth with (x, y) ground positions and unique id for each person is used in the evaluation. Each sequence is run eight times to account for the stochastic nature of the filter. Results are reported as mean value and associated standard deviation in table 1.

Table 1: Multi-person tracking evaluation results.

| Sequence | Laser-only MOTP µ | Laser-only MOTP σ | Laser-only MOTA µ | Laser-only MOTA σ | Fixed cameras only MOTP µ | Fixed cameras only MOTP σ | Fixed cameras only MOTA µ | Fixed cameras only MOTA σ | Cooperative MOTP µ | Cooperative MOTP σ | Cooperative MOTA µ | Cooperative MOTA σ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| I | 15.62 | 2.34 | 0.41 | 0.05 | 19.80 | 0.14 | 0.79 | 0.03 | 17.01 | 1.87 | 0.84 | 0.03 |
| II | 19.90 | 1.66 | 0.27 | 0.07 | 22.79 | 1.35 | 0.70 | 0.05 | 17.73 | 0.50 | 0.79 | 0.03 |
| III | 21.94 | 1.75 | 0.20 | 0.07 | 28.44 | 1.60 | 0.46 | 0.07 | 21.30 | 1.34 | 0.54 | 0.04 |
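As a reminder of how the two CLEAR MOT scores are formed, the following sketch aggregates per-frame matching results. It assumes that the matching between ground truth and tracker hypotheses has already been done; the dictionary keys and the function name are hypothetical.

```python
def clear_mot(frames):
    """Compute (MOTP, MOTA) from per-frame matching results.

    frames: iterable of dicts with the assumed keys
      'distances'       : ground-truth-to-hypothesis distance per match
      'misses'          : ground-truth persons with no hypothesis
      'false_positives' : hypotheses with no ground-truth person
      'mismatches'      : identity switches
      'num_gt'          : ground-truth persons present in the frame
    """
    frames = list(frames)
    total_dist = sum(sum(f["distances"]) for f in frames)
    matches = sum(len(f["distances"]) for f in frames)
    errors = sum(f["misses"] + f["false_positives"] + f["mismatches"]
                 for f in frames)
    num_gt = sum(f["num_gt"] for f in frames)
    # MOTP: mean position error over all matched pairs.
    motp = total_dist / matches if matches else float("nan")
    # MOTA: one minus the ratio of all errors to ground-truth objects.
    mota = 1.0 - errors / num_gt if num_gt else float("nan")
    return motp, mota
```

Under this definition a lower MOTP means more precise localization, while a MOTA closer to 1 means fewer misses, false positives and identity mismatches.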

The results presented in table 1 clearly attest the

improvements in perception brought by the cooper-

ative fusion of laser and wall mounted camera per-

cept. The cooperative system consisting of laser and

two wall mounted cameras exhibit an MOTA of 0.841

when tracking two targets, 0.793 for three targets.

These results clearly indicate the enhanced perfor-

mance of this system. Sample tracking sequences

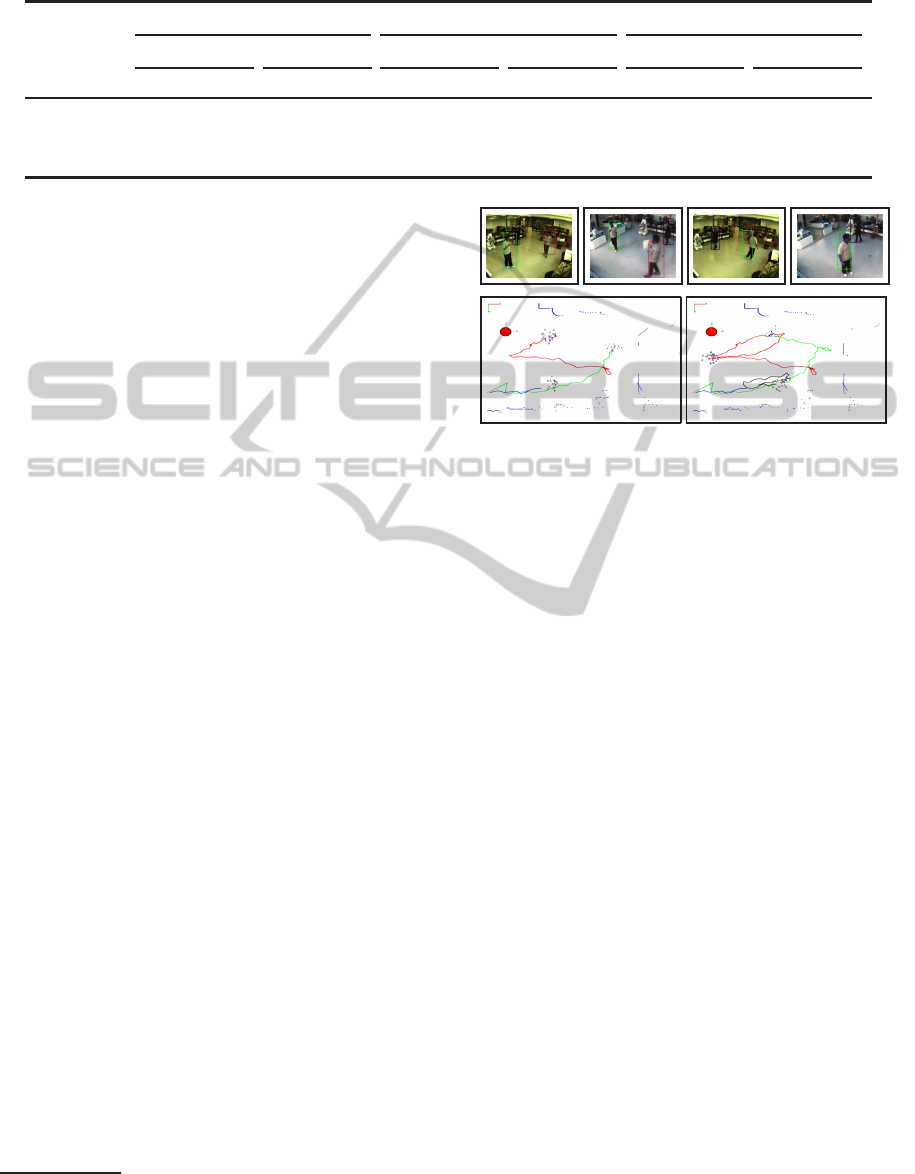

from sequence II are shown in figure 3

1

. Evidently,

the LRF-only has low accuracy owing to the mistakes

made with leg like structures in the environment, sen-

sitivity to occlusion, and lack of discriminating infor-

mation amongst tracked passers-by. The results ob-

tained using the wall mounted cameras show major

improvements though their position tracking preci-

sion is relatively lower compared to those which in-

clude laser measurement. The final tracker runs at

1f ps. Most of the computation time, ≈ 700ms, is

spent on HOG based person detection.

6 CONCLUSIONS

The work presented herewith makes its main contri-

bution in the vein of multi-person tracking by propos-

ing a cooperative scheme between overhead cam-

eras and sensors embedded on a mobile robot in or-

der to track people in crowds. Our Bayesian data

fusion framework with the given sensor configura-

tion enhances typical surveillance systems with only

fixed cameras and complete embedded systems with-

1

For complete run, visit the URL homepages.laas.fr/

aamekonn/videos/

(a) (b)

Figure 3: Multi-person tracking illustrations taken from se-

quence II at a) frame 60, and b) frame 94.The top row im-

ages show camera streams and the bottom shows the ground

floor with tracked persons’ trajectories superimposed

1

.

out wide FOV and straightforward (re)-initialization

ability. The presented results are a clear indication of

the framework’s notable tracking performance.

REFERENCES

Bernardin, K. and Stiefelhagen, R. (2008). Evaluating multiple object tracking performance: the CLEAR MOT metrics. EURASIP Journal on Image and Video Processing, 2008:1:1–1:10.

Chakravarty, P. and Jarvis, R. (2009). External cameras and a mobile robot: A collaborative surveillance system. In Australasian Conf. on Robotics and Automation (ACRA’09), Sydney, Australia.

Chia, C., Chan, W., and Chien, S. (2009). Cooperative surveillance system with fixed camera object localization and mobile robot target tracking. In Advances in Image and Video Technology, volume 5414, pages 886–897. Springer Berlin / Heidelberg.

Dalal, N. and Triggs, B. (2005). Histograms of oriented gradients for human detection. In Int. Conf. on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA.

Hu, W., Tan, T., Wang, L., and Maybank, S. (2004). A survey on visual surveillance of object motion and behaviors. IEEE Trans. Syst., Man, Cybern., Syst., Part C: Applications and Reviews, 34(3):334–352.

Khan, Z., Balch, T., and Dellaert, F. (2005). MCMC-based particle filtering for tracking a variable number of interacting targets. IEEE Trans. Pattern Anal. Mach. Intell., 27(11):1805–1819.

Manzanera, A. (2007). Sigma-delta background subtraction and the Zipf law. In Iberoamerican Congress on Pattern Recognition (CIARP’07), Valparaiso, Chile.

Pérez, P., Hue, C., Vermaak, J., and Gangnet, M. (2002). Color-based probabilistic tracking. In Europ. Conf. on Computer Vision (ECCV’02), Copenhagen, Denmark.

Xavier, J., Pacheco, M., Castro, D., and Ruano, A. (April, 2005). Fast line, arc/circle and leg detection from laser scan data in a player driver. In Int. Conf. on Robotics and Automation (ICRA’05), Barcelona, Spain.
