Visual-based Natural Landmark Tracking Method to Support UAV Navigation over Rain Forest Areas

Felipe A. Pinagé¹, José Reginaldo H. Cavalho¹ and José P. Queiroz Neto²

¹ Institute of Computing, Federal University of Amazonas, Manaus, Brazil

² Campus Manaus Industrial District, Federal Institute of Amazonas, Manaus, Brazil

Keywords: Natural Landmark Tracking, Feature Suppression, Wavelet Transform, Unmanned Aerial Vehicle.

Abstract: Field applications of unmanned aerial vehicles (UAVs) have increased in the last decade. An example of a difficult task is a long-endurance mission over rain forest, due to the uniform pattern of the ground. In this scenario, an embedded vision system plays a critical role. This paper presents a SIFT adaptation for a vision system able to track landmarks in forest areas, using the wavelet transform to suppress nonrelevant features, in order to support UAS navigation. Preliminary results demonstrate that this method can correctly track a sequence of natural landmarks in a time feasible for online applications.

1 INTRODUCTION

The use of Unmanned Aerial Systems (UASs) in real scenarios has increased in recent decades due to their advantages over manned aerial systems, especially in avoiding risks to human operators, who are not in the cockpit but comfortably seated miles away from the theatre of operations. UAS applications span from military to civil domains and cover dozens of different types of missions, including border security, combat, scientific research, and environmental monitoring, among many others.

In (Bueno et al., 2001), one can find results of a system prototype based on an airship designed for environmental monitoring, more specifically for the Amazon rain forest area. However, the difficulty of operating autonomously in all-weather conditions while ensuring a safe flight demanded better navigation systems than the ones available at that time for scientific research applications.

On the other hand, the lack of effective civil applications of UAS to help protect the Amazon rain forest shows that the challenges are still beyond what current solutions can provide. For instance, a relevant problem to be faced is navigation over the Amazon, due to the uniform view of the treetops from above, even with good weather and long-range visibility. The complexity of the problem increases dramatically in the presence of fog or cloudy weather (not to mention rain). In such a scenario, natural landmarks on the ground play an important role in a navigation support system, providing the vehicle with references to be followed. Moreover, extending off-the-shelf vision systems to handle natural landmarks is basically a matter of software development.

Natural landmarks may be clearings, river branches, or any element that contrasts with the uniform pattern of the canopy (for forests, the canopy is the upper layer formed by the treetops). The problem of tracking natural landmarks in forest areas has started to be addressed (Pinagé et al., 2012), but it remains a challenge.

An embedded vision system increases the autonomy of a UAV: it helps to handle unexpected critical situations, e.g., loss of the Global Positioning System (GPS) signal, and provides the ability to interact with the environment using natural landmarks (Cesetti et al., 2009). The aerial navigation system can decide the next target and change it as new and updated images of the environment become available.

This paper presents a methodology, defined solely in software, to track natural landmarks in real time, among a set of predefined ones, in order to extend UAS navigation capability in the context of the Amazon rain forest.

The paper is organized as follows. Section 2 details the method specification and describes the image processing techniques and how they are applied. Section 3 presents the preliminary results. Finally, Section 4 concludes the paper.


Pinagé F., Cavalho J. and Neto J. Visual-based Natural Landmark Tracking Method to Support UAV Navigation over Rain Forest Areas.
DOI: 10.5220/0004304304160419
In Proceedings of the International Conference on Computer Vision Theory and Applications (VISAPP-2013), pages 416-419
ISBN: 978-989-8565-48-8
Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

2 METHOD DESCRIPTION

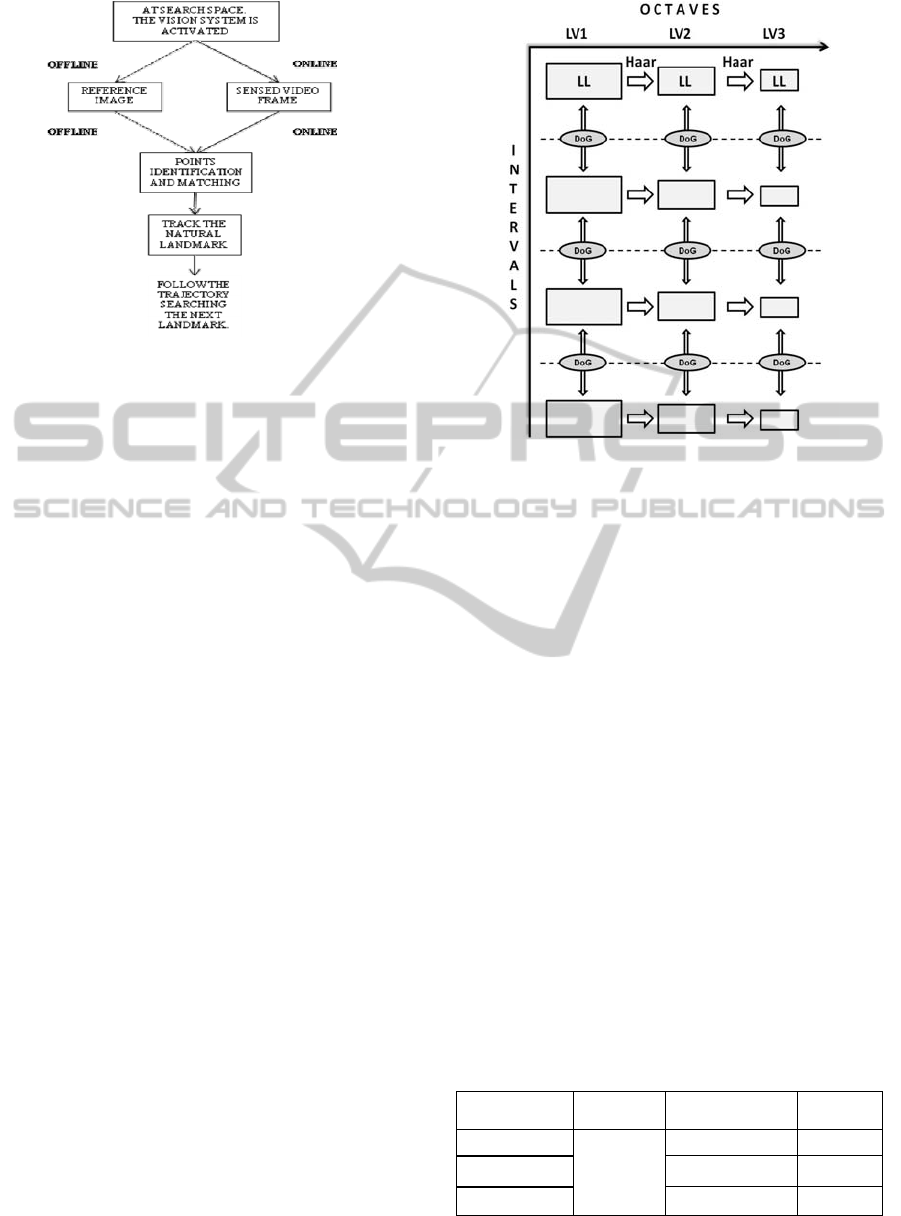

The block diagram of Figure 1 shows how the steps are organized. The UAV reaches the search space (the neighborhood area of the reference image) using GPS and then activates the vision system.

Initially, the canopy pattern is eliminated via multiresolution analysis of the images based on the wavelet transform. At a larger scale, it is possible to extract only the salient features and suppress the nonrelevant ones (Fonseca et al., 2008). This is necessary; otherwise, feature extraction finds hundreds of useless features related to leaves, branches, and other small image elements that make up the typical canopy texture.

We use multiresolution analysis to adapt SIFT (Scale-Invariant Feature Transform) so that it eliminates the canopy pattern.

In summary, the current video frames are processed online, and their keypoints are compared with those of the reference image. This way, the natural landmark is tracked. As soon as the landmark is found, the vision system locks onto it while preparing to search for the next landmark.
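The online search-and-lock loop described above can be sketched as a small state machine. This is a minimal C++ sketch, not the authors' code: the class name `LandmarkTracker`, the use of a raw match count as the lock criterion, and the threshold `minMatches` are hypothetical assumptions, since the paper does not state how a landmark is declared found.

```cpp
#include <cstddef>

// Hypothetical tracking state machine: the vision system searches for the
// current landmark and "locks" it once a frame matches the reference image
// well enough, then moves on to the next landmark in the predefined list.
struct LandmarkTracker {
    std::size_t minMatches;    // hypothetical lock threshold (not from the paper)
    std::size_t current = 0;   // index of the landmark being searched for
    std::size_t numLandmarks;  // size of the predefined landmark list

    LandmarkTracker(std::size_t threshold, std::size_t landmarks)
        : minMatches(threshold), numLandmarks(landmarks) {}

    bool finished() const { return current >= numLandmarks; }

    // Feed the number of keypoint matches between the latest video frame and
    // the current reference image; returns true when the landmark is locked.
    bool update(std::size_t matchesInFrame) {
        if (finished()) return false;
        if (matchesInFrame >= minMatches) {
            ++current;  // lock: prepare to search for the next landmark
            return true;
        }
        return false;   // keep searching in the next frame
    }
};
```

For example, with `minMatches = 10`, a frame yielding 3 matches keeps the search going, while a frame yielding 12 matches locks the current landmark and advances to the next one. In a full system the match count would come from the keypoint detection and matching step.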

2.1 Nonrelevant Features Suppression

The wavelet transform is a useful and powerful tool for local image analysis and processing. As digital images are discrete data, we use the discrete wavelet transform (DWT). At the coarser decomposition levels, only really large and robust features remain well represented.

According to (Meddeber et al., 2009), the same image is represented at a different resolution and scale in each decomposition level. Thus, the nonrelevant features disappear at low resolutions (large scales), and the largest and really important features can be identified more easily.

Each decomposition level creates four images (sub-bands): LL, LH, HL and HH. The decomposition can be repeated recursively on the LL sub-band to reach further levels. The LL sub-band contains the most information compared to the other sub-bands (Malviya and Bhirud, 2009), and it is considered an approximation of the original image with the small features suppressed.
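One decomposition level, and its recursion on LL, can be sketched for the Haar wavelet, the wavelet used in this work. This is a minimal, dependency-free C++ sketch under stated assumptions: the averaging normalization (dividing by 4, so LL is the mean of each 2x2 block) is one choice among several (an orthonormal Haar divides by 2), the assignment of the names LH and HL to the two detail bands varies between texts, and the types `Image` and `HaarSubbands` are hypothetical.

```cpp
#include <cstddef>
#include <initializer_list>
#include <vector>

// Grayscale image stored row-major.
struct Image {
    std::size_t width = 0, height = 0;
    std::vector<double> px;
    double at(std::size_t x, std::size_t y) const { return px[y * width + x]; }
};

struct HaarSubbands { Image LL, LH, HL, HH; };

// One level of a 2D Haar DWT with averaging normalization (each coefficient is
// divided by 4, so LL is the mean of each 2x2 block). Odd trailing rows or
// columns are dropped.
HaarSubbands haarLevel(const Image& in) {
    const std::size_t w = in.width / 2, h = in.height / 2;
    HaarSubbands out;
    for (Image* s : {&out.LL, &out.LH, &out.HL, &out.HH}) {
        s->width = w;
        s->height = h;
        s->px.assign(w * h, 0.0);
    }
    for (std::size_t y = 0; y < h; ++y) {
        for (std::size_t x = 0; x < w; ++x) {
            const double a = in.at(2 * x, 2 * y),     b = in.at(2 * x + 1, 2 * y);
            const double c = in.at(2 * x, 2 * y + 1), d = in.at(2 * x + 1, 2 * y + 1);
            const std::size_t i = y * w + x;
            out.LL.px[i] = (a + b + c + d) / 4.0;  // approximation (block mean)
            out.LH.px[i] = (a + b - c - d) / 4.0;  // top-bottom difference
            out.HL.px[i] = (a - b + c - d) / 4.0;  // left-right difference
            out.HH.px[i] = (a - b - c + d) / 4.0;  // diagonal difference
        }
    }
    return out;
}

// Recursive decomposition: each further level is computed on the LL band only.
Image haarApproximation(Image img, int levels) {
    for (int k = 0; k < levels && img.width >= 2 && img.height >= 2; ++k)
        img = haarLevel(img).LL;
    return img;
}
```

Calling `haarApproximation(frame, 2)` on a 640x480 frame would return a 160x120 LL approximation, in which texture only a few pixels wide, such as individual leaves, is largely averaged out.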

2.2 Keypoints Identification and Point Matching

The main process is the identification of keypoints in the acquired image and their matching with the keypoint set of the reference image. In this paper, the keypoints can lie on rivers, roads, and their details (corners, turns, margins, etc.) or on any other element that does not correspond only to trees.

There are automatic methods to identify keypoints, developed from algorithms that use similarity measurements. The same method used to identify the keypoints of the reference image (done offline) has to be applied to the sensed video frame (during the flight). SIFT, developed by (Lowe, 2004), was used in this work to generate keypoint descriptors that are invariant to scale and rotation and partially invariant to changes in illumination.
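Matching the descriptors of the sensed frame against those of the reference image can be illustrated with brute-force nearest-neighbour search. The paper does not specify its matching criterion; this C++ sketch assumes Euclidean distance combined with the distance-ratio test proposed by Lowe (2004), whose suggested threshold of 0.8 is used as the default. `Descriptor`, `Match` and `ratioTestMatch` are hypothetical names, and all descriptors are assumed to have the same length (128 values for SIFT).

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

using Descriptor = std::vector<float>;  // e.g. 128 values for SIFT

struct Match { std::size_t query, train; float distance; };

static float squaredL2(const Descriptor& a, const Descriptor& b) {
    float s = 0.f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        s += d * d;
    }
    return s;
}

// Brute-force nearest-neighbour matching with Lowe's distance-ratio test:
// a match is kept only if the closest reference descriptor is clearly closer
// than the second closest (d1 < ratio * d2).
std::vector<Match> ratioTestMatch(const std::vector<Descriptor>& frame,
                                  const std::vector<Descriptor>& reference,
                                  float ratio = 0.8f) {
    std::vector<Match> matches;
    if (reference.size() < 2) return matches;  // the test needs two neighbours
    for (std::size_t q = 0; q < frame.size(); ++q) {
        float best = std::numeric_limits<float>::max(), second = best;
        std::size_t bestIdx = 0;
        for (std::size_t t = 0; t < reference.size(); ++t) {
            const float d = squaredL2(frame[q], reference[t]);
            if (d < best) { second = best; best = d; bestIdx = t; }
            else if (d < second) { second = d; }
        }
        // Squared distances are compared against the squared ratio.
        if (best < ratio * ratio * second)
            matches.push_back({q, bestIdx, std::sqrt(best)});
    }
    return matches;
}
```

Comparing squared distances against the squared ratio avoids a square root per candidate; the square root is taken only for accepted matches. Ambiguous keypoints, such as those on repetitive canopy texture, tend to have two similarly close neighbours and are rejected by this test.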

There are also other methods for automatic point identification and matching, such as SURF (Speeded Up Robust Features) and ASIFT (Affine-SIFT). Both build on SIFT. SURF (Bay et al., 2006) uses integral images for image convolution and thus computes and compares features much faster. ASIFT (Morel and Yu, 2009) uses the same SIFT techniques to be invariant to scale and rotation, combined with the simulation of viewpoint changes, which provides many more correct matches.
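The speed-up that SURF obtains from integral images can be illustrated with a summed-area table: after one pass over the image, the sum over any axis-aligned rectangle costs four lookups, so box filters of any size have the same cost. This C++ sketch shows only that data structure, not SURF itself; the class name `IntegralImage` is hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Summed-area table with one extra row and column of zeros:
// S(x, y) = sum of img(i, j) for all i < x and j < y.
class IntegralImage {
public:
    IntegralImage(const std::vector<double>& img, std::size_t width, std::size_t height)
        : w_(width + 1), s_((width + 1) * (height + 1), 0.0) {
        for (std::size_t y = 0; y < height; ++y)
            for (std::size_t x = 0; x < width; ++x)
                s_[(y + 1) * w_ + (x + 1)] = img[y * width + x]
                    + s_[y * w_ + (x + 1)]   // sum above
                    + s_[(y + 1) * w_ + x]   // sum to the left
                    - s_[y * w_ + x];        // overlap counted twice
    }

    // Sum over the rectangle [x0, x1) x [y0, y1) with four lookups,
    // independent of the rectangle size.
    double boxSum(std::size_t x0, std::size_t y0, std::size_t x1, std::size_t y1) const {
        return s_[y1 * w_ + x1] - s_[y0 * w_ + x1] - s_[y1 * w_ + x0] + s_[y0 * w_ + x0];
    }

private:
    std::size_t w_;
    std::vector<double> s_;
};
```

For a 3x3 image holding the values 1 to 9 row by row, `boxSum(1, 1, 3, 3)` returns 5 + 6 + 8 + 9 = 28.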

However, as the experimental results in the next section show, SIFT offers the best trade-off between matching accuracy and runtime for our application scenario.

2.3 SIFT Adaptation

Given the advantages of the wavelet transform mentioned before, the SIFT algorithm was adapted to detect only the features relevant to this problem, with the additional aim of reducing its runtime. In (Kim et al., 2007), the authors propose a SIFT adaptation that uses the Difference of Wavelets (DoW) for the detection of local extrema. When applied to forest images, this method extracts many features, including the canopy pattern, as can be verified in the next section.

Inspired by the DoW method, we use the LL sub-bands of the wavelet decomposition as the first image of each octave in the scale space generated by SIFT (Figure 2). This way, the wavelet transform is responsible for suppressing nonrelevant features at each subsequent octave.
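The adapted scale space can be sketched as follows. This is a minimal C++ sketch based on a reading of the description above, not the authors' code. Assumptions: octave 0 starts from the input image and octave o starts from the LL sub-band after o Haar levels; each octave holds s + 3 images, the remaining ones obtained by blurring the octave's first image with sigma0 * k^i, where k = 2^(1/s); s = 3 and sigma0 = 1.6 are Lowe's (2004) defaults; the blur is applied directly to the first image rather than incrementally. The function names are hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Row-major grayscale image.
struct Image {
    int w = 0, h = 0;
    std::vector<double> px;
    double& at(int x, int y) { return px[y * w + x]; }
    double at(int x, int y) const { return px[y * w + x]; }
};

// LL sub-band of one Haar level (2x2 block averages; odd edges dropped).
Image haarLL(const Image& in) {
    Image out;
    out.w = in.w / 2;
    out.h = in.h / 2;
    out.px.assign(out.w * out.h, 0.0);
    for (int y = 0; y < out.h; ++y)
        for (int x = 0; x < out.w; ++x)
            out.at(x, y) = (in.at(2 * x, 2 * y) + in.at(2 * x + 1, 2 * y) +
                            in.at(2 * x, 2 * y + 1) + in.at(2 * x + 1, 2 * y + 1)) / 4.0;
    return out;
}

// Separable Gaussian blur with clamped (replicated) borders.
Image gaussianBlur(const Image& in, double sigma) {
    const int r = static_cast<int>(std::ceil(3.0 * sigma));
    std::vector<double> k(2 * r + 1);
    double sum = 0.0;
    for (int i = -r; i <= r; ++i) {
        k[i + r] = std::exp(-(i * i) / (2.0 * sigma * sigma));
        sum += k[i + r];
    }
    for (double& v : k) v /= sum;  // normalize the kernel to unit sum
    auto clampi = [](int v, int lo, int hi) { return v < lo ? lo : (v > hi ? hi : v); };
    Image tmp = in, out = in;
    for (int y = 0; y < in.h; ++y)  // horizontal pass
        for (int x = 0; x < in.w; ++x) {
            double acc = 0.0;
            for (int i = -r; i <= r; ++i) acc += k[i + r] * in.at(clampi(x + i, 0, in.w - 1), y);
            tmp.at(x, y) = acc;
        }
    for (int y = 0; y < in.h; ++y)  // vertical pass
        for (int x = 0; x < in.w; ++x) {
            double acc = 0.0;
            for (int i = -r; i <= r; ++i) acc += k[i + r] * tmp.at(x, clampi(y + i, 0, in.h - 1));
            out.at(x, y) = acc;
        }
    return out;
}

// Scale space whose octave bases are Haar LL sub-bands instead of the
// Gaussian-blurred, subsampled images used by the original SIFT.
std::vector<std::vector<Image>> buildWaveletScaleSpace(const Image& input, int octaves,
                                                       int s = 3, double sigma0 = 1.6) {
    std::vector<std::vector<Image>> pyramid;
    const double k = std::pow(2.0, 1.0 / s);
    Image base = input;
    for (int o = 0; o < octaves && base.w >= 2 && base.h >= 2; ++o) {
        if (o > 0) base = haarLL(base);  // wavelet replaces SIFT's subsampling
        std::vector<Image> octave{base};
        for (int i = 1; i < s + 3; ++i)  // s + 3 images per octave, as in SIFT
            octave.push_back(gaussianBlur(base, sigma0 * std::pow(k, i)));
        pyramid.push_back(std::move(octave));
    }
    return pyramid;
}
```

Difference-of-Gaussian images and extrema detection would then proceed on each octave as in standard SIFT; in this sketch only the way each octave's first image is produced differs.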

3 PRELIMINARY RESULTS

The software application was implemented in C++ with the OpenCV library. The images and videos used were obtained from flights over forest areas near the cities of Manaus and Belo Horizonte (Brazil).


Figure 1: Natural landmark tracking scheme.

The results were obtained from the matching process between the reference image containing the natural landmark and the sensed frame simulating the UAV's online video frame; these input data were acquired by the same sensor at different times, angles and altitudes.

The SIFT, SURF and ASIFT algorithms were applied to detect the keypoints and then match the images. The three algorithms were compared to validate their feasibility for this specific application.

Table 1 presents the average runtime and the accuracy rate of each algorithm. ASIFT yields a large number of correct matches; however, its computational cost is unfeasible for this real-time operation. SURF, on the other hand, although fast, produces many wrong matches.

SIFT presented the best trade-off between matching quality and computational effort, with enough correct matches to track the natural landmark and an acceptable runtime for this real-time operation.

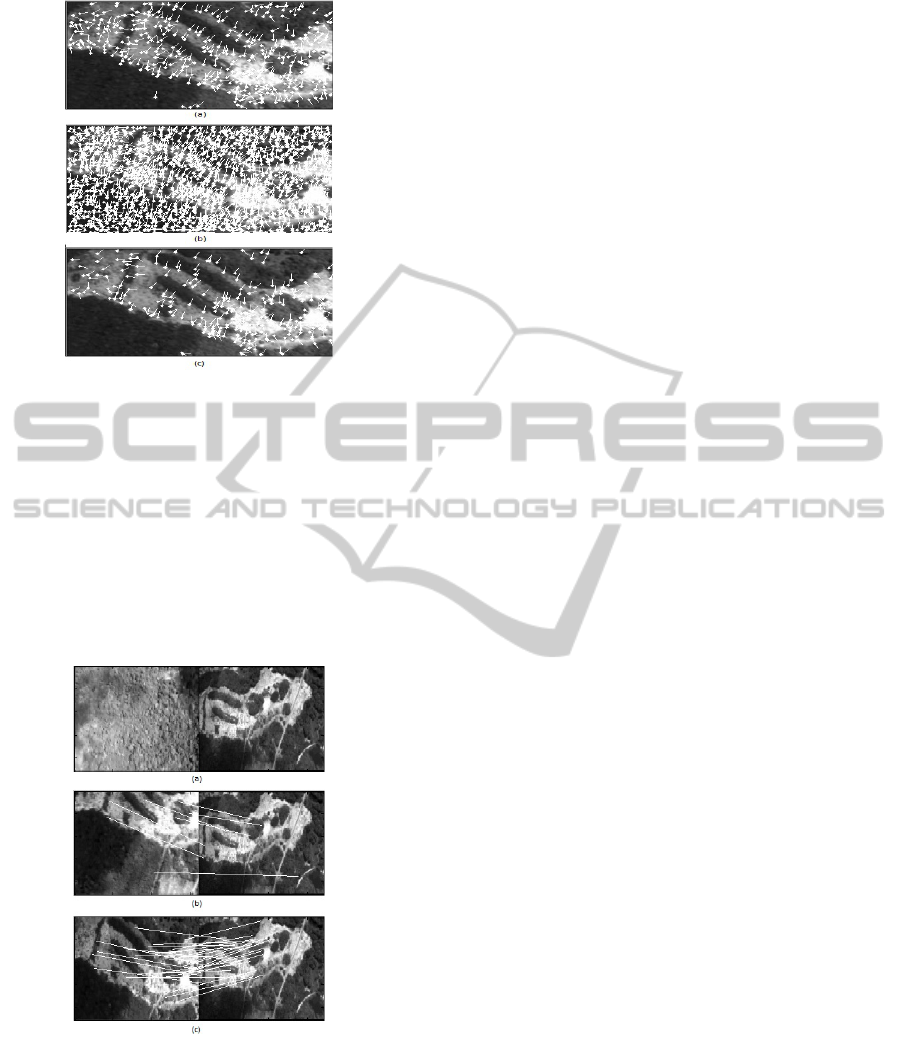

SIFT was adapted to improve keypoint detection by suppressing the nonrelevant features (canopy). The DoW method detects many keypoints, including on the canopy (Figure 3(b)), which is the opposite of the objective of this work. Therefore, it is not feasible for this application.

Our SIFT adaptation only replaces the first image of each octave with the LL sub-band obtained by Haar wavelet decomposition, keeping the Gaussians for the intervals, as shown in Figure 2. The keypoints detected by our SIFT adaptation can be seen in Figure 3(c). Its computational cost is very close to that of the original SIFT; what distinguishes our adaptation is its ability to suppress the canopy pattern.

Figure 2: Our SIFT adaptation.

This paper shows one tracking test using our SIFT adaptation, in Figure 4, where the images on the left are the sensed frames and the images on the right are the reference images.

Furthermore, the tracked frame will also be stored to be used as a natural landmark, supporting future missions over the same area under similar weather conditions.

4 CONCLUSIONS

All implementations were based solely on software. The methodology combines two existing image processing techniques, chosen for their relevance to this application.

We conclude that SIFT is the best of the three tested algorithms, because it does not exceed the computational cost acceptable for real-time systems and does not produce many wrong matches.

The runtimes measured in the tests are very promising with respect to the feasibility of this method for an embedded vision system.

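Table 1: Average runtime and accuracy rate.

| Algorithm | Steps | Average Runtime (s) | Accuracy Rate (%) |
|---|---|---|---|
| SIFT | Point detection and matching | 0.9273 | 97 |
| SURF | Point detection and matching | 0.0319 | 75 |
| ASIFT | Point detection and matching | 9.66 | 99 |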
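Assuming the reported runtimes refer to processing one sensed frame against the reference image (the paper does not state this explicitly), Table 1 implies the following relative costs and processing rate for SIFT:

```latex
\frac{t_{\mathrm{ASIFT}}}{t_{\mathrm{SIFT}}} = \frac{9.66}{0.9273} \approx 10.4,
\qquad
\frac{t_{\mathrm{SIFT}}}{t_{\mathrm{SURF}}} = \frac{0.9273}{0.0319} \approx 29.1,
\qquad
\frac{1}{t_{\mathrm{SIFT}}} = \frac{1}{0.9273\ \mathrm{s}} \approx 1.08\ \mathrm{frames/s}
```

That is, ASIFT is roughly an order of magnitude slower than SIFT for a 2-point gain in accuracy rate, while SURF is about 29 times faster at the price of a 22-point drop (75% versus 97%).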


Figure 3: Keypoints detection: (a) original SIFT; (b) SIFT by DoW; (c) our SIFT adaptation.

Our SIFT adaptation is useful for this application because it eliminates the canopy pattern through wavelet decomposition, makes the relevant features easier to detect, and thus supports correct natural landmark tracking.

Next steps include enlarging the image database and handling clouds, which can occlude natural landmarks. Finally, the system will be embedded for validation during experimental flights.

Figure 4: Tracking: (a) no matches were found, since the frame contains only clouds and the tree pattern; (b) poor representation of the natural landmark; (d) final result and best representation of the natural landmark.

ACKNOWLEDGEMENTS

This work is partially sponsored by the Financing Agency for Studies and Projects (Finep) under grant number 01-10 0611-00, the Foundation for Research Support of the State of Amazonas (FAPEAM), process 01135/2011, and the National Institute of Science and Technology on Embedded Critical Systems (INCT-SEC, processes 573963/2008-8 and 08/57870-9).

REFERENCES

Bueno, S. S., Bergerman, M., Ramos Jr, J. G., Paiva, E. C., Carvalho, J. R. H., Elfes, A., Maeta, S. M., Mirisola, L. G. B., Faria, B. G. and Pereira, C. S. (2001). “Robótica Aérea em Preservação Ambiental: O Uso de Dirigíveis Autônomos”. In: 7a. Reunião Especial da SBPC, Manaus-AM.

Pinagé, F. A., Carvalho, J. R. H. and Queiroz-Neto, J. P. (2012). “Natural landmark tracking method to support UAV navigation over rain forest areas”. In: Workshop of Embedded Systems of SBESC, Natal, Brazil.

Cesetti, A., Frontoni, E., Mancini, A., Zingaretti, P. and Longhi, S. (2009). “Vision-based Autonomous Navigation and Landing of an Unmanned Aerial Vehicle using Natural Landmarks”. In: 17th Mediterranean Conference on Control & Automation, Thessaloniki, Greece.

Fonseca, L. M., Costa, M. H. M., Korting, T. S., Castejon, E. and Silva, F. C. (2008). “Multitemporal Image Registration Based on Multiresolution Decomposition”. Revista Brasileira de Cartografia, No. 60/03 (ISSN 1808-0936).

Meddeber, L., Berrached, N. E. and Taleb-Ahmed, A. (2009). “The Practice of an Automatic Registration System based on Contour Features and Wavelet Transform for Remote Sensing Images”. In: IEEE Second International Conference on Computer and Electrical Engineering.

Malviya, A. and Bhirud, S. G. (2009). “Wavelet based Image Registration Using Mutual Information”. In: International Conference on Emerging Trends in Electronic and Photonic Devices & Systems, Varanasi, India.

Lowe, D. (2004). “Distinctive Image Features from Scale-Invariant Keypoints”. International Journal of Computer Vision, vol. 60, no. 2, pp. 91-110.

Bay, H., Tuytelaars, T. and Van Gool, L. (2006). “SURF: Speeded Up Robust Features”. In: Proceedings of the Ninth European Conference on Computer Vision.

Morel, J. M. and Yu, G. (2009). “ASIFT: A New Framework for Fully Affine Invariant Image Comparison”. SIAM Journal on Imaging Sciences, vol. 2, no. 2.

Kim, Y., Lee, J., Cho, W., Park, C., Park, C. and Paik, J. (2007). “Vision Systems: Segmentation and Pattern Recognition”, ISBN 987-3-902613-05-9, edited by Goro Obinata and Ashish Dutta, pp. 546, I-Tech, Vienna, Austria.
