Peer-to-Peer MapReduce Platform

Darhan Akhmed-Zaki¹, Grzegorz Dobrowolski² and Bolatzhan Kumalakov¹

¹ Mechanics-Mathematics Faculty, al-Farabi Kazakh National University, Al-Farabi ave. 71, Almaty, Kazakhstan

² Department of Computer Science, AGH University of Science and Technology, Cracow, Poland

Keywords:

Peer-to-Peer Computing, MapReduce Framework, Multi-agent Systems.

Abstract:

Publicly available Peer-to-Peer MapReduce (P2P-MapReduce) frameworks suffer from a lack of practical implementations, which significantly reduces their role in system engineering. The presented research supplies data on a working implementation of a P2P-MapReduce platform. Its novelties include a workload distribution function that integrates mobile devices as execution nodes, and novel computing node and multi-agent system architectures.

1 INTRODUCTION

This research is motivated by a chapter on the Peer-to-Peer MapReduce framework presented in (Antonopoulos and Gillam, 2010), where the authors use simulation to justify its benefits.

We conduct a practical experiment and pursue two objectives. First, theoretical studies presented in (Marozzo et al., 2011) and (Antonopoulos and Gillam, 2010) emphasize that volunteer computing promises a considerable increase in system dependability due to self-organization phenomena. That is, a P2P-MapReduce platform is more likely to finish execution when an unexpected node failure occurs or nodes leave the infrastructure unpredictably at run time. We support this argument by conducting a computational experiment and presenting the resulting practical data.

Second, we analyse workload distribution within a complex computing infrastructure. System complexity in this case comes from viewing the computing architecture as a collection of autonomous devices, which encapsulate control and goal-achieving functions (Zambonelli et al., 2003). As a result, devices are viewed not as means of achieving targets, but as active components that solve user-defined problems by self-organizing and cooperating.

We bring additional complexity by integrating mobile devices as processing units into the computing infrastructure. As a result, an agent accepts mapper and reducer roles according to its own self-evaluation. Thus, device capabilities are analyzed and decisions are made dynamically at run time in a decentralized fashion.

The remainder of the article is organized as follows. Section 2 describes the P2P-MapReduce architecture and implementation, while Section 3 defines the workload distribution function that serves as the agent decision-making tool. Finally, Sections 4 and 5 present computational experiment results and conclusions.

2 MapReduce PLATFORM

In order to implement and test the P2P-MapReduce platform we propose the architecture described in Subsection 2.1.

The core of our framework is a high degree of machine autonomy, which is not limited to the freedom of accepting or rejecting tasks, but also includes the right to independently change roles from execution to execution, or to take several roles (for example, reducer and supervisor) at the same time. Agents in this case carry organizational and managerial responsibilities. Communication between computing nodes is governed by the agents' social interactions; thus, the general system architecture fully relies on P2P principles. There are several distinctions from existing architectures.

Unlike in (Gangeshwari et al., 2012), where the authors organize multiple agent-supervised data centers into a hypercubic structure, we view every machine as an autonomous entity. Hypercube agents carry out supervisory functions for data center infrastructures with pre-installed MapReduce software. Decisions are made at the level of the organization; that is, accepting or rejecting jobs and optimizing communications. In our approach machines self-organize to actually perform MapReduce jobs without being pre-organized into any structure. Moreover, they do not require installing additional MapReduce software.

Ahmed-Zaki D., Dobrowolski G. and Kumalakov B.
Peer-to-Peer MapReduce Platform.
DOI: 10.5220/0004330405650570
In Proceedings of the 5th International Conference on Agents and Artificial Intelligence (ICAART-2013), pages 565-570
ISBN: 978-989-8565-39-6
Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

In (Marozzo et al., 2011) computing devices (run by agents) are assigned master or slave roles, and master nodes then cooperate to organize and manage effective job execution. One master node communicates with the user and organizes task execution, whilst other master nodes monitor it and voluntarily take over control if it fails. Slaves are assigned map and reduce operations by an active master node, execute the code and return the result to the master.

P2P principles, in this case, are applied to manage master node failure, whilst slave nodes remain under direct control. In our approach there is no direct control mechanism, only localized supervision in the form of reducer-mapper and supervisor-reducer relationships. This means that no node has direct control over others, but a node may indirectly influence execution flow. In this way we apply the complexity prism and design a system that makes broader use of agent autonomy.

We are also aware of other volunteer MapReduce architectures (Costa et al., 2011) and (Dang et al., 2012), which, however, do not make use of an agent-oriented approach.

The remainder of the section describes the system architecture and its current implementation in more detail.

2.1 Architecture

Our system consists of multiple nodes that interact in order to perform MapReduce jobs. Every node may initiate a user task or perform any task offered to it. The process is visualized in Figure 1.

A broker node receives the job specification from the user, breaks it into reduce and map tasks and broadcasts information messages to potential performers (Figure 1). Having received a broadcast message, other nodes evaluate it and either issue an offer or do not respond at all. The broker chooses between potential performers on the basis of offer price and readiness to become a reducer node, where the lowest offer (or the first lowest offer received, if there are several) wins.
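The selection rule can be sketched as follows. This is a minimal Python illustration rather than the JADE implementation: the `Offer` record and the `choose_performer` function are hypothetical names, offers are assumed to be listed in arrival order, and treating readiness to reduce as a hard filter is our reading of the rule.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Offer:
    """Hypothetical offer record; the field names are assumptions."""
    node_id: str
    price: float
    can_reduce: bool  # readiness to become a reducer node


def choose_performer(offers: Sequence[Offer]) -> Optional[Offer]:
    """Return the cheapest offer from a node that is ready to reduce.

    Offers are assumed to be in arrival order, so the strict '<'
    comparison keeps the first of several equally low offers.
    """
    best: Optional[Offer] = None
    for offer in offers:
        if not offer.can_reduce:
            continue  # assumed: nodes not ready to reduce are skipped
        if best is None or offer.price < best.price:
            best = offer
    return best
```

With two equally priced offers, the one that arrived first is returned, which mirrors the first come, first served tie-break.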

The chosen performer gets a confirmation message and looks for supervisors to serve as active backup entities for the duration of the execution. Their primary role is to monitor reducer actions, save the peer state and, if an unexpected failure occurs, restart the reducer in the last available state.

When supervision is set, the performer requests the job, gets reducer status and searches for mappers and leaf reducer nodes by following the same protocol. In this way nodes self-organize into a tree structure until the last required node is added to it (Figure 2).

Figure 1: UML Sequence Diagram describing agent interactions when performing a job.

Figure 2: Diagram describing the MapReduce tree structure that is formed by peer nodes before the reduce operation begins.

Reducer nodes serve as the core of the tree structure, whilst mapper nodes do not have leaf nodes at all. This means that every reducer node tries to find a leaf reducer and, if none is found, tree development terminates. The number of mapper nodes per reducer is set by the system developer, as is the number of supervisors required per reducer. One supervisor may supervise several reducers and take on mapper or reducer roles at the same time, depending on self-evaluation results.
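To illustrate how the tree grows and terminates, the following Python sketch builds such a structure from an ordered pool of willing nodes. It is a strong simplification under stated assumptions: the `Reducer` class and `build_tree` function are hypothetical, every reducer has at most one leaf reducer, each node takes exactly one role, and the offer and supervision protocol is omitted.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Reducer:
    """Hypothetical reducer record in the MapReduce tree."""
    node_id: str
    mappers: List[str] = field(default_factory=list)
    leaf: Optional["Reducer"] = None


def build_tree(candidates: List[str], mappers_per_reducer: int) -> Optional[Reducer]:
    """Grow a reducer chain until no further leaf reducer can be found."""
    pool = list(candidates)
    if not pool:
        return None
    root = Reducer(pool.pop(0))  # first reducer below the broker
    current = root
    while True:
        take = min(mappers_per_reducer, len(pool))
        current.mappers = [pool.pop(0) for _ in range(take)]
        # Assumed stopping rule: a leaf reducer is added only if at
        # least one more node remains to act as its mapper.
        if len(pool) < 2:
            break
        current.leaf = Reducer(pool.pop(0))
        current = current.leaf
    return root
```

With seven candidate nodes and two mappers per reducer, the sketch yields two reducers with two mappers each and leaves one node unused.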

Job execution terminates when all mapper nodes

pass their results to corresponding reducers and all re-

ducers in a hierarchy pass processed results up to the

level of the broker node.

It is also worth noting that the broker agent does not have any mapper nodes, but every reducer node does. Supervisors monitor reducer nodes, and reducers carry out the supervision role for their mapper nodes.

At the node level there are three main components: broker agent, performer agent and compiler/interpreter.

When a node receives a broadcast message, it is handled by the performer agent. The performer agent evaluates the node's current state and decides whether to form an offer message or to do nothing. If an offer is issued to the requesting node, the performer is responsible for handling the subsequent operations, that is, finding supervisors, passing executable code to the interpreter/compiler, retrieving execution results and sending them to the destination.

The broker agent is created by the interpreter/compiler when the user wants to launch a computing task. It is responsible for broadcasting information messages, finding supervisor and reducer nodes for itself and handling organizational communication at execution time.

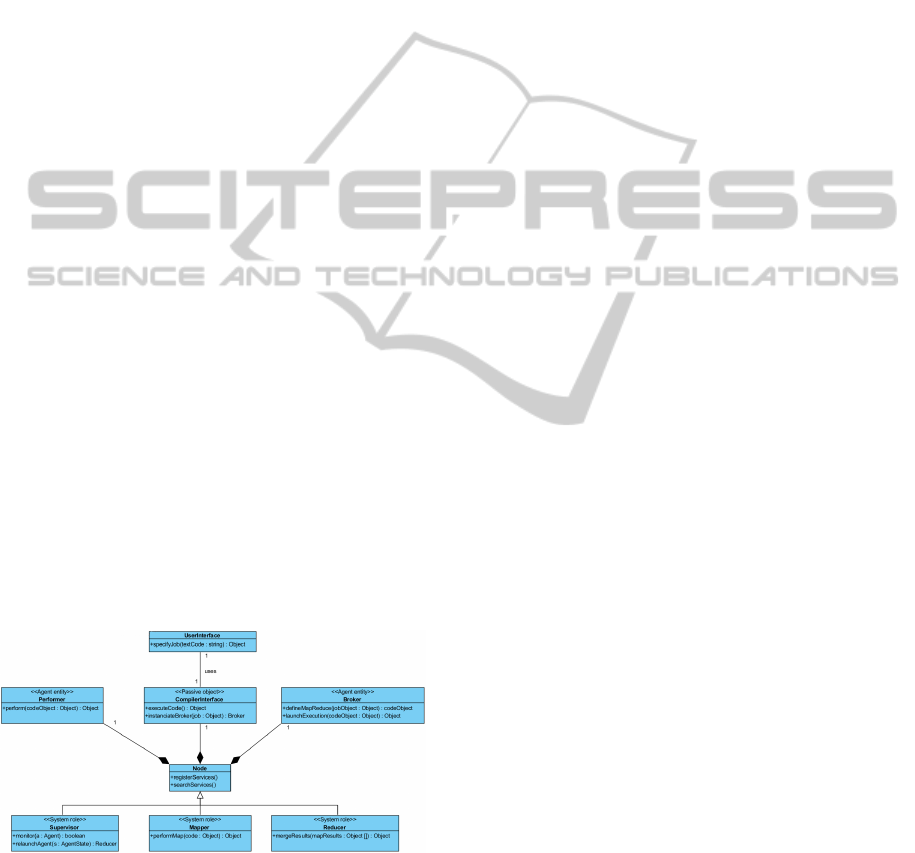

Figure 3 presents a class diagram, which visualizes the node structure, system roles and their relationship to the user interface.

Figure 3: UML Class Diagram describing the composite structure of nodes, their roles and relationship with the user interface.

More details on agent decision making are ex-

plained in Section 3.

2.2 Implementation

We implemented the system prototype using JADE (Bellifemine et al., 2007) because of the cross-platform properties and well-established development tools of Java. Moreover, the availability of standard JADE behaviours allowed convenient grouping of individual operations using the ParallelBehaviour and SequentialBehaviour classes supplied in the JADE-4.2.0 distribution.

Executable code (written in Lisp) is encapsulated into an ACLMessage object and passed between agents. Code is executed on the Java Virtual Machine using Clojure-1.4 (PC and server machines) and JScheme-7.2 (mobile devices). Note that the standard JScheme libraries had to be modified in order to work with floating point numbers and files on mobile devices. Apart from that, the software was used as supplied in the standard distribution.

Agent initialization includes publishing two advertisements: first, supervision services; second, MapReduce services. As noted before, there is no predefined mapper or reducer role, because the role depends on self-evaluation at execution time.

The supervisor does not copy the reducer state directly, but learns about changes by listening to duplicates of the messages sent to the reducer. In other words, it updates its state record when it receives duplicates of mapper and leaf reducer messages.
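The bookkeeping on the supervisor side might look like the following Python sketch. The `SupervisorRecord` class, its fields and the string payloads are assumptions made for illustration; the actual prototype exchanges JADE ACLMessage objects.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class SupervisorRecord:
    """Hypothetical state record kept for one supervised reducer."""
    reducer_id: str
    received: Dict[str, str] = field(default_factory=dict)

    def on_duplicate(self, sender_id: str, payload: str) -> None:
        """Store a duplicated mapper or leaf reducer message."""
        self.received[sender_id] = payload

    def last_state(self) -> Dict[str, str]:
        """Return a copy of the inputs the reducer is known to hold."""
        return dict(self.received)
```

Because the record is rebuilt from duplicated messages only, the supervisor never has to query the reducer, and `last_state()` is what it would hand over when restarting the reducer.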

In order to describe the job submission and failure recovery mechanisms we take an example scenario that corresponds to the algorithm described in Figure 1:

1. All agents register their supervision and execution services using the DFAgentDescription class;

2. When a job arrives, the broker agent breaks it down into map and reduce operations and launches the initiateExecution behaviour, which is an extension of the JADE Behaviour class;

3. When a prospective performer receives an offer, it decides whether or not to execute the reduce task. If the decision is positive, the agent instantiates a SequentialBehaviour object with a unique ConversationId;

4. The broker tracks the best offer among those received within a specified timeout and sends a confirmation;

5. The prospective performer becomes a reducer node and searches for supervisors by broadcasting ACLMessage.INFORM. When answers are received, the owners of the first three answers become supervisors and their addresses are put into offerMessage. Then offerMessage is broadcast to new potential performers;

6. Supervisors monitor their reducer node. If a call timeout is reached, the supervisor tries to re-launch the agent. If the host is unreachable, the supervisor tries to assign the task to another host at the last reducer state;

7. When the reducer receives mapper and leaf reducer results, it applies the provided reduce code to the data by passing them to the Clojure compiler or Scheme interpreter for execution. The result is encapsulated and returned to the specified destination;

8. When the reducer part is done, the reducer notifies the supervisors and all of them delete their job SequentialBehaviour object.
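The supervisor decision in step 6 can be summarized by a small Python sketch. The `Recovery` enumeration and the `recovery_action` function are hypothetical names, and how reachability is probed is left out; only the ordering of the two recovery attempts is taken from the scenario.

```python
from enum import Enum


class Recovery(Enum):
    """Hypothetical labels for the supervisor's possible reactions."""
    NONE = "no action"
    RELAUNCH = "re-launch the agent on the same host"
    REASSIGN = "assign the task to another host at the last reducer state"


def recovery_action(seconds_since_last_call: float,
                    call_timeout: float,
                    host_reachable: bool) -> Recovery:
    """Pick the supervisor reaction for one monitored reducer."""
    if seconds_since_last_call < call_timeout:
        return Recovery.NONE  # reducer is still responding in time
    if host_reachable:
        return Recovery.RELAUNCH
    return Recovery.REASSIGN
```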

3 WORKLOAD DISTRIBUTION FUNCTION

The task of the distribution function is to distribute the workload between computing nodes as evenly as possible. Formally, it may be specified as follows.

Let us denote an executable task by $J$ and its steps by $k$, such that $J = \{k_1, k_2, \ldots, k_n\}$, where all steps are performed by a set of computing nodes $A = \{a_1, a_2, \ldots, a_m\}$. A step $k$ in this case is an uninterrupted process which is performed according to its specification. If step $k_n$ may be performed by node $a_m$, we denote it as a mapping function $k_n \to a_m$.

Workload distribution means that mappings between different nodes in $A$ should be distributed as evenly as possible. Let us use price as a derivative of available resources, workload and other parameters, which reflects the comparative workload of an individual device. As a result, every successful mapping $k_i \to a_j$ ($1 \le i \le n$, $1 \le j \le m$) gets a computing price $p_{ijk}$ assigned by the accepting computing node. The price function is the following:

$$p_{ijk} = f(\omega_k, p_b, b_l) \qquad (1)$$

Here, $p_b$ denotes the basic resource price, which is set by the device owner; $b_l$ denotes battery load; and $\omega_k$ denotes resource availability at the time when step $k$ arrives. $\omega_k$ takes the following discrete values:

$$\omega_k = \begin{cases} 1 & \text{device free, can map and reduce} \\ 0.6 & \text{device busy, can map and reduce} \\ 0.3 & \text{device can map only} \\ 0 & \text{otherwise} \end{cases}$$
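As an illustration, the availability levels and one possible price function can be written in Python as below. The `availability` function follows the listed values directly. The `price` function is purely hypothetical: the concrete form of $f$ is not given here, so we assume a price that grows as availability or remaining battery charge shrinks, and we assume the battery value is a charge fraction in $(0, 1]$.

```python
from typing import Optional


def availability(is_busy: bool, can_map: bool, can_reduce: bool) -> float:
    """Return the discrete availability value omega_k listed in the text."""
    if can_map and can_reduce:
        return 0.6 if is_busy else 1.0
    if can_map:
        return 0.3
    return 0.0


def price(omega: float, base_price: float, battery_level: float) -> Optional[float]:
    """Hypothetical instance of f(omega_k, p_b, b_l); not the paper's formula.

    Assumption: the price rises when availability or battery charge drops.
    battery_level is assumed to be a charge fraction in (0, 1].
    """
    if not 0.0 < battery_level <= 1.0:
        raise ValueError("battery_level must be in (0, 1]")
    if omega <= 0.0:
        return None  # the node makes no offer
    return base_price / (omega * battery_level)
```

Under this assumed form a free device always quotes less than a busy one with the same base price and battery, so the cheapest offer tends to come from the node with the most free resources.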

The computed prices for different mappings may differ, $p_{ijk} \ne p_{ljk}$, where $i \ne l$ and $1 \le i, l \le m$. If they are equal, the conflict is resolved on a first come, first served basis.

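After the price is computed, the tuple $(\rho(\omega_{ijk}), p_{ijk})$ is returned to the initiator node, where $p_{ijk}$ is the computed price and $\rho(\omega_{ijk})$ is determined as follows:

$$\rho(\omega_{ijk}) = \begin{cases} 1 & \omega_{ijk} > 0,\ \text{node wants to supply services} \\ 0 & \text{otherwise} \end{cases}$$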

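Then, the issuer returns the result of the function $\varphi(p_{ijk})$, which determines the executor node:

$$\varphi(p_{ijk}) = \begin{cases} 1 & \rho(\omega_{ijk}) = 1,\ p_{ijk} \text{ of } k \to a_i \text{ lowest} \\ 0 & \text{otherwise} \end{cases}$$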

We also include the client balance $cb$ in order to represent the amount of money the user can spend on services.

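Using the values stated above, the distribution function is formulated as follows:

$$\min_{p} \sum_{i=1}^{n} \rho(\omega_{ijk})\,\varphi(p_{ijk}) \qquad (2)$$

Subject to:

$$\sum_{i=1}^{n} \rho(\omega_{ijk})\,\varphi(p_{ijk}) \le cb \qquad (3)$$

$$\sum_{i=1}^{n} \rho(\omega_{ijk})\,\varphi(p_{ijk}) > 0 \qquad (4)$$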

Objective function (2) minimizes the overall cost of performing a MapReduce job by choosing the lowest price at each step. The constraints ensure that the overall solution cost never exceeds the client balance (3) and that at least one path of job execution exists (4).
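Read this way, the optimization amounts to a greedy choice per step followed by two feasibility checks. The Python sketch below follows that prose reading; the `plan_job` function and its input layout are assumptions, and summing the chosen prices to obtain the overall cost is our interpretation of constraint (3).

```python
from typing import Dict, List, Optional, Tuple


def plan_job(offers_per_step: List[Dict[str, float]],
             client_balance: float) -> Optional[List[Tuple[str, float]]]:
    """Choose the cheapest willing node per step, or None if infeasible.

    offers_per_step[i] maps node id to price for step i and contains only
    nodes that want to supply services. Dictionaries are assumed to be
    filled in arrival order, so ties go to the earliest offer.
    """
    plan: List[Tuple[str, float]] = []
    total = 0.0
    for offers in offers_per_step:
        if not offers:
            return None  # no execution path exists for this step
        node = min(offers, key=offers.get)  # first lowest price wins
        plan.append((node, offers[node]))
        total += offers[node]
    if total > client_balance:
        return None  # overall cost exceeds the client balance
    return plan
```

For example, `plan_job([{"a": 2.0, "b": 1.0}, {"c": 1.5}], 3.0)` selects node `b` for the first step and node `c` for the second, while the same offers with a balance of 2.0 are rejected.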

The objective function is implemented as the aim of every agent to choose the cheapest offer available. In turn, an offer is a derivative of the unused physical resources of the host device. In this way it is ensured that the next step performer is the one that has the larger proportion of free resources.

4 EXPERIMENT AND EVALUATION

4.1 Experiment Design

In order to test our P2P platform against master-slave based systems we set up an infrastructure consisting of 23 PCs with Intel Core i3 processors and 2 GB RAM, and 2 HP servers with 4-core Intel Xeon processors and 6 GB RAM. To fully test the P2P infrastructure we also included 12 mobile devices running the Windows Phone and Android operating systems in the P2P-MapReduce runs.

All nodes (except mobile devices) had both the master-slave based Hadoop-1.0.4 stable release and the newly designed multi-agent MapReduce system installed on them. Each system was tested by performing three rounds of 100 runs of the same MapReduce task one after another. When Hadoop runs were performed the P2P-MapReduce system was off, and vice versa.

At the first stage the probability of a node (including the head node) accepting the task and crashing was programmed to be 5%, at the second stage 10% and at the third 20%. Crashing in this case means that every time a node receives the task it calls a method which, with the given probability, calls finalize(). The frequency of task launches is controlled by a randomizer that generates pauses between launches in the range between 0 and 2 seconds.
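The failure injection and launch pacing can be mimicked with the short Python sketch below. It is not the Java code used in the experiment: `should_crash` and `launch_pause` are hypothetical helpers, the crash is reported as a boolean instead of terminating the agent, and a uniform distribution of pauses is an assumption.

```python
import random


def should_crash(failure_probability: float, rng: random.Random) -> bool:
    """Decide whether a node that just received a task simulates a crash."""
    return rng.random() < failure_probability


def launch_pause(rng: random.Random) -> float:
    """Pause before the next task launch in seconds, assumed uniform on [0, 2]."""
    return rng.uniform(0.0, 2.0)
```

Passing a seeded `random.Random` instance keeps a round reproducible, for example `should_crash(0.05, random.Random(1))` for the first stage.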

To keep a record of finished jobs and workload distribution, performer agents sent acknowledgement messages and CPU load data to the Logger agent. The collected data was plotted using MS Excel.

4.2 Experiment Results

Figure 4 presents the percentage of finished jobs given 100 runs of the MapReduce task. The results show that the developed P2P platform demonstrates better dependability at the defined probabilities of failure. This corresponds to the theoretical result of (Antonopoulos and Gillam, 2010). However, our implementation of the P2P-MapReduce platform finished 99% of all jobs at a 5% failure rate, which decreased to 82% at a 20% failure probability, unlike the predicted 100%. Preliminary analysis of failure causes indicates that this might be due to supervisors trying to restart reducer agents on overloaded devices. Further analysis should clarify this issue.

This result satisfies the first aim of the research and supports the hypothesis on P2P-MapReduce platform dependability.

Figure 4: Competitive analysis of P2P platform and Hier-

archical platform performance given different node failure

probabilities.

In terms of the second aim, workload distribution, the platform shows the performance presented in Figure 5. The workload is evenly distributed between computing nodes, except that the highest workload is recorded at devices number 13, 19 and 25. This is because those are mobile phones, on which a map operation takes considerably more resources to compute.

Figure 5: Workload distribution in P2P computing environ-

ment (x axis - CPU load; y axis - CPU hours; z axis - agent

identifier).

For a deeper analysis let us consider the average workload by device type: server machines, mobile devices and personal computers (Figure 6). It confirms the higher load on mobile devices, whilst PCs and server machines use a small share of their resources.

Figure 6: Average workload distribution over device types

in P2P computing environment.

This result confirms that the developed system sustains the desired behavior under system complexity. Thus, there is potential in applying a similar system to infrastructures where computing nodes are not guaranteed to be available for a long time.

5 CONCLUSIONS

The presented research provides some practical insights into the P2P-MapReduce computing concept. In particular, the results confirm that the system maintains the desired workload balancing behavior in a complex environment and is able to self-organize and self-reorganize dynamically without being explicitly programmed to do so.

On the other hand, system performance has not been studied yet, whilst it is one of the most important factors when choosing a computing platform. Thus, there is a need to analyze execution efficiency and compare it to available P2P-MapReduce platform evaluations.

Further research is going to concentrate on execution performance. In particular, we shall design or adopt an agent learning framework to manage system efficiency by affecting the agents' social behavior.

Finally, it is worth pointing out considerable limitations of the presented research. First, the implemented P2P platform is simple, and future platform development may change its dependability in either direction. Second, the experimental installation of Hadoop might not be optimal, and the results may be misleading to some extent.

REFERENCES

Antonopoulos, N. and Gillam, L. (2010). Cloud Computing: Principles, Systems and Applications, volume 0 of Computer Communications and Networks, chapter 7, pages 113–126. Springer-Verlag London Limited, London, UK.

Bellifemine, F. L., Caire, G., and Greenwood, D. (2007). Developing Multi-Agent Systems with JADE. John Wiley & Sons, NJ.

Costa, F., Silva, L., and Dahlin, M. (2011). Volunteer cloud computing: MapReduce over the Internet. In IEEE International Parallel and Distributed Processing Symposium, pages 1855–1862. IEEE Computer Society.

Dang, H. T., Tran, H. M., Vu, P. N., and Nguyen, A. T. (2012). Applying MapReduce framework to peer-to-peer computing applications. In Nguyen, N. T., Hoang, K., and Jedrzejowicz, P., editors, ICCCI (2), volume 7654 of Lecture Notes in Computer Science, pages 69–78. Springer.

Gangeshwari, R., Janani, S., Malathy, K., and Miriam, D. D. H. (2012). Hpcloud: A novel fault tolerant architectural model for hierarchical MapReduce. In ICRTIT 2012, pages 179–184. IEEE Computer Society.

Marozzo, F., Talia, D., and Trunfio, P. (2011). A framework for managing MapReduce applications in dynamic distributed environments. In Cotronis, Y., Danelutto, M., and Papadopoulos, G. A., editors, PDP, pages 149–158. IEEE Computer Society.

Zambonelli, F., Jennings, N. R., and Wooldridge, M. (2003). Developing multiagent systems: The Gaia methodology. ACM Trans. Softw. Eng. Methodol., 12(3):317–370.
