Smoothing Parameters Selection for Dimensionality Reduction Method

based on Probabilistic Distance

Application to Handwritten Recognition

Faycel El Ayeb and Faouzi Ghorbel

GRIFT Research Group, CRISTAL Laboratory, Ecole Nationale des Sciences de l’Informatique (ENSI),

La Manouba University, 2010 Manouba, Tunisia

Keywords:

Dimensionality Reduction, Feature Extraction, Fisher Criterion, Orthogonal Density Estimation, Patrick-

Fisher Distance, Smoothing Parameter, Handwritten Digits Classification, Invariant Descriptors.

Abstract:

Here, we intend to give a rule for the choice of the smoothing parameter of the orthogonal estimate of Patrick-

Fisher distance in the sense of the Mean Integrate Square Error. The orthogonal series density estimate preci-

sion depends strongly on the choice of such parameter which corresponds to the number of terms in the series

expansion used. By using series of random simulations, we illustrate the better performance of its dimensional-

ity reduction in the mean of the misclassification rate. We show also its better behavior for real data. Different

invariant shape descriptors describing handwritten digits are extracted from a large database. It serves to com-

pare the proposed adjusted Patrick-Fisher distance estimator with a conventional feature selection method in

the mean of the probability error of classification.

1 INTRODUCTION

It is well known that the feature extraction obtained

by dimensional reduction algorithms is very impor-

tant task for pattern recognition. Different applica-

tions in this field as face analysis, handwritten charac-

ter recognition, 3D-medical image segmentation have

investigated such approach. The famous Linear Dis-

criminate Analysis (LDA) method which optimizes

the Fisher ratio (Fukunaga, 1990) is very used in

practice for reducing dimensionality. However, when

one of the conditional probability density functions

(PDFs) relative to labels follows a non Gaussian dis-

tribution, the LDA gives generally bad and non stable

result (Ghorbel et al., 2012). It has been proved that

the maximization of an estimate of the Patrick-Fisher

distance (Drira and Ghorbel, 2012) (Aladjem, 1997)

(Patrick and Fisher, 1969) (Hillion et al., 1988) could

be improves the result because it considers the hole of

the statistical information about the conditional ob-

servation. However the LDA method is generally ex-

pressed only according to the first and second statisti-

cal moments of PDFs. In the present work, we inves-

tigate a dimensionality reduction method introduced

in (Ghorbel, 2011). It consists on an estimator of the

Patrick-Fisher distance (

ˆ

d

PF

) using the conventional

orthogonal series density estimator.

Among the non parametric density estimation

method, the PDFs could be approximated by an or-

thogonal series expansion (Cencov and Nauk, 1962).

The performance and smoothness of the orthogonal

series density estimate depend strongly on the optimal

choice of the parameter k

N

which corresponds to the

number of terms in the series expansion used. Rather

than arbitrarily choosing the value of k

N

for each class

to estimate the Patrick-Fisher distance (d

PF

), we pro-

pose to select the number of terms that minimize the

mean integrated square error (MISE) of the

ˆ

d

PF

. The

remaining of the paper is organized as follows. In

section 2, we review the orthogonal series density es-

timator. The LDA method and the one based on the

ˆ

d

PF

are recalled in Section 3. After that, we propose

a novel rule for selecting the optimal values of k

N

for

ˆ

d

PF

. Experimental results both on simulated data and

on handwritten digits database are given in Section 4.

Section 5 gives a conclusion of the paper.

2 ORTHOGONAL SERIES

DENSITY ESTIMATION

In the following, we just recall the orthogonal series

density estimation method by presenting its essen-

325

El Ayeb F. and Ghorbel F. (2013).

Smoothing Parameters Selection for Dimensionality Reduction Method based on Probabilistic Distance - Application to Handwritten Recognition.

In Proceedings of the 2nd International Conference on Pattern Recognition Applications and Methods, pages 325-330

DOI: 10.5220/0004333503250330

Copyright

c

SciTePress

tial convergence studies detailed in (Beauville, 1978).

The orthogonal series estimator of the PDF of a given

sample X

i

assumed to follow the same distribution

could be obtained by the following limited Fourier se-

ries expansion:

ˆ

f

k

N

(x) =

k

N

∑

m=0

ˆa

m,N

e

m

(x) (1)

Where {e

m

(x)} is a complete and orthogonal basis

of functions. Here k

N

is called the truncation value

or sometimes the smoothing parameter. It represents

an integer depending on the sample size N. The

Fourier coefficients estimators {ˆa

m,N

} could be writ-

ten according to the sample set of random variable

X = (x

1

,...,x

N

) as:

{ˆa

m,N

} =

1

N

N

∑

i=1

e

m

(x

i

) (2)

The convergence of this orthogonal series density

estimator depends on the choice of k

N

. Kronmal and

Tarter investigated a method for the determination of

the optimal choice of k

N

(Kronmal and Tarter, 1968).

2.1 Smoothing Parameter Selection for

Orthogonal Density Estimation

Convergence theorems have been established to find

the optimal value of k

N

for several error criteria.

Among these criteria the MISE between the theoreti-

cal PDF and its estimate

ˆ

f

k

N

can be expressed by:

MISE = E(

Z

|f (x) −

ˆ

f

k

N

(x)|

2

dx) (3)

Where E(.) is the expectation operator.

By replacing

ˆ

f

k

N

(x) by its orthogonal density es-

timate and after some calculations given in (Kronmal

and Tarter, 1968), the MISE could be written as fol-

low:

MISE(

ˆ

f

k

N

(x)) '

1

N −1

k

N

∑

i=0

[2

ˆ

d

i

−(N + 1) ˆa

2

i,N

] +

∞

∑

i=0

a

2

i

(4)

Where

ˆ

d

i

=

1

N

N

∑

j=1

e

2

i

(x

j

) (5)

As

∞

∑

i=0

a

2

i

does not depend on k

N

then searching for

k

N

which minimize the MISE(

ˆ

f

k

N

(x)) is the same to

minimize the following expression:

J(k

N

) =

1

N −1

k

N

∑

i=0

[2

ˆ

d

i

−(N + 1) ˆa

2

i,N

] (6)

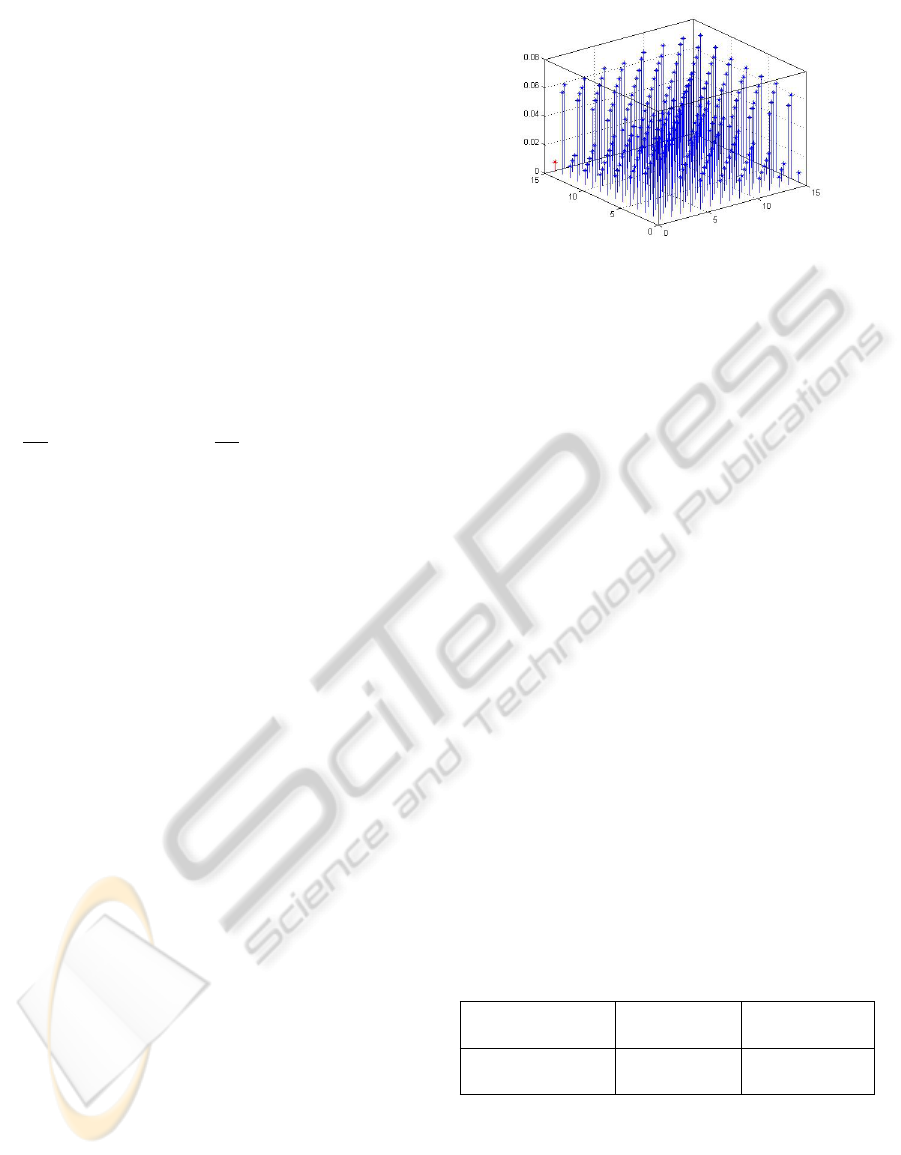

Figure 1: Behavior of J(k

N

) against k

N

(k

∗

N

correspond to

the minima of J(k

N

)).

Kronmal and Tarter (Kronmal and Tarter, 1968)

indicated the existence of an optimal value of k

N

that

minimize the J(k

N

). They proved that k

N

has a value

much smaller than the sample size N. Their strategy

for determining the optimal value of k

N

consists on

the following rule. Starting from k

N

= 1 and increase

by 1 this value until J(k

N

) increase. The optimal value

will be the value of k

N

just before J(k

N

) increase.

This strategy is called stopping rule. Since J(k

N

) may

have multiple local minima, to avoid being trapped at

a local minimum, one cannot simply increase m incre-

mentally until J(k

N+1

) > J(k

N

). For this reason, Kro-

nmal and Tarter give an improvement on this rule by

suggesting to stop the rule only if we obtain a certain

sequence length of ∆J(k

N

) = (J(k

N

) −J(k

N+1

)) < 0.

This rule is adopted latter in (Beauville, 1978) and

(Wong and Wang, 2005).

We give the following experience to illustrate this

strategy. We generate a sample set of random variable

X from a multimodal distribution composed of the su-

perposition of two Gaussians with the following pa-

rameters µ

1

= 1, Var

1

= 2 and µ

2

= 3, Var

2

= 1. Here

µ and Var correspond respectively to the mean and

the variance of X . In Figure 1, we plot J(k

N

) against

k

N

for different values of sample size. The optimal

values obtained are equal to 4, 6 and 15 respectively

to sample size equal to 100, 1000 and 10000. This

experimental results show that the optimal number of

terms for the orthogonal density estimation is much

smaller than the sample size. In addition the error

J(k

N

) increases monotonically from all values greater

than the optimal k

N

. In the next section, we will re-

view a standard method for dimensionality reduction

and the one we investigated based on the

ˆ

d

PF

.

3 DIMENSIONALITY

REDUCTION

The goal of a dimensionality reduction is to project

ICPRAM2013-InternationalConferenceonPatternRecognitionApplicationsandMethods

326

high dimensional data samples in a low dimensional

space in which groups of data are the most sepa-

rated. In the following subsections, we recall the LDA

method and the one based on the

ˆ

d

PF

. We also present

a novel rule for selecting the optimal smoothing pa-

rameters values to improve the convergence of the

ˆ

d

PF

.

3.1 Linear Discriminant Analysis

Method

The LDA is a widely used method for dimensionality

reduction. It intends to reduce the dimension, so that

in the new space, the between class distances are max-

imized while the within class ones are minimized. To

that purpose, LDA considers searching for orthogonal

linear projection matrix W that maximizes the follow-

ing so-called Fisher optimization criterion (Fukunaga,

1990) :

J(W ) =

trace(W

T

S

b

W )

trace(W

T

S

W

W )

(7)

S

W

is the within class scatter matrix and S

b

is the

between class scatter one. Their two well known ex-

pressions are given by:

S

W

=

c

∑

k=1

π

k

E((X −µ

k

)(X −µ

k

)

T

) (8)

S

b

=

c

∑

k=1

π

k

(µ

k

−µ)(µ

k

−µ)

T

(9)

Where µ

k

is the conditional expectation of the

original multidimensional random vector X relative to

the class k. µ corresponds to the mean vector over all

classes. c is the total number of classes and π

k

de-

note the prior probability of the k

th

class. E(.) is the

expectation operator.

Because it’s not practical to find an analytical so-

lution W that maximizes the criteria J(W), one pos-

sible suboptimal solution is to choose W formed by

the d eigenvectors of S

−1

W

S

b

those correspond to the

d largest eigenvalues. In general, the value of d is

chosen to be equal to the number of classes minus

one. After computation of W, the LDA method pro-

ceeds to the projection of the original data onto the

reduced space spanned by the vectors of W. Note that

this method is based only on first and second order

moments and thus it assumes that the different under-

lying distributions of classes are normally distributed.

This restrictive assumption constitutes a limitation to

using LDA and makes it fail when dealing with non-

Gaussian classes distributions.

3.2 Dimensionality Reduction using

Patrick-Fisher Distance based on

Orthogonal Series

Let recall the expression of the Patrick-Fisher dis-

tance d

PF

:

d

PF

= [

Z

X

|π

1

P

1

(x/w

1

) −π

2

P

2

(x/w

2

)|

2

dx]

1/2

(10)

Here π

i

is the prior probability of class w

i

and

P

i

(x/w

i

) denotes its conditional probability density.

The

ˆ

d

PF

(Ghorbel, 2011) is obtained by substitut-

ing the conditional probability density by its orthog-

onal estimation into the expression of the d

PF

. After

some computations given in (Ghorbel, 2011), the ex-

pression of the

ˆ

d

PF

could be written as follow:

ˆ

d

PF

(W ) =

1

N

2

(

N1

∑

i=1

N1

∑

j=1

K

k

N1

(< W |x

1

i

>,< W |x

1

j

>)+

N2

∑

i=1

N2

∑

j=1

K

k

N2

(< W |x

2

i

>,< W |x

2

j

>) −2Re(

N1

∑

i=1

N2

∑

j=1

K

min(k

N1

,k

N2

)

(< W |x

1

i

>,< W |x

2

j

>)))

(11)

Where < | > denotes the scalar product opera-

tor. x

k

i

is the i

th

observation of the k

th

class. Re(.)

correspond to the real part of complex number and

K

k

Ni

(x,y) is the kernel function associated to the or-

thogonal system of functions {e

m

(x)} used (Ghorbel,

2011). {N

i

}

i∈{1,2}

is the sample size of the i

th

class

and N is the total size of all classes.

The

ˆ

d

PF

expression depends on {k

Ni

}

i∈{1,2}

which

represent the number of terms to be used to estimate

the PDF of the i

th

class.

For dimensionality reduction purpose, this

ˆ

d

PF

is

considered as the criterion function to be maximized

with respect to a linear projection matrix W that trans-

form original data space onto a d-dimensional sub-

space so that classes are most separated. A linear pro-

jection matrix W that maximizes the

ˆ

d

PF

should be

found numerically. Since the equation of this estima-

tor is highly nonlinear according to the element of W

and an analytical solution is often practically not fea-

sible, we will resort to an optimization algorithm to

compute a suboptimal projection matrix W.

3.3 Smoothing Parameter Selection for

Orthogonal Patrick-Fisher Distance

Estimator

To determine the optimal numbers of {k

Ni

}

i∈{1,2}

to

SmoothingParametersSelectionforDimensionalityReductionMethodbasedonProbabilisticDistance-Applicationto

HandwrittenRecognition

327

be used for estimating the

ˆ

d

PF

, we propose to con-

sider those minimizing the MISE criteria of this esti-

mator. We define this latter as:

MISE = J(k

N1

,k

N2

) = E(|d

PF

−

ˆ

d

PF

|

2

) (12)

Note that the simplicity of the MISE expression

for orthogonal density estimator does not seem to be

the same for the

ˆ

d

PF

. Hence, a numerical evaluation

of k

N

1

and k

N

2

is extremely complex. For solving the

optimal choice problem for the

ˆ

d

PF

, we propose to use

for each class orthogonal density estimator the opti-

mal value determined by the method described in sec-

tion 2. Rather than used a pre-specified values, this

choice seems to be reasonable to minimize the MISE

of the

ˆ

d

PF

. To verify this purpose, we give the follow-

ing simulation study. We generate two samples data

from two different Gaussians distributions with pa-

rameters µ

1

= 1, Var

1

= 3 and µ

2

= 3, Var

2

= 1. Each

sample have a size equal to 1000. We vary k

N1

from 1

to

√

N1 and k

N2

from 1 to

√

N2 and we calculate

ˆ

d

PF

for each pair (k

N1

,k

N2

) by considering k

N1

terms and

k

N2

terms to estimate respectively the PDF of the first

sample and the second one. The theoretical d

PF

can

be calculated since we have the analytical expression

of the Gaussian PDF of each sample. We approxi-

mate the integral in the expression of d

PF

by using

the Simpson’s method (Atkinson, 1989). To estimate

the expectation in the expression of J(k

N1

,k

N2

), we

generate samples one hundred times and we calculate

the means of the square difference between the d

PF

and its orthogonal estimation

ˆ

d

PF

. Figure 2 shows the

values of J(k

N1

,k

N2

). The pair (k

N1

,k

N2

) that mini-

mizes J(k

N1

,k

N2

) is selected to be used as the optimal

values of k

N1

and k

N2

.

Based on an extensive simulation, the values of

k

N1

and k

N2

which minimize respectively the orthog-

onal density estimate of the first class and the sec-

ond one give a sub-optimal solution to minimize

J(k

N1

,k

N2

). This choice could be useful when we

have no information about the PDFs of data which

corresponds generally to the case of real world data.

4 EXPERIMENTAL RESULTS

In this section, we intend to compare the perfor-

mances of the dimensionality reduction method based

on the

ˆ

d

PF

described above with the LDA both on sim-

ulated data and on real world dataset. To do that, we

evaluate the classification accuracy of a nonparamet-

ric Bayesian classifier that is applied on the projected

data onto the reduced space. We evaluate the classifi-

cation accuracy by counting the number of misclassi-

fied samples obtained by the classifier over all classes

of the projected data.

Figure 2: Values of J(k

N1

,k

N2

) against the different val-

ues of pair (k

N1

,k

N2

). In red color the selected minima of

J(k

N1

,k

N2

).

4.1 Experiment with Simulated Data

This experiment concerns the two-class case. Vec-

tors data from the first class are drawn from a multi-

variate Gaussian distribution with mean vector µ

1

=

(3...3)

T

. For the second class, vectors data are gen-

erated from a mixture of two multidimensional Gaus-

sians distributions. The first distribution has a mean

vector µ

2

= (2...2)

T

and the second has a mean vector

µ

3

= (4...4)

T

. We consider for all these distributions

the same covariance matrix

∑

= 2I where I denotes

the identity matrix. The sample size for each class

is equal to 1000 and generated vectors have a dimen-

sion equal to 14. We search for the projection vector

W that map the generated data onto the optimal one-

dimensional subspace according to the two methods

of reduction studied. Note that the used system of the

orthogonal functions is the trigonometric one (Hall,

1982). After finding the projection vector W accord-

ing to each method, simulated data are projected onto

the reduced space. Then we applied a Bayesian clas-

sifier on the projected data obtained. Classification

results are summarized in Table 1. We remark that the

dimensional reduction accuracy of the method based

on the

ˆ

d

PF

is better than the LDA.

Table 1: Classification results of experiment with simulated

data.

LDA

method

Method

based on

ˆ

d

PF

Misclassification

rate

0.47 0.22

The LDA method fails to find an optimal subspace

in which satisfactorily class separation is obtained

since the original simulated data contain multimodal

distribution. However, the method based on the

ˆ

d

PF

succeeds to overcome the restriction of unimodality.

The success of this latter method can be explained by

the fact that the

ˆ

d

PF

based method accounts for higher

ICPRAM2013-InternationalConferenceonPatternRecognitionApplicationsandMethods

328

order statistics and not just for the second order as in

the LDA.

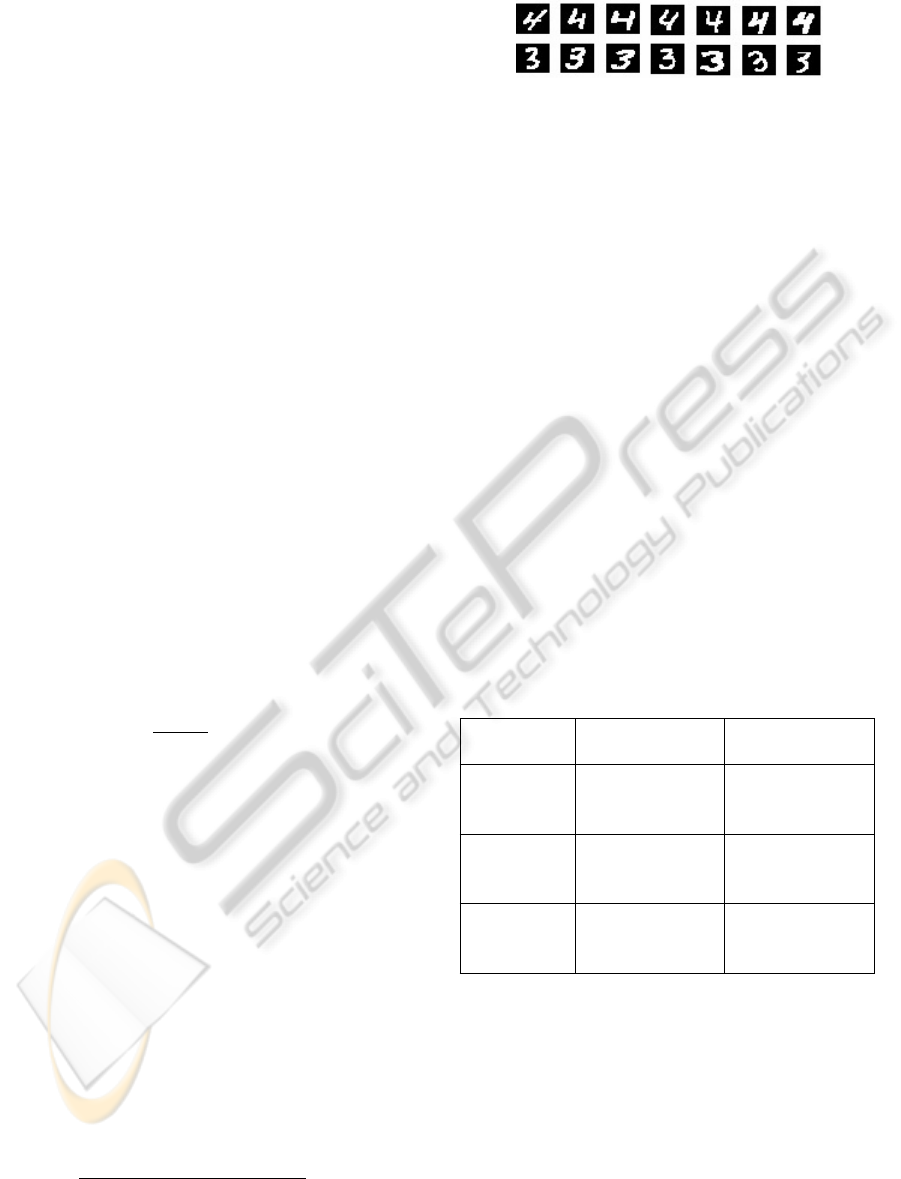

4.2 Experiment with Real World Data

In this experiment, we consider a sample set selected

from the publicly available MNIST database contain-

ing binary images of handwritten digits. From this

database we consider a subset formed by two classes

of digits. Each class contains 1000 randomly selected

digits. Figure 3 shows some examples of selected

digits. Each digit is described by a features vector

which is invariant under planar rotation, translations

and scale factors. We denote the features vector by

I

k

. Among the large proposed invariant descriptors

for planar contour shape in the literature, we con-

sider here three different kinds of contour-descriptors.

The first one is well known and is called Fourier de-

scriptors (Ghorbel and Bougrenet, 1990). The second

one introduced by Crimmins admits the completeness

property (Crimmins, 1982). Third one introduced in

(Ghorbel, 2011) gives in the same time the complete-

ness and the stability properties to the descriptors. In

the following, we recall the definitions of these three

set of invariants descriptors. Let denote by γ a nor-

malized arc length of a closed contour which repre-

sents the exterior handwritten boundary and by C

k

(γ)

its corresponding Fourier coefficient with order k.

4.2.1 Fourier Descriptors Set

{I

k

} =

|C

k

(γ)|

|C

1

(γ)|

∀ k ≥ 2 (13)

4.2.2 Crimmins Descriptors Set

I

k

0

= |C

k

0

(γ)|

I

k

1

= |C

k

1

(γ)|

(

∀ k 6= k

0

and k 6= k

1

:

I

k

= C

k

0

−k

1

k

(γ)C

k

1

−k

k

0

(γ)C

k−k

0

k

1

(γ)

(14)

4.2.3 Ghorbel Descriptors Set

I

k

0

= C

k

0

(γ)

I

k

1

= C

k

1

(γ)

∀ k 6= k

0

and k 6= k

1

:

I

k

= 0 i f |C

k

0

(γ)| = 0 or |C

k

1

(γ)| = 0

I

k

=

C

k

0

−k

1

k

(γ)C

k

1

−k

k

0

(γ)C

k−k

0

k

1

(γ)

|C

k

0

(γ)|

k

1

−k−p

|C

k

1

(γ)|

k−k

0

−q

otherwise

(p and q are two f ixed positive f loats)

(15)

Figure 3: Some examples of digits selected from MNIST

database.

We compute Fourier coefficients from digit out-

line boundary and we construct the three invariants

descriptors sets as defined above. We consider for

each digit shape from our selected sample the first

fourteen Fourier coefficients. After dimensional re-

duction with the two methods studied, classification

results are computed and are illustrated in Table 2.

We notice that the two dimensionality reduction

methods perform similar when using the Fourier de-

scriptors dataset and Ghorbel descriptors. These re-

sults can be justified by the fact that these two sets

of descriptors verify the property of stability intro-

duced in (Ghorbel, 2011). This property expresses the

fact that low level distortion of the shape does not in-

duce a noticeable divergence in the set of descriptors.

Hence, the distribution of the invariant descriptors as-

sociated to each class has one mode since in this case

we can assume that only the first and the second statis-

tical moments are needed to estimate their conditional

PDFs. This is not the case for Crimmins descriptors

since it does not verify the stability property so that

the induced classes PDFs could be multi-modal.

Table 2: Misclassification rate results of experiment with

real dataset.

LDA method

Method based

on

ˆ

d

PF

Fourier

descriptors

set

0.26 0.25

Crimmins

descriptors

set

0.38 0.15

Ghorbel

descriptors

set

0.1 0.1

5 CONCLUSIONS

In this paper we propose a rule for the determina-

tion of the optimal number of terms in an orthogo-

nal series for best approximation of the orthogonal

Patrick-Fischer distance estimator. When data are

multi-modal, experimental results both on simulated

data and on real dataset have shown that the orthogo-

nal Patrick-Fisher distance estimator gives better per-

formance in the mean of misclassification rate since it

SmoothingParametersSelectionforDimensionalityReductionMethodbasedonProbabilisticDistance-Applicationto

HandwrittenRecognition

329

increases the probabilistic measure between the pro-

jected classes onto the reduced space and decreases

the number of the misclassified samples. Otherwise,

when the different conditional distributions are with

one mode, the LDA performance becomes similar.

Thus, LDA becomes preferable because of its relative

algorithmic simplicity. Simulation data and real data

basis are tested in order to prove the importance of the

adjustment of the orthogonal Patrick-Fischer distance

estimator. In our future work, we will consider the

multi-class case.

REFERENCES

Aladjem, M. (1997). Linear discriminant analysis for two

classes via removal of classification structure. In In

IEEE PAMI.

Atkinson, K. (1989). An Introduction to Numerical Analy-

sis. John Wiley and Sons.

Beauville, J. P. A. (1978). Estimation non paramtrique de la

densit et du mode exemple de la distribution gamma.

In In Revue de Statistique Applique.

Cencov, N. N. and Nauk, D. Z. (1962). Estimation of an

unknown density function from observations. In In

SSSR.

Crimmins, T. (1982). A complete set of fourier descriptors

for two-dimensional shapes. In In IEEE Trans. Syst.

Drira, W. and Ghorbel, F. (2012). Dimension reduction by

an orthogonal series estimate of the probabilistic de-

pendence measure. In ICPRAM’12, Int. Conf. on Pat-

tern Recognition Applications and Methods, Portugal.

Fukunaga, K. (1990). Introduction to Statistical Pattern

Classification. Academic Press, New York.

Ghorbel, F. (2011). Vers une approche mathmatique unifie

des aspects gomtrique et statistiques de la reconnais-

sance des formes planes. arts-pi, Tunisie.

Ghorbel, F. and Bougrenet, J. T. (1990). Automatic control

of lamellibranch larva growth using contour invariant

feature extraction. In Pattern Recognition.

Ghorbel, F., Derrode, S., and Alata, O. (2012). Rcentes

avances en reconnaissance de formes statistique. arts-

pi, Tunisie.

Hall, P. (1982). Comparison of two orthogonal series meth-

ods of estimating a density and its derivatives on an

interval. In In Journal of Multivariate Analysis.

Hillion, A., Masson, P., and Roux, C. (1988). Une mthode

de classification de textures par extraction linaire non

paramtrique de caractristiques. In In Colloque TIPI.

Kronmal, R. and Tarter, M. (1968). The estimation of

probability densities and cumulatives by fourier series

methods. In In JASA, Journal of the American Statis-

tical Association.

Patrick, E. A. and Fisher, F. P. (1969). Non parametric fea-

ture selection. In In IEEE Trans. Information Theory.

Wong, K. W. and Wang, W. (2005). Adaptive density esti-

mation using an orthogonal series for global illumina-

tion. In In Computers and Graphics.

ICPRAM2013-InternationalConferenceonPatternRecognitionApplicationsandMethods

330