Non-uniform Quantization of Detail Components in Wavelet Transformed Image for Lossy JPEG2000 Compression

Madhur Srivastava¹ and Prasanta K. Panigrahi²

¹ Department of Biological and Environmental Engineering, Cornell University, Ithaca, New York, U.S.A.

² Department of Physical Sciences, Indian Institute of Science Education and Research, Mohanpur, West Bengal, India

Keywords:

JPEG-2000 Standard, Discrete Wavelet Transform, Thresholding, Non-uniform Quantization, Image Compression.

Abstract:

This paper introduces the idea of non-uniform quantization of the detail components of a wavelet transformed image. It argues that most coefficients of the horizontal, vertical and diagonal components lie near zero, while the few coefficients representing large differences sit at the extreme ends of the histogram. The paper therefore advocates a variable step size quantization scheme that preserves the edge information at the ends of the histogram and removes redundancy using a minimal number of quantized values. To support the idea, preliminary results obtained with a non-uniform quantization algorithm are provided. We believe that a successful implementation of non-uniform quantization of the detail components in the JPEG-2000 still image standard will improve image quality and compression efficiency with fewer quantized values.

1 INTRODUCTION

The emergence of the JPEG (Joint Photographic Experts Group)-2000 still image compression standard has led to a different approach to the analysis of image data compared to the previous JPEG standard (Marcellin et al., 2000). It has higher compression efficiency and better error resilience. Furthermore, in comparison to other still image compression standards, it is progressive by both resolution and quality (Skodras et al., 2001). These new features have enabled the use of JPEG-2000 in new areas such as the internet, color facsimile, printing, scanning, digital photography, remote sensing, mobile, medical imagery, and e-commerce (Skodras et al., 2001). One of the major reasons for the better performance and wide range of applications of JPEG-2000 is the introduction of the Discrete Wavelet Transform (DWT) in the standard, replacing the Discrete Cosine Transform. In addition, the quantization of the DWT coefficients of an image has led to the rate-distortion feature in the standard. Part-I of the JPEG-2000 image compression standard uses a uniform scalar quantization scheme with a fixed-size deadzone. In Part-II, a deadzone of variable length is incorporated in uniform scalar quantization. Additionally, a trellis coded quantization (TCQ) scheme is included in Part-II of the JPEG-2000 compression standard (Marcellin et al., 2002). However, there are some limitations on the step size selection in the case of lossy image compression using non-invertible wavelets (see section 3).

This paper advocates a non-uniform quantization scheme for the detail components of the DWT in lossy image compression, in order to overcome the current limitations, to improve image quality at the same size, and to improve the compression ratio at a given image quality. The main motivation for advocating non-uniform quantization is that in each detail component, the majority of the coefficients lie near zero, and the coefficients representing large differences are few and lie at the extreme ends of the histogram. This is because each detail component holds the high frequency coefficients, and in an image, high frequency content occurs mainly at the edges, which constitute an extremely low percentage of the entire image. Therefore, there is a need for a quantization scheme that provides a variable step size, with a bigger step size around zero and a smaller step size at the ends of the histogram plot of each horizontal, vertical and diagonal component. The approximation component, on the other hand, is left untouched by the non-uniform quantization. This is because in the approximation component almost all the coefficients have substantial magnitude and carry a high amount of information, unlike the detail components, which have high redundancy.

The paper is organized in the following way. Section 2 briefly describes the discrete wavelet transform. In section 3, the uniform quantization scheme is given along with its limitations on non-invertible wavelets. Section 4 provides a prospective non-uniform quantization algorithm. Preliminary results supporting the proposed algorithm are given in section 5. Finally, section 6 concludes the paper, stating the advantages of using the proposed non-uniform quantization algorithm and providing directions for future work.

Srivastava M. and K. Panigrahi P. (2013). Non-uniform Quantization of Detail Components in Wavelet Transformed Image for Lossy JPEG2000 Compression. In Proceedings of the 2nd International Conference on Pattern Recognition Applications and Methods, pages 604-607. DOI: 10.5220/0004333706040607. Copyright © SciTePress

2 DISCRETE WAVELET TRANSFORM

In discrete wavelet transform the image is decom-

posed into four pieces at each level. It is identical to

the subbands spaced in the frequency domain. At first

level the original image is decomposed into four lev-

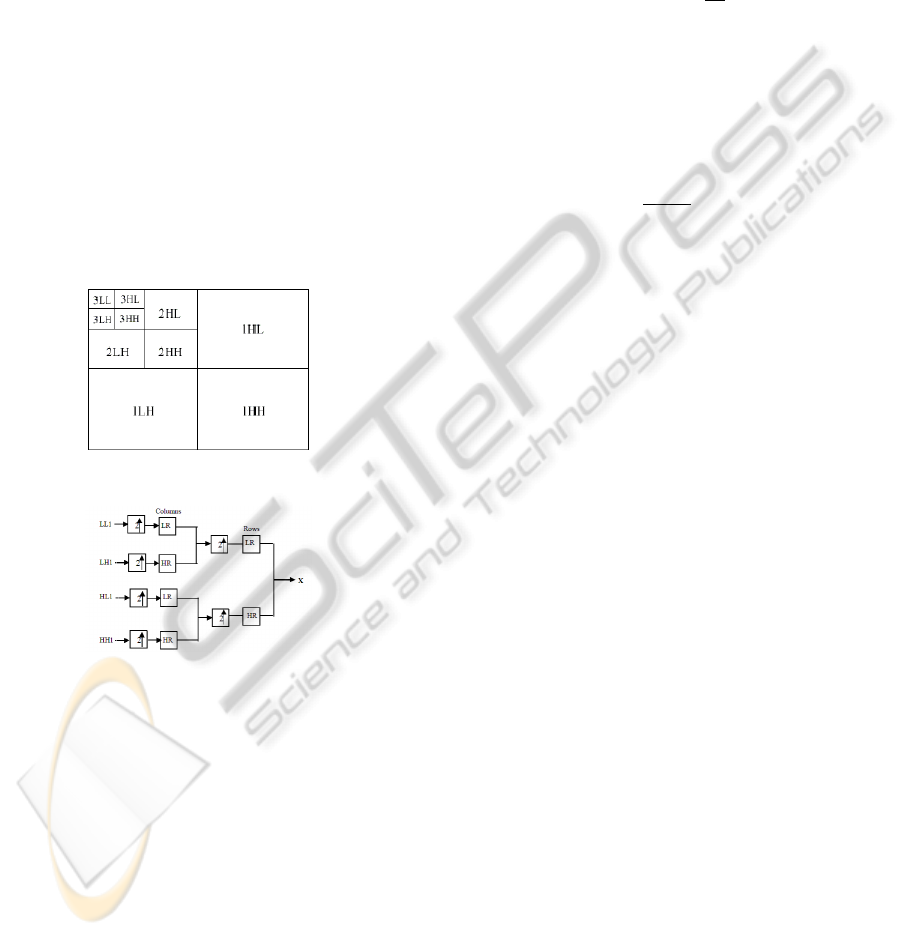

els which are labeled as LL, LH, HL and HH as shown

in fig. 1. Where LL subband is called the average

Figure 1: Representation of three level 2-D DWT.

Figure 2: Wavelet filter bank for one level image.

part. It is low pass filtered in both the directions and

it is most likely identical to the original image, hence

it is called approximation. LH is the difference of the

horizontal rows, HL gives the vertical differences and

HH gives the diagonal difference. The components

LH, HL and HH are called detailed components. The

approximation part is further decomposed until the

final coefficient is left. The decomposed image can

be reconstructed using a reconstruction filter. Fig. 2

shows the wavelet filter bank for one level image re-

construction. Since approximation part is identical to

original image hence it contains wavelet coefficients

of larger amplitudes. On the other hand in detailed

components, wavelet coefficients are smaller in am-

plitude and are close to zero.
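The one-level decomposition described above can be sketched in a few lines. This is a minimal illustration only: it uses the Haar wavelet for brevity (the experiments in this paper use DB9), assumes an image with even dimensions, and the function name `haar_dwt2` is hypothetical. Subband naming conventions also vary between texts; here LH denotes low pass along the rows followed by a difference between adjacent rows.

```python
import numpy as np

def haar_dwt2(image):
    """One level of a 2-D Haar DWT; returns (LL, LH, HL, HH)."""
    x = np.asarray(image, dtype=float)
    s = np.sqrt(2.0)
    # Filter along each row: low pass = scaled sum, high pass = scaled difference
    lo = (x[:, 0::2] + x[:, 1::2]) / s
    hi = (x[:, 0::2] - x[:, 1::2]) / s
    # Filter along the columns of both intermediate results
    LL = (lo[0::2, :] + lo[1::2, :]) / s   # approximation
    LH = (lo[0::2, :] - lo[1::2, :]) / s   # differences between rows
    HL = (hi[0::2, :] + hi[1::2, :]) / s   # differences between columns
    HH = (hi[0::2, :] - hi[1::2, :]) / s   # diagonal differences
    return LL, LH, HL, HH

# A smooth ramp image: neighbouring pixels differ only slightly,
# so the detail subbands stay small compared to the approximation.
ramp = np.add.outer(np.arange(8), np.arange(8)).astype(float)
LL, LH, HL, HH = haar_dwt2(ramp)
print(np.abs(LL).mean(), np.abs(LH).max(), np.abs(HL).max(), np.abs(HH).max())
```

Applying `haar_dwt2` again to `LL` would give the next decomposition level.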

3 UNIFORM SCALAR QUANTIZATION

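In Part-I of the standard, JPEG-2000 applies a deadzone uniform scalar quantizer to the wavelet transformed image. The quantization index q is calculated using the following formula,

$$q = \operatorname{sign}(y)\left\lfloor \frac{|y|}{\Delta} \right\rfloor \tag{1}$$

where ∆ is the quantizer step size and y is the input to the quantizer.

The uniform scalar quantizer with variable deadzone size modifies the above formula to the following,

$$q = \begin{cases} 0, & |y| < -nz\,\Delta \\ \operatorname{sign}(y)\left\lfloor \dfrac{|y| + nz\,\Delta}{\Delta} \right\rfloor, & |y| \ge -nz\,\Delta \end{cases} \tag{2}$$

where the parameter nz controls the width of the deadzone.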

Note from equation 2 that a fixed step size is maintained everywhere except in the deadzone region. However, in the case of non-invertible wavelets there are some limitations regarding the step size and its binary representation (Marcellin et al., 2002). Firstly, there can be only one quantization step size per subband. This constrains the step size to be less than or equal to the quantization step size of all the different regions of interest in the subband. Secondly, the step size can have up to 12 significant binary digits. Thirdly, the upper bound on the step size can quantize almost all the coefficients of a subband to zero when the step size is twice the subband magnitude or more. Lastly, the lower bound on the step size restricts high accuracy coding, as up to 21 and 30 fractional bits of HH coefficients are allowed to be encoded for 8 and higher bits, respectively.
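Equations 1 and 2 can be illustrated with a short sketch. The function name `deadzone_quantize` and the sample values are assumptions made for illustration; setting `nz = 0` reduces equation 2 to the fixed deadzone quantizer of equation 1.

```python
import numpy as np

def deadzone_quantize(y, delta, nz=0.0):
    """Quantization index q of equation 2 (equation 1 when nz == 0)."""
    y = np.asarray(y, dtype=float)
    mag = np.abs(y)
    # sign(y) * floor((|y| + nz * delta) / delta)
    q = np.sign(y) * np.floor((mag + nz * delta) / delta)
    # Coefficients inside the deadzone are mapped to index 0
    q = np.where(mag < -nz * delta, 0.0, q)
    return q.astype(int)

coeffs = [-2.5, -0.4, 0.4, 0.7, 1.6, 2.5]
print(deadzone_quantize(coeffs, delta=1.0))           # equation 1
print(deadzone_quantize(coeffs, delta=1.0, nz=-0.5))  # wider deadzone
```

In both cases every interval outside the deadzone has the same width ∆, which is the property the non-uniform scheme proposed in this paper relaxes.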

4 PROPOSED NON-UNIFORM QUANTIZATION ALGORITHM

An algorithm is proposed that quantizes each detail component in the wavelet domain with variable step sizes, using the mean and standard deviation. Starting from the weighted mean of the histogram plot, the algorithm is applied recursively on the sub-ranges to find the next threshold levels, continuing until the ends of the histogram are reached.

Figure 3: Left to Right: Original 'Lenna' image; Reconstructed image at quantized level 2; Reconstructed image at quantized level 4; Reconstructed image at quantized level 6; Reconstructed image at quantized level 8; Reconstructed image at quantized level 10. DB9 wavelets are used in all forward and inverse wavelet transforms.

The method takes into account that the majority of coefficients lie near zero and that the coefficients representing large differences are few and lie at the extreme ends of the histogram. Hence, the procedure provides variable step sizes, with bigger blocks around the mean and smaller blocks at the ends of the histogram plot of each horizontal, vertical and diagonal component, leaving the approximation coefficients unchanged. The algorithm is based on the fact that a number of distributions tend toward a delta function in the limit of vanishing variance. In the following, we systematically outline the algorithm.

1. Take $n$, the number of step sizes, as input.
2. Set the range $R = [a, b]$; initially $a = \min(\text{histogram})$ and $b = \max(\text{histogram})$.
3. Find the weighted mean ($\mu$) of the values in $R$.
4. Initially, set the thresholds $T_1 = \mu$ and $T_2 = \mu + 0.001$. For $T_2$, 0.001 is added to avoid using the weighted mean again. Any value can be taken, provided it does not move $T_2$ significantly away from the weighted mean.
5. Repeat steps 6-9 $(n-2)/2$ times.
6. Find the weighted mean ($\mu_1$) and standard deviation ($\sigma_1$) of the values in $[a, T_1]$, and the weighted mean ($\mu_2$) and standard deviation ($\sigma_2$) of the values in $[T_2, b]$.
7. Calculate the thresholds $t_1$ and $t_2$ as $t_1 = \mu_1 - k_1\sigma_1$ and $t_2 = \mu_2 + k_2\sigma_2$, where $k_1$ and $k_2$ are free parameters used to increase or decrease the block size.
8. Assign to the values in $[t_1, T_1]$ and $[T_2, t_2]$ their respective weighted means.
9. Assign $T_1 = t_1 - 0.001$ and $T_2 = t_2 + 0.001$. The value 0.001 is subtracted and added to avoid reusing $t_1$ and $t_2$. Any value can be taken, provided it does not move the thresholds significantly away from $t_1$ and $t_2$.
10. Finally, take the weighted means of the values in $[a, T_1]$ and $[T_2, b]$ and assign each to its respective range.
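The steps above can be sketched as follows. This is an illustrative reading of the algorithm, not the authors' implementation, and it makes two assumptions: it works directly on the raw coefficients, whose plain mean equals the count-weighted mean of their histogram, and it replaces the 0.001 offsets by half-open intervals so that no floating point coefficient falls between two blocks. The names `nonuniform_quantize` and `_assign_mean` are hypothetical.

```python
import numpy as np

def _assign_mean(out, x, mask):
    """Replace the selected coefficients by their mean (steps 8 and 10)."""
    if mask.any():
        out[mask] = x[mask].mean()

def nonuniform_quantize(coeffs, n, k1=1.0, k2=1.0):
    """Quantize one detail subband to at most n values (n even)."""
    x = np.asarray(coeffs, dtype=float)
    out = x.copy()
    T1 = T2 = x.mean()                     # steps 2-4: start at the mean
    for _ in range((n - 2) // 2):          # step 5
        lower = x[x <= T1]                 # values still unassigned below
        upper = x[x > T2]                  # values still unassigned above
        # steps 6-7: next thresholds from mean and standard deviation
        t1 = lower.mean() - k1 * lower.std() if lower.size else T1
        t2 = upper.mean() + k2 * upper.std() if upper.size else T2
        # step 8: one block on each side of the current thresholds
        _assign_mean(out, x, (x > t1) & (x <= T1))
        _assign_mean(out, x, (x > T2) & (x <= t2))
        T1, T2 = t1, t2                    # step 9
    # step 10: the two remaining tails of the histogram
    _assign_mean(out, x, x <= T1)
    _assign_mean(out, x, x > T2)
    return out

# Synthetic stand-in for a detail subband: sharply peaked around zero
rng = np.random.default_rng(0)
subband = rng.laplace(scale=2.0, size=(64, 64))
quantized = nonuniform_quantize(subband, n=6)
print(np.unique(quantized).size)
```

In a full pipeline, this function would be applied separately to the horizontal, vertical and diagonal subbands before the inverse DWT, leaving the approximation subband unchanged.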

The above algorithm was used in (Srivastava et al., 2011) to compare the Daubechies and Coiflet wavelet families on their efficacy in providing effective image segmentation. It is also applied in (Srivastava et al., unpublished) to carry out wavelet based image segmentation. However, no study has been conducted of its effectiveness for lossy image compression. The preliminary results shown in the next section suggest that the above algorithm can be useful for carrying out non-uniform quantization of the detail coefficients.

5 PRELIMINARY EXPERIMENTAL RESULTS

The proposed algorithm is applied to the standard test images from the USC-SIPI Image Database (sipi.usc.edu/database). Peak Signal to Noise Ratio (PSNR) and Mean Structural Similarity Index Measure (MSSIM) (Wang et al., 2004) are used to evaluate the quality of the reconstructed images. The PSNR is computed using the following formulae,

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{I(x,y)_{\max}^{2}}{\mathrm{MSE}}\right) \tag{3}$$

$$\mathrm{MSE} = \frac{1}{MN}\sum_{x=1}^{M}\sum_{y=1}^{N}\left(I(x,y) - \tilde{I}(x,y)\right)^{2} \tag{4}$$

where M and N are the dimensions of the image, x and y are pixel locations, I is the input image, and Ĩ is the reconstructed image. The MSSIM is calculated with the help of the following formulae,

$$\mathrm{SSIM}(p,q) = \frac{(2\mu_p\mu_q + K_1)(2\sigma_{pq} + K_2)}{(\mu_p^2 + \mu_q^2 + K_1)(\sigma_p^2 + \sigma_q^2 + K_2)} \tag{5}$$

$$\mathrm{MSSIM}(I,\tilde{I}) = \frac{1}{M}\sum_{i=1}^{M}\mathrm{SSIM}(p_i, q_i) \tag{6}$$

where µ is the mean; σ is the standard deviation, and σ_pq is the covariance of p and q; p and q are corresponding windows of the original and reconstructed images, with a typical window size of 8 × 8; K_1 and K_2 are constants with K_1 = 0.01 and K_2 = 0.03; and M here is the total number of windows.
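A minimal sketch of equations 3 to 6 is given below. It assumes non-overlapping 8 × 8 windows and uses the constants K_1 and K_2 exactly as they appear in equation 5; the function names `mse`, `psnr` and `mssim` are illustrative, and the windowing used for the reported results may differ.

```python
import numpy as np

def mse(I, I_rec):
    """Mean squared error between input and reconstruction (equation 4)."""
    diff = np.asarray(I, dtype=float) - np.asarray(I_rec, dtype=float)
    return np.mean(diff ** 2)

def psnr(I, I_rec):
    """PSNR in dB, with the peak taken as the maximum of I (equation 3)."""
    err = mse(I, I_rec)
    if err == 0:
        return float("inf")
    peak = float(np.max(I))
    return 10.0 * np.log10(peak ** 2 / err)

def mssim(I, I_rec, win=8, K1=0.01, K2=0.03):
    """Mean SSIM over non-overlapping win x win windows (equations 5-6)."""
    I = np.asarray(I, dtype=float)
    I_rec = np.asarray(I_rec, dtype=float)
    rows, cols = I.shape
    scores = []
    for r in range(0, rows - win + 1, win):
        for c in range(0, cols - win + 1, win):
            p = I[r:r + win, c:c + win]
            q = I_rec[r:r + win, c:c + win]
            mu_p, mu_q = p.mean(), q.mean()
            var_p, var_q = p.var(), q.var()
            cov_pq = ((p - mu_p) * (q - mu_q)).mean()
            num = (2 * mu_p * mu_q + K1) * (2 * cov_pq + K2)
            den = (mu_p ** 2 + mu_q ** 2 + K1) * (var_p + var_q + K2)
            scores.append(num / den)
    return float(np.mean(scores))

# Synthetic example: a random 8-bit image and a slightly perturbed copy
rng = np.random.default_rng(0)
original = rng.integers(0, 256, size=(64, 64)).astype(float)
reconstructed = original + rng.normal(scale=2.0, size=original.shape)
print(psnr(original, reconstructed), mssim(original, reconstructed))
```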

Fig. 3 displays the reconstructed images at different numbers of quantized values after applying the proposed algorithm to each detail component separately. In addition to fig. 3, table 1 shows the PSNR and MSSIM of four test images with dimensions 512 × 512 at numbers of quantized values ranging from 2 to 10. DB9 is the wavelet used for the DWT and inverse DWT.

Table 1: PSNR and MSSIM of reconstructed gray-level test images using DB9 wavelets at different quantized values.

| Image Name (Dimension) | Number of Quantized Values | PSNR (dB) | MSSIM |
|---|---|---|---|
| Lenna (512 × 512) | 2 | 37.7780645697 | 0.9982142147 |
| | 4 | 41.8874641847 | 0.9992823393 |
| | 6 | 43.8590907980 | 0.9994968461 |
| | 8 | 44.2931530130 | 0.9995311420 |
| | 10 | 44.3489820362 | 0.9995364013 |
| Baboon (512 × 512) | 2 | 26.8132232589 | 0.9595975231 |
| | 4 | 31.5997241598 | 0.9869355763 |
| | 6 | 32.9223693072 | 0.9905018802 |
| | 8 | 33.0622299131 | 0.9908045725 |
| | 10 | 33.0762467334 | 0.9908358964 |
| Pepper (512 × 512) | 2 | 35.1261558539 | 0.9968488432 |
| | 4 | 38.0511064803 | 0.9982568820 |
| | 6 | 40.6144660901 | 0.9990231546 |
| | 8 | 40.9936699886 | 0.9991423921 |
| | 10 | 41.0082447784 | 0.9991441908 |
| House (512 × 512) | 2 | 31.9886146645 | 0.9904885967 |
| | 4 | 35.7235201624 | 0.9951268912 |
| | 6 | 38.2730683421 | 0.9975855578 |
| | 8 | 38.4299731739 | 0.9976918850 |
| | 10 | 38.4349224964 | 0.9976960112 |

As can be seen from fig. 3, the images are not visually distinguishable. The results in table 1 substantiate this. For 'Lenna', the minimum and maximum PSNR observed are 37.78 dB and 44.35 dB, respectively, with MSSIM above 0.99 at every number of quantized values. Overall, PSNR and MSSIM increase with the number of quantized values. This increase may or may not be useful in practical applications. For example, 'Baboon' is best represented with 4 quantized levels: there is a substantial increase in PSNR and MSSIM over quantized level 2, whereas the further increases at quantized levels 6 to 10 are too small to be noticed visually.

6 CONCLUSIONS

This paper introduces the concept of non-uniform quantization for the detail components in the JPEG-2000 still image standard. We believe that further exploring this option might lead to the following improvements in the current standard: (1) improved quality at the same image size, (2) better compression at the same quality, (3) a flexible number of quantized values based on the actual statistics of the wavelet transformed image, and (4) a variable step size instead of a fixed step size, maximizing the elimination of redundancy.

However, the results shown in the paper are preliminary and suggestive. It will be interesting to examine the proposed quantization algorithm when it is embedded in the JPEG-2000 standard. The factors that will determine the success of the proposed approach are the actual compressed size, image quality, time complexity and encoder complexity. There is also wide scope for testing other non-uniform quantization schemes, or perhaps creating a new quantization scheme customized to the standard.

REFERENCES

Marcellin, M. W., Gormish, M. J., Bilgin, A. and Boliek, M. P. (2000). An Overview of JPEG-2000. In Proceedings of IEEE Data Compression Conference, pp. 523-541.

Marcellin, M. W., Lepley, M. A., Bilgin, A., Flohr, T. J., Chinen, T. T., and Kasner, J. H. (2002). An Overview of quantization in JPEG 2000. In Signal Processing: Image Communication, vol. 17, pp. 73-84.

Skodras, A., Christopoulos, C., and Ebrahimi, T. (2001). The JPEG 2000 Still Image Compression Standard. In IEEE Signal Processing Magazine, pp. 36-58.

Srivastava, M., Yashu, Y., Singh, S. K., and Panigrahi, P. K. (2011). Multisegmentation through wavelets: Comparing the efficacy of Daubechies vs Coiflets. In Conference on Signal Processing and Real Time Operating System.

Srivastava, M., Singh, S. K., and Panigrahi, P. K. (Unpublished). A Fast Statistical Vanishing Method for Multilevel Thresholding in Wavelet Domain.

Wang, Z., Bovik, A. C., Sheikh, H. R. and Simoncelli, E. P. (2004). Image quality assessment: from error visibility to structural similarity. In IEEE Transactions on Image Processing, pp. 600-612.
