Early Usability Evaluation in Model Driven Framework

Lassaad Ben Ammar and Adel Mahfoudhi

University of Sfax, ENIS, CES Laboratory, Soukra km 3,5, B.P. 1173-3000, Sfax, Tunisia

Keywords:

MDE, User Interface, Early Usability Evaluation, Metrics.

Abstract:

Usability evaluation plays a key role in the user interface development process. It is a crucial activity that determines the success of an interactive application. Usability evaluation is usually conducted by end users or experts after the user interfaces have been generated. At that point, the ability to go back and make major changes to the design is greatly reduced.

Recently, user interface engineering has been moving towards a Model Driven Development (MDD) process, in which conceptual models have become a primary artifact. Evaluating usability from the conceptual models would therefore be a significant advantage in terms of saving time and resources. This paper proposes a model-based usability evaluation method which allows designers to address usability engineering from the conceptual models. The evaluation can be automated, taking as input the conceptual model that represents the system abstractly.

1 INTRODUCTION

The evaluation of user interfaces is a recognized problem that has been well explored in the literature (Grislin and Kolski, 1996). Several methods, techniques, tools and criteria have been proposed to ensure the usability of user interfaces. However, usability evaluation is usually conducted at the last stage of the development process by end users or experts. At this stage, the ability to go back and make major changes to the design is greatly reduced.

In the last decade, user interface engineering has been moving towards a Model Driven Development (MDD) process. In this context, Model Driven Engineering (MDE) (Favre, 2004) has proved quite appropriate. This approach develops user interfaces through the definition of models and their successive transformations into less abstract levels, down to the code on the target platform. A renowned work in this context is the Cameleon project (Calvary et al., 2001). It provides a unifying reference framework for user interface development that takes the context of use into consideration. Such user interfaces are called multi-target. As a drawback, this framework ignores usability engineering and considers usability as a natural by-product of whatever approach is being used. Therefore, there is a need to extend the Cameleon framework in order to promote usability as a first-class entity in the development process.

The main objective of this paper is to propose an extension of the Cameleon framework that considers usability engineering as part of the development process. We opted for the Cameleon framework since it is a unifying framework for the development of multi-target user interfaces. With this extension, usability can be evaluated from the conceptual models.

The remainder of this paper is structured as follows. Section 2 presents an outline of the usability models found in the literature, and Section 3 describes our proposed usability evaluation method. A case study is presented in Section 4 to show the usefulness of our proposal in uncovering potential usability problems. Finally, Section 5 presents the conclusion and outlines perspectives for future research work.

2 RELATED WORKS

Usability evaluation is often defined as the set of methodologies for measuring the usability aspects of a user interface and identifying specific problems (Nielsen, 1993). Several methods target the usability evaluation of user interfaces. In this section, we focus on the analysis of model-based methods, since our main motivation is to integrate usability issues into a model driven development approach.

Ben Ammar L. and Mahfoudhi A.

Early Usability Evaluation in Model Driven Framework.

DOI: 10.5220/0004411200230030

In Proceedings of the 15th International Conference on Enterprise Information Systems (ICEIS-2013), pages 23-30

ISBN: 978-989-8565-61-7

Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

Usability evaluation has attracted the attention of both the Human Computer Interaction (HCI) and the Software Engineering (SE) communities. The SE community proposed a quality model in the ISO/IEC 9126-1 standard (ISO, 2001), in which usability is decomposed into Learnability, Understandability, Operability, Attractiveness and Compliance. The HCI community, in turn, has shown in the ISO 9241-11 standard (ISO, 1998) how usability can be measured in terms of Efficiency, Effectiveness and User Satisfaction. Although both standards are useful, they are too abstract and need to be extended or adapted before they can be applied in a specific domain.

Several initiatives to extend both standards have been proposed over the last few years. (Seffah et al., 2006) analyzed existing standards and surveys in order to detect their limits and complementarities, and unified them into a single consolidated model called Quality in Use Integrated Measurement (QUIM). The QUIM model includes metrics that are based on the system code as well as on the generated interface, which makes it difficult to apply QUIM in a model driven development process.

(Abrahão and Insfrán, 2006) proposed an extension of the ISO/IEC 9126-1 usability model intended to measure user interface usability at an early stage of a model driven development process. The model contains subjective measures, which raises the question of its applicability to intermediate artifacts. Moreover, it lacks any detail about how the various attributes are measured and interpreted. An extension of this model is presented in (Fernandez et al., 2009).

(Aquino et al., 2010) evaluated the usability of a multi-platform user interface generated with an MDE approach, in terms of effectiveness, efficiency and user satisfaction. The evaluation is based on experiments with end users, a dependency that is incompatible with early usability evaluation.

(Panach et al., 2011) propose an early usability measurement method in which the evaluation is carried out early in the development process, starting from the conceptual model. The main limitation of this proposal is that its metrics are specific to the OO-method (Gómez et al., 2001). Therefore, they cannot be applied directly to other methods; they need some adaptation before they can be adopted in other, similar methods.

The analysis of the related work highlights several limitations. The system implementation is often a prerequisite for performing the evaluation. Besides, most existing proposals lack guidelines about how usability attributes and metrics are measured and interpreted. Moreover, none of the approaches takes into account the variation of context elements during the process activities, nor the influence of this variation on the selection of the most relevant attributes and metrics. Considering these limitations, usability evaluation in a multi-target user interface development process is clearly still an immature area, and much more research is needed. To address this need, we propose to integrate usability issues into the Cameleon framework.

The goals of our proposal are: 1) the evaluation must be carried out early in the development process, independently of the system implementation, and 2) the evaluation must be automated. The proposed method is intended to evaluate usability from the conceptual model. For that reason, we propose a usability model in which the usability metrics are based on the conceptual primitives. Metrics are extracted from existing usability guidelines such as (Bastien and Scapin, 1993), (M. Leavit, 2006) and (Panach et al., 2011) according to the following requirements: 1) they can be quantified from conceptual primitives, and 2) they are related to one of the context-of-use elements (user, platform, environment).

3 PROPOSED USABILITY EVALUATION METHOD

3.1 Overview

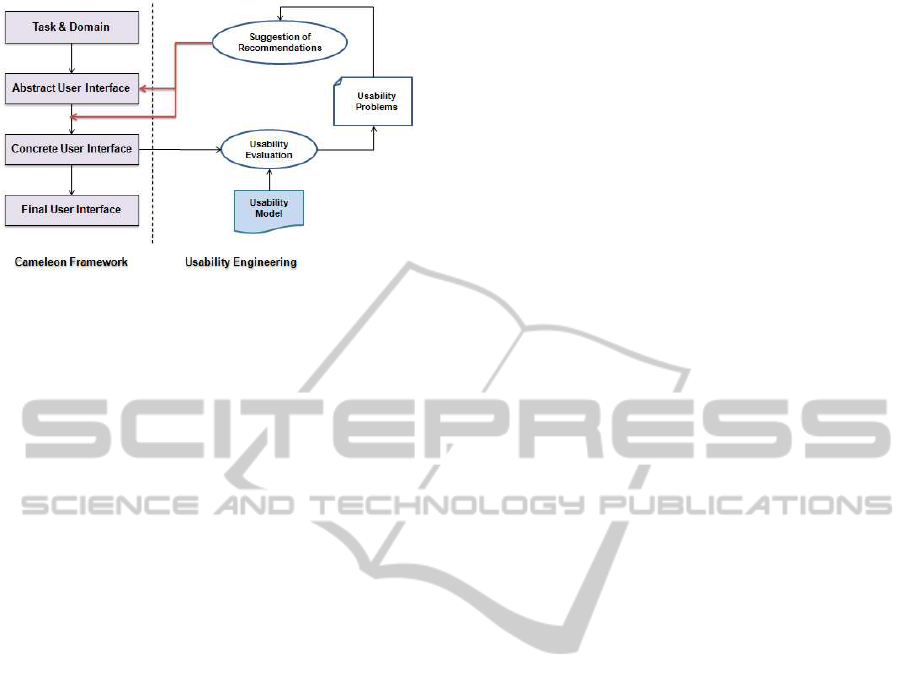

The Cameleon framework provides a user interface development process which defines four essential levels of abstraction: Task & Domain, Abstract User Interface (AUI), Concrete User Interface (CUI) and Final User Interface (FUI). The development process takes the conceptual models as input in order to generate the final executable user interface. In this framework, the conceptual models cover the AUI and CUI levels. The CUI model is the one most affected by usability, so we chose to perform the evaluation at this level. To do so, we propose a set of usability attributes that can be quantified by means of metrics based on the conceptual primitives of this model. The usability evaluation module takes as input the CUI model and the usability model, and produces a set of specific usability problems. Each problem is linked to the conceptual primitives it affects. These problems are used to suggest recommendations for correcting the previous stages or the transformation rules (see Fig. 1).

Figure 1: The proposed Usability Measurement Method.

The proposed usability model extends the one presented in the ISO/IEC 9126-1 standard. In this model, usability is decomposed into five sub-characteristics, defined as follows:

• Learnability: the capability of the software product to enable the user to learn its application.

• Understandability: the capability of the software product to enable the user to understand whether the software is suitable, and how it can be used for particular tasks and conditions of use.

• Operability: the capability of the software product to enable the user to operate and control it.

• Attractiveness: the capability of the software product to be attractive to the user.

• Compliance: the capability of the software product to adhere to standards, conventions, style guides or regulations relating to usability.

Since the sub-characteristics are described abstractly, we analyzed several usability guidelines from the literature (Bastien and Scapin, 1993; M. Leavit, 2006; Panach et al., 2011) in order to extract and adapt more detailed usability attributes. The next subsection presents our proposal for decomposing these sub-characteristics into measurable attributes.

3.2 Attribute Specification

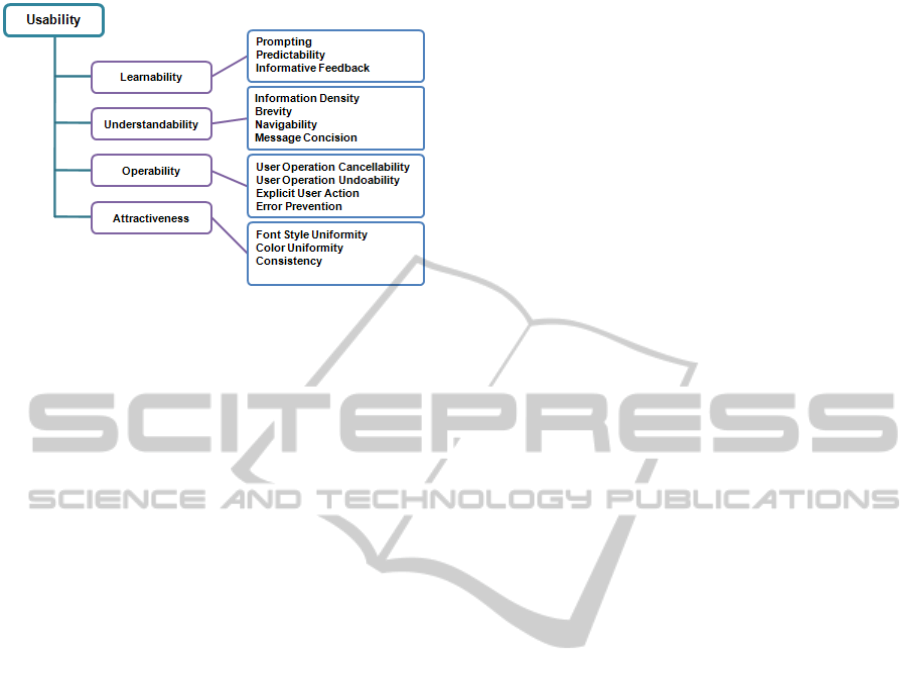

Learnability can be measured in terms of Prompting, Predictability and Informative Feedback. Prompting refers to the means available to advise, orient, inform, instruct and guide users throughout their interactions with a computer. Predictability refers to the ease with which users can predict their future actions. Informative Feedback concerns the response of the system to user actions. Learnability attributes are closely related to user characteristics and can be considered essential to guarantee a high level of user satisfaction.

To measure the Understandability sub-characteristic, we propose four measurable attributes. The first is Information Density, the degree to which the system displays information to, or demands information from, the user in each interface. Brevity focuses on reducing the user's cognitive effort (number of action steps): since short-term memory capacity is limited, shorter entries considerably reduce the probability of making errors. Navigability pertains to the ease with which a user can move around in the application. Finally, Message Concision concerns the use of few words in error messages while keeping them expressive. The understandability attributes are closely related to platform features; for example, the screen size strongly influences the Information Density, Navigability and Brevity attributes.

Operability includes attributes that facilitate the user's operation and control of the system. We propose the following attributes: User Operation Cancellability, the possibility to cancel an action without harmful effects on normal operation; User Operation Undoability, the proportion of actions that can be undone without harmful effects on normal operation; Explicit User Action, the system should perform only the actions requested by the user; and Error Prevention, the means available to detect and prevent data entry errors, command errors, or actions with destructive consequences. Interactive systems should give users a high level of control, especially users with a low level of experience. Hence, the user interface must present components that allow such control. The screen size of the platform being used can limit this control when it is too small to display buttons such as undo, cancel or validate.

The Attractiveness sub-characteristic includes the attributes of the software product that relate to its aesthetic design and make it attractive to the user. We argue that some aspects of attractiveness can be measured with regard to Font Style Uniformity and Color Uniformity. Consistency measures whether design choices are maintained in similar contexts. User preferences in terms of color or font style are related to the attractiveness attributes. Note also that some environment features (e.g. indoor/outdoor use, luminosity level) affect the choice of colors needed to obtain a good contrast, which makes the information clearer.

Fig. 2 shows an overview of our proposed attribute specification.

Figure 2: The Proposed Usability Model.

3.3 Metric Definition

To measure the internal attributes proposed in the previous section, we need to define the metrics required for each one. These metrics are intended to measure internal usability from the conceptual models, which is why they are based on the conceptual primitives of the method presented in (Bouchelligua et al., 2010). Even though the metrics are specific to this method, the concept behind each one can be applied to any MDE method with similar conceptual primitives. The main reason for choosing the method of (Bouchelligua et al., 2010) is that it is compliant with the Cameleon framework and uses the BPMN notation to describe the user interface models. The BPMN notation is based on Petri nets, which allows the metrics to be validated. In what follows, we give the definitions of some of these metrics.

Information Density. The average number of edit fields per UI:

$$ID_1 = \frac{\sum_{i=1}^{n} x_i}{\sum_{i=1}^{n} y_i} \qquad (1)$$

where x ∈ UIFieldEdit and y ∈ UIWindow.
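As an illustration only, Eq. (1) can be computed over a toy representation of a CUI model. The sketch assumes a hypothetical encoding in which each UIWindow name is a dictionary key mapped to the type names of the elements it contains; the function name `average_per_window`, the window names and the `UIButton` type are illustrative and are not part of the method of (Bouchelligua et al., 2010). Because Eq. (4) has the same form, the same helper can compute NB when passed `"UIFieldNavigation"`.

```python
# Hypothetical, simplified CUI model: each UIWindow name maps to the type
# names of the elements it contains (not the actual CUI meta-model).
CuiModel = dict[str, list[str]]


def average_per_window(model: CuiModel, element_type: str) -> float:
    """Number of elements of `element_type` divided by the number of windows.

    element_type="UIFieldEdit" gives ID1 (Eq. 1);
    element_type="UIFieldNavigation" gives NB (Eq. 4).
    """
    if not model:
        raise ValueError("the model contains no UIWindow")
    total = sum(elements.count(element_type) for elements in model.values())
    return total / len(model)


example: CuiModel = {
    "EnterCoordinates": ["UIFieldEdit", "UIFieldEdit", "UIFieldIn", "UIButton"],
    "Result": ["UIFieldEdit", "UIFieldNavigation"],
}
print(average_per_window(example, "UIFieldEdit"))        # 1.5
print(average_per_window(example, "UIFieldNavigation"))  # 0.5
```

Here three edit fields spread over two windows give ID1 = 1.5.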

The maximum number of elements per UI:

$$ID_2 = \sum_{i=1}^{n} x_i \qquad (2)$$

where x ∈ UIField.
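Eq. (2) counts the UIField elements of one UI. Reading "maximum" as the largest such count over all windows, a minimal sketch could look as follows. Both that reading and the assumption that every type whose name starts with `UIField` counts as a UIField are ours; the model encoding and all names are hypothetical.

```python
# Hypothetical CUI model: each UIWindow name maps to its element type names.
CuiModel = dict[str, list[str]]


def fields_in_window(elements: list[str]) -> int:
    """Eq. (2) for one window: the number of UIField elements it contains.

    Assumption: any type name starting with "UIField" (UIFieldEdit,
    UIFieldIn, UIFieldNavigation, ...) counts as a UIField.
    """
    return sum(1 for element_type in elements if element_type.startswith("UIField"))


def id2(model: CuiModel) -> tuple[str, int]:
    """Return the window with the most UIField elements, and that count."""
    if not model:
        raise ValueError("the model contains no UIWindow")
    window = max(model, key=lambda name: fields_in_window(model[name]))
    return window, fields_in_window(model[window])


example: CuiModel = {
    "EnterCoordinates": ["UIFieldIn", "UIFieldEdit", "UIFieldEdit", "UIButton"],
    "Result": ["UIFieldNavigation"],
}
print(id2(example))  # ('EnterCoordinates', 3)
```

Returning the window name as well as the count makes it easy to point the report at the conceptual primitive that causes the problem.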

Brevity. We propose the number of steps required to accomplish a goal or a task from a well-designated context:

$$BR = \mathrm{distance}(x, y) \qquad (3)$$

where x, y ∈ UIWindow and distance(x, y) returns the distance between x and y.
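The text leaves the distance function open. One plausible reading, assumed here, is the fewest navigation steps between two windows, which a breadth-first search over a hypothetical window navigation graph computes. The graph encoding and its window names are illustrative only.

```python
from collections import deque
from typing import Optional

# Hypothetical navigation graph: each UIWindow maps to the windows
# reachable from it in one navigation step.
NavGraph = dict[str, list[str]]


def distance(graph: NavGraph, start: str, goal: str) -> Optional[int]:
    """Fewest navigation steps from `start` to `goal` (breadth-first search).

    Returns None when `goal` is unreachable from `start`.
    """
    steps = {start: 0}
    queue = deque([start])
    while queue:
        window = queue.popleft()
        if window == goal:
            return steps[window]
        for nxt in graph.get(window, []):
            if nxt not in steps:  # in BFS the first visit is the shortest path
                steps[nxt] = steps[window] + 1
                queue.append(nxt)
    return None


graph: NavGraph = {
    "Home": ["EnterCoordinates"],
    "EnterCoordinates": ["ChoosePlanning", "Home"],
    "ChoosePlanning": ["Result"],
    "Result": ["Map"],
}
print(distance(graph, "Home", "Result"))  # 3
```

Breadth-first search fits because every navigation step is assumed to cost the same; if steps were weighted (for instance by keystrokes), Dijkstra's algorithm would be the natural replacement.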

Navigability. The average number of navigation elements per UI:

$$NB = \frac{\sum_{i=1}^{n} x_i}{\sum_{i=1}^{n} y_i} \qquad (4)$$

where x ∈ UIFieldNavigation and y ∈ UIWindow.

Message Concision. Since the quality of a message is a subjective measure, we propose the number of words as an internal metric of message quality. The number of words in a message is:

$$MC = \sum_{i=1}^{n} x_i \qquad (5)$$

where x ∈ words in a UIDialogBox.
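Eq. (5) reduces to counting the words of each message. The sketch below assumes, hypothetically, that the texts of the UIDialogBox messages are available as a dictionary and that a word is any whitespace-separated token; the dialog names and texts are invented for illustration.

```python
def message_concision(messages: dict[str, str]) -> dict[str, int]:
    """Eq. (5) applied to every UIDialogBox: the word count of each message.

    Assumption: a word is any whitespace-separated token.
    """
    return {box: len(text.split()) for box, text in messages.items()}


dialogs = {
    "InvalidAddress": "The address you entered could not be found.",
    "NoItinerary": "No itinerary found.",
}
print(message_concision(dialogs))  # {'InvalidAddress': 8, 'NoItinerary': 3}
```

Splitting on whitespace ignores punctuation, so "found." counts as one word; a stricter tokenizer would only matter for messages close to a threshold.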

Error Prevention. To protect users against errors while entering data, we propose to use a drop-down list instead of a text field when the input element has a set of accepted values:

$$ERP = \frac{1}{n}\sum_{i=1}^{n} \mathrm{dropdownlist}(x_i) \qquad (6)$$

where x_i ∈ UIFieldIn with limited values, and dropdownlist(x_i) returns the number of UIDropDownList elements used for x_i.
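A minimal sketch of Eq. (6), under our own assumptions: each limited-value UIFieldIn is represented by a hypothetical `InputField` record holding the widget type chosen by the transformation, and dropdownlist(x_i) is read as 1 when that widget is a UIDropDownList and 0 otherwise. `UITextField` and the field names are illustrative. The result is the proportion that Section 3.4 compares with the 90% recommendation.

```python
from dataclasses import dataclass


@dataclass
class InputField:
    """Hypothetical view of a UIFieldIn whose accepted values are limited."""
    name: str
    widget: str  # concrete widget type chosen by the transformation


def erp(fields: list[InputField]) -> float:
    """Eq. (6): share of limited-value input fields rendered as UIDropDownList."""
    if not fields:
        raise ValueError("no UIFieldIn with limited values")
    drop_downs = sum(1 for f in fields if f.widget == "UIDropDownList")
    return drop_downs / len(fields)


fields = [
    InputField("StartCategory", "UIDropDownList"),
    InputField("DestinationCategory", "UITextField"),
    InputField("Planning", "UITextField"),
    InputField("Language", "UIDropDownList"),
]
print(erp(fields))  # 0.5
```

An empty input raises an error rather than returning 0 or 1, because the ratio is undefined when no field has a limited set of values.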

3.4 Indicator Definition

The metrics defined previously provide numerical values that need a meaning in order to be interpreted. We resort to indicators to reach this goal. An indicator assigns a qualitative value to each numerical value. These qualitative values are Very Good (VG), Good (G), Medium (M), Bad (B) and Very Bad (VB). For each qualitative value, we assign a numerical range, defined on the basis of usability guidelines and heuristics described in the literature. Next, we detail the numerical ranges within which the values of some metrics are considered Very Good.

• Information Density: several usability guidelines recommend minimizing the density of a user interface (M. Leavit, 2006). We define a maximum number of elements per user interface to keep a good balance between information density and white space: 15 input elements (ID1), 10 action elements (ID2), 7 navigation elements (ID3), and 20 elements in total (ID4) (Panach et al., 2011).

• Brevity: some research studies have shown that human memory can retain a maximum of 3 scenarios (Lacob, 2003). Each task or goal requiring more than 3 steps (counted in keystrokes) to be reached decreases usability (Minimal Action, MA).

• Navigability: some studies have shown that the number of first-level navigation targets (Navigation Breadth, NB) should not exceed 7 (Murata et al., 2001).

• Message Concision: since the quality of a message can only be evaluated by the end user, the number of words in a message is proposed as an internal metric to assess message quality (Word Number, WN). A maximum of 15 words per message is recommended (Panach et al., 2011).

• Error Prevention: the system must provide mechanisms to keep the user from making mistakes (Bastien and Scapin, 1993). One way to avoid mistakes is to use ListBoxes for enumerated values. (Panach et al., 2011) recommend that at least 90% of enumerated values be shown in a ListBox to improve usability (ERP).

Metrics taken from the proposal of (Panach et al., 2011) are adopted together with their ranges of values, which have been empirically validated. For the other metrics, the Very Good range is used to estimate the Very Bad range; the Good, Medium and Bad ranges are then distributed evenly between these two extremes. Table 1 lists the indicators we have defined.

3.5 Automatic Usability Evaluation Process

Conducting the usability measurement manually is a tedious task. We therefore propose to automate this process by implementing it as a model transformation. The model transformation requires two models as input (the user interface model and the usability model) and produces a usability report containing the detected usability problems. In the model transformation literature, the definition of a meta-model¹ is a prerequisite for using a model.

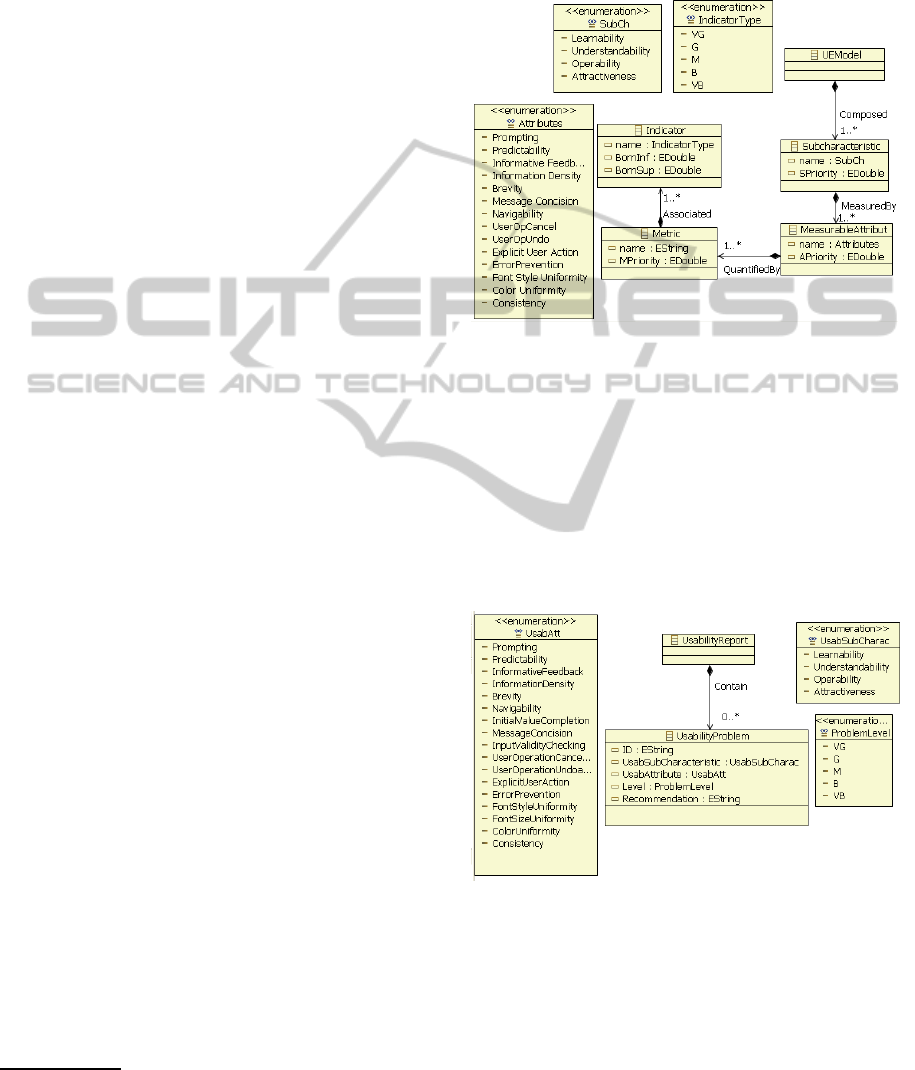

Concerning the usability model, the proposed meta-model is a four-level hierarchy:

• Sub-characteristic: a set of abstract concepts used to define usability.

• Attribute: an entity which can be ensured during the model transformation process.

• Metric: a set of metrics used to quantify an attribute.

• Indicator: a qualitative value assigned to each range of metric values, giving meaning to the metric.

¹ A meta-model is a language that can express models. It defines the concepts, and the relationships between concepts, required to express the model.

Figure 3: Usability Meta-Model.
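The four-level hierarchy can be mirrored by nested data classes. This is only an illustrative Python sketch, not the meta-model definition behind Fig. 3; the class and field names are assumptions, and an Indicator is modeled as a labeled half-open range [lower, upper) of metric values.

```python
from dataclasses import dataclass, field


@dataclass
class Indicator:
    level: str    # qualitative value: VG, G, M, B or VB
    lower: float  # inclusive lower bound of the metric range it labels
    upper: float  # exclusive upper bound (float("inf") for the last range)


@dataclass
class Metric:
    name: str
    indicators: list[Indicator] = field(default_factory=list)


@dataclass
class Attribute:
    name: str
    metrics: list[Metric] = field(default_factory=list)


@dataclass
class SubCharacteristic:
    name: str
    attributes: list[Attribute] = field(default_factory=list)


def metric_paths(model: list[SubCharacteristic]) -> list[str]:
    """List every metric as 'Sub-characteristic / Attribute / Metric'."""
    return [
        f"{sub.name} / {attr.name} / {metric.name}"
        for sub in model
        for attr in sub.attributes
        for metric in attr.metrics
    ]


usability_model = [
    SubCharacteristic("Understandability", [
        Attribute("Information Density", [Metric("ID1"), Metric("ID2")]),
        # Only the Very Good range of WN (Table 1) is filled in here.
        Attribute("Message Concision", [Metric("WN", [Indicator("VG", 0, 15)])]),
    ]),
    SubCharacteristic("Operability", [
        Attribute("Error Prevention", [Metric("ERP")]),
    ]),
]
for path in metric_paths(usability_model):
    print(path)
```

Keeping each metric under its attribute and sub-characteristic is what lets a detected problem be reported against "Operability / Error Prevention" rather than against a bare number.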

With regard to the usability report, we propose a simple meta-model which describes each usability problem using the following scheme: the description of the usability problem; the related attribute, i.e. the sub-characteristic and attribute of the usability model affected by the problem; the level of the detected problem; and a recommendation to solve it. Fig. 4 shows the proposed usability report meta-model.

Figure 4: Usability Report Meta-Model.
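Similarly, a report entry can be sketched as a record holding the parts of this scheme. The assumption that only Bad and Very Bad levels become reported problems, and the names `UsabilityProblem` and `build_report`, are ours; the first sample finding paraphrases usability problem N2 of the case study in Section 4, and the second is invented.

```python
from dataclasses import dataclass

# Assumption for this sketch: only Bad and Very Bad results are reported.
REPORTED_LEVELS = {"B", "VB"}


@dataclass
class UsabilityProblem:
    """One entry of the usability report, following the report scheme."""
    description: str
    sub_characteristic: str
    attribute: str
    level: str  # indicator level of the detected problem
    recommendation: str

    def render(self) -> str:
        return "\n".join([
            self.description,
            f"Related attribute: {self.sub_characteristic} / {self.attribute}",
            f"Level: {self.level}",
            f"Recommendation: {self.recommendation}",
        ])


def build_report(findings: list[UsabilityProblem]) -> list[UsabilityProblem]:
    """Keep only the findings whose level counts as a usability problem."""
    return [p for p in findings if p.level in REPORTED_LEVELS]


findings = [
    UsabilityProblem("There are too many elements in the user interface.",
                     "Understandability", "Information Density", "B",
                     "Replace panels with windows."),
    UsabilityProblem("Messages are short.", "Understandability",
                     "Message Concision", "VG", "None."),
]
for problem in build_report(findings):
    print(problem.render())
```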

Note that the use of internal usability attributes and metrics based on conceptual models is recommended as an appealing way to predict the usability perceived by end users (Panach et al., 2011). However, the validity of the proposed method still needs to be tested empirically.

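Table 1: Proposed indicators.

| Metric | VG | G | M | B | VB |
|---|---|---|---|---|---|
| ID1 | ID1 < 15 | 15 ≤ ID1 < 20 | 20 ≤ ID1 < 25 | 25 ≤ ID1 < 30 | ID1 ≥ 30 |
| ID2 | ID2 < 10 | 10 ≤ ID2 < 13 | 13 ≤ ID2 < 16 | 16 ≤ ID2 < 19 | ID2 ≥ 19 |
| MA | MA < 2 | 2 ≤ MA < 4 | 4 ≤ MA < 5 | 5 ≤ MA < 6 | MA ≥ 6 |
| NB | NB < 7 | 7 ≤ NB < 10 | 10 ≤ NB < 13 | 13 ≤ NB < 16 | NB ≥ 16 |
| WN | WN < 15 | 15 ≤ WN < 20 | 20 ≤ WN < 25 | 25 ≤ WN < 30 | WN ≥ 30 |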
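The mapping from numeric values to qualitative levels in Table 1 amounts to locating a value among four cut points. The sketch below encodes, for each metric, the lower bounds of the G, M, B and VB ranges and uses the standard `bisect_right` function; the dictionary layout and the function name `indicator` are our own illustration, and it assumes the NB Good range starts at 7 so that the NB ranges are contiguous. ERP is left out because Table 1 gives no ranges for it.

```python
from bisect import bisect_right

LEVELS = ("VG", "G", "M", "B", "VB")

# Lower bounds of the G, M, B and VB ranges for each metric (Table 1).
# Assumption: the NB Good range starts at 7, so the ranges are contiguous.
CUT_POINTS = {
    "ID1": (15, 20, 25, 30),
    "ID2": (10, 13, 16, 19),
    "MA": (2, 4, 5, 6),
    "NB": (7, 10, 13, 16),
    "WN": (15, 20, 25, 30),
}


def indicator(metric: str, value: float) -> str:
    """Map a numeric metric value to its qualitative level (VG ... VB)."""
    # bisect_right counts the cut points <= value, which is exactly the index
    # of the half-open range [lower, upper) that contains the value.
    return LEVELS[bisect_right(CUT_POINTS[metric], value)]


print(indicator("ID2", 9))   # VG
print(indicator("ID2", 16))  # B
print(indicator("WN", 31))   # VB
```

Using `bisect_right` rather than `bisect_left` is what makes each lower bound inclusive, matching the "≤" on the left of every range in the table.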

4 AN ILLUSTRATIVE CASE STUDY

This section presents a case study that illustrates the applicability of our proposal. The purpose is to show the usefulness of our proposal in assessing user interface usability. The research question addressed by this case study is: does the proposal help to uncover usability problems from the conceptual model onwards?

The object of the case study is a Tourist Guide System (TGS). The scenario is adapted from (Hariri, 2008). The mayor’s office of a tourist town decides to provide visitors with a tourist guide system. The system allows visitors to choose the type of visit (tourism, shopping, work, etc.). During the visit, the TGS offers tourists several possible routes, indicates the paths to follow and provides information about nearby points of interest. Tourists can use the system to find places (restaurants, hotels, etc.) and to consult visit itineraries. The system will run on the visitors' terminals (laptop, PDA, mobile phone, etc.). Therefore, the user interface must adapt to the context of use, for example the computing device being used and the tourist's language and preferences. It should also provide a feeling of comfort and ease of use in order to increase user satisfaction.

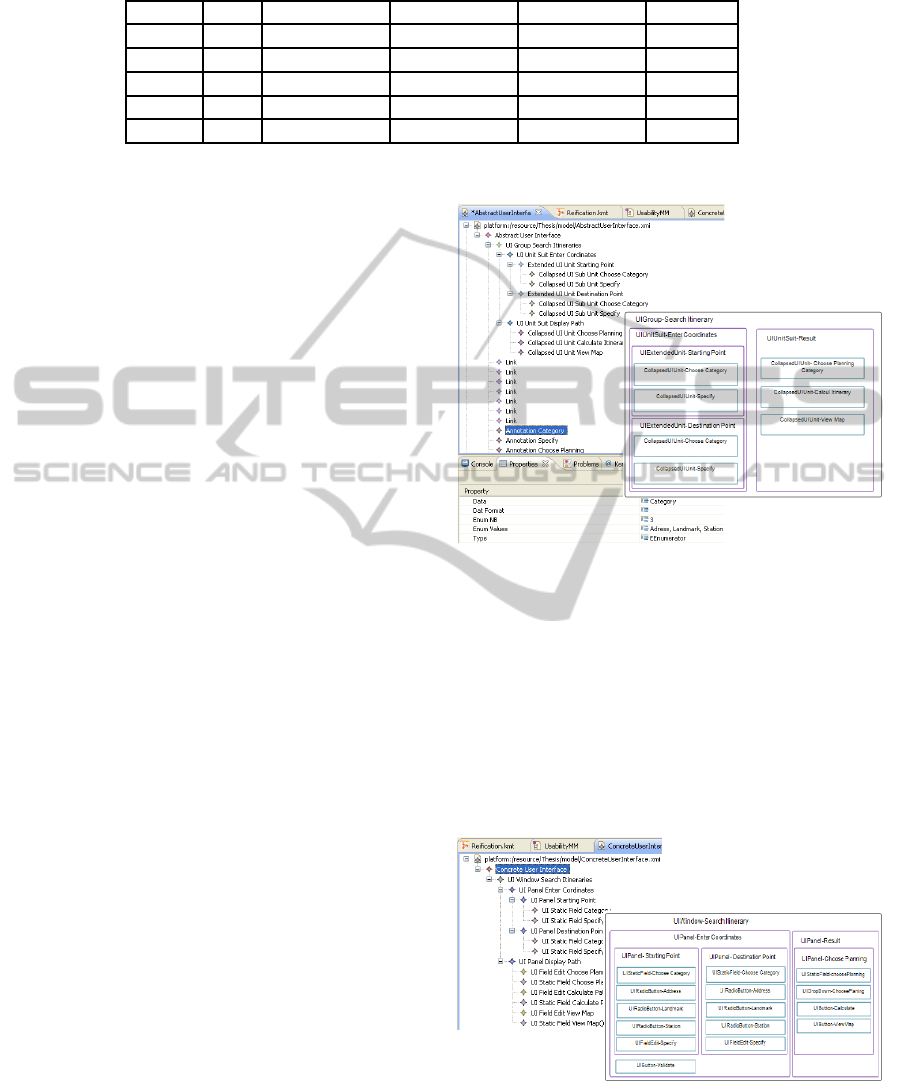

Since the tourist guide system is large, we focus on the generation of the concrete user interface for the «Search itinerary» task. We assume that the abstract user interface of Fig. 5 results from the transformation of the «Search itinerary» task model, following the model transformation explained in detail in (Bouchelligua et al., 2010). The result of the transformation is an XML file that conforms to the AUI meta-model (left part of Fig. 5). To better visualize the user interface layout, we developed an editor with the Graphical Modeling Framework (GMF) of Eclipse. The sketch of the user interface produced by the editor is shown in the right part of Fig. 5.

The abstract user interface contains a UIGroup called «Search itinerary» which gives access to two UIUnitSuit elements called «Enter Coordinates» and «Result».

Figure 5: Abstract User Interface.

The «Enter Coordinates» container allows the tourist to specify the starting point and the destination point. The tourist must choose the category (Address, Landmark, Station) before specifying the starting or destination point. After validating the coordinates, the tourist can choose the planning mode (Pedestrian, Cycling, Vehicle, Metro, Train, Bus). The TGS then shows the list of possible itineraries, which can also be displayed on a map.

Figure 6: Concrete User Interface.

A standard transformation that takes the abstract user interface model as input produces the concrete user interface model of Fig. 6. Note that this transformation takes into account a context of use defined by the analyst: a laptop as the interactive device (normal screen size) and an English tourist with a low level of experience.

To evaluate the concrete user interface, we follow a reduced version of the usability evaluation process presented in (Ammar et al., 2012). The purpose is to assess the usefulness of the proposed model in discovering the usability problems present in the evaluated artifact. The product part to be evaluated is the concrete user interface model. The selected attributes are Information Density and Error Prevention, evaluated with the ID2 and ERP metrics respectively. The indicators are those presented in Table 1.

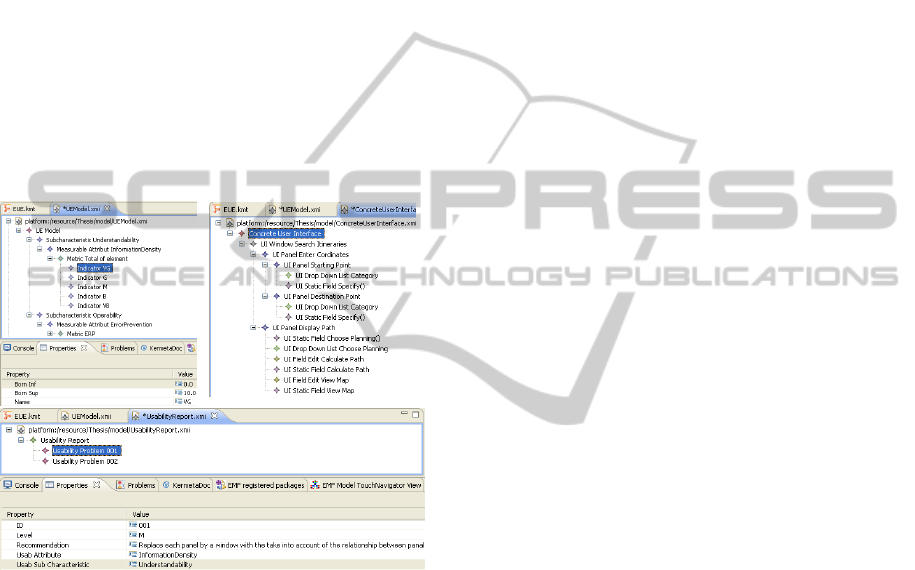

The result of the evaluation is a usability report model which contains the detected problems (see Fig. 7).

Figure 7: Usability Evaluation Process.

• Usability problem N1: there is no means of preventing user errors while entering data.

Related attribute: Operability / Error Prevention.

Level: VB.

Recommendation: each input element with limited values should be displayed as a drop-down list to protect the user against errors (e.g. typos) while entering values.

• Usability problem N2: there are too many elements in the user interface, which increases the information density.

Related attribute: Understandability / Information Density.

Level: B.

Recommendation: it is recommended to replace panels with windows.

The second transformation takes an «iPAQ Hx2490 Pocket PC» as the target platform. Migrating to this platform requires a new distribution of the user interface elements, because its small screen (240x320 pixels) cannot display all the information. The number of concrete components that can be grouped together is limited to the maximum number of concepts that can be manipulated (5 in the case of the «iPAQ Hx2490 Pocket PC»). Therefore, the user interface elements are distributed over several windows, which adds steps to reach the goal. With a small screen, Information Density and Brevity are the most relevant usability attributes, and they have contradictory impacts: distributing the concrete components over several screens yields a better Information Density, but spreading elements from one screen over several screens negatively affects the Brevity attribute.
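To make the trade-off concrete, here is a back-of-the-envelope sketch. It assumes, hypothetically, that the screens form a linear sequence (one navigation step from each screen to the next) and uses 12 components purely as an illustrative count; the capacity of 5 mirrors the iPAQ limit mentioned above. The function name `split_screens` is ours.

```python
import math


def split_screens(n_components: int, capacity: int) -> tuple[int, int]:
    """Distribute components over screens holding at most `capacity` each.

    Returns (components on the fullest screen, navigation steps to reach the
    last screen), assuming the screens are visited one after another.
    """
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    screens = max(1, math.ceil(n_components / capacity))
    per_screen = min(n_components, capacity)
    return per_screen, screens - 1


# 12 components (hypothetical) on one large screen, then capacities 5 and 3.
for capacity in (12, 5, 3):
    print(capacity, split_screens(12, capacity))
# 12 (12, 0)
# 5 (5, 2)
# 3 (3, 3)
```

Each reduction in per-screen density (better Information Density) is paid for with extra navigation steps (worse Brevity), which is the conflict described above.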

Lessons Learned. The case study allowed us to learn more about the potential and limitations of our proposal and how it can be improved. The proposed method allows several usability problems to be detected from the early stages of the development process. The evaluation process may also be a means to discover which usability attributes are directly supported by the modeling primitives, or to reveal limitations in the expressiveness of these artifacts. The ranges of the indicators are taken from existing studies which do not consider context variation. Therefore, many more experiments are needed in order to build a repository of indicators for several cases (small, medium and large screen sizes). The same holds for other metrics that are influenced by context variation. Another important aspect to be studied is the contradictory effect of usability attributes. For example, on a computing platform with a small screen, Information Density and Brevity have contradictory effects: improving Information Density (fewer elements per screen) will inevitably degrade Brevity (more steps to reach the goal). Finally, the case study suggests that the method presented in this paper can be a building block of an MDE method that generates user interfaces taking into account the variation of the context of use while respecting human factors.

5 CONCLUSIONS AND FUTURE RESEARCH WORKS

This paper presents a method for integrating usability issues into a plastic user interface development process. The proposed method extends the Cameleon reference framework by integrating usability into the development process. The aim of early usability measurement is to discover the usability problems present in intermediate artifacts. To this end, the paper proposes a usability model which decomposes usability into measurable attributes and metrics based on the conceptual primitives. Metrics are extracted from existing usability guidelines according to their relation with context features (user characteristics, platform features, etc.). Details about how to measure and interpret the attributes are also presented.

Compared to existing proposals, our framework presents three main advantages: 1) low cost, since internal usability evaluation can considerably reduce the development cost; 2) the system does not have to be implemented; and 3) it provides details about how to measure attributes and interpret their scores.

Our future work will focus on the implementation of the usability-driven model transformation. We also have to investigate the relationships between usability attributes and their contradictory influence on the overall usability of the user interface. Finally, an empirical evaluation of the early usability measurement is needed to demonstrate the coherence between the values obtained by our proposal and those perceived by end users.

REFERENCES

ISO (1998). ISO 9241-11: Ergonomic Requirements for Office Work with Visual Display Terminals (VDTs), Part 11: Guidance on Usability. ISO.

ISO (2001). ISO/IEC 9126-1: Software Engineering – Product Quality – Part 1: Quality Model. ISO/IEC.

Abrahão, S. M. and Insfrán, E. (2006). Early usability evaluation in model driven architecture environments. In QSIC, pages 287–294.

Ammar, L. B., Mahfoudhi, A., and Abid, M. (2012). A usability evaluation process for plastic user interface generated with an MDE approach. In Software Engineering Research and Practice, pages 323–329. CSREA Press.

Aquino, N., Vanderdonckt, J., Condori-Fernández, N., Dieste, O., and Pastor, O. (2010). Usability evaluation of multi-device/platform user interfaces generated by model-driven engineering. In Proceedings of the 2010 ACM-IEEE International Symposium on Empirical Software Engineering and Measurement, ESEM ’10, pages 30:1–30:10, New York, NY, USA. ACM.

Bastien, J. C. and Scapin, D. L. (1993). Ergonomic criteria for the evaluation of human-computer interfaces. Technical Report RT-0156, INRIA.

Bouchelligua, W., Mahfoudhi, A., Mezhoudi, N., Dâassi, O., and Abed, M. (2010). User interfaces modelling of workflow information systems. In EOMAS, pages 143–163.

Calvary, G., Coutaz, J., and Thevenin, D. (2001). A unifying reference framework for the development of plastic user interfaces. In Proceedings of the 8th IFIP International Conference on Engineering for Human-Computer Interaction, EHCI ’01, pages 173–192, London, UK. Springer-Verlag.

Favre, J. M. (2004). Toward a Basic Theory to Model Driven Engineering.

Fernandez, A., Insfran, E., and Abrahão, S. (2009). Integrating a usability model into model-driven web development processes. In Proceedings of the 10th International Conference on Web Information Systems Engineering, WISE ’09, pages 497–510, Berlin, Heidelberg. Springer-Verlag.

Gómez, J., Cachero, C., and Pastor, O. (2001). Conceptual modeling of device-independent web applications. IEEE MultiMedia, 8(2):26–39.

Grislin, M. and Kolski, C. (1996). Human-machine interface evaluation during the development of interactive systems. TSI. Technique et Science Informatiques, (3):265–296.

Hariri, M. (2008). Contribution à une méthode de conception et génération d’interface homme-machine plastique [Contribution to a method for the design and generation of plastic human-machine interfaces].

Lacob, M. E. (2003). Readability and Usability Guidelines.

M. Leavit, B. S. (2006). Research Based Web Design & Usability Guidelines.

Murata, M., Uchimoto, K., Ma, Q., and Isahara, H. (2001). Magical number seven plus or minus two. In Proceedings of the Second International Conference on Computational Linguistics and Intelligent Text Processing, pages 43–52, London, UK. Springer-Verlag.

Nielsen, J. (1993). Usability Engineering. Morgan Kaufmann Publishers Inc., San Francisco, CA, USA.

Panach, J. I., Condori-Fernández, N., Vos, T. E. J., Aquino, N., and Valverde, F. (2011). Early usability measurement in model-driven development: Definition and empirical evaluation. International Journal of Software Engineering and Knowledge Engineering, 21(3):339–365.

Seffah, A., Donyaee, M., Kline, R. B., and Padda, H. K. (2006). Usability measurement and metrics: A consolidated model. Software Quality Control, 14:159–178.
