Cloud-based Learning Environments: Investigating Learning Activities Experiences from Motivation, Usability and Emotional Perspective

Rocael Hernández Rizzardini¹, Hector Amado-Salvatierra¹ and Christian Guetl²,³

¹GES department, Galileo University, Guatemala, Guatemala
²IICM, TU-Graz, Graz, Austria
³Curtin Business School, Curtin University, Perth, Australia

Keywords: Cloud-based Learning Environments, Cloud-based Tools, e-Learning, Evaluation, Usability, Accessibility.

Abstract: Cloud education environments encompass all kinds of cloud services, such as Web 2.0 applications, content, or infrastructure services. These services form an e-learning ecosystem that can be built upon the learning objectives and the preferences of the learner group. A great variety of existing cloud services might be repurposed for educational activities, taking advantage of already widely used services that do not impose steep learning curves on their adoption. This article presents the design, deployment and evaluation of learning activities using cloud applications and services. The experiences presented here are from Galileo University in Guatemala, with students from three different countries in Central America and from Spain. This study reports findings on motivational attitudes, emotional aspects and usability perception. Selected cloud-based tools were used for the different learning activities in three courses in various application domains. These activities include collaboration, knowledge representation, storytelling and social networking. The experimental results suggest that students are eager to use new and more interactive ways of learning, which challenge their creativity and group organization skills, while professors have a growing interest in using new tools and resources that are easy to use, mix and reuse. Thus, future research should focus on incentives for motivating participation, as well as on providing systems with high usability, accessibility and interoperability that are capable of orchestrating learning.

1 INTRODUCTION

Trends for modern Virtual Learning Environments (VLE) indicate a movement from a monolithic paradigm to a distributed paradigm, which Dagger et al. (2007) and Chao-Chun and Shwu-Ching (2011) call the next generation of e-Learning environments. Virtual Learning Environments need to become more scalable and more flexible to meet the increasing requirements of higher education institutions, and thereby increase the real innovation they bring to education. Current work in cloud computing focuses on the infrastructure layer rather than on the application layer, as shown in the work of Al-Zoube et al. (2010) and Chandran and Kempegowda (2010). Moreover, a VLE is in many cases still a simple conversion of classroom-based content to an electronic format that retains its traditional knowledge-centric structure, as stated by Teo et al. (2006).

There is great potential in using multiple cloud-based tools for learning activities and in creating a different kind of learning environment, in which a new diversity of tools may enrich learning experiences. There is an ongoing effort to create a Cloud Education Environment, where a vast number of tools and services can be used, connected and, in the future, orchestrated for learning and teaching (Mikroyannidis, 2012).

Cloud computing application technologies are a major technological trend that is shifting business models and application paradigms; the cloud can provide on-demand services through applications served over the Internet to many kinds of devices in a dynamic and highly scalable environment (Sedayao, 2008). Thus, the significance of the technology for this study lies not only in cloud computing itself, but in the applications that reside in the cloud and can be used for learning purposes. As will be presented, although many of these applications were not originally intended for learning, the ones presented in this experience are actually used for learning.

Hernández Rizzardini R., Amado-Salvatierra H. and Guetl C. (2013). Cloud-based Learning Environments: Investigating Learning Activities Experiences from Motivation, Usability and Emotional Perspective. In Proceedings of the 5th International Conference on Computer Supported Education, pages 709-716. DOI: 10.5220/0004451807090716. Copyright © SciTePress.

Cloud-based tools have the potential to interoperate with other systems; therefore it is possible to systematically orchestrate a learning activity through multiple cloud-based tools. Cloud-based tools are normally seen as traditional, standalone Web 2.0 tools, but they can now be combined to create integrated learning experiences. This paper does not focus on the cloud-computing infrastructure but rather on the findings of using existing cloud-based tools for learning.

Likewise, social networking technologies provide easy pathways for sharing these kinds of cloud applications, related data and activities, and for socializing, while at the same time enhancing collaborative experiences (Mazman and Kocak, 2010).

This paper is organized as follows: first we describe the test-beds used for this experience, the learning activities designed and the learning scenarios. Thereafter we give a detailed description of the instruments used, the methodology and the results of our study, in which students were asked to perform learning activities individually and in groups using different types of cloud-based tools. Finally we discuss our findings, conclusions and some ideas for future research.

2 THE EXPERIMENT

2.1 The Galileo University Test-bed

In this section we present a cloud-based learning experience in Latin American countries, following other successful learning experiences by Dagger et al. (2007) and Chao-Chun and Shwu-Ching (2011). The learning experience took place at the Institute Von Neumann (IVN) of Galileo University, Guatemala. IVN is an online higher education institute. It delivers online educational programs across the country, and those programs are also open to students from other countries.

The student population at IVN consists mostly of part-time students, which is quite common across the entire University. The courses are similar to any other University course; most of the students do their learning in the evenings or at weekends because of work.

It is a fully online degree; the topic of the course is an e-Learning certification consisting of several modules that train the students in e-Learning from an instructional design perspective. The course has no formal synchronous sessions, although students can chat with the professor and their peers. Students are expected to work 10 hours/week on their studies, learning activities and collaborative activities. The courses within the e-Learning certification are organized in learning units that usually last one week each, with a diversity of online material such as video, audio, animations, interactive content, forums, assignments and a wide variety of learning activities specially designed to enhance learning acquisition. The course uses the institutional LMS, currently .LRN (www.dotlrn.org), although some modules are alternatively provided in Moodle LMS (www.moodle.org). The students have the advice and help of professional instructional designers to build their online course. The certification is targeted at university professors, e-Learning consultants and instructors who want to enhance their knowledge about teaching with technology.

The presented learning experience involves two groups with more than 60 students in total, most of them university professors, from different countries: Guatemala, Honduras, El Salvador and Spain. The course titles are: Course 2: Introduction to e-Learning; Course 3: e-Moderation; and Course 4: Online activities design.

The first group (A), with 36 students from Guatemala and Spain, was evaluated with activities prepared within Courses 2 and 3. The second group (B), with 30 students from universities in Guatemala, Honduras and El Salvador, was evaluated with activities prepared within Courses 3 and 4. Course 3, “e-Moderation”, is thus common to both groups and is used for comparative analysis.

In this experience, students were assigned cloud-based learning activities for the first time. Most of them were not very familiar with the related technologies, but they had taken a preliminary course that introduced them to the institutional LMS and related technologies.

The course professor introduced the cloud-based learning activities as innovative and powerful tools for learning, elaborating on the benefits that can create a change of mind-set and guiding the students through the benefits that this type of activity will have on their learning process (Chao-Chun and Shwu-Ching, 2011). This proved to be very helpful in avoiding resistance and possible fear of tools perceived as new and complex. We collected information from students in a pre-test and a post-test through an online survey, following an exploratory approach. Each group took two four-week courses; between the courses there was a one-week break that we used to conduct telephone interviews and gather further information about the experience.

CSEDU 2013 - 5th International Conference on Computer Supported Education

2.2 Learning Activities and Scenarios

We designed learning activities based on instructional objectives, using as a basis the standard non-cloud-based activities from previous editions of the courses and transforming them to leverage the potential of the cloud ecosystem. The designed and tested activities are presented below. Each activity was carefully designed using a custom-made instructional design template that contains all activity-related information, such as learning objectives, instructions, classification using Bloom’s revised taxonomy (Anderson and Krathwohl, 2001) and grading. Each single step of the activity has clear and explicit grading. With a clear design of the activity, the professor and the instructional designer selected the most suitable tool based on previous knowledge of and experience with the tool; in the presented experience, most of the proposed tools had already been used for learning activities in other courses. The three courses were as follows.

Course 2: “Introduction to e-Learning” had the following learning activities:

Activity 2.1: Students had to research a given topic and then collaboratively write an essay in groups of four students each. This activity was prepared with a control-group setting for comparison: we divided the whole group (A) of students into three segments with nine groups in total (three groups per segment), the first two segments using cloud-based learning activities and the third one using traditional desktop applications. The first two segments were asked to use the cloud services Google Docs (Google Docs-Page 2012) and Wiki Spaces (Wiki Spaces-Page 2012), while the other segment of three groups used a traditional word processor. Students were then invited to represent the information with a time-line tool; the cloud-based time-line tools used were Dipity (Dipity-Page 2012) and Timetoast (Figure 1) (Timetoast-Page 2012), and the traditional tool for segment three was PowerPoint. Finally, students had to comment on and discuss other groups’ results in the LMS online discussion forums. A summary of the tools used by each segment is presented in Table 1.

Activity 2.2: Students (individually) had to research a topic and present the knowledge gained using mind-map tools; the cloud applications for this activity were MindMeister (MindMeister-Page 2012) and Cacoo (Cacoo-Page 2012) (Figure 2). Finally, they were invited to discuss other peers’ contributions on the LMS discussion forum. The comparison setting is presented in Table 2.

Table 1: Comparison setting for Activity 2.1.

Segment        Tools used for the learning activity
1 (3 groups)   Google Docs and Dipity
2 (3 groups)   Wiki Spaces and Timetoast
3 (3 groups)   Word processor and PowerPoint

Table 2: Comparison setting for Activity 2.2.

No. of students   Tools used for the learning activity
10                Cacoo
10                MindMeister
16                PowerPoint

Figure 1: Screenshot of Timetoast time-line example.

Figure 2: Screenshot of Cacoo mind map example.


Course 3: “e-Moderation” had the following learning activities:

Activity 3.1: Students had to synthesize the information learned in the course, publish it using the cloud tool Issuu (Issuu-Page 2012) and then discuss it in the LMS forums.

Activity 3.2: Students had to research a topic, create a storytelling script and present it using one of the following cloud-based tools: GoAnimate (GoAnimate-Page 2012) (Figure 3), Xtranormal (Xtranormal-Page 2012) or Pixton (Pixton-Page 2012). They then published it on the social network Facebook and commented on other peers’ contributions.

Course 4: “Online activities design” had the following learning activities:

Activity 4.1: The students of group (B) had to collaboratively build a set of bookmarks based on a research assignment, using a base taxonomy provided by the professor to classify the links contributed by the students. The Delicious bookmarking site (Delicious-Page 2012) was used for the activity.

Activity 4.2: Students had to create an online satisfaction survey for courses: they synthesized a method and the requirements for this type of survey using a mind-mapping tool and published a sample survey using Google Forms (Google Docs-Page 2012).

Activity 4.3: The learning activity focused on modelling a process for creating visually attractive digital posters with educational intentions, first using a mind-mapping tool to elaborate the concepts and then reflecting them in a cloud-based tool called Gloster (Gloster-Page 2012). In all activities, students were required to learn about the tool in order to complete their assignments.

Figure 3: Screenshot of GoAnimate storytelling example.

2.3 Research Methodology

We used standardized instruments by Fishbein and Ajzen (1975) and Davis (1989) to measure this experience; we also used the System Usability Scale (SUS) by Brooke (1996) and the Computer Emotion Scale (CES) by Kay and Loverock (2008). Through online pre-tests and post-tests sent to the students, we measured emotional aspects, usability perception and performance, and opinions and motivation regarding the tools and the cloud-based learning activities. The pre- and post-tests were reviewed with instructional designers, professors and students to verify their validity for students; some enhancements were introduced after a first review.

The initial test included a section on learning preferences and previous online learning experiences, a survey about the cloud-based tools that were to be used in the experience and the students’ personal perceptions of them, then a motivation section and finally a section gathering emotional aspects. The post-test included a personal evaluation of the learning effort when using the cloud-based tools for the assigned activities, personal opinions of the experience, motivational aspects, usability and emotional aspects, and open questions about the experience. Since each class of students took two courses, the pre-test was administered before the first course started; between the first and the second course, telephone interviews were conducted with randomly selected participants; and finally, after the second course finished, the post-test was sent to the students.

The CES instrument, developed by Kay and Loverock (2008) to measure emotions related to learning new computer software, was quite instrumental for this study and covers the following emotions: satisfied, anxious, irritable, excited, dispirited, helpless, frustrated, curious, nervous, disheartened, angry and insecure. The questions took the form “When I used the cloud-based tool [name of the tool] during the learning activity assignment [name of the assignment], I felt ...”.

Answers used a four-point scale from 0 (none of the time) to 3 (all of the time).

The System Usability Scale (SUS) instrument by Brooke (1996) contains 10 items regarding the usability of the cloud-based tools used for the learning activities. Answers were given on a five-point Likert scale, so that students could state their level of agreement or disagreement. High SUS scores indicate positive attitudes towards, and evaluations of, the tools.

The 10 items that make up the SUS questionnaire are:
1. I would use this tool regularly
2. I found it unnecessarily complex
3. It was easy to use
4. I would need help to use it
5. The various parts of the tool worked well together
6. There was too much inconsistency
7. I think others would find it easy to use
8. I found it very cumbersome to use
9. I felt very confident using the tool
10. I needed to understand how it worked in order to get going.

According to Brooke (1996), SUS has proved to be a valuable and reliable evaluation tool. It correlates well with other subjective measures of usability (e.g. the general usability subscale of SUMI, the Software Usability Measurement Inventory).
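The SUS results reported later are on a 0 to 100 range (for example, the combined mean of 72.22), while each item above is answered on a five-point scale. The sketch below shows the standard conversion described by Brooke (1996): odd-numbered, positively worded items contribute the answer minus 1, even-numbered, negatively worded items contribute 5 minus the answer, and the sum is multiplied by 2.5. The function name and the input format (a list of ten answers coded 1 to 5) are illustrative assumptions, not the authors' code.

```python
def sus_score(responses):
    """Return the SUS score (0 to 100) for one participant.

    `responses` holds the ten answers in questionnaire order, each coded
    1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 item responses")
    total = 0
    for item_number, answer in enumerate(responses, start=1):
        if not 1 <= answer <= 5:
            raise ValueError(f"item {item_number}: answer {answer} outside 1..5")
        if item_number % 2 == 1:
            # Odd items are positively worded (e.g. "It was easy to use").
            total += answer - 1
        else:
            # Even items are negatively worded (e.g. "I found it unnecessarily complex").
            total += 5 - answer
    # Each item contributes 0..4, so the raw sum is 0..40; rescale to 0..100.
    return total * 2.5


# Hypothetical answers for one participant (not data from the study).
print(sus_score([4, 2, 5, 1, 4, 2, 4, 2, 4, 3]))  # prints 77.5
```

Reversing the even items is what makes a high score mean good usability for every item, which is why the combined score can be read as a single "higher is better" number.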

Some of the main accessibility standards that can be applied in cloud-based learning environments are presented in Amado-Salvatierra et al. (2012). It is important to note that tools and learning activities prepared for cloud-based learning environments should follow international standards (e.g. W3C WCAG 2.0, W3C WAI-ARIA) to provide accessibility and usability for all students, including people with disabilities. The research methodology includes the evaluation of accessibility issues related to the cloud-based learning activities.

Finally, telephone interviews were conducted with randomly selected students and professors, restricted to those who gave their consent to participate. Interviewers were instructed to ask about personal opinions regarding the cloud-based tools and the related learning activities; the conversations were audio recorded and transcribed.

Using these instruments, the study follows an exploratory approach, with the aim of showing that students are eager to use and experience new and more interactive ways of learning.

3 RESULTS AND DISCUSSION OF THE EXPERIENCE

Of a total of 66 students in both groups, 45 gave their consent to participate in the study by filling out at least one of the two questionnaires presented. Participation was evenly distributed, with 48% female and 52% male participants (mean age M = 37, σ = 14).

Participants were asked about the experience in the post-test and in the telephone interviews. Some of the more interesting positive and negative impressions are presented below, together with the emotional aspects evaluation:

Positive impressions:
“I liked getting to know new activities and tools on the web for more interaction with the student.”
“I learned about many great tools that will help me with my teaching activities; the experience showed me that the activities can be very interactive and innovative.”
“The use of new tools for learning was fun and can be applied with creativity to teach scientific content.”
“What I liked is that I started using the tools in my current courses.”
“I liked that the activities awaken creativity and obtained interesting results and products.”
“The activities promote meaningful learning, learning by doing so you will not forget; they allow flexibility in learning, and it is very satisfying to achieve something new and different.”
“The tools used for the activities are pretty dynamic and will make courses more interactive.”

Negative impressions:
“I needed more time to get to know the tools and how to use them.”
“The workload increased for activities with the new tools, with the overhead of learning the tools.”
“I needed a lot more time to achieve the results with tools like Gloster, and I felt frustrated.”
“The instructions were not clear.”
“With some of the tools you need to purchase a membership to upgrade and enable some functionality.”
“Some of the tools are not accessible and you can’t use them on all operating systems, e.g. Flash-based tools.”

Some of the main results of the post-test were:
95% of the participants liked the idea of using innovative online learning tools to represent new knowledge.
35% of the participants thought that it was difficult to complete the learning activities.
50% of the participants thought that they would need more information and instructions to complete the learning activities.
Only 10% of the participants stated that the learning activities were boring.
70% of the participants considered that the time allowed for the activity was appropriate.
80% of the participants agreed with the statement that sharing results within groups and commenting on other participants’ work helps them learn new concepts related to the activity.

The impressions from participants provide evidence of interest in learning activities that highlight interaction, innovation, flexibility and creativity, capabilities that these cloud-based tools seem to enable easily for the participants. The results obtained appear to show that students are eager to use new and more interactive ways of learning, which challenge their creativity and group organization skills.

The following subsections present the related results from the emotional, motivational and usability perspectives.

3.1 Emotional Aspects

From an emotional perspective, the instrument was based on the Computer Emotion Scale (four-point scale) developed by Kay and Loverock (2008) to measure emotions related to learning new computer software, here applied to learning tools in general. The post-test then measured the emotions after using the tools proposed for the learning activities; the comparison is shown in Table 3.

Research by Kay and Loverock (2008) on the CES identified 12 items describing four emotions:
Happiness (When I used the tool, I felt satisfied/excited/curious);
Sadness (When I used the tool, I felt disheartened/dispirited);
Anxiety (When I used the tool, I felt anxious/insecure/helpless/nervous);
Anger (When I used the tool, I felt irritable/frustrated/angry).

A summary of the four CES variables for groups A and B is presented in Table 4.

The evaluation of the participants’ emotional aspects shows little difference between the pre-test and post-test measures. In this sense, cloud-learning activities and instructors’ motivation should aim to increase students’ emotions related to Happiness (e.g. satisfied, excited) and to reduce emotions related to Anger or Anxiety (e.g. frustrated, helpless). Results on the four-point scale show a positive reaction in terms of “Happiness”, while the levels of “Sadness”, “Anxiety” and “Anger” when working with cloud-based tools for learning activities leave room for improvement.

Table 3: Computer Emotion Scale comparison (mean and standard deviation σ).

Emotion        Pre-test           Post-test
Satisfied      2.50 (σ = 0.65)    2.48 (σ = 0.65)
Anxious        1.42 (σ = 0.97)    1.24 (σ = 0.78)
Irritable      0.28 (σ = 0.45)    0.44 (σ = 0.51)
Excited        2.33 (σ = 0.72)    2.16 (σ = 0.85)
Dispirited     0.31 (σ = 0.47)    0.28 (σ = 0.46)
Helpless       0.47 (σ = 0.56)    0.52 (σ = 0.65)
Frustrated     0.39 (σ = 0.55)    0.32 (σ = 0.56)
Curious        2.33 (σ = 0.68)    2.12 (σ = 0.83)
Nervous        0.47 (σ = 0.56)    0.60 (σ = 0.65)
Disheartened   0.32 (σ = 0.42)    0.35 (σ = 0.46)
Angry          0.19 (σ = 0.40)    0.32 (σ = 0.48)
Insecure       0.47 (σ = 0.70)    0.40 (σ = 0.58)

Table 4: Summary CES-Scale Comparison.

Emotion (4-pt. scale)   Pre-test   Post-test   Reliability
Happiness               2.39       2.25        r = 0.75
Sadness                 0.30       0.28        r = 0.57
Anxiety                 0.71       0.69        r = 0.71
Anger                   0.29       0.36        r = 0.78
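Table 4 condenses the twelve item means of Table 3 into the four emotion subscales listed above. The paper does not state its aggregation rule, so the sketch below assumes each subscale value is the unweighted mean of its item means. Under that assumption the Happiness, Anxiety and Anger rows of Table 4 are reproduced to two decimals; Sadness comes out at 0.315 for both tests rather than the reported 0.30 and 0.28, so the authors' rule for that subscale may differ (for instance, if it was computed per participant).

```python
from statistics import mean

# Item means (pre-test, post-test) copied from Table 3.
ITEM_MEANS = {
    "satisfied": (2.50, 2.48),
    "anxious": (1.42, 1.24),
    "irritable": (0.28, 0.44),
    "excited": (2.33, 2.16),
    "dispirited": (0.31, 0.28),
    "helpless": (0.47, 0.52),
    "frustrated": (0.39, 0.32),
    "curious": (2.33, 2.12),
    "nervous": (0.47, 0.60),
    "disheartened": (0.32, 0.35),
    "angry": (0.19, 0.32),
    "insecure": (0.47, 0.40),
}

# Item-to-emotion grouping of the CES (Kay and Loverock, 2008).
SUBSCALES = {
    "Happiness": ["satisfied", "excited", "curious"],
    "Sadness": ["disheartened", "dispirited"],
    "Anxiety": ["anxious", "insecure", "helpless", "nervous"],
    "Anger": ["irritable", "frustrated", "angry"],
}


def subscale_means(item_means, subscales):
    """Return {emotion: (pre, post)} as the unweighted mean of item means.

    ASSUMPTION: this aggregation rule is not stated in the paper; it is one
    plausible reading that works from the published item means only.
    """
    result = {}
    for emotion, items in subscales.items():
        pre = mean(item_means[item][0] for item in items)
        post = mean(item_means[item][1] for item in items)
        result[emotion] = (pre, post)
    return result


for emotion, (pre, post) in subscale_means(ITEM_MEANS, SUBSCALES).items():
    print(f"{emotion:<10} pre = {pre:.2f}  post = {post:.2f}")
```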

3.2 Motivational Aspects

Deci et al. (1991) promote self-determination and the kind of motivation that leads to learning outcomes that are beneficial to the student. According to Deci et al. (1991), intrinsically motivated students engage in the learning process without the need for rewards or constraints. Extrinsic motivation, on the other hand, engages students in the learning process as a means to an end, such as feedback or a grade. For this study, an adapted scale based on the work of Tseng and Tsai (2010) was used; their scale measures motivation in online peer-assessment learning environments. Here, the instrument measures general attitudes with two subscales, one for extrinsic and one for intrinsic motivation. Intrinsic motivation is composed of seven items and extrinsic motivation of four items. A single score is computed for each subscale from the participant’s answers. Results from the instrument and the comparison between the two groups (A, B) for Course 3 (e-Moderation) are presented in Tables 5 and 6. The results show a positive measure of individual intrinsic motivation and a moderate measure of extrinsic motivation, from the point of view of the student, related to the perceived motivation from peers.


Table 5: Summary of intrinsic motivation for both groups, means and t-test results.

Group   M       σ       F      t       df
A       76.87   14.43   0.43   -1.58   43
B       84.16   16.13

Table 6: Summary of extrinsic motivation for both groups, means and t-test results.

Group   M       σ       F      t       df
A       64.97   17.43   0.33   -1.82   42
B       74.97   18.93

The comparison of Tables 5 and 6 shows an interestingly higher value for intrinsic than for extrinsic motivation when students take part in cloud-based learning activities.
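Tables 5, 6 and 8 report t statistics for the comparison of groups A and B but not the size of each group. The sketch below computes Student's independent-samples t from summary statistics, assuming the pooled-variance (equal variances) form, which matches the integer degrees of freedom reported. The group sizes are an assumption: df = 43 fixes n_A + n_B = 45, and the split n_A = 20, n_B = 25 is the one that reproduces the reported intrinsic-motivation value (t ≈ -1.58) and gives t ≈ -2.46 for the SUS scores, which rounds to the -2.5 of Table 8.

```python
import math


def pooled_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Student's independent-samples t statistic with pooled variance.

    sd_a and sd_b are sample standard deviations (n - 1 denominator).
    Returns (t, df).
    """
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * sd_a**2 + (n_b - 1) * sd_b**2) / df
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / standard_error, df


# ASSUMPTION: per-group sizes are not reported; this split is inferred.
N_A, N_B = 20, 25

t_intrinsic, df_intrinsic = pooled_t(76.87, 14.43, N_A, 84.16, 16.13, N_B)  # Table 5
t_sus, df_sus = pooled_t(65.50, 19.51, N_A, 77.60, 13.45, N_B)              # Table 8
print(f"intrinsic: t = {t_intrinsic:.2f}, df = {df_intrinsic}")  # t = -1.58, df = 43
print(f"SUS:       t = {t_sus:.2f}, df = {df_sus}")              # t = -2.46, df = 43
```

The pooled form is a reasonable default here because the Levene's test reported for the SUS scores (Table 7, significance 0.11) does not indicate unequal variances; Welch's t-test would be the alternative if it did. The extrinsic comparison (df = 42) is omitted because it is not known which group had the missing answer.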

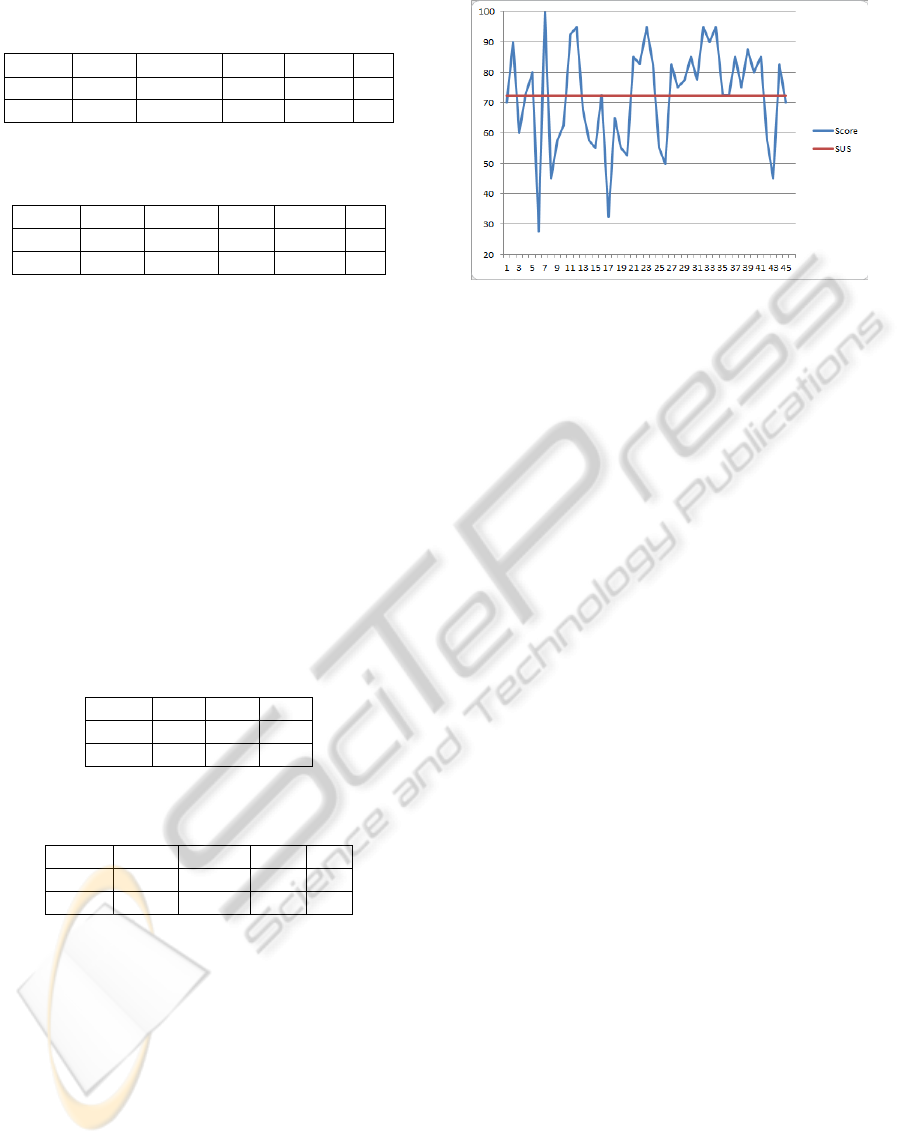

3.3 Usability Aspects

Students were asked to answer the SUS items regarding the usability of the tools used, in general (GoAnimate, Dipity, Timetoast, Gloster, MindMeister, Cacoo, etc.). Respondents were asked to record their immediate response to each item. Results from the instrument are presented in Tables 7 and 8.

Table 7: Summary of the SUS instrument for both groups in the experience, reliability and Levene’s test results.

Group   Reliability (r)   Levene’s F   Sig.
A       0.91              2.61         0.11
B       0.70
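Table 7 reports a reliability coefficient per group and a Levene's F statistic for the SUS scores. The paper does not name the reliability coefficient; Cronbach's alpha is a common choice for Likert instruments such as SUS, so the sketch below assumes it. It also computes Levene's W statistic in its original form (absolute deviations from each group's mean). Both functions need raw per-participant answers, which the paper does not publish, so the usage lines use made-up numbers and the function names are hypothetical.

```python
from statistics import mean, variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents x items matrix (equal-length lists)."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def levene_w(*groups):
    """Levene's W using deviations from group means.

    Under equal variances W approximately follows F(k - 1, N - k).
    Raises ZeroDivisionError if every observation equals its group mean.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Absolute deviation of each observation from its own group mean.
    z = []
    for g in groups:
        g_mean = mean(g)
        z.append([abs(y - g_mean) for y in g])
    z_group_means = [mean(zg) for zg in z]
    z_grand_mean = mean([v for zg in z for v in zg])
    between = sum(len(zg) * (zm - z_grand_mean) ** 2 for zg, zm in zip(z, z_group_means))
    within = sum((v - zm) ** 2 for zg, zm in zip(z, z_group_means) for v in zg)
    return (n_total - k) / (k - 1) * between / within


# Made-up illustration data, not results from the study.
answers = [[4, 5, 4], [3, 4, 3], [5, 5, 4], [2, 3, 2]]
print(f"alpha = {cronbach_alpha(answers):.2f}")
print(f"W = {levene_w([65, 70, 40, 85], [75, 80, 78, 72]):.2f}")
```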

Table 8: Summary of the SUS instrument for both groups, means and t-test results.

Group   M       σ       t      df
A       65.50   19.51   -2.5   43
B       77.60   13.45

The usability perception results for all participants are summarized in Figure 4.

The combined mean SUS score for both groups is 72.22. The minimum score is 27 (obtained only once) and the maximum score is 100. This is a considerable result that indicates how easily the students interacted with the cloud-based tools used for learning purposes. The objective of using this instrument was to explore the usability of the proposed cloud-based tools; the results show acceptable reliability and mean values, with considerable room for improvement.
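The combined SUS mean is a mean over all respondents, so each group mean is weighted by the group's size; simply averaging the two group means of Table 8 would give 71.55 instead. A small sketch, again assuming the inferred group sizes n_A = 20 and n_B = 25 (the paper reports only the 45 respondents in total and df = 43):

```python
def combined_mean(means, sizes):
    """Mean of the pooled sample, given each group's mean and size."""
    if len(means) != len(sizes):
        raise ValueError("need one size per group mean")
    return sum(m * n for m, n in zip(means, sizes)) / sum(sizes)


# ASSUMPTION: inferred group sizes, not reported in the paper.
sus_means = [65.50, 77.60]  # Table 8, groups A and B
group_sizes = [20, 25]
print(f"{combined_mean(sus_means, group_sizes):.2f}")  # prints 72.22
```

Working backwards is how the split can be inferred: solving 65.50 n_A + 77.60 (45 - n_A) = 72.22 × 45 gives n_A ≈ 20.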

Figure 4: SUS – Usability of cloud-based learning tools (horizontal axis: participants who completed the instrument; vertical axis: usability score of each participant; the horizontal line marks the combined mean SUS score of 72.22).

4 CONCLUSIONS

The results show a low emotional barrier to using a Cloud Education Environment, which is consistent with the 95% of participants indicating that they like the idea of using this environment. The instruments used show high motivation, and the SUS scale indicates that, from the students’ perception, the cloud-based tools are highly usable.

The results obtained from the motivational perspective appear to show high intrinsic motivation among students taking part in cloud-based learning activities; this is an important requirement for engaging students in the learning process without the need for rewards or constraints.

Analysis from the professors’ perspective suggests that, when planning and carrying out learning activities, professors have a growing interest in using new tools and resources that are easy to use, mix and reuse.

The Cloud Education Environment has a promising future, and further experimentation is necessary. Many areas remain open, such as providing integrated systems with high usability, accessibility and interoperability, with the aim of creating a Cloud Education Environment that can be orchestrated by professors.

ACKNOWLEDGEMENTS

We are grateful to Universidad Galileo and the GES Department for their great support in conducting this study. This work is partially supported by the European Commission through the ALFA III ESVI-AL project “Educación Superior Virtual Inclusiva - América Latina” (Inclusive Virtual Higher Education - Latin America).

REFERENCES

Al-Zoube, M. et al. (2010). Cloud Computing Based E-Learning System. International Journal of Distance Education Technologies, 8(2). ISSN: 1539-3100.
Amado-Salvatierra, H. R., Hernández, R., & Hilera, J. R. (2012). Implementation of accessibility standards in the process of course design in virtual learning environments. Procedia Computer Science, 14, 363-370.
Anderson, L. W., & Krathwohl, D. R. (Eds.). (2001). A taxonomy for learning, teaching and assessing: A revision of Bloom's Taxonomy of educational objectives: Complete edition. New York: Longman.
Brooke, J. (1996). SUS: A “quick and dirty” usability scale. In Usability evaluation in industry. London: Taylor & Francis.
Chandran, D., & Kempegowda, S. (2010). Hybrid E-Learning Platform based on Cloud Architecture Model: A Proposal. In Proceedings of IEEE ICSIP 2010, pp. 534-537.
Chao-Chun, K., & Shwu-Ching, S. (2011). Explore the Next Generation of Cloud-Based E-Learning Environment. LNCS, Vol. 6872, pp. 107-114.
Dagger, D. et al. (2007). Service-Oriented E-Learning Platforms: From Monolithic Systems to Flexible Services. IEEE Internet Computing, 11(3), 28-35.
Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319-340.
Deci, E. L., Vallerand, R. J., Pelletier, L. G., & Ryan, R. M. (1991). Motivation and Education: The Self-Determination Perspective. Educational Psychologist, 26(3&4), 325-346.
Fishbein, M., & Ajzen, I. (1975). Belief, attitude, intention and behavior: An introduction to theory and research. Reading, MA: Addison-Wesley.
Kay, R. H., & Loverock, S. (2008). Assessing emotions related to learning new software: The computer emotion scale. Computers in Human Behavior, 24, 1605-1623.
Mazman, S. G., & Kocak, Y. (2010). Modeling educational usage of Facebook. Computers & Education, 55(2), 444-453. Elsevier.
Mikroyannidis, A. (2012). A Semantic Framework for Cloud Learning Environments. In L. Chao (Ed.), Cloud Computing for Teaching and Learning: Strategies for Design and Implementation. IGI Global.
Sedayao, J. (2008). Implementing and Operating an Internet Scale Distributed Application using Service Oriented Architecture Principles and Cloud Computing Infrastructure. In iiWAS 2008, Austria, pp. 417-421.
Teo, C. B., Chang, S. C. A., & Leng, R. G. K. (2006). Pedagogy Considerations for E-learning. http://www.itdl.org/Journal/May_06/article01.htm (retrieved March 10, 2012).
Tseng, S.-C., & Tsai, C.-C. (2010). Taiwan college students’ self-efficacy and motivation of learning in online peer-assessment environments. Internet and Higher Education, 13, 164-169.
Google Docs-Page (2012). Tool available online: docs.google.com [last visit 24-09-2012]
Wiki Spaces-Page (2012). Tool available online: www.wikispaces.com [last visit 24-09-2012]
Dipity-Page (2012). Tool available online: www.dipity.com [last visit 24-09-2012]
Timetoast-Page (2012). Tool available online: www.timetoast.com [last visit 24-09-2012]
MindMeister-Page (2012). Tool available online: www.mindmeister.com [last visit 24-09-2012]
Cacoo-Page (2012). Tool available online: www.cacoo.com [last visit 24-09-2012]
Issuu-Page (2012). Tool available online: www.issuu.com [last visit 24-09-2012]
GoAnimate-Page (2012). Tool available online: www.goanimate.com [last visit 24-09-2012]
Xtranormal-Page (2012). Tool available online: www.xtranormal.com [last visit 24-09-2012]
Pixton-Page (2012). Tool available online: www.pixton.com [last visit 24-09-2012]
Delicious-Page (2012). Tool available online: www.delicious.com [last visit 24-09-2012]
Gloster-Page (2012). Tool available online: www.gloster.com [last visit 24-09-2012]
