Incremental Design of Organic Computing Systems

Moving System Design from Design-time to Runtime

Sven Tomforde¹, Jörg Hähner¹ and Christian Müller-Schloer²

¹ Organic Computing Group, University of Augsburg, Eichleitnerstr. 30, 86159 Augsburg, Germany

² System and Computer Architecture Group, Leibniz University Hannover, Appelstr. 4, 30167 Hannover, Germany

Keywords:

Design Process, System Engineering, Organic Computing, Adaptivity, Intelligent System Control.

Abstract:

System engineers are facing demanding challenges in terms of complexity and interconnectedness. Current

research initiatives like Organic or Autonomic Computing propose to increase the freedom of the system to

be developed using concepts like adaptivity and self-organisation. Adaptivity means that for such systems we

defer a part of the design process from design time to runtime. Therefore, we need a runtime infrastructure

which takes care of runtime modifications. This paper presents a meta-design process to develop adaptive

systems and parametrise the runtime infrastructure in a unified way. To demonstrate the proposed design

process, we apply it to a communication scenario and evaluate the resulting system in a realistic setting.

1 INTRODUCTION

Nowadays, the vision of Ubiquitous Computing (Weiser, 1991) is becoming increasingly realistic. Technology has become a fundamental part of human

lives and supports us embedded in the environments

that we encounter on a daily basis. Engineers build

technology-driven environments, where systems ob-

serve the conditions in the real world, derive plans of

how to act best in this environment, try to draw con-

clusions from observed behaviour, and finally act pro-

actively by applying actions and manipulating this en-

vironment. Recent research initiatives like Organic

Computing (OC), cf. (Müller-Schloer, 2004), develop

novel concepts to be able to handle the resulting com-

plex systems. In this context, OC focuses on develop-

ing autonomous entities that are acting without strict

central control and achieve global goals although their

decisions are based on local knowledge. Due to the

complexity of the particular tasks, not all possibly oc-

curring situations can be foreseen during the devel-

opment process of the system. Therefore, the system

must be adaptive and equipped with learning capabil-

ities, which leads to the ability to learn new strategies

for previously unknown situations.

OC proposes moving design time decisions, which are typically taken by engineers, to runtime and into the responsibility of the OC system itself. Hence, such

an OC system requires a self-adaptation mechanism

which is generic in the sense that it is not designed

especially for each application. Instead, this mech-

anism forms a runtime infrastructure which must be

adapted to the particular problem using well-defined

parametrisable steps. This leads to a meta-design pro-

cess whose results provide the parametrisations of the

online adaptation mechanism.

This paper is organised as follows. Section 2 de-

scribes the architectural concept of OC systems, the

specific demands for an appropriate meta-design pro-

cess and the difference to traditional concepts. After-

wards, Section 3 introduces the novel process in de-

tail. This is evaluated using an example application

from the data communication domain in Section 4.

Finally, Section 5 summarises the paper and gives a

short outlook on current and future work.

2 SYSTEM DESIGN

This section discusses the basic system design for

OC according to the Multi-level Observer/Controller

(MLOC) framework (Tomforde, 2012). MLOC is a

three-layered framework that implements the desired

self-adaptation mechanism. Thereby, each of the lay-

ers has a certain functionality that has impact on the

design process introduced in this paper. Afterwards,

we give a brief overview of design processes and ex-

plain why a novel meta-process to incrementally de-

sign adaptive systems is needed.

Tomforde S., Hähner J. and Müller-Schloer C..

Incremental Design of Organic Computing Systems - Moving System Design from Design-Time to Runtime.

DOI: 10.5220/0004457901850192

In Proceedings of the 10th International Conference on Informatics in Control, Automation and Robotics (ICINCO-2013), pages 185-192

ISBN: 978-989-8565-70-9

Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

2.1 Observer/Controller Design

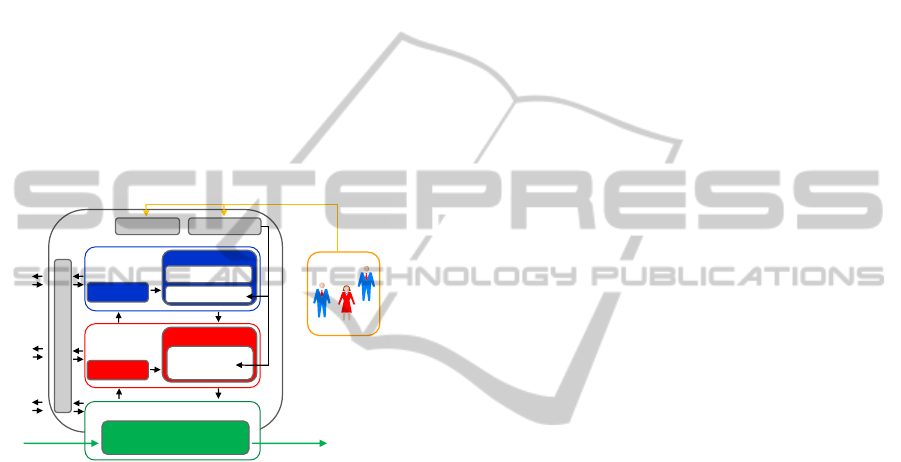

The MLOC framework for learning and self-

optimising systems provides a unified approach to au-

tomatically adapt technical systems to changing envi-

ronments, to learn the best adaptation strategy, and to

explore new behaviours autonomously. Figure 1 illus-

trates the encapsulation of different tasks by separate

layers. Layer 0 encapsulates the system’s productive

logic (the System under Observation and Control –

SuOC) which is parametrisable in terms of variable

configurations. Layer 1 establishes a control loop

with safety-based on-line learning capabilities, while

Layer 2 evolves the most-promising reactions to pre-

viously unknown situations. Layer 3 provides inter-

faces to the user and to neighbouring systems. Details

on the design approach, technical applications, and

related concepts can be found in (Tomforde, 2012).

Figure 1: System Design. (Layer 0: System under Observation and Control, delivering detector data and receiving control signals. Layer 1: parameter selection with observer and controller (modified XCS). Layer 2: offline learning with observer, controller, simulator, and EA. Layer 3: collaboration mechanisms, monitoring, goal management, and the interface to the user.)
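The division of work between Layer 1 and Layer 2 can be illustrated with a small sketch. It is not the MLOC implementation: the names `Rule`, `layer1_step`, and `toy_layer2`, the distance threshold, and the one-dimensional situations are assumptions chosen for this example, and rule generation happens immediately here, whereas Layer 2 works offline with a simulator and an EA.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Rule:
    situation: float      # condition: the situation this rule was created for
    configuration: float  # action: parameter value to apply to the SuOC


def layer1_step(observed: float,
                rules: List[Rule],
                distance: Callable[[float, float], float],
                threshold: float,
                layer2_generate: Callable[[float], Rule]) -> float:
    """One O/C cycle: reuse the most similar known rule, or request a new one."""
    best = min(rules, key=lambda r: distance(observed, r.situation), default=None)
    if best is None or distance(observed, best.situation) > threshold:
        # No sufficiently similar rule is known: the Layer 2 stand-in creates one.
        best = layer2_generate(observed)
        rules.append(best)
    return best.configuration


# Toy usage: situations are single numbers, Layer 2 is a fixed formula (hypothetical).
rules: List[Rule] = []
toy_layer2 = lambda s: Rule(situation=s, configuration=2.0 * s)
abs_distance = lambda a, b: abs(a - b)
first = layer1_step(3.0, rules, abs_distance, 0.5, toy_layer2)   # creates a rule
second = layer1_step(3.2, rules, abs_distance, 0.5, toy_layer2)  # reuses that rule
```

In this sketch the SuOC stays a black box: Layer 1 only needs a distance between situations and a set of rules, which matches the observable and parametrisable view of Layer 0 described above.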

2.2 Design Time to Runtime

OC systems are characterised by attributes like self-

organisation, self-configuration, self-protection, or

self-explanation. In consequence, this means that at

least a part of the design effort moves from design

time to runtime. OC therefore means to move design

time decisions to runtime. This relocation of design

activities comprises five aspects:

1) Runtime Exploration: Design space exploration

in traditional system design discovers all possibly oc-

curring situations during the design time, while OC

systems explore the configuration space using their Layers 1 and 2 at runtime.

2) Runtime Optimisation: The control mechanism

defined by Layers 1 and 2 of the MLOC framework

continuously optimises the performance of the OC

system. Thereby, especially the simulation-coupled

optimisation component of Layer 2 has to work un-

der certain constraints, since only limited computing

resources and time are available.

3) Online Validation: Searching the configuration

space for the optimal parameter setting in a certain

situation relies on the possibility to validate candi-

date solutions. In OC systems, this validation takes

place at runtime. Since approaches like trial-and-error

mean that bad (or illegal) solutions are tried out in re-

ality, OC proposes to use a sandbox approach where

solutions are validated in a simulated environment

(i.e. the simulation environment of Layer 2).

4) Continuous Revision: While classical design pro-

cesses freeze the design at a certain point in time and

the result goes into production, OC systems have to

work without freezing. This leads to a continuous

runtime reconfiguration process where all, even the

higher-level, design decisions must be adaptive and

changeable at runtime.

5) Runtime Yo-Yo Design: Current design and devel-

opment processes use models at design time to vali-

date the system before it is actually built. In OC, how-

ever, two different flavours of these models have to be

distinguished: a) prescriptive models reflect the clas-

sical top-down enforcement and b) descriptive mod-

els reflect the actual system state. As both models

are not necessarily always consistent, possible con-

tradictions have to be resolved or at least minimised

– which leads to a runtime version of Yo-Yo design.

Runtime modelling is currently gaining high interest

(e.g. for self-adaptive systems (Amoui et al., 2012)).

These five aspects of moving design time deci-

sions into the responsibility of the self-adaptation

mechanism of the organic system and into the run-

time define the specific requirements of OC for an

appropriate design process. Considering such a de-

sign process from a more general perspective, a di-

chotomy that partitions adaptive systems according to

the targeted functionality can be observed. One part is

(similar to traditional approaches) responsible for the

productive logic part, while the other part implements

the self-adaptivity aspects. Since traditional design

processes cover only the productive part, these five

aspects are novel and hence define the need of an OC-

specific approach. This assumption is substantiated in

the following by discussing the most prominent de-

sign and development processes as well as their ap-

plicability to the design of OC systems.

2.3 Related Work

The organisation and definition of design and devel-

opment processes has gained a high degree of at-

tention by research and industry since fast and suc-

cessful projects are a key factor for controlling costs.

As a result, approaches following different direc-

tions can be found in literature. Thereby, the most

prominent representatives belong to the field of it-

erative and incremental development (Larman and

Basili, 2003). These are the Waterfall model (Royce,

1988), V-model (Forsberg and Mooz, 1991) (and its

extension, the Dual-Vee model (Forsberg and Mooz,

1995)), the Y-Chart model (Gajski et al., 2003) or the

Spiral-model (Boehm, 1986) that all distinguish be-

tween several consecutive development phases, e.g.

describing a general concept of operations, refining

the system description iteratively with decreasing ab-

straction until a detailed design exists, and finally im-

plementing the description accordingly and testing it.

Here, the overall process is organised according to the

ongoing timeline of the project.

Besides these standard concepts, several more

hardware-driven approaches like the Design Cube

Model (Ecker and Hofmeister, 1992) or more re-

cent developments like the Chaos model (Raccoon,

1995) and agile methods (Erickson et al., 2005) can

be found. In addition, researchers focused on build-

ing reliable software in the sense of guaranteeing

the system’s correctness (see e.g. (Good, 1982), or

(Nafz et al., 2011) with a certain OC background). A

good overview of current design methodologies can

be found in (Pressman, 2012). All these processes

lack the possibility of applying them to the devel-

opment of OC systems due to a variety of reasons.

First, the most important OC aspect of moving the

design time optimisation process into the responsibil-

ity of the system itself at runtime is not addressed.

Instead, a generalised and time-line-based approach

is followed in most of the classic approaches. OC’s

structure is aligned according to the capabilities in-

stead of the time-line. In this context, the Yo-Yo ap-

proach is closest to what we want to achieve with OC

systems in terms of changing between top-down and

bottom-up constraints – but Yo-Yo design is meant as

a design-time process only.

Furthermore, all (including higher-level) design

decisions have to be revised continuously in OC sys-

tems (technically, this is limited by the constraints

of runtime-reconfigurable software or hardware so-

lutions). On the other hand, formal approaches are

hardly applicable due to vast situation and configura-

tion spaces and the entailed impossibility to anticipate

all potentially occurring situations and best responses

at design time. This leads to the insight that the gen-

eral design of OC systems requires a meta-design pro-

cess including runtime reconfiguration rather than a

standard organisational process structure for the de-

sign time part. The architectural concept as illustrated

by Figure 1 specifies three layers on top of the classi-

cal productive part of the system – comprising in to-

tal the application-independent self-adaptation mech-

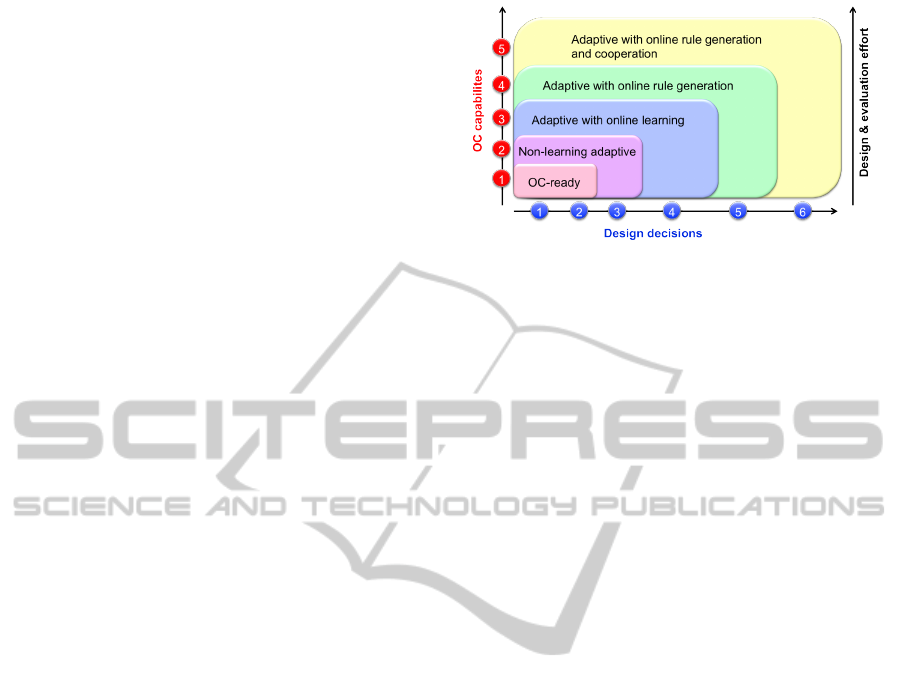

Figure 2: OC capabilities vs. design decisions.

anism. The task of an OC design process is to adapt

this generic mechanism to the particular problem us-

ing well-defined parametrisable steps.

3 INCREMENTAL DESIGN

OF ADAPTIVE SYSTEMS

The design of OC systems according to MLOC (see

Figure 1) describes a canonical way of adding self-

organised adaptivity and self-optimisation functional-

ity to a productive system. MLOC’s basic assump-

tion is that the productive part of the system – the

SuOC – is handled as an observable and parametris-

able black box. Accordingly, the control mechanism

defined by higher-layered O/C components does not

need detailed knowledge about what certain variable

parameters mean. In this context, the control mecha-

nism itself can be characterised by an increasing de-

gree of sophistication – depending on the design ef-

fort: increasing degree means that an OC system has

more possibilities to observe and analyse its state and

the environmental conditions as well as reacting more

appropriately to these. Considering the design pro-

cess and the corresponding effort, a system with a

lower degree of capabilities requires fewer design de-

cisions (see Figure 2). The next part explains the dif-

ferent capabilities named in Figure 2 in detail (a set of

six consecutive design decisions that have to be taken)

and combines them to a meta-design process.

(1) Observation Model: The first design decision

is concerned with the attributes to be observed. All

internal and environmental attributes with impact on

a) the adaptation or b) the process of measuring the

system performance need to be available.

(2) Configuration Model: The second design de-

cision defines the configuration interface to the pro-

ductive system – which parameters of the SuOC can

be altered at runtime in general and which are actually

subject to control interventions?

(3) Similarity Metric: Due to the possibly vast

situation and configuration spaces, OC systems have

to cope with an unbounded number of possibilities to

configure the SuOC. In order to initially close the con-

trol loop by activating a rule-based reconfiguration

mechanism, a quantification of similarity between sit-

uations is needed. This similarity serves as basis for

choosing the best available configuration for the cur-

rently observed situation.

(4) Performance Metric: Classical system de-

velopment is based on (mostly hard-coded) implicit

goals. In contrast, OC systems have to deal with ex-

plicit goals that are user-configurable at runtime – the

O/C component uses these goals to guide the system’s

behaviour. Automated (machine) learning needs feed-

back to distinguish between good and bad decisions

without the need of an external expert. In this con-

text, feedback is a quantification method for evaluat-

ing the system performance at runtime. It is derived

from observations in each step of the O/C loop.

(5) Validation Method: Automated machine

learning has two severe drawbacks: a) it is strongly

based on trial-and-error and b) it needs a large num-

ber of evaluations to find the best solution. MLOC

handles this problem by dividing the learning task

into two parts: a) online learning works on existing

and tested rules only, while b) offline learning in an

isolated environment explores novel behaviour with-

out affecting the system’s productive part. The sec-

ond aspect can be implemented using computational

models, approximation formulas, or simulation (test

actions under realistic conditions and therefore evalu-

ate their behaviour in a specific situation). The former

two are seldom available, but would be favoured over

the latter one due to quality and time reasons.

(6) Cooperation Method: OC distributes com-

putational intelligence among large populations of

smaller entities. These entities cooperate to achieve

common goals. In order to allow for such a division of

work between a set of self-motivated elements, com-

munication and social interaction are needed. Hence,

cooperation methods and standardised communica-

tion schemes are needed.
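The six design decisions above can be thought of as the parametrisation of the generic runtime infrastructure. The following sketch bundles them in one structure; the class name `OCDesign`, its field names, and the helper `decisions_taken` are hypothetical and only illustrate that the decisions are consecutive, so a system with fewer capabilities simply leaves the later entries undefined.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class OCDesign:
    observed_attributes: Sequence[str]      # (1) observation model
    configurable_parameters: Sequence[str]  # (2) configuration model
    similarity: Optional[Callable] = None   # (3) similarity metric
    performance: Optional[Callable] = None  # (4) performance metric
    validate: Optional[Callable] = None     # (5) validation method
    cooperate: Optional[Callable] = None    # (6) cooperation method

    def decisions_taken(self) -> int:
        """Count the consecutive design decisions taken, stopping at the first gap."""
        steps = [bool(self.observed_attributes),
                 bool(self.configurable_parameters),
                 self.similarity is not None,
                 self.performance is not None,
                 self.validate is not None,
                 self.cooperate is not None]
        count = 0
        for done in steps:
            if not done:
                break
            count += 1
        return count


# A system with observation and configuration models only.
prepared = OCDesign(["neighbour positions"], ["Delay", "HelloInterval"])
```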

The idea of OC is to move design time decisions

to runtime. At runtime, the systems make their deci-

sions autonomously. Depending on the application,

this is potentially dangerous. This means that the

design process has to be planned carefully such that

the power of decision is transferred gradually from

the designer to the O/C structure. Three main de-

sign phases are defined, with each phase resulting in a

system with increasing autonomy and requiring more

validation (see Figure 3).

Figure 3: The three OC design phases. (Preparation, OC-ready: definition of OC capabilities, models, metrics, and methods; implementation of interfaces. Evaluation, Open OC Loop: offline analysis mode with data collection and analysis; assistance mode with explicit user acknowledgement; supervised mode with automated O/C decisions. Utilisation, Closed OC Loop: unsupervised operation.)

Phase 1: Preparation (OC-ready). The first phase prepares a system for a later addition of higher-level OC capabilities by defining observation and configuration models and interfaces. Building all new systems OC-ready might be advantageous, regardless of whether these interfaces are later used or not.

Phase 2: Evaluation (Open O/C Loop). The

second phase adds observer(s) and controller(s) to the

system. This requires at least the definition of a sim-

ilarity metric. For higher degrees of OC capabilities,

further OC capabilities like a performance metric or

an online validation method have to be defined. This

phase stepwise closes the O/C loop. Initially, the eval-

uation begins by collecting and aggregating observa-

tion data and analysing them offline. In a second step,

the O/C loop is open and works in assistance mode,

i.e. the controller suggests certain control actions to

the user who has to explicitly acknowledge them be-

fore enactment. In the third step (supervised mode),

the O/C loop is closed but the situation observations

and the according actions are logged for a later offline

analysis in case of wrong decisions.

Phase 3: Utilisation (Closed O/C Loop). Finally, in the third phase, the systems work with a closed O/C loop and, at least in principle, without supervision.
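The gradual transfer of decision power through the phases can be sketched as a mode switch around the controller's suggestions. The names `Mode` and `handle_suggestion` and the callback parameters are assumptions made for this illustration; the paper does not prescribe such an interface.

```python
from enum import Enum


class Mode(Enum):
    OFFLINE_ANALYSIS = 1  # Phase 2, step 1: collect data only
    ASSISTANCE = 2        # Phase 2, step 2: user must acknowledge
    SUPERVISED = 3        # Phase 2, step 3: automatic, but logged
    UNSUPERVISED = 4      # Phase 3: closed O/C loop


def handle_suggestion(mode, suggestion, apply, log, user_ack=lambda s: False):
    """Return True if the suggested control action was applied to the SuOC."""
    if mode is Mode.OFFLINE_ANALYSIS:
        log.append(("observed", suggestion))  # kept for later offline analysis
        return False
    if mode is Mode.ASSISTANCE:
        if user_ack(suggestion):              # explicit acknowledgement required
            apply(suggestion)
            return True
        return False
    if mode is Mode.SUPERVISED:
        log.append(("applied", suggestion))   # logged in case of wrong decisions
    apply(suggestion)
    return True
```

With this structure, moving from one step to the next only changes the mode, while observer and controller stay the same.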

4 EVALUATION

The basic design approach as presented in Section 2.1

has been applied to various application scenarios,

including vehicular traffic control, production, and

mainframe systems (Tomforde, 2012). In the follow-

ing, we demonstrate the OC design process by apply-

ing it to an example application from the data com-

munication domain – the Organic Network Control

(ONC) system (Tomforde et al., 2011). Thereby, the

design decisions are discussed in detail and the be-

haviour of the resulting OC system is analysed using a

simulation-based approach. ONC has been developed

to dynamically adapt parameters of data communica-

tion protocols (i.e. buffer sizes, delays, or counters)

in response to changing environmental conditions. It

learns the best mapping between an observed situa-

tion of the environment and the most promising re-

sponse in terms of a parameter configuration. ONC

has been successfully applied to different types of

protocols, including mode-selection in wireless sen-

sor networks, BitTorrent as exemplary Peer-to-Peer

client, and mobile ad-hoc networks (MANets) (Tom-

forde et al., 2011). In the context of this paper, we

consider a reliable broadcast protocol for MANets

(the R-BCast, details on the protocol can be found in

(Kunz, 2003)) as application scenario for ONC.

4.1 Design Decisions for Organic

Network Control

(1) Observation Model: In a MANet environment,

the most important factor influencing the protocol’s

performance is the distribution of other nodes. Typically, the transmission range for WiFi-based MANets is about 250 metres (the sending distance, while the sensing distance is 500 metres). Therefore, a sector-based approach as depicted in Figure 4 has been developed. The radius of the outer circle is equal to

the sensing distance of the node, as this is the most

remote point where messages of this node can inter-

fere with other ones. As nodes within the first cir-

cle are really close (50m), their exact position has no

impact. In contrast, the direction of the neighbour

becomes increasingly important for the second circle

(125m – 4 sectors), the third circle (200m – 8 sectors), and the fourth circle (250m – 16 sectors). Within

sensing range, two circles (375m and 500m) are in-

troduced and divided into 32 sectors each. A node is

assumed to be able to determine the current positions

of its neighbours in sensing range relative to its own

position, e.g. based on GPS, see (Pahlavan and Krish-

namurthy, 2001). Additionally, the node’s direction

of movement is stored since it has high influence on

the best parameter set. Due to the sector-based ap-

proach, situations are generalised, which is necessary

to avoid evolving a rule for each situation.
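A minimal sketch of such a sector-based encoding is given below. The ring radii and sector counts follow the description above; the function name `encode_neighbours`, the input format (relative offsets in metres), and the choice that sector 0 starts at the positive x-axis are assumptions for this example. The node's direction of movement is not modelled here.

```python
import math
from typing import List, Tuple

# (outer radius in metres, number of sectors) per ring, as described in the text.
RINGS = [(50, 1), (125, 4), (200, 8), (250, 16), (375, 32), (500, 32)]


def encode_neighbours(offsets: List[Tuple[float, float]]) -> List[List[int]]:
    """Count neighbours per ring and sector; offsets are (dx, dy) in metres."""
    counts = [[0] * sectors for _, sectors in RINGS]
    for dx, dy in offsets:
        dist = math.hypot(dx, dy)
        angle = math.atan2(dy, dx) % (2 * math.pi)
        for ring, (radius, sectors) in enumerate(RINGS):
            if dist <= radius:
                # Assumption: sector 0 starts at the positive x-axis.
                sector = min(int(angle / (2 * math.pi / sectors)), sectors - 1)
                counts[ring][sector] += 1
                break  # each neighbour is counted in its innermost matching ring
        # Neighbours farther away than 500 m match no ring and are ignored.
    return counts


situation = encode_neighbours([(10, 0), (-50, 100), (-300, -10), (600, 0)])
```

Two neighbourhoods whose nodes fall into the same sectors yield the same situation description, which is the generalisation effect mentioned above.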

(2) Configuration Model: The configuration

model describes the possibilities of the OC system to

automatically manipulate the broadcast algorithm at

runtime. Here, we re-use the configuration possibili-

ties provided by the author of the protocol (which he

configured once at design time and kept static after-

wards). Table 1 lists the parameters and their config-

uration in the static case.

Figure 4: Environment representation.

Table 1: Variable parameters of the R-BCast protocol.

| Parameter | Standard configuration |
|---|---|
| Delay | 0.1 s |
| AllowedHelloLoss | 3 messages |
| HelloInterval | 2.0 s |
| δHelloInterval | 0.5 s |
| Packet count | 30 messages |
| Minimum difference | 0.7 s |
| NACK timeout | 0.2 s |
| NACK retries | 3 retries |

(3) Similarity Metric: A rule-based selection of SuOC configurations has to discover the most promising rule, i.e. the configuration set that is most closely related to the currently observed situation. Therefore, the system has to be able to compare situation descriptions, which is done based on a similarity metric. In step 1, the situation description has been defined as a sector-based encoding of the nodes occurring in the neighbourhood. To determine the distance between two situation descriptions (A, B), the possible influence of rotation and reflection first has to be removed. Afterwards, the formula for the distance (δ) can be defined with r ∈ RADII and s ∈ SECTORS as follows:

$$\delta(A,B) = \sum_{r} \sum_{s} \frac{(A_{r,s} - B_{r,s})^{2}}{r.\mathit{distance}}$$

The function r.distance defines the radius size as

introduced before (50m, 125m, ...). $A_{r,s}$ gives the

number of neighbours within the sector s of radius

r for the situation description A. This means that the

importance of a node’s neighbour decreases if it is sit-

uated within an outer radius.
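The distance can be computed directly from two situation descriptions given as per-ring lists of sector counts. The sketch below assumes that the rotation and reflection normalisation has already been applied; the function name `distance` and the list-of-lists representation are choices made for this example.

```python
from typing import List, Sequence

RADII = [50, 125, 200, 250, 375, 500]  # r.distance for each ring, in metres


def distance(a: List[List[int]], b: List[List[int]],
             radii: Sequence[float] = RADII) -> float:
    """Sum of squared per-sector count differences, each divided by the ring radius."""
    return sum((x - y) ** 2 / radius
               for radius, ring_a, ring_b in zip(radii, a, b)
               for x, y in zip(ring_a, ring_b))


# Worked example restricted to the two innermost rings (50 m and 125 m).
a = [[1], [0, 2, 0, 0]]
b = [[0], [0, 0, 0, 1]]
d = distance(a, b, radii=[50, 125])  # 1/50 + (4 + 1)/125 = 0.06
```

In the example, one differing neighbour in the innermost ring contributes 0.02, while one differing neighbour in the second ring contributes only 0.008, which reflects the decreasing importance of outer radii.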

(4) Performance Metric: The learning mecha-

nism needs an online feedback to draw conclusions

from its past actions. In the context of MANet broad-

cast algorithms, Packet Delivery Ratio and Packet

Latency have to be considered. Both metrics are

network-wide figures and cannot be used at each node

locally. Nevertheless, the system aims at approxi-

mating both effects by reducing the number of for-

warded broadcasts and simultaneously assuring the

delivery of each broadcast. To achieve this, the following fitness function (Fit(x)) has been developed: $\mathit{Fit}(x) = \frac{\#\mathit{RecMess}}{\#\mathit{FwMess}}$. Here, x represents the currently

observed network protocol instance. Since a new pa-

rameter set has to be applied for a minimum duration

to show its performance, evaluation cycles are used

defining discrete time slots – the control loop consist-

ing of Layers 0 and 1 is performed once every evalu-

ation cycle. The duration of these cycles depends on

how dynamic an environment is. The faster the environment changes, the shorter the cycle (and the more often the SuOC is adapted). Thus, the formula

above takes all messages sent and received within the

last cycle into account. It divides the sum of all re-

ceived messages (#RecMess) by the sum of all for-

warded messages (#FwMess). As a result, high effort

(unnecessary forwards) and low delivery rates (unsuccessful broadcasts) are penalised.
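As a small illustration of the fitness function, the following sketch evaluates it for the message counts of one evaluation cycle. The function name and the handling of a cycle without forwarded messages (returning 0.0) are assumptions; the paper does not state how that case is treated.

```python
def fitness(received: int, forwarded: int) -> float:
    """Fit(x) = #RecMess / #FwMess for the messages of the last evaluation cycle."""
    if forwarded == 0:
        return 0.0  # assumption: no forwards in this cycle yields no reward
    return received / forwarded


efficient = fitness(received=45, forwarded=50)  # 0.9
wasteful = fitness(received=45, forwarded=90)   # 0.5: same deliveries, more forwards
```

For the same number of received messages, the configuration that needed fewer forwards obtains the higher fitness, which is the intended penalty for unnecessary effort.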

(5) Validation Method: The online validation of

the Layer 2 component re-uses the protocol’s imple-

mentation within the network simulation tool NS-2

(Fall, 1999). Thereby, the neighbouring nodes from

the node encoding of step 1 are initialised in rela-

tion to the simulated node’s position based on a ran-

domised approach within the particular sector. After-

wards, all nodes besides the simulated one are kept

static (i.e. they are not moving), which is a reasonable approximation provided that the evaluation cycles are short.

(6) Cooperation Method: Cooperation e.g. al-

lows for knowledge sharing between nodes (Tom-

forde et al., 2011). Further collaboration mechanisms

are part of current research initiatives.

4.2 Experimental Results

The experimental setup has been chosen as follows.

The simulation environment is implemented in Java

using the Multi-Agent Simulation Toolkit MASON

(Luke et al., 2004). Within the simulated area of 1000

x 1000 meters, six agents are created at random po-

sitions and move according to a random-waypoint-

model (Lawler and Limic, 2010). From these agents,

one is equipped with the ONC system and the other

five agents perform the standard protocol configura-

tion. The sampling rate of ONC’s Layer 1 is set

to 1 s. The simulation relies on pseudo-randomised

movements by taking seeds into account – which

makes them repeatable and comparable to the usage

of the protocol’s standard configuration. The inves-

tigated simulation period covers seven hours; the re-

sults are discretised to blocks of half an hour each.

The evaluation compares an OC-ready variant (i.e.

the standard protocol version equipped with inter-

faces to observe it), an Open O/C Loop variant, and

a Closed O/C Loop variant (with and without experi-

ence). Thereby, the Open O/C Loop variant is mod-

elled by taking a pre-defined rule-base into account

that covers randomly chosen 10% of the occurring sit-

uations. The inexperienced Closed Loop variant starts

with an empty rule-base, while the experienced one

relies on the feedback and Layer 2 rule generations re-

ceived during three consecutive simulation runs. All

results are averaged values of 5 simulation runs.

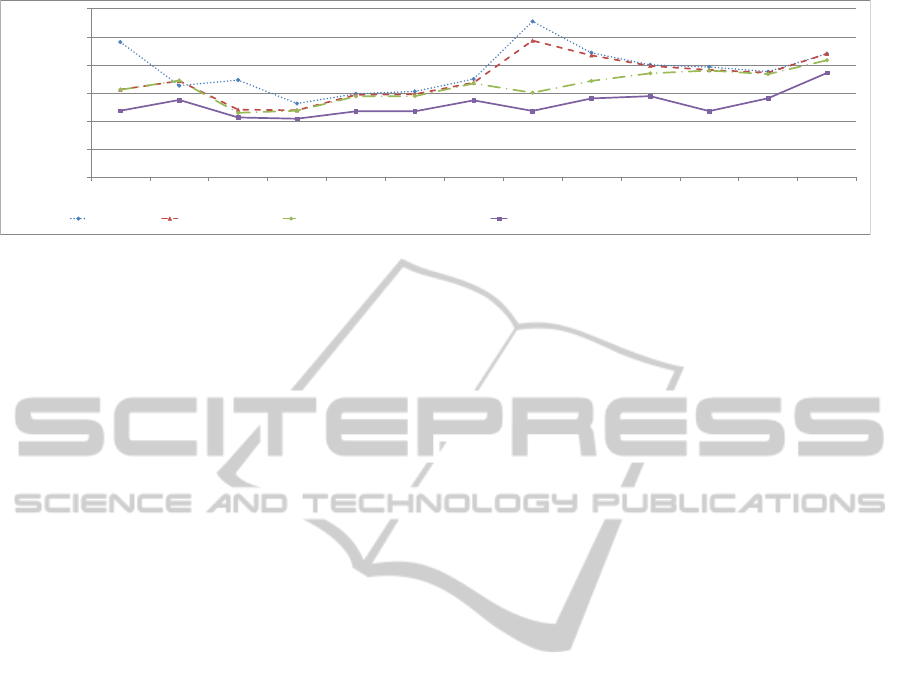

The first part of the evaluation analyses the general

impact of the additional ONC control in this scenario.

ONC’s goal contains two aspects: assure the deliv-

ery of broadcasts and decrease the overhead needed

to achieve this delivery. Since deliveries of broadcasts can only be considered at network level, all messages during the whole simulation are analysed. Figure 5 illustrates the delivery ratio of broadcast messages, which is defined as the number of received distinct broadcasts divided by the product of the number of sent broadcasts and the number of agents that have to receive each broadcast. The figure illustrates the desired effect. The OC-ready solution – which is the protocol’s standard configuration for all six agents – reported a delivery ratio of 98.73% on average, which is

clearly improved by the ONC-control. The Open O/C

Loop variant resulted in a delivery ratio of 99.05%,

the Closed O/C Loop without experience variant in a

delivery ratio of 99.15%, and the Closed O/C Loop

with experience variant in a delivery ratio of 99.41 %

(all values are averages over the complete simulation

time). The second aspect in this context is the latency

needed to achieve this delivery ratio. The latency values have been determined as the average time to deliver a unique broadcast to a receiver. All four values (OC-ready, Open O/C Loop, Closed O/C Loop without experience, and Closed O/C Loop with experience) are within a similar range – the maximum deviation between two values is 0.98%. Thus, the impact of ONC on the latencies can be neglected.
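The delivery ratio used in this part of the evaluation can be written as a short function. The sketch reads the definition as received distinct broadcasts divided by the number of sent broadcasts times the number of intended receivers; the function name and the example counts are hypothetical and are not measurements from the experiments.

```python
def delivery_ratio(received_distinct: int, sent: int, receivers: int) -> float:
    """Share of (broadcast, receiver) pairs for which the broadcast arrived."""
    return received_distinct / (sent * receivers)


# Hypothetical counts: six agents, so every broadcast has five intended receivers.
ratio = delivery_ratio(received_distinct=495, sent=100, receivers=5)  # 495 / 500
```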

Figure 5: Delivery ratio of broadcast messages (higher values are better) for the four compared variants. (Plot of the delivery ratio, on an axis from 0.978 to 1, over the simulation time in hours from 0:30 to 6:30 for the variants OC-ready, Open O/C Loop, Closed O/C Loop w/o experience, and Closed O/C Loop w/ experience.)

The second part of the objective function aims at minimising the overhead needed to deliver the broadcasts successfully. In this context, overhead is defined as all messages other than the distinct broadcast messages delivered to each of the other agents. In particular, this includes non-broadcast messages (e.g. NACK messages or “hello”-messages) and broadcast duplicates or re-transmissions. Figure 6 depicts the results for all four simulations. The OC-ready variant resulted in an average number of 45,785 overhead messages. This value has been decreased in case of an activated ONC for one agent. The Open O/C Loop variant resulted in 45,142 overhead messages (decrease of 1.41%), the Closed O/C Loop without experience variant resulted in 44,625 overhead messages (decrease of 2.60%), and the Closed O/C Loop with experience variant resulted in 43,406 overhead messages (decrease of 5.19%). This means that the capacity of the network has been increased by between 1.41% and 5.19% due to ONC control – which is a significant improvement. Considering the figure, all three ONC-based solutions are better than the OC-ready variant (i.e. the reference solution), except for the overhead between 30 and 60 simulated minutes. In this interval, the standard configuration of the protocol leads to better results than the Open O/C Loop and Closed O/C Loop without experience versions. But this effect decreases with increasing capabilities and therefore a longer learning duration.

The third part of the evaluation analyses the effort required to achieve the particular behaviour. This aspect is concerned

with the rule-generation and the SuOC-adaptation be-

haviour of ONC. Therefore, the following part in-

vestigates: a) the development of the rule base over

time, b) the closely-connected number of Layer 2

optimisations during the simulation period, and c)

the adaptation-demand realised by ONC. Due to the

setup, the population is kept static for the Open O/C

Loop variant – here, Layer 2 is not active. The

Closed Loop variants without and with experience

differ mainly due to the need of new rules. The for-

mer variant has to make heavy use of its Layer 2

component to discover novel behaviour, leading to

2,170 rule generations during the simulated period. In contrast, the experienced variant got along with 139 rule generations. Hence, the rule base is constantly increasing for the inexperienced variant, while being nearly static for the experienced one.

Finally, the effort can be estimated analytically. The sampling interval has been chosen as 1 s, which means that the ONC-controlled agent had the opportunity to adapt its SuOC 3,600 times per simulated hour. Analysis of the data showed that the Layer 1 component consistently used about 90 % of these opportunities each hour in all three variants. Thus, every tenth chance to adapt the SuOC has not been used. In general, the number of adaptations corresponds to the speed at which the neighbourhood changes (and consequently to the nodes’ movement speeds). Choosing lower movement speeds would decrease the number of adaptations significantly. However, since choosing an appropriate rule depends on a linear search of the rule base and the size of the rule base converges to about 3,000 rules, the effort is manageable. In summary, ONC control of a MANet-based broadcast algorithm has been successful. The overhead caused by retransmissions and “hello” messages has been significantly decreased, while the delivery of broadcasts has been improved.
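The analytical estimate above can be reproduced with a few lines of Python. The sketch uses the figures from the text (1 s sampling interval, about 90 % of the opportunities used, about 3,000 rules). It additionally assumes, as a worst case, that every performed adaptation scans the complete rule base once; the function name and this cost model are illustrative assumptions.

```python
SECONDS_PER_HOUR = 3600


def effort_per_hour(sampling_interval_s: float,
                    used_fraction: float,
                    rule_base_size: int):
    """Return (opportunities, adaptations, worst-case rule comparisons) per hour.

    Assumption: each adaptation costs one full linear scan of the rule base.
    """
    opportunities = int(SECONDS_PER_HOUR / sampling_interval_s)
    adaptations = round(opportunities * used_fraction)
    worst_case_comparisons = adaptations * rule_base_size
    return opportunities, adaptations, worst_case_comparisons


opportunities, adaptations, comparisons = effort_per_hour(1.0, 0.9, 3000)

print(f"adaptation opportunities per hour: {opportunities}")
print(f"adaptations per hour (about 90 %): {adaptations}")
print(f"worst-case rule comparisons per hour: {comparisons:,}")
print(f"worst-case rule comparisons per second: {comparisons / SECONDS_PER_HOUR:,.0f}")
```

Under these assumptions, the upper bound is 3,240 adaptations and 9.72 million rule comparisons per simulated hour, i.e. about 2,700 comparisons per second, which supports the statement that the effort is manageable.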

5 CONCLUSIONS

Standard design processes are not applicable to novel demands such as moving parts of the design-time effort to runtime and into the responsibility of organic systems. This paper therefore presented a novel meta-design process for the incremental development of adaptive systems. The process provides a modularised concept for building different stages of Organic Computing (OC) systems, ranging from an OC-ready variant via an open Observer/Controller loop to a closed Observer/Controller variant. These three stages are characterised by an increasing degree of autonomy. In order to demonstrate the increasing capabilities of the OC system resulting from the novel process, an application scenario from the data communication domain has been chosen. Here, the increased performance of the control mechanism defined by the Observer/Controller component has been shown using domain-specific metrics such as Delivery Ratio and Overhead (measured in messages).

Current and future work will focus on both aspects covered in this paper: the meta-design process and the application scenario. For the design process, approaches to generalise the collaboration part as outlined by the sixth capability are needed; in particular, an automated detection of dependencies between control and observation parameters of neighbouring systems would be useful in order to further improve the quality of the adaptation process. For the application scenario, the network control example will be extended by covering multiple protocols or physical resources (e.g. switches using the OpenFlow standard) instead of one single protocol.

Figure 6: Overhead in messages (lower values are better) for the four compared variants. (Plot: overhead in messages, axis range 19,000 to 25,000, over simulation time in hours from 0:30 to 6:30; series: OC-ready, Open O/C Loop, Closed O/C Loop w/o experience, Closed O/C Loop w/ experience.)

REFERENCES

Amoui, M., Derakhshanmanesh, M., Ebert, J., and Tahvildari, L. (2012). Achieving dynamic adaptation via management and interpretation of runtime models. J. of Systems and Software, 85(12):2720–2737.

Boehm, B. (1986). A Spiral Model of Software Development and Enhancement. ACM SIGSOFT Software Engineering Notes, 11(4):14–24.

Ecker, W. and Hofmeister, M. (1992). The design cube - a model for VHDL designflow representation. In Design Automation Conference, pages 752–757.

Erickson, J., Lyytinen, K., and Siau, K. (2005). Agile Modeling, Agile Software Development, and Extreme Programming: The State of Research. Journal of Database Management, 16(4):88–100.

Fall, K. (1999). Network Emulation in the Vint/NS Simulator. In Proc. of 4th IEEE Symp. on Computers and Communications (ISCC’99), page 244. IEEE.

Forsberg, K. and Mooz, H. (1991). The Relationship of System Engineering to the Project Cycle. In Proc. Symp. of Nat. Council on System Eng., pages 57–65.

Forsberg, K. and Mooz, H. (1995). Application of the Vee to Incremental and Evolutionary Development. In Proc. of Nat. Council for Sys. Eng., pages 801–808.

Gajski, D., Peng, J., Gerstlauer, A., Yu, H., and Shin, D. (2003). System Design Methodology and Tools. Technical Report CECS-03-02, Center for Embedded Computer Systems, University of California, Irvine.

Good, D. I. (1982). The Proof of a Distributed System in GYPSY. Technical Report 30, Institute for Computing Science, The University of Texas at Austin.

Kunz, T. (2003). Reliable Multicasting in MANETs. PhD thesis, Carleton University.

Larman, C. and Basili, V. (2003). Iterative and incremental development: A brief history. Computer, 36:47–56.

Lawler, G. F. and Limic, V. (2010). Random walk: a modern introduction. Cambridge Studies in Advanced Mathematics. Cambridge University Press.

Luke, S., Cioffi-Revilla, C., Panait, L., and Sullivan, K. (2004). MASON: A New Multi-Agent Simulation Toolkit. In Proc. of the 2004 Swarmfest Workshop.

Müller-Schloer, C. (2004). Organic Computing: On the feasibility of controlled emergence. In Proc. of CODES and ISSS, pages 2–5. ACM.

Nafz, F., Seebach, H., Steghöfer, J.-P., Anders, G., and Reif, W. (2011). Constraining Self-organisation Through Corridors of Correct Behaviour: The Restore Invariant Approach. In Organic Computing – A Paradigm Shift for Complex Systems, pages 79–93. Birkhäuser.

Pahlavan, K. and Krishnamurthy, P. (2001). Principles of Wireless Networks: A Unified Approach. Prentice Hall PTR, Upper Saddle River, NJ, USA.

Pressman, R. (2012). Software Engineering: A Practitioner’s Approach. McGraw Hill, Boston, US.

Raccoon, L. B. S. (1995). The chaos model and the chaos cycle. SIGSOFT Softw. Eng. Notes, 20(1):55–66.

Royce, W. W. (1988). The development of large software systems. Software Engineering Project Management, pages 1–9.

Tomforde, S. (2012). Runtime adaptation of technical systems: An architectural framework for self-configuration and self-improvement at runtime. Südwestdeutscher Verlag für Hochschulschriften. ISBN: 978-3838131337.

Tomforde, S., Hurling, B., and Hähner, J. (2011). Distributed Network Protocol Parameter Adaptation in Mobile Ad-Hoc Networks. In Informatics in Control, Automation and Robotics, volume 89 of LNEE, pages 91–104. Springer.

Weiser, M. (1991). The computer for the 21st century. Scientific American, 265(3):66–75.

ICINCO 2013 - 10th International Conference on Informatics in Control, Automation and Robotics