Automatic Synthesis of Data Cleansing Activities

Mario Mezzanzanica, Roberto Boselli, Mirko Cesarini and Fabio Mercorio

Department of Statistics and Quantitative Methods - C.R.I.S.P. Research Centre, University of Milan-Bicocca, Milan, Italy

Keywords:

Data Quality, Data Management, Cleansing Algorithms, Model-based Reasoning.

Abstract:

Data cleansing is growing in importance among both public and private organisations, mainly due to the rel-

evant amount of data exploited for supporting decision making processes. This paper is aimed to show how

model-based verification algorithms (namely, model checking) can contribute in addressing data cleansing

issues, furthermore a new benchmark problem focusing on the labour market dynamic is introduced. The

consistent evolution of the data is checked using a model defined on the basis of domain knowledge. Then,

we formally introduce the concept of universal cleanser, i.e. an object which summarises the set of all cleans-

ing actions for each feasible data inconsistency (according to a given consistency model), then providing an

algorithm which synthesises it. The universal cleanser can be seen as a repository of corrective interventions

useful to develop cleansing routines. We applied our approach to a dataset derived from the Italian labour

market data, making the whole dataset and outcomes publicly available to the community, so that the results

we present can be shared and compared with other techniques.

1 INTRODUCTION

In the last two decades, the diffusion of Informative

Systems has increased at an explosive rate, contribut-

ing to the definition and realisation of many IT ser-

vices, also in the public sector. As a consequence, the

amount of data that organisations are now handling

is growing apace. Such data can contribute to anal-

yse, observe and explain social, economic and busi-

ness phenomena, as well as to assess decision mak-

ing activities, e.g. the evaluation of active policies,

resource allocation, service design and improvement.

However, it is well known that the quality of the data

is frequently very low (Fayyad et al., 2003) and, due

to the “garbage in, garbage out” principle, dirty data

strongly affect the information derived from them.

Hence, data cleansing is a mandatory step before us-

ing data for decision making purposes.

Data quality and cleansing issues have been ad-

dressed in many fields of the literature, by dealing

with several quality dimensions, see (Batini and Scan-

napieco, 2006). Here we focus on consistency, which

takes into account the violation of semantic rules de-

fined over a set of data items. This work concentrates

on information about a given subject, object or phe-

nomena, observed at multiple sampled time points:

the result is a longitudinal dataset, also known as

panel data, see (Singer and Willett, 2003; Bartolucci

et al., 2012) for details, which allows one to study

how data change along the time.

To this regard, let us consider the dataset showed

in Tab. 1 modelling a cruise ship travel plan, as pre-

sented by (Mezzanzanica et al., 2012). The ship trav-

els by sea and stops at the port of calls (intermediate

destinations). The harbour regulations require a noti-

fication prior to entry into port. In this scenario, we

suppose that a ship is required to perform a checkin

notification when entering a harbour and a checkout

when exiting. Looking at Tab. 1, one can note that the

departure date from Lisbon is missing, since a check-

out from Lisbon should be done prior to entering in

Barcelona. In this sense, the events sequence mod-

elling the travel of the ship S01 can be considered as

inconsistent.

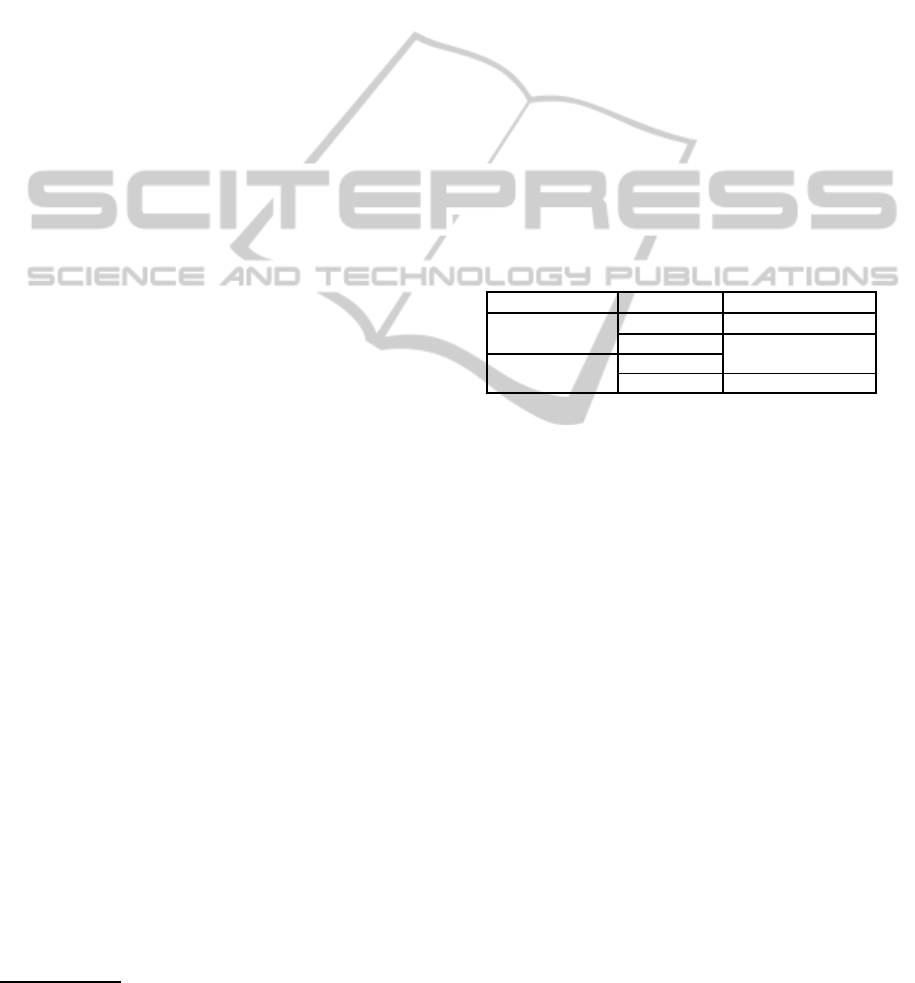

Table 1: Travel Plan of a Cruise Ship.

ShipID City Date Event Type

S01 Venice 12

th

April 2011 checkin

S01 Venice 15

th

April 2011 checkout

S01 Lisbon 30

th

April 2011 checkin

S01 Barcelona 5

th

May 2011 checkin

S01 Barcelona 8

th

May 2011 checkout

... ... ... ...

One can argue that ships are usually moored in

the harbour for 3 days, hence a cleansing activity

could set the missing departure date from Lisbon on

the 3

rd

May. Unfortunately, there is no certainty of

having guessed the real value, and having a consis-

138

Mezzanzanica M., Boselli R., Cesarini M. and Mercorio F..

Automatic Synthesis of Data Cleansing Activities.

DOI: 10.5220/0004491101380149

In Proceedings of the 2nd International Conference on Data Technologies and Applications (DATA-2013), pages 138-149

ISBN: 978-989-8565-67-9

Copyright

c

2013 SCITEPRESS (Science and Technology Publications, Lda.)

tent dataset is required to obtain effective statistics

(e.g., missing dates may have unpredictable effects

when computing an indicator like active travel days/

overall cruise duration).

The aims of this work are twofold: (1) we describe

how a model-based reasoning (i.e., model checking)

can be used to describe and verify the consistent evo-

lution of the data along the time. Then, we show how

such model can be exploited to synthesise a Univer-

sal Cleanser: an object summarising the set of all

feasible cleansing actions for each feasible data in-

consistency; (2) We present a real-world problem in

the labour market context, providing both the source

datasets and the results publicly available to the com-

munity, so that the data can be shared and compared

with other studies.

2 RELATED WORK

Data quality and cleansing problems have widely

been addressed, a lot of works cross the boundaries of

different research fields, consequently it is not an easy

task framing them into a holistic classification. Fur-

thermore, there is no commonly agreed formal defini-

tion of data cleansing (Maletic and Marcus, 2010).

To the best of our knowledge not any other works

deal with database consistency issues related to (ar-

bitrarily long) sequences. Several existing works fo-

cus on constraint among attribute sets (single tuple

scope), others concentrate on entity resolution prob-

lems that requires a pairwise comparison (two tuples

scope). Furthermore, the finite state system approach

proposed in this paper can effectively capture the con-

sistency semantic of several historical or longitudinal

data.

In the data quality domain accessing the real data

is rarely feasible due to economic or practical con-

straints, indeed the cleansing activities can be per-

formed only relying on domain knowledge. For the

same reason this paper focuses on the consistency di-

mension, while dimensions that require access to the

real data (like accuracy) are not considered.

In this section we focused on works that identifies

and fix inconsistencies using domain knowledge. We

can distinguish among the following paradigms:

Rules based Error Detection and Correction al-

low users to specify rules and transformation needed

to clean the data, a survey can be found in (Maletic

and Marcus, 2010). Specifying rules can be a very

complex and time consuming task. Furthermore, both

bug fixing and maintenance along the time require a

non negligible effort.

Several approaches focus on integrity constraints

to identify errors, however they cannot address com-

plex errors or several inconsistencies commonly

found in real data (Fan, 2008; Maletic and Marcus,

2000). Other constraint types have been identified

in the literature: multivalued dependencies, embed-

ded multivalued dependencies, and conditional func-

tional dependencies. Nevertheless, according to Vardi

in (Vardi, 1987) there are still semantic constraints

that cannot be described by the latter. E.g., the con-

sistency model described in Sec. 6.

Machine Learning Methods can be used for er-

ror localisation and correction. These approaches ex-

ploit learning algorithms. After the training an al-

gorithm can be used to identify errors and inconsis-

tencies. Possible techniques and approaches are: un-

supervised learning, statistical methods, data profil-

ing, range and threshold checking, pattern recogni-

tion, clustering methodologies(Mayfield et al., 2009).

The training phase requires a satisfactory dataset to

be identified, however a clean dataset that can be used

as a reference is rarely available in the data quality

field. Therefore, human feedbacks are required to im-

prove the machine learning performances. Since the

underlying model built during the learning phase can-

not be easily accessed and interpreted by domain ex-

perts (e.g., an impact evaluation of the cleansing ac-

tivities can be hardly done), in this paper we explore a

different approach where the consistency models are

explicitly stated and verified.

Record Linkage (known as object identification,

record matching, merge-purge problem) aims to bring

together correspondingrecords from two or more data

sources or finding duplicates within the same one.

The record linkage problem falls outside the scope

of this paper, therefore it is not further investigated.

A survey can be found in (Batini and Scannapieco,

2006; Elmagarmid et al., 2007; Maletic and Marcus,

2010).

Consistent Query Answering works, e.g.

(Bertossi, 2006), focus on techniques for finding out

consistent answers from inconsistent data, i.e. the

focus is on automatic query modifications and not

on fixing the source data. An answer is considered

consistent when it appears in every possible repair

of the original database. Semantic constraints are

expressed using functional dependencies. The

functional dependencies works at the attribute level,

therefore they are not well suited to manage con-

sistency issues specific of longitudinal or historical

data. Furthermore, already with two Functional

Dependencies the problem of computing Consistent

Query Answers involving aggregate queries becomes

NP-complete (Bertossi, 2006). In (Bertossi et al.,

2011) an approach similar to consistent query

AutomaticSynthesisofDataCleansingActivities

139

answering exploits “matching dependencie”s and

“matching functions” instead of functional depen-

dencies. Matching dependencies were introduced as

declarative rules for data cleaning and entity resolu-

tion. Enforcing a matching dependency on a database

instance identifies the values of some attributes for

two tuples, provided that the values of some other

attributes are sufficiently similar (Bertossi et al.,

2011). Matching functions implement the semantic

through which different tuples referring to the same

entity are made equal. The latter work focuses on

data cleansing where mostly record linkage and

entity resolution problems are to be addressed. Such

problems are not considered in this paper. It is worth

to note that the partial order of semantic domination

among (cleansed) instances described in (Bertossi

et al., 2011), although conceived for a different

scenario, can contribute to the process of selecting a

correction among a set of several ones i.e., the policy

making task briefly introduced in Sec. 5. Due to lack

of space, the policy selection process is not further

investigated in this paper.

Other works in the field of automata and for-

mal verification theory are now shortly referenced.

The application of automata theory for inference pur-

poses was deeply investigated in (Vardi, 1992) in

the database domain. The problem of checking (and

repairing) several integrity constraint types has been

analysed in (Afrati and Kolaitis, 2009). Unfortu-

nately most of the approaches adopted can lead to

hard computational problems. Formal verification

techniques were applied to databases, to formally

prove the termination of triggers (Choi et al., 2006),

for semistructured data retrieval (Neven, 2002), and

to solve queries on semistructured data (Dovier and

Quintarelli, 2009).

Finally, many data cleansing toolkits have been

proposed for implementing, filtering, and transform-

ing rules over data. A detailed survey of those tools is

outside the scope of the paper. The interested reader

can refer to (Maletic and Marcus, 2010).

3 BACKGROUNDS

Model checking (see e.g., (Clarke et al., 1999)) is

a hardware/software verification technique to verify

the correctness of a given system. The system is de-

scribed in terms of state variables, whose evaluation

determines a state, and transition relations between

states, which specify how the system can move from

a state to the next one as a consequence of a given in-

put action. Focusing on explicit model checking tech-

niques, a model checker verifies whether a state tran-

sition system always satisfies a property by perform-

ing an exhaustive search in the system state-space

(i.e., the set of all the feasible system states).

The system is typically modelled as a Finite State

System, which can be formally defined as follows.

Definition 1 (Finite State System). A Finite State

System (FSS) S is a 4-tuple (S,I,A,F), where: S is a

finite set of states, I ⊆ S is a finite set of initial states,

A is a finite set of actions and F : S × A → S is the

transition function, i.e. F(s,a) = s

′

iff the system from

state s can reach state s

′

via action a.

Hence, a trajectory is a sequence of state, ac-

tion π = s

0

a

0

s

1

a

1

s

2

a

2

.. .s

n−1

a

n−1

s

n

such that ∀i ∈

[0,n],∀ j ∈ [0,n − 1], s

i

∈ S is a state, a

j

∈ A is an

action, and F(s

i

,a

i

) = s

i+1

.

Let S be an FSS according to Def. 1 and let ϕ be

an invariant condition specifying some properties to

be satisfied e.g., some consistency properties. Let a

state s

E

∈ E be an error state if the invariant formula

ϕ is not satisfied. Then, the set of error states E ⊆ S is

defined as the union of the states violating ϕ. We limit

the error exploration to at most T actions (the finite

horizon), i.e. only sequences reaching an error s

E

∈

E within the finite horizon are detected. Note that

this restriction has a limited practical impact in our

contexts although being theoretically quite relevant.

Informally speaking, a model checking problem is

composed by a description of the FSS to be explored

(by means of a model checker tool language), an in-

variant to verify and a finite horizon. A feasible solu-

tion, or error trace (if any) is a trajectory leading the

system from an initial state to an error one. Generally

speaking, a model checker is usually applied to verify

the correctness of a system model. In our context, we

use a model checker (i) to verify the data consistency

(i.e., if the data are conform to the model); (ii) to syn-

thesise a set of corrective actions (i.e., all the feasible

corrections activities to cleanse the data).

4 DATA CONSISTENCY VIA FSS

Finite State Systems are used to model event-driven

systems where the events are mapped to the actions of

Def. 1. A bridge between databases (containing lon-

gitudinal data) and event-driven system is required to

perform data quality verification using model check-

ing techniques. This connection can be done by por-

traying a database record as an event, i.e. a record

content or a subset thereof is interpreted as the de-

scription of an external world event modifying the

system state, and an ordered set of records as an event

sequence. To better clarify this concept, we formalise

the following.

DATA2013-2ndInternationalConferenceonDataManagementTechnologiesandApplications

140

Definition 2 (Event, Event Sequence, and Finite State

Event Dataset). Let R = (R

1

,. .. ,R

n

) be a schema re-

lation of a database, let e = (r

1

,. ..,r

m

) be an event

where r

1

∈ R

1

,. .. ,r

n

∈ R

n

, then e is a record of the

projection (R

1

,. .. ,R

m

) over R with m ≤ n.

A total order relation ∼ on events can be defined

such that e

1

∼ e

2

∼ . .. ∼ e

n

. An event sequence is

a ∼-ordered sequence of events ε = e

1

,. .. ,e

n

. A Fi-

nite State Event Dataset (FSED) is an event sequence

derived from a longitudinal dataset.

Intuitively, the application of model checking

techniques to data quality problems is driven by the

idea that a model describing the consistent evolution

of feasible event sequences (i.e., a consistency model

expressed by means of FSSs) can be used to verify if

the actual data (i.e., data retrieved from the database)

follow a consistent behaviour. Then, the problem of

verifying a database content consistency can be ex-

pressed as a model checking problem on FSSs: a, so-

lution for the latter (if any) will represent an inconsis-

tent sequence of tuples for the former. Hence, from

here on, we will refer without distinction to an action

as an event and vice versa.

Although a whole database content could be

checked by a single FSS, in several domains it is ad-

visable to split the data into different subsets (e.g., for

computational reason). Then, the subsets (each being

a separate FSED) can be checked separately. To this

aim we introduce the following:

Definition 3 (Finite State Event Database). Let S

i

be a FSED, we define a Finite State Event Database

(FSEDB) as a database DB whose content is DB =

S

k

i=1

S

i

where k ≥ 1.

It should be clear that performing a model-based

data consistency evaluation requires a twofold effort:

(1) to define a consistency model of the data evo-

lution, and (2) to verify the data source (e.g., the

FSEDB introduced before) against the consistency

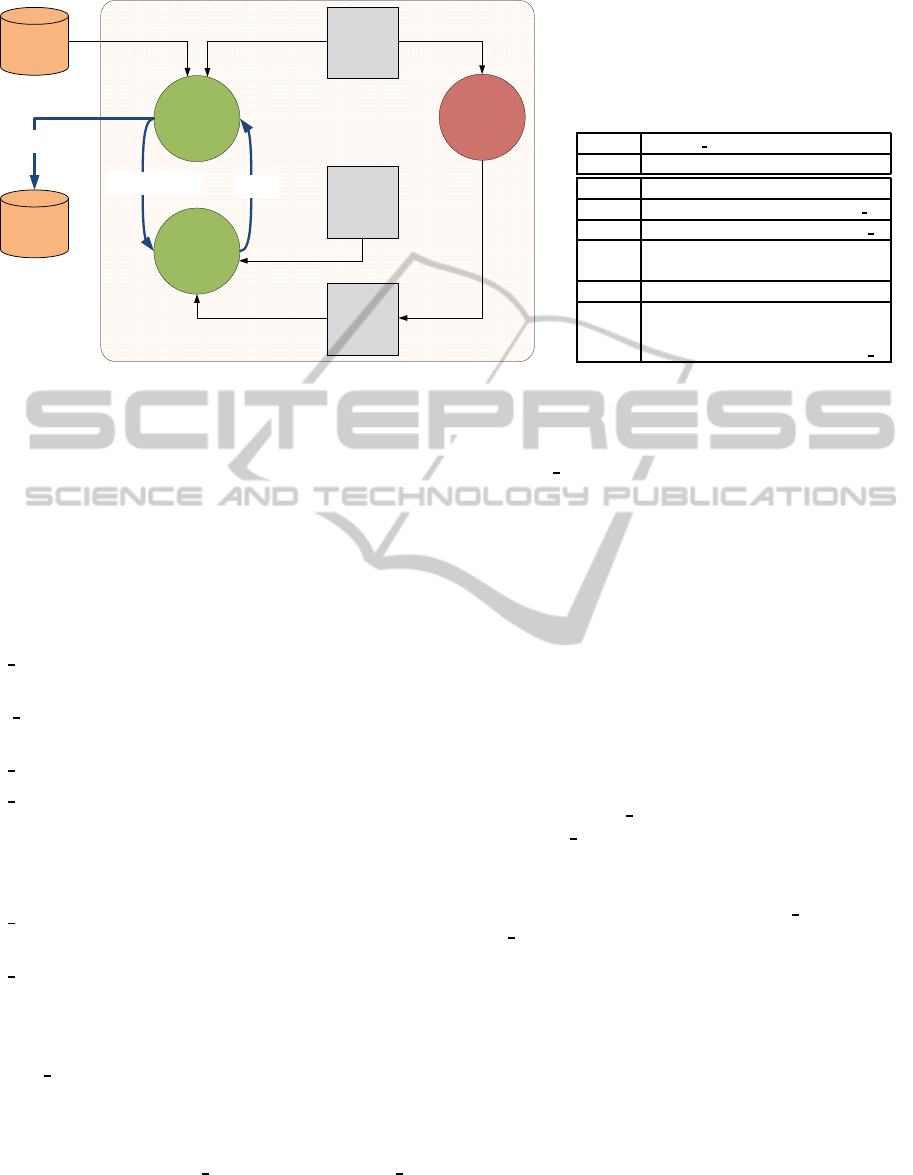

model. A schematic representation on how this task

can be accomplished by using a model checker is de-

picted in Fig. 1(b). We can distinguish three different

phases:

Step 1 (Data Modelling). A domain expert defines

the consistency model (e.g., Fig. 1(a)) describing

the correct evolution of the data through the model

checking tool language;

Step 2 (Data Verification). A dataset S

i

is retrieved

from the data source (S). The model checker auto-

matically generates an FSS representing the evo-

lution of the model defined by S

i

.

Step 3 (Data Analysis). The model checker looks

for an error trace on the FSS. A solution (if any)

represents an inconsistency affecting the dataset

on the sea

pos=sea

start

in the harbour

pos=harbour

city=CityX

EType

i

= “checkin

′′

∧City

i

= “CityX

′′

EType

i

= “checkout“∧City

i

=

′′

CityX“

(a)

Consistency

Model

Model

Checker

DBMS

S

S

+

i

S

−

i

let an event seq.

let a FSED S

i

S

i

is inconsistent

S

i

is consistent

(b)

Figure 1: (a) A Graphical representation of the consistency

model of the Travel Plan of a Cruise Ship domain. The

lower part of a node describes how the system state evolves

when an event happens. (b) A Graphical representation of

a model checking based data consistency verification of a

FSEDB.

S

i

. Otherwise the event sequence is considered

consistent.

The Cruise Ship Example. The following example

should clarify the matter. Let us consider the Cruise

Ship example as introduced in Tab. 1.

An FSED is the travel plan of a ship, the set of the

travel plans of the different ships is the FSEDB. An

event e

i

is composed by the attributes ShipID, City,

Date, and Event Type, namely e

i

= (ShipID

i

, City

i

,

Date

i

, EType

i

). Moreover, the total-order operator

∼ could be the binary operator ≤ defined over the

event’s attribute Date, hence ∀e

i

,e

j

∈ E, e

i

≤ e

j

iff

Date

e

i

≤ Date

e

j

. Finally, a simply consistency prop-

erty could be “if a ship checks in to harbour A, then

it will check out from A before checking in to the next

harbour“.

We can model this consistency property through

an FSS. A graphical representation is given in

Fig. 1(a), where the lower part of a node is used to

describe how the system state evolves when an event

happens. In our settings, the system state is composed

by (1) the variable pos, which describes the ship’s

position, and (2) the variable city describing the city

where the ship has arrived.

The data source S is an actual database instance

(e.g., an actual FSEDB) to be verified against the con-

sistency model. In such a case, for each different S

i

(i.e., for each different FSED) the model checker gen-

erates a different FSS modelling the S

i

consistency

evolution.

AutomaticSynthesisofDataCleansingActivities

141

4.1 From actual data to symbolic data

Unfortunately, since the consistency verification is

strongly related to the actual data (i.e., the FSS ex-

panded by the model checker models the evolution of

the database data), the identification of “generic” in-

consistent patterns or properties is hard to be accom-

plished.

To this aim, we use an abstraction of the actual

data, namely the symbolic data

1

, to discover generic

inconsistency patterns as well as to identify common

data properties. The following example should clarify

the concept.

The Cruise Ship Example. Let us consider again

the Cruise Ship example of Tab. 1. We recall

that e

i

= (ShipID

i

, City

i

, Date

i

, EType

i

), e

i

is

is an event, and each sequence or subsequence

of events is ordered with respect to the date val-

ues. Let us consider two inconsistent event se-

quences, related to two different ships, respec-

tively S

1

= (checkin,Venice),(checkout,Barcelona)

and S

2

= (checkin,Lisbon),(checkout,Naples). For

the sake of simplicity, we focus on very short se-

quences. As described before, these event sequences

will result in the generation of different FSSs. Nev-

ertheless, the inconsistencies found share a common

characteristic: the checkout has been made in a har-

bour different from the one where the last check-in

took place.

We replace the actual city domain data D

city

=

{Venice, Barcelona, Lisbon, Naples, ...} with a sym-

bolic domain composed by a (small) set of symbols

to identify some common inconsistency patterns in

the previous example. In other words, we can make

an abstraction of the domain D

city

by using only two

symbols, namely D

symbolic

City

= {City

X

,City

Y

}. Once a

map between actual to symbolic data has been done,

we can model the domain as shown in Tab. 2.

The number of symbols to be used, i.e. the sym-

bolic set cardinality has to be chosen according to the

criteria described below. More formally, we define

the following.

Definition 4 (Symbolic Data and Symbolic Do-

main). Let s be an FSS state and e be an

event with respectively s = x

1

,. ..,x

n

state vari-

ables and e = (r

1

,. .. ,r

m

) event attributes. Let D

be a finite (although very large) attribute domain

where {x

1

,. .. ,x

n

′

} ⊆ {x

1

,. .. ,x

n

} and {r

1

,. .. ,r

m

′

} ⊆

{r

1

,. .. ,r

m

} are instances of D, i.e., {x

1

,. .. ,x

n

′

} ∈ D

and {r

1

,. .. ,r

m

′

} ∈ D.

1

the idea is not new and it is inspired by the abstract

interpretation technique (Clarke et al., 1994).

An event e happening in the state s requires the

evaluation of x

1

,. ..,x

n

′

and r

1

,. .. ,r

m

′

values, namely

a configuration of n

′

+ m

′

different values of D. Then,

we define the Symbolic Domain of D as a set of dif-

ferent symbols d

1

,. ..,d

n

′

+m

′

, called Symbolic Data,

required to represent the values of D in the consis-

tency model, i.e. D

symbolic

= {d

1

,. .. ,d

n

′

+m

′

}.

In the Cruise Ship example the city state variable

and the City

i

event attribute both refer to the City

domain, therefore the latter can be replaced by the

symbolic domain D

symbolic

City

= {City

X

,City

Y

} in the au-

tomaton of Fig. 1(a). Finally, some trivial conditions

should be met before exploiting a Symbolic Domain

rather than the Actual Domain: (p1) no total order

relation is defined in the actual domain (or the total

order relation is not considered for the scope of the

analysis); (p2) No condition should compare a sym-

bol to a non-symbolic value (e.g. city = “Venice

′′

in

the Cruise Ship example).

Table 2: Values of the domain variables of the Cruise Ship

Example.

Variable Type Variable Domain Values

State Variables

Pos sea, harbour

City

City

x

, City

y

Event data

City

Event Type checkin, checkout

5 DATA CLEANSING VIA FSS

In the previous sections we described how the consis-

tency of a database event sequence can be modelled

and verified through model checking. Looking for-

ward, one can wonder if the consistency model can

be used as the basis to identify cleansing activities.

Namely, once the FSS describing the dataset consis-

tency evolution is generated, can the FSS be exploited

to identify the corrective events (or actions) able to

cleanse an inconsistent dataset?

Let us consider an inconsistent event sequence

having an action a

i

that leads to an inconsistent state

s

j

when applied on a (reachable) state s

i

. Intuitively,

a corrective action sequence represents an alternative

route leading the system from state s

i

to a state where

the action a

i

can be applied (without violating the

consistency rules). In other words, a cleansing ac-

tion sequence (if any) is a sequence of actions that,

starting from s

i

, makes the system able to reach a new

state on which the action a

i

can be applied resulting

in a consistent state. In this paper we assume that cor-

rections cannot delete or modify existing data as we

are intended to cleanse the data by preserving as much

as possible the source dataset.

DATA2013-2ndInternationalConferenceonDataManagementTechnologiesandApplications

142

More formally we can define the following.

Definition 5 (Cleansing Action Sequence). Let S =

(S,I,A,F) be an FSS, E be the set of errors states

(i.e. inconsistent states) and T be the finite horizon.

Moreover,

• let Ω =

[

i

i

∈I

Reach(i

i

) be the set of all the states

reachable from the initial ones;

• let π = s

0

a

0

.. . s

i

a

i

s

j

be an inconsistent trajec-

tory, that is a trajectory where s

j

∈ Ω is an incon-

sistent state (i.e., s

j

∈ E) and s

0

,. .. ,s

i

/∈ E.

Then, a T-cleansing action sequence for the pair

(s

i

,a

i

) is a non-empty sequence of actions A

c

=

c

0

,. .. ,c

n

∈ A, such that exists a trajectory π

c

= s

0

a

0

.. . s

i−1

a

i−1

s

i

c

0

s

i+1

.. . s

i+n

c

n

s

k

a

i

on S with |A

c

| ≤ T,

where all the states s

0

,. .. ,s

k

are consistent.

In the AI Planning field a Universal Plan (Schop-

pers, 1987) is a set of policies, computed off-line,

able to bring the system to the goal from any feasible

state (the reader can see (Cimatti et al., 1998; Della

Penna et al., 2012) for details). Similarly, we are in-

terested in the synthesis of an object, we call Univer-

sal Cleanser (UC), which summarises for each pair

(state, action) leading to an inconsistent state, the set

A

′

of all the feasible cleansing action sequences. This

UC is computed only once and then applied as an or-

acle to cleanse any kind of FSEDB. In this sense, a

(state, action) pair uniquely represents an error-code.

To this aim, we proceed as follows:

Step 1 (Data Modelling). A consistency model of

the system is formalised by means of a model

checking language as described in Sec. 4.

Step 2 (Database Modelling). A worst-case

FSEDB will be defined, i.e. a fictitious database

which contains all the possible event sequences,

both the consistent and the inconsistent ones,

composed by at most T events for each. Note

that this step does not require to really generate

such database, indeed it can be easily accom-

plished by allowing the model to receive any

kind of events. For the cruise ship example

a worst-case FSEDB is represented by all the

possible event sequences e

1

,. .. ,e

T

where the

variable values range in City

i

= {City

X

,City

Y

}

and EType

i

= {checkin,checkout}. Note that the

value of the finite horizon T can be identified as

the FSS diameter

2

.

2

Due to the limited space we provide only the intuition

about how this task can be accomplished. The value is com-

puted by the model checker as the diameter of the FSS, i.e.

the largest number of states which must be visited in or-

der to travel from one state to another excluding trajectories

which backtracks or loops.

Step 3 (Data Verification). Use the model checker

to generate the FSS representing all the inconsis-

tent sequences, starting from the database domain

model (step 2) and the consistency model (step

1), the whole process is shown in Fig. 1(b) as de-

scribed in Sec. 4

Step 4 (UC Synthesis). Explore the FSS to synthe-

sise the Universal Cleanser.

Now we are in state to formalise the Universal

Cleansing Problem (UCP) and its solution.

Definition 6 (Universal Cleansing Problem and So-

lution). A Universal Cleansing Problem (UCP) is a

triple D = {S ,E,T} where S = (S,I,A,F) is an FSS,

E be the set of error (or inconsistent) states computed

by the model checker, and T is the finite horizon.

A solution for D, or a Universal Cleanser for D is

a map K from the set Ω×A to a subset A

′

of the power

set of A, namely A

′

⊆ 2

A

, where for each inconsistent

trajectory π = s

0

a

0

.. . s

i

a

i

s

j

if A

′

6=

/

0 then A

′

must

contain all the possible T-cleansing action sequences

for the pair (s

i

,a

i

).

It is worth to highlight that, while on the one

hand the UC generated is domain-dependent, i.e. it

can deal only with event sequences conforming to

the model that generated it, on the other hand it is

data-independent since, once the UC is computed on

a worst-case FSEDB, it can be used to cleanse any

FSEDB. The pseudo code of the algorithm generat-

ing a Universal Cleanser is given in Algorithms 1 and

2. It has been implemented on the top of the UPMur-

phi tool (Della Penna et al., 2009) which has been en-

hanced with a disk-based algorithm to deal also with

big state spaces (Mercorio, 2013). The Algorithm 1

takes as input the FSS specification of the domain,

the set of error states given by the model checker (to

identify inconsistent trajectories) and a finite horizon

T. Then, it looks for a cleansing action sequence (ac-

cording to Def. 5) for each inconsistent (state, action)

pair. This work is recursively accomplished by the

Algorithm 2 which explores the FSS through a Depth-

First visit collecting and returning all the cleansing

solutions.

Running Example. Consider again the Cruise Ship

example of Tab. 1. We recall that an event e

i

is e

i

=

(ShipID

i

, City

i

, Date

i

, EType

i

) and each event se-

quence and subsequence is ordered with respect to the

event dates. It is worth to note that the finite horizon

T = 2 is enough to guarantee that any kind of incon-

sistency will be generated and then corrected using no

more than 2 actions. Consider that the main elements

of an event are EType

i

∈ {checkin,checkout},City

i

∈

AutomaticSynthesisofDataCleansingActivities

143

Table 3: 2-steps Universal Cleanser for the Cruise Ship Example.

([state],(action)) Corrective Actions

([pos = sea], (checkout,City

X

)) (checkin,City

X

)

([pos = harbour∧ city = City

X

], (checkout,City

Y

))

(checkout,City

X

)

(checkin,City

Y

)

([pos = harbour∧ city = City

X

], (checkin,City

Y

)) (checkout,City

X

)

([pos = harbour∧ city = City

X

], (checkin,City

X

)) (checkout,City

X

)

{City

X

,City

Y

}, i.e., 4 possible events. Then, we rep-

resent the wost-case FSEDB by considering into our

model all the possible 2-step event subsequences (i.e.,

simply enrich each node of the graph in Fig. 1(a) with

all the possible edges). Table 3 shows the Univer-

sal Cleansing for our example, which is minimal with

respect to the number of event variable assignments,

i.e., the missing pair ([pos = sea], (checkout,City

Y

))

fits on ([pos = sea], (checkout,City

X

)). The UC, once

generated, is able to cleanse any kind of FSEDB com-

pliant with the model from which it has been gener-

ated.

Algorithm 1: UNIVERSALCLEANSING.

Input: FSS S ,

set of error states E,

finite horizon T

Output: Universal Cleanser K

1: level ← 0; //to stop when T is reached

2: for all s ∈ S,a ∈ A s.t. F(s,a) = s

err

do

3: K [s,a] ← AUXUC(s,a,level)

4: return K

Algorithm 2: AUXUC.

Input: a state s,

an action a,

a finite horizon level

Output: list of correction sequences cs[]

1: cs[] ←

/

0 //list of correction sequences

2: cs

aux

[] ←

/

0 //aux list of correction sequences

3: i ← 0 //local cs[] index

4: if level < T then

5: for all a

′

∈ A s.t. F(s,a

′

) = s

′

with s

′

/∈ E do

6: if F(s

′

,a) = s

′′

s.t. s

′′

/∈ E then

7: cs[i] ← a

′

8: i ← i+ 1

9: else

10: cs

aux

[] ← AUXUC(s

′

,a,level + 1)

11: for all seq ∈ cs

aux

do

12: cs[i] ← a

′

∪ seq

13: i ← i+ 1

14: return cs[]

The Fig. 2(a) describes the overall cleansing pro-

cess. As a first step, a consistency model of the do-

main is defined while the Universal Cleanser is au-

tomatically synthesised according to the procedures

presented in Sec. 4. Then, the “Consistency Verifica-

tion” task verifies each sequence of the source (and

dirty) database S. When an inconsistency is found the

“Cleanse the Inconsistency” task scans the UC look-

ing for a correction. Since the UC may provide more

than one corrective actions for the same inconsis-

tency, a criterion (i.e., a policy) is required to select a

suitable correction. Once a correction has been prop-

erly identified, the inconsistency is fixed and the new

sequence is verified iteratively until no further incon-

sistencies are found. Finally, the new cleansed events

sequence is stored into the database “S Cleansed”.

It is worth noting that the cleansed results may

vary as the policy varies (i.e., the cleansed database

depends upon the input set of policies) which can be

fixed as well as evolve during the cleansing phase,

e.g., by using learning algorithms. Clearly, the best

suited policy for a given domain can be selected ac-

cording to several criteria, which often is driven by

the data analysis purposes. As an example, one could

be interested in studying the variation of an indicator

value computed on the cleansed data. To this aim, a

policy able to maximise or minimise the value of such

indicator should be applied, see e.g. (Mezzanzanica

et al., 2012). A discussion on how select a suitable set

of policies falls out of the scope of this work. Never-

theless, once the UC has been synthesised any kind of

policy can be applied.

6 AN OPEN DATA BENCHMARK

PROBLEM

The domain we are presenting is freely inspired by

the Italian labour market domain. Indeed, since the

1997, the Italian public administration has been devel-

oping an ICT infrastructure, called the “CO System”,

for recording data concerning employment and active

labour market policies, generating an administrative

archive useful for studying the labour market dynam-

ics, e.g., (Martini and Mezzanzanica, 2009). Accord-

ing to the Italian labour market laws, every time an

employer hires or dismisses an employee, or an em-

ployment contract is modified (e.g. from part-time

to full-time, or from fixed-term to unlimited-term), a

DATA2013-2ndInternationalConferenceonDataManagementTechnologiesandApplications

144

Cleanse the

Inconsistency

Cleansing

Feedback

Consistency

Model

Consistency

Verification

UC

Generation

S

Dirty

Universal

Cleanser

Cleansing

Policy

S

Cleansed

Sequence

consistent

Inconsistency found

on the sequence

(a)

State emp k[FT,Limited,CompanyX]

Action (st,PT,Limited,CompanyY)

Seq. 1 (cs,FT,Limited,CompanyX) → unemp

Seq. 2 (cn,PT,Limited,CompanyX) → emp i

Seq. 3 (cn,PT,Unlimited,CompanyX) → emp i

Seq. 4

(cn,FT,Unlimited,CompanyX)

(cs,FT,Unlimited,CompanyX) → unemp

... ...

Seq. 9 (cn,FT,Unlimited,CompanyX)

(cn,FT,Limited,CompanyX)

(cn,PT,Unlimited,CompanyX) → emp i

(b)

Figure 2: (a) A graphical representation of the Consistency Verification and Cleansing Processes. (b) Some corrective action

sequences given by the UC for the error-code 289 with st = start, cs = cessation, cn = conversion and ex = extension.

communication (i.e., an event) is sent to a job reg-

istry, managed at local level. These communications

are called Mandatory Communications.

6.1 Domain Modelling

Each mandatory communication is stored into a

record composed by the following attributes:

e

id: it represents an id identifying the communica-

tion;

w

id: it represents an id identifying the person in-

volved in the event;

e

date: it is the event occurrence date;

e

type: it describes the type of events occurring to

the worker career. Events types are the start or

the cessation of a working contract, the extension

of a fixed-term contract, or the conversion from a

contract type to a different one;

c

flag: it states whether the event is related to a full-

time or a part-time contract;

c

type: describes the contract type with respect to the

Italian law. Here we consider Limited, i.e. fixed-

term, and unlimited, i.e. unlimited-term, con-

tracts.

empr

id: it uniquely identifies the employer in-

volved in the event.

The evolution of a consistent worker’s career

along the time is described by a sequence of events

ordered with respect to e date and grouped by w id:

the sequence can be considered as longitudinal data.

Considering the terminology introduced in Def. 1 and

Def. 2, an FSED is the ordered set of events for

a given w

id, and the FSEDs union composes the

FSEDB. Now we closely look to the worker careers

consistency, where the consistency semantics is de-

rived from the Italian labour law, from the domain

knowledge, and from the common practice. Here are

reported some constraints:

c1: an employee can have no more than one full-time

contract active at the same time;

c2: an employee cannot have more than K part-time

contracts (signed by different employers); in our

context we assume K = 2 i.e., an employee cannot

have more than two part time jobs active at the

same time;

c3: a contract extension cannot change the existing

contract type (c type) and the part-time/full-time

status (c flag) e.g., a part-time and fixed-term

contract cannot be turned into a full-time contract

by an extension;

c4: a conversion requires either the c

type or the

c flag to be changed (or both).

For simplicity, we omit to describe some trivial

constraints e.g., an employee cannot have a cessation

event for a company for which she/he does not work,

an event cannot be recorded twice, etc.

The UPMurphi tool allows us to build an FSS un-

pon which we perform the data consistency task. A

worker’s career at a given time point (i.e., the sys-

tem state) is composed by three elements: the list of

companies for which the worker has an active con-

tract (C[]), the list of modalities (part-time, full-time)

for each contract (M[]) and the list of contract types

(T[]).

To give an example, C[0] = 12, M[0] = PT, T[0] =

AutomaticSynthesisofDataCleansingActivities

145

unlimited models a worker having an active unlim-

ited part-time contract with company 12.

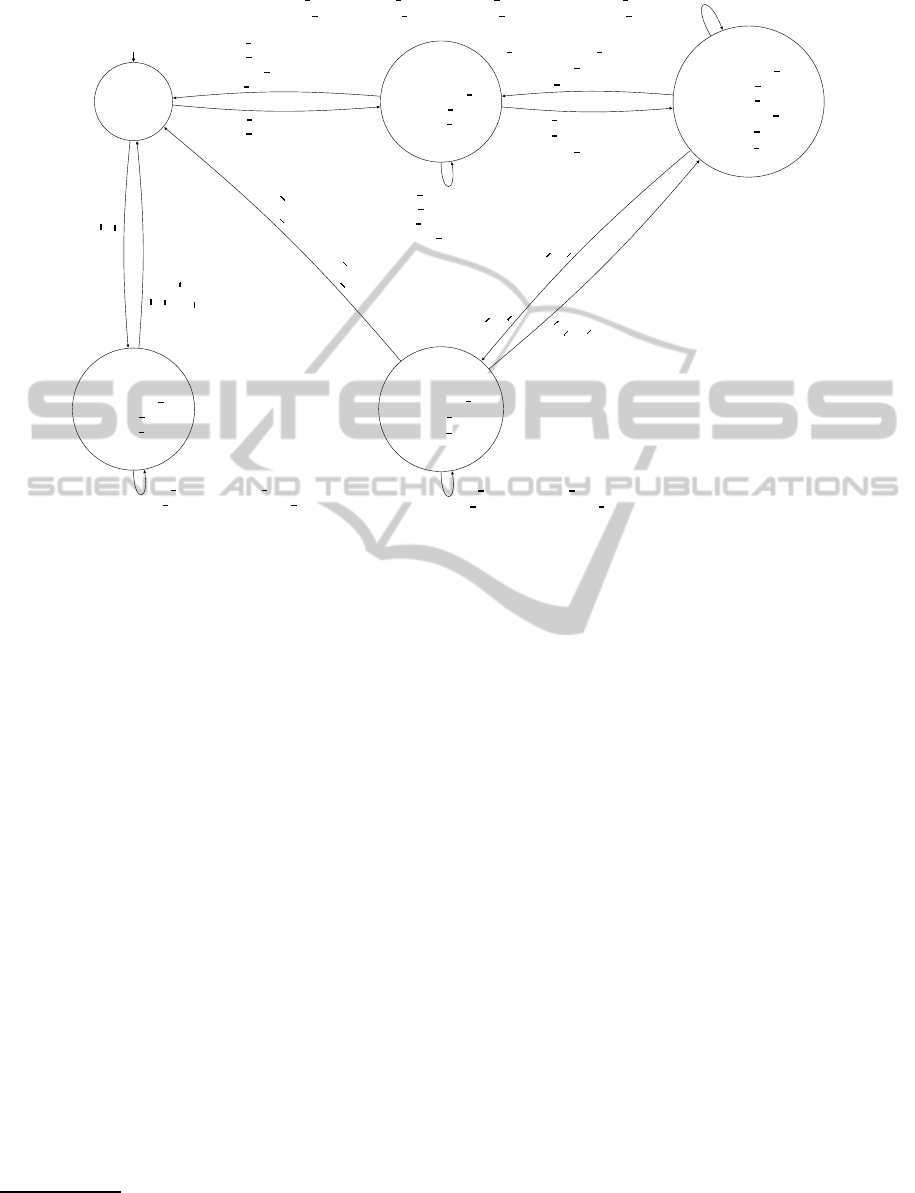

A graphical representation of the domain is

showed in Figure 3 and it outlines a consistent ca-

reer evolution. Note that, to improve the readabil-

ity, we omitted to represent conversion events as well

as inconsistent states/transitions (e.g., a worker ac-

tivating two full-time contracts), which are handled

by the FSS generated by the UPMurphi model. A

valid career can evolve signing a part-time contract

with company i, then activating a second part-time

contract with company j, then closing the second

part-time and then reactivating the latter again (i.e.,

unemp,emp

i

, emp

i, j

, emp

i

,emp

i, j

).

From Actual to Symbolic Data. A mapping from

actual to symbolic data has been identified as de-

scribed in Sec 4.1 taking into account both states and

events of the automaton of Fig. 3.

We recall that, for the sake of clarity the automa-

ton shows only the consistent transitions triggered by

the events allowed in a state, whilst the model checker

automatically manages also inconsistent transitions,

i.e. transitions triggered by events that lead to an “er-

ror state”.

The attributes c

type, e type, and c flag are al-

ready bounded and we left them as is, while the

empr

id attribute domain has been mapped on a sym-

bolic set of 3 symbols {empr

x

,empr

y

,empr

z

} accord-

ing to the process described in Sec. 4.1.

Finally, we highlight that the model satisfies the

conditions p1 and p2 introduces in the Sec. 4.1,

namely: (1) a total order relation for the empr id do-

main is defined but it is not considered in the automa-

ton, and (2) there are no conditions comparing a sym-

bolic value with a non symbolic one.

6.2 Experimental Results

Here we comment some results about the consistency

verification process performed on the dataset pre-

sented in Sec. 6.3. Note that, in order to analyse the

quality of the source dataset (wrt consistency), UP-

Murphi stops the verification algorithm when an in-

consistency is found, avoiding the evaluation of the

remaining part of the career. Indeed a further evalua-

tion of a career consistency may be affected by the

cleansing policy applied, then falsifying the results

about the quality of the source dataset.

As first step, we synthesised the UC, identify-

ing 342 different error-codes, i.e. all the possible

3-steps (state,action) pairs leading to an inconsistent

state of the model. Then, the verification process on

the dataset caught 92,598 inconsistent careers (i.e.,

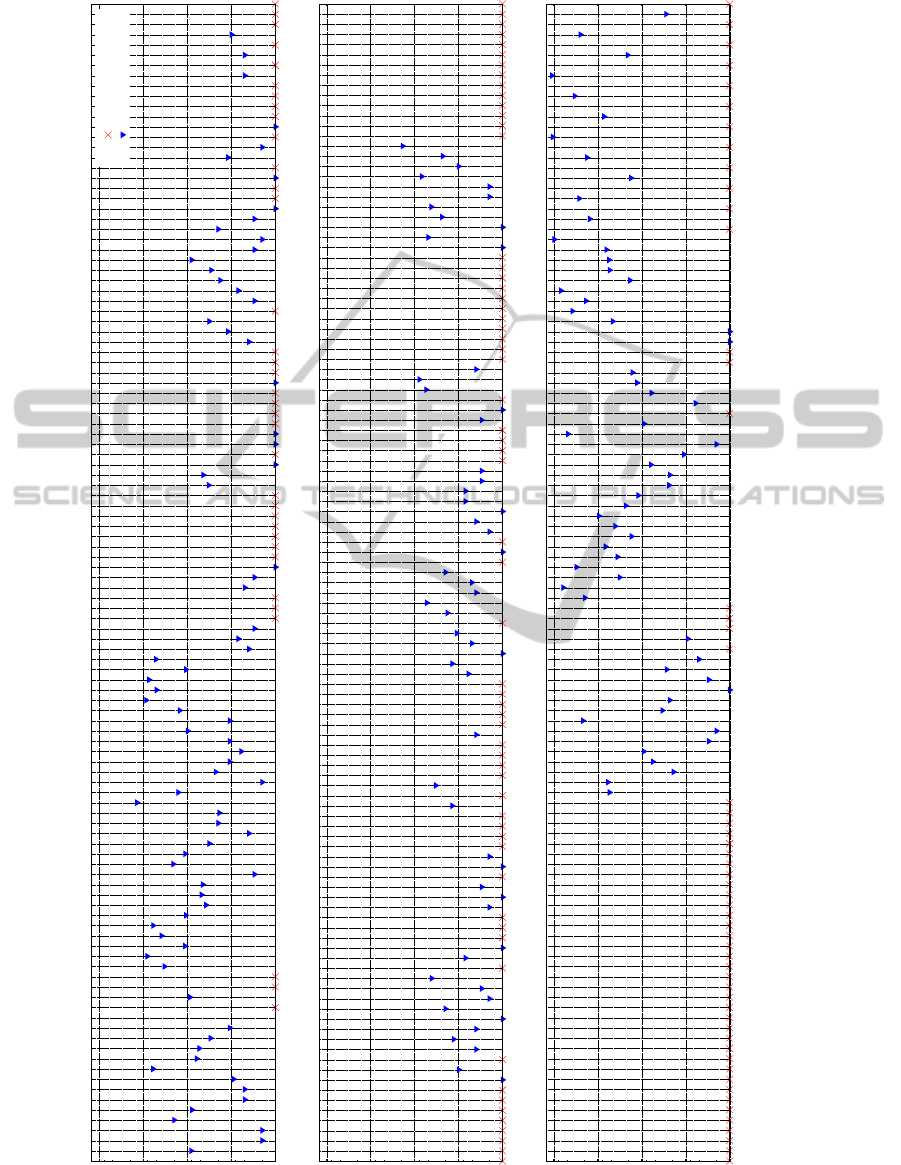

the 43% of total careers). The Fig. 4 shows a graph-

ical distribution of the error-codes found. The x-axis

reports the error-codes of the UC while the y-axis

summarises the number of careers affected by that er-

ror. Several analyses can be performed on such con-

sistency outcomes. Nevertheless, since the aim of

this work is to provide a technique and a benchmark

dataset so that other approaches, comparisons and sta-

tistical analysis can be performed on such data, we

restrict ourselves to consider the following.

• The closer the error-codes, the similar the error

characteristics. We discovered that the three most

numerous error codes (i.e., 335, 329 and 319 rep-

resenting about the 30% of total inconsistencies)

arose due to an extension, cessation or conversion

event received when the worker was in the unem-

ployed status. Hence, cleansing activities for such

careers may have a great impact on the quality of

the cleansed data.

• Some error-codes require no less than 3 correc-

tive actions to cleanse the data. As an exam-

ple we report the case of the error-code 53: A

worker having two active part-time contracts with

CompanyX and CompanyY receives the cessation

of a full-time contract for a third CompanyZ. In

such a case, a corrective action sequence requires

at least three actions to fix the inconsistencies,

i.e., to close the contract with CompanyX and

CompanyY and then to start a new full-time con-

tract with CompanyZ.

• The UC helps to discover cleansing activities that

might otherwise be neglected. To this regard, let

us consider the case of a worker having a full-

time contract with a CompanyX which receives a

start of a new part-time contract with CompanyY.

Looking at the model, a domain expert can ar-

gue that probably the worker has closed the full-

time contract, but the communication was lost. As

a consequence, a hand-written cleansing activity

may fix the inconsistency by closing the full-time

contract. Nevertheless, for such inconsistency

(i.e., the error-code 289) the UC returns 9 differ-

ent cleansing sequences, as shown in Tab. 2(b),

which can contribute in the identification of alter-

native cleansing policies.

It is worth noting that applying different correctiveac-

tions may lead to different cleansed states, as in the

latter example where fixing the inconsistency through

Seq.1 leads the worker career to the unemployed state

whilst the application of Seq.3 brings the career to

a different one (i.e., the emp

i). Hence, for a high-

quality cleansing process the joint utilisation of UC

and domain-dependent policies is required.

DATA2013-2ndInternationalConferenceonDataManagementTechnologiesandApplications

146

unemp

C[] =

/

0

M[] =

/

0

T[] =

/

0

startstart

emp

i

C[0] = empr

id

e

i

M[0] = c

flag

e

i

T[0] = c

type

e

i

emp

j

C[1] = empr

id

e

j

M[1] = c

flag

e

j

T[1] = c

type

e

j

emp

i, j

C[0] = empr

id

e

i

M[0] = c

flag

e

i

T[0] = c

type

e

i

C[1] = empr

id

e

j

M[1] = c

flag

e

j

T[1] = c

type

e

j

emp

k

C[0] = empr

id

e

k

M[0] = c

flag

e

k

T[0] = c

type

e

k

e type

e

i

= st

∧c

flag

e

i

= PT

e type

e

i

= cs

∧c

flag

e

i

= PT

∧empr

id

e

i

= C[0]

∧c

type

e

i

= T[0]

e type

e

j

= st∧

c

flag

e

j

= PT

∧empr

id

e

j

6= C[0]

e type

e

j

= cs∧ c

flag

e

j

= PT

∧empr

id

e

j

= C[1]

∧c

type

e

j

= T[1]

e

type

e

i

= st

∧c

flag

e

i

= PT

∧empr

id

e

i

6= C[1]

e

type

e

i

= cs∧ c

flag

e

i

= PT∧

empr

id

e

i

= C[0] ∧ c

type

e

i

= T[0]

e

type

e

j

= cs∧ c

flag

e

j

= PT

∧empr

id

e

j

= C[1] ∧ c

type

e

j

= T[1]

e

type

e

k

= st

∧c

flag

e

k

= FT

e

type

e

k

= cs

∧c

flag

e

k

= FT

∧empr

id

e

k

= C[0]

∧c

type

e

k

= T[0]

e type

e

i

= ex

∧c

flag

e

i

= PT

∧c

type

e

i

= T[0]

∧empr

id

e

i

= C[0]

(e type

e

j

= ex∧c

flag

e

j

= PT ∧ c

type

e

j

= T[1] ∧empr

id

e

j

= C[1])

∨(e

type

e

i

= ex∧c

flag

e

i

= PT ∧ c

type

e

i

= T[0] ∧empr

id

e

i

= C[0])

e type

e

j

= ex∧c flag

e

j

= PT

∧c

type

e

j

= T[0] ∧empr

id

e

i

= C[0]

e type

e

k

= ex∧c

flag

e

k

= FT

∧c

type

e

k

= T[0] ∧empr

id

e

k

= C[0]

Figure 3: A graphical representation of a valid worker’s career FSS where st = start, cs = cessation, cn = conversion and

ex = extension.

6.3 Online Dataset Description

The whole dataset and the experimental results

presented in Sec. 6.2 has been made publicly avail-

able for download

3

. The source archive contains

1,248,814 mandatory communications describing

the careers of 214,429 people observed starting from

the 1

st

January 2001 to the 31

st

December 2010. The

dataset is composed by the following tables:

The Worker Careers. It is a table composed by 7

columns, whose semantics has been detailed in

Sec. 6.1.

The Consistency Verification Results. It is a table

composed by three columns, namely the worker

id, the error code and the error index of the

event after the shortest consistent subsequence:

Considering a career composed by n events, an

error index i with 0 ≤ i < n means that i − 1

events make the career consistent whilst the i-th

event makes it inconsistent.

The Universal Cleanser. It has been generated ac-

3

http://goo.gl/zrbrR. The username is:

data2013materials@gmail.com Password: data2013

cording to Def. 6 on the consistency model of

Fig. 3.

7 CONCLUDING REMARKS

In this paper we have shown how a model-based

approach can be used to verify and cleanse a dirty

dataset, providing an algorithm (build on top of the

UPMurphi tool) to automatically synthesise a uni-

versal cleanser that, as a characteristic, is domain-

dependent (i.e., it copes with consistency issues

for a given domain) but data-independent (i.e., it

can cleanse any kind of dataset compliant with the

model).

Moreover, we presented a real-world scenario in

the labour market domain for which the universal

cleanser has been computed. As a further contri-

bution, an anonymous version of the dataset used

has been made available for download (according to

the current law and privacy requirements) together

with the cleanser and the consistency verification out-

comes. Our results confirm the usefulness of ex-

ploiting model-based verification and cleansing ap-

proaches in the data quality field, as it may help do-

main experts and decision makers to have a better

comprehension of the domain aspects, of the data pe-

AutomaticSynthesisofDataCleansingActivities

147

1

10

100

1000

10000

229

230

231

232

233

234

235

236

237

238

239

240

241

242

243

244

245

246

247

248

249

250

251

252

253

254

255

256

257

258

259

260

261

262

263

264

265

266

267

268

269

270

271

272

273

274

275

276

277

278

279

280

281

282

283

284

285

286

287

288

289

290

291

292

293

294

295

296

297

298

299

300

301

302

303

304

305

306

307

308

309

310

311

312

313

314

315

316

317

318

319

320

321

322

323

324

325

326

327

328

329

330

331

332

333

334

335

336

337

338

339

340

341

342

Cardinality (logarithmic scale)

Error Code

1

10

100

1000

10000

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

205

206

207

208

209

210

211

212

213

214

215

216

217

218

219

220

221

222

223

224

225

226

227

228

Cardinality (logarithmic scale)

1

10

100

1000

10000

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

Cardinality (logarithmic scale)

Consistency Verification - Results

Error Code present

Error Code not present

Figure 4: A graphical visualisation of the distribution of the error-codes found.

DATA2013-2ndInternationalConferenceonDataManagementTechnologiesandApplications

148

culiarities, and of the cleansing issues.

ACKNOWLEDGEMENTS

The authors would like to thank the anonymous re-

viewers for their valuable comments and suggestions.

REFERENCES

Afrati, F. N. and Kolaitis, P. G. (2009). Repair checking in

inconsistent Databases: Algorithms and Complexity.

In ICDT, pages 31–41. ACM.

Bartolucci, F., Farcomeni, A., and Pennoni, F. (2012). La-

tent Markov models for longitudinal data. Boca Ra-

ton, FL: Chapman & Hall/CRC Press.

Batini, C. and Scannapieco, M. (2006). Data Quality: Con-

cepts, Methodologies and Techniques. Data-Centric

Systems and Applications. Springer.

Bertossi, L. (2006). Consistent query answering in

databases. ACM Sigmod Record, 35(2):68–76.

Bertossi, L. E., Kolahi, S., and Lakshmanan, L. V. S. (2011).

Data cleaning and query answering with matching de-

pendencies and matching functions. In Milo, T., edi-

tor, ICDT, pages 268–279. ACM.

Choi, E.-H., Tsuchiya, T., and Kikuno, T. (2006). Model

checking active database rules under various rule pro-

cessing strategies. IPSJ Digital Courier, 2(0):826–

839.

Cimatti, R., Roveri, M., and Traverso, P. (1998). Automatic

OBDD-based generation of universal plans in non-

deterministic domains. In AAAI-98, pp. 875–881.,

pages 875–881. AAAI Press.

Clarke, E. M., Grumberg, O., and Long, D. E. (1994).

Model checking and abstraction. ACM Transactions

on Programming Languages and Systems (TOPLAS),

16(5):1512–1542.

Clarke, E. M., Grumberg, O., and Peled, D. A. (1999).

Model Checking. The MIT Press.

Della Penna, G., Intrigila, B., Magazzeni, D., and Mercorio,

F. (2009). UPMurphi: a tool for universal planning on

PDDL+ problems. In ICAPS 2009, pages 106–113.

AAAI Press.

Della Penna, G., Magazzeni, D., and Mercorio, F. (2012).

A universal planning system for hybrid domains. Ap-

plied Intelligence, 36(4):932–959.

Dovier, A. and Quintarelli, E. (2009). Applying

Model-checking to solve Queries on semistructured

Data. Computer Languages, Systems & Structures,

35(2):143 – 172.

Elmagarmid, A., Ipeirotis, P., and Verykios, V. (2007). Du-

plicate record detection: A survey. IEEE Transactions

on knowledge and data engineering, 19(1):1–16.

Fan, W. (2008). Dependencies revisited for improving data

quality. In the ACM SIGMOD-SIGACT-SIGART sym-

posium on Principles of database systems, pages 159–

170. ACM.

Fayyad, U. M., Piatetsky-Shapiro, G., and Uthurusamy, R.

(2003). Summary from the kdd-03 panel: data min-

ing: the next 10 years. ACM SIGKDD Explorations

Newsletter, 5(2):191–196.

Maletic, J. and Marcus, A. (2000). Data cleansing: beyond

Integrity Analysis. In IQ, pages 200–209.

Maletic, J. and Marcus, A. (2010). Data cleansing: A

prelude to knowledge discovery. In Data Mining

and Knowledge Discovery Handbook, pages 19–32.

Springer US.

Martini, M. and Mezzanzanica, M. (2009). The Federal Ob-

servatory of the Labour Market in Lombardy: Models

and Methods for the Costruction of a Statistical Infor-

mation System for Data Analysis. In Information Sys-

tems for Regional Labour Market Monitoring - State

of the Art and Prospectives. Rainer Hampp Verlag.

Mayfield, C., Neville, J., and Prabhakar, S. (2009). A Sta-

tistical Method for Integrated Data Cleaning and Im-

putation. Technical Report CSD TR-09-008, Purdue

University.

Mercorio, F. (2013). Model checking for universal planning

in deterministic and non-deterministic domains. AI

Communications, 26(2).

Mezzanzanica, M., Boselli, R., Cesarini, M., and Merco-

rio, F. (2012). Data quality sensitivity analysis on

aggregate indicators. In DATA 2012, pages 97–108.

SciTePress.

Neven, F. (2002). Automata theory for XML researchers.

SIGMOD Rec., 31:39–46.

Schoppers, M. (1987). Universal plans of reactive robots in

unpredictable environments. In Proc. IJCAI 1987.

Singer, J. and Willett, J. (2003). Applied longitudinal data

analysis: Modeling change and event occurrence. Ox-

ford University Press, USA.

Vardi, M. (1987). Fundamentals of dependency theory.

Trends in Theoretical Computer Science, pages 171–

224.

Vardi, M. Y. (1992). Automata Theory for Database Theo-

reticians. In Theoretical Studies in Computer Science,

pages 153–180. Academic Press Professional, Inc.

AutomaticSynthesisofDataCleansingActivities

149