Acquiring Method for Agents’ Actions using Pheromone Communication between Agents

Hisayuki Sasaoka

Asahikawa National College of Technology, Shunkohdai 2-2, Asahikawa, Hokkaido, 071-8142, Japan

Keywords: Swarm Intelligence, Ant Colony System, Multi-Agent System, RoboCup.

Abstract: Ant Colony System (ACS) and the related MAX-MIN Ant System (MM-AS) are known to be powerful meta-heuristic algorithms, and researchers have reported their effectiveness in several applications. However, these algorithms have some problems when they are employed in multi-agent systems, and we have proposed a new method that improves on MM-AS. This paper describes the results of evaluation experiments with agents that implement our proposed method. In these experiments, we used seven maps and scenarios for the RoboCup Rescue Simulation system (RCRS). To confirm the effectiveness of our method, we examined the agents’ fire-fighting actions in the simulation and the resulting improvements in score.

1 INTRODUCTION

Real ants are social insects, and there is no central control and no manager in their colonies. Nevertheless, the ants work together very well (Gordon, 1999), (Keller and Gordon, 2009), (Wilson and Duran, 2010). Inspired by real ants’ foraging behaviour and their pheromone communication, Dorigo et al. proposed the Ant System algorithm (Dorigo et al., 1996). Researchers have since reported the effectiveness of systems that use this algorithm and its improved variants (Dorigo and Stützle, 2004), (Bonabeau et al., 1999), (Bonabeau et al., 2000). Ant Colony Optimization (ACO) and Ant Colony System (ACS) have become very successful and are widely used in various application programs (Hernandez et al., 2008), (D'Acierno et al., 2006), (Balaprakash et al., 2008). In systems based on ACO and ACS, artificial ants cooperate to solve a problem by exchanging information via pheromone.

Stützle and Hoos proposed the MAX-MIN Ant System (MM-AS) (Stützle and Hoos, 2010). It is derived from the Ant System and achieved better performance than AS and other improved versions of AS on travelling salesperson problems (TSP).
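To make the MM-AS idea concrete, the following Python sketch shows one pheromone update in the style described by Stützle and Hoos: every trail evaporates, only the best ant deposits pheromone, and each trail is then clamped to the interval [τ_min, τ_max]. The function name, the dictionary representation of trails and all parameter values are illustrative assumptions, not settings taken from this paper.

```python
def mmas_update(tau, best_edges, best_cost, rho=0.02, tau_min=0.01, tau_max=1.0):
    """One MAX-MIN Ant System style pheromone update (illustrative sketch).

    tau        : dict mapping an edge (i, j) to its pheromone value
    best_edges : edges used by the best ant (iteration-best or global-best)
    best_cost  : cost of that best solution; cheaper solutions deposit more
    """
    # Evaporation is applied to every trail.
    for edge in tau:
        tau[edge] *= 1.0 - rho
    # Only the best ant reinforces the edges it used.
    for edge in best_edges:
        tau[edge] += 1.0 / best_cost
    # Trails are kept inside [tau_min, tau_max] to avoid early stagnation.
    for edge in tau:
        tau[edge] = min(tau_max, max(tau_min, tau[edge]))
    return tau


# Small usage example with exaggerated parameters so the clamping is visible.
tau = {("a", "b"): 1.0, ("b", "c"): 0.01, ("c", "d"): 0.02}
mmas_update(tau, [("b", "c")], best_cost=1.0, rho=0.5, tau_min=0.05, tau_max=0.8)
# ("a", "b") evaporates to 0.5, ("b", "c") is capped at 0.8, ("c", "d") is raised to 0.05
```

The lower bound keeps every edge selectable, and the upper bound stops a single path from dominating too early. These are the two properties that distinguish MM-AS from plain AS.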

Researchers have applied these methods to many distributed constraint satisfaction problems, such as the TSP and network routing problems. In these problems, however, there is no noise while the problem is being solved. In addition, the information needed to solve the problem (for example, the distances between the cities to be visited in the TSP) is given in advance, and the situation never changes between simulation steps. In real-world problems, by contrast, the environment is always changing dynamically. In some cases we cannot know the cues needed to solve the problem in advance; in other cases, outside noise erases or corrupts the available information.

In the RoboCup Rescue simulation system, agents need to handle a huge amount of information and act dynamically. This simulation system is therefore a very good test bed for multi-agent research, and we have used it in this research. This paper addresses a problem of ACS and MM-AS: we propose a method based on MM-AS and apply it to the fire-brigade agents of our team in the RoboCup Rescue Simulation System (Skinner and Ramchurn, 2010), (RoboCup Rescue Simulation Project), (RoboCup Japan Open, 2013). We have carried out evaluation experiments and report their results.

Sasaoka H.

Acquiring Method for Agents’ Actions using Pheromone Communication between Agents.

DOI: 10.5220/0004538300910096

In Proceedings of the 5th International Joint Conference on Computational Intelligence (ECTA-2013), pages 91-96

ISBN: 978-989-8565-77-8

Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

2 BASIC IDEA

2.1 Our Method

We have proposed a method based on MM-AS (Sasaoka, 2013). In our method, the range of the pheromone trail value is set by hand on the basis of preliminary experiments. Moreover, we have confirmed that the pheromone trails contain noise during the initial steps of updating. Therefore, in the initial steps our algorithm updates the pheromone trails by formula (1), where, in the usual ACO notation, τ_ij(t) is the pheromone trail between i and j at step t and Δτ^k_ij(t) is the pheromone deposited by agent k. The parameter ρ_init aims to reduce the effect of this noise, and its value is also set by hand.

$$\tau_{ij}(t) \leftarrow (1-\rho_{init})\,\tau_{ij}(t) + \Delta\tau_{ij}^{k}(t) \qquad (1)$$
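Formula (1) can be read as an evaporation step that uses a separate rate ρ_init while the trails are still noisy. The sketch below assumes that, after a hypothetical number of initial steps `init_steps`, the ordinary rate `rho` is used. It also assumes that trails are clamped to the hand-tuned range mentioned above. The paper does not report these values, so every default here is a placeholder.

```python
def update_trails(tau, deposits, step, init_steps=10, rho_init=0.5, rho=0.1,
                  tau_min=0.1, tau_max=5.0):
    """Pheromone update following formula (1) in the initial steps (sketch).

    tau      : dict, edge (i, j) -> pheromone trail tau_ij
    deposits : dict, edge (i, j) -> pheromone laid by agent k (delta tau^k_ij)
    step     : current update step t
    init_steps, rho, tau_min and tau_max are hypothetical placeholders.
    """
    # Use rho_init during the noisy initial phase, the normal rate afterwards.
    r = rho_init if step < init_steps else rho
    for edge in tau:
        value = (1.0 - r) * tau[edge] + deposits.get(edge, 0.0)
        # Keep the trail inside the range chosen by hand.
        tau[edge] = min(tau_max, max(tau_min, value))
    return tau


trails = {("s", "a"): 1.0, ("s", "b"): 1.0}
update_trails(trails, {("s", "a"): 0.5}, step=0)  # initial phase: rho_init applies
# trails[("s", "a")] == 1.0 and trails[("s", "b")] == 0.5
```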

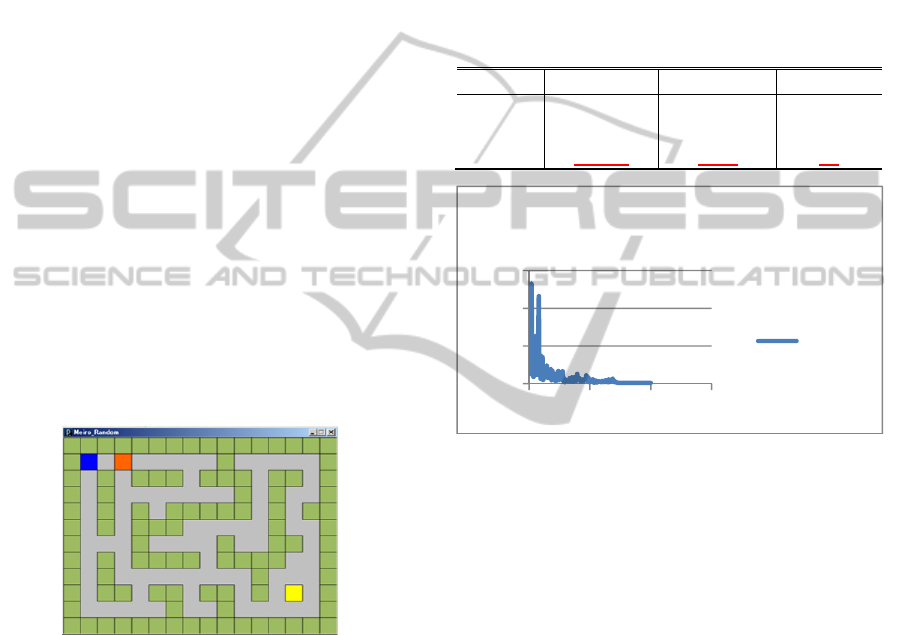

2.2 Preliminary Experiment

To confirm the effectiveness of our proposed method, we carried out preliminary experiments on a grid-world task. Figure 1 (Nikkei Software, 2011) shows the grid world. The blue cell is the start and the yellow cell is the goal. Light-gray cells form the pathway, light-green cells are walls, and the orange cell is the current position of the moving agent. The shortest path from start to goal takes 22 steps.

Figure 1: The grid-world task used in this experiment.
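The 22-step optimum quoted above is the kind of baseline that a breadth-first search gives on a grid world. The following sketch computes the minimum number of steps on a small hypothetical maze, not the maze of Figure 1, whose layout is not reproduced in the text. In this maze, `S` is the start, `G` is the goal and `#` is a wall.

```python
from collections import deque

def shortest_steps(maze):
    """Breadth-first search over a grid; returns the minimum number of moves
    from 'S' to 'G', or None if the goal is unreachable."""
    rows, cols = len(maze), len(maze[0])
    start = next((r, c) for r in range(rows) for c in range(cols) if maze[r][c] == "S")
    dist = {start: 0}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if maze[r][c] == "G":
            return dist[(r, c)]
        # Four-neighbour moves: down, up, right, left.
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and maze[nr][nc] != "#" \
                    and (nr, nc) not in dist:
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return None


# Hypothetical maze used only for illustration.
MAZE = [
    "S..#",
    ".#..",
    "...G",
]
print(shortest_steps(MAZE))  # 5
```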

We prepared three types of agents for this task and ran each agent 50 times:

agent A: moves randomly along the pathway.

agent B: implements the ACS algorithm and moves along the pathway.

agent C: implements our proposed method and moves along the pathway.
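For reference, ACS selects the next move with the pseudo-random proportional rule described in the ACO literature (Dorigo and Stützle, 2004). With probability q0 it takes the candidate with the largest τ·η^β; otherwise it samples a candidate in proportion to that product. The sketch below is a generic version of this rule. The parameter values and the heuristic values η are assumptions, because the paper does not report agent B’s settings.

```python
import random

def acs_next(current, candidates, tau, eta, beta=2.0, q0=0.9, rng=random):
    """ACS pseudo-random proportional rule (generic sketch).

    tau, eta : dicts, edge (i, j) -> pheromone / heuristic desirability
    q0       : probability of a purely greedy (exploiting) choice
    """
    weights = [tau[(current, j)] * eta[(current, j)] ** beta for j in candidates]
    if rng.random() < q0:
        # Exploitation: take the most attractive edge.
        return candidates[max(range(len(candidates)), key=weights.__getitem__)]
    # Biased exploration: roulette-wheel selection proportional to the weights.
    return rng.choices(candidates, weights=weights, k=1)[0]


tau = {("x", "y"): 1.0, ("x", "z"): 2.0}
eta = {("x", "y"): 1.0, ("x", "z"): 1.0}
print(acs_next("x", ["y", "z"], tau, eta, q0=1.0))  # "z": q0 = 1 is always greedy
```

A high q0 makes the agent follow the strongest trail most of the time. This is the behaviour discussed below for agent B.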

Table 1 shows the average, maximum and minimum numbers of steps in the experiments. Figure 2 shows how the number of steps taken by agent C decreases over the trials. Agent C achieved the best results in these trials, and it found its shortest path at the 146th trial. From these results, we confirmed the effectiveness of our method. We consider that agent C suppresses the negative effects of noise in its initial learning process, whereas agent B does not. The pathway in this task contains several loops, and agent B ran around the same loop many times. The reason is that agent B deposits pheromone on the pathway and always selects the path with the highest pheromone concentration, so it keeps reinforcing the loop it is already on. Agent C did not fall into this situation.
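The loop problem can be reproduced on a toy graph. In the sketch below, an agent always takes the edge with the highest pheromone and then reinforces that edge. The graph is a hypothetical four-node example, not the grid of Figure 1. A small initial bias on the loop edge, standing in for the early pheromone noise discussed in Section 2.1, is enough to trap the agent: it never reaches the goal within the step budget.

```python
# Hypothetical graph: from A the agent can enter the loop A -> B -> C -> A or exit to G.
GRAPH = {"S": ["A"], "A": ["B", "G"], "B": ["C"], "C": ["A"], "G": []}

def greedy_walk(pheromone, start="S", goal="G", max_steps=50, deposit=1.0):
    """Always follow the outgoing edge with the most pheromone and reinforce it."""
    node, path = start, [start]
    for _ in range(max_steps):
        if node == goal:
            break
        nxt = max(GRAPH[node], key=lambda n: pheromone[(node, n)])
        pheromone[(node, nxt)] += deposit  # reinforce the edge just used
        node = nxt
        path.append(node)
    return path


tau = {(u, v): 1.0 for u, vs in GRAPH.items() for v in vs}
tau[("A", "B")] = 1.1  # small initial noise favouring the loop
path = greedy_walk(tau)
print("G" in path, path.count("A"))  # False 17
```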

Table 1: Results of preliminary experiment (number of steps).

| | Average | Maximum | Minimum |
|---|---|---|---|
| agent A | 969.46 | 4302 | 78 |
| agent B | 1445.62 | 8217 | 78 |
| agent C | 470.92 | 2612 | 42 |

Figure 2: Results by agent C (from the 1st to the 200th trial).

3 ABOUT ROBOCUP RESCUE SIMULATION SYSTEM

We employed the RoboCup Rescue Simulation system (RCRS) as a test bed. The system consists of a server and four types of agents: fire-brigade agents, police-force agents, ambulance agents and civilian agents, each implemented as a separate program. Together they simulate a disaster in a city, and the system can simulate different situations under different conditions and maps.

The RoboCup Project System intends to promote

researches which scope the disaster mitigation,

search and rescue problems. Then we need to

develop three types of agents, which are a fire-

brigade agent, a police-force agent and an

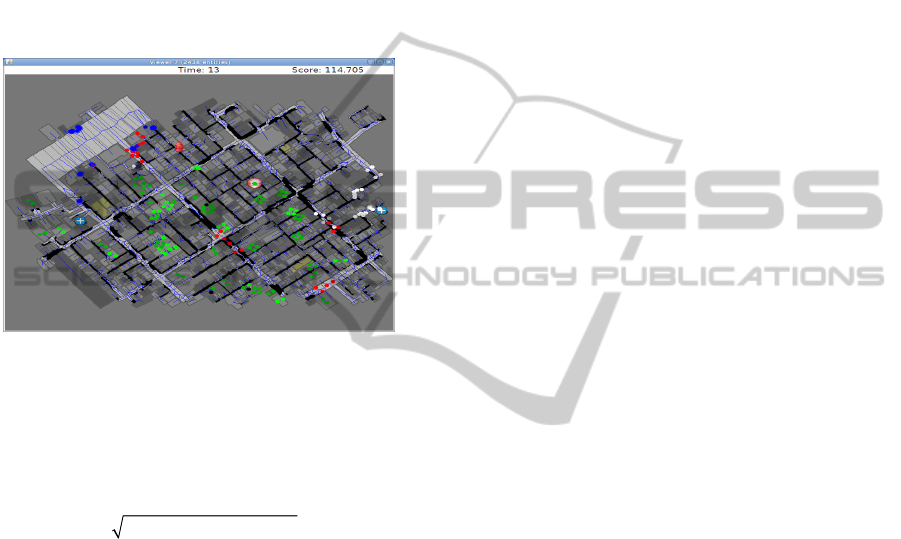

ambulance agent. Figure 3 shows a screen shot of a

performance of the simulation system. It shows a

map of city and deep gray rectangles indicates

0

1000

2000

3000

0 100 200 300

agentC

agentC

IJCCI2013-InternationalJointConferenceonComputationalIntelligence

92

buildings and light gray rectangle shows roads.

Black parts on the roads means blocks on the road

and agents cannot go through the place at the block.

In the figure, red circles indicate fire-brigade agents

and a mark of fire plug means a centre of fire-

brigade. Blue circles indicate police-force agents and

a mark of policeman helmet means a centre of

police-force agents. White circles indicate

ambulance agents and a mark of white cross means a

centre of ambulance agents. Green circles indicate

civilian agents and a mark of red house means an

emergency refuge centre.

Figure 3: An example of a simulation map in the RoboCup Rescue Simulation system.

The RCRS server program evaluates the actions of each type of agent and calculates a score using formula (2).

$$\text{score} = (\text{number of surviving civilian agents}) \times (\text{rate of building damage}) \qquad (2)$$

4 OUR PROPOSED ALGORITHM FOR FIRE-BRIGADE AGENTS

We applied our algorithm to the fire-brigade agents’ search for a water supply point. The search algorithm distinguishes two cases:

1. If the agent has no water to extinguish a fire:

(1-a) if the agent knows a route to a water supply point, it follows that route;

(1-b) if the agent does not know a route to a water supply point, it chooses a route at random.

2. Otherwise, that is, if the agent has enough water, it heads for a fire.
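The decision rule above can be sketched as follows. `FireBrigadeState`, `next_target` and the representation of routes as lists of positions are hypothetical names for illustration only. They are not part of the RCRS agent API, and the threshold "no water" is assumed to mean a water level of zero.

```python
import random
from dataclasses import dataclass, field

@dataclass
class FireBrigadeState:
    """Hypothetical agent state; not an RCRS class."""
    water: int                                           # remaining water (arbitrary units)
    route_to_water: list = field(default_factory=list)   # learned route, empty if unknown

def next_target(state, fire_position, neighbours, rng=random):
    """Decision rule of Section 4 (sketch): returns the next position to move to."""
    if state.water <= 0:                   # case 1: no water left
        if state.route_to_water:           # (1-a) follow the known route
            return state.route_to_water[0]
        return rng.choice(neighbours)      # (1-b) choose a route at random
    return fire_position                   # case 2: enough water, go to the fire


print(next_target(FireBrigadeState(water=0, route_to_water=[7, 8]), 99, [1, 2]))  # 7
print(next_target(FireBrigadeState(water=5), 99, [1, 2]))                         # 99
```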

The pheromone update also consists of two steps:

1. After an agent obtains water, it issues a “say” command to broadcast the position of the water supply point.

2. Other agents that do not have water track back.
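One way the two update steps might fit together is sketched below. In RCRS, “say” is a simulator communication command; here it is modelled as a plain function call. The message content (the route the sender took to the supply point) and the way receivers store it as pheromone are assumptions for illustration, and `Brigade` and `say_water_point` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Brigade:
    """Hypothetical fire-brigade state used only for this illustration."""
    name: str
    has_water: bool
    known_water_point: Optional[int] = None
    trail: dict = field(default_factory=dict)  # edge (i, j) -> pheromone

def say_water_point(route, team, deposit=1.0):
    """Step 1: the agent that just obtained water broadcasts its route ("say").
    Step 2: agents without water store the supply point and reinforce the
    reported route so that they can track back along it."""
    water_point = route[-1]
    edges = list(zip(route, route[1:]))
    for agent in team:
        if agent.has_water:
            continue  # agents that already have water ignore the message
        agent.known_water_point = water_point
        for edge in edges:
            agent.trail[edge] = agent.trail.get(edge, 0.0) + deposit
    return water_point


team = [Brigade("fb1", has_water=False), Brigade("fb2", has_water=True)]
say_water_point([3, 5, 8], team)
# team[0] now knows water point 8 with trail {(3, 5): 1.0, (5, 8): 1.0}; team[1] is unchanged
```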

5 EVALUATION EXPERIMENT

5.1 Procedures

We developed experimental agents based on the sample agents whose source code is included in the RCRS simulator package. We prepared three types of fire-brigade agents (Sasaoka, 2013):

Type-A agents: identical to the base (sample) fire-brigade agents.

Type-B agents: implement our proposed algorithm.

Type-C agents: implement our proposed algorithm and, in addition, select only the best path according to pheromone concentration.

Using these agents, we organized four fire-brigade teams with different mixtures of Type-A, Type-B and Type-C agents:

Team A: 50% Type-A, 25% Type-B and 25% Type-C agents.

Team B: 50% Type-B, 25% Type-A and 25% Type-C agents.

Team C: 50% Type-C, 25% Type-A and 25% Type-B agents.

Team D: 33% Type-A, 34% Type-B and 33% Type-C agents.

In our previous research (Sasaoka, 2013), we confirmed that Team C achieved the best score among these teams, although only on a single map. In this research, we compare Team C with Team E, which consists of the sample agents included in the RCRS simulator package; these are our base agents.

In addition, we prepared seven maps and scenarios for the RoboCup Rescue simulation. They were used in the RoboCup 2012 international competition (RoboCup Rescue Simulation Project) and the RoboCup Japan Open 2013 competition (RoboCup Japan Open, 2013). They are as follows:

Map 1: a map is a central part of Kobe and a

score is 121.000 at the start of simulation.

Map 2: a map is Ritsumeikan University area

and a score is 84.772 at the start of simulation.

Map 3: a map is Virtual City and a score is

150.948 at the start of simulation.

Map 4: a map is a central part of Paris and a

AcquiringMethodforAgents'ActionsusingPheromoneCommunicationbetweenAgents

93

Table 2: Results of evaluation experiment.

A score at the start Team C Team E

Map 1 121.000

87.198

72.030

Map 2 84.772

12.350

6.976

Map 3 150.948

20.401

10.787

Map 4 140.000

38.408

27.640

Map 5 67.000

28.078

15.136

Map 6 106.000

39.808

35.785

Map 7 183.000

47.298

24.902

Average 121.817

39.077

27.608

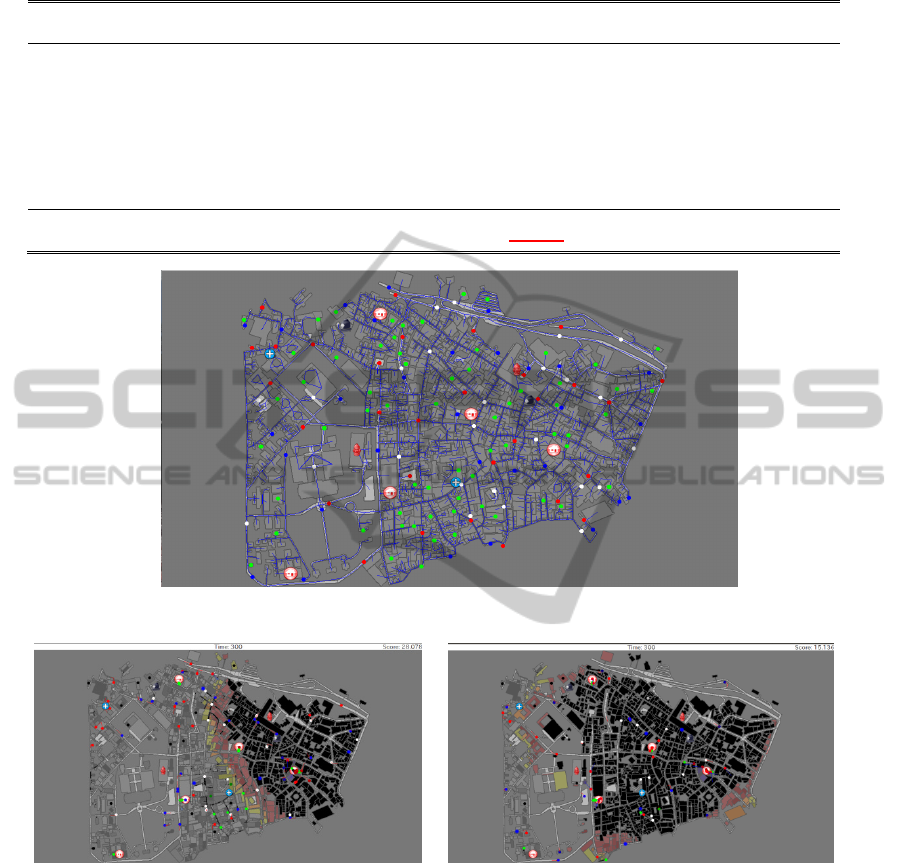

Figure 4: Map 5 before start of simulation.

Figure 5: Map 5 after 300 steps of simulation time

by Team C.

Figure 6: Map 5 after 300 stepsof simulation time by

Team E.

score is 140.000 at the start of simulation.

Map 5: a map is a central part of Istanbul and a

score is 67.000 at the start of simulation.

Map 6: a map is a central part of Mexico City

and a score is 106.000 at the start of simulation.

Map 7: a map is a central part of Eindhoven and

a score is 183.000 at the start of simulation.

5.2 Results

Table 2 shows the results of this experiment. The average score of Team C is 39.077, which is better than the average score of Team E (27.608). Moreover, Team C achieved a better score than Team E on every one of the seven maps.
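The averages in Table 2 can be recomputed directly from the per-map scores. This is a quick way to check the figures quoted in the text. The numbers below are copied from Table 2, and nothing else is assumed.

```python
from statistics import mean

# (score at start, Team C, Team E) for each map, copied from Table 2.
TABLE2 = {
    "Map 1": (121.000, 87.198, 72.030),
    "Map 2": (84.772, 12.350, 6.976),
    "Map 3": (150.948, 20.401, 10.787),
    "Map 4": (140.000, 38.408, 27.640),
    "Map 5": (67.000, 28.078, 15.136),
    "Map 6": (106.000, 39.808, 35.785),
    "Map 7": (183.000, 47.298, 24.902),
}

# Column-wise averages over the seven maps.
avg_start, avg_c, avg_e = [mean(row[i] for row in TABLE2.values()) for i in range(3)]
# Number of maps on which Team C scored higher than Team E.
wins = sum(c > e for _, c, e in TABLE2.values())
print(f"{avg_start:.3f} {avg_c:.3f} {avg_e:.3f} {wins}")  # 121.817 39.077 27.608 7
```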

6 CONSIDERATION

Figure 4 shows a situation in Map 5 before start of

simulation. Figure 5 shows a situation in Map 5 at

the end of simulation by Team C and Figure 6 shows

a situation in the same map at the end of simulation

by Team E. In these figures, black parts means

IJCCI2013-InternationalJointConferenceonComputationalIntelligence

94

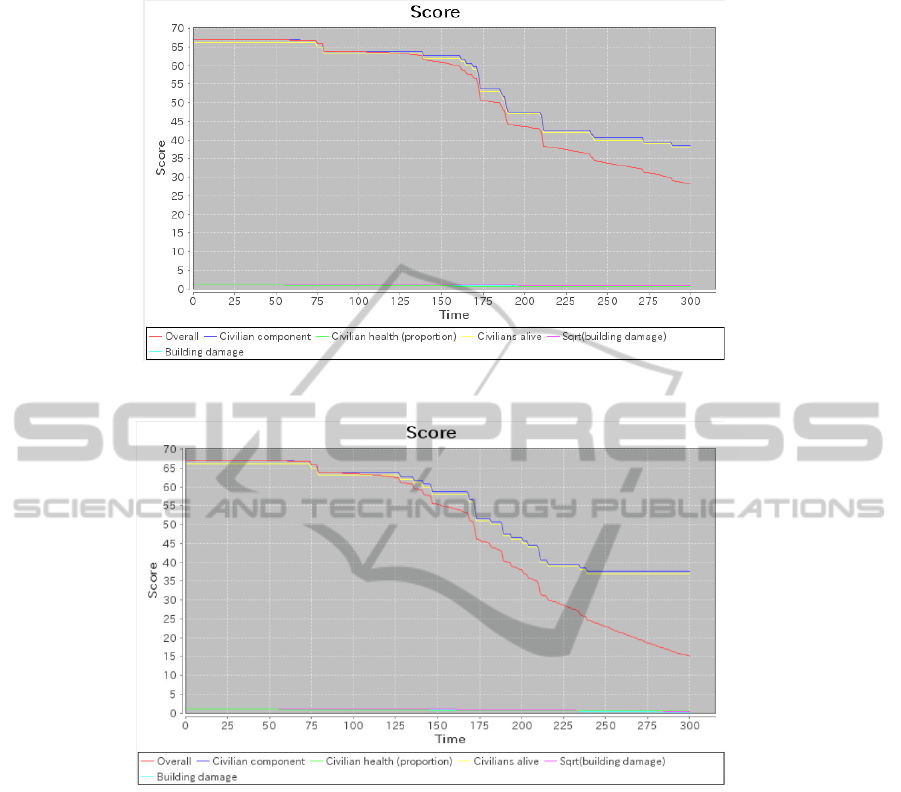

Figure 7: Score chart in Map 5 by Team C.

Figure 8: Score chart in Map 5 by Team E.

burned buildings, deep red parts means buildings

which are burned almost, red parts means buildings

which are burned half, yellow parts means buildings

burned a little and gray parts means buildings which

are not burned yet. From them, we can confirm that

fire-fighting actions by fire-brigade agents in Team

C are more effective than ones in Team E.

Figure 7 shows the score chart for Map 5 with Team C, and Figure 8 shows the score chart for Map 5 with Team E; both charts are produced by the RCRS server program. In these charts, the red line is the total score at each step of simulation time, the blue line is the civilian agents’ component of the score, the green line is the civilian agents’ health rate, the yellow line is the number of civilian agents alive, the pink line is the square root of building damage, and the aqua line is building damage. From these charts, we consider that the fire-brigade agents in Team C prevent damage more effectively than those in Team E.

However, the fire-brigade agents in Team C did not prevent damage completely. One reason is the lack of co-operation between heterogeneous types of agents. For example, Figure 5 shows several blockades on the roads (the black parts on the roads). Police-force agents need to remove these blockades, but they do not know which blockade other agents want removed first. The agents therefore need to exchange information with each other.

7 CONCLUSIONS

We have reported the results of evaluation experiments in a multi-agent system using our proposed method. By comparing two teams in the RoboCup Rescue Simulation system, we confirmed the effectiveness of our method and examined the agents’ actions decided by our algorithm. However, some problems in our method remain to be resolved.

We therefore plan to implement our proposed algorithm in heterogeneous agents and to realize co-operation between them using pheromone communication.

ACKNOWLEDGEMENTS

We developed our experimental system with agents based on the source code included in the simulator package (Skinner and Ramchurn, 2010), (RoboCup Rescue Simulation Project), (RoboCup Japan Open, 2013).

This work was supported by a Grant-in-Aid for Scientific Research (C) (KAKENHI 23500196). This work was also supported by the TUT Programs on Advanced Simulation Engineering.

REFERENCES

Gordon, D. M., 1999: Ants at Work, The Free Press, New York.

Keller, L., Gordon, E., 2009: The Lives of Ants, Oxford University Press Inc., New York.

Wilson, E. O., Duran, J. G., 2010: Kingdom of Ants, The Johns Hopkins University Press, Baltimore.

Dorigo, M., Maniezzo, V., Colorni, A., 1996: The Ant System: Optimization by a Colony of Cooperating Agents, IEEE Trans. Syst. Man Cybern. B-26 (1996), pp. 29-41.

Dorigo, M., Stützle, T., 2004: Ant Colony Optimization, The MIT Press.

Bonabeau, E., Dorigo, M., Theraulaz, G., 1999: Swarm Intelligence: From Natural to Artificial Systems, Oxford University Press.

Bonabeau, E., Dorigo, M., Theraulaz, G., 2000: Inspiration for optimization from social insect behaviour, Nature, Vol. 406, No. 6791, pp. 39-42.

Hernandez, H., Blum, C., Moore, J. H., 2008: Ant Colony Optimization for Energy-Efficient Broadcasting in Ad-Hoc Networks, in Proc. 6th International Conference, ANTS 2008, Brussels, pp. 25-36.

D'Acierno, L., Montella, B., De Lucia, F., 2006: A Stochastic Traffic Assignment Algorithm Based on Ant Colony Optimisation, in Proc. 5th International Conference, ANTS 2006, Brussels, pp. 25-36.

Balaprakash, P., Birattari, M., Stützle, T., Dorigo, M., 2008: Estimation-based ant colony optimization and local search for the probabilistic traveling salesman problem, Journal of Swarm Intelligence, Vol. 3, Springer, pp. 223-242.

Stützle, T., Hoos, H. H., 2010: MAX-MIN Ant System, Future Generation Computer Systems 16(8), pp. 889-914 (2000).

Skinner, C., Ramchurn, S., 2010: The RoboCup Rescue Simulation Platform, in Proceedings of the 9th International Conference on Autonomous Agents and Multiagent Systems: Volume 1, AAMAS ’10, pp. 1647-1648 (2010).

RoboCup Rescue Simulation Project Homepage, http://sourceforge.net/projects/roborescue/

RoboCup Japan Open 2013 Competition Official Homepage, http://www.tamagawa.ac.jp/robocup2013/

Sasaoka, H., 2013: Evaluation for Method for Agents’ Action Using Pheromone Communication in Multi-agent System, Journal of Machine Learning and Computing, Vol. 3, No. 1, IACSIT Press (2013), pp. 103-106.

Nikkei Software (in Japanese), 2011: Vol. 14, No. 7, Nikkei BP Inc. (2011).
