A Cognitive Reference based Model for Learning Compositional Hierarchies with Whole-composite Tags

Anshuman Saxena (1,2), Ashish Bindal (1) and Alain Wegmann (1)

(1) Systemic Modeling Laboratory, I&C EPFL, Lausanne, Switzerland

(2) TCS Innovation Labs, Bangalore, India

Keywords: Service Design, Part-whole Relations, Situated Conceptualization, Linguistic Markers, Digraph Analysis.

Abstract: A compositional hierarchy is the default organization of knowledge acquired for the purpose of specifying the design requirements of a service. Existing methods for learning compositional hierarchies from natural language text interpret composition as an exclusively propositional form of part-whole relations. Nevertheless, the lexico-syntactic patterns used to identify the occurrence of part-whole relations fail to decode the experientially grounded information, which is very often embedded in various acts of natural language expression, e.g. construction and delivery. The basic idea of this work is to take a situated view of conceptualization and model composition as the cognitive act of invoking one category to refer to another. A mutually interdependent set of categories is considered conceptually inseparable and is assigned an independent level of abstraction in the hierarchy. The presence of such levels in the compositional hierarchy highlights the need to model these categories as a unified-whole, wherein they can only be characterized in the context of the behavior of the set as a whole. We adopt an object-oriented representation approach that models categories as entities and relations as cognitive references inferred from syntactic dependencies. The resulting digraph is then analyzed for cyclic references, which are resolved by introducing an additional level of abstraction for each cycle.

1 INTRODUCTION

A compositional hierarchy is the default organization of knowledge acquired for the purpose of specifying the design requirements of a service (Saxena and Wegmann, 2012). A service seeks to influence aspects of reality through the creation of man-made artifacts. A compositional hierarchy organizes the categories observed in reality in a hierarchical manner such that the categories at the lower level contribute to the behavior exhibited by the categories at the higher level. Knowledge that reveals the composition of some observed behavior by identifying its constituent categories is, in general, useful for engineering purposes. Furthermore, a hierarchical organization of such knowledge structures the constituent categories based on their relative strength of interactions (Simon, 1962). The resulting levels correspond to the different aspects of the composition, which can be tagged either as novelty-revealing composites or simply as structure-enforcing composites. Novelty is a subjective notion that resides in the ability of the observer to discern a conceptualization into coherent, though connected and possibly overlapping, regions in semantic space. In the context of a compositional hierarchy, levels exhibiting novel properties signify strong interdependence among the descendant nodes. The inseparability of the conceptual relevance of such descendant nodes suggests that these nodes should be modeled as a unified-whole, wherein the individual nodes can only be characterized in the context of the behavior of the descendant set as a whole. For an artifact to deliver desired results in a given situation, the design of the artifact must possess an amount of variety that is at least equal to the variety that the situation may present (Ashby, 1964). An explicit acknowledgement of the existence of such unified-wholes as an integral part of the observed reality helps ensure that the properties associated with the unified-wholes are preserved in the target service. This, in turn, amounts to adding variety to the service specification, thereby increasing the likelihood that the service yields the desired benefits.

Saxena A., Bindal A. and Wegmann A. A Cognitive Reference based Model for Learning Compositional Hierarchies with Whole-composite Tags. In Proceedings of the International Conference on Knowledge Discovery and Information Retrieval and the International Conference on Knowledge Management and Information Sharing (KDIR-2013), pages 119-127. DOI: 10.5220/0004542201190127. ISBN: 978-989-8565-75-4. Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

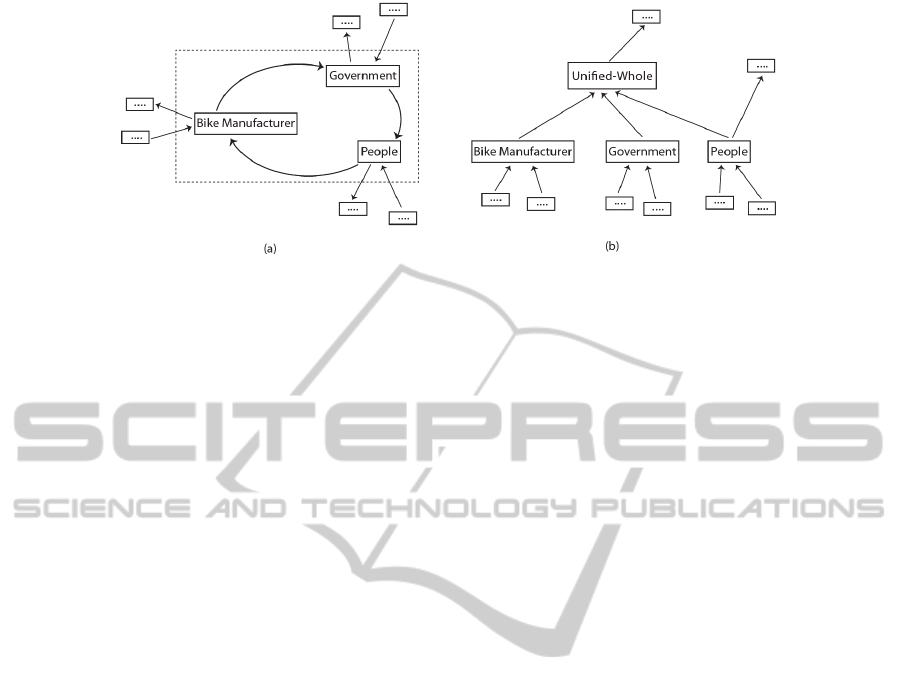

Figure 1: A compositional hierarchy extracted from the sample text T depicting the notion of unified-whole as a set of strongly interdependent categories.

For example, consider the following sample text, T. The interdependencies between the categories occurring in this sample text and the corresponding compositional hierarchy are depicted in Figure 1.

T: Bike manufacturers are increasingly engaging with people to identify new bike designs. The demand for bikes has gone up. More and more people are now riding bikes. The government has improved the infrastructure by adding dedicated bike lanes for riding the bikes. People feel safe in bike lanes. Government is also encouraging bike manufacturers to increase their production by subsidizing their operations through tax waivers and easy loans. More and more people riding the bike results in a healthy society, which, in turn, lowers the cost of health care for the government.

A large amount of information about various aspects of the real world is available as natural language documents. In the context of service design, the socio-economic narrative that is most relevant for modeling the real or intended behavior of the participating actors, both human and otherwise, is very often embedded in vision papers, policy guidelines, surveys, and field-study reports (Zarri, 1997). Conventional means for learning compositional hierarchies from such unstructured natural language text interpret composition as an exclusively propositional form of part-whole relation. Propositions define a conceptualization as a set of truth-conditions that are evaluated to ascertain if a conceptualization holds in a given context. For example, a part-whole conceptualization represented in propositional form as part(wheel, bike) is considered decodable from a given text if and only if the text contains the natural language expression ‘wheel is part of the bike’.

Nevertheless, not all information encoded in linguistic utterances lends itself entirely to decoding based on truth-conditions (Wilson and Sperber, 1993). In addition, the utterance may also contain information that is not analytically relevant to the proposition, yet is equally important in invoking the perceptual experience associated with the meaning of the utterance that the proposition seeks to model. For example, it is quite challenging to infer from the sample text T that the categories Bike manufacturer, Government and People contribute to each other’s behavior and, hence, constitute a unified-whole. The text contains no explicit mention of the unified-whole or of any semantic relation that can be mapped to the semantic primitives of a part-whole relation (Winston et al., 1987). As a result, it is difficult to devise linguistic markers that can be used to extract such implicit compositional information based on purely propositional forms of part-whole relation.

A situated view of conceptualization (Barsalou, 2009) is grounded in the perceptual experience that is associated with a category. In the context of natural language processing, it models information contained in a linguistic expression as not localized in some fixed, predetermined lexical pattern but as distributed across different aspects of the various natural language expressions constituting the discourse (Langacker, 2008). The basic idea is to adopt an experientially grounded approach to conceptualization and model composition at a pre-conceptual level, as an embodied pattern of cognitive reference (Rosch, 1975; Tribushinina, 2008). Cognitive reference provides a generalized interpretation of composition as an interaction between two categories such that one category serves as the reference for understanding the other and that this reference has some cognitive appeal to the observer. The underspecification of the conceptual relevance of the cognitive appeal is intentional, as it allows us to admit all possible aspects of the behavior that a category may exhibit.

From a natural language processing point of view,

dependencies between different syntactic categories

provide a natural means of extracting linguistic

evidence for cognitive references. For example,

prepositions represent a syntactic category that can

be viewed as a semantic relation between a structure

that precedes it, e.g. a verb, or a noun-phrase, and

another one that follows it, e.g. a noun-phrase

(Saint-Dizier, 2006). Similarly, we can also interpret

the verb relations and attributive relations like

modifiers as evidence for cognitive referencing. In

the case of verbs, we get greater specificity by also

acknowledging the semantic role assignment done

by parsers (Jackendoff, 1987; Fillmore, 1968;

Dowty, 1991).

We adopt an object-oriented representation approach (Sowa, 1984) that models categories as entities and relations as cognitive references inferred from syntactic dependencies. The resulting digraph is then analyzed for cyclic references, which are resolved by introducing an additional level of abstraction for each cycle. A mutually interdependent set of categories is considered conceptually inseparable and is assigned an independent level of abstraction in the hierarchy. The presence of such levels in the compositional hierarchy highlights the need to model these categories as a unified-whole, wherein they can only be characterized in the context of the behavior of the set as a whole.

2 CHARACTERIZING COMPOSITION

A linguistic utterance encodes two basic types of information: information about the state of affairs it describes and information indicating the various speech acts it intends to perform (Wilson and Sperber, 1993). The first type of information is explicit in the sense that the state of affairs described in the utterance can be decoded directly from the various lexical and syntactic constructs used in the utterance. The second type of information is implicit, for example, expressions of subjectivity, and needs additional knowledge support to be inferred. In this section, we present two characterizations of composition: the proposition-based characterization, which operates at the linguistic level, and the experientially situated characterization, which operates at the pre-linguistic level.

2.1 Propositional Approach to Composition

The propositional form of conceptualization is rooted in the logical tradition, which defines a conceptualization as a set of truth-conditions that are evaluated to ascertain if a conceptualization holds in a given context. In the context of natural language processing, truth-conditions correspond to the occurrence of the linguistic marker associated with the proposition. For example, a part-whole conceptualization represented in propositional form as part(wheel, bike) is considered decodable from a given text if and only if the text contains the natural language expression ‘wheel is part of the bike’. The linguistic marker here is a lexical pattern comprising the named entities wheel and bike and the predicate ‘part of’. The proposition cannot be decoded from any other natural language expression, for example, ‘wheel is attached to the bike’, or extended to part-whole relations between other categories, for example, ‘roads are required to bike’, unless additional truth-conditions are associated with the proposition. For the three expressions mentioned above to be decodable as a conceptualization of the part-whole relation, the following truth-conditions need to be specified as three separate linguistic markers: part(NE, NE), attach(NE, NE) and require(NE, NE), where NE stands for named entity. Existing information retrieval methods try to minimize the false negatives associated with proposition-based concept extraction by expanding the set of linguistic markers used to define the truth-conditions of the proposition being decoded. Various automatic and semi-automatic schemes have been developed to identify linguistic markers corresponding to the different lexical and syntactic divergences (Dorr, 1993) that the linguistic interpretation of the proposition may undergo. A widely used algorithm for extracting semantic relations through the use of lexico-syntactic patterns is described in (Hearst, 1992).
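The marker-based decoding described above can be illustrated with a minimal sketch. The regular expressions, the `MARKERS` table and the `decode` function are assumptions made for this illustration; they are not the patterns used in the cited work, and single-word matches stand in for named entities.

```python
import re

# Hypothetical marker set: one surface pattern per proposition.
# Single words stand in for named entities (NE) in this sketch.
MARKERS = {
    "part": re.compile(r"\b(\w+) (?:is|are) part of (?:the |a )?(\w+)", re.I),
    "attach": re.compile(r"\b(\w+) (?:is|are) attached to (?:the |a )?(\w+)", re.I),
    "require": re.compile(r"\b(\w+) (?:is|are) required to (\w+)", re.I),
}


def decode(text, markers=MARKERS):
    """Return (proposition, arg1, arg2) for every marker that occurs in text."""
    found = []
    for name, pattern in markers.items():
        for match in pattern.finditer(text):
            found.append((name, match.group(1).lower(), match.group(2).lower()))
    return found


# With only the 'part' marker, the second expression is a false negative.
only_part = {"part": MARKERS["part"]}
print(decode("wheel is part of the bike", only_part))
print(decode("wheel is attached to the bike", only_part))
# Expanding the marker set recovers it.
print(decode("wheel is attached to the bike"))
```

The sketch shows why the marker set has to grow with every new surface form: each additional expression is decodable only after a matching pattern has been added by hand or learned.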

From a knowledge organization point of view, logic-based modeling of semantic relations helps to structure the concepts in ways that permit automated inference making and is hence widely popular. As part of this modeling tradition, the part-whole relations limit composition to include only those interactions between categories that can be characterized along the following three dimensions: whether the categories are functionally related to each other; whether the categories can exist independent of each other; and whether they are of the same type (Winston et al., 1987). The primary motivation to identify these semantic primitives of part-whole relations is to maintain transitivity as an invariant across all occurrences of part-whole relations in natural language use. Transitivity is an important logical property, in addition to antisymmetry and reflexivity, that underlies much of the inference-making in hierarchies, for example, query expansion (Nie, 2003). These semantic primitives are often used to further improve the performance of decoding part-whole relations from natural language text by generating linguistic markers from some widely used keywords that convey the meaning associated with the semantic primitives underlying the propositional interpretation of part-whole relations (Girju and Moldovan, 2002; Khoo et al., 2000). For instance, ‘cause’ serves as a keyword for functional dependence, ‘component’ or ‘part’ as keywords for independence of existence, and ‘such as’ or ‘for example’ as keywords for similarity of type. These keywords are then used to identify lexico-syntactic patterns either manually or semi-automatically, often with the aid of lexical knowledge bases like WordNet (Miller, 1990).
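To make the role of transitivity concrete, the following sketch derives all part-whole pairs implied by a small set of stated ones. The function name `transitive_closure` and the example facts are hypothetical and chosen only for illustration.

```python
def transitive_closure(pairs):
    """Return every (part, whole) pair implied by transitivity of the input pairs."""
    closure = set(pairs)
    changed = True
    while changed:
        changed = False
        for a, b in list(closure):
            for c, d in list(closure):
                # part(a, b) and part(b, d) together imply part(a, d).
                if b == c and (a, d) not in closure:
                    closure.add((a, d))
                    changed = True
    return closure


# Hypothetical stated facts: spoke is part of wheel, wheel is part of bike.
stated = {("spoke", "wheel"), ("wheel", "bike")}
print(sorted(transitive_closure(stated)))
```

An inference such as query expansion relies on exactly this kind of derived pair, which is only sound when every stated relation is of a type for which transitivity holds.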

2.2 Situated Approach to Composition

Traditionally, the focus has been on propositional forms of knowledge, thereby disregarding related information readily available within the language domain, for example, expressions of subjectivity and linguistic expressions outside the proposition (Narrog, 2005). One way of interpreting implicit experiential information is to view it as encoded in semantic relations that do not have an explicit mapping to the semantic primitives associated with part-whole relations. As a result, such information cannot be decoded directly but can only be inferred from the larger context in which it occurs. Very often this context may be distributed across several sentences. Cognitively, conceptualization is situated (Barsalou, 2003). It is the reenactment of a combination of prior experiences that together simulate a perceptual experience in the form of a situation, experienced or imaginary. A simulated situation captures only one of many possible aspects of a category observed in reality. Diverse aspects of a category may get simulated across different situations. A situated view of conceptualization is an experientially grounded view of conceptualization and, in the context of language, it models information contained in a linguistic expression as not localized in some fixed, predetermined lexical pattern but as distributed across different aspects of the expressions constituting a discourse (Langacker, 2008). The basic idea is to adopt an experientially grounded approach to conceptualization and model composition as a pre-conceptual embodied pattern.

Simulating perceptual experience from these modal states is then an exercise of inferring and/or composing a situation. The multi-modal experience that the situation represents is reenacted in the different modal systems, thereby simulating an experience of being in that specific situation. Such a multi-modal, simulation-based model of conceptualization highlights the situated nature of concepts and is referred to as situated conceptualization (Barsalou, 2009). Such situation-specific inferences are, in principle, motivated by the theory of situation semantics, where logical inference is optimized when performed in the context of specific situations (Barwise and Perry, 1983).

2.2.1 Cognitive Reference as an Embodied Pre-linguistic Structure of Composition

A pre-linguistic structure of conceptualization refers to the organization of knowledge at a level of abstraction that is higher than the linguistic level, where organization is limited to explicitly stated propositions. The knowledge available at such higher levels of abstraction is experiential in nature, with both explicit and implicit information encoded in the linguistic utterance contributing to the perception of the experience. Various cognitive constructs have been proposed to motivate the organization of knowledge at the pre-linguistic level. These include the notions of force dynamics (Talmy, 1988), image schemas (Lakoff and Johnson, 2003), construals (Langacker, 1987), mental spaces (Fauconnier, 1994) and reference point constructions (Langacker, 1993).

Amongst these, the notion of cognitive reference point (CRP) construction lends itself naturally to the modeling of composition at the pre-linguistic level. CRP is the cognitive act of referring to one entity by invoking another (Rosch, 1975). A CRP models composition to include not only propositional forms of part-whole relation but any relation, distributed or local, that establishes a link between two categories such that the link has some conceptual relevance and is asymmetric in nature. The asymmetry requirement of the link restricts the interpretation of CRP to only those relations that clearly distinguish foreground information (focal category) from background information (contextual category), and protects it from the risk of degenerating into just any meaningful relation between categories. Meanwhile, the underspecification of the conceptual relevance of the cognitive appeal is quite useful for modeling composition, as it allows us to admit all possible aspects of the behavior that a category may exhibit.

2.2.2 Interpreting Novelty from Circular Cognitive Referencing

The notion of novelty can be explained both ontologically and epistemologically (Bunge, 2004). From an ontological point of view, novelty is said to occur only when there is explicit knowledge about a new category and adequate information to verify the associated novel property using some truth-conditional formulation. In the context of this work, we follow an epistemic interpretation of novelty as patterns of association, which only indicate the occurrence of novelty and provide no additional information that could help reify the ontological status of the indicated novelty.

Cognitive reference provides a generalized interpretation of composition as an interaction between two categories such that one category serves as the reference for understanding the other and that this reference has some cognitive appeal to the observer. As a result, a mutually interdependent set of categories is considered conceptually inseparable and is assigned an independent level of abstraction in the hierarchy. The presence of such levels in the compositional hierarchy highlights the need to model these categories as a unified-whole, wherein they can only be characterized in the context of the behavior of the set as a whole.

3 APPROACH

Instances of cognitive reference from text can be

interpreted from dependencies between lexical

elements in a sentence. The fundamental notion of

dependency is based on the idea that the syntactic

structure of a sentence consists of binary

asymmetrical relations between the lexical elements

of a natural language expression (Tesniere, 1959).

Dependency types commonly used in dependency

parsers include surface-oriented grammatical

functions, such as subject, object, modifiers, and a

set of more semantically oriented role types, such as

agent, patient, and goal (Nivre, 2005). Semantic

roles are theme revealing relations that express the

role that a noun phrase plays with respect to the

action or state described by a sentence (Jackendoff,

1987; Dowty, 1991). When these roles are defined

exclusively in relation to the sub-categorization

frame of the verb they are referred to as case roles

(Fillmore, 1968).

We use the Stanford dependency parser for English language text (de Marneffe et al., 2006). Following the terminology used in the Stanford dependency manual, a dependency relation holds between a governor and a dependent and is represented as dependency(governor, dependent). Each dependency connection, in principle, links a superior term and an inferior term. The superior term receives the name governor and the inferior the name dependent. The superior/inferior characterization for a pair of words is based on different morphological, syntactic and semantic considerations. In the context of this work, the interest is rather to characterize superior and inferior from a cognitive reference point of view: the superior as the one in the foreground (focal) and the inferior as the one in the background (context). The background word contributes to the understanding of the word in the foreground. As mentioned in (Langacker, 1994), the structural-syntax-based dependency framework and the cognitive reference framework have substantial similarity. The acknowledgement of this underlying similarity encourages us to re-interpret dependency relations between lexical items from a cognitive reference perspective.

The Stanford dependency manual (de Marneffe and Manning, 2011) lists 53 grammatical relations. Table 1 lists the dependencies considered in this work and their re-interpretation as focal and contextual categories.

Most dependencies can be interpreted directly as

a cognitive reference link, with the governor as the

focal category and the dependent as the context. For

subject dependencies, it is the other way round:

governor as the context and dependent as the focal

Table 1: Interpreting syntactic dependencies as cognitive references.

Type Syntactic dependency Cognitive reference

Verb *obj(A,B), agent(A,B)

*subj(A,B)

A(focal), B(contextual)

A(contextual), B(focal)

Preposition prep*(A,B) A(focal), B(contextual)

Attribute Modifiers amod(A,B) A(focal), B(contextual)

ACognitiveReferencebasedModelforLearningCompositionalHierarchieswithWhole-compositeTags

123

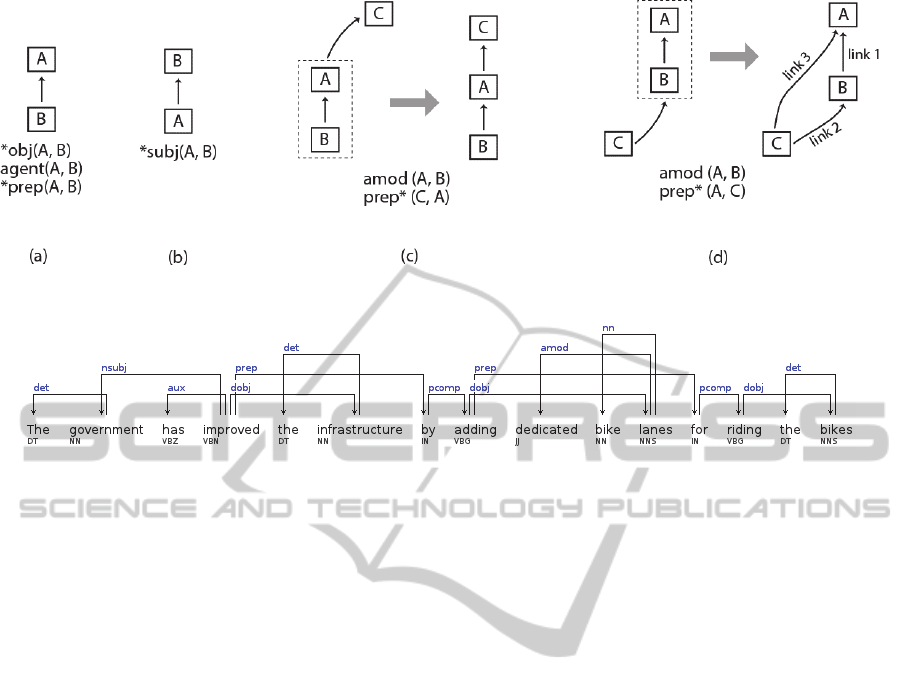

Figure 2: A visual depiction of the mappings from syntactic dependencies to cognitive reference.

Figure 3: Dependency graph visualization of S.

category. This is due to the interpretation of verb as

a link between its different arguments – the object

qualifying the meaning of the verb and subject being

qualified by verb as providing the context in which

the subject is being referred. A visual depiction of

the cognitive reference links inferred from syntactic

dependencies is provided in figure 2. A special

pattern resulting from a combination of attributive

and prepositional dependencies is worth mentioning.

The case where the focal categories are different,

e.g. figure 2(c), the combination of attributive and

prepositional qualifiers can be organized as a uni-

path hierarchy, which can be seen as context

refinement. The case where the focal categories are

the same results in a multipath-hierarchy as the focal

category can be interpreted in multiple contexts,

figure 2(d).
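The mapping in Table 1 can be sketched as a small function over typed dependency triples. The function name `to_cognitive_reference` is hypothetical, the wildcards of Table 1 are read literally as suffix and prefix matches (an assumption, so a relation such as `nsubjpass` is not covered), and the example triples are hand-written for illustration rather than actual parser output.

```python
def to_cognitive_reference(relation, governor, dependent):
    """Map one typed dependency to a (focal, contextual) pair, or None if unmapped."""
    # *subj(A, B): governor is the context, dependent is the focal category.
    if relation.endswith("subj"):
        return (dependent, governor)
    # *obj, agent, prep* and amod: governor is focal, dependent is the context.
    if (relation.endswith("obj") or relation == "agent"
            or relation.startswith("prep") or relation == "amod"):
        return (governor, dependent)
    # Any other relation is outside Table 1 and is ignored in this sketch.
    return None


# Hand-written triples in the form (relation, governor, dependent).
triples = [
    ("nsubj", "improved", "government"),
    ("dobj", "improved", "infrastructure"),
    ("amod", "lanes", "dedicated"),
    ("det", "government", "The"),
]
for relation, governor, dependent in triples:
    print(relation, to_cognitive_reference(relation, governor, dependent))
```

Returning `None` for unmapped relations keeps the reading of Table 1 explicit: only the listed dependency types contribute cognitive reference links.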

Consider the following sentence:

S: The government has improved the infrastructure

by adding dedicated bike lanes for riding the bikes.

The dependencies generated by the Stanford parser for S are depicted as a graph in Figure 3. This visualization is obtained using a freely available plug-in, DependenSee, from the Stanford natural language processing group website (Stanford NLP Group, 2012). It is important to note that the cognitive reference point relation links lexical elements with binary asymmetrical relations. As a result, each sentence can be depicted as a directed acyclic graph (DAG). As explained earlier, the only semantics associated with this link is encoded in its direction: the source is the constituent category and the destination is the focal category. The DAG depicting the cognitive reference links embedded in the sentence S is shown in Figure 4.

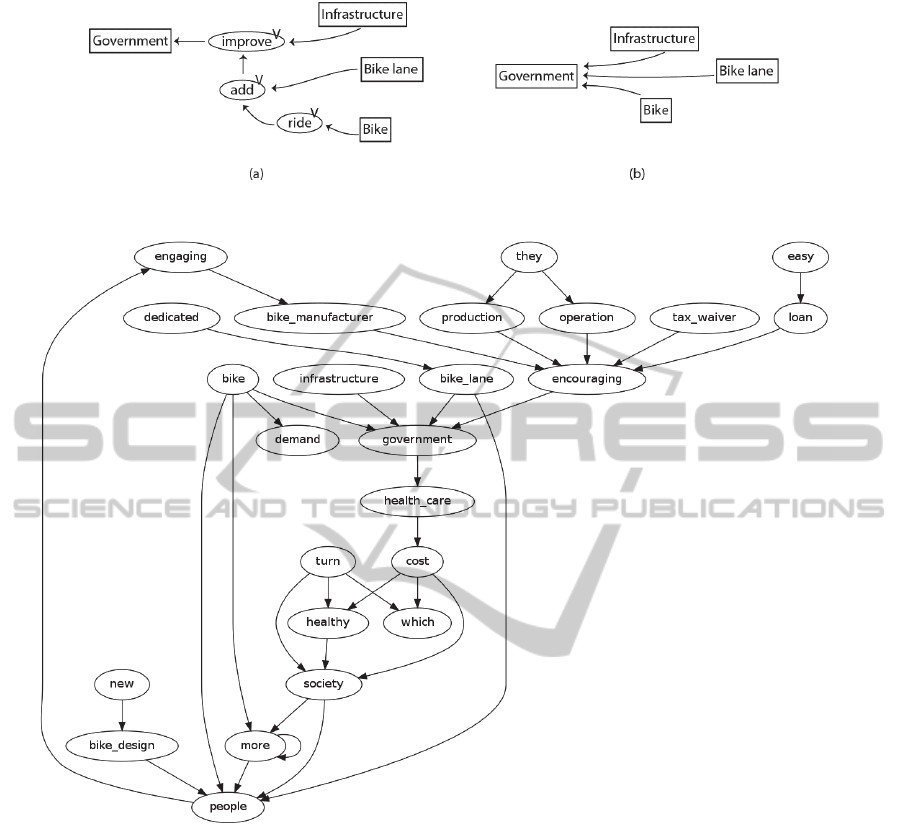

The DAGs of the individual sentences in the text are merged by admitting only one node per category. Conceptually, this amounts to making explicit the implicit connections in the text. The resulting graph is directed but not necessarily acyclic. The cycles in the digraph correspond to interdependence between categories. The digraph representing the cognitive references between categories described in T is shown in Figure 5.
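The merging step can be sketched as follows. The function name `merge_sentence_graphs`, the adjacency-set representation and the example edges are assumptions for illustration; the edges are hand-made and are not the ones shown in Figure 5. Each edge runs from the contextual (source) category to the focal (destination) category.

```python
from collections import defaultdict


def merge_sentence_graphs(sentence_edges):
    """Merge per-sentence (source, destination) edge lists into one digraph.

    Nodes are keyed by category label, so a category that occurs in several
    sentences is represented by a single node.
    """
    digraph = defaultdict(set)
    for edges in sentence_edges:
        for source, destination in edges:
            digraph[source].add(destination)
            # Make sure categories without outgoing edges also appear as nodes.
            digraph.setdefault(destination, set())
    return dict(digraph)


# Three hypothetical sentence graphs, each acyclic on its own.
per_sentence = [
    [("people", "manufacturer")],
    [("manufacturer", "government")],
    [("government", "people"), ("lane", "people")],
]
merged = merge_sentence_graphs(per_sentence)
print(merged)
```

In this example no single sentence graph contains a cycle, yet the merged digraph does (people, manufacturer, government), which is the kind of implicit interdependence the merging step is meant to expose.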

The cognitive reference digraph is then analyzed for cycles by identifying the strongly connected components of the digraph. A strongly connected component of a digraph is a maximal set of vertices in which there is a path from any one vertex to any other vertex in the set (Tarjan, 1972). The algorithm used to identify the strongly connected components of the digraph is due to Kosaraju and Sharir and is described in detail in (Sedgewick, 2011).
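A minimal sketch of the two-pass Kosaraju-Sharir scheme is given below. It is not the authors' implementation: the function names, the adjacency-set input format and the example digraph are assumptions for illustration, and the recursive depth-first search is only suitable for small graphs. Treating every component with more than one category as a candidate unified-whole is likewise an interpretation of the text.

```python
def strongly_connected_components(digraph):
    """Two-pass Kosaraju-Sharir search over a dict mapping node -> set of successors."""
    # Collect every vertex, including ones that only appear as successors.
    vertices = set(digraph)
    for successors in digraph.values():
        vertices.update(successors)

    # Pass 1: depth-first search on the digraph, recording completion order.
    visited, postorder = set(), []

    def visit(v):
        visited.add(v)
        for w in sorted(digraph.get(v, ())):
            if w not in visited:
                visit(w)
        postorder.append(v)

    for v in sorted(vertices):
        if v not in visited:
            visit(v)

    # Build the reversed digraph.
    reverse = {v: set() for v in vertices}
    for v, successors in digraph.items():
        for w in successors:
            reverse[w].add(v)

    # Pass 2: search the reversed digraph in decreasing completion order;
    # each search tree is one strongly connected component.
    assigned, components = set(), []

    def collect(v, component):
        assigned.add(v)
        component.add(v)
        for w in reverse[v]:
            if w not in assigned:
                collect(w, component)

    for v in reversed(postorder):
        if v not in assigned:
            component = set()
            collect(v, component)
            components.append(component)
    return components


def unified_wholes(digraph):
    """Components with more than one category, i.e. cyclic cognitive references."""
    return [c for c in strongly_connected_components(digraph) if len(c) > 1]


# Hypothetical digraph with one cycle and one category outside it.
example = {
    "people": {"manufacturer"},
    "manufacturer": {"government"},
    "government": {"people"},
    "lane": {"people"},
}
print(unified_wholes(example))
```

Each component returned by `unified_wholes` would then receive its own level of abstraction in the hierarchy, while single-category components remain ordinary nodes. A category that only references itself is not reported by this sketch.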

Figure 4: Cognitive reference graph visualization of S.

Figure 5: Cognitive reference revealing digraph visualization of T.

4 CONCLUSIONS

Data mining is defined as “…the analysis of (often large) observational data sets to find unsuspected relationships and to summarize the data in novel ways that are both understandable and useful to the data owner” (Hand et al., 2001). Nevertheless, efforts to find unsuspected relationships from data and their use in formulating new hypotheses should not be interpreted as the absence of any initial hypothesis, which in the first place guides one to find such unsuspected relationships. An example is the use of the distributional hypothesis, which assumes terms to be similar to the extent to which they share similar linguistic contexts (Harris, 1968). In this case, what is unknown is the nature of similarity and its relationship to different patterns of linguistic context. Communicating this work to the data mining community is equally relevant, as it presents a cognitive model of composition that can be used as a starting point for developing new data mining schemes that realize this model in a computational setting.

The primary purpose of this work is to suggest an alternate conceptualization of composition and to show how it can be more rewarding by making it easy to identify novel aspects of observed reality. From a relevance point of view, the work is motivated by its usability in a very concrete domain, that of service design specification. It links novelty to the notion of requisite variety, thereby making service designers conscious of the need to anticipate the conditions of the operating environment and to account for them by including enough flexibility in the design. The current exposition is, however, limited in its scope: it only identifies the existence of novelty and does not provide any conceptual interpretation of the novelty of the unified-wholes.

An important future work in this regard is to

apply this model of extracting compositional

ACognitiveReferencebasedModelforLearningCompositionalHierarchieswithWhole-compositeTags

125

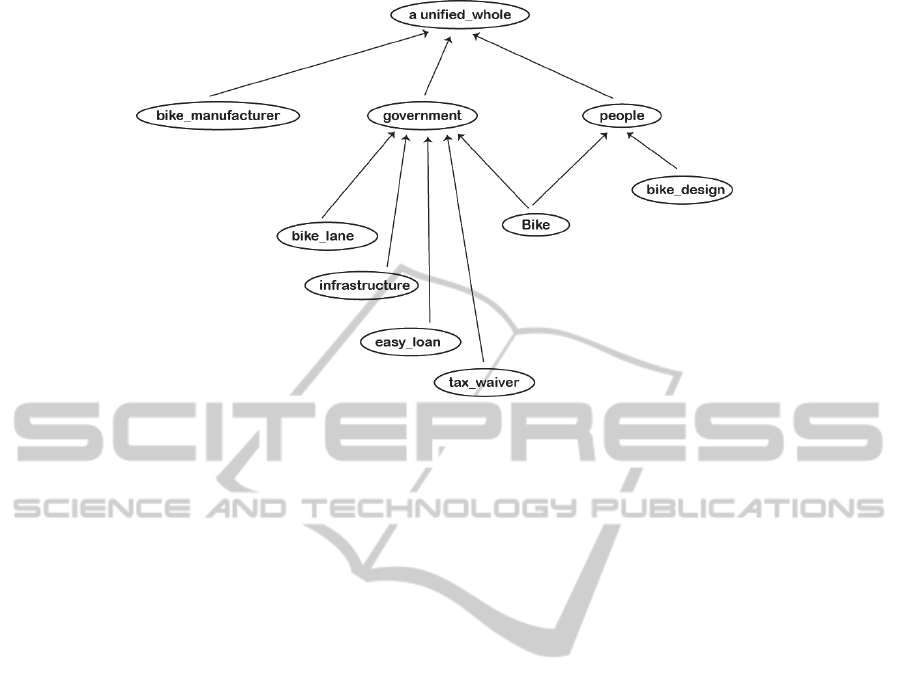

Figure 6: Compositional hierarchy representation of T depicting integrated-wholes.

hierarchies to diverse text samples and study the

extent to which it is able to detect cognitive

reference cycles. Based on our experimentation,

there could be situations where the cognitive

interdependence may not be detectable as a complete

cycle and some threshold based connectivity

measure might help further reduce the false

negatives associated to identify unified-whole in

compositional hierarchies.

REFERENCES

Ashby, W. R. (1964). Introduction to Cybernetics, Methuen.

Barsalou, L. (2009). "Simulation, situated conceptualization, and prediction." Philosophical Transactions of the Royal Society B: Biological Sciences 364(1521): 1281-1289.

Barsalou, L. W. (2003). "Situated simulation in the human conceptual system." Language and Cognitive Processes 18(5): 513-562.

Barwise, J. and J. Perry (1983). Situations and attitudes. Cambridge, MA, MIT Press.

Bunge, M. (2004). Emergence and Convergence: Qualitative Novelty and the Unity of Knowledge, University of Toronto.

de Marneffe, M.-C. and C. Manning (2011). Stanford typed dependencies manual.

de Marneffe, M. C., B. Maccartney, et al. (2006). Generating Typed Dependency Parses from Phrase Structure Parses. LREC.

Dorr, B. J. (1993). Machine Translation: A view from the lexicon. Cambridge, Massachusetts, The MIT Press.

Dowty, D. (1991). "Thematic Proto-Roles and Argument Selection." Language 67(3): 547-619.

Fauconnier, G. (1994). Mental Spaces: Aspects of Meaning Construction in Natural Language, Cambridge University Press.

Fillmore, C. J. (1968). The Case for Case. Universals in Linguistic Theory. E. Bach and R. T. Harms, New York, Holt, Rinehart, and Winston.

Girju, R. and D. I. Moldovan (2002). Text Mining for Causal Relations. Proceedings of the Fifteenth International Florida Artificial Intelligence Research Society Conference, AAAI Press: 360-364.

Stanford NLP Group (2012). "The Stanford parser: A statistical parser." Retrieved October 07, 2012, from http://nlp.stanford.edu/software/lex-parser.shtml.

Hand, D. J., P. Smyth, et al. (2001). Principles of data mining, MIT Press.

Harris, Z. (1968). Mathematical structures of language, Interscience Publishers.

Hearst, M. A. (1992). Automatic acquisition of hyponyms from large text corpora. Proceedings of the 14th conference on Computational linguistics - Volume 2. Nantes, France, Association for Computational Linguistics: 539-545.

Jackendoff, R. (1987). "The Status of Thematic Relations in Linguistic Theory." Linguistic Inquiry 18(3): 369-411.

Khoo, C. S. G., S. Chan, et al. (2000). Extracting causal knowledge from a medical database using graphical patterns. Proceedings of the 38th Annual Meeting on Association for Computational Linguistics. Hong Kong, Association for Computational Linguistics: 336-343.

Lakoff, G. and M. Johnson (2003). Metaphors We Live By, University Of Chicago Press.

Langacker, R. (1987). Foundations of Cognitive Grammar. Vol. 1: Theoretical Prerequisites. Stanford, Stanford University Press.

Langacker, R. W. (1993). "Reference-point constructions." Cognitive Linguistics 4: 1.

Langacker, R. W. (1994). "Structural Syntax: The View from Cognitive Grammar." Semiotique 6/7: 69-84.

Langacker, R. W. (2008). Cognitive Grammar: A Basic Introduction. New York, Oxford University Press.

Miller, G. A. (1990). "Nouns in WordNet: A Lexical Inheritance System." International Journal of Lexicography 3(4): 245-264.

Narrog, H. (2005). "On defining modality again." Language Sciences 27(2): 165-192.

Nie, J.-Y. (2003). "Query expansion and query translation as logical inference." J. Am. Soc. Inf. Sci. Technol. 54(4): 335-346.

Nivre, J. (2005). Dependency Grammar and Dependency Parsing.

Rosch, E. (1975). "Cognitive reference points." Cognitive Psychology 7(4): 532-547.

Saint-Dizier, P. (2006). Introduction to the Syntax and Semantics of Prepositions. Syntax and Semantics of Prepositions. P. Saint-Dizier, Springer Netherlands. 29: 1-25.

Saxena, A. B. and A. Wegmann (2012). From Composites to Service Systems: The Role of Emergence in Service Design. IEEE International Conference on Systems, Man, and Cybernetics. Seoul, Korea.

Sedgewick, R. (2011). Algorithms. Boston, MA, Addison-Wesley.

Simon, H. A. (1962). "The Architecture of Complexity." Proceedings of the American Philosophical Society 106(6): 467-482.

Sowa, J. F. (1984). Conceptual structures: Information processing in mind and machine. Reading, MA, Addison-Wesley.

Talmy, L. (1988). "Force Dynamics in Language and Cognition." Cognitive Science 12(1): 49-100.

Tarjan, R. (1972). "Depth-First Search and Linear Graph Algorithms." SIAM Journal on Computing 1(2): 146-160.

Tesniere, L. (1959). Elements de syntaxe structurale. Paris, Klincksieck.

Tribushinina, E. (2008). Cognitive reference points: semantics beyond the prototypes in adjectives of space and colour. Doctoral Thesis, Leiden University.

Wilson, D. and D. Sperber (1993). "Linguistic form and relevance." Lingua 90(1–2): 1-25.

Winston, M., R. Chaffin, et al. (1987). "A Taxonomy of Part-Whole Relations." Cognitive Science 11(4): 417-444.

Zarri, G. (1997). Conceptual modelling of the “meaning” of textual narrative documents. Foundations of Intelligent Systems. Z. Ras and A. Skowron, Springer Berlin / Heidelberg. 1325: 550-559.