Verifying the Usefulness of a Classification System of Best Practices

Meshari M. S. Alwazae, Erik Perjons and Harald Kjellin

Department of Computer and System Sciences, Stockholm University, Isafjordsgatan 39, Stockholm, Sweden

Keywords: Knowledge Management, Knowledge Transfer, Best Practice, Classification System.

Abstract: Transfer of best practices (BPs) within an organization can significantly enhance knowledge transfer.

However, in order to manage a large number of BPs within an organization, there needs to be some structure

for how to classify the BPs. In this paper, we present a best practice (BP) system for classifying BPs and

evaluate how easy the system is to use for classifying best practices. The research approach applied was

design science, which is characterized by designing an artifact, in this case a BP classification system, and

evaluating it. The evaluation was carried out by asking Master’s students to collect two BPs from

organizations and subsequently having them classify the BPs according to the BP classification system.

They were also asked to motivate their choices during their act of classification. The results of the

evaluation are promising: the BP system could be used for classifying BPs since students utilized all

possible values of the BP system during the act of classification. Also, it was easy for the students to justify

their classifications, which might be interpreted as an ease of using the BP classification system.

1 INTRODUCTION

The importance of managing organizational

knowledge, including the needs of knowledge

transfers within an organisation, has been receiving

research attention during the last two decades (Cross

and Baird, 2000; Davenport et al., 1998; Desouza

and Evaristo, 2003; Dinur et al., 2009; Hansen,

2002; Zack, 1999). The studies of the factors that

enable transfer of best practices (BPs) within an

organization can significantly enhance the

perception of knowledge transfer if knowledge

sharing is critical to an organization’s success. Other

directions of research into BPs include examining

whether these practices will actually work

sufficiently in the adopting organizations (Davies

and Kochhar, 2002; Zairi and Ahmed, 1999).

At its heart, BP aims to reuse the best ways to solve

a problem or handle an issue. It is about gaining the

benefits of previous experiences to define possible

ways to conduct activities and solve problems

(Axelsson et al., 2011). Organizations use BP to

avoid making the same mistakes, while learning

from others’ experiences in order to produce equally

superior results. BPs should also be easily copied

and transferred throughout the organization. The

logic behind this is that any practice that has been

proven over time to be effective or valuable for one

organization might bring similar successful

outcomes when implemented in another (Fragidis

and Tarabanis, 2006). For instance, a central

committee of managers evaluated BPs at Ericsson

(Watson, 2007). Those managers met quarterly to

decide which of the practices were best suited to be

shared throughout the organization, thereby

converting all departments’ practices. This gave

Ericsson a competitive advantage in their production

processes through a high degree of standardization.

In order to manage a large number of BPs in an

organization, there needs to be some structure for

how to classify the BPs. Such a structure will

help users easily find appropriate BPs as

well as provide them with an understanding of

how BPs are related to other BPs. Such a structure

will also provide the identified BPs with context, an

important feature for successful implementation of

BPs. Furthermore, such a structure will also provide

an organization with an understanding about which

areas within the organization have a limited number

of BPs. The organization can then analyze this lack

of BPs in certain areas and decide if additional BPs

are needed. In this paper, such a structure is called a

BP classification system.

The goal of this paper is to present a BP

classification system for categorizing BPs and

to evaluate how easily the system can be used to classify BPs. The BP system was presented in Alwazae et al. (2013) but not evaluated. In this paper, the focus is on evaluating the BP system.

M. S. Alwazae M., Perjons E. and Kjellin H. Verifying the Usefulness of a Classification System of Best Practices. DOI: 10.5220/0004549604050412. In Proceedings of the International Conference on Knowledge Discovery and Information Retrieval and the International Conference on Knowledge Management and Information Sharing (KMIS-2013), pages 405-412. ISBN: 978-989-8565-75-4. Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

The structure of the paper is as follows. In

section 2, we present the research approach and in

section 3 we discuss the designed artifact, i.e. the BP

classification system. Section 4 describes the

evaluation of the system, while the analysis and

discussion are presented in section 5. Related research

is discussed in section 6 followed by a conclusion in

section 7.

2 RESEARCH APPROACH

The research approach used is design science

(Hevner et al., 2004). It is characterized by the

design of artifacts (e.g. constructs, models,

frameworks, methods, prototypes and IT systems).

According to Hevner et al. (2004), design science

originated in engineering disciplines and aims to

create innovative artifacts for solving practical

problems. Therefore, design science research is an

activity aimed at generating and testing hypotheses

about the future, i.e. artifacts that can, when

introduced, solve problems for an organization

(Bider et al., 2012). Furthermore, for an artifact to

count as a design science solution, it needs to be

generic in nature, i.e. the artifact solution should be

applicable to not only one unique situation, but to a

class of similar situations, cf. Principle 1 of (Sein et

al., 2011).

Hevner et al. (2004) emphasize the importance of

evaluating a designed artifact in design science

research. Evaluating an artifact means evaluating its

ability to solve a practical problem. However,

evaluating an artifact in design science is difficult.

First, in order to evaluate an artifact in an

organization it has to be applied and then evaluated

to determine if the artifact has solved the problem at

hand. Second, the artifact needs to solve the problem

in many different organizations, since an artifact

needs to be a generic solution to qualify as a design

science solution.

Different strategies and approaches have been

presented to manage evaluations of artifacts in

design science. There are two main evaluation

strategies: ex ante and ex post evaluations (Pries-

Heje et al., 2008). Ex ante evaluation means that the

artifact is evaluated without being used in an

organization, while ex post evaluation requires the

artifact to be employed in an organization. An ex

ante evaluation often employs interviews, where

users express their views on the artifact. While ex

ante evaluations are often weak, ex post evaluations

may be considerably stronger. Such evaluations

require that an artifact is actually put into operation

before being evaluated. Furthermore, Sein et al.

(2011) point out that artifacts in the IT sector

are typically developed and shaped by their

interaction within an organizational context. Thus,

design science research needs to interleave

concurrently the activities of creating an artifact,

introducing it into practice, and evaluating it.

In this paper, we have designed a KM system (in

the form of a model for classifying BPs) and

evaluated the artifact by using an ex ante evaluation.

The focus of the evaluation is not on the BP

system’s usefulness for an organisation, which will

be a future evaluation. Instead, the focus is on how

easy it is to classify BPs according to the system.

Therefore, this preliminary evaluation should be

seen as the first evaluative step. The goal of this first

step is to enhance the quality of the artifact. Later

steps will include evaluations which investigate if

the BP system is useful for an organisation.

Peffers et al. (2007) have presented a process for

design science research consisting of a number of

activities as described below. We used this process

to describe our work according to these activities:

1. Identify problems and motivation: The first

activity in the design science process according

to Peffers et al. (2007) is to identify a business

problem that motivates why the artifact (i.e. in

our case the BP classification system) needs to

be designed and developed. The business

problem in our research is the lack of support for

managing a large number of BPs within an

organization, including finding appropriate BPs,

relating BPs to each other and increasing the

understanding of which areas of an organization

are lacking BPs.

2. Define objectives of a solution: The second

activity defines desirable requirements on the

artifact that specify how the artifact solves the

problem. These requirements will guide the

design and development of the artifact and will

also form a basis for its evaluation. The main

requirement in our research is that the system should be easy

to use for classifying BPs.

3. Design and develop: The third activity describes

the artifact in focus including how it was

designed and developed. In our research, a BP

classification system was developed based on an

in-depth literature survey of existing BP

frameworks. Based on this survey, presented in

Alwazae et al. (2013), we identified a set of

variables and values for classifying BPs.

4. Demonstration: The fourth activity aims at

showing the use of the artifact in an illustrative

or real-life case, thereby proving feasibility of

the artifact. In this paper, a demonstration has not

been carried out since the focus is on evaluation.

5. Evaluation: The fifth activity determines how

well the artifact solves the problem taking into

consideration solution objectives (i.e. the defined

requirements). In our research, the evaluation

was carried out by asking 20 students to each

collect two BPs from companies, asking them to

classify the BPs, i.e. apply the BP classification system to

the collected BPs, and asking them to motivate

each choice of classification.

3 THE DESIGNED ARTIFACT: THE BP SYSTEM

In this section, we describe the designed artifact, i.e.

the designed BP classification system (Alwazae et

al., 2013). The BP classification system consists of a

set of features, called variables, and possible values

for each variable. The classification is based on the

argument that knowledge and BP are embedded

within a set of contextual dimensions that are critical

for the organization’s ability to organize, utilize and

extract value from the knowledge. The BP

classification system was designed based on a

literature study in which we focused on identifying

candidate papers by means of reviewing literature of

BP. To be a candidate, a paper needed to present

features for a BP system. We ended up with 26

candidate papers and, based on features (i.e.

variables) identified in these papers, designed a

BP classification system. The variables of the BP

system were 1) degree of cooperation, 2)

organizational level, 3) scope, 4) completeness of

description, 5) degree of quantification, 6)

implementation areas, 7) level of formalization.

Each variable had a set of possible values that can

vary depending on knowledge characteristics. Each

variable and value was explicitly defined. See the

Appendix for a full description of each

variable and its values.
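The structure described above, seven variables that each take one value from a fixed set, can be sketched as a simple lookup table with a validation function. This is only an illustrative sketch: the names `BP_SYSTEM`, `validate_classification` and `example_bp` are assumptions introduced here, and the example BP is hypothetical. The variable and value labels are taken from this section and the Appendix.

```python
from typing import Dict, List

# Variables and their possible values, as listed in this section and the Appendix.
BP_SYSTEM: Dict[str, List[str]] = {
    "Degree of cooperation": ["Competitive", "Collaborative"],
    "Organisational level": ["Operational", "Tactical", "Strategy"],
    "Scope": ["Local Enterprises", "Global enterprises"],
    "Completeness of description": ["Complete with context", "Basic parts"],
    "Degree of quantification": [
        "Qualitative measures", "Quantitative measures", "Mixed measures",
    ],
    "Implementation areas": ["Technical area", "Business area", "Management area"],
    "Level of formalization": ["Informal", "Semi-formal", "Formal"],
}


def validate_classification(classification: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means the classification is valid."""
    problems: List[str] = []
    for variable, allowed in BP_SYSTEM.items():
        if variable not in classification:
            problems.append(f"missing variable: {variable}")
        elif classification[variable] not in allowed:
            problems.append(
                f"unknown value for {variable}: {classification[variable]}"
            )
    for variable in classification:
        if variable not in BP_SYSTEM:
            problems.append(f"unknown variable: {variable}")
    return problems


# Hypothetical BP classification, invented purely for illustration.
example_bp = {
    "Degree of cooperation": "Collaborative",
    "Organisational level": "Operational",
    "Scope": "Local Enterprises",
    "Completeness of description": "Basic parts",
    "Degree of quantification": "Qualitative measures",
    "Implementation areas": "Technical area",
    "Level of formalization": "Semi-formal",
}

print(validate_classification(example_bp))
```

A value outside the predefined set is reported as a problem rather than silently accepted, which is one way a repository could flag practices that are difficult to classify.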

Some of the advantages of the BP classification

system are that it can help an organization identify

its BPs and re-index its practices in

order to discover which practices are difficult to

classify. It helps to document the organization’s

practices making it easier to search and utilize BPs.

Additionally, it will prevent reinventing the wheel

when similar problems present themselves. Finally,

it decreases repetition of BP documentation since

there will be a repository of BPs to be shared within

the organization (ibid.).

4 EVALUATION OF THE ARTIFACT: THE BP SYSTEM

In this section, we describe the approach used to

evaluate how easily the BP system was utilized to

classify BPs. We also describe the

result of the evaluation. The evaluation was carried

out according to the steps presented below:

Step 1: We conducted the evaluation with 20

students who were attending a Master’s level

Knowledge Management course at the Department

of Computer and System Sciences at Stockholm

University. In this first step, we asked the students to

contact organizations and collect two written

descriptions of BPs from the organizations. The

descriptions needed to be structured according to a

template distributed electronically and in-person to

the students. The template highlighted important

parts of BP: background, problem, goal, method and

solution. The task, as well as the concept of BP, was

presented to the students during a lecture.

Step 2: The next step was to ask the students to

evaluate how easy it was to categorize the BP

according to the system. We created a structured

questionnaire, in which the students classified their

collected BPs according to the values and variables

as well as their motivation behind their chosen

values. This was done in a two-hour seminar. Then,

we examined incomplete answers or missing

answers by cross-referencing their responses with

their original collected BPs. In case of ambiguities in

their answers, students were asked for clarification

and complementary additions.

Step 3: In this step, we compiled and analyzed the

results from the evaluation. We found that the

students collected BPs from twenty organizations of

varying sizes, operating in diverse domains

such as IT, manufacturing, banking and government.

In this evaluation study, we used a convenience

sampling method, as we did not control the choice of

organizations involved in our study. We allowed the

students to choose any organization, whether small,

medium or large, and in any domain. The only

requirement was that the organization studied should

focus on KM.

VerifyingtheUsefulnessofaClassificationSystemofBestPractices

407

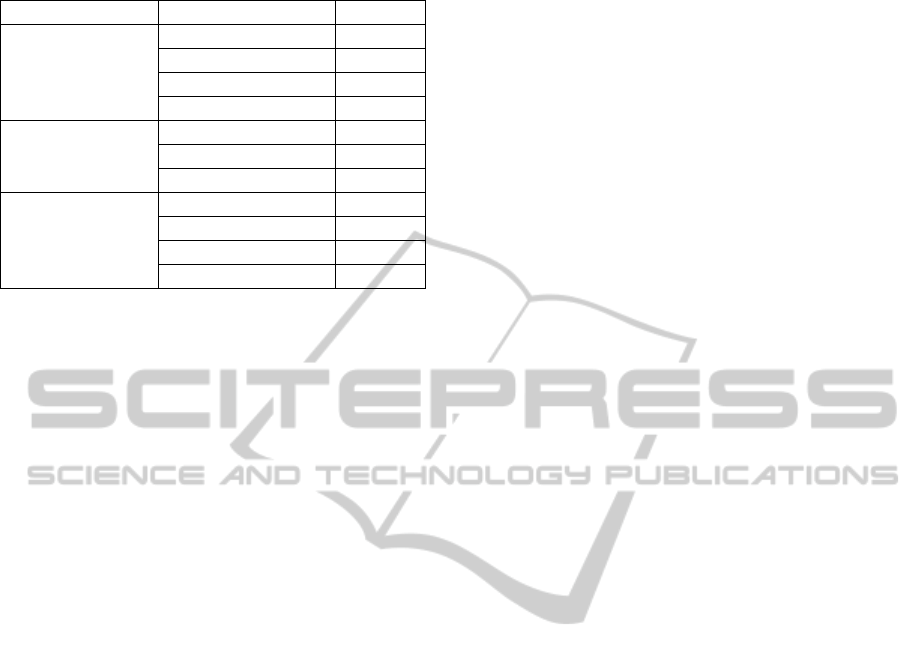

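Table 1: Organizations studied.

| Category | Type | Number |
|---|---|---|
| Organization | IT | 13 |
| | Manufacturing | 9 |
| | Public | 4 |
| | Other | 8 |
| Size | Small | 8 |
| | Medium | 12 |
| | Large | 14 |
| Interviewee | CIO | 2 |
| | General Manager | 10 |
| | Manager | 13 |
| | Operational level | 9 |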

Table 1 presents the details of the organizations

from which the BPs were collected. Due to the

sensitivity of the material presented in this paper, we

do not name these organizations. However, some of

the organizations are major and well-known

multinational organizations.

We are aware of the fact that when one involves

students, the validity of the results may be strongly

jeopardized. To assure the quality of the data

collection, we prepared the Master’s students in

several consecutive steps. We first gave a lecture on

the subject and later, during a seminar, presented the

questionnaire and explained the motivation behind

each of its questions.

5 ANALYSIS AND DISCUSSION

In this section, we present the results of the

classification of BPs and our evaluation. We also

compare the results from the classification carried

out by students with the results from the literature

study presented in Alwazae et al. (2013). Finally, we

present an analysis of the evaluation.

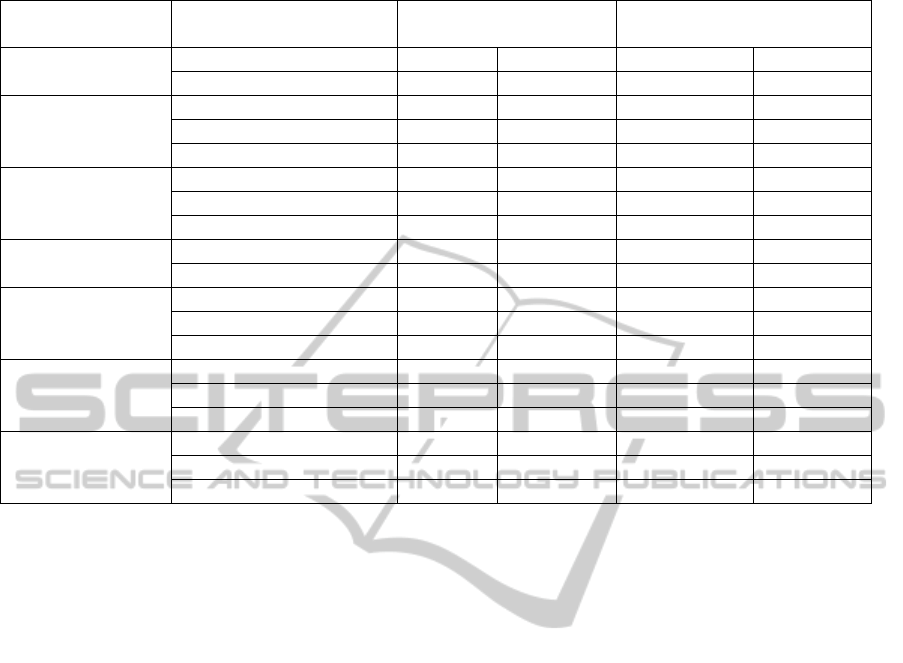

The results of the students’ classification of the

BPs, according to the BP system, are presented in

Table 2, under the heading “Occurrence in collected

BP out of 40”. The results of the students’

classification of their BPs are also compared with

the results from the literature study, see heading

“Occurrence in literature out of 26”. The literature

study was carried out as part of designing the BP

classification system (see Alwazae et al., 2013),

which classified papers and presented the occurrence

of values of each variable that signified the nature of

the BP and is presented in Table 2.

During the students’ classification of their BPs,

they originally encountered difficulties in

understanding the classification system with respect

to choosing the appropriate values for the variables

that suited their practice domain. However, after

refining and explaining the variables and values

presented in the BP system, they could easily

classify their BPs.

The results show that all values of the variables

were identifiable and utilized, which shows that the

BP system could work as an instrument for

classifying BPs. If only some values were present

and recognized, the BP system would have been of

limited aid in classification.
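The claim that every value was used can be checked mechanically from the industry counts reported in Table 2. The following is a minimal sketch under that assumption; the names `INDUSTRY_COUNTS`, `unused_values` and `totals_per_variable` are introduced here for illustration only.

```python
from typing import Dict, List, Tuple

# Industry occurrences per value, copied from Table 2 (40 collected BPs).
INDUSTRY_COUNTS: Dict[str, Dict[str, int]] = {
    "Degree of cooperation": {"Competitive": 24, "Collaborative": 16},
    "Organisational level": {"Operational": 25, "Tactical": 5, "Strategy": 10},
    "Scope": {
        "Departmental enterprise": 8,
        "Local Enterprises": 22,
        "Global enterprises": 10,
    },
    "Completeness of description": {"Complete with context": 12, "Basic parts": 28},
    "Degree of quantification": {
        "Qualitative measures": 19,
        "Quantitative measures": 14,
        "Mixed measures": 7,
    },
    "Implementation areas": {
        "Technical area": 19,
        "Business area": 15,
        "Management area": 6,
    },
    "Level of formalization": {"Informal": 12, "Semi-formal": 16, "Formal": 12},
}


def unused_values(counts: Dict[str, Dict[str, int]]) -> List[Tuple[str, str]]:
    """List every (variable, value) pair that was never chosen."""
    return [
        (variable, value)
        for variable, values in counts.items()
        for value, n in values.items()
        if n == 0
    ]


def totals_per_variable(counts: Dict[str, Dict[str, int]]) -> Dict[str, int]:
    """Sum the occurrences per variable; each should equal the number of BPs."""
    return {variable: sum(values.values()) for variable, values in counts.items()}


print(unused_values(INDUSTRY_COUNTS))        # prints []
print(totals_per_variable(INDUSTRY_COUNTS))  # every total is 40
```

An empty list of unused values, together with each variable summing to 40, is consistent with every collected BP having received exactly one value per variable.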

The results of the students’ classification of their

BPs were compared with the results from the literature

study, see Table 2. The comparison showed many

similarities between the literature and the students’ classifications.

This might show a legitimate and sound BP system

for classifying BPs. However, further investigation

needs to be carried out to make more conclusive

statements regarding the system’s validity and usability.

The comparison also showed variance regarding

some values such as ‘technical area’, which occurred

in 8% of the literature and 47.5% of the industry BPs. Also,

the value ‘informal’ occurred in 70% of the

literature and only 30% of the industry BPs. One way to

account for these variances may be that the students

came from a socio-technical department; a large

group of students were collecting BPs from software

companies, see Table 1.
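The variance discussed above can be reproduced from the raw counts in Table 2. The sketch below recomputes the shares for the two values mentioned and the difference in percentage points. The function names `share` and `compare` are assumptions made for illustration. Note that the unrounded literature shares (for example 18 of 26) differ slightly from the rounded percentages printed in Table 2.

```python
from typing import Tuple

LITERATURE_TOTAL = 26  # papers in the literature study
INDUSTRY_TOTAL = 40    # BPs collected by the students


def share(count: int, total: int) -> float:
    """Percentage of `total` represented by `count`."""
    return 100.0 * count / total


def compare(lit_count: int, ind_count: int) -> Tuple[float, float, float]:
    """Return (literature %, industry %, industry minus literature in points)."""
    lit_pct = share(lit_count, LITERATURE_TOTAL)
    ind_pct = share(ind_count, INDUSTRY_TOTAL)
    return lit_pct, ind_pct, ind_pct - lit_pct


# Counts (literature, industry) copied from Table 2.
rows = {
    "Technical area": (2, 19),
    "Informal": (18, 12),
}

for value, (lit_count, ind_count) in rows.items():
    lit_pct, ind_pct, diff = compare(lit_count, ind_count)
    print(
        f"{value}: literature {lit_pct:.1f}%, "
        f"industry {ind_pct:.1f}%, difference {diff:+.1f} points"
    )
```

Both values differ by roughly 39 percentage points between the two sources, in opposite directions.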

The students were also asked if they considered

some variables or values to be missing. In general,

the students claimed the BP system was exhaustive.

However, some students identified one additional

value pivotal for classifying BP. This value, within

the variable “Scope”, was department specific

(named “Departmental enterprise” in Table 2). The

students classified 8 BPs according to this value.

We define “Departmental enterprise” BPs as

focusing on specific work related tasks within a

department. However, this value does not occur

in the 26 collected papers, which explains why it

was not an option during classification.

We found that when examining organizational

BPs, an unforeseen value was documented, as was

seen with the value “Departmental enterprise.”

Although the students were able to identify a new

value, the identification of a new variable did not

occur. The reason for this could be that the values of

the variables may depend on the context of the

practice and may become more evident in future,

more comprehensive, studies.

Table 2: Result of the classification from literature (Adapted from Alwazae et al., 2013) and from industry.

| Variable | Value | Literature occurrence out of 26 | Literature % | Industry occurrence in collected BP out of 40 | Industry % |
|---|---|---|---|---|---|
| Degree of cooperation | Competitive | 19 | 73% | 24 | 60% |
| | Collaborative | 7 | 27% | 16 | 40% |
| Organisational level | Operational | 17 | 65% | 25 | 62.5% |
| | Tactical | 6 | 24% | 5 | 12.5% |
| | Strategy | 3 | 11% | 10 | 25% |
| Scope | Departmental enterprise | 0 | 0% | 8 | 20% |
| | Local Enterprises | 17 | 65% | 22 | 55% |
| | Global enterprises | 9 | 35% | 10 | 25% |
| Completeness of description | Complete with context | 4 | 15% | 12 | 30% |
| | Basic parts | 22 | 85% | 28 | 70% |
| Degree of Quantification | Qualitative measures | 19 | 73% | 19 | 47.5% |
| | Quantitative measures | 5 | 20% | 14 | 35% |
| | Mixed measures | 2 | 8% | 7 | 17.5% |
| Implementation areas | Technical area | 2 | 8% | 19 | 47.5% |
| | Business area | 18 | 70% | 15 | 37.5% |
| | Management area | 6 | 23% | 6 | 15% |
| Level of formalization | Informal | 18 | 70% | 12 | 30% |
| | Semi-formal | 5 | 19% | 16 | 40% |
| | Formal | 3 | 11% | 12 | 30% |

The students were also asked to explain the

motivation behind their choice of values. The

students justified all the chosen values with some

exceptions (only 15 values out of 280 were not

clearly justified). In a majority of the cases (265 out

of 280), we interpreted student motivations as

convincing. For example, for the choice of value

"Operational" (for the variable "Organisational

level") a typical motivation was "the BP is focusing

on an operational routine", and for the value

"Management" (for the variable "Implementation

area") a typical motivation was "the BP is supporting

decision making for upper management". From

these results we conclude that it was easy for

students to choose a certain value.
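The figures in this paragraph follow from the study design: 40 collected BPs, each classified on 7 variables, give 280 chosen values. A short worked calculation, with variable names chosen here only for illustration:

```python
N_BPS = 40        # BPs collected by the students
N_VARIABLES = 7   # variables in the BP classification system

total_values = N_BPS * N_VARIABLES        # 280 chosen values in total
not_justified = 15                        # values without a clear justification
justified = total_values - not_justified  # 265 values with a convincing motivation

justified_rate = 100.0 * justified / total_values
print(f"{justified} of {total_values} values justified ({justified_rate:.1f}%)")
```

Under these counts, roughly 95% of the chosen values were accompanied by a justification that was judged convincing.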

6 RELATED RESEARCH

In literature, there are different BP systems for

different domains. For instance, in the enterprise

architecture domain, The Open Group Architecture

Framework (The Open Group, 2011) and the Zachman

model (Zachman, 2008) are well known systems,

while in the quality management domain the popular

systems are ISO 9000 (Peach, 2003) and Six Sigma

(Pyzdek and Keller, 2009). For the IT enterprise

management and IT governance domains, the

Control Objectives for Information and Related

Technology (COBIT) framework is more suitable

(ISACA, 2012). Also, the Information Technology

Infrastructure Library (ITIL) is a popular framework

for IT services (Hendriks and Carr, 2002). The

Balanced Scorecard is a popular framework for

measuring the performance of an organization

(Martinsons et al., 1999). Common among these

frameworks is their summarization of many

experiences describing how work should be

organized between people within a particular context

(Graupner et al., 2009). Although these frameworks

do not directly address how BP is organized,

classified and performed, they can, however, offer

some guidance.

The literature about BP is mainly descriptive.

Some papers describe the BPs that an organization

has in place. Others are limited to describing the

dissemination of BP without discussing in detail the

necessary context related to the practices (Davies

and Kochhar, 2002). The description of necessary

background components (i.e. context) of BP would

help organizations determine whether a BP is

appropriate for their business or not. For instance,

within the ITIL framework, there is a lifecycle of phases,

with each phase including a number of specific

processes such as incident management and supplier

management (Nezhad et al., 2010). The descriptions

of these processes are directed at employees and are to

be followed in their respective work domains. Such

a context, in this case the phases of the life cycle,

will support the classification of the processes, and

could be used for classifying other BPs.

7 CONCLUSIONS

In this paper, a BP system for classifying BPs has

been evaluated. The evaluation shows promising

results. First, all values of the variables were used by

students when classifying BPs, which shows that the

BP system can work as an instrument for classification.

Second, the students could justify their choice of

values, which suggests the BP system is easy to use

for classification. Third, the comparison to the

classification of research papers in the BP area

shows similar classification results. This indicates

comprehensiveness when classifying BPs. Fourth,

the students claimed that the BP system was

exhaustive.

Our next step of further refining and evaluating

the BP system will be carried out according to the

design science strategy presented by Sein et al.

(2011). This artifact will be further developed in an

iterative evaluation process with feedback from BP

experts to provide an integrative perspective of

quality measures or checklist for the usefulness of

BP implementation. Therefore, introducing this BP

system into practice and evaluating it is a necessary

step for its success.

REFERENCES

Alwazae, M., Kjellin, H., Perjons, E., 2013. A synthesized

classification system for best practices, VINE The

Journal of Information and Knowledge Management

Systems, Emerald Group Publishing Limited

(submitted).

Asrofah, T., Zailani, S., Fernando, Y., 2010. Best practices

for the effectiveness of benchmarking in the

Indonesian manufacturing companies, Emerald Group

Publishing Limited, Benchmarking: An International

Journal, Vol. 17 No. 1, pp. 115-143.

Axelsson K., Melin U., Söderström F., 2011. Analyzing

best practice and critical success factors in a health

information system case – Are there any shortcuts to

successful IT implementation?, 19th European

Conference on Information Systems, Tuunainen V,

Nandhakumar J, Rossi M, Soliman W (Eds.) Helsinki,

Finland, pp. 2157-2168.

Bider, I., Johannesson, P., Perjons, E., 2012. Design

science research as movement between individual and

generic situation-problem-solution spaces, In

Baskerville R., De Marco, M., Spagnoletti, P. (eds.)

Organizational Systems. An Interdisciplinary

Discourse, Springer.

Cross, R., Baird, L., 2000. Technology is not Enough:

Improving Performance by Building Organizational

Memory, Sloan Management Review, pp. 69–78.

Davenport, T.H., De Long, D.W., Beers, M.C., 1998.

Successful knowledge management projects. Sloan

Management Review pp. 43–57 Winter.

Davies, A. J., Kochhar, A. K., 2002. Manufacturing best

practice and performance studies: a critique,

International Journal of Operations & Production

Management, MCB University Press, Vol. 22 No. 3,

pp. 289-305.

Desouza, K., Evaristo, R., 2003. Global knowledge

management strategies. European Management

Journal 21, No. 1, pp. 62–67.

Dinur, A., Hamilton, R., Inkpen, A., 2009. Critical context

and international intrafirm best-practice transfers,

Elsevier Inc, Journal of International Management,

Vol. 15, pp. 432-446.

Fragidis, G., Tarabanis, K., 2006. From Repositories of

Best Practices to Networks of Best Practices,

International Conference on Management of

Innovation and Technology, IEEE, pp. 370-374.

Graupner, S., Motahari-Nezhad, H. R., Singhal, S., Basu,

S., 2009. Making processes from best practice

frameworks actionable, Enterprise Distributed Object

Computing Conference Workshops, 13th IEEE, ISBN:

978-1-4244-5563-8, 1-4 September 2009, pp. 25-34.

Hansen, M. T., 2002. Knowledge networks: explaining

effective knowledge sharing in multiunit companies.

Organization Science Vol. 13, pp. 232–248.

Hendriks, L., Carr, M., 2002. ITIL: Best Practice in IT

Service Management, in: Van Bon, J. (Ed.), The

Guide to IT Service Management, Vol. 1, London,

pp. 131-150.

Hevner, A. R., March, S. T., Park, J., 2004. Design

Science in Information Systems Research, MIS

Quarterly, Vol. 28, Iss. 1, pp. 75–105.

ISACA., 2012. The Control Objectives for Information

and related Technology COBIT. (Online) 2012.

(Cited: March 10, 2013.) http://www.isaca.org/

COBIT/Pages/default.aspx.

Martinsons, M., Davison, R., Tse, D., 1999. The balanced

scorecard: a foundation for the strategic management

of information systems, Decision Support Systems,

Vol. 25. No.1, pp. 71-88.

Netland, T., Alfnes, E., 2011. Proposing a quick best

practice maturity test for supply chain operations,

Measuring Business Excellence, Vol. 15 No. 1, pp. 66-76.

Reddy, W., McCarthy, S., 2006. Sharing best practice,

International Journal of Health Care Quality

Assurance, Vol. 19 No. 7, pp. 594-598.

Nezhad, H. R. M., Graupner, V., Bartolini, C., 2010. A

Framework for Modeling and Enabling Reuse of Best

Practice IT Processes, Business Process Management

Workshops pp. 226-231

Peach, R. W., 2003. The ISO 9000 handbook, McGraw-

Hill, 2003.

Peffers, K., Tuunanen, T., Rothenberger, M., Chatterjee,

S., 2007. A Design Science Research Methodology for

Information Systems Research, Journal of Management

Information Systems, Vol. 24, Iss. 3, pp. 45-77.

Pries-Heje, J., Baskerville, R., Venable, J., 2008.

Strategies for Design Science Research Evaluation.

ECIS 2008 Proceedings.

Pyzdek, T., Keller, P., 2009. The Six Sigma Handbook: A

complete guide for green belts, black belts, and

managers at all levels, McGraw-Hill Professional, 3rd edition.

Ringsted, C., Hodges, B., Scherpier, A., 2011. The

research compass: an introduction to research in

medical education: AMEE guide No. 56. Medical

Teacher Vol. 33, pp. 695-709.

Sein, M. K., Henfridsson, O., Purao, S., Rossi, M.,

Lindgren, R., 2011. Action Design Research, MIS

Quarterly, Vol. 35, Iss. 1, pp. 37–56.

The Open Group, 2011. The Open Group Architecture

Framework (TOGAF). [Online] 9.1, 2011. [Cited:

March 15, 2013.] http://www.opengroup.org/togaf/.

Watson, G. H., 2007. Strategic benchmarking reloaded

with six sigma: improving your company's

performance using global best practice, John Wiley &

Sons, Inc. New York, NY, USA ISBN:0470069082.

Xu, Y., Yeh, C., 2010. An Optimal Best Practice Selection

Approach Computational Science and Optimization

(CSO), 2010 Third International Joint Conference on

Computational Science and Optimization, No. 2, 28-

31 May 2010, pp. 242-246.

Zachman, J. A., 2008. The Zachman International

Enterprise Architecture. [Online] 2008. [Cited: March

10, 2013.] http://www.zachman.com/about-the-

zachman-framework.

Zack, M. H., 1999. Managing Codified Knowledge. Sloan

Management Review 45–58 Summer.

Zairi, M., Ahmed, P., 1999. Benchmarking maturity as we

approach the millennium?, Total Quality Management,

Taylor & Francis Ltd, Vol. 10 No. 4-5, pp.810-816.

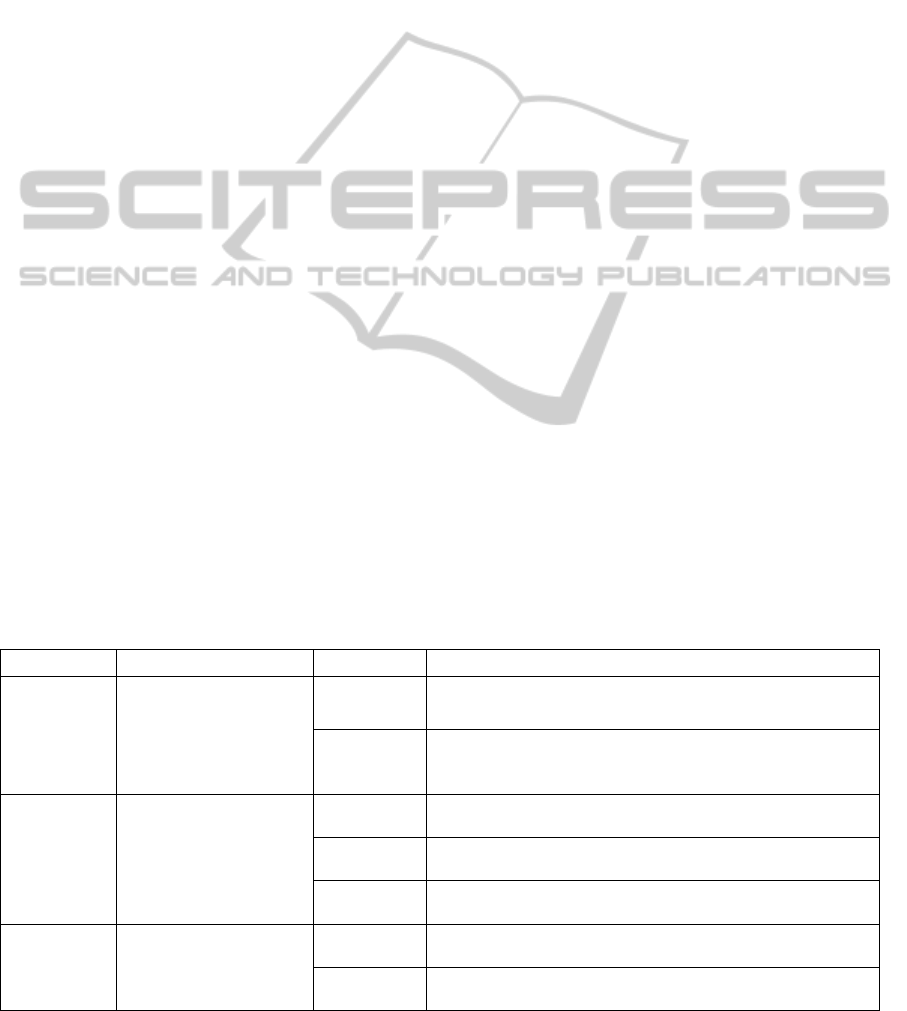

APPENDIX

Variable and values definition (Adapted from Alwazae et al., 2013).

Variable

Definition

Value Definition

Degree of

cooperation

Degree of cooperation

means the practice is

focusing on increasing

competitive edge or

increasing collaboration

Competitive

Competitive means that best practice is focus on making a,

practice, a product, or a service more competitive.

Collaborative

Collaborative means that best practices is focusing on

collaborative knowledge sharing for creativity and

ingenuity/innovativeness

Organisational

level

Organisational level means

the level in an organization

that the best practice

focuses on

Operational

Operational means that the best practice focuses on a particular

operational routine or business process

Tactical

Tactical means that the best practice focuses on tactical short-term

goals

Strategy

Strategy means that the best practice focuses on more overarching

strategic long-term goals

Scope

Scope means the area or

extension that the best

practices focusing on

Local

Enterprises

Local Enterprises means that the best practice focusing on one

single organization

Global

enterprises

Global enterprises means that the practice is focusing on a

multinational organization

VerifyingtheUsefulnessofaClassificationSystemofBestPractices

411

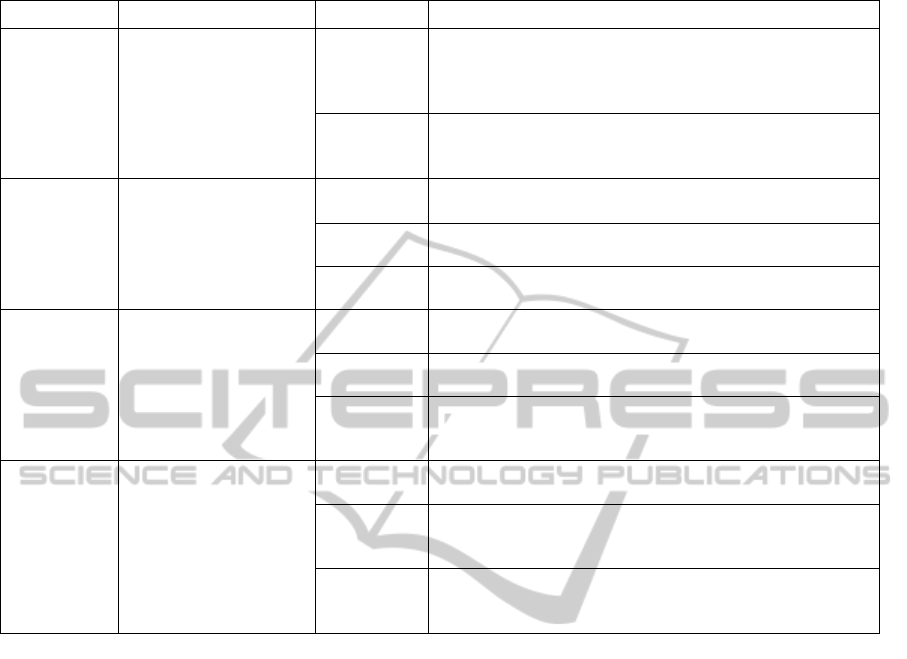

V

ariable and values definition (Adapted from Alwazae et al., 2013) (Cont.).

Variable

Definition

Value Definition

Completeness

of description

Completeness of

description means if the

description contains a

necessary context for using

the practice or just basic

parts

Complete

with context

Complete with context means that the practice can be used without

the user being familiar with the context because it contain the

context (that is, when to apply, where to apply, who applies it,

why to apply, and how to apply)

Basic parts

Basic parts means that the user of the practice must be familiar

with the context in order to know how to use the practice, that is, it

mainly includes how to apply it

Degree of

Quantification

Degree of quantification

means the type of valid

measures assigned to the

best practices

Qualitative

measures

Qualitative measures means that interpretive, soft, measures are

assigned to practices

Quantitative

measures

Quantitative measures means that numerical, hard, values are

assigned to practices

Mixed

measures

Mixed measures means that both soft and hard measures are

assigned to practices

Implementation

areas

Implementation area

means the area that a best

practices is aimed to be

applied in

Technical

area

Technical area means that the area of application of the best

practices is technical

Business area

Business area means that the area of application of the best

practices is including some kind of business processes

Management

area

Management area means that the area of application of the best

practices is geared to upper-management and organizational

leadership and governance

Level of

formalization

Level of formalization

means the level of

formalization of the best

practices

Informal

Informal means that the best practices have the form of soft,

informal suggestions

Semi-formal

Semi-formal means that best practices have the form of directing

functional considerations, i.e. business rules, via established

organizational procedures

Formal

Formal means that best practices have the form of formalized

procedure with official adaptation and often embedded in IT

implementation of best practices, such as ERP or BPM systems
