Continuous Test-Driven Development

A Novel Agile Software Development Practice and Supporting Tool

Lech Madeyski¹ and Marcin Kawalerowicz²

¹ Wroclaw University of Technology, Wyb. Wyspianskiego 27, 50-370 Wroclaw, Poland

² Opole University of Technology, ul. Sosnkowskiego 31, 45-272 Opole, Poland

Keywords:

Continuous Testing, Test-Driven Development, TDD, Continuous Test-Driven Development, CTDD, Agile.

Abstract:

Continuous testing is a technique in modern software development in which the source code is constantly unit tested in the background, so there is no need for the developer to run the tests manually. We propose an extension to this technique that combines it with a well-established software engineering practice called Test-Driven Development (TDD). In our practice, which we call Continuous Test-Driven Development (CTDD), the software developer writes the tests first and is not forced to run them manually. We hope to reduce the time wasted on manual test execution in highly test-driven development scenarios. In this article we describe the CTDD practice and the tool that we intend to use to support and evaluate the CTDD practice in a real-world software development project.

1 INTRODUCTION

In 2001 a group of forward-thinking software developers published the "Manifesto for Agile Software Development" (Beck et al., 2001). It proposes a set of 12 principles that the authors recommend following. It is not without reason that the first principle of the Agile Manifesto states that the highest priority is to satisfy the customer, with the continuous delivery of valuable software as the means to achieve it. This principle is at the center of our research. We are targeting the concept of test-first programming, rediscovered by Beck in the eXtreme Programming (XP) methodology (Beck, 1999; Beck and Andres, 2004). One of the XP concepts is that development is driven by tests, and from this concept the Test-Driven Development (TDD) practice arose. It has been proposed that using TDD one can achieve better test coverage (Astels, 2003) and greater development confidence (Beck, 2002). Empirical studies by Madeyski (Madeyski, 2010a) showed that TDD is better at producing loosely coupled software than the traditional test-last software development practice.

In this paper we propose an extension of TDD, called Continuous Test-Driven Development (CTDD), in which we combine TDD with continuous testing (Saff and Ernst, 2003) to address the time-consuming manual running of tests during development. Furthermore, we review the current state of tools for continuous testing and present the tool we use to empirically evaluate the CTDD practice: AutoTest.NET4CTDD, an open source continuous testing plug-in for the Microsoft Visual Studio Integrated Development Environment (IDE) that we modified to perform an empirical study in an industrial environment on a commercial software development project.

We have also performed a preliminary evaluation (pre-test) of the AutoTest.NET4CTDD tool using a survey inspired by the Technology Acceptance Model (TAM) (Davis, 1989; Venkatesh and Davis, 2000). The survey was conducted in a team of professional software engineers from a software development company located in Poland.

2 BACKGROUND

In the subsequent subsections we describe the two pillars of the Continuous Test-Driven Development practice proposed in this paper, namely the Test-Driven Development practice and continuous testing.

2.1 Test-Driven Development

TDD constitutes an incremental development prac-

tice which is based on selecting and understanding

a requirement, specifying a piece of functionality as

260

Madeyski L. and Kawalerowicz M..

Continuous Test-Driven Development - A Novel Agile Software Development Practice and Supporting Tool.

DOI: 10.5220/0004587202600267

In Proceedings of the 8th International Conference on Evaluation of Novel Approaches to Software Engineering (ENASE-2013), pages 260-267

ISBN: 978-989-8565-62-4

Copyright

c

2013 SCITEPRESS (Science and Technology Publications, Lda.)

a test, making sure that the test can potentially fail,

then writing the production code that will satisfy the

test condition (i.e. following one of the green bar pat-

terns), refactoring (if necessary) to improve the inter-

nal structure of the code, and ensuring that tests pass,

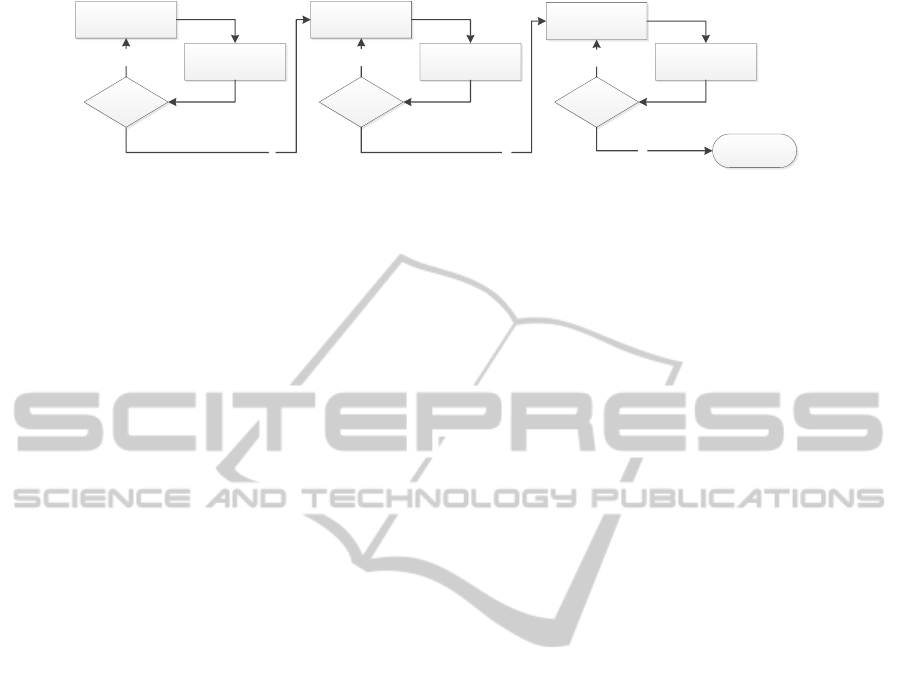

as shown in Figure 1.

TDD provides feedback through tests, and simplicity of the internal structure of the code through rigorous refactoring. The tests are supposed to be run frequently while the production code is being written, thus driving the development process. The technique is usually supported by frameworks for writing and running automated tests (e.g. JUnit (Gamma and Beck, 2013; Tahchiev et al., 2010), NUnit (Osherove, 2009), CppUnit, PyUnit and XMLUnit (Hamill, 2004)). Good practical introductions to TDD are given in (Freeman and Pryce, 2009), (Koskela, 2007) and (Newkirk and Vorontsov, 2004).
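As a minimal illustration of one TDD iteration with such a framework, the sketch below uses Python's standard `unittest` module (the standard-library successor of PyUnit). The `Stack` class and the test names are hypothetical examples chosen for this text, not taken from the paper: in TDD the test case would be written and run first, failing because `Stack` does not exist yet (red bar), and only then would `Stack` be implemented to make it pass (green bar).

```python
import unittest


# Step 1 (red): the test is written first. Running it before Stack exists
# fails, which confirms that the test can actually detect missing behaviour.
class StackTest(unittest.TestCase):
    def test_push_then_pop_returns_last_item(self):
        stack = Stack()
        stack.push(1)
        stack.push(2)
        self.assertEqual(stack.pop(), 2)

    def test_pop_on_empty_stack_raises(self):
        with self.assertRaises(IndexError):
            Stack().pop()


# Step 2 (green): the simplest production code that satisfies both tests.
# Step 3 (refactor) would restructure this class while the tests keep passing.
class Stack:
    def __init__(self):
        self._items = []

    def push(self, item):
        self._items.append(item)

    def pop(self):
        # list.pop() already raises IndexError when the list is empty
        return self._items.pop()
```

Saved as a module, the tests can be run with `python -m unittest <module_name>`; in plain TDD the developer starts this command by hand after every change.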

TDD has recently gained attention in professional settings (Beck and Andres, 2004; Koskela, 2007; Astels, 2003; Williams et al., 2003; Maximilien and Williams, 2003; Canfora et al., 2006; Bhat and Nagappan, 2006; Sanchez et al., 2007; Nagappan et al., 2008; Janzen and Saiedian, 2008) and has made its first inroads into software engineering education (Edwards, 2003a; Edwards, 2003b; Melnik and Maurer, 2005; Müller and Hagner, 2002; Pančur et al., 2003; Erdogmus et al., 2005; Flohr and Schneider, 2006; Madeyski, 2005; Madeyski, 2006; Gupta and Jalote, 2007; Huang and Holcombe, 2009). For example, Madeyski, in his monograph on the empirical evaluation and meta-analysis of the effects of TDD (Madeyski, 2010a), points out that TDD leads to loosely coupled code. As a consequence of Constantine's law (a structure is stable if cohesion is strong and coupling is low), one may claim that TDD supports software maintenance, because loosely coupled code is less error-prone and less vulnerable to problems arising from normal programming activities, e.g. modifications due to design changes or maintenance (Endres and Rombach, 2003). This important result, although not codified as a law, has serious consequences for software development and maintenance costs.

2.2 Continuous Testing

Continuous Testing (CT) was introduced by Saff and Ernst (Saff and Ernst, 2003) as a means to reduce the time wasted on running tests. The idea was also described by Gamma and Beck (Gamma and Beck, 2003): one of the features of a plug-in they described was the ability to automatically run all the tests for a project every time the project was built (they called this feature "auto-testing"). One of the goals of TDD is to run the tests often, but running the tests often forces the developer to interrupt his work frequently to start them manually. Modern IDEs like Eclipse or Visual Studio can keep the codebase in a compiled state. This practice is sometimes called continuous compilation (automatic compilation, automatic build). It eliminates the waste of compiling the code manually after writing some source code, because the IDE performs the build in the background while the developer is writing and/or saving the file. CT relies on this approach and goes one step further by running the tests while the developer works. There is no need to interrupt the work to run the tests: they are run automatically in the background and feedback is provided to the developer immediately. Saff and Ernst report that doing so eliminates between 92% and 98% of the wasted time (Saff and Ernst, 2003) and has a significant effect on success in completing programming tasks (Saff and Ernst, 2004). Practical guidance on CT is given in (Rady and Coffin, 2011) and (Duvall et al., 2007).
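The core of a continuous testing tool can be sketched as a loop that notices saved files and re-runs the tests without being asked. The sketch below is a simplified, polling-based illustration written for this text; it is not how Saff and Ernst's tool, Visual Studio 2012 or any of the plug-ins discussed here is implemented. The function names `snapshot`, `changed_files` and `watch`, and the default one-second polling interval, are assumptions.

```python
import os
import time
from typing import Callable, Dict, Iterable, Set


def snapshot(paths: Iterable[str]) -> Dict[str, float]:
    """Record the last-modification time of every file that currently exists."""
    return {p: os.stat(p).st_mtime for p in paths if os.path.exists(p)}


def changed_files(old: Dict[str, float], new: Dict[str, float]) -> Set[str]:
    """Files that were created, saved or deleted between two snapshots."""
    created_or_saved = {p for p, mtime in new.items() if old.get(p) != mtime}
    deleted = set(old) - set(new)
    return created_or_saved | deleted


def watch(paths: Iterable[str],
          run_tests: Callable[[Set[str]], None],
          interval: float = 1.0,
          iterations: int = -1) -> None:
    """Poll the files and call run_tests(changed) whenever something changes.

    iterations=-1 polls forever; a positive value stops after that many polls.
    """
    watched = list(paths)
    previous = snapshot(watched)
    while iterations != 0:
        time.sleep(interval)
        current = snapshot(watched)
        changed = changed_files(previous, current)
        if changed:
            # The developer never starts the tests: saving a file is enough.
            run_tests(changed)
        previous = current
        if iterations > 0:
            iterations -= 1
```

For example, `watch(["calc.py", "test_calc.py"], lambda changed: print("re-running tests for", changed))` would invoke the (hypothetical) callback every time one of the two files is saved. A production tool would more likely subscribe to file-system or IDE build notifications than poll.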

There are numerous tools on the market that enable CT, mostly provided as plug-ins for modern IDEs. The first tools were developed for the Eclipse IDE and Java; analogous tools were later developed for Visual Studio and .NET, and there are also tools for other languages such as Ruby. Tools supporting CT include Infinitest (an open source Eclipse and IntelliJ plug-in), JUnit Max (an Eclipse CT plug-in), Contester (an Eclipse plug-in developed by students of the Software Engineering Society at Wroclaw University of Technology), NCrunch (a feature-rich commercial continuous testing plug-in for Visual Studio), Autotest (continuous testing for Ruby), Continuous Testing for VS (a commercial Visual Studio plug-in), AutoTest.NET (see Section 4.2 for details) and Mighty Moose (a packaged version of AutoTest.NET).

CT is now being recognized by the IDE vendors themselves. The newest version of Microsoft Visual Studio, Visual Studio 2012, comes with continuous testing built in. This option is available only in the two highest and most expensive editions of Visual Studio 2012, that is, Premium and Ultimate.

Hence, the value of the CT practice has been recognized by the industry. Our aim is to go even further: to take advantage of the synergy of both ideas (TDD and CT) by combining them into an agile software development practice, and to collect empirical evidence on the usefulness of the proposed practice in industrial settings.


Figure 1: Test-Driven Development activities. (Flowchart steps: Write the test; Run the test; Test fails?; Write the functionality; Run the test; Test fails?; Refactor; Run the test; Test fails?; Done.)

3 CONTINUOUS TEST-DRIVEN DEVELOPMENT

The papers on CT cited above do not discuss the relationship between CT and TDD. The only exception we know of is an MSc thesis by Olejnik, supervised by the first author; however, the corresponding research paper has not yet been submitted.

As the software industry employs millions of people worldwide, even small increases in their productivity could be worth billions of dollars a year. Hence, we decided to investigate a possible synergy of the two ideas discussed above (TDD and CT) and to combine them into an agile software development practice that would demonstrate this synergy. Furthermore, our aim is to provide a preliminary empirical evaluation of the proposed practice, which we call Continuous Test-Driven Development (CTDD).

As in TDD, the developer writes a potentially failing test for functionality that does not yet exist. Unlike in TDD, however, there is no need to run the test: it is run under the hood by the IDE, and the test feedback is provided to the developer. The developer can start creating the functionality right away. When he is done (or even while he is typing), the tests are run in the background and feedback is provided. There is no need to start the tests manually to make sure the functionality passes them. The same applies to the refactoring activity: the tests do not need to be run manually, because they are continuously run in the background.

Figure 1 shows the common TDD strategy called Red-Green-Refactor. The test is written. The test is run, but it fails (or the code does not even compile); this is the so-called red bar. The functionality is written (possibly faked) as quickly as possible, and the tests are run until the green bar is reached. The code is then refactored, and the tests are run repeatedly until the code reaches the desired form.
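The Red-Green-Refactor strategy can be read as a small state machine. The sketch below is an illustrative model written for this text; the phase names and the `next_phase` function are hypothetical labels, not part of any tool. It returns the activity that follows, given whether the latest test run passed. What happens when a freshly written test passes immediately is not spelled out in Figure 1; the sketch assumes the developer revises the test.

```python
from enum import Enum


class Phase(Enum):
    WRITE_TEST = "write the test"
    WRITE_CODE = "write the functionality"  # entered on a red bar
    REFACTOR = "refactor"                   # entered on a green bar
    DONE = "done"


def next_phase(phase: Phase, tests_pass: bool) -> Phase:
    """Return the activity that follows, given the outcome of the latest test run."""
    if phase is Phase.WRITE_TEST:
        # A new test has to fail first (red bar). If it passes immediately it does
        # not specify anything new, so (our assumption) the developer revises it.
        return Phase.WRITE_TEST if tests_pass else Phase.WRITE_CODE
    if phase is Phase.WRITE_CODE:
        # Keep writing (possibly faked) code until the bar turns green.
        return Phase.REFACTOR if tests_pass else Phase.WRITE_CODE
    if phase is Phase.REFACTOR:
        # Refactoring must keep the bar green; a failure sends us back to fix it.
        return Phase.DONE if tests_pass else Phase.REFACTOR
    return Phase.DONE
```

In plain TDD every `tests_pass` value comes from a manual test run; in CTDD the transitions stay the same, but the value is supplied by the background test runner.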

Figure 2 shows the enhanced Continuous Test-Driven Development (CTDD) technique. All the steps involving manual test execution are removed because they are no longer necessary. All the tests are run in the background, and feedback is provided to the developer directly and without friction. All other steps are still necessary (including refactoring). It is quite important to deliver the test results to the developer without introducing additional noise into the development process. The developer should not be interrupted in his effort to produce good software, but he needs a visual indicator showing whether or not the process works as expected.
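In CTDD the test run is triggered by the environment rather than by the developer, and its result is delivered without blocking the editor. A minimal sketch of that division of labour uses a single background worker thread. The `run_suite` callable (returning the number of failing tests) and the `show_status` callback (standing in for an unobtrusive status indicator) are hypothetical and are not part of AutoTest.NET4CTDD.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable


class BackgroundTestRunner:
    """Runs the test suite off the editor thread and reports a red/green status."""

    def __init__(self, run_suite: Callable[[], int], show_status: Callable[[str], None]):
        self._run_suite = run_suite        # hypothetical: returns the number of failing tests
        self._show_status = show_status    # hypothetical: e.g. colours a status indicator
        # One worker: test runs triggered by consecutive saves execute one after another.
        self._executor = ThreadPoolExecutor(max_workers=1)

    def on_file_saved(self) -> Future:
        """Called from the IDE's save event; returns immediately without blocking."""
        future = self._executor.submit(self._run_suite)
        future.add_done_callback(self._report)
        return future

    def _report(self, future: Future) -> None:
        error = future.exception()
        if error is not None:
            self._show_status(f"error: {error}")
            return
        failures = future.result()
        self._show_status("green" if failures == 0 else f"red ({failures} failing)")

    def shutdown(self) -> None:
        self._executor.shutdown(wait=True)
```

Because `on_file_saved()` only submits work and returns, the developer keeps typing while the tests run; the status callback is the only point where the result reaches him, which matches the requirement of a visual indicator without dialog windows.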

4 USING AutoTest.NET4CTDD TO GATHER EMPIRICAL DATA IN INDUSTRIAL SETTINGS

This section introduces a measurement infrastructure we prepared in order to evaluate the CTDD practice in professional settings.

4.1 Measurement Infrastructure

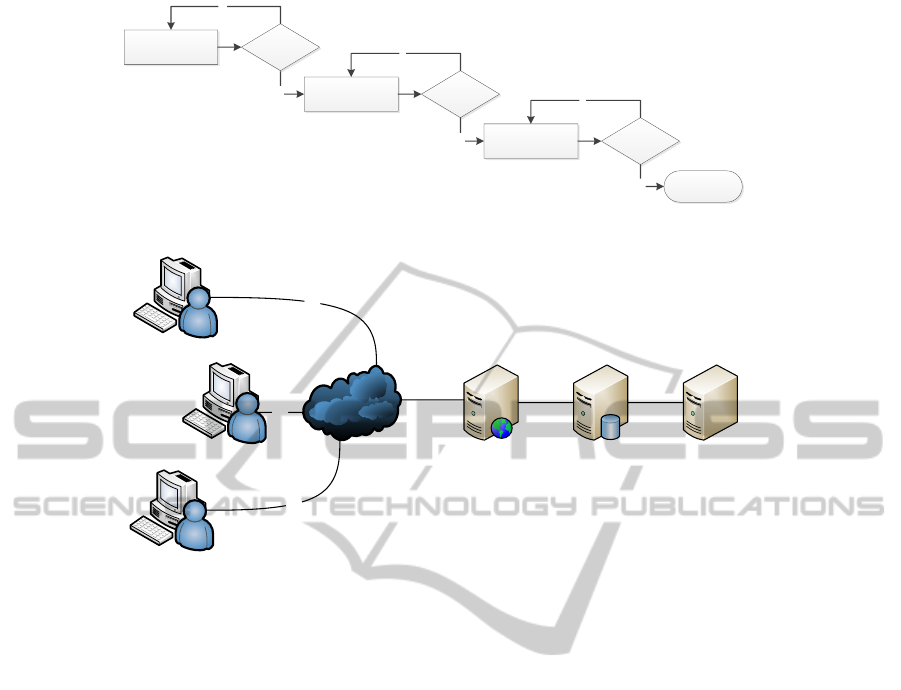

Figure 3 shows the software/hardware infrastructure assembled to gather the empirical data.

Developers use Microsoft Windows 7 computers with Visual Studio 2010 installed. Continuous testing is supported by the AutoTest.NET4CTDD tool (more information in Section 4.2). Measurement data are gathered automatically using web services and stored in a Microsoft SQL Server database. To protect our study from malfunctioning networks or databases, we added fall-back logging capabilities that use the hard drives of the local developer machines and text files. We additionally store all the data in CSV flat file(s). The gathered data will then be assessed using the "Evaluator" shown in Figure 3. We purposefully omit the description of this part of our infrastructure because it was still being developed at the time of writing this paper. Some inspiration is drawn from Zorro (Kou et al., 2010), a user action logger (Müller and Höfer, 2007) and ActivitySensor (Madeyski and Szala, 2007).
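The fall-back logging described above follows a simple pattern: try the remote service first and, if the call fails, append the measurement to a local CSV file so that no data is lost. The sketch below is hypothetical (the actual plug-in is a .NET tool that talks to WCF services); the `send_to_service` callable, the field names and the file name are assumptions made for illustration.

```python
import csv
import os
from typing import Callable, Dict

FIELDS = ["timestamp", "developer", "event", "duration_ms"]  # hypothetical schema


def record_measurement(measurement: Dict[str, object],
                       send_to_service: Callable[[Dict[str, object]], None],
                       fallback_path: str = "measurements_fallback.csv") -> bool:
    """Send one measurement; on any failure append it to a local CSV file.

    Returns True if the remote service accepted the record, False if it was
    written to the fall-back file instead.
    """
    try:
        send_to_service(measurement)
        return True
    except Exception:
        # Network or database problems must never break the developer's IDE,
        # so the error is swallowed and the record is kept locally.
        write_header = not os.path.exists(fallback_path)
        with open(fallback_path, "a", newline="", encoding="utf-8") as f:
            writer = csv.DictWriter(f, fieldnames=FIELDS)
            if write_header:
                writer.writeheader()
            writer.writerow(measurement)
        return False
```

Records collected in the fall-back file could later be re-sent or merged into the central database by the analysis side; the sketch leaves that step out.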


Figure 2: Continuous Test-Driven Development activities. (Flowchart steps: Write the test; Test fails?; Write the functionality; Test fails?; Refactor; Test fails?; Done. Unlike Figure 1, the flowchart contains no "Run the test" steps.)

Figure 3: Measurement infrastructure architecture. (Diagram components: AutoTest.NET on the machines of Developer 1, Developer 2, ..., Developer n, each connected via WCF over an intranet/internet to Web Services hosted on IIS; a SQL Server database; and the Evaluator.)

4.2 AutoTest.NET4CTDD Description

AutoTest.NET was originally a .NET implementation of the Ruby Autotest framework. It was started by James Avary and hosted on Google Code, and was later maintained by Svein Arne Ackenhausen on GitHub.

AutoTest.NET does not run all the tests all the time; doing so would have a devastating effect on performance in large systems. First, the tests are run only when something significant changes, i.e. when the developer saves a file. Second, not all the tests are run every time: AutoTest.NET is able to detect which tests are influenced by a change and runs only the tests that are relevant to it. The tests are run in the background and the report is provided in a friction-free manner, without interrupting development with any dialog windows. Nevertheless, for large systems with many tests it may require powerful hardware, and the developers' computers need to be fast. Along with the preliminary evaluation of AutoTest.NET4CTDD discussed in Section 6, we asked the developers whether their IDE performs noticeably slower with AutoTest.NET4CTDD activated. The majority of developers reported no change in performance (only 14% reported an above-average drop in performance). It is worth mentioning, however, that the evaluation was performed on small to medium-sized projects.
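The paper does not describe how AutoTest.NET decides which tests are relevant to a change; the sketch below only illustrates the general idea of running a relevant subset of tests. It assumes a hypothetical, precomputed map from each test to the source files it depends on, and selects the tests whose dependencies intersect the set of changed files. The test and file names are invented for illustration.

```python
from typing import Dict, Iterable, List, Set


def select_affected_tests(dependencies: Dict[str, Set[str]],
                          changed_files: Iterable[str]) -> List[str]:
    """Return, sorted by name, the tests that depend on at least one changed file."""
    changed = set(changed_files)
    return sorted(test for test, files in dependencies.items() if files & changed)


# Hypothetical dependency map: test name -> source files the test exercises.
DEPENDENCIES: Dict[str, Set[str]] = {
    "OrderTests.TotalIncludesTax": {"Order.cs", "Tax.cs"},
    "OrderTests.EmptyOrderIsZero": {"Order.cs"},
    "InvoiceTests.PrintsHeader": {"Invoice.cs"},
}
```

Saving `Tax.cs` would then trigger only `OrderTests.TotalIncludesTax`, while saving `Order.cs` would trigger both order tests. How such a dependency map is obtained (for instance by static analysis of the compiled code or from coverage data of earlier runs) is a separate problem that the sketch leaves open.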

To prepare for the empirical evaluation of the CTDD practice in an industrial environment where Microsoft technologies are required, we chose the open source AutoTest.NET plug-in. We were able to fork it on GitHub and add the new functionality required to gather measurement data (using the Visual Studio 2012 CT functionality would have locked us into the newest IDE version and prevented us from extending it with measurements). We called it AutoTest.NET4CTDD (an abbreviation of AutoTest.NET for CTDD). The first version of our plug-in is able to save various measurements, e.g. those related to each build or test run. In the investigated environment, with AutoTest.NET4CTDD installed, the empirical data will be stored in a dedicated database and on the developer machine. We built into our version of AutoTest.NET (i.e. AutoTest.NET4CTDD) a client for a simple web service that we installed on one of the company's servers. The web services are implemented with the Microsoft Windows Communication Foundation (WCF) framework.

AutoTest.NET4CTDD is stored in the "Impressive Code" repository as a fork of the original AutoTest.NET plug-in on GitHub. The central web page for our plug-in is hosted at http://madeyski.e-informatyka.pl/tools/autotestdotnet4ctdd/.

Our plug-in is under constant development and new functionalities are planned. In particular, we plan to enrich AutoTest.NET4CTDD with functionality derived from the ActivitySensor Eclipse plug-in (Madeyski and Szala, 2007).

5 RESEARCH GOAL, QUESTIONS AND METRICS

The overall goal of the empirical study we are preparing is a thorough evaluation of the impact of CTDD on software development efficiency. The intermediate goal addressed in this paper, the set of questions that define this goal, and the measurements needed to answer these questions (as required by the GQM method (Basili et al., 1994)) are as follows:

Goal: Evaluation of the acceptance of the new CTDD tool by professional software developers.

Question 1: What is the Perceived Usefulness of the AutoTest.NET4CTDD Continuous Testing Tool?

Metrics:

M1.1 (better code actuator): AutoTest.NET4CTDD will help me produce better code;

M1.2 (efficient work actuator): AutoTest.NET4CTDD will help me work faster and more efficiently;

M1.3 (test coverage actuator): AutoTest.NET4CTDD will help me maintain better test coverage;

M1.4 (subjective perceived usefulness): Do I want to use AutoTest.NET4CTDD in my projects?

Question 2: What is the Perceived Ease of Use of the AutoTest.NET4CTDD Continuous Testing Tool?

Metrics:

M2.1 (ease of install): I find the installation process easy and straightforward;

M2.2 (ease of configuration and use): AutoTest.NET4CTDD is easy to configure and use;

M2.3 (tool discoverability): Use of AutoTest.NET4CTDD is self-explanatory;

M2.4 (tool performance): Visual Studio performs noticeably slower with AutoTest.NET4CTDD plug-in activated;

M2.5 (quality of feedback): AutoTest.NET4CTDD feedback window is useful.

Question 3: Are the developers intending to use the AutoTest.NET4CTDD Continuous Testing Tool?

Metrics:

M3.1 (use intention): Assuming I had access to it, I intend to use it;

M3.2 (use prediction): Given that I had access to it, I predict that I would use it;

M3.3 (commitment level): My personal level of commitment to using AutoTest.NET4CTDD is low;

M3.4 (intention level): My personal intention to use AutoTest.NET4CTDD is high.

Question 4: How is the tool perceived in terms of subjective norm?

Metrics:

M4.1 (approval level): Most people that are important in my professional career would approve of my use of AutoTest.NET4CTDD;

M4.2 (recommendation level): Most people that are important in my professional career would tend to encourage my use of AutoTest.NET4CTDD.

Question 5: How is the tool perceived in terms of organizational usefulness?

Metrics:

M5.1 (success ratio): My use of AutoTest.NET4CTDD would make my organization more successful;

M5.2 (benefit ratio): My use of AutoTest.NET4CTDD would be beneficial for my organization.
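The GQM hierarchy above (one goal refined into questions, each answered by metrics) can be captured in a small data structure, for example to generate a survey form or to group answers during analysis. The sketch below is our own illustration, not part of the study's tooling; the class names, the `question` helper and the abbreviated goal statement are hypothetical, while the question topics and metric names are those used in this section.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Metric:
    code: str      # e.g. "M1.1"
    name: str      # short name, e.g. "better code actuator"


@dataclass
class Question:
    code: str      # e.g. "Q1"
    topic: str
    metrics: List[Metric] = field(default_factory=list)


@dataclass
class Goal:
    statement: str
    questions: List[Question] = field(default_factory=list)

    def all_metrics(self) -> List[Metric]:
        """Flatten the hierarchy: every metric of every question."""
        return [m for q in self.questions for m in q.metrics]


def question(number: int, topic: str, metric_names: List[str]) -> Question:
    """Build Q<number> with metrics numbered M<number>.1, M<number>.2, ..."""
    metrics = [Metric(f"M{number}.{i}", name) for i, name in enumerate(metric_names, start=1)]
    return Question(f"Q{number}", topic, metrics)


GOAL = Goal(
    "Acceptance of the new CTDD tool by professional software developers",
    [
        question(1, "Perceived Usefulness",
                 ["better code actuator", "efficient work actuator",
                  "test coverage actuator", "subjective perceived usefulness"]),
        question(2, "Perceived Ease of Use",
                 ["ease of install", "ease of configuration and use",
                  "tool discoverability", "tool performance", "quality of feedback"]),
        question(3, "Intention to Use",
                 ["use intention", "use prediction", "commitment level", "intention level"]),
        question(4, "Subjective Norm", ["approval level", "recommendation level"]),
        question(5, "Organizational Usefulness", ["success ratio", "benefit ratio"]),
    ],
)
```

Keeping the metric codes generated from the question number guarantees that identifiers such as "M2.4" stay consistent with the numbering used in the text.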

Table 1 shows the 7-point Likert scale used to measure the metrics.

Table 1: Survey scale.

| Answer | Level |
|---|---|
| Strongly disagree | 1 |
| Disagree | 2 |
| Disagree somewhat | 3 |
| Undecided | 4 |
| Agree somewhat | 5 |
| Agree | 6 |
| Strongly agree | 7 |

We used the tool described above and performed an empirical acceptance study of it.

6 A PRELIMINARY EVALUATION OF AutoTest.NET4CTDD BY DEVELOPERS

User acceptance of novel technologies and tools is an important field of research. Although many models have been proposed to explain and predict the use of a tool, the Technology Acceptance Model (Davis, 1989; Venkatesh and Davis, 2000) has been the one which has captured the most attention of the Information Systems community.

A survey based on the Technology Acceptance Model was carried out among a small group of six professional developers at one of the software development companies in Opole, Poland. The developers were asked to install the AutoTest.NET4CTDD tool, to use it on a real project and to provide a preliminary evaluation of the tool using our web survey. We asked the developers, for example, whether they think they will be more productive and efficient using the tool, whether in their opinion the tool will help them maintain better test coverage, whether the tool is easy to use and useful, whether they want to use it at all, and how committed to using it they are. Table 2 shows the results of the survey.

Table 2: TAM Survey results of the AutoTest.NET4CTDD Continuous Testing Tool.

| Question | Mode | Median | Q1 (25th percentile) | Q3 (75th percentile) |
|---|---|---|---|---|
| **Perceived Usefulness** | | | | |
| better code actuator | 5 | 5 | 5 | 5.75 |
| efficient work actuator | 3 | 4.5 | 3.25 | 5 |
| test coverage actuator | 6 | 6 | 5.25 | 6.75 |
| subjective perceived usefulness | 5 | 5 | 4.25 | 5.75 |
| **Perceived Ease of Use** | | | | |
| ease of install | 7 | 7 | 7 | 7 |
| ease of configuration and use | 4 | 4.5 | 4 | 5.75 |
| discoverability | 7 | 6.5 | 6 | 7 |
| tool performance | 4 | 4 | 2.5 | 4 |
| quality of feedback | 7 | 7 | 6.25 | 7 |
| **Intention to Use** | | | | |
| use intention | 5 | 5 | 5 | 5 |
| use prediction | 5 | 5 | 5 | 5.75 |
| commitment level | 4 | 4 | 4 | 4.75 |
| intention level | 4 | 4.5 | 4 | 6.5 |
| **Subjective Norm** | | | | |
| approval level | 4 | 5 | 4 | 6.75 |
| recommendation level | 4 | 5.5 | 4.25 | 6 |
| **Organizational Usefulness** | | | | |
| success ratio | 5 | 5 | 5 | 5.75 |
| benefit ratio | 5 | 5 | 5 | 5 |

The questions were divided into five groups: Perceived Usefulness (PU), Perceived Ease of Use (EOU), Intention to Use (ITU), Subjective Norm (SN) and Organizational Usefulness (OU) of the AutoTest.NET4CTDD Continuous Testing Tool. PU and EOU are drawn from the first version of the TAM model (Davis, 1989), and SN from the second version (Venkatesh and Davis, 2000). PU and EOU are determinants of developers' intention to use the AutoTest.NET4CTDD Continuous Testing Tool, and SN is also a determinant of intention. ITU and OU are variations of these determinants, describing the direct intention to use (ITU) and the organizational impact (OU) of the AutoTest.NET4CTDD Continuous Testing Tool.

After the questionnaire is completed, each item may be analysed separately. Given the ordinal nature of the Likert scale, it makes sense to summarize the central tendency of the responses using the mode and the median, with variability measured by quartiles or percentiles, i.e. the first quartile Q1 (the 25th percentile, which splits off the lowest 25% of the data), the second quartile (the median) and the third quartile Q3 (the 75th percentile, which splits off the lowest 75% of the data).
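The per-item summary reported in Table 2 can be computed with Python's standard `statistics` module, as sketched below. The paper does not state which quartile definition was used; the sketch assumes the "inclusive" method of `statistics.quantiles` (linear interpolation between order statistics). The function name `summarize_likert` and the responses in the usage note are hypothetical, not data from the study.

```python
import statistics
from typing import Dict, List


def summarize_likert(responses: List[int]) -> Dict[str, float]:
    """Mode, median and quartiles for one Likert item (ordinal data, so no mean)."""
    # quantiles(..., n=4) returns the three cut points Q1, Q2 (median), Q3;
    # the data does not need to be sorted beforehand.
    q1, _, q3 = statistics.quantiles(responses, n=4, method="inclusive")
    return {
        "mode": statistics.mode(responses),
        "median": statistics.median(responses),
        "Q1": q1,
        "Q3": q3,
    }
```

For the hypothetical answers `[3, 4, 5, 5, 5, 6]` from six respondents, `summarize_likert` returns a mode of 5, a median of 5.0, Q1 = 4.25 and Q3 = 5.0. When several values are equally frequent, `statistics.mode` returns the first one encountered (Python 3.8 and later), so ties should be reported explicitly if they matter.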

Both the mode and the median (after transforming the answers to negatively worded questions) show that the tool is perceived as rather useful (although it will not necessarily help developers work faster) and easy to use (although not necessarily easy to configure). The preliminary intention of developers to use our tool is somewhere between positive and sitting on the fence. The subjective norm and organizational usefulness seem to be discernible, although only slightly. The most positive results concern the usefulness of the AutoTest.NET4CTDD feedback window and the ease of the installation process. In both cases the mode and the median are equal to 7 (strongly agree). The former result is promising, as the usefulness of fast feedback from the tool is the key concept that could determine the usefulness of the CTDD development practice.
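Two items are worded negatively: M2.4 (Visual Studio performs noticeably slower with the plug-in activated) and M3.3 (my personal level of commitment is low). On a 1 to 7 scale such answers are commonly reversed as 8 - x before being compared with positively worded items, so that a higher value always means a more favourable opinion. The paper does not spell out its exact transformation; the sketch below assumes this standard reversal, and the names `reverse_code` and `normalized` are hypothetical.

```python
from typing import Iterable, List

SCALE_MIN, SCALE_MAX = 1, 7            # 7-point Likert scale from Table 1
NEGATIVELY_WORDED = {"M2.4", "M3.3"}   # items where agreement is unfavourable


def reverse_code(x: int) -> int:
    """Map 1<->7, 2<->6, 3<->5; 4 (undecided) stays 4."""
    if not SCALE_MIN <= x <= SCALE_MAX:
        raise ValueError(f"answer {x} outside {SCALE_MIN}..{SCALE_MAX}")
    return SCALE_MIN + SCALE_MAX - x


def normalized(metric_code: str, answers: Iterable[int]) -> List[int]:
    """Reverse negatively worded items so that higher always means more favourable."""
    values = list(answers)
    if metric_code in NEGATIVELY_WORDED:
        return [reverse_code(a) for a in values]
    return values
```

Because the reversal maps the scale onto itself symmetrically around 4, a median of 4 stays 4 after the transformation, while the quartiles of a reversed item swap places (the old Q3 becomes the new Q1 and vice versa).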

7 DISCUSSION, CONCLUSIONS AND FUTURE WORK

In this paper we have described a novel combination of TDD and CT that we call CTDD. It seems plausible that the CTDD practice, as an enhancement of the TDD practice supported by a proper tool (we have proposed and made available such a tool), has a chance not only to gain attention in professional settings, but also to impact development speed and software quality. These kinds of effects have been investigated with regard to the TDD practice, e.g. (Madeyski, 2010a; Madeyski, 2010b; Madeyski and Szala, 2007; Madeyski, 2006; Madeyski, 2005). We have argued that the possible synergy between TDD and CT can be useful and is worth empirical investigation.

In the empirical study that will follow this paper, we will measure the benefits that the CTDD practice delivers in a real-world software project. We will use our AutoTest.NET4CTDD tool (an open source tool, available for download) to provide the "C" that we added to "TDD" to form CTDD. The tool acceptance study we performed showed promising results for further research: the developers found the tool rather useful and easy to use. Extending AutoTest.NET proved to be a relatively easy task, and we were able to add the functionality for gathering measurements quite quickly. We have developed an infrastructure to reliably collect the measurement data. We are confident that the tool gives us enough flexibility to add new functionality in the future during the course of our studies of CTDD.

ACKNOWLEDGEMENTS

Marcin Kawalerowicz is a fellow of the "PhD Scholarships - an investment in faculty of Opole province" project. The scholarship is co-financed by the European Union under the European Social Fund.

REFERENCES

Astels, D. (2003). Test Driven Development: A Practical Guide. Prentice Hall Professional Technical Reference.

Basili, V. R., Caldiera, G., and Rombach, H. D. (1994). The goal question metric approach. In Encyclopedia of Software Engineering. Wiley.

Beck, K. (1999). Extreme Programming Explained: Embrace Change. Addison-Wesley, Boston, MA, USA.

Beck, K. (2002). Test Driven Development: By Example. Addison-Wesley, Boston, MA, USA.

Beck, K. and Andres, C. (2004). Extreme Programming Explained: Embrace Change. Addison-Wesley, Boston, MA, USA, 2nd edition.

Beck, K., Beedle, M., van Bennekum, A., Cockburn, A., Cunningham, W., Fowler, M., Grenning, J., Highsmith, J., Hunt, A., Jeffries, R., Kern, J., Marick, B., Martin, R. C., Mellor, S., Schwaber, K., Sutherland, J., and Thomas, D. (2001). Manifesto for agile software development. http://agilemanifesto.org/.

Bhat, T. and Nagappan, N. (2006). Evaluating the efficacy of test-driven development: industrial case studies. In ISESE’06: ACM/IEEE International Symposium on Empirical Software Engineering, pages 356–363, New York, NY, USA. ACM Press.

Canfora, G., Cimitile, A., Garcia, F., Piattini, M., and Visaggio, C. A. (2006). Evaluating advantages of test driven development: a controlled experiment with professionals. In ISESE’06: ACM/IEEE International Symposium on Empirical Software Engineering, pages 364–371, New York, NY, USA. ACM Press.

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3):319–340.

Duvall, P., Matyas, S. M., and Glover, A. (2007). Continuous Integration: Improving Software Quality and Reducing Risk (The Addison-Wesley Signature Series). Addison-Wesley Professional.

Edwards, S. H. (2003a). Rethinking computer science education from a test-first perspective. In OOPSLA’03: Companion of the 18th Annual ACM SIGPLAN Conference on Object-Oriented Programming, Systems, Languages, and Applications, pages 148–155, New York, NY, USA. ACM.

Edwards, S. H. (2003b). Teaching software testing: automatic grading meets test-first coding. In OOPSLA’03: Companion of the 18th Annual ACM SIGPLAN Conference on Object-Oriented Programming, Systems, Languages, and Applications, pages 318–319, New York, NY, USA. ACM.

Endres, A. and Rombach, D. (2003). A Handbook of Software and Systems Engineering. Addison-Wesley.

Erdogmus, H., Morisio, M., and Torchiano, M. (2005). On the Effectiveness of the Test-First Approach to Programming. IEEE Transactions on Software Engineering, 31(3):226–237.

Flohr, T. and Schneider, T. (2006). Lessons Learned from an XP Experiment with Students: Test-First Need More Teachings. In Münch, J. and Vierimaa, M., editors, PROFES’06: Product Focused Software Process Improvement, volume 4034 of Lecture Notes in Computer Science, pages 305–318, Berlin, Heidelberg. Springer.

Freeman, S. and Pryce, N. (2009). Growing Object-Oriented Software, Guided by Tests. Addison-Wesley Professional, 1st edition.

Gamma, E. and Beck, K. (2003). Contributing to Eclipse: Principles, Patterns, and Plugins. Addison Wesley Longman Publishing Co., Inc., Redwood City, CA, USA.

Gamma, E. and Beck, K. (2013). JUnit. http://www.junit.org/ Accessed Jan 2013.

Gupta, A. and Jalote, P. (2007). An experimental evaluation of the effectiveness and efficiency of the test driven development. In ESEM’07: International Symposium on Empirical Software Engineering and Measurement, pages 285–294, Washington, DC, USA. IEEE Computer Society.

Hamill, P. (2004). Unit test frameworks. O’Reilly.


Huang, L. and Holcombe, M. (2009). Empirical investigation towards the effectiveness of Test First programming. Information and Software Technology, 51(1):182–194.

Janzen, D. and Saiedian, H. (2008). Does Test-Driven Development Really Improve Software Design Quality? IEEE Software, 25(2):77–84.

Koskela, L. (2007). Test Driven: Practical TDD and Acceptance TDD for Java Developers. Manning Publications Co., Greenwich, CT, USA.

Kou, H., Johnson, P. M., and Erdogmus, H. (2010). Operational definition and automated inference of test-driven development with Zorro. Automated Software Engineering, 17(1):57–85.

Madeyski, L. (2005). Preliminary Analysis of the Effects of Pair Programming and Test-Driven Development on the External Code Quality. In Zieliński, K. and Szmuc, T., editors, Software Engineering: Evolution and Emerging Technologies, volume 130 of Frontiers in Artificial Intelligence and Applications, pages 113–123. IOS Press.

Madeyski, L. (2006). The Impact of Pair Programming and Test-Driven Development on Package Dependencies in Object-Oriented Design – An Experiment. Lecture Notes in Computer Science, 4034:278–289.

Madeyski, L. (2010a). Test-Driven Development - An Empirical Evaluation of Agile Practice. Springer.

Madeyski, L. (2010b). The impact of test-first programming on branch coverage and mutation score indicator of unit tests: An experiment. Information and Software Technology, 52(2):169–184.

Madeyski, L. and Szala, L. (2007). The impact of test-driven development on software development productivity - an empirical study. In Abrahamsson, P., Baddoo, N., Margaria, T., and Messnarz, R., editors, Software Process Improvement, volume 4764 of Lecture Notes in Computer Science, pages 200–211. Springer Berlin Heidelberg.

Maximilien, E. M. and Williams, L. (2003). Assessing test-driven development at IBM. In Proceedings of the 25th International Conference on Software Engineering, ICSE ’03, pages 564–569, Washington, DC, USA. IEEE Computer Society.

Melnik, G. and Maurer, F. (2005). A cross-program investigation of students’ perceptions of agile methods. In ICSE’05: International Conference on Software Engineering, pages 481–488.

Müller, M. M. and Hagner, O. (2002). Experiment about test-first programming. IEE Proceedings - Software, 149(5):131–136.

Müller, M. M. and Höfer, A. (2007). The effect of experience on the test-driven development process. Empirical Software Engineering, 12(6):593–615.

Nagappan, N., Maximilien, E. M., Bhat, T., and Williams, L. (2008). Realizing quality improvement through test driven development: results and experiences of four industrial teams. Empirical Software Engineering, 13(3).

Newkirk, J. W. and Vorontsov, A. A. (2004). Test-Driven Development in Microsoft .Net. Microsoft Press, Redmond, WA, USA.

Osherove, R. (2009). The Art of Unit Testing: With Examples in .Net. Manning Publications Co., Greenwich, CT, USA, 1st edition.

Pančur, M., Ciglarič, M., Trampuš, M., and Vidmar, T. (2003). Towards empirical evaluation of test-driven development in a university environment. In EUROCON’03: International Conference on Computer as a Tool, pages 83–86.

Rady, B. and Coffin, R. (2011). Continuous Testing: with Ruby, Rails, and JavaScript. Pragmatic Bookshelf, 1st edition.

Saff, D. and Ernst, M. D. (2003). Reducing wasted development time via continuous testing. In Fourteenth International Symposium on Software Reliability Engineering, pages 281–292, Denver, CO.

Saff, D. and Ernst, M. D. (2004). An experimental evaluation of continuous testing during development. In ISSTA 2004, Proceedings of the 2004 International Symposium on Software Testing and Analysis, pages 76–85, Boston, MA, USA.

Sanchez, J. C., Williams, L., and Maximilien, E. M. (2007). On the Sustained Use of a Test-Driven Development Practice at IBM. In AGILE’07: Conference on Agile Software Development, pages 5–14, Washington, DC, USA. IEEE Computer Society.

Tahchiev, P., Leme, F., Massol, V., and Gregory, G. (2010). JUnit in Action. Manning Publications, Greenwich, CT, USA, 2nd edition.

Venkatesh, V. and Davis, F. D. (2000). A theoretical extension of the technology acceptance model: Four longitudinal field studies. Management Science, 46(2):186–204.

Williams, L., Maximilien, E. M., and Vouk, M. (2003). Test-Driven Development as a Defect-Reduction Practice. In ISSRE’03: International Symposium on Software Reliability Engineering, pages 34–48, Washington, DC, USA. IEEE Computer Society.
