Robotic Grasp Initiation by Gaze Independent Brain-controlled Selection of Virtual Reality Objects

Christoph Reichert¹,², Matthias Kennel³, Rudolf Kruse², Hans-Jochen Heinze¹,⁴,⁵, Ulrich Schmucker³, Hermann Hinrichs¹,⁴,⁵ and Jochem W. Rieger⁶

¹ Department of Neurology, University Medical Center A.ö.R., D-39120, Magdeburg, Germany

² Department of Knowledge and Language Processing, Otto-von-Guericke University, D-39106, Magdeburg, Germany

³ Fraunhofer Institute for Factory Operation and Automation IFF, D-39106, Magdeburg, Germany

⁴ Leibniz Institute for Neurobiology, D-39118, Magdeburg, Germany

⁵ German Center for Neurodegenerative Diseases (DZNE), D-39120, Magdeburg, Germany

⁶ Department of Applied Neurocognitive Psychology, Carl-von-Ossietzky University, D-26111, Oldenburg, Germany

Keywords: BCI, P300, Oddball Paradigm, Grasping, MEG.

Abstract: Assistive devices controlled by human brain activity could help severely paralyzed patients to perform

everyday tasks such as reaching and grasping objects. However, the continuous control of anthropomorphic

prostheses requires control of a large number of degrees of freedom which is challenging with the currently

achievable information transfer rate of noninvasive Brain-Computer Interfaces (BCI). In this work we

present an autonomous grasping system that allows grasping of natural objects even with the very low

information transfer rates obtained in noninvasive BCIs. The grasp of one out of several objects is initiated

by decoded voluntary brain wave modulations. A universal online grasp planning algorithm was developed

that grasps the object selected by the user in a virtual reality environment. Our results with subjects

demonstrate that training effort required to control the system is very low (<10 min) and that the decoding

accuracy increases over time. We also found that the system works most reliably when subjects freely select

objects and receive virtual grasp feedback.

1 INTRODUCTION

Brain-Computer Interfaces (BCI) translate human

brain activity to machine commands (Wolpaw,

2013) and are in the focus of research to replace

motor functions of severely paralyzed patients. In

these patients, peripheral nerves do not provide any

signal, like the electromyogram (EMG), to control

prostheses (Kuzborskij et al., 2012). In recent

years, highly invasive techniques were tested to

control prosthetic devices by voluntary modulation

of brain activity (Hochberg et al., 2012; Velliste et

al., 2008). In humans, the use of noninvasive

techniques, like the electroencephalogram (EEG), is

preferable over invasive recordings. Recently, it has

been shown that a large number of hand movements

can be discriminated with noninvasive EMG

(Kuzborskij et al., 2012). However, only a small

number of commands can be discriminated with

noninvasively assessed motor imagery and, as a

consequence, these systems do not allow for full

control of complex manipulators with many degrees

of freedom. Here we report progress in our

development of a noninvasive BCI that enables users

to grasp natural objects. Our approach combines the

development of both efficient brain decoding

techniques and autonomous actuator control to

overcome the limited information transfer from

noninvasive BCIs.

Commonly, movement commands are generated

by motor imagery tasks aiming to decode the

µ-rhythm (Pfurtscheller et al., 2000). However, a

considerable percentage of people are unable to

control motor imagery BCIs (Guger et al., 2003;

Vidaurre and Blankertz, 2010). In contrast, it was

shown that a larger fraction of people is able to

select items in speller paradigms using an oddball

task (Guger et al., 2009). The matrix speller was first

Reichert C., Kennel M., Kruse R., Heinze H., Schmucker U., Hinrichs H. and Rieger J. W.

Robotic Grasp Initiation by Gaze Independent Brain-controlled Selection of Virtual Reality Objects.

DOI: 10.5220/0004608800050012

In Proceedings of the International Congress on Neurotechnology, Electronics and Informatics (NEUROTECHNIX-2013), pages 5-12

ISBN: 978-989-8565-80-8

Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

introduced by Farwell and Donchin (1988). In the

oddball task a P300, a positive EEG deflection, is

evoked when a rare target stimulus appears in a

series of irrelevant stimuli. While it is often assumed

that the accuracy of the visually stimulated P300 speller

is independent of gaze direction, it has recently been

shown that the performance of the matrix speller

drops significantly if the eyes are not moved toward

the target (Brunner et al., 2010). The reason is that

two EEG components, the P300 and the N200,

contribute information when the centre of regard is

moved to the target (Frenzel et al., 2011), whereas

only the P300 is present if the eyes do not move. This

could render the P300 paradigm less useful for

patients who cannot move their eyes.

In this work we demonstrate that the visual

oddball paradigm can be successfully applied to

initiate targeted grasps in a visually complex virtual

environment with multiple realistic objects.

Importantly, we show that the paradigm developed

here works independently of the user’s ability to direct

gaze towards the target object. This is of high

relevance for the targeted user group.

The other approach in our strategy for the

development of a brain controlled robotic

manipulator is to implement an algorithm to provide

intelligent autonomous manipulation of predefined

objects. Here we propose a new analytical grasp

planning algorithm to achieve autonomous grasping

of arbitrary objects. In contrast to other motion

planning algorithms, our algorithm is not based on

Learning by Demonstration (for a review see

Sahbani, El-Khoury and Bidaud (2012)) and

involves, but is not limited to, the robot’s

kinematics.

2 MATERIALS AND METHODS

In this study we decoded in real-time the

magnetoencephalogram (MEG) of 17 subjects

(9 male, 8 female, mean age 26.6) to determine their

intention to select one of six selectable realistic

objects for grasping. We used the decoding results to

initiate a grasp of a robotic gripper. All subjects

gave written informed consent. The study was

approved by the ethics committee of the Medical

Faculty of the Otto-von-Guericke University of

Magdeburg.

2.1 Virtual Environment

We presented six objects (see Figure 1) placed at

fixed positions in a virtual reality environment. The

visual angle between the outermost left and right objects

was 8.5°. We defined circular regions on the table

which were used i) to stimulate the subjects with

visual events by lighting up the object background

and ii) to provide cues and feedback by colouring

the region’s shape. A photo transistor placed on the

screen was used to synchronize the ongoing MEG

with the events displayed on the screen. To provide

realistic feedback, the model of a robot (Mitsubishi

RV E2) equipped with a three finger gripper

(Schunk SDH) was part of the scene. The virtual

robot is designed to mimic actual movements of the

real robot. Specifically, an autonomously calculated

grasp to the selected object is visualized.

Figure 1: VR scenario used for visual stimulation. This

snapshot shows one flash event of an object.

2.2 Paradigm

The paradigm we employed is based on the P300

potential which is evoked approximately 300 ms

after a rare target stimulus occurs in a series of

irrelevant stimuli (oddball paradigm). In our variant

of the paradigm, we marked objects by flashing their

background for 100 ms. Objects were marked in

random order with an interstimulus interval of

300 ms. Each object was marked five times per

selection trial resulting in a stimulation interval

length of 10 seconds.
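The stimulation sequence described above can be sketched as follows. This is a minimal illustration, not the presentation code used in the study: it assumes that the 300 ms interval is measured from flash onset to flash onset and that the random order is produced by shuffling blocks of six flashes. Both are assumptions, and the names `make_flash_schedule` and `ONSET_STEP_MS` are hypothetical.

```python
import random

N_OBJECTS = 6            # selectable objects in the scene
FLASHES_PER_OBJECT = 5   # each object is marked five times per trial
FLASH_MS = 100           # duration of one background flash
ONSET_STEP_MS = 300      # assumption: the 300 ms interval runs onset to onset


def make_flash_schedule(seed=None):
    """Return (onset_ms, offset_ms, object_index) tuples for one trial."""
    rng = random.Random(seed)
    order = []
    for _ in range(FLASHES_PER_OBJECT):
        block = list(range(N_OBJECTS))
        rng.shuffle(block)   # assumption: shuffle within blocks of six flashes
        order.extend(block)
    return [
        (i * ONSET_STEP_MS, i * ONSET_STEP_MS + FLASH_MS, obj)
        for i, obj in enumerate(order)
    ]


schedule = make_flash_schedule(seed=1)
```

The schedule contains 30 flash events, five per object, which is the number of segments later passed to the classifier for one selection.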

Subjects were instructed to fixate the black cross

centred to the objects and to count how often the

target object was marked. The counting ensured that

attention was maintained on the stimulus stream. In

addition, subjects were instructed to avoid eye

movements and blinking during the stimulation

interval.

Each subject performed a minimum of seven

runs with 18 selection trials per run. The runs were

performed in three different modes that served

different purposes. The number of runs each subject

performed in each mode is listed in Table 1. We

started with the instructed selection mode. In this

mode, the target object was cued by a light grey

circle at the beginning of a trial and subjects were

instructed to attend to the cued object. Instructed

selection was used in the initial training runs in

which we collected data to train the classifier. In this

mode true classifier labels are available which are

required to train the classifier. We provided random

feedback during training runs because no classifier

was available in these initial runs. After the training

runs, each subject performed several instructed runs

with feedback. We denoted the second selection

mode free selection. In this mode, subjects were free

to choose the target object. In instructed selection

mode and in free selection mode, a green circle was

presented at the end of the trial on the decoded

object as feedback. All other objects were marked by

red circles. Free selection runs were performed after

the instructed selection runs. In the third mode, the

grasp selection mode, the virtual robot grasped and

lifted the decoded target for feedback. Grasp

selection runs were performed after free selection

runs. In both modes, the free selection and grasp

selection mode, the subject said “no” to signal that

the classifier decoded the wrong object and

remained silent otherwise.

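Table 1: Number of runs the subjects performed in different selection modes.

| Subject # | Instructed (training) | Instructed (decoder) | Free | Grasp |
|---|---|---|---|---|
| 1 | 2 | 5 | - | - |
| 2 | 4 | 4 | 1 | - |
| 3 | 3 | 4 | - | - |
| 4-6 | 3 | 4 | 1 | 1 |
| 7 | 2 | 4 | 2 | 1 |
| 8 | 3 | 3 | 2 | 1 |
| 9 | 2 | 4 | 2 | 1 |
| 10 | 2 | 4 | 2 | - |
| 11 | 2 | 4 | 2 | 1 |
| 12 | 2 | 5 | 2 | - |
| 13-17 | 2 | 4 | 2 | 1 |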

The results reported in this paper arise from online

experiments. We did not exclude early sessions, so the

data include the slight changes made to the experimental

protocol during the study (Table 1). The number of runs

performed in the different modes depended on the

cross-validated estimate of classifier performance and on the

development of detection accuracy. In total, five

subjects performed three, one subject four and the

remaining 11 subjects two initial training runs. Two

subjects performed only instructed selections.

Twelve of the subjects performed one run in the

grasp selection mode. Here, only six instead of 18

trials were performed, due to the longer feedback

duration.

2.3 Data Acquisition and Processing

The MEG was recorded with a whole-head BTi

Magnes 248-sensor system (4D-Neuroimaging, San

Diego, CA, USA) at a sampling rate of 678.17 Hz.

Simultaneously, the electrooculogram (EOG) was

recorded for subsequent inspection of eye

movements. MEG data and event channels were

instantaneously forwarded to a second workstation

capable of processing the data in real-time. The data

stream was cut into intervals including only the

stimulation sequence. The MEG data were then

band-pass filtered between 1 Hz and 12 Hz and

down-sampled to a sampling rate of 32 Hz. Then, the

stimulation interval was cut into overlapping 1000 ms

segments starting at each flash event. In instructed

selection mode, the segments were labelled as target

or nontarget segments depending on whether the

target or a nontarget object was marked.
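The segmentation and labelling step can be illustrated with a short sketch. It assumes that the filtered, down-sampled data are held as one list of samples per channel and that flash onsets are given as sample indices; the function name `cut_epochs` and the toy data are hypothetical and only serve the illustration.

```python
EPOCH_SAMPLES = 32   # 1000 ms at the 32 Hz rate used after down-sampling


def cut_epochs(data, flash_onsets, flashed_objects, target=None):
    """Cut one 1000 ms segment per flash and label it if a target is known.

    data            -- list of channels, each a list of samples
    flash_onsets    -- sample index of every flash onset
    flashed_objects -- index of the object marked at each flash
    target          -- cued object (instructed mode) or None (no labels)
    """
    epochs, labels = [], []
    for onset, obj in zip(flash_onsets, flashed_objects):
        segment = [channel[onset:onset + EPOCH_SAMPLES] for channel in data]
        if len(segment[0]) < EPOCH_SAMPLES:
            continue  # skip segments that run past the end of the recording
        epochs.append(segment)
        if target is not None:
            labels.append(1 if obj == target else 0)
    return epochs, labels


# Toy example: 2 channels, 30 flashes, object 3 is the cued target.
data = [[float(t) for t in range(400)], [0.0] * 400]
onsets = [round(i * 0.3 * 32) for i in range(30)]   # 300 ms steps in samples
objects = [i % 6 for i in range(30)]
epochs, labels = cut_epochs(data, onsets, objects, target=3)
```

Because consecutive onsets are about ten samples apart while each segment spans 32 samples, neighbouring segments overlap, as stated in the text.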

We used a linear support vector machine (SVM)

as classifier because it provided reliably high

performance in single trial MEG discrimination

(Quandt et al., 2012; Rieger et al., 2008). These

previous studies showed that linear SVM is capable

of selecting appropriate features in high dimensional

MEG feature spaces. We performed classification in

the time domain, meaning that we used the magnetic

flux measured in 32 time steps as classifier input. To

reduce the dimensionality of the feature space, we

empirically excluded 96 sensors located farthest

from the vertex (the midline sensor at the position

halfway between inion and nasion) which is the

expected site of the P300 response. We further

reduced the number of sensors by selecting the 64

sensors providing the highest sum of weights per

channel in an initial SVM training on all preselected

152 sensors of the training run data. The selected

feature set (64 sensors × 32 samples = 2048

features) was then used to train the classifier again

and to retrain it after each run conducted in

instructed selection mode.
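The weight-based sensor selection can be sketched as follows. The sketch assumes that the weights of the initial linear SVM are available as a flat list ordered sensor by sensor and that absolute weights are summed per channel; the text only states that the sum of weights per channel was used, so taking absolute values is an assumption. The function name `select_sensors` is hypothetical.

```python
N_SAMPLES = 32  # time steps per segment


def select_sensors(weights, n_sensors, n_keep):
    """Rank sensors by summed |weight| and return the indices of the best ones.

    weights -- flat list of linear-SVM weights, ordered sensor by sensor
               (n_sensors * N_SAMPLES entries)
    """
    if len(weights) != n_sensors * N_SAMPLES:
        raise ValueError("unexpected weight vector length")
    score = [
        sum(abs(w) for w in weights[s * N_SAMPLES:(s + 1) * N_SAMPLES])
        for s in range(n_sensors)
    ]
    ranked = sorted(range(n_sensors), key=lambda s: score[s], reverse=True)
    return sorted(ranked[:n_keep])


# Toy weights: sensor s carries weight s at every time step.
toy_weights = [float(s) for s in range(152) for _ in range(N_SAMPLES)]
kept = select_sensors(toy_weights, n_sensors=152, n_keep=64)
n_features = len(kept) * N_SAMPLES
```

With 152 preselected sensors and 64 retained, the feature count is 64 × 32 = 2048, matching the dimensionality given above.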

2.4 Grasping Algorithm

In this section we describe the general procedure of

our grasp planning algorithm, whereas we present

the mathematical details in the Appendix. The

algorithm was developed to physically drive a robot

arm, but in this experiment it was used to provide

virtual reality feedback. Importantly, in this strategy

the robot serves as an intelligent, autonomous

actuator and does not drive predefined trajectories.

The algorithm assumes that object position and

shape coordinates relative to the manipulator are

known to the system. In this experiment, coordinates

of CAD-modelled objects were used. However,

coordinates could as well be generated by a 3D

object recognition system.

Central to our approach is that the contact

surfaces of the gripper’s fingers and the surfaces of

the objects were rasterized with virtual point poles.

We assumed an imaginary force field between the

poles on the manipulator and the poles on the target

object (see Appendix for details). The goal of the

algorithm is to initially generate a manipulator

posture that ensures a force closure grasp. The

following grasp is organized by closing the hand in a

real world scenario and by locking the object

coordinates relative to the finger surface coordinates

in the virtual scenario.

3 RESULTS

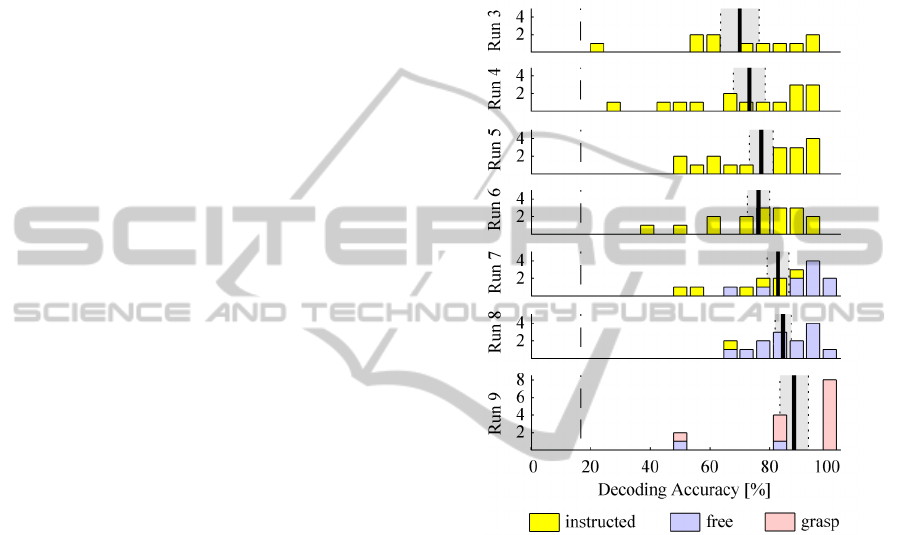

3.1 Decoder Accuracy

We determined the decoding accuracy as the ratio of

correctly decoded objects to the total

number of object selections. All subjects performed

the task reliably above guessing level which was

16.7 %. On average, the intended object selections

were correctly decoded from the MEG data in

77.7 % of all trials performed. Single subject

accuracies ranged from 55.6 % to 92.1 %. The average

accuracy was 73.9 % in the instructed selection mode

and 85.9 % in the free selection mode. This

performance difference is statistically significant

(Wilcoxon rank sum test: p=0.03). When subjects

received feedback by moving the virtual robot to the

grasp target, the average accuracy was even higher

and reached 91.2 %. Figure 2 depicts the evolution

of decoding accuracies over runs. The height of the

bars indicates the number of subjects (y-axis) who

achieved the respective decoding performance out of

19 possible percentage bins. Each histogram shows

the results from one run and the performance bins

are equally spaced from 0 % to 100 %. The

histograms are chronologically ordered from top to

bottom. Yellow bars indicate results from instructed

selection runs, blue bars indicate free selection run

results and light red bars indicate results in runs with

grasp feedback. Vertical dashed lines indicate the

guessing level and thick solid lines indicate the

average decoding accuracies over subjects (standard

error marked grey). The average decoding accuracy

increases gradually over the course of the

experiment. Moreover, the histograms show that the

highest accuracy over subjects was achieved in free

selection runs. Note that our system achieved perfect

detection in eight of the twelve subjects who

received virtual grasp feedback. However, only six

selections were performed by each subject in these

grasp selection runs.

Figure 2: Performance histograms. The ordinate indicates

the number of subjects who achieved a certain decoding

accuracy. The histograms show data from different runs

and code the type of run by colour. See text for details.

An established measure for the comparison of BCIs

is the information transfer rate (ITR) which

combines decoding accuracy and number of

alternatives into a single measure. We calculated the

ITR according to the method of Wolpaw et al.

(2000) at 3.4 to 12.0 bit/min for single subjects and

8.1 bit/min on average. Note that the maximum

achievable bit rate with the applied stimulation

scheme is 15.5 bit/min.
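The ITR figures can be checked with the formula of Wolpaw et al. (2000). The sketch below assumes one selection per 10 s stimulation interval with no pause between trials; the function names are hypothetical.

```python
import math


def bits_per_selection(n_classes, accuracy):
    """Information per selection in bits (Wolpaw et al., 2000)."""
    p, n = accuracy, n_classes
    bits = math.log2(n)
    if 0.0 < p < 1.0:
        bits += p * math.log2(p) + (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    elif p == 0.0:
        bits += math.log2(1.0 / (n - 1))
    return bits  # for p == 1 the two correction terms vanish


def itr_bits_per_min(n_classes, accuracy, seconds_per_selection):
    """Scale the bits per selection to bits per minute."""
    return bits_per_selection(n_classes, accuracy) * 60.0 / seconds_per_selection


itr_max = itr_bits_per_min(6, 1.0, 10.0)     # perfect decoding
itr_low = itr_bits_per_min(6, 0.556, 10.0)   # lowest single-subject accuracy
itr_high = itr_bits_per_min(6, 0.921, 10.0)  # highest single-subject accuracy
```

Under these assumptions, six alternatives and perfect accuracy give about 15.5 bit/min, and the lowest and highest single-subject accuracies give about 3.4 and 12.0 bit/min, in line with the values reported above.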

For online eye movement control, we observed

the subjects’ eyes on a video screen. In addition, we

inspected the EOG measurements offline. Both

methods confirmed that subjects followed the

instruction to keep fixation.

3.2 Grasping Performance

We evaluated the execution duration of the online

grasp calculation for different setups and objects.

We implemented our grasping algorithm with the

ability to distribute force computations to several

parallel threads. Here, we permitted five threads

on a 2.8 GHz AMD Opteron 8220 SE

processor. We calculated grasps of the six objects

shown in Figure 1. To assess effects of object

position, we arranged the objects at different

positions within the limits of our demonstrating

robot’s work space. Each object was placed once at

each of the positions depicted in Figure 1. The time

needed to plan the trajectory and execute the grasp

until reaching force closure is listed in Table 2 for

each object/position combination. The diagonal of

the table represents the actual object/position setup

during our experiment.

Table 2: Duration of grasp planning calculation for all object/position combinations in seconds. UL=upper left, LL=lower left, UR=upper right, LR=lower right.

| Object position | Object #1 | Object #2 | Object #3 | Object #4 | Object #5 | Object #6 |
|---|---|---|---|---|---|---|
| Left | 33.0 | 68.5 | 11.0 | 14.1 | 16.0 | 24.5 |
| UL | 25.5 | 34.0 | 16.5 | 13.5 | 39.0 | 46.7 |
| LL | 11.0 | 72.6 | 11.0 | 18.0 | 22.5 | 65.0 |
| UR | 15.5 | 42.5 | 15.0 | 12.5 | 37.0 | 46.0 |
| LR | 12.0 | 48.5 | 11.0 | 19.0 | 22.5 | 24.5 |
| Right | 11.0 | 35.6 | 13.0 | 14.5 | 19.5 | 29.5 |

The results indicate that the duration of grasp

planning depends on many parameters. The most

important determinant of execution time is the

number of point poles (Ο

)) which depends on the

level of detail of the object surface as well as the

physical constraints of the robot and object position.

We observed that even minimal differences in object

arrangement appear to have strong influence on

force closure termination. This is also indicated by

different execution times of identical objects at

symmetric positions (e.g. left/right). We consider it

likely that these differences are caused by numerical

precision issues due to the high number of

summations in equations (3) and (4) (see Appendix).

4 DISCUSSION

In the present work we demonstrated that the

oddball paradigm is well suited for use in a BCI to

reliably select one of several objects for grasping.

Importantly, this was achieved independently of eye

movements. We demonstrated that the performance

of the system improves with training. Furthermore,

our results suggest that performance improves even

further when subjects obtain more control in the free

selection and with realistic visual feedback. This

suggests that BCI control in our P300 paradigm is

improved with an increasing sense of agency.

A gaze independent BCI based on directing covert

attention is a fundamental requirement for patients

who cannot easily orient gaze to the target object.

Earlier reports suggested that eye movements greatly

improve performance in a P300 speller (Brunner et

al., 2010; Frenzel et al., 2011; Treder and Blankertz,

2010), due to contribution from visual areas to brain

wave classification (Bianchi et al., 2010). We extend

these previous studies and show that the P300-

paradigm is well suited for a gaze independent

object grasping BCI. We achieved independence

from visual components by instructing our subjects

to fixate and by excluding occipital sensors from the

analysis. This approach simulates a realistic setting

with patients who cannot move their eyes and are

therefore dependent on covert attention shift based

activation for control. To date, only a small number

of studies successfully implemented such a more

restrictive covert attention P300 approach (Aloise et

al., 2012; Liu et al., 2011; Treder et al., 2011).

We observed increasing decoder accuracy in the

course of the experiment. This suggests that the

increasing amount of training is beneficial for

performance in our BCI paradigm. However, due to

classifier updates performed in the course of the

experiment, the learning process is likely bilateral

and involves both the subjects and the classifier

(Curran and Stokes, 2003). Importantly, when

subjects were free to select the target object, the

decoding success was significantly higher compared

to the instructed selections. This suggests a strong

role for task involvement and the sense of agency in

our paradigm. When subjects performed runs

receiving grasp feedback, most of them achieved

perfect decoding accuracy. We expect the reliability

of the system to be further increased by extending

the stimulation interval (Aloise et al., 2012;

Hoffmann et al., 2008). Note that system reliability

is often more important for the user than a rapid but

error prone detection of intention.

The system presented here is efficient for use

with nearly no training. Most subjects performed

less than ten minutes of training in order to provide

data to the decoding algorithm. This is a very small

effort compared to motor imagery based systems

aiming to control movement in a few degrees of

freedom (Hochberg et al., 2012; Wolpaw and

McFarland, 2004).

In online closed-loop BCI studies the decoding

algorithm has to be fixed before the start of the

actual experiment. We decided to use SVM

classification because this is an established classifier

for high dimensional feature spaces that provides

high and robust generalization by upweighting

informative and downweighting uninformative

features (Cherkassky and Mulier, 1998).

Furthermore, it was shown that linear SVM was

equally accurate for P300 detection compared to

Fisher’s linear discriminant and stepwise linear

discriminant analysis (Krusienski et al., 2006).

Several existing studies make use of extended linear

discriminant analysis algorithms applied to EEG

data (Liu et al., 2011; Treder et al., 2011). However,

because MEG data are based on a much larger

number of sensors, these approaches cannot be

applied within a suitable time.

In order to reduce the burden of controlling a

complex manipulator with many degrees of freedom

by voluntary modulation of brain activity, we

combined a P300 BCI with a grasping system that

autonomously executes the grasp requiring only a

very low input bit rate, namely the command to

grasp an object known to the system. To execute the

grasp intended by the BCI user, we developed an

algorithm for autonomous grasp planning that can

place a reliable grasp on natural objects. The

execution times we achieved were practical for the

proposed task even though not optimal. In this work

we did not focus on timing optimization. However,

speeding up the calculations is a focus of our

future work. The proposed algorithm is

universal in the sense that it is not restricted to a

specific manipulator. Consequently, this algorithm

should also be easily transferable to arbitrary

prosthetic devices suitable for grasping potential

target objects with a force closure grasp.

As input brain signal for the BCI, we used the

MEG. This noninvasive technique measures

magnetic fields of cortical dipoles. While the

dynamic signal characteristics are comparable to

those in EEG, MEG tends to provide higher spatial

resolution (Bradshaw et al., 2001). We are aware

that this modality is not suitable for daily use and

particularly not for use of a prosthetic device. In

fact, we consider our study basic research, and to our

knowledge, this is the first implementation of an

MEG-based P300 closed-loop BCI.

5 CONCLUSIONS

We showed that noninvasive BCI in combination

with an intelligent actuator can be used in real world

settings to grasp and manipulate objects. This is an

important step towards the development of assistive

systems for severely impaired patients.

ACKNOWLEDGEMENTS

This work has been supported by the EU project

ECHORD number 231143 from the 7th Framework

Programme and by Land-Sachsen-Anhalt Grant

MK48-2009/003.

REFERENCES

Aloise, F., Schettini, F., Aricò, P., Salinari, S., Babiloni,

F., and Cincotti, F. (2012). A comparison of

classification techniques for a gaze-independent P300-

based brain-computer interface. Journal of Neural

Engineering, 9(4), 045012. doi:10.1088/1741-

2560/9/4/045012.

Bianchi, L., Sami, S., Hillebrand, A., Fawcett, I. P.,

Quitadamo, L. R., and Seri, S. (2010). Which

physiological components are more suitable for visual

ERP based brain-computer interface? A preliminary

MEG/EEG study. Brain Topography, 23(2), 180-185.

doi:10.1007/s10548-010-0143-0.

Bradshaw, L. A., Wijesinghe, R. S., and Wikswo, Jr, J.

(2001). Spatial filter approach for comparison of the

forward and inverse problems of

electroencephalography and magnetoencephalography.

Annals of Biomedical Engineering, 29(3), 214-226.

doi:10.1114/1.1352641.

Brunner, P., Joshi, S., Briskin, S., Wolpaw, J. R., Bischof,

H., and Schalk, G. (2010). Does the 'P300' speller

depend on eye gaze? Journal of Neural Engineering,

7(5), 056013. doi:10.1088/1741-2560/7/5/056013.

Cherkassky, V. and Mulier, F. (1998). Learning from

Data: Concepts, Theory, and Methods. John Wiley &

Sons.

Curran, E. A. and Stokes, M. J. (2003). Learning to

control brain activity: a review of the production and

control of EEG components for driving brain-

computer interface (BCI) systems. Brain and

Cognition, 51(3), 326-336. doi:10.1016/S0278-

2626(03)00036-8.

Ericson, C. (2005). Real-time collision detection.

Amsterdam: Elsevier.

Farwell, L. A. and Donchin, E. (1988). Talking off the top

of your head: toward a mental prosthesis utilizing

event-related brain potentials. Electroencephalography

and Clinical Neurophysiology, 70(6), 510-523.

Frenzel, S., Neubert, E., and Bandt, C. (2011). Two

communication lines in a 3 × 3 matrix speller. Journal

of Neural Engineering, 8(3), 036021.

doi:10.1088/1741-2560/8/3/036021.

Guger, C., Daban, S., Sellers, E., Holzner, C., Krausz, G.,

Carabalona, R., Gramatica, F., and Edlinger, G.

(2009). How many people are able to control a P300-

based brain-computer interface (BCI)? Neuroscience

Letters, 462(1), 94-98.

doi:10.1016/j.neulet.2009.06.045.

Guger, C., Edlinger, G., Harkam, W., Niedermayer, I., and

Pfurtscheller, G. (2003). How many people are able to

operate an EEG-based brain-computer interface

(BCI)? IEEE Transactions on Neural Systems and

Rehabilitation Engineering, 11(2), 145-147.

doi:10.1109/TNSRE.2003.814481.

Hochberg, L. R., Bacher, D., Jarosiewicz, B., Masse, N.

Y., Simeral, J. D., Vogel, J., Haddadin, S., Liu, J.,

Cash, S. S., van der Smagt, P., and Donoghue, J. P.

(2012). Reach and grasp by people with tetraplegia

using a neurally controlled robotic arm. Nature,

485(7398), 372-375. doi:10.1038/nature11076.

Hoffmann, U., Vesin, J.-M., Ebrahimi, T., and Diserens,

K. (2008). An efficient P300-based brain-computer

interface for disabled subjects. Journal of

Neuroscience Methods, 167(1), 115-125.

doi:10.1016/j.jneumeth.2007.03.005.

Khatib, O. (1986). Real-time obstacle avoidance for

manipulators and mobile robots. The International

Journal of Robotics Research, 5(1), 90-98.

Krusienski, D. J., Sellers, E. W., Cabestaing, F., Bayoudh,

S., McFarland, D. J., Vaughan, T. M., and Wolpaw, J.

R. (2006). A comparison of classification techniques

for the P300 speller. Journal of Neural Engineering,

3(4). doi:10.1088/1741-2560/3/4/007.

Kuzborskij, I., Gijsberts, A., and Caputo, B. (2012). On the

challenge of classifying 52 hand movements from

surface electromyography. Proceedings of the Annual

International Conference of the IEEE Engineering in

Medicine and Biology Society (EMBC).

doi:10.1109/EMBC.2012.6347099.

Liu, Y., Zhou, Z., and Hu, D. (2011). Gaze independent

brain-computer speller with covert visual search tasks.

Clinical Neurophysiology, 6.

doi:10.1016/j.clinph.2010.10.049.

Pfurtscheller, G., Neuper, C., Guger, C., Harkam, W.,

Ramoser, H., Schlögl, A., Obermaier, B., and

Pregenzer, M. (2000). Current trends in Graz

Brain-Computer Interface (BCI) research. IEEE

Transactions on Rehabilitation Engineering, 8(2),

216-219. doi:10.1109/86.847821.

Quandt, F., Reichert, C., Hinrichs, H., Heinze, H. J.,

Knight, R. T., and Rieger, J. W. (2012). Single trial

discrimination of individual finger movements on one

hand: A combined MEG and EEG study. Neuroimage,

59(4), 3316-3324.

doi:10.1016/j.neuroimage.2011.11.053.

Rieger, J. W., Reichert, C., Gegenfurtner, K. R., Noesselt,

T., Braun, C., Heinze, H.-J., Kruse, R., and Hinrichs,

H. (2008). Predicting the recognition of natural scenes

from single trial MEG recordings of brain activity.

Neuroimage, 42(3), 1056-1068.

doi:10.1016/j.neuroimage.2008.06.014.

Sahbani, A., El-Khoury, S., and Bidaud, P. (2012). An

overview of 3D object grasp synthesis algorithms.

Robotics and Autonomous Systems, 60(3), 326-336.

doi:10.1016/j.robot.2011.07.016.

Siciliano, B. and Khatib, O. (2008). Springer handbook of

robotics. Springer.

Siciliano, B. and Villani, L. (1999). Robot force control.

Boston: Kluwer Academic.

Treder, M. S. and Blankertz, B. (2010). (C)overt attention

and visual speller design in an ERP-based

brain-computer interface. Behavioral and Brain Functions,

6, 28. doi:10.1186/1744-9081-6-28.

Treder, M. S., Schmidt, N. M., and Blankertz, B. (2011).

Gaze-independent brain-computer interfaces based on

covert attention and feature attention. Journal of

Neural Engineering, 8(6), 066003. doi:10.1088/1741-

2560/8/6/066003.

Velliste, M., Perel, S., Spalding, M. C., Whitford, A. S.,

and Schwartz, A. B. (2008). Cortical control of a

prosthetic arm for self-feeding. Nature, 453(7198),

1098-1101. doi:10.1038/nature06996.

Vidaurre, C. and Blankertz, B. (2010). Towards a cure for

BCI illiteracy. Brain Topography, 23(2), 194-198.

doi:10.1007/s10548-009-0121-6.

Wolpaw, J. R. (2013). Brain-computer interfaces.

Handbook of Clinical Neurology, 110, 67-74.

doi:10.1016/B978-0-444-52901-5.00006-X.

Wolpaw, J. R., Birbaumer, N., Heetderks, W. J.,

McFarland, D. J., Peckham, P. H., Schalk, G.,

Donchin, E., Quatrano, L. A., Robinson, C. J., and

Vaughan, T. M. (2000). Brain-computer interface

technology: a review of the first international meeting.

IEEE Transactions on Rehabilitation Engineering,

8(2), 164-173. doi:10.1109/TRE.2000.847807.

Wolpaw, J. R. and McFarland, D. J. (2004). Control of a

two-dimensional movement signal by a noninvasive

brain-computer interface in humans. Proceedings of

the National Academy of Sciences of the United States

of America, 101(51), 17849-17854.

doi:10.1073/pnas.0403504101.

APPENDIX

In Section 2.4 we described how the object

and gripper surfaces are rasterized with virtual point poles. Here

we describe the algorithm in more detail.

Our grasp planning algorithm is organized by simulating the action of forces between target object and manipulator in consecutive time frames. The object poles are defined as positive, whereas the manipulator poles are defined as negative. In accordance with Khatib (1986), we assume that opposite poles attract each other while like poles do not interact. The magnitude of the force between two poles was calculated as an exponential function of the distance between the poles (equation 1); the unit of the force is arbitrary. The exponential function limits the force to a maximum of 1 unit. This avoids infinite forces in collision scenarios and provides a suitable scaling to instantiate both propulsive forces between manipulator and object and repulsive forces to reject manipulator poles that penetrate the object’s boundary.

The total propulsive force affecting one point pole on the manipulator is calculated from a set of object point poles, defined in equation (2). This set contains only those object poles for which the angle between the surface normal of the object pole and the surface normal of the manipulator pole exceeds a threshold. We included this constraint to restrict interactions to opposing surface force vectors. The force that moves the manipulator is then calculated by summing the pairwise forces over all object poles in this set (equation 3).
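The force summation can be illustrated with a small sketch. It does not reproduce the expressions of the paper: for illustration only, it assumes a force magnitude of exp(-d) for a pole distance d, which is bounded by 1, and it treats two surface normals as opposing when their dot product is below an assumed threshold `max_cos`. All function names are hypothetical.

```python
import math


def pole_force(p, q):
    """Assumed bounded force magnitude: exp(-distance), never above 1."""
    return math.exp(-math.dist(p, q))


def dot(a, b):
    """Dot product of two vectors given as sequences."""
    return sum(x * y for x, y in zip(a, b))


def propulsive_force(m_pos, m_normal, object_poles, max_cos=0.0):
    """Sum the attraction of all opposing object poles on one manipulator pole.

    object_poles -- list of (position, unit_normal) tuples
    max_cos      -- assumed threshold on the cosine between the normals
    """
    total = [0.0, 0.0, 0.0]
    for o_pos, o_normal in object_poles:
        if dot(m_normal, o_normal) >= max_cos:
            continue  # normals do not oppose each other: pair is ignored
        d = math.dist(m_pos, o_pos)
        if d == 0.0:
            continue  # coincident poles define no direction
        f = pole_force(m_pos, o_pos)
        for k in range(3):
            total[k] += f * (o_pos[k] - m_pos[k]) / d
    return total


# One finger pole facing +x; only the first object pole faces it.
poles = [((1.0, 0.0, 0.0), (-1.0, 0.0, 0.0)),
         ((0.0, 1.0, 0.0), (1.0, 0.0, 0.0))]
force = propulsive_force((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), poles)
```

In this toy case only the pole whose normal points back at the finger contributes, so the resulting force points along +x. An inverse-square law would diverge as the distance approaches zero, which is the behaviour the bounded exponential form avoids.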

The manipulator’s effective joint torque can be calculated from this force by means of the Jacobian generated from the joint angles and the point poles (Siciliano and Villani, 1999), as given in equation (4), where external moments are considered to be zero. In order to simulate the manipulator movement, we calculated the new joint angle of an axis by solving the equation system (5) and (6), which contains the inertia tensor of the robot’s solid elements and is set up for each of the manipulator axes. We chose a heuristically dynamic calculation of the time frame length, which is proportional to the mean distance between the two sets of point poles.
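The mapping from a pole force to joint torques and the subsequent joint update can be sketched for a planar two-link arm. This is a simplified stand-in for the robot used in the study: the arm geometry, the scalar inertia per joint and the explicit Euler-type update are assumptions made for illustration, and the function names are hypothetical.

```python
import math


def jacobian_planar_2r(q1, q2, l1=1.0, l2=1.0):
    """2x2 Jacobian of the fingertip position of a planar two-link arm."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-l1 * s1 - l2 * s12, -l2 * s12],
            [l1 * c1 + l2 * c12, l2 * c12]]


def joint_torques(jac, force):
    """tau = J^T F, with external moments taken as zero."""
    return [sum(jac[r][c] * force[r] for r in range(len(force)))
            for c in range(len(jac[0]))]


def euler_step(q, omega, tau, inertia, dt):
    """Assumed update: integrate the acceleration tau/inertia over one frame."""
    new_omega = [w + dt * t / i for w, t, i in zip(omega, tau, inertia)]
    new_q = [a + dt * w for a, w in zip(q, new_omega)]
    return new_q, new_omega


# Stretched arm along x, unit force along y acting on the fingertip.
tau = joint_torques(jacobian_planar_2r(0.0, 0.0), [0.0, 1.0])
q_new, omega_new = euler_step([0.0, 0.0], [0.0, 0.0], tau, [1.0, 1.0], dt=0.1)
```

For the stretched arm, the force along y produces torques of 2 and 1 units at the two joints, whereas a force along x would produce none, which shows how the Jacobian transpose distributes a Cartesian force over the axes.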

Collision detection was performed for the new

posture before a new time frame was assigned to be

valid and the position update was sent to the

manipulator. We used standard techniques (Ericson,

2005) to detect surface intersections. If intersections

were detected, repulsive forces were calculated for

the affected point poles, directed towards their position in

the last valid time frame and satisfying equation (1).

If no intersections were detected, the robot moved to

the new coordinates. This procedure was repeated

until the force closure condition (Siciliano and

Khatib, 2008) was detected.
