Machine Understanding and Avoidance of Misunderstanding in Agent-directed Simulation and in Emotional Intelligence

Tuncer Ören¹, Mohammad Kazemifard² and Levent Yilmaz³

¹ School of Electrical Eng. & Computer Science, University of Ottawa, 800 King Edward Ave., Ottawa, ON, Canada

² Department of Computer Engineering, Razi University, Kermanshah, Iran

³ Department of Computer Science and Software Engineering, Auburn University, Auburn, AL, U.S.A.

Keywords: Agents with Emotional Intelligence, Emotional Intelligence Simulation, Machine Understanding, Avoidance of Misunderstanding.

Abstract: Simulation is applied in many important projects, where it often serves as vital infrastructure. Several types of computational intelligence techniques have become part of the abilities of simulation. An important aspect of intelligence is the ability to understand. Agent-directed simulation (ADS) is a comprehensive paradigm that covers all aspects of the synergy of software agents and simulation, and our approach is to develop agents with understanding abilities. After a brief review of ADS, our paradigms of machine understanding are presented. The article then systematically identifies the types of misunderstanding that might occur. We plan to avoid some of these misunderstandings and, especially, to give our applications self-attesting abilities that document which types of misunderstanding are avoided.

1 INTRODUCTION

Simulation is applied in many important projects, where it often serves as vital infrastructure. Several types of computational intelligence techniques have become part of the abilities of simulation (Ören, 1995; Yilmaz and Ören, 2009).

An important aspect of intelligence is the ability to understand. Our research on machine understanding started with the understanding of simulation programs (Ören et al., 1990) and evolved to understanding software in general (Ören, 1992), then to understanding systems (Ören, 2000), to agents with the ability to understand emotions (Kazemifard et al., 2009), and finally to machine understanding in emotional intelligence simulation (Kazemifard et al., 2013).

Failure avoidance has recently been introduced to advanced simulation studies as a paradigm complementing validation and verification studies (Ören and Yilmaz, 2009).

This article builds on our previous work, especially on machine understanding as applied to agents used in simulation and on misunderstanding. In this article, however, we add three machine understanding paradigms to our basic machine understanding paradigm.

After this introduction, Section 2 concisely reviews our view of agent-directed simulation, which provides a comprehensive framework for considering all aspects of the synergy of software agents and simulation. In Section 3, we discuss our four paradigms for machine understanding. Section 4 systematizes most types of misunderstanding applicable to machine understanding. Section 5 presents the conclusions and some of our plans for future research.

2 AGENT-DIRECTED SIMULATION

Agent-Directed Simulation (ADS) is a unifying and comprehensive framework that allows the integration of agent and simulation technologies (Ören, 2000). Agents are often considered as model design metaphors in the development of simulations. Yet this narrow view limits the potential of agents to improve various other dimensions of simulation (Yilmaz and Ören, 2009). To this end, ADS comprises three distinct yet related areas that can be grouped under two categories as follows:

Simulation for Agents (i.e., agent simulation) involves the use of simulation modeling methodology and technologies to analyze, design, model, simulate, and test agent systems. This includes, but is not limited to, using agents as model design elements (i.e., agent-based modeling).

Agents for Simulation: (1) agent-supported simulation involves the use of agents as support facilities to enable computer assistance in simulation-based problem solving (e.g., simulation experiment management); (2) agent-based simulation, on the other hand, focuses on the use of agents for the generation of model behavior (e.g., simulator coordination, run-time models) in a simulation study, as well as agent-initiated simulation.

Ören T., Kazemifard M. and Yilmaz L.
Machine Understanding and Avoidance of Misunderstanding in Agent-directed Simulation and in Emotional Intelligence.
DOI: 10.5220/0004635003180327
In Proceedings of the 3rd International Conference on Simulation and Modeling Methodologies, Technologies and Applications (SIMULTECH-2013), pages 318-327
ISBN: 978-989-8565-69-3
Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

In agent simulation, agents possess high-level mechanisms that include communication protocols for interaction, task allocation, coordination of actions, and conflict resolution at varying levels of sophistication. Agent-based simulation focuses on the use of agent technology to monitor and generate model behavior. This is similar to the use of Artificial Intelligence techniques for the generation of model behavior (e.g., qualitative simulation and knowledge-based simulation). Agents can provide cognitive architectures that allow reasoning and planning, and they can serve as run-time models for managing simulation model behavior, such as dynamic model updating and symbiotic simulation. That is, the context awareness of intelligent agents can facilitate simulator coordination, where run-time decisions for model staging and updating take place to facilitate dynamic composability. Agent-supported simulation, on the other hand, uses agents to support simulations as well as simulation studies by enhancing cognitive capabilities in problem specification, simulation experiment management, and behavior analysis.

Agent-supported simulation is often used for the following purposes (Yilmaz and Ören, 2009):

to provide computer assistance for front-end and/or back-end interface functions;

to process elements of a simulation study symbolically (for example, for consistency checks and built-in reliability); and

to provide cognitive abilities, such as learning or understanding abilities, to the elements of a simulation study.

3 MACHINE UNDERSTANDING

In the study of natural phenomena, the role of simulation is often cited as “to gain insight,” which is another way of saying “to understand.” Understanding is an important philosophical topic. From a pragmatic point of view, it has broad application potential in many computerized studies, including program understanding, machine vision, fault detection based on machine vision, and situation awareness and assessment. Therefore, systematic studies are warranted of the elements, structures, architectures, and scope of applications of computerized understanding systems, as well as of the characteristics of the results (or products) of understanding processes.

Dictionary definitions of “to understand” include the following: to seize the meaning of, to accept as a fact, to believe, to be thoroughly acquainted with, to form a reasoned judgment concerning something, to have the power of seizing meanings, forming reasoned judgments, to appreciate and sympathize with, to tolerate, and to possess a passive knowledge of a language.

For machine understanding, or computerized understanding, we aim at a limited scope, as was expressed in a previous publication: “We say that a system ‘knows about’ a class of objects, or relations, if it has an internal relation for the class which enables it to operate on objects in this class and to communicate with others about such operations. Thus, if a system knows about X, a class of objects or relations on objects, it is able to use an (internal) representation of the class in at least the following ways: receive information about the class, generate elements in the class, recognize members of the class and discriminate them from other class members, answer questions about the class, and take into account information about changes in the class members” (Zeigler, 1986, as quoted in Ören, 2000). For additional clarification of understanding and its philosophical roots, see Ören (2000).

3.1 Machine Understanding: Basic Paradigm

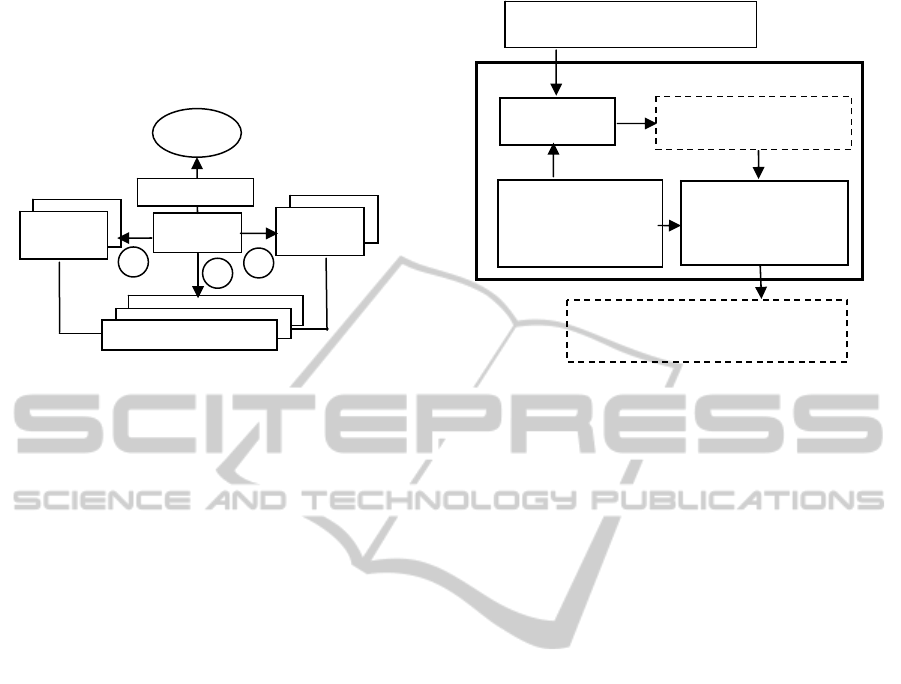

As seen in Figure 1, an understanding system requires a meta-model, a perception of the source in terms of the elements and constraints depicted in the meta-model, and an analyzer that maps the perceived elements to constructs of the meta-model. In this context, a model is an abstraction of a phenomenon or system, whereas a meta-model provides an abstraction of the properties of the model itself. A model conforms to its meta-model in the way that a computer program conforms to the grammar of the programming language in which it is developed.

Figure 1: Machine understanding – Basic paradigm.

A system A can understand an entity B (Entity, Relation, Attributes) if and only if three conditions are satisfied (Ören et al., 2007):

A can access C, a meta-model of Bs. (C is the meta-level knowledge of A about Bs.) The meta-model can be unique or multiple, fixed, evolvable, replaceable, or functionally equivalent (similar but not identical) to another one. In the basic paradigm, we assume that the meta-model is unique and fixed, i.e., non-evolvable and non-replaceable.

A can analyze and perceive B to generate D. (D is a perception of B by A with respect to C.)

A can map the relationships between C and D for existing and non-existing features in C and/or D to generate the result (or product) of the understanding process.
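To make the three conditions concrete, the following minimal Python sketch assumes, purely for illustration, that a meta-model C is a fixed table of feature names and expected values, that the analyzer produces a perception D as a table of observed features, and that the mapping step classifies the features that exist in C and/or D. `MetaModel`, `perceive`, and `understand` are hypothetical names, not part of the authors' framework.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class MetaModel:
    """C: unique, fixed meta-knowledge about a class of entities (Bs).

    Assumption: each feature name maps to its expected value.
    """
    name: str
    features: Mapping[str, str]


def perceive(raw_observations: Mapping[str, str]) -> dict[str, str]:
    """Analyzer: produce perception D of entity B.

    Here perception only normalizes feature names; a real analyzer would
    extract features from sensor data, program text, and so on.
    """
    return {key.strip().lower(): value for key, value in raw_observations.items()}


def understand(c: MetaModel, d: Mapping[str, str]) -> dict[str, object]:
    """Map relationships between C and D for existing and non-existing features."""
    expected, perceived = set(c.features), set(d)
    both = expected & perceived
    return {
        "meta_model": c.name,
        "matching": sorted(f for f in both if c.features[f] == d[f]),
        "conflicting": sorted(f for f in both if c.features[f] != d[f]),
        "expected_but_absent": sorted(expected - perceived),
        "perceived_but_unexplained": sorted(perceived - expected),
    }


bird = MetaModel("bird", {"wings": "yes", "feathers": "yes", "legs": "2"})
d = perceive({"Wings": "yes", "Legs": "2", "Colour": "red"})
u = understand(bird, d)
# u["expected_but_absent"] == ["feathers"]; u["perceived_but_unexplained"] == ["colour"]
```

Features expected by C but missing from D, and features in D that C cannot account for, are the "existing and non-existing features" that the third condition asks the mapping to handle.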

As shown in Figure 2, a functional decomposition reveals that an understanding system has a meta-model, an analyzer, and an evaluator. The meta-model stores knowledge about Bs. The analyzer analyzes inputs with respect to C to produce a perception of B. The evaluator can compare the perception of B with the meta-model to provide additional information about B, such as its non-observable characteristics and how this instance of B relates to other Bs. The product of the understanding process has the following characteristics:

It depends on the understanding system; that is, another understanding system may have a different understanding of the same entity.

For a system A, understanding depends on: (1) its meta-model, (2) its analyzer, and (3) its evaluator; that is, with a different meta-model, analyzer, or evaluator, the understanding may differ.
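The dependence of the product on the meta-model, analyzer, and evaluator can be shown with a small, hypothetical Python sketch. It assumes that C lists, for each known class, the features that identify it and the non-observable characteristics that membership implies; the evaluator uses these to infer what cannot be observed. Two systems that share an analyzer but hold different meta-models then reach different understandings of the same entity.

```python
from dataclasses import dataclass
from typing import Callable, Mapping

# Hypothetical meta-model entry: (identifying features, implied non-observable traits).
ClassKnowledge = tuple[frozenset[str], frozenset[str]]


@dataclass(frozen=True)
class UnderstandingSystem:
    """System A decomposed into meta-model C, analyzer, and evaluator."""
    meta_model: Mapping[str, ClassKnowledge]
    analyzer: Callable[[Mapping[str, bool]], frozenset[str]]

    def evaluate(self, perception: frozenset[str]) -> dict[str, frozenset[str]]:
        """Evaluator: relate D to C and infer non-observable characteristics."""
        return {
            cls: implied
            for cls, (identifying, implied) in self.meta_model.items()
            if identifying <= perception  # all identifying features perceived
        }

    def understand(self, entity: Mapping[str, bool]) -> dict[str, frozenset[str]]:
        return self.evaluate(self.analyzer(entity))


def observed_features(entity: Mapping[str, bool]) -> frozenset[str]:
    """Analyzer: keep only the features that were actually observed."""
    return frozenset(name for name, present in entity.items() if present)


penguin = {"feathers": True, "wings": True, "swims": True}

zoologist = UnderstandingSystem(
    {"bird": (frozenset({"feathers", "wings"}), frozenset({"lays eggs", "warm-blooded"}))},
    observed_features,
)
layperson = UnderstandingSystem(
    {"fish": (frozenset({"swims"}), frozenset({"breathes with gills"}))},
    observed_features,
)

print(zoologist.understand(penguin))  # understood as a bird
print(layperson.understand(penguin))  # misunderstood as a fish
```

The layperson's result illustrates both characteristics listed above: the same entity is understood differently, and here wrongly, only because the meta-model differs.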

Figure 2: Functional decomposition of an understanding system (arrows indicate information flow).

3.2 Machine Understanding: Extended Paradigms

The basic paradigm of understanding can be extended in three ways: (1) rich understanding, (2) exploratory understanding, and (3) theory-based understanding. As clarified in the following subsections, the four paradigms for machine understanding have the following characteristics:

Basic paradigm of understanding: the system has the background knowledge (i.e., meta-knowledge) needed to understand.

Rich understanding: all or some of the understanding elements may have more than one version.

Exploratory understanding: background knowledge (a meta-model) has to be found or developed to process the perception.

Theory-based understanding: a theory (or theoretical model) is formulated without any observation; then technology has to be developed to observe the phenomena. Once the phenomena are observed (perceived), they can confirm the theory, which in turn is used to explain the phenomena.

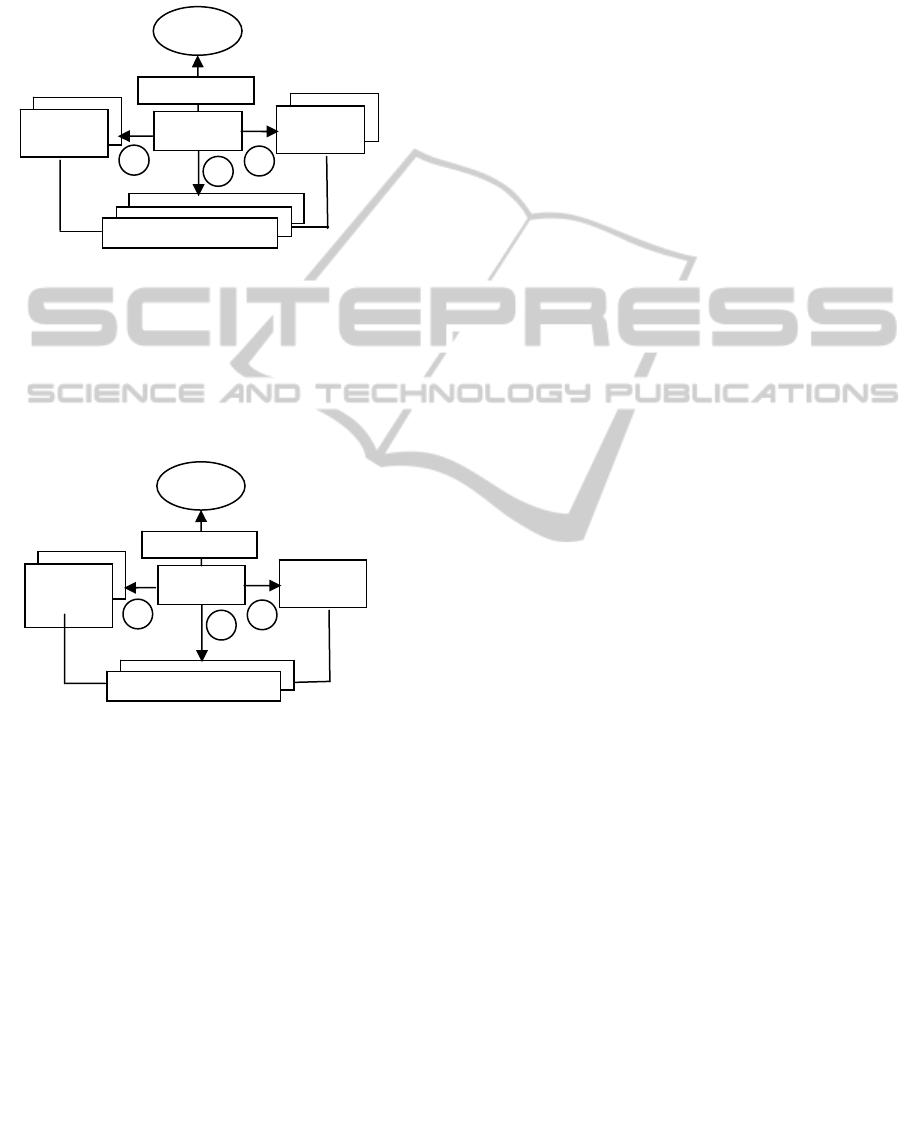

3.2.1 Rich Understanding Paradigm

A model of rich understanding is represented in Figure 3. Rich understanding differs from the basic model of understanding in the following respects:

There can be more than one meta-model in rich understanding; some may focus on different aspects or may have different resolutions.

There can be more than one perception of the entity to be understood.

There can be different interpretations of the perception(s) with respect to the meta-model(s).

[Figure 2 labels: B: entity to be understood; understanding system A; Analyzer; D: perception of B (by A) with respect to C; C: meta-model of Bs (meta-knowledge about Bs); Evaluator (interprets the relationship between C & D); U: an understanding of B by A with respect to C.]

[Figure labels: Entity B; System A; Cs: meta-models; Ds: perceptions; Relationships: C(s)-D(s); can understand; steps 1, 2, 3.]

The number of possible understandings can be as large as the size of the Cartesian product of the sets of meta-models, perceptions, and interpretations. Rich understanding can allow multi-understanding and switchable understanding.

Figure 3: Rich understanding.
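The Cartesian-product view of rich understanding can be sketched in Python with `itertools.product`. The meta-model, perception, and interpretation names below are invented for illustration, and `SwitchableUnderstanding` is a hypothetical helper: it keeps every combination (multi-understanding) but exposes one active combination that can be switched on request (switchable understanding).

```python
from itertools import product

# Hypothetical alternatives for each element of the understanding process.
meta_models = ["anatomical", "behavioural"]
perceptions = ["photo", "video", "sound recording"]
interpretations = ["strict", "lenient"]

# Multi-understanding: every combination is one candidate understanding.
understandings = list(product(meta_models, perceptions, interpretations))
# len(understandings) == 2 * 3 * 2 == 12


class SwitchableUnderstanding:
    """Keeps all combinations but exposes one active understanding at a time."""

    def __init__(self, combinations):
        self.combinations = list(combinations)
        self.active = self.combinations[0]

    def switch(self, meta_model=None, perception=None, interpretation=None):
        """Activate the first combination that matches every given element."""
        wanted = (meta_model, perception, interpretation)
        for combo in self.combinations:
            if all(w is None or w == c for w, c in zip(wanted, combo)):
                self.active = combo
                return combo
        raise ValueError(f"no combination matches {wanted}")


switcher = SwitchableUnderstanding(understandings)
switcher.switch(meta_model="behavioural", perception="video")
```

In practice, some combinations would be pruned as incompatible, which is why the size of the product is an upper bound rather than an exact count.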

3.2.2 Exploratory Understanding Paradigm

The exploratory understanding process (see Figure 4) starts with a perception D. Formulating the basic knowledge needed to interpret perception D requires meta-knowledge to be formulated and/or found, which in turn requires formulating and testing hypotheses. In exploratory understanding, changing the point of view may be very useful for understanding the phenomenon or the entity.

Figure 4: Exploratory understanding.
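Exploratory understanding can be sketched as a search: starting from a perception D with no meta-model, the system tries candidate hypotheses (tentative meta-models) and, when none fits, changes its point of view by re-expressing D. The hypotheses, the two viewpoints, and the function `explore` are hypothetical illustrations, not a method from the cited work.

```python
from typing import Callable, Iterable, Optional

Perception = dict[str, float]
Hypothesis = tuple[str, Callable[[Perception], bool]]
Viewpoint = Callable[[Perception], Perception]


def explore(d: Perception, hypotheses: Iterable[Hypothesis],
            viewpoints: Iterable[Viewpoint]) -> Optional[tuple[str, str]]:
    """Return (viewpoint, hypothesis) for the first hypothesis that fits D.

    Each viewpoint re-expresses D (a change of point of view); each
    hypothesis is tested against the re-expressed perception.
    Returns None if no tentative meta-model accounts for D.
    """
    hypotheses = list(hypotheses)  # reused for every viewpoint
    for view in viewpoints:
        transformed = view(d)
        for name, test in hypotheses:
            if test(transformed):
                return view.__name__, name
    return None


def raw(d: Perception) -> Perception:
    """Default viewpoint: the perception as received."""
    return dict(d)


def as_rates(d: Perception) -> Perception:
    """Alternative viewpoint: look at speed instead of distance and time."""
    return {**d, "speed_kmh": d["distance_km"] / d["time_h"]}


candidates = [
    ("stationary object", lambda p: p.get("speed_kmh") == 0.0),
    ("moving vehicle", lambda p: p.get("speed_kmh", 0.0) > 40.0),
]
d = {"distance_km": 100.0, "time_h": 2.0}
result = explore(d, candidates, [raw, as_rates])  # ("as_rates", "moving vehicle")
```

Neither hypothesis fits the raw perception; only after the change of viewpoint does one of them account for D, which mirrors the remark above about the usefulness of changing the point of view.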

3.2.3 Theory-based Understanding Paradigm

Theory-based understanding starts with a hypothesis (or theory); then the necessary technology is developed to perceive (detect) the relevant phenomena, so that the hypothesis can be tested later. A well-known example is gravitational waves (ripples of spacetime caused by events such as colliding neutron stars and merging black holes), which were predicted by Einstein in 1916 on the basis of his theory of general relativity. At the time of writing (2013), technology to detect gravitational waves directly was still not available; the first direct detection, by the LIGO observatories, came in 2015.

As another example, in nuclear physics, several models to explain elementary particles have been developed over the years; this exemplifies the existence of several meta-models. A pictorial representation of theory-based understanding would be similar to Figure 3, which represents rich understanding.
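The status of a theory-based understanding can be sketched as follows, assuming (for illustration only) that a theory yields one numerical prediction with a tolerance and that an instrument is characterized by a single precision value. Until an instrument precise enough exists, the understanding stays pending; afterwards, the observation either confirms or refutes the theory. `Theory`, `Instrument`, and `assess` are hypothetical names.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "awaiting adequate observation technology"
    CONFIRMED = "confirmed"
    REFUTED = "refuted"


@dataclass(frozen=True)
class Theory:
    name: str
    predicted_value: float
    tolerance: float  # acceptable difference between prediction and observation


@dataclass(frozen=True)
class Instrument:
    precision: float  # smallest difference the instrument can resolve


def assess(theory: Theory, instrument: Optional[Instrument],
           observed: Optional[float]) -> Status:
    """Theory-based understanding: the theory exists before the observation."""
    if instrument is None or observed is None:
        return Status.PENDING
    if instrument.precision > theory.tolerance:
        # The technology is not yet precise enough to test the prediction.
        return Status.PENDING
    if abs(observed - theory.predicted_value) <= theory.tolerance:
        return Status.CONFIRMED
    return Status.REFUTED


t = Theory("hypothetical effect", predicted_value=1.0, tolerance=0.05)
```

For example, `assess(t, Instrument(precision=0.5), 1.02)` stays pending because the instrument cannot resolve the tolerance, whereas the same observation from an instrument with precision 0.01 confirms the theory.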

3.3 Machine Understanding of Emotions: Emotional Intelligence Simulation

According to the theory of emotional intelligence (Mayer and Salovey, 1997), the four psychological abilities that enable humans to relate emotionally to one another are: (1) emotion perception, (2) thought facilitation using emotions, (3) emotion understanding, and (4) emotion management. The ability to understand emotions is desirable in intelligent agents (Dias and Paiva, 2009; Kazemifard et al., 2009; Kazemifard et al., 2012).

"Emotion understanding is a cognitive activity of making inferences using knowledge about emotions about why an agent is in an emotional state (e.g., unfair treatment makes an individual angry) and which actions are associated with the emotional state (e.g., an angry individual attacks others)" (Kazemifard et al., 2013).

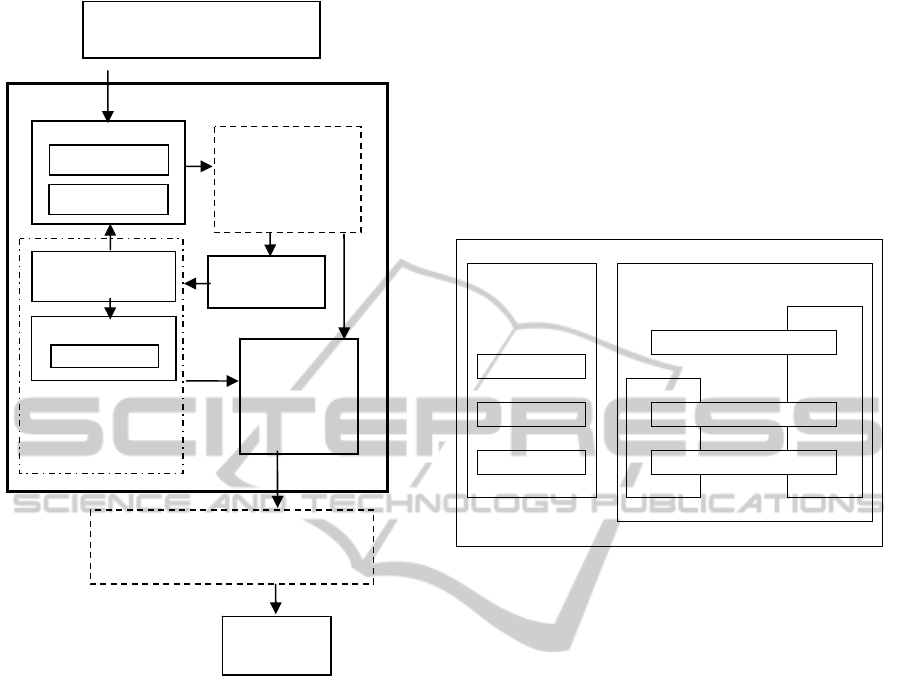

A functional decomposition of our emotion understanding framework, which is an extension of our basic machine understanding paradigm, is depicted in Figure 5.

Our emotion understanding framework consists of four elements (Kazemifard et al., 2013):

a meta-model, or knowledge about agents and emotions. It consists of an episodic memory to store observed details of experienced events and a semantic memory to store general knowledge about emotions, such as their similarities and the relationships among emotions and experiences in episodic memory. The semantic memory includes semantic graphs to represent knowledge about past emotional experience(s).

a perceptor (or analyzer) to perceive agents and emotions. It assigns similar agents to types and perceives the emotional states of agents.

an evaluator of the perceived agent and the emotion(s) with respect to the meta-knowledge, that is, the states of the episodic and semantic memories.

a memory modulator to update the meta-model based on observed emotional reactions of agents to actions.

[Figure labels: Entity B; System A; Cs: meta-models; D: perception; Relationships: C(s)-D(s); can understand; steps 1, 2, 3.]

[Figure labels: Entity B; System A; Cs: meta-models; Ds: perceptions; Relationships: C(s)-D(s); can understand; steps 1, 2, 3.]

Figure 5: Functional decomposition of the framework of emotion understanding (Kazemifard et al., 2013).
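The four elements of the framework can be illustrated with a deliberately simplified Python sketch. It is not the implementation of Kazemifard et al. (2013): the semantic memory is reduced to a weighted graph from (agent type, emotion) pairs to the events that caused them, the perceptor types agents by a made-up age rule, and the evaluator answers only the "why" question by returning the most frequent known cause. All names are hypothetical.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class EmotionUnderstanding:
    """Hypothetical sketch of the four elements of the framework."""
    # Episodic memory: observed (agent type, event, emotion) episodes.
    episodic: list[tuple[str, str, str]] = field(default_factory=list)
    # Semantic memory: weighted edges (agent type, emotion) -> causing event.
    semantic: defaultdict = field(default_factory=lambda: defaultdict(Counter))

    def perceive(self, agent: dict) -> tuple[str, str]:
        """Perceptor: assign the agent to a type and read its emotional state."""
        agent_type = "child" if agent["age"] < 12 else "adult"  # made-up rule
        return agent_type, agent["emotion"]

    def evaluate(self, agent: dict) -> str:
        """Evaluator: infer why the perceived agent is in its emotional state."""
        causes = self.semantic.get(self.perceive(agent))
        if not causes:
            return "unknown cause"
        return causes.most_common(1)[0][0]

    def modulate(self, agent: dict, event: str) -> None:
        """Memory modulator: store the episode and update the semantic graph."""
        agent_type, emotion = self.perceive(agent)
        self.episodic.append((agent_type, event, emotion))
        self.semantic[(agent_type, emotion)][event] += 1


eu = EmotionUnderstanding()
eu.modulate({"age": 30, "emotion": "anger"}, "unfair treatment")
eu.modulate({"age": 35, "emotion": "anger"}, "unfair treatment")
eu.modulate({"age": 40, "emotion": "anger"}, "insult")
cause = eu.evaluate({"age": 28, "emotion": "anger"})  # "unfair treatment"
```

Because the perceptor generalizes over agent types, an unseen adult is understood through the experiences of other adults, while an angry child is reported as having an unknown cause rather than being forced into the adults' pattern.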

4 MISUNDERSTANDING

There are three possible outcomes of an understanding system: (1) the system provides an understanding of the entity or phenomenon; (2) the system cannot understand it, and may or may not declare its inability to understand; and (3) the system provides a flawed understanding, i.e., it misunderstands the entity or phenomenon and does not warn the user (a human or another agent) about its shortcoming(s). Failures in understanding were first elaborated by Ören and Yilmaz (2011).
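One way to keep a system out of the third, silent outcome is to make every answer carry an explicit outcome label and to downgrade low-confidence answers to a declared inability. The Python sketch below assumes that the understanding step supplies a confidence score in [0, 1] and uses an arbitrary threshold of 0.7; both are illustrative assumptions. Note its limit: a confidently wrong understanding still passes, so this narrows, but does not eliminate, undetected misunderstanding.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Outcome(Enum):
    UNDERSTOOD = "an understanding is provided"
    DECLARED_INABILITY = "cannot understand, and says so"
    # Silent inability and unflagged misunderstanding are deliberately
    # not representable: every result must carry one of the labels above.


@dataclass(frozen=True)
class Result:
    outcome: Outcome
    understanding: Optional[str]
    confidence: float


def report(understanding: Optional[str], confidence: float,
           threshold: float = 0.7) -> Result:
    """Return an understanding only if its confidence reaches the threshold."""
    if understanding is None or confidence < threshold:
        return Result(Outcome.DECLARED_INABILITY, None, confidence)
    return Result(Outcome.UNDERSTOOD, understanding, confidence)


confident = report("agent B is angry", 0.9)
doubtful = report("agent B is angry", 0.4)
```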

Before developing and implementing misunderstanding avoidance algorithms, it is useful to study the causes of misunderstanding systematically. This is the aim of this article.

As depicted in Figure 6, there are two main groups of reasons why an understanding system may not function properly: inability to understand, and filters causing misunderstanding.

In this article, we further distinguish two sources of filters, namely internal (self-imposed) filters and externally imposed filters, for context, biases, and fallacies. However, externally imposed filters are not elaborated extensively. Furthermore, in this article, our basic machine understanding paradigm has been extended with three other machine understanding paradigms.

[Figure 6 content: Inability to understand, due to the meta-model, perception, or interpretation. Filters causing misunderstanding, either internal or external, due to context, biases, or fallacies.]

Figure 6: Inabilities and filters that can induce misunderstanding.
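The two groups in Figure 6 can also serve as a machine-readable vocabulary, for example for the self-documentation discussed in Section 4.3. The Python sketch below is one possible encoding, assumed for illustration: a diagnosed cause is either an inability (meta-model, perception, interpretation) or a filter (context, biases, fallacies), and only filters carry an internal or external origin. The class names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Inability(Enum):
    META_MODEL = "meta-model"
    PERCEPTION = "perception"
    INTERPRETATION = "interpretation"


class FilterKind(Enum):
    CONTEXT = "context"
    BIASES = "biases"
    FALLACIES = "fallacies"


class Origin(Enum):
    INTERNAL = "internal (self-imposed)"
    EXTERNAL = "externally imposed"


@dataclass(frozen=True)
class Cause:
    """One diagnosed source of misunderstanding, following Figure 6."""
    inability: Optional[Inability] = None
    filter_kind: Optional[FilterKind] = None
    origin: Optional[Origin] = None

    def __post_init__(self) -> None:
        # Exactly one of the two main groups must be given.
        if (self.inability is None) == (self.filter_kind is None):
            raise ValueError("specify either an inability or a filter")
        # Filters need an origin; inabilities must not have one.
        if (self.filter_kind is None) != (self.origin is None):
            raise ValueError("an origin is required for filters and only for filters")

    @property
    def group(self) -> str:
        if self.inability is not None:
            return "inability to understand"
        return "filter causing misunderstanding"


causes = [
    Cause(inability=Inability.PERCEPTION),
    Cause(filter_kind=FilterKind.BIASES, origin=Origin.INTERNAL),
]
```

The validation in `__post_init__` rejects combinations that Figure 6 does not allow, such as an inability that is labelled as externally imposed.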

4.1 Inabilities to Understand Properly

Inabilities to understand properly may stem from the meta-model, the perception, or the interpretation of the perception with respect to the meta-model.

4.1.1 Misunderstanding Due to Meta-model

In the following, misunderstandings due to the meta-model are elaborated for the basic understanding paradigm, rich understanding, exploratory understanding, and theory-based understanding, as well as with respect to the memories used in emotional intelligence. Misunderstanding due to the meta-model is knowledge-deficient misunderstanding.

4.1.1.1 In Basic Understanding Paradigm

Misunderstanding due to the meta-model may be of one of four types:

not having the necessary knowledge (uninformed system),

not having the necessary knowledge at the proper resolution (superficially informed system) [superficial understanding],

use of an erroneous, incomplete, inconsistent, irrelevant, or corrupt meta-model (ill-informed/misinformed system) [ill-informed understanding] [misinformed understanding],

deliberately applying a wrong meta-model (dogmatic point of view). [This type of dogmatic understanding can be called meta-model-induced dogmatic understanding.]

[Figure 5 labels: B: agent and its emotion to be understood; emotion understanding system A; Analyzers for emotions and for agents; D: perception of B (by A) with respect to the status of C; Memory modulator; Evaluator (interprets the relationship between C & D); C: meta-model of Bs (meta-knowledge about Bs), comprising an episodic memory and a semantic memory with a semantic graph (S. graph); Target action; U: an understanding of agent B and its emotional state by A with respect to C.]
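The first three meta-model deficiencies can, in principle, be checked automatically. The Python sketch below assumes a meta-model stored as simple (subject, attribute, value, resolution) facts, where a higher resolution means finer detail; it reports an uninformed system when nothing is known about the subject, a superficially informed one when the finest available resolution is below what the task requires, and an ill-informed one when facts contradict each other. The fourth type, deliberately applying a wrong meta-model, is a choice rather than a property of the stored knowledge, so it is not detected here. `Fact` and `diagnose` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    subject: str
    attribute: str
    value: str
    resolution: int  # assumption: higher means finer detail


def diagnose(meta_model: list[Fact], subject: str,
             required_resolution: int) -> list[str]:
    """List meta-model deficiencies for understanding `subject`."""
    facts = [f for f in meta_model if f.subject == subject]
    if not facts:
        return ["uninformed system"]
    problems = []
    if max(f.resolution for f in facts) < required_resolution:
        problems.append("superficially informed system")
    first_seen: dict[str, str] = {}
    for f in facts:
        # setdefault returns the first value recorded for this attribute.
        if first_seen.setdefault(f.attribute, f.value) != f.value:
            problems.append(f"ill-informed system: inconsistent '{f.attribute}'")
    return problems


meta_model = [
    Fact("anger", "valence", "negative", 1),
    Fact("anger", "valence", "positive", 1),
    Fact("joy", "valence", "positive", 3),
]
```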

4.1.1.2 In Rich Understanding Paradigm

Misunderstanding may be due to:

limited knowledge base (lack of additional meta-model(s)) [knowledge-deficient misunderstanding],

inability to switch to other meta-model(s).

4.1.1.3 In Exploratory Understanding Paradigm

Misunderstanding may be due to:

nonexistence of a pertinent meta-model,

inability to find a pertinent meta-model,

inability to formulate the needed hypotheses about meta-models and to test them,

inability to adopt a different perspective [misunderstanding due to rigid perspective].

4.1.1.4 In Theory-based Understanding Paradigm

Lack of understanding may be due to:

lack of an appropriate theory or paradigm,

non-acceptance of an appropriate theory or paradigm [theory-induced lack of understanding].

4.1.2 Misunderstanding Due to Perception

What cannot be perceived normally cannot be understood. The exception is theory-based understanding, where theoretical knowledge precedes experimental validation. Misunderstanding due to perception is perception-deficient misunderstanding.

4.1.2.1 In Basic Understanding Paradigm

Some sources of misunderstanding due to perception (lack of perception, misperception) are:

lack of appropriate ability to perceive [inability to perceive],

inability to discriminate [perceptual confusion],

focus on an irrelevant aspect (domain, nature, scope, granularity, modality) [irrelevant perception],

inability to discern goal(s) behind action(s) [superficial perception],

hallucination (perception in the absence of a stimulus).

"The halo effect is a type of cognitive bias in which our overall impression of a person influences how we feel and think about his or her character" (Cherry). Hence, the halo effect may cause inappropriate and false perception and, therefore, misunderstanding.

The perception component of an understanding system should be able to detect deception [deception-induced misunderstanding].

4.1.2.2 In Rich Understanding Paradigm

Misunderstanding may be due to:

inability to perceive reality from different perspectives [tunnel-vision understanding: only one way to perceive and interpret is used, and it may not be the appropriate one].

Hence, the following types of misunderstanding can be distinguished: meta-model-focused dogmatic understanding, perception-focused dogmatic understanding, and interpretation-focused dogmatic understanding.

4.1.2.3 In Exploratory Understanding Paradigm

Misunderstanding may be due to misperception [misperception-induced misunderstanding].

4.1.2.4 In Theory-based Understanding Paradigm

Misunderstanding may be due to instrumentation error. An example is the claim, announced in 2011 by physicists of the OPERA experiment, which timed neutrinos sent from CERN to the Gran Sasso laboratory in Italy, that "particles can travel faster than the speed of light"; in early 2012, a faulty fiber-optic cable connection was identified that invalidated the claim [instrumentation-induced misunderstanding].

4.1.3 Misunderstanding Due to Misinterpretation

Inappropriate pairing of meta-model(s) and perception(s) may lead to misunderstanding. Misinterpretations may be unintentional or deliberate. Misunderstanding due to interpretation is interpretation-deficient misunderstanding.

4.1.3.1 In Basic Understanding Paradigm

Misinterpretation is a source of misunderstanding and may be due to:

lack of pertinent knowledge processing ability in interpretation,

misinterpretation of motivation [misunderstanding due to misinterpretation of motivation],

illusion, which is a misinterpretation of a true sensation, and

schizophrenic understanding, which, as an aberration, leads to misinterpretations of reality.

4.1.3.2 In Rich Understanding Paradigm

Misunderstanding may be due to:

lack of appropriate meta-model(s) [knowledge-deficient misunderstanding],

inability to access an appropriate meta-model,

inability to use and pair the relevant perception and the relevant meta-model (misinterpretation).

4.1.3.3 In Exploratory Understanding Paradigm

Misunderstanding may stem from the following facts:

there is not yet an appropriate meta-model as a basis for evaluating the perception,

the granularities of the perception and the meta-model may not match.

4.1.3.4 In Theory-based Understanding Paradigm

Lack of interpretation or misinterpretation may be due to the following facts:

the theory is wrong, or

the technology is not yet mature enough to observe with the needed precision.

4.1.4 Misunderstanding in Emotional Intelligence

Emotions may have contradictory manifestations. For example, an athlete crying after winning a match may be extremely joyful; the crying may be due to his emotional state and accumulated distress rather than to sadness.

The contents and/or misinterpretations of the two types of memory involved in emotional intelligence can also be a source of misunderstanding. For example, strong past psychological experiences, as coded in the episodic memory, may cause unbalanced behaviour.

The other causes of misunderstanding in emotional intelligence are those covered in Section 4.1 (inabilities to understand properly) and discussed in Section 4.2 (filters affecting misunderstanding).

4.2 Filters Affecting Misunderstanding

Three types of filters, namely context, biases, and fallacies, may affect understanding and cause misunderstanding. Filters can be internal or externally imposed.

4.2.1 Context in Misunderstanding

Perception and/or interpretation in an improper context can be a source of misunderstanding [context-induced misunderstanding]. Hence, one can identify context-sensitive understanding, context-insensitive understanding, and double standards in understanding. Context-dependent understanding requires the context to be specified, and context-aware understanding is desirable. The corresponding types of misunderstanding are context-unaware misunderstanding and context-dependent misunderstanding.
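The difference between context-insensitive and context-aware understanding can be sketched with the crying athlete from Section 4.1.4. The interpretation table is invented for illustration; the hypothetical `interpret` function uses a context-specific entry when one exists, declares that a given but unknown context is not covered instead of guessing, and falls back to a context-insensitive default only when no context is supplied.

```python
from typing import Optional

# Hypothetical context-dependent interpretations: (perception, context) -> meaning.
INTERPRETATIONS = {
    ("crying", "after winning a match"): "joy and release of tension",
    ("crying", "at a funeral"): "grief",
}
# Context-insensitive defaults, used only when no context is given.
DEFAULTS = {"crying": "sadness"}


def interpret(perception: str, context: Optional[str] = None) -> str:
    """Interpret a perception, using the context when one is supplied."""
    if context is None:
        return DEFAULTS.get(perception, "unknown")  # context-unaware
    meaning = INTERPRETATIONS.get((perception, context))
    if meaning is None:
        # A context was given but is not covered: say so instead of guessing.
        return f"no interpretation of '{perception}' in context '{context}'"
    return meaning
```

Called without a context, the sketch reproduces the context-unaware misunderstanding of the athlete's tears as sadness; with the context supplied, the same perception is interpreted as joy.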

4.2.2 Biases

Several types of biases, such as group biases, cultural biases, cognitive biases, emotive biases, and personality biases, as well as the effects of dysrationalia and irrationality, affect the quality of understanding. Biases may lead to biased understanding, which may be erroneous, incomplete, inconsistent, or irrelevant understanding.

4.2.2.1 Group Bias in Misunderstanding

The group can be defined by family, company, institution, region, nation, interest, affinity, and/or religion. A group member may have tunnel vision, which might affect the understanding process [tunnel-vision dogmatic understanding]. Sometimes members may be instructed, or even indoctrinated, in a certain way of understanding. In extreme cases, understanding can be blocked altogether [blocked understanding].

4.2.2.2 Cultural Bias in Misunderstanding

Values and symbols differ across cultures; hence the same entity may be interpreted differently depending on the cultural background, leading to culture-induced misunderstanding.

4.2.2.3 Cognitive Bias in Misunderstanding

Cognitive bias is a "common tendency to acquire and process information by filtering it through one's own likes, dislikes, and experiences" [cognitive bias-induced misunderstanding].

The Dunning-Kruger effect concerns "those with limited knowledge in a domain: (1) they reach mistaken conclusions and make errors, but (2) their incompetence robs them of the ability to realize it." Moreover, "high cognitive complexity individuals differ from low cognitive complexity individuals not only in knowledge processing abilities in general but in understanding, in particular."

4.2.2.4 Emotive Bias in Misunderstanding

Certain types of emotions affect reasoning abilities and cause misunderstanding [emotive bias-induced misunderstanding, emotion-induced misunderstanding]. For example, anger negatively affects reasoning and hence understanding ability. The effect of anger on understanding leads to anger-induced misunderstanding. Joy may lead to euphoria, which in turn may affect understanding [joy-induced misunderstanding].

4.2.2.5 Personality Bias in Misunderstanding

Some personality types are prone to anger; hence their understanding ability can easily be affected, leading to misunderstanding [personality-induced misunderstanding].

4.2.2.6 Effects of Dysrationalia in Misunderstanding

Dysrationalia is the inability to think and behave rationally despite adequate intelligence (Stanovich, 1993). It affects the ability to understand properly [dysrationalia-induced misunderstanding].

4.2.2.7 Effects of Irrationality in Misunderstanding

Irrationality may have two types of effects in misunderstanding (Ariely, 2008) [irrationality-induced misunderstanding]:

lack of ability to understand properly, and

the ability to distort the understanding of others, causing distorted understanding.

4.2.3 Fallacies in Misunderstanding

A fallacy is a misconception resulting from incorrect reasoning. A logical fallacy is an element of an argument that is flawed, essentially rendering invalid the line of reasoning, if not the entire argument. Fallacies used in information distortion, as well as deliberate misperception and misinterpretation, are sources of misunderstanding [fallacy-based misunderstanding]. They may take the form of deliberately using an unfit meta-model in understanding. Two categories of fallacies are paralogism and sophism.

4.2.3.1 Paralogism in Misunderstanding

Paralogism is the unintentional use of an invalid argument in reasoning. It causes misunderstanding due to misperception, misinterpretation, and/or misjustification of background knowledge (meta-model) [paralogism-based misunderstanding].

4.2.3.2 Sophism in Misunderstanding

Sophism is the deliberate use of an invalid argument, displaying ingenuity in reasoning, in the hope of deceiving someone. Some recent lie-detection techniques in text analysis can also be used to detect attempts to mislead understanding.

Misunderstandings due to fallacies can be deliberate misunderstanding (giving the illusion of not understanding) or induced misunderstanding. Data and evidence may be tampered with or doctored by the entity that attempts to understand and/or by an outside agent. Individuals (or their representatives, such as software agents) need to notice when their understanding is being tampered with [doctored- or tampered-evidence-based misunderstanding]. Hence, recognizing why a reality is presented in a certain way helps to avoid being trapped in misunderstanding.
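For the narrow case of evidence altered after it was acquired, a standard integrity technique (not one proposed in this article) is to record a cryptographic digest when the evidence is first obtained and to re-check it before use. The Python sketch below uses SHA-256 from the standard `hashlib` module and `hmac.compare_digest` for the comparison; the function names are hypothetical. It cannot detect evidence that was already doctored before the digest was recorded.

```python
import hashlib
import hmac


def fingerprint(evidence: bytes) -> str:
    """SHA-256 digest to be recorded when the evidence is first acquired."""
    return hashlib.sha256(evidence).hexdigest()


def is_untampered(evidence: bytes, recorded_digest: str) -> bool:
    """True if the evidence is byte-for-byte identical to what was recorded."""
    # compare_digest avoids leaking timing information during the comparison.
    return hmac.compare_digest(fingerprint(evidence), recorded_digest)


original = b"agent X arrived at 10:02"
digest = fingerprint(original)
intact = is_untampered(original, digest)
altered = is_untampered(b"agent X arrived at 09:02", digest)
```

A software agent acting on someone's behalf could refuse to build an understanding on evidence whose digest no longer matches, and report the mismatch instead.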

One type of misunderstanding is mutual misunderstanding. Avoiding mutual misunderstanding is very important for finding reconciliatory solutions at different levels of relationships.

4.3 Documentation of Understanding

It would be very desirable for an understanding system to be able to document its abilities and limitations. In this way, a user (a human or another agent) can have informed trust in the results of an understanding system. Based on the systematization used in this article, this type of documentation may include the following:

Meta-model(s) available and used

Perception(s)

Interpretation(s)

Contents of episodic and semantic memories

Types and contents of filters used.
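A self-attesting understanding system could emit a record with one field per item in the list above. The Python dataclass below is a hypothetical schema, not a format defined by the authors; serializing it to JSON lets a human or another agent inspect which meta-models, perceptions, interpretations, memory contents, and filters were involved before deciding how far to trust the result. The meta-model and filter names in the example are invented.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class UnderstandingReport:
    """Hypothetical self-documentation record of one understanding process."""
    meta_models_available: list[str]
    meta_models_used: list[str]
    perceptions: list[str]
    interpretations: list[str]
    episodic_memory: list[str] = field(default_factory=list)
    semantic_memory: dict[str, list[str]] = field(default_factory=dict)
    filters: dict[str, list[str]] = field(default_factory=dict)  # type -> contents

    def unused_meta_models(self) -> list[str]:
        """Meta-models that were available but not consulted."""
        return [m for m in self.meta_models_available if m not in self.meta_models_used]

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


report = UnderstandingReport(
    meta_models_available=["appraisal model", "basic emotions model"],
    meta_models_used=["appraisal model"],
    perceptions=["facial expression: frown"],
    interpretations=["anger caused by unfair treatment"],
    filters={"context": ["workplace meeting"]},
)
```

Listing unused meta-models alongside the filters applied makes visible, for example, a possible rich-understanding deficiency: an alternative meta-model was available but never consulted.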

A challenging situation in understanding arises when an understanding system has no knowledge (or meta-model) of the entities it is asked or required to understand. In this case, the system would need to search for and acquire appropriate background knowledge and meta-model(s) and/or be able to formulate and test hypotheses to build a meta-model.
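The two options in the last sentence can be sketched as a lookup followed, on failure, by a simple eliminative hypothesis test: keep only the candidate hypotheses that agree with every observation. This is a minimal sketch under strong assumptions; representing a meta-model as a one-argument function, the names `find_metamodel` and `formulate_metamodel`, the tolerance, and the sample data are hypothetical and do not describe the authors' method.

```python
from typing import Callable, Optional

# Hypothetical representation: a meta-model maps an observed input to a
# predicted output.
Hypothesis = Callable[[float], float]


def find_metamodel(entity: str,
                   library: dict[str, Hypothesis]) -> Optional[Hypothesis]:
    """Option 1: search available background knowledge for a meta-model."""
    return library.get(entity)


def formulate_metamodel(observations: list[tuple[float, float]],
                        candidates: dict[str, Hypothesis],
                        tolerance: float = 1e-6) -> list[str]:
    """Option 2: keep the candidate hypotheses consistent with all observations."""
    return [name for name, h in candidates.items()
            if all(abs(h(x) - y) <= tolerance for x, y in observations)]


# Illustrative data only.
library = {"thermostat": lambda x: x}
obs = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
candidates = {
    "identity": lambda x: x,
    "double": lambda x: 2 * x,
    "square": lambda x: x * x,
}

if find_metamodel("unknown device", library) is None:
    print(formulate_metamodel(obs, candidates))  # ['double']
```

Elimination keeps every surviving hypothesis rather than picking one, so an understanding system could document the remaining ambiguity as one of its limits of understanding.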

5 CONCLUSIONS

This article is a sequel to our joint work on multi-understanding, applied especially to understanding human behavior and to failure avoidance in simulation studies. On understanding, we have expanded our basic multi-understanding paradigm, and we continue to systematize our exploration of sources of misunderstanding.

We plan to implement the avoidance of some cases of misunderstanding in agent simulation of human behavior, especially in emotional intelligence simulation.

Another line of research we plan to continue is to realize context-aware agents for advanced simulation studies. Context-aware agents may also be useful in other applications.

In both cases, we will attempt to develop software agents capable of attesting to their limits of understanding by generating properly detailed documentation of those limits.

For human misunderstanding, the books by Heyman (2012) and Young (1999) may be useful. In addition, the book by Herman and Chomsky (1988) would be useful regarding external distortions of understanding [distortion-induced misunderstanding].

REFERENCES

Ariely, D., 2008. Predictably irrational: The hidden forces that shape our decisions. Harper Collins, 2nd edition in 2012.

Cherry, K. What is the halo effect? About.com. http://psychology.about.com/od/socialpsychology/f/halo-effect.htm

Dias, J., Paiva, A., 2009. Agents with emotional intelligence for storytelling. 8th International Conference on Autonomous Agents and Multi-agent Systems, Doctoral Mentoring Program, Budapest, Hungary.

Herman, E., Chomsky, N., 1988. Manufacturing consent – A propaganda model, Pantheon Books, New York.

Heyman, D., 2012. The Little Book of Big Misunderstandings, AllPolitics Communications Inc. at Smashwords.

Kazemifard, M., Ghasem-Aghaee, N., Ören, T. I., 2009. Agents with ability to understand emotions. In: Summer Computer Simulation Conference, 2009, Istanbul, Turkey. SCS, San Diego, CA, 254-260.

Kazemifard, M., Ghasem-Aghaee, N., Ören, T. I., 2012. Emotive and cognitive simulations by agents: Roles of three levels of information processing. Cognitive Systems Research, 13, 24-38.

Kazemifard, M., Ghasem-Aghaee, N., Koenig, B. L., Ören, T. I., 2013. An emotion understanding framework for intelligent agents based on episodic and semantic memories. Journal of Autonomous Agents and Multi-Agent Systems. DOI: 10.1007/s10458-012-9214-9.

Mayer, J. D., Salovey, P., 1997. What is emotional intelligence? In: Salovey, P., Sluyter, D. (eds.) Emotional Development and Emotional Intelligence: Educational Implications. New York: Basic Books.

Ören, T. I., 1992. Towards program understanding systems. (Invited Plenary Paper). In: Proceedings of the First Turkish Symposium on Artificial Intelligence and Neural Networks, Oflazer, K., Akman, V., Guvenir, H. A., Halici, U., (eds.), 25-26 June, 1992, Bilkent University, Ankara, Turkey, pp. 3-12.

Ören, T. I., 1995. (Invited contribution). Artificial intelligence and simulation: A typology. In: Proceedings of the 3rd Conference on Computer Simulation, Raczynski, S., (ed.), Mexico City, Mexico, Nov. 15-17, pp. 1-5.

Ören, T. I., 2000. (Invited Opening Paper). Understanding: A taxonomy and performance factors. In: Thiel, D., (ed.) Proc. of FOODSIM'2000, June 26-27, 2000, Nantes, France. SCS, San Diego, CA, pp. 3-10.

Ören, T. I., 2012. Evolution of the discontinuity concept in modeling and simulation: From original idea to model switching, switchable understanding, and beyond. Special Issue of Simulation: The Transactions of SCS. Vol. 88, issue 9, Sept. 2012, pp. 1072-1079. DOI: 10.1177/0037549712458013.

Ören, T. I., Abou-Rabia, O., King, D. G., Birta, L. G., Wendt, R. N., 1990. (Plenary Paper). Reverse engineering in simulation program understanding. In: Problem Solving by Simulation, Jávor, A., (ed.) Proceedings of IMACS European Simulation Meeting, Esztergom, Hungary, August 28-30, 1990, pp. 35-41.

Ören, T. I., Ghasem-Aghaee, N., Yilmaz, L., 2007. An ontology-based dictionary of understanding as a basis for software agents with understanding abilities. In: Proceedings of the Agent-Directed Simulation Symposium of the Spring Simulation Multiconference (SMC'07), pp. 19-27. Norfolk, VA, March 2007.

Ören, T. I., Yilmaz, L., 2009. Failure avoidance in Agent-Directed Simulation: Beyond conventional V&V and QA. In: Yilmaz, L., Ören, T. I. (eds.) Agent-Directed Simulation and Systems Engineering. Systems Engineering Series, Wiley-Berlin, Germany, pp. 189-217.

Ören, T. I., Yilmaz, L., 2011. Semantic agents with understanding abilities and factors affecting misunderstanding. In: Elci, A., Traore, M. T., Orgun, M. A. (eds.) Semantic Agent Systems: Foundations and Applications, Springer-Verlag. pp. 295-313.


Stanovich, K. E., 1993. Dysrationalia: A new specific learning disability. Journal of Learning Disabilities, 26(8), 501–515.

Yilmaz, L., Ören, T. I., 2009. Agent-directed simulation. Chapter 4 in Yilmaz, L., Ören, T. I. (eds.) Agent-directed Simulation and Systems Engineering, pp. 111-144. Wiley Series in Systems Engineering and Management. Wiley.

Young, R. L., 1999. Understanding Misunderstandings: A Guide to More Successful Human Interaction. University of Texas Press, Austin, TX.

Zeigler, B. P., 1986. Systems knowledge: A definition and its implications. In: Modelling and Simulation Methodology in the Artificial Intelligence Era, Elzas, M. S., Ören, T. I., Zeigler, B. P. (eds.), North-Holland, Amsterdam, pp. 15-17.
