Efficiency of SSVEF Recognition from the Magnetoencephalogram

A Comparison of Spectral Feature Classification and CCA-based Prediction

Christoph Reichert [1,2], Matthias Kennel [3], Rudolf Kruse [2], Hermann Hinrichs [1,4,5] and Jochem W. Rieger [1,6]

[1] Department of Neurology, University Medical Center A.ö.R., D-39120 Magdeburg, Germany

[2] Department of Knowledge and Language Processing, Otto-von-Guericke University, D-39106 Magdeburg, Germany

[3] Fraunhofer Institute for Factory Operation and Automation IFF, D-39106 Magdeburg, Germany

[4] Leibniz Institute for Neurobiology, D-39118 Magdeburg, Germany

[5] German Center for Neurodegenerative Diseases (DZNE), D-39120 Magdeburg, Germany

[6] Department of Applied Neurocognitive Psychology, Carl-von-Ossietzky University, D-26111 Oldenburg, Germany

Keywords:

BCI, SSVEP, CCA, MEG, Virtual Reality Objects.

Abstract:

Steady-state visual evoked potentials (SSVEP) are a popular method to control brain–computer interfaces

(BCI). Here, we present a BCI for selection of virtual reality (VR) objects by decoding the steady-state vi-

sual evoked fields (SSVEF), the magnetic analogue to the SSVEP in the magnetoencephalogram (MEG). In a

conventional approach, we performed online prediction by Fourier transform (FT) in combination with a mul-

tivariate classifier. As a comparative study, we report our approach to increase the BCI-system performance in

an offline evaluation. Therefore, we transferred the canonical correlation analysis (CCA), originally employed

to recognize relatively low dimensional SSVEPs in the electroencephalogram (EEG), to SSVEF recognition

in higher dimensional MEG recordings. We directly compare the performance of both approaches and con-

clude that CCA can greatly improve system performance in our MEG-based BCI-system. Moreover, we find

that application of CCA to large multi-sensor MEG could provide an effective feature extraction method that

automatically determines the sensors that are informative for the recognition of SSVEFs.

1 INTRODUCTION

Brain–computer interfaces (BCI) are intended to assist patients who have suffered a severe loss of motor control. One of the most robust physiological signals used to control a BCI is the steady-state visual evoked potential (SSVEP) (Vialatte et al., 2010). This signal is a stimulus-driven neuronal oscillation that can be measured over the visual cortex and reflects the fundamental frequency of the flickering stimulus a person focuses on. In BCI applications, multiple stimuli with different frequencies are presented simultaneously, and the task is to decide from the SSVEP which flicker frequency the subject is focusing on.

Most studies measure SSVEPs noninvasively with

the electroencephalogram (EEG) (Bin et al., 2009;

Friman et al., 2007; Horki et al., 2011; Lin et al.,

2007; Müller-Putz et al., 2005; Volosyak, 2011). In this study we investigate the discrimination of steady-state visual evoked fields (SSVEF) from the magnetoencephalogram (MEG). Recently, it was shown that

classification of event related magnetic fields can pro-

vide higher accuracies than classification of simul-

taneously recorded event related potentials (Quandt

et al., 2012). To date, only a few studies investigated

SSVEFs (Müller et al., 1997; Thorpe et al., 2007) and

we are not aware of any study that tested the success

of SSVEFs to provide neuro-feedback in a BCI set-

ting. In our paradigm we present virtual reality ob-

jects with relatively small stimulation surfaces. The

scenario simulates a real world setting in which pa-

tients could select objects for grasping with a robotic

gripper (Reichert et al., 2013).

Our aim was to compare the performance of the Fourier feature based classification of SSVEFs, which is standard in EEG, to a canonical correlation based approach and to improve decoding of SSVEFs in MEG. We first performed online decoding with Fourier features without feature selection. In order to improve the decoding performance, we did an additional offline analysis with canonical correlation analysis (CCA). This method was successfully applied to EEG-based SSVEPs (Bin et al., 2009; Lin et al., 2007; Horki et al., 2011) and provided excellent decoding performance. The concept of CCA is to find an optimal channel weighting to detect the SSVEP. In the aforementioned EEG studies, at most nine channels were included in the weighting. In MEG, however, reliable weights must be found for a much higher number of channels. Thus, the relative performance of a BCI based on MEG-derived Fourier features or CCA is currently unclear.

Reichert C., Kennel M., Kruse R., Hinrichs H. and Rieger J. Efficiency of SSVEF Recognition from the Magnetoencephalogram - A Comparison of Spectral Feature Classification and CCA-based Prediction. DOI: 10.5220/0004645602330237. In Proceedings of the International Congress on Neurotechnology, Electronics and Informatics (BrainRehab-2013), pages 233-237. ISBN: 978-989-8565-80-8. Copyright © 2013 SCITEPRESS (Science and Technology Publications, Lda.)

In addition, we aimed to test potential performance gains that may be obtained by including harmonics of the stimulation frequency (Müller-Putz et al., 2005) or by varying the length of the analyzed signal interval. The latter is of particular importance for high-throughput BCIs and their usability.

2 METHODS

In total, 22 subjects participated in the experiment.

The MEG was recorded with a BTi system, equipped with 248 magnetometers, at a sampling rate of 678.17 Hz and processed in real time. The study was

approved by the ethics committee of the Medical Fac-

ulty of the Otto-von-Guericke University of Magde-

burg. All subjects gave written informed consent.

2.1 Stimulation and Task

A VR scenario consisting of four different objects

(mobile phone, banana, pear, cup) placed in a square

configuration on a table was projected on a screen 1 m in front of the subject. The edges of the square formed by the objects were 8.5° of visual angle long. A circular region of the table surface under each object was

used to provide flicker stimulation and feedback. On

each trial, objects were placed in random order but the stimulation frequencies were held fixed for each position (upper left: 6.67 Hz, lower right: 8.57 Hz, lower left: 10.0 Hz, upper right: 15.0 Hz). The subjects were instructed to direct their gaze to a predefined target object. Flicker duration was 5 s, followed by the appearance of a green circular shape around the decoded object.

The online decoding experiment consisted of training runs and test runs, each comprising 32 trials. In training runs random feedback was provided, whereas in test runs the trained learning algorithm was used to decode the object selected by the subject. Subjects performed six to nine runs. Three subjects completed only training runs and were excluded from the online results; of the remaining subjects, three completed four training runs and 16 completed two.

2.2 Online Processing

The online decoding of SSVEFs was performed con-

ventionally by extracting amplitude information via a

Fourier transform (FT) for each channel c and stim-

ulation frequency f. In order to reduce the number

of channels to process and to primarily capture vi-

sual potentials, we preselected 59 occipital sensors as-

sumed to assess activity from visual areas. We used

a 4.5 s data segment starting at stimulation onset to

determine the spectral feature

$$F(f, c) = \left\| \frac{1}{N} \sum_{n=1}^{N} x_{n,c} \cdot e^{-2\pi i f t_n} \right\| \qquad (1)$$

where $x_{n,c}$ is the magnetic flux at sample point $n$ in channel $c$ and $t_n$ denotes the time of the $n$-th sample.

A regularized logistic regression (rLR) classifier was

trained on the spectral brain patterns. The classifier

was trained using the trials from training runs and up-

dated after each test trial by adding the recently ac-

quired data. This was possible because the target ob-

jects were instructed by the experimenter.
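The spectral feature of equation 1 can be sketched in a few lines of NumPy. This is a minimal illustration and not the original online implementation: the function name `fourier_amplitude`, the samples-by-channels array layout and the synthetic two-channel test signal are assumptions made for the example. Only the sampling rate, the segment length and the stimulation frequencies are taken from the text.

```python
import numpy as np


def fourier_amplitude(x, fs, freqs):
    """Amplitude feature per stimulation frequency and channel (cf. equation 1).

    x     : array of shape (n_samples, n_channels), segment starting at stimulation onset
    fs    : sampling rate in Hz
    freqs : stimulation frequencies in Hz
    Returns an array of shape (n_freqs, n_channels).
    """
    x = np.asarray(x, dtype=float)
    n_samples = x.shape[0]
    t = np.arange(n_samples) / fs                     # time t_n of each sample
    freqs = np.asarray(freqs, dtype=float)
    # Complex exponentials e^{-2 pi i f t_n}, shape (n_freqs, n_samples)
    basis = np.exp(-2j * np.pi * freqs[:, None] * t[None, :])
    # Magnitude of the averaged projection onto each complex exponential
    return np.abs(basis @ x) / n_samples


# Synthetic data (assumption): channel 0 carries a 10 Hz sinusoid, channel 1 only noise.
fs = 678.17
freqs = [6.67, 8.57, 10.0, 15.0]
t = np.arange(int(4.5 * fs)) / fs
rng = np.random.default_rng(0)
x = np.column_stack([
    2.0 * np.sin(2 * np.pi * 10.0 * t),
    0.1 * rng.standard_normal(t.size),
])
F = fourier_amplitude(x, fs, freqs)   # one row per frequency, one column per channel
```

In this sketch the feature of channel 0 peaks at the 10 Hz row. In the setting described above, the values for all preselected channels and stimulation frequencies would form the feature vector passed to the classifier.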

2.3 Offline Analysis

We did offline classification via rLR in a leave-one-

run-out cross validation (CV) as well as in simulated

online validation (SOV). While CV involves all avail-

able runs except the current test run, SOV involves

only preceding trials in the classifier training. Thus,

SOV mimics the process in a real BCI experiment.

Classification based on CCA was carried out indepen-

dent of training data. Therefore, here the determina-

tion of overall decoding accuracies is unaffected by

the validation scheme.
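The difference between the two validation schemes can be made concrete by listing which trials enter the training set for each test trial. The following sketch assumes that trials are stored in chronological order and that each trial carries a run index. The function names and the toy run size of four trials are hypothetical and chosen only for illustration.

```python
def leave_one_run_out(run_ids):
    """Yield (train_idx, test_idx) pairs, holding out each run once (CV)."""
    for held_out in sorted(set(run_ids)):
        train = [i for i, r in enumerate(run_ids) if r != held_out]
        test = [i for i, r in enumerate(run_ids) if r == held_out]
        yield train, test


def simulated_online(run_ids, n_training_runs=2):
    """Yield (train_idx, test_idx) pairs for SOV.

    Every trial after the initial training runs is tested once, and the
    classifier may only use the trials that precede it in time.
    """
    training_runs = set(sorted(set(run_ids))[:n_training_runs])
    for i, r in enumerate(run_ids):
        if r not in training_runs:
            yield list(range(i)), [i]


# Toy example (assumption): three runs with four trials each, in chronological order.
run_ids = [0] * 4 + [1] * 4 + [2] * 4
cv = list(leave_one_run_out(run_ids))
sov = list(simulated_online(run_ids))
```

In the toy example CV produces three folds, each trained on the two other runs, whereas SOV produces one split per trial of the third run, with a training set that grows by one trial at a time.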

2.3.1 Feature Extraction

The decoding method described in section 2.2 (FT/rLR) was applied both online and offline. In addition, we employed the CCA, which finds the weights $W_{x,f}$ and $W_{y,f}$ that maximize the correlation $\rho_{CCA}(f)$ between the optimal linear combination $x_f = X^T W_{x,f}$ of the brain signals $X$ and the linear combination $y_f = Y_f^T W_{y,f}$ of a reference signal $Y_f$. For each frequency $f$ the signal $Y_f$ is modeled as

$$Y_f = \begin{pmatrix} \sin 2\pi f t \\ \cos 2\pi f t \end{pmatrix} \qquad (2)$$

where $t$ denotes time and $Y_f$ can be extended by appending the sine and cosine of multiples of $f$ to include harmonics.
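A reference signal of the form given in equation 2, optionally extended by harmonics, could be constructed as follows. The function name `reference_signals` and the convention that `n_harmonics` counts multiples beyond the fundamental (2f, 3f, ...) are assumptions of this sketch; the text does not specify how harmonics were counted.

```python
import numpy as np


def reference_signals(f, t, n_harmonics=0):
    """Sine/cosine reference for frequency f (cf. equation 2).

    Returns an array of shape (2 * (n_harmonics + 1), len(t)) whose rows are
    sin and cos of f, followed by sin and cos of 2f, 3f, ... (assumed convention).
    """
    t = np.asarray(t, dtype=float)
    rows = []
    for h in range(1, n_harmonics + 2):          # h = 1 is the fundamental
        rows.append(np.sin(2 * np.pi * h * f * t))
        rows.append(np.cos(2 * np.pi * h * f * t))
    return np.vstack(rows)


# Example: 10 Hz reference with two additional harmonics (20 Hz and 30 Hz).
t = np.arange(100) / 678.17
Y = reference_signals(10.0, t, n_harmonics=2)
```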


2.3.2 Prediction of Selections

The multivariate rLR classifier was applied to the FT features. In CCA, each stimulation frequency provides one reference signal, and the reference signal that yields the maximum canonical correlation $\rho_{CCA}(f)$ with the brain activity indicates the class. As a training-independent classifier, we determined the frequency that revealed the maximum correlation (MC) between $x_f$ and $y_f$:

$$f_{max} = \arg\max_f \left( \rho_{CCA}(f) \right) \qquad (3)$$

for each trial separately. We compared the feature/classifier combinations FT/rLR and CCA/MC by investigating the impact of the analysis window width and the inclusion of harmonics. The same preselected sensors were used as described in section 2.2.
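The CCA/MC decision rule of equation 3 can be sketched as below. The largest canonical correlation is computed here with a QR decomposition followed by a singular value decomposition, which is one standard numerical route and not necessarily the one used in the original analysis. The function names, the channels-by-samples layout, the default of two harmonics beyond the fundamental and the synthetic eight-channel trial are all assumptions of this example. The sketch also assumes that the centered data matrix has full column rank, which holds for noisy recordings with more samples than channels.

```python
import numpy as np


def max_canonical_correlation(X, Y):
    """Largest canonical correlation between the rows of X and the rows of Y.

    X : (n_channels, n_samples) brain signals
    Y : (n_references, n_samples) reference signals
    """
    Xc = (X - X.mean(axis=1, keepdims=True)).T    # samples x channels, centered
    Yc = (Y - Y.mean(axis=1, keepdims=True)).T    # samples x references, centered
    Qx, _ = np.linalg.qr(Xc)                      # orthonormal basis of the channel space
    Qy, _ = np.linalg.qr(Yc)                      # orthonormal basis of the reference space
    s = np.linalg.svd(Qx.T @ Qy, compute_uv=False)
    return float(min(s[0], 1.0))                  # guard against rounding above 1


def classify_trial(X, fs, freqs, n_harmonics=2):
    """Return the stimulation frequency with maximum canonical correlation (MC)."""
    t = np.arange(X.shape[1]) / fs
    rhos = []
    for f in freqs:
        k = np.arange(1, n_harmonics + 2)[:, None] * f   # fundamental and harmonics
        Y = np.vstack([np.sin(2 * np.pi * k * t), np.cos(2 * np.pi * k * t)])
        rhos.append(max_canonical_correlation(X, Y))
    return freqs[int(np.argmax(rhos))], rhos


# Synthetic trial (assumption): an 8.57 Hz source mixed into 8 noisy channels, 1.5 s long.
rng = np.random.default_rng(1)
fs = 678.17
freqs = [6.67, 8.57, 10.0, 15.0]
t = np.arange(int(1.5 * fs)) / fs
source = np.sin(2 * np.pi * 8.57 * t + 0.3)
mixing = rng.standard_normal((8, 1))
X = mixing * source + 0.5 * rng.standard_normal((8, t.size))
f_max, rhos = classify_trial(X, fs, freqs)
```

No training data enter this procedure: the channel weights are estimated anew for each trial, which is why the decoding accuracy of CCA/MC does not depend on the validation scheme.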

3 RESULTS

Subjects correctly selected the target object in 74.4%

of trials on average (25% chance performance), when

performing the experiment online.

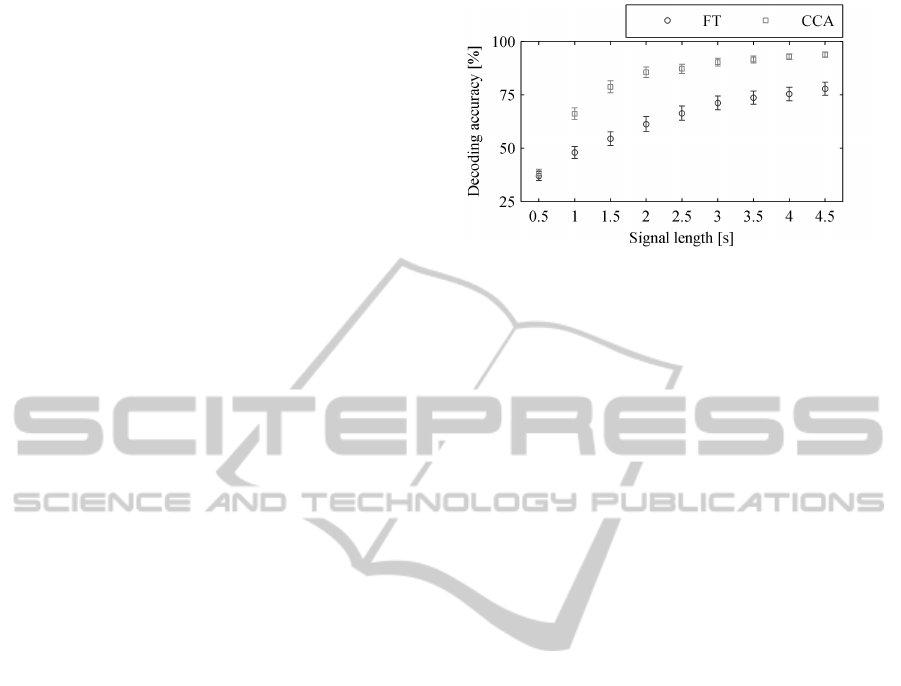

Figure 1 shows the comparison of correct dis-

crimination rates obtained with offline FT/rLR and

CCA/MC with different window widths. Averages

and standard errors were calculated over subjects.

Here, FT features were derived without harmonics

and CCA features were obtained involving two har-

monics. Obviously, CCA/MC considerably enhances

the decoding accuracies. For example, with 4.5s win-

dow width CCA/MC achieved on average 93.8% cor-

rect classifications compared to 77.9% using FT fea-

tures. The highest accuracies were always found at

the maximum window width. However, for CCA a

steeper and faster increase of correct decoding rates

with increasing window width is obvious. This sug-

gests that CCA features might provide good perfor-

mance with shorter stimulation intervals than FT fea-

tures. This is also indicated by the observation that

the optimal information transfer rate (Wolpaw et al.,

2000) is obtained with 3.0 s window widths (corre-

sponding to 13.6bit/min) for FT/rLR but with 1.5 s

window widths (corresponding to 36.7bit/min) for

CCA/MC. When we tested the influence of harmonics

with time windows 4.5 s wide, we found that accuracy

obtained with FT-features considerably increased to

81.2% (p < 0.015, paired Wilcoxon sign-rank test)

when two harmonics were added. However, accuracy

obtained with CCA fell only slightly (to 92.3%) but

consistently (p < 0.001, paired Wilcoxon sign-rank

test) when the two harmonics were deleted and only

the fundamental frequency was used. This indicates

Figure 1: Dependence of decoding accuracy on signal inter-

val length (windows of 0.5–4.5 ms width) and feature space.

Average CV performance over subjects is shown. Squares

represent accuracies obtained with CCA/MC classification

and circles depict accuracies obtained with FT feature/rLR

classification. Error bars show the standard error of the

mean.

that the superiority of CCA over FT features does not

depend on a higher dimensional dependent variable

space spanned by the reference functions.
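For readers who wish to relate accuracy and window width to an information transfer rate, the commonly used definition from Wolpaw et al. (2000) can be sketched as follows. The function names are hypothetical. The time per selection is left as an explicit parameter because the text does not state which interval entered the reported rates, and returning zero for accuracies at or below chance is a convention assumed here.

```python
import math


def bits_per_selection(p, n_classes):
    """Information per selection in bits for accuracy p and n_classes targets."""
    if p <= 1.0 / n_classes:
        return 0.0                      # assumed convention: no information at or below chance
    if p >= 1.0:
        return math.log2(n_classes)     # avoids log2(0) for perfect accuracy
    return (math.log2(n_classes)
            + p * math.log2(p)
            + (1.0 - p) * math.log2((1.0 - p) / (n_classes - 1)))


def itr_bits_per_minute(p, n_classes, seconds_per_selection):
    """Information transfer rate in bit/min."""
    return bits_per_selection(p, n_classes) * 60.0 / seconds_per_selection


# Example call with four targets; the accuracy and duration are placeholders.
example_itr = itr_bits_per_minute(0.9, 4, 2.0)
```

Because the rate divides by the time per selection, a shorter window can yield a higher rate even when its accuracy is lower, which is the trade-off behind the different optimal window widths of the two methods.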

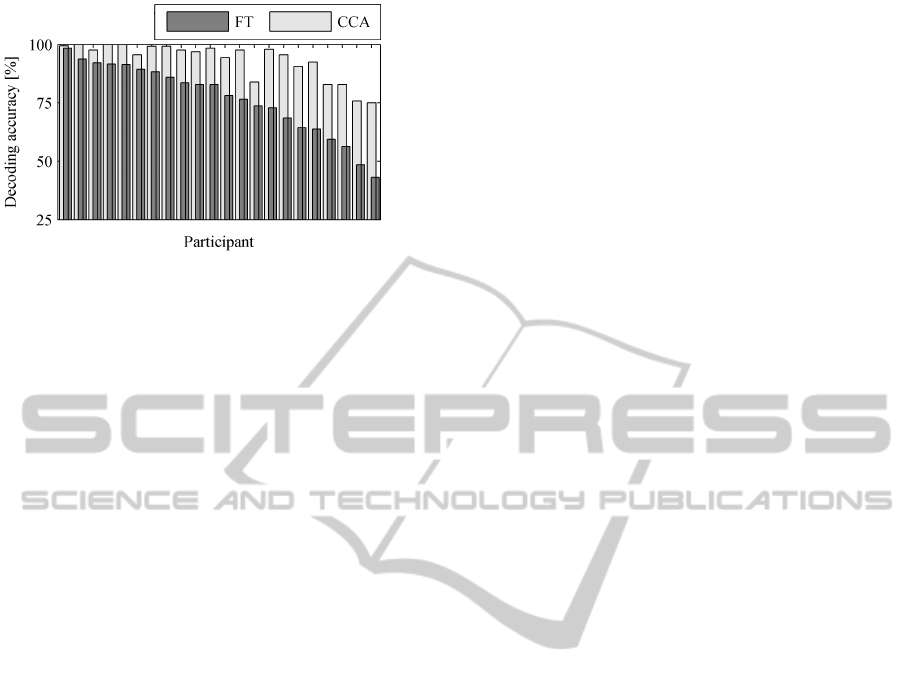

In order to verify BCI applicability, we performed

an SOV. The group results of the SOV deviate slightly

from the online results since here all subjects were in-

volved in the analysis, regardless of the number of

test runs they performed, and a constant number of

two training runs was assumed. We depict the sin-

gle subject results in Figure 2. There, subjects are

sorted by performance obtained by the FT decoding

method. It is important to note that the CCA/MC

method involves all performed trials for validation

and single trials are validated independent of oth-

ers. In contrast, the rLR requires training data and

was re-trained with each successive trial starting from

the third run. The average decoding accuracy was

76.6% with the FT/rLR method and much higher at

93.7% with the CCA/MC method. Fourteen out of

22 subjects obtained accuracies above 95% with the

CCA/MC method.

Computation time for the FT algorithm using equation 1, as well as for CCA, scales linearly with the signal length. A single thread on a 2.8 GHz AMD Opteron 8220 SE processor takes 1.3 ms for the FT but 19.9 ms for the CCA with a 1 s long segment, 59 channels, 678.17 Hz sampling rate and four frequencies without harmonics. Although FT provides an advantage in terms of processing time, CCA processing time is still short enough for application in real-time experiments.

Figure 2: Single subject performance obtained in an SOV. Each of the 22 subjects shows improvement of decoding accuracy with the CCA method (light gray bars) compared to classification of FT features (dark gray bars, sorted in descending order).

4 DISCUSSION

We compared FT as a conventional spectral feature extraction method combined with multivariate classification to CCA/MC regarding their performance in MEG-based BCIs. With the CCA approach, decoding accuracy was considerably improved compared to FT.

This held already for analysis windows as short as one

second. The higher accuracy of CCA even with short

data windows considerably increased the information

transfer rates as compared to FT. This result suggests

that CCA/MC is an efficient method for high-throughput SSVEF-BCIs. A further advantage of CCA/MC over FT/rLR in the context of BCI is that CCA/MC, as opposed to FT/rLR, does not require training blocks. Thus, lengthy initial phases for the acquisition of training data can be avoided. In BCI practice, the model estimation for each single trial in CCA can lead to improved robustness against sensor replacement, sensor malfunction and non-static brain patterns. This makes the CCA method a flexible and reliable feature extraction method for multi-channel BCIs controlled by shifting attention to oscillating visual stimuli.

We confirmed in our study the finding that inclusion of harmonics significantly increases classification accuracy in SSVEP BCIs (Müller-Putz et al., 2005). The performance increase was small for CCA but statistically significant. However, other authors did not find such a benefit (Bin et al., 2009). Importantly, the superior performance of CCA/MC over FT/rLR was independent of whether harmonics were included or not.

In this study we demonstrate for the first time that

magnetic SSVEFs are suitable to control a BCI. In

particular, SSVEFs were decoded for selection of ob-

jects, presented in a VR scenario. Furthermore, we

showed that the CCA is a powerful method to rapidly

detect target frequencies in the MEG. The method in-

troduced in this work is capable of decomposing fre-

quency components of sources that are spatially dis-

tributed over dense sensor arrays. Importantly, the

proposed algorithm can be executed in several mil-

liseconds and, consequently, it is suited for BCI im-

plementation and can be applied online.

Even though MEG is not suited for home use,

this modality is suited for BCI development. Despite reports of higher information transfer rates (ITRs) in some EEG-based SSVEP studies (Bin et al., 2009), we believe that BCI accuracy strongly depends on the visual stimulation. Therefore, comparisons of ITRs should be treated with caution. Furthermore, an MEG system could serve

as a training device to familiarize patients with BCI

paradigms.

ACKNOWLEDGEMENTS

This work was supported by the EU project ECHORD

number 231143 from the 7th Framework Programme,

by Land-Sachsen-Anhalt Grant MK48-2009/003 and

by a Lower Saxony grant for the Center of Safety Crit-

ical Systems Engineering.

REFERENCES

Bin, G., Gao, X., Yan, Z., Hong, B., and Gao, S. (2009). An online multi-channel SSVEP-based brain-computer interface using a canonical correlation analysis method. J Neural Eng, 6(4):046002.

Friman, O., Volosyak, I., and Gräser, A. (2007). Multiple channel detection of steady-state visual evoked potentials for brain-computer interfaces. IEEE Trans Biomed Eng, 54(4):742–750.

Horki, P., Solis-Escalante, T., Neuper, C., and Müller-Putz, G. (2011). Combined motor imagery and SSVEP based BCI control of a 2 DoF artificial upper limb. Med Biol Eng Comput, 49(5):567–577.

Lin, Z., Zhang, C., Wu, W., and Gao, X. (2007). Frequency recognition based on canonical correlation analysis for SSVEP-based BCIs. IEEE Trans Biomed Eng, 54(6 Pt 2):1172–1176.

Müller, M. M., Teder, W., and Hillyard, S. A. (1997). Magnetoencephalographic recording of steady-state visual evoked cortical activity. Brain Topogr, 9(3):163–168.

Müller-Putz, G. R., Scherer, R., Brauneis, C., and Pfurtscheller, G. (2005). Steady-state visual evoked potential (SSVEP)-based communication: impact of harmonic frequency components. J Neural Eng, 2(4):123–130.

Quandt, F., Reichert, C., Hinrichs, H., Heinze, H. J., Knight, R. T., and Rieger, J. W. (2012). Single trial discrimination of individual finger movements on one hand: A combined MEG and EEG study. Neuroimage, 59(4):3316–3324.

Reichert, C., Kennel, M., Kruse, R., Heinze, H. J., Schmucker, U., Hinrichs, H., and Rieger, J. W. (2013). Robotic Grasp Initiation by Gaze Independent Brain-Controlled Selection of Virtual Reality Objects. NEUROTECHNIX 2013 - International Congress on Neurotechnology, Electronics and Informatics. In Press.

Thorpe, S. G., Nunez, P. L., and Srinivasan, R. (2007). Identification of wave-like spatial structure in the SSVEP: comparison of simultaneous EEG and MEG. Stat Med, 26(21):3911–3926.

Vialatte, F.-B., Maurice, M., Dauwels, J., and Cichocki, A. (2010). Steady-state visually evoked potentials: focus on essential paradigms and future perspectives. Prog Neurobiol, 90(4):418–438.

Volosyak, I. (2011). SSVEP-based Bremen-BCI interface – boosting information transfer rates. J Neural Eng, 8(3):036020.

Wolpaw, J. R., Birbaumer, N., Heetderks, W. J., McFarland, D. J., Peckham, P. H., Schalk, G., Donchin, E., Quatrano, L. A., Robinson, C. J., and Vaughan, T. M. (2000). Brain-computer interface technology: a review of the first international meeting. IEEE Trans Rehabil Eng, 8(2):164–173.
