MobBIO: A Multimodal Database Captured with a Portable Handheld Device

Ana F. Sequeira¹,², João C. Monteiro¹,², Ana Rebelo¹ and Hélder P. Oliveira¹

¹ INESC TEC, Porto, Portugal

² Faculdade de Engenharia, Universidade do Porto, Porto, Portugal

Keywords: Biometrics, Multimodal, Database, Portable Handheld Devices.

Abstract: Biometrics represents a return to a natural way of identification: testing someone by what (s)he is, instead of relying on something (s)he owns or knows, seems likely to be the way forward. Biometric systems that include multiple sources of information are known as multimodal. Such systems are generally regarded as a way to counter a variety of problems that all unimodal systems stumble upon. One of the main challenges found in the development of biometric recognition systems is the shortage of publicly available databases acquired under real unconstrained working conditions. Motivated by this need, the MobBIO database was created using an Asus EeePad Transformer tablet, with mobile biometric systems in mind. The proposed database is composed of three modalities: iris, face and voice.

1 INTRODUCTION

In almost everyone’s daily activities, personal identification plays an important role. The most traditional techniques to achieve this goal are knowledge-based and token-based automatic personal identifications. Token-based approaches take advantage of a personal item, such as a passport, driver’s license, ID card, credit card or a simple set of keys, to distinguish between individuals. Knowledge-based approaches, on the other hand, are based on something the user knows that, theoretically, nobody else has access to, for example passwords or personal identification numbers (Prabhakar et al., 2003). Both of these approaches present obvious disadvantages: tokens may be lost, stolen, forgotten or misplaced, while passwords can easily be forgotten by a valid user or guessed by an unauthorized one. In fact, all of these approaches stumble upon an obvious problem: any piece of material or knowledge can be fraudulently acquired (Jain et al., 2000).

Biometrics represents a return to a more natural way of identification: many physiological or behavioural characteristics are unique to each person. Testing someone by what this someone is, instead of relying on something he owns or knows, seems likely to be the way forward (Monteiro et al., 2013).

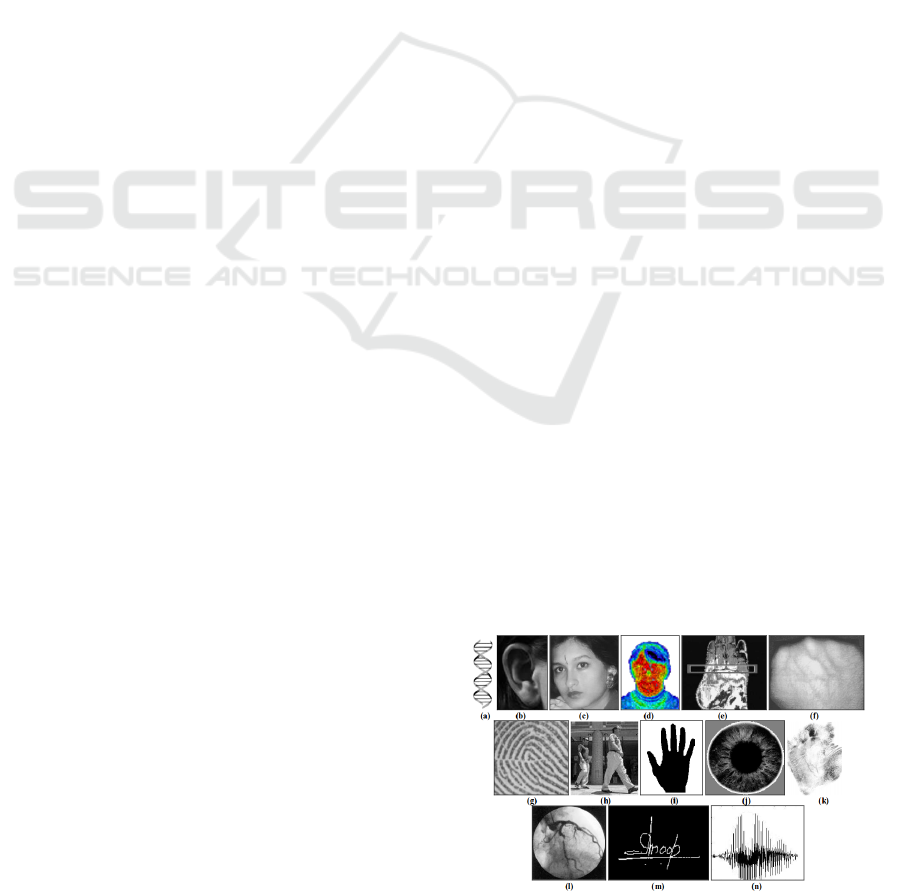

Several biological traits in humans show a considerable inter-individual variability: fingerprints and palmprints, the shape of the ears, the pattern of the iris, among others, as depicted in Figure 1. Biometrics works by recognizing patterns within these biological traits, unique to each individual, to increase the reliability of recognition. The growing need for reliability and robustness raised some expectations and became the focal point of attention for research works on biometrics.

Figure 1: Examples of some of the most widely studied biometric traits: (a) DNA, (b) Ear shape, (c) Face, (d) Facial Thermogram, (e) Hand Thermogram, (f) Hand veins, (g) Fingerprint, (h) Gait, (i) Hand geometry, (j) Iris, (k) Palm print, (l) Retina, (m) Keystroke and (n) Voice. Extracted from (Jain et al., 2002).

Sequeira A., Monteiro J., Rebelo A. and Oliveira H..
MobBIO: A Multimodal Database Captured with a Portable Handheld Device.
DOI: 10.5220/0004679601330139
In Proceedings of the 9th International Conference on Computer Vision Theory and Applications (VISAPP-2014), pages 133-139
ISBN: 978-989-758-009-3
Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

Table 1: Comparative data analysis of some common biometric traits. Adapted from (Jain et al., 2000) and (Proença, 2007).

| Traits \ Requirements | Universality | Uniqueness | Collectability | Permanence |
|---|---|---|---|---|
| DNA | High | High | Low | High |
| Ear | Medium | Medium | Medium | High |
| Face | High | Low | High | Medium |
| Facial Thermogram | High | High | High | Low |
| Hand Veins | Medium | High | High | Medium |
| Fingerprint | Medium | High | High | Medium |
| Gait | Low | Low | High | Low |
| Hand Geometry | Medium | Medium | High | Medium |
| Iris | High | High | Medium | High |
| Palm Print | Medium | High | Medium | High |
| Retina | High | High | Low | Medium |
| Signature | Medium | Low | High | Low |
| Voice | Medium | Low | Medium | Low |

Most biometric systems deployed in real-world applications rely on a single source of information to perform recognition, thus being dubbed unimodal. Extensive studies have been performed on several biological traits, regarding their capacity to be used for unimodal biometric recognition. Table 1 summarizes the qualitative analysis of individual biometric traits performed by Jain (Jain et al., 2000) and Proença (Proença, 2007), considering four requirements: universality, uniqueness, collectability and permanence. Careful analysis of the advantages and disadvantages laid out in the table seems to indicate a couple of general conclusions: (1) there is no “gold-standard” biometric trait, i.e. the choice of the best biometric trait will always be conditioned by the means at our disposal and the specific application of the recognition process; (2) some biometric traits seem to present advantages that counterbalance other traits’ disadvantages. For example, while the permanence of voice is highly variable, due to external factors, iris patterns represent a much more stable and hard to modify trait. However, iris acquisition in conditions that allow accurate recognition requires specialized NIR illumination and user cooperation, while voice only requires a standard sound recorder and does not even need the direct cooperation of the individual.

This line of thought seems to indicate an alternative way of stating the two conclusions outlined in the previous paragraph: even though there is no “best” biometric trait per se, marked advantages might be found by exploring the synergistic effect of multiple statistically independent biometric traits, so that their pros and cons counterbalance one another, resulting in improved performance over each trait’s individual accuracy. Biometric systems that include multiple sources of information for establishing an identity are known as multimodal biometric systems (Ross and Jain, 2004). It is generally accepted, in many reference works of the area, that multimodal biometric systems might help cope with a variety of generic problems that all unimodal systems stumble upon, regardless of their intrinsic pros and cons (Jain et al., 1999). These problems can be classified as:

1. Noisy data: when external factors corrupt the original information of a biometric trait. A fingerprint with a scar and a voice altered by a cold are examples of noisy inputs. Improperly maintained sensors and unconstrained ambient conditions also account for some sources of noisy data. As a unimodal system is tuned to detect and recognize specific features in the original data, the addition of stochastic noise will boost the probability of false identifications (Jain and Ross, 2004).

2. Intra-class variations: when the biometric data acquired from an individual during authentication is different from the data used to generate the template during enrolment (Jain and Ross, 2004). This may be observed when a user incorrectly interacts with a sensor (e.g. variable facial pose) or when a different sensor is used in two identification approaches (Ross and Jain, 2004).

3. Inter-class similarities: when a database is built on a large pool of users, the probability of different users presenting similarities in the feature space of the chosen trait naturally increases (Ross and Jain, 2004). It can, therefore, be considered that every biometric trait presents an asymptotic behaviour towards a theoretical upper bound in terms of its discrimination, for a growing number of users enrolled in a database (Jain and Ross, 2004).

4. Non-universality: when the biometric system fails to acquire meaningful biometric data from the user, in a process known as failure to enrol (FTE) (Jain and Ross, 2004).

5. Spoof attacks: when an impostor attempts to spoof the biometric trait of a legitimately enrolled user in order to circumvent the system (Jain et al., 2002).

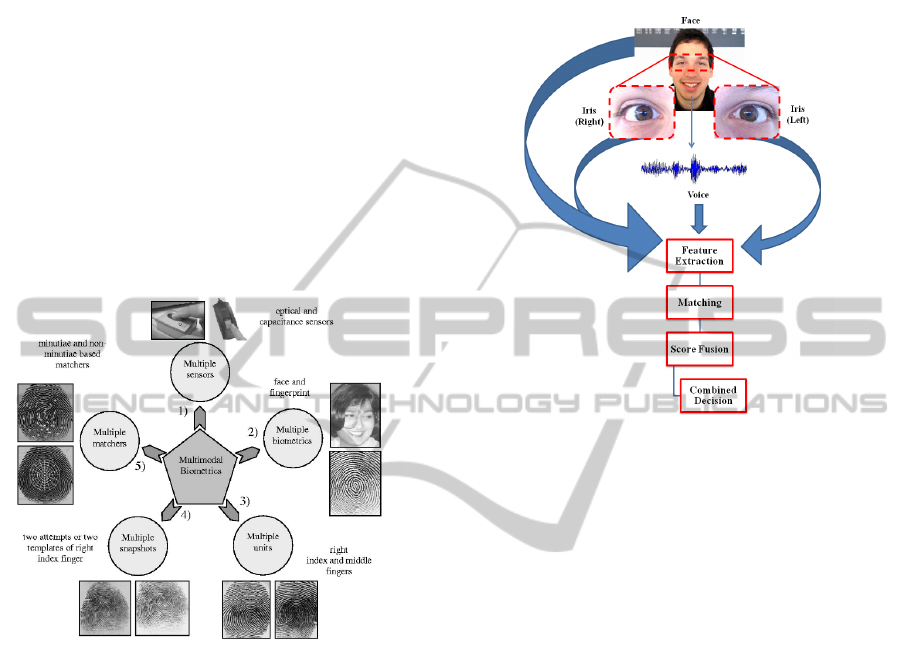

It is intuitive to note that taking advantage of the evidence obtained from multiple sources of information will result in an improved capability of tackling some of the aforementioned problems. These sources might be more than just a set of distinct biometric traits. Other options, such as multiple sensors, multiple instances, multiple snapshots or multiple feature space representations of the same biometric, are also valid, as depicted in Figure 2 (Jain and Ross, 2004).

Figure 2: Scenarios in a multimodal biometric system. From (Ross and Jain, 2004).

The development of biometric recognition systems is generally limited by the shortage of large public databases acquired under real unconstrained working conditions. Database collection represents a complicated process, in which a high degree of cooperation from a large number of participants is needed (Oliveira and Magalhães, 2012). For that reason, nowadays, the number of existing public databases that can be used to evaluate the performance of multimodal biometric recognition systems is quite limited.

Motivated by this need, we present a new database, named MobBIO, acquired using a portable handheld device, namely an Asus EeePad Transformer tablet. With this approach we aim not only to tackle the ever growing need for data, but also to provide a database whose acquisition environment follows the rapid evolution of our networked society from simple communication devices to mobile personal computers. The proposed database is composed of three modalities: iris, face and voice. A possible schematic of a multimodal system trained for the MobBIO database is presented in Figure 3.

Figure 3: Flowchart of a generic multimodal system working on the modalities present in the MobBIO database.
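As an illustration of how the outputs of the three modalities might be combined in such a system, the sketch below applies weighted-sum fusion to min-max normalized match scores. This is only one of many possible fusion rules and is not prescribed by the database; the function names, score ranges, weights and decision threshold are hypothetical values chosen for the example.

```python
def min_max_normalize(score, lo, hi):
    """Map a raw matcher score to [0, 1] using bounds assumed known from training data."""
    clipped = min(max(score, lo), hi)
    return (clipped - lo) / (hi - lo)


def fuse_scores(normalized, weights):
    """Weighted-sum fusion of per-modality scores that are already in [0, 1]."""
    total_weight = sum(weights[m] for m in normalized)
    return sum(weights[m] * normalized[m] for m in normalized) / total_weight


# Hypothetical similarity scores from three independent matchers (higher = more similar).
raw = {"iris": 0.62, "face": 41.0, "voice": -1.3}
# Hypothetical score ranges and modality weights (assumptions, not taken from the paper).
bounds = {"iris": (0.0, 1.0), "face": (0.0, 100.0), "voice": (-5.0, 5.0)}
weights = {"iris": 0.5, "face": 0.3, "voice": 0.2}

normalized = {m: min_max_normalize(raw[m], *bounds[m]) for m in raw}
fused = fuse_scores(normalized, weights)
accept = fused >= 0.5  # hypothetical decision threshold
print(f"fused score = {fused:.3f}, accept = {accept}")
```

With these made-up values the fused score is approximately 0.507. In practice the weights and the threshold would have to be tuned on a held-out part of the data.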

The remainder of this paper is organized as follows: Section 2 summarizes the state-of-the-art concerning available multimodal biometric databases; Section 3 presents the MobBIO database and its specifications; and finally the conclusions and future work prospects regarding possible improvement to the database are summarized in Section 4.

2 MULTIMODAL DATABASES

A strong recent trend is the appearance of multimodal databases. As already mentioned, the complementarity of some biometric traits is expected to bring advantages and, consequently, more accurate biometric recognition. When it comes to the choice of a biometric trait, a vast list of possibilities is found, as shown in the previous section. This diversity gives rise, in existing multimodal databases, to many possible combinations of traits.

The first multimodal database with 5 modalities and time variability, launched by the Multimodal Biometric Identity Verification project, was BIOMET (Garcia-Salicetti et al., 2003). The database was constructed in three different sessions, with three and five months spacing between them, and contains samples of face, voice, fingerprint, hand shape and handwritten signature.


In 2003, the Biometric Recognition Group - ATVS made public and freely available the MCYT-Bimodal Biometric Database (Fierrez-Aguilar et al., 2003). This database includes fingerprint and handwritten signature, in two versions containing data from 75 and 100 users, for offline and online signature acquisition respectively.

Within the M2VTS project (Multi Modal Verification for Teleservices and Security applications) the XM2VTS database (Poh and Bengio, 2006) was launched, comprising several datasets including face images and speech samples. According to its authors, the goal of using a multimodal recognition scheme is to improve the recognition efficiency by combining single modalities, namely face and voice features. Sets of data taken from this database are available at cost price, including high quality color images, 32 kHz 16-bit sound files, video sequences and a 3D model of each subject’s head.

In the aforementioned databases there are several limitations, such as the absence of important traits (e.g., iris), limitations at sensor level (e.g., sweeping fingerprint sensors), and informed forgery simulations (e.g., voice utterances pronouncing the PIN of another user) (Ortega-Garcia et al., 2010). The BioSec Multimodal Biometric Database Baseline (Fierrez-Aguilar et al., 2007) was an attempt to overcome some of these limitations. This database included real multimodal data from 200 individuals in two acquisition sessions, including fingerprint, iris, voice and face. However, the two releases of this database are now under construction and are not available at the moment. An enlarged version of the previous database is the Multiscenario Multienvironment BioSecure Multimodal Database (BMDB) (Ortega-Garcia et al., 2010), which comprises signature, fingerprint, hand and iris acquired in three different scenarios. This database is not freely accessible.

The WVU/CLARKSON: JAMBDC - Joint Multimodal Biometric Dataset Collection project gave rise to a series of biometric datasets, available on request and at a cost. Within this project, West Virginia University constructed two releases of biometric data containing six distinct biometric modalities: iris, face, voice, fingerprint, hand geometry and palmprint. The two releases differ only in the number of subjects. Within the same initiative, Clarkson University created another dataset which contains image and video files for the same modalities except for hand geometry (Crihalmeanu et al., 2007).

The MOBIO database (McCool et al., 2012) consists of bi-modal audio and video data taken from 152 people. The speech samples and the face videos were recorded using two mobile devices: a mobile phone and a laptop computer.

The emergence of portable handheld devices for multiple everyday activities has created a need for the development of mobile identity verification applications. The objective of research is to create a reliable, portable way of identifying and authenticating individuals. To pursue this goal, the availability of testing databases is crucial, so that results obtained by different methods may be compared. The existing databases do not completely fulfill the requirements of this line of research. On one hand, there are limitations in the variety and combination of biometric traits, and on the other hand some of the databases are not publicly accessible, limiting their usability.

3 MobBIO: DATABASE OVERVIEW

The reasons to create the MobBIO multimodal database are related, on one hand, to the rising interest in mobile biometrics applications and, on the other hand, to the increasing interest in multimodal biometrics. These two perspectives motivated the creation of a database comprising face, iris and voice samples acquired in unconstrained conditions using a mobile device, whose specifications will be detailed in further sections. We also stress the fact that there is no multimodal database with similar characteristics, regarding both the traits and the unique acquisition conditions.

As voice is the only acoustic-based biometric trait and the facial traits, face and iris, are the most instinctive regions for a mobile device user to photograph, we chose these three traits for the MobBIO database. In choosing these traits we also took into account that the design of consumer mobile devices is extremely sensitive to cost, size and power efficiency, and that the integration of dedicated biometric devices is thus rendered less attractive (Shi et al., 2011). However, the majority of the developed iris recognition systems rely on near-infrared (NIR) imaging rather than visible light (VL). This is because fewer reflections from the cornea in NIR imaging result in a higher signal-to-noise ratio (SNR) in the sensor, thus improving the contrast of iris images and the robustness of the system (Monteiro et al., 2013). As NIR illumination is not an acceptable alternative in this setting, we obtain iris images with simple VL illumination, even though this results in considerably noisier images.

Mobile device cameras are known to present limitations due to their increasingly thin form factor. Therefore, these devices inherently lack high quality optics, such as zoom lenses and larger image sensors. Nevertheless, for most daily uses, the quality is considered good enough by most consumers (Tufegdzic, 2013). Regarding acoustic measurements, no hardware improvements can solve the problems that harm the performance of voice recognition: environmental noise and voice alterations caused by external factors, such as emotional state or illness, need to be accounted for by the algorithm (Khitrov, 2013). A multimodal approach may help counter image-based difficulties, like low illumination or rotated images, with voice-based features, or vice versa. By exploring multiple sensors, the intrinsic hardware-based limitations of each one can be balanced by the others, resulting in a synergistic effect in terms of biometric data quality.

This database should be a valuable resource for future research, and its purpose goes far beyond its immediate application in the “MobBIO 2013: 1st Biometric Recognition with Portable Devices Competition”¹, which was launched in January 2013. This competition is part of ICIAR 2013².

3.1 Description of the Database

The MobBIO Multimodal Database comprises the biometric data from 105 volunteers. Each individual provided samples of face, iris and voice. The volunteers were mainly Portuguese, but volunteers from the U.K., Romania and Iran also participated. The average age was approximately 34, with a minimum age of 18 and a maximum age of 69. The gender distribution was 29% females and 71% males.

The volunteers were asked to sit in two different spots of a room with natural and artificial sources of light, and the face and eye region images were then captured by sequential shots. The distance to the camera was variable (10-50 cm) depending on the type of image acquired: closer for the eye region images and farther away for face images. For the speech samples, the volunteers were asked to get close to the integrated microphone of the device, and the recorder was activated and deactivated by the collector. The equipment used for sample acquisition was an Asus Transformer Pad TF 300T, with Android version 4.1.1. The device has two cameras, one front and one back. We used the back camera, version TF300T-000128, with 8 MP of resolution and autofocus.

¹ http://www.fe.up.pt/~mobbio2013/

² http://www.iciar.uwaterloo.ca/iciar13/

For the voice samples, the volunteers were asked to read 16 sentences in Portuguese. The collected samples had an average duration of 10 seconds. Half of the read sentences presented the same content for every volunteer, while the remaining half were randomly chosen among a fixed number of possibilities. This was done to allow the application of both text-dependent and text-independent methods, which comprise the majority of the most common speaker recognition methodologies (Fazel and Chakrabartty, 2011).
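The prompt selection scheme described above can be sketched as follows. This is a minimal illustration, not the acquisition software actually used: the sentence texts are placeholders, the pool size of 40 is hypothetical (the number of possibilities is not stated here), and sampling without repetition is an assumption.

```python
import random


def build_prompt_list(fixed_sentences, pool, n_random, rng):
    """Return the fixed sentences followed by n_random distinct sentences drawn from pool."""
    return list(fixed_sentences) + rng.sample(pool, n_random)


# Placeholder texts; the real Portuguese sentences are not reproduced here.
FIXED = [f"fixed sentence {i}" for i in range(1, 9)]   # same 8 sentences for every volunteer
POOL = [f"pool sentence {i}" for i in range(1, 41)]    # hypothetical pool of 40 alternatives

# A seeded generator makes the per-volunteer selection reproducible.
prompts = build_prompt_list(FIXED, POOL, n_random=8, rng=random.Random(105))
print(len(prompts))
```

The first eight prompts support text-dependent methods, since every speaker utters the same content, while the randomly drawn half provides varied content for text-independent methods.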

The iris images were captured in two different lighting conditions, with variable eye orientations and occlusion levels, so as to comprise a larger variability of unconstrained scenarios. For each volunteer 16 images (8 of each eye) were acquired. These images were obtained by cropping a single image comprising both eyes. Each cropped image was set to a 300×200 resolution. Some examples of iris images are depicted in Figure 4.

The iris images can, by themselves, constitute an important working tool for iris recognition in mobile device environments. This dataset is provided with manual annotation of both the limbic and pupillary contours, so that the segmentation methods applied to its images can be evaluated. An example of such annotation is shown in Figure 5.
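One way such annotations might be used to score a segmentation method is sketched below. The sketch assumes, purely for illustration, that each contour is approximated by a circle given as (centre x, centre y, radius); the annotation format distributed with the database may differ, and intersection over union is only one possible evaluation measure. All coordinates are made up.

```python
def iris_mask(width, height, pupil, limbus):
    """Set of (x, y) pixels inside the limbic circle and outside the pupillary circle."""
    (px, py, pr), (lx, ly, lr) = pupil, limbus
    return {
        (x, y)
        for y in range(height)
        for x in range(width)
        if (x - lx) ** 2 + (y - ly) ** 2 <= lr ** 2
        and (x - px) ** 2 + (y - py) ** 2 > pr ** 2
    }


def intersection_over_union(mask_a, mask_b):
    """Overlap between two pixel sets; 1.0 means identical masks."""
    union = mask_a | mask_b
    return len(mask_a & mask_b) / len(union) if union else 1.0


WIDTH, HEIGHT = 300, 200  # resolution of the cropped iris images

# Hypothetical manual annotation and hypothetical output of a segmentation method.
truth = iris_mask(WIDTH, HEIGHT, pupil=(150, 100, 20), limbus=(150, 100, 60))
predicted = iris_mask(WIDTH, HEIGHT, pupil=(152, 101, 22), limbus=(153, 100, 58))

iou = intersection_over_union(truth, predicted)
print(f"IoU = {iou:.3f}")
```

Circles are a simplification: under occlusion or off-angle gaze the annotated contours are unlikely to be circular, in which case the masks would be built from the contour points directly.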

Face images were captured in similar conditions as iris images, in two different lighting conditions. A total of 16 images were acquired from each volunteer, with a resolution of 640 × 480. Some examples are illustrated in Figure 6.
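The per-volunteer figures given in this section imply the nominal size of each subset. The short computation below makes the arithmetic explicit, under the assumption that all 105 volunteers contributed the complete set of samples; actual totals may differ if some samples are missing or were discarded.

```python
VOLUNTEERS = 105
# Samples per volunteer, as described in this section.
PER_VOLUNTEER = {"iris": 16, "face": 16, "voice": 16}

# Nominal totals, assuming every volunteer provided every sample.
totals = {modality: VOLUNTEERS * n for modality, n in PER_VOLUNTEER.items()}
iris_per_eye = VOLUNTEERS * 8  # 8 images of each eye per volunteer
grand_total = sum(totals.values())

print(totals, iris_per_eye, grand_total)
```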

4 CONCLUSIONS

The increased use of handheld devices in everyday activities, which incorporate high performance cameras and sound recording components, has created the possibility of implementing image and sound processing applications for identity verification. Producing reliable methods of identifying and authenticating individuals on portable devices is of the utmost importance nowadays. Research in this field requires the availability of databases that resemble the unconstrained conditions of these scenarios. We aim to contribute to the research in this area by deploying a multimodal database whose characteristics are valuable to the development of state-of-the-art methods in multimodal recognition. The manual annotation of iris images is a strong point of this database, as it allows segmentation methods to be evaluated on these noisy images. In the future, the other samples will also be annotated manually: the face will be identified in face images and the silence and speech will be identified in the sound recordings.

Figure 4: Examples of iris images from MobBIO database: a) Heavily occluded; b) Heavily pigmented; c) Glasses reflection; d) Glasses occlusion; e) Off-angle; f) Partial eye; g) Reflection occlusion and h) Normal.

Figure 5: Example of a manually annotated iris image.

Figure 6: Examples of face images from MobBIO database.

It might be argued that the use of this particular database in the research community may be limited. It would be better if the voice samples were recorded in both English and Portuguese, and the images stored in several resolutions and acquired under more challenging real-life conditions, such as variable illumination. These suggestions will be taken into consideration for future improvements over the present dataset.

This database has already been tested in another work (Monteiro et al., 2014) concerning iris segmentation. Also, the iris image collection has allowed the construction of a dataset of fake images (MobBIOfake), composed of printed copies and their respective originals. This database was developed for the purpose of iris liveness detection research, and was already tested in the scope of a different work (Sequeira et al., 2014).


ACKNOWLEDGEMENTS

The authors author would like to thank Fundac¸

˜

ao

para a Ci

ˆ

encia e Tecnologia (FCT) - Portugal the fi-

nancial support for the PhD grants with references

SFRH/BD/74263/2010 and SFRH/BD/87392/2012.

REFERENCES

Crihalmeanu, S., Ross, A., Schuckers, S., and Hornak, L. (2007). A protocol for multibiometric data acquisition, storage and dissemination. Technical report, WVU, Lane Department of Computer Science and Electrical Engineering.

Fazel, A. and Chakrabartty, S. (2011). An overview of statistical pattern recognition techniques for speaker verification. IEEE Circuits and Systems Magazine, 11(2):62–81.

Fierrez-Aguilar, J., Ortega-Garcia, J., Torre-Toledano, D., and Gonzalez-Rodriguez, J. (2003). MCYT baseline corpus: A bimodal biometric database. IEE Proc. Vis. Image Signal Process., 150:395–401.

Fierrez-Aguilar, J., Ortega-Garcia, J., Torre-Toledano, D., and Gonzalez-Rodriguez, J. (2007). BioSec baseline corpus: A multimodal biometric database. Pattern Recognition, pages 1389–1392.

Garcia-Salicetti, S., Beumier, C., Chollet, G., Dorizzi, B., les Jardins, J. L., Lunter, J., Ni, Y., and Petrovska-Delacrétaz, D. (2003). BIOMET: a multimodal person authentication database including face, voice, fingerprint, hand and signature modalities. In Audio- and Video-Based Biometric Person Authentication, pages 845–853. Springer.

Jain, A., Bolle, R., and Pankanti, S. (2002). Introduction to biometrics. In Biometrics, pages 1–41.

Jain, A., Hong, L., and Kulkarni, Y. (1999). A multimodal biometric system using fingerprint, face and speech. In Proceedings of 2nd International Conference on Audio- and Video-based Biometric Person Authentication, Washington DC, pages 182–187.

Jain, A., Hong, L., and Pankanti, S. (2000). Biometric identification. Communications of the ACM, 43(2):90–98.

Jain, A. K. and Ross, A. (2004). Multibiometric systems. Communications of the ACM, 47(1):34–40.

Khitrov, M. (2013). Talking passwords: voice biometrics for data access and security. Biometric Technology Today, 2013(2):9–11.

McCool, C., Marcel, S., Hadid, A., Pietikainen, M., Matejka, P., Poh, N., Kittler, J., Larcher, A., Levy, C., Matrouf, D., et al. (2012). Bi-modal person recognition on a mobile phone: using mobile phone data. In IEEE International Conference on Multimedia and Expo Workshops, pages 635–640. IEEE.

Monteiro, J. C., Oliveira, H. P., Sequeira, A. F., and Cardoso, J. S. (2013). Robust iris segmentation under unconstrained settings. In Proceedings of International Conference on Computer Vision Theory and Applications (VISAPP), pages 180–190.

Monteiro, J. C., Sequeira, A. F., Oliveira, H. P., and Cardoso, J. S. (2014). Robust iris localisation in challenging scenarios. In CCIS Communications in Computer and Information Science. Springer-Verlag.

Oliveira, H. P. and Magalhães, F. (2012). Two unconstrained biometric databases. In Image Analysis and Recognition, pages 11–19. Springer.

Ortega-Garcia, J., Fierrez, J., Alonso-Fernandez, F., Galbally, J., Freire, M. R., Gonzalez-Rodriguez, J., Garcia-Mateo, C., Alba-Castro, J.-L., Gonzalez-Agulla, E., Otero-Muras, E., et al. (2010). The multiscenario multienvironment BioSecure multimodal database (BMDB). Pattern Analysis and Machine Intelligence, IEEE Transactions on, 32(6):1097–1111.

Poh, N. and Bengio, S. (2006). Database, protocols and tools for evaluating score-level fusion algorithms in biometric authentication. Pattern Recognition, 39(2):223–233.

Prabhakar, S., Pankanti, S., and Jain, A. K. (2003). Biometric recognition: Security and privacy concerns. Security & Privacy, IEEE, 1(2):33–42.

Proença, H. (2007). Towards Non-Cooperative Biometric Iris Recognition. PhD thesis.

Ross, A. and Jain, A. K. (2004). Multimodal biometrics: An overview. In Proceedings of 12th European Signal Processing Conference, pages 1221–1224.

Sequeira, A. F., Murari, J., and Cardoso, J. S. (2014). Iris liveness detection methods in mobile applications. In Proceedings of International Conference on Computer Vision Theory and Applications (VISAPP).

Shi, W., Yang, J., Jiang, Y., Yang, F., and Xiong, Y. (2011). Senguard: Passive user identification on smartphones using multiple sensors. In IEEE 7th International Conference on Wireless and Mobile Computing, Networking and Communications, pages 141–148.

Tufegdzic, P. (2013). iSuppli: Smartphone cameras are getting smarter with computational photography; Last check: 06.06.2013.
