Dense Long-term Motion Estimation via Statistical Multi-step Flow

Pierre-Henri Conze$^{1,2}$, Philippe Robert$^{1}$, Tomás Crivelli$^{1}$ and Luce Morin$^{2}$

$^{1}$ Technicolor, Cesson-Sevigne, France

$^{2}$ INSA Rennes, IETR/UMR 6164, UEB, Rennes, France

Keywords:

Long-term Motion Estimation, Dense Point Matching, Statistical Analysis, Long-term Trajectories, Video Editing.

Abstract:

We present statistical multi-step flow, a new approach for dense motion estimation in long video sequences. Towards this goal, we propose a two-step framework including an initial dense motion candidates generation and a new iterative motion refinement stage. The first step performs a combinatorial integration of elementary optical flows combined with a statistical selection of candidate displacement fields, and focuses especially on reducing motion inconsistency. In the second step, the initial estimates are iteratively refined considering several motion candidates, including candidates obtained from neighboring frames. For this refinement task, we introduce a new energy formulation which relies on strong temporal smoothness constraints. Experiments compare the proposed statistical multi-step flow approach to state-of-the-art methods through both quantitative assessment using the Flag benchmark dataset and qualitative assessment in the context of video editing.

1 INTRODUCTION

Dense motion estimation has improved significantly since early works, but it deals mainly with matching consecutive frames. The resulting dense motion fields, called optical flows, can straightforwardly be concatenated to describe the trajectory of each pixel along the sequence (Corpetti et al., 2002; Brox and Malik, 2010; Sundaram et al., 2010). However, both estimation and accumulation errors result in dense trajectories which can rapidly diverge and become inconsistent, especially for complex scenes including non-rigid deformations, large motion, zooming, poorly textured areas or illumination changes. Moreover, concatenating motion fields computed between consecutive frames does not allow trajectories to be recovered after temporary occlusions.

Recent works have contributed to dense long-term motion estimation. Multi-frame optical flow formulations (Salgado and Sánchez, 2007; Papadakis et al., 2007; Werlberger et al., 2009; Volz et al., 2011) have been presented, but their temporal smoothness constraints are generally limited to a small number of frames. (Sand and Teller, 2008) proposes a sophisticated framework to compute semi-dense trajectories using a particle representation, but full density is not achieved. To overcome these issues, Garg et al. describe in (Garg et al., 2013) a variational approach with subspace constraints to generate trajectories starting from a reference frame in a non-rigid context. They assume that the sequence of displacements of any point can be expressed as a linear combination of a low-rank motion basis. Trajectories are therefore estimated under the assumption that they must lie close to this low-dimensional subspace, which implicitly acts as a long-term regularization. However, strong a priori assumptions on scene contents must be provided, and dense tracking of multiple objects is possible only if the reference frame is segmented.

The alternative concept of multi-step flow (Crivelli et al., 2012b; Crivelli et al., 2012a) focuses on how to construct dense fields of correspondences over extended time periods using multi-step optical flows (optical flows computed between consecutive frames or with larger inter-frame distances). Multi-step flow sequentially merges a set of displacement fields at each intermediate frame, up to the target frame. This set is obtained via concatenation of multi-step optical flows with displacement vectors already computed for neighbouring frames. Multi-step estimations can handle temporary occlusions since they can jump over occluding objects. Contrary to (Garg et al., 2013), multi-step flow considers both trajectory estimation between a reference frame and all the images of the sequence (from-the-reference) and motion estimation to match each image to the reference frame (to-the-reference).

Despite its ability to handle both scenarios, multi-step flow has two main drawbacks. First, it performs the selection of displacement fields by relying only on classical optical flow assumptions that can sometimes fail between distant frames. Second, the candidate displacement fields are based on previous estimations. This ensures a certain temporal consistency but can also propagate estimation errors along the following frames of the sequence, until a new available step gives a chance to match with a correct location again.

Conze P., Robert P., Crivelli T. and Morin L. Dense Long-term Motion Estimation via Statistical Multi-step Flow. DOI: 10.5220/0004683005450554. In Proceedings of the 9th International Conference on Computer Vision Theory and Applications (VISAPP-2014), pages 545-554. ISBN: 978-989-758-009-3. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

These limitations can be resolved by extending to the whole sequence the combinatorial multi-step integration and the statistical selection described in (Conze et al., 2013) for dense motion estimation between a pair of distant frames. The underlying idea is to first consider a large set of candidates built from combinations of multi-step optical flows, then to study the spatial redundancy of these candidates through a statistical selection, and finally to select the best matches.

Toward our goal of dense motion estimation in long video shots, we present the two-step statistical multi-step flow framework. First, it extends (Conze et al., 2013) to generate several initial dense correspondences between the reference frame and each of the subsequent images independently. Second, it provides an accurate final dense matching by applying a new iterative motion refinement which involves strong temporal smoothness constraints.

2 STATISTICAL MULTI-STEP FLOW

Let us consider a sequence of $N+1$ RGB images $\{I_n\}_{n \in [\![0,\ldots,N]\!]}$ including $I_{ref}$, considered as the reference frame. In this work, we focus on dense motion estimation between the reference frame $I_{ref}$ and each frame $I_n$ of the sequence, and we aim at computing from-the-reference and to-the-reference displacement fields. From-the-reference displacement fields link the reference frame $I_{ref}$ to the other frames $I_n$ and therefore describe the trajectory of each pixel of $I_{ref}$ along the sequence. To-the-reference displacement fields connect each pixel of $I_n$ to a location in $I_{ref}$.

The proposed statistical multi-step flow performs two main stages. The generation of several initial dense motion correspondences for each pair of frames $\{I_{ref}, I_n\}$ independently is described in Section 2.1. Section 2.2 presents the iterative motion refinement through strong temporal consistency constraints.

2.1 Initial Motion Candidates Generation

The goal of the initial motion candidates generation is to compute, for each pixel $x_{ref}$ (resp. $x_n$) of $I_{ref}$ (resp. $I_n$), $K$ candidate positions in $I_n$ (resp. $I_{ref}$). Each pair of frames $\{I_{ref}, I_n\}$ is processed independently. Our explanations focus on the estimation of from-the-reference displacement fields. In the following, we describe the input data and recall the baseline method (Conze et al., 2013) before focusing on how it has been improved and extended to the whole sequence.

Figure 1: Multiple motion candidates are generated via a guided-random selection among all possible motion paths. This combinatorial integration (Conze et al., 2013) is done independently for each pair $\{I_{ref}, I_n\}$, which limits the correlation between candidates selected for neighbouring frames.

2.1.1 Input Optical Flow Fields

As inputs, our method considers a set of optical flow fields estimated from each frame of the sequence, including $I_{ref}$. These optical flows are estimated beforehand between consecutive frames or with larger steps (Crivelli et al., 2012b), i.e. larger inter-frame distances. Let $S_n = \{s_1, s_2, \ldots, s_{Q_n}\} \subset \{1, \ldots, N-n\}$ be the set of $Q_n$ possible steps at instant $n$. The following set of optical flow fields starting from $I_n$ is therefore available: $\{v_{n,n+s_1}, v_{n,n+s_2}, \ldots, v_{n,n+s_{Q_n}}\}$.

Input optical flow fields are provided with attached occlusion and inconsistency masks. For the pair $\{I_n, I_{n+s_i}\}$ with $s_i \in \{1, \ldots, N-n\}$, the occlusion mask attached to the optical flow field $v_{n,n+s_i}$ indicates the visibility of each pixel of $I_n$ in $I_{n+s_i}$. The inconsistency mask attached to $v_{n,n+s_i}$ distinguishes consistent and inconsistent optical flow vectors among the ones starting from pixels marked as visible (Robert et al., 2012). This feature follows the idea that the backward flow should be the exact opposite of the forward flow.
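The forward/backward idea behind the inconsistency mask can be illustrated with a minimal sketch. It is not the mask computation of (Robert et al., 2012): the function names, the nearest-neighbour lookup and the one-pixel threshold are assumptions made only for illustration.

```python
import math

def inconsistency(forward, backward, x, y):
    """Length of v_fwd(p) + v_bwd(p + v_fwd(p)) for pixel p = (x, y).

    `forward` and `backward` are row-major grids of (dx, dy) tuples.
    Nearest-neighbour lookup is an assumption made to keep the sketch short.
    """
    fx, fy = forward[y][x]
    tx, ty = int(round(x + fx)), int(round(y + fy))
    height, width = len(backward), len(backward[0])
    if not (0 <= tx < width and 0 <= ty < height):
        return math.inf  # the vector leaves the image: treated as inconsistent
    bx, by = backward[ty][tx]
    return math.hypot(fx + bx, fy + by)

def inconsistency_mask(forward, backward, threshold=1.0):
    """True where a forward vector is flagged inconsistent.

    The threshold (in pixels) is a hypothetical value, not taken from the paper.
    """
    return [[inconsistency(forward, backward, x, y) > threshold
             for x in range(len(forward[0]))]
            for y in range(len(forward))]
```

A perfectly consistent vector gives a value of 0, since the backward vector read at the arrival point cancels the forward one.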


2.1.2 Baseline Method (Conze et al., 2013)

The combinatorial multi-step integration and the statistical selection on which we rely work as follows.

For the current pair $\{I_{ref}, I_n\}$, the combinatorial multi-step integration first considers all the possible from-the-reference motion paths which start from each pixel $x_{ref}$, run through the sequence and end in $I_n$. These motion paths are built by concatenating all the possible sequences of un-occluded input multi-step optical flow vectors between $I_{ref}$ and $I_n$. A reasonable number $N_s$ of motion paths is then selected by limiting the number of concatenations to $N_c$ and via a guided-random selection. Each remaining motion path leads to a candidate position in $I_n$ (Fig. 1 top). Finally, we obtain a set $T_{ref,n}(x_{ref}) = \{x_n^i\}_{i \in [\![0,\ldots,K_{x_{ref}}-1]\!]}$ of $K_{x_{ref}}$ candidate positions in $I_n$ for each pixel $x_{ref}$ of $I_{ref}$.
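The enumeration of motion paths can be sketched as follows, under simplifying assumptions: the same set of steps is available at every frame, occlusion and inconsistency masks are ignored, and the guided-random selection of (Conze et al., 2013) is replaced by a plain uniform draw. The names `step_sequences` and `sample_paths` are hypothetical.

```python
import random

def step_sequences(distance, steps, max_concatenations):
    """Ordered step sequences that sum to `distance` using at most
    `max_concatenations` steps (each step is one optical flow vector)."""
    results = []

    def extend(remaining, prefix):
        if remaining == 0:
            results.append(tuple(prefix))
            return
        if len(prefix) == max_concatenations:
            return  # too many concatenations: discard this path
        for step in steps:
            if step <= remaining:
                extend(remaining - step, prefix + [step])

    extend(distance, [])
    return results

def sample_paths(sequences, n_selected, seed=0):
    """Uniform stand-in for the guided-random selection (an assumption)."""
    if len(sequences) <= n_selected:
        return list(sequences)
    return random.Random(seed).sample(sequences, n_selected)
```

For a distance of 3 frames, steps {1, 2, 3} and at most 2 concatenations, the surviving sequences are (1, 2), (2, 1) and (3,); the path (1, 1, 1) is rejected because it needs three vectors.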

A statistical selection stage then selects the optimal candidate position among $T_{ref,n}(x_{ref})$. This procedure involves: 1) a statistical criterion which pre-selects a small set of candidates based on spatial density and intrinsic inconsistency values; 2) a global optimization which fuses these candidates to obtain the optimal one while including spatial regularization.

2.1.3 Improvements

The combinatorial multi-step integration and the statistical selection we briefly reviewed have been improved to put further focus on inconsistency reduction between from/to-the-reference vectors. First, we use only multi-step optical flow vectors considered as consistent according to their inconsistency masks to generate motion paths between $I_{ref}$ and $I_n$. Second, we introduce an outlier removal step before the statistical selection, which orders the candidates of $T_{ref,n}(x_{ref})$ with respect to their inconsistency values. A percentage $R_\%$ of bad candidates is removed and the selection is performed on the remaining ones. Third, at the end of the combinatorial integration and the selection procedure between $I_{ref}$ and $I_n$, the optimal displacement field is incorporated into the processing between $I_n$ and $I_{ref}$, which aims at enforcing the motion consistency between from/to-the-reference fields.
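The outlier removal step can be sketched in a few lines. The function name and the rounding of the number of removed candidates (rounded down here) are assumptions; the text only states that a percentage $R_\%$ of the worst candidates is discarded.

```python
def remove_outliers(candidates, inconsistencies, removal_ratio=0.5):
    """Keep the candidates with the lowest inconsistency values.

    `removal_ratio` plays the role of R_% (0.5 for 50%). The number of removed
    candidates is rounded down, which is an assumption of this sketch.
    """
    order = sorted(range(len(candidates)), key=lambda i: inconsistencies[i])
    n_removed = int(len(candidates) * removal_ratio)
    kept = order[:len(candidates) - n_removed]
    return [candidates[i] for i in kept]
```

The surviving candidates are returned in increasing order of inconsistency, so the statistical selection that follows operates only on the most consistent part of $T_{ref,n}(x_{ref})$.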

Compared to (Conze et al., 2013), our displacement field selection procedure combines statistical selection and global optimization differently. For each $x_{ref} \in I_{ref}$, we select among $T_{ref,n}(x_{ref})$ $K_{sp} = 2 \times K$ candidates through statistical selection, with $K_{sp} < K_{x_{ref}}$. Then, we randomly group these $K_{sp}$ candidates by pairs and choose the $K$ best ones $x_n^k$, $\forall k \in [\![0,\ldots,K-1]\!]$, by pair-wise fusing them following a global flow fusion approach. Finally, this same global optimization method fuses these $K$ best candidates to obtain an optimal one: $x_n^*$. In other words, these last two steps give a set of candidate displacement fields $d^k_{ref,n}$ and finally $d^*_{ref,n}$, the optimal one.

Figure 2: The displacement field $d^*_{ref,n}$ is questioned by generating for each pixel $x_{ref}$ competing candidates in $I_n$. (Figure labels: previous estimation; candidates from neighbouring frames; initial candidates; candidate coming from an inverted field.)

For pairs of frames that are relatively close, or in case of temporary occlusions, the statistical selection is not suitable because of the small number of candidates. Therefore, between $K+1$ and $K_{sp}$ candidates, we use only the global optimization to obtain the $K$ best ones.

Our approach is applied bi-directionally. An exactly similar processing between $I_n$ and $I_{ref}$ leads to $K$ initial to-the-reference candidate displacement fields.

2.1.4 Extension to the Whole Sequence

This improved version of the combinatorial integration and the statistical selection of (Conze et al., 2013) processes all the pairs $\{I_{ref}, I_n\}$ independently. Only $N_c$, the maximum number of concatenations, changes with respect to the temporal distance between frames. In practice, $N_c$ is computed using Eq. (1), which gives a good compromise between too many concatenations, which would lead to large propagation errors, and too few, which would limit the effectiveness of the statistical processing due to an insufficient number of candidates.

$$N_c(n) = \begin{cases} |n - ref| & \text{if } |n - ref| \leq 5 \\ \alpha_0 \cdot \log_{10}\left(\alpha_1 \cdot |n - ref|\right) & \text{otherwise} \end{cases} \qquad (1)$$
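Eq. (1) translates directly into code. The sketch below uses the values $\alpha_0 = 3$ and $\alpha_1 = 15$ reported in Section 3 as defaults and returns the raw real value: how it is rounded to an integer number of concatenations is not stated in the text, so this is left to the caller. The function name is hypothetical.

```python
import math

def max_concatenations(n, ref, alpha0=3.0, alpha1=15.0):
    """Evaluate Eq. (1) for the pair {I_ref, I_n}, without rounding."""
    distance = abs(n - ref)
    if distance <= 5:
        return float(distance)
    return alpha0 * math.log10(alpha1 * distance)
```

With these defaults the bound grows slowly with the temporal distance: it is about 6.5 for a distance of 10 frames and about 8.6 for a distance of 50 frames.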

The guided-random selection (Conze et al., 2013), which selects for each pair of frames $\{I_{ref}, I_n\}$ a subset of all the possible motion paths, limits the correlation between candidates respectively estimated for neighbouring frames. This avoids the situation in which a single estimation error is propagated and therefore badly influences the whole trajectory. The example in Fig. 1 shows the motion paths selected by the guided-random selection for pairs $\{I_{ref}, I_n\}$ and $\{I_{ref}, I_{n+1}\}$. We notice that motion paths between $I_{ref}$ and $I_{n+1}$ are not highly correlated with those between $I_{ref}$ and $I_n$. Indeed, the sets of optical flow vectors involved in both cases are not the same, except for $v_{ref,ref+1}$ and $v_{ref,n-1}$, which are then concatenated with different vectors. $v_{n-2,n}$ contributes to both cases but the considered vectors do not start from the same position. These considerations about the statistical independence of the resulting displacement fields are not addressed by existing methods, for which a strong temporal correlation is inescapable.

2.2 Iterative Motion Refinement

The previous stage guarantees a low correlation between the initial motion candidates respectively estimated for pairs $\{I_{ref}, I_n\}$. Without losing this key characteristic, this second stage aims at iteratively refining the initial estimates while enforcing temporal smoothness along the sequence.

We propose to question the matching between each pixel $x_{ref}$ (resp. $x_n$) of $I_{ref}$ (resp. $I_n$) and the selected position $x_n^*$ (resp. $x_{ref}^*$) in $I_n$ (resp. $I_{ref}$) established during the previous iteration (or during the initial motion candidates generation stage if the current iteration is the first one). For this task, we generate several competing candidates which are compared to $x_n^*$ (resp. $x_{ref}^*$) through a global optimization approach.

2.2.1 Competing Candidates

The competing candidates used to question $x_n^*$ (resp. $x_{ref}^*$) are illustrated in Fig. 2 and consist of:

• the $K$ initial candidate positions $x_n^k$ (resp. $x_{ref}^k$), $\forall k \in [\![0,\ldots,K-1]\!]$ (obtained in Section 2.1),

• a candidate position coming from the previous estimation of $d^*_{n,ref}$ (resp. $d^*_{ref,n}$), which is inverted to obtain $x_n^r$ (resp. $x_{ref}^r$), as illustrated in Fig. 2,

• candidates from neighbouring frames to enforce temporal smoothing. Let $W$ be the temporal window of width $w$ centered around $I_n$. Between $I_{ref}$ and $I_n$, we use the optical flow fields $v_{m,n}$ between $I_m$ and $I_n$, with $m \in [\![n-\frac{w}{2},\ldots,n+\frac{w}{2}]\!]$ and $m \neq n$, to obtain from $x_m^* \in I_m$ the new candidate $x_n^m$ in $I_n$.
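The third kind of candidate listed above can be sketched as follows: each neighbouring estimate $x_m^*$ is pushed into $I_n$ with the flow $v_{m,n}$. The data layout is hypothetical (flows are given as callables returning a vector at a position), and the half-width of the window is taken as the integer part of $w/2$, which is an assumption for odd $w$.

```python
def neighbour_candidates(n, w, best_positions, flows):
    """Candidates x_n^m = x_m^* + v_{m,n}(x_m^*) for frames m in the window.

    best_positions: dict m -> (x, y), the current estimate x_m^* in I_m.
    flows: dict (m, n) -> callable (x, y) -> (dx, dy), standing for v_{m,n}.
    Frames without an estimate or without an available flow are skipped.
    """
    half = w // 2  # integer half-width: an assumption of this sketch
    candidates = {}
    for m in range(n - half, n + half + 1):
        if m == n or m not in best_positions or (m, n) not in flows:
            continue
        x, y = best_positions[m]
        dx, dy = flows[(m, n)](x, y)
        candidates[m] = (x + dx, y + dy)
    return candidates
```

When the neighbouring estimates and the flows agree, all these candidates land on the same position in $I_n$, which is the temporally smooth situation the refinement tries to favour.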

2.2.2 Global Optimization Approach

We perform a global optimization in order to fuse the previously described competing candidates into a single optimal displacement field.

In the from-the-reference case, we introduce $L = \{l_{x_{ref}}\}$ as a labeling of pixels $x_{ref}$ where each label indicates $x_n^{l_{x_{ref}}}$, one of the candidates listed above. Let $d^{l_{x_{ref}}}_{ref,n}$ be the corresponding motion vector. We define the energy in Eq. (2) and minimize it with respect to $L$ using fusion moves (Lempitsky et al., 2010):

$$E_{ref,n}(L) = E^d_{ref,n}(L) + E^r_{ref,n}(L) = \sum_{x_{ref}} \rho_d\left(\varepsilon^d_{ref,n}\right) + \sum_{x_{ref},\,y_{ref}} \alpha_{x_{ref},y_{ref}}\, \rho_r\left(\left\| d^{l_{x_{ref}}}_{ref,n}(x_{ref}) - d^{l_{y_{ref}}}_{ref,n}(y_{ref}) \right\|_1\right) \qquad (2)$$

Figure 3: Matching cost and Euclidean distances $ed_{n,m}$ and $ed_{m,n}$ defined with respect to each temporal neighboring candidate $x_m^*$ and involved in the proposed energy. These three terms act as strong temporal smoothness constraints.

The data term $E^d_{ref,n}$, described in more detail in Eq. (3), involves both the matching cost and the inconsistency value with respect to $d^{l_{x_{ref}}}_{ref,n}$ (Conze et al., 2013). In addition, we propose to introduce strong temporal smoothness constraints into the energy formulation:

$$\varepsilon^d_{ref,n} = C\left(x_{ref}, d^{l_{x_{ref}}}_{ref,n}(x_{ref})\right) + Inc\left(x_{ref}, d^{l_{x_{ref}}}_{ref,n}(x_{ref})\right) + \sum_{\substack{m = n-\frac{w}{2} \\ m \neq n}}^{n+\frac{w}{2}} \left[ C\left(x_n^{l_{x_{ref}}}, x_m^* - x_n^{l_{x_{ref}}}\right) + ed_{m,n} + ed_{n,m} \right] \qquad (3)$$

The temporal smoothness constraints translate into three new terms which are computed with respect to each neighbouring candidate $x_m^*$ defined for the frames inside the temporal window $W$. These terms are illustrated in Fig. 3 and are more precisely:

• the matching cost between $x_n^{l_{x_{ref}}} \in I_n$ and $x_m^*$ of $I_m$,

• the Euclidean distance $ed_{m,n}$ between $x_n^{l_{x_{ref}}}$ and the ending point of the optical flow $v_{m,n}$ starting from $x_m^*$ (see Eq. (4)). $ed_{m,n}$ encourages the selection of $x_n^m$, the candidate coming from $I_m$ via the optical flow field $v_{m,n}$, and therefore tends to strengthen the temporal smoothness. Indeed, for $x_n^m$, the Euclidean distance $ed_{m,n}$ is equal to 0.

$$ed_{m,n} = \left\| \left(x_{ref} + d^{l_{x_{ref}}}_{ref,n}\right) - \left(x_{ref} + d^*_{ref,m} + v_{m,n}\right) \right\|_2 \qquad (4)$$

• the Euclidean distance $ed_{n,m}$ between $x_m^*$ and the ending point of the optical flow vector $v_{n,m}$ starting from $x_n^{l_{x_{ref}}}$ (see Eq. (5)). If $v_{m,n}$ is consistent, i.e. $v_{m,n} \approx -v_{n,m}$, $ed_{n,m}$ is approximately equal to 0 for $x_n^m$, the candidate coming from $I_m$, whose selection is again promoted.

$$ed_{n,m} = \left\| \left(x_{ref} + d^*_{ref,m}\right) - \left(x_{ref} + d^{l_{x_{ref}}}_{ref,n} + v_{n,m}\right) \right\|_2 \qquad (5)$$
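The two Euclidean distances of Eq. (4) and Eq. (5) can be evaluated with a short sketch. The function and argument names are hypothetical; `v_mn` stands for the flow vector $v_{m,n}$ read at $x_m^*$ and `v_nm` for the flow vector $v_{n,m}$ read at the candidate position in $I_n$.

```python
import math

def temporal_distances(x_ref, d_ref_n, d_ref_m_star, v_mn, v_nm):
    """Return (ed_mn, ed_nm) following Eq. (4) and Eq. (5).

    x_ref: pixel of I_ref; d_ref_n: candidate vector towards I_n;
    d_ref_m_star: current best vector towards I_m.
    """
    x_n = (x_ref[0] + d_ref_n[0], x_ref[1] + d_ref_n[1])            # candidate in I_n
    x_m = (x_ref[0] + d_ref_m_star[0], x_ref[1] + d_ref_m_star[1])  # x_m^* in I_m
    ed_mn = math.hypot(x_n[0] - (x_m[0] + v_mn[0]), x_n[1] - (x_m[1] + v_mn[1]))
    ed_nm = math.hypot(x_m[0] - (x_n[0] + v_nm[0]), x_m[1] - (x_n[1] + v_nm[1]))
    return ed_mn, ed_nm
```

For the candidate $x_n^m$ itself, the first distance vanishes by construction, and the second one vanishes as well when the forward and backward flows are exact opposites.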

The regularization term $E^r_{ref,n}$ involves motion similarities with neighbouring positions, as shown in Eq. (2). $\alpha_{x_{ref},y_{ref}}$ accounts for local color similarities in the reference frame $I_{ref}$. The robust functions $\rho_d$ and $\rho_r$ are respectively the negative log of a Student-t distribution and the Geman-McClure function.
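The two robust functions can be sketched with commonly used parameterisations. The scale parameter `sigma` and the exact form of the Student-t penalty (written here up to an additive constant and a multiplicative factor) are assumptions: the text does not give the parameters actually used.

```python
import math

def neg_log_student_t(x, sigma=1.0):
    """Negative log of a Student-t density, up to constants (assumed form)."""
    return math.log(1.0 + (x * x) / (2.0 * sigma * sigma))

def geman_mcclure(x, sigma=1.0):
    """Geman-McClure penalty: quadratic near 0, saturating below 1."""
    return (x * x) / (x * x + sigma * sigma)
```

Both penalties grow much more slowly than a quadratic for large residuals, so a few badly matched pixels or a motion discontinuity do not dominate the energy of Eq. (2).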

The refinement of to-the-reference displacement fields with our approach is straightforward, except that the data term involves neither the matching cost between the current candidate and the temporal neighbouring one nor the Euclidean distance $ed_{m,n}$, because trajectories cannot be handled in this direction.

The global optimization method fuses the displacement fields by pairs and finally chooses whether or not to update the previous estimations with one of the previously described candidates. The motion refinement phase consists in applying this technique for each pair of frames $\{I_{ref}, I_n\}$ in from-the-reference and to-the-reference directions. The pairs $\{I_{ref}, I_n\}$ are processed in a random order to encourage temporal smoothness without introducing a sequential correlation between the resulting displacement fields.

This motion refinement phase is repeated iteratively $N_{it}$ times, where one iteration corresponds to the processing of all the pairs $\{I_{ref}, I_n\}$. The proposed statistical multi-step flow is complete once the initial motion candidates generation and the $N_{it}$ iterations of motion refinement have been performed.
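The control flow of the refinement phase can be sketched as a loop over shuffled frame pairs. The callback `refine_pair` is a hypothetical placeholder for the fusion of competing candidates described in Section 2.2.2, and processing both directions of a pair back to back is an assumption of this sketch.

```python
import random

def refine_sequence(frame_indices, refine_pair, n_iterations, seed=None):
    """Run N_it refinement iterations over all pairs {I_ref, I_n}.

    refine_pair(n, direction) is a user-supplied callback (hypothetical) that
    updates the field between I_ref and I_n for the given direction.
    Returns the processing order used at each iteration.
    """
    rng = random.Random(seed)
    orders = []
    for _ in range(n_iterations):
        order = list(frame_indices)
        rng.shuffle(order)  # random order avoids a sequential correlation
        for n in order:
            refine_pair(n, "from_ref")
            refine_pair(n, "to_ref")
        orders.append(order)
    return orders
```

Drawing a new order at every iteration means that no frame systematically inherits the estimates of its predecessor, which is the property the random processing order is meant to preserve.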

3 EXPERIMENTS

Our experiments focus on the following sequences: MPI S1 (Granados et al., 2012) (Fig. 4 and 6a-h), Hope (Fig. 6i-p), Newspaper (Fig. 6q-t), Walking Couple (Fig. 7) and Flag (Garg et al., 2013) (Fig. 8). The proposed statistical multi-step flow is referred to as StatFlow in the following. For the experiments, the following parameters have been used: $N_c = 7$, $N_s = 100$, $R_\% = 50\%$, $K = 3$, $\alpha_0 = 3$, $\alpha_1 = 15$, $w = 5$. The set of steps and the input optical flow estimators are specified for each experiment and each sequence.

Experiments have been conducted as follows. In Section 3.1, we evaluate the performance of our extended version of the combinatorial integration and the statistical selection (Conze et al., 2013) through registration and PSNR assessment. The effects of the iterative motion refinement are also studied. Then, we compare StatFlow to state-of-the-art methods through quantitative assessment using the Flag dataset (Garg et al., 2013) (Section 3.2) and qualitative assessment via texture propagation and tracking (Section 3.3).

3.1 Registration and PSNR Assessment

The first experiment aims at showing how the improvements we made with respect to (Conze et al., 2013) impact the quality of the displacement fields. We focus on frame pairs taken from MPI S1 and Newspaper (NP). The sets of steps are 1-5, 10 (NP), 15 (MPI S1), 20 (NP) and 30 (NP). The algorithms take as inputs multi-step optical flows computed with a 2D version of the disparity estimator described in (Robert et al., 2012), referred to as 2D-DE.

We compare the optimal displacement fields obtained at the output of our initial motion candidates generation (Section 2.1) with those resulting from (Conze et al., 2013). The comparison is done through registration and PSNR assessment. For a given pair $\{I_{ref}, I_n\}$, the final fields are used to reconstruct $I_{ref}$ from $I_n$ through motion compensation, and color PSNR scores are computed between $I_{ref}$ and the registered frame for non-occluded pixels.
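A PSNR restricted to non-occluded pixels can be sketched as follows. Pooling the three color channels into a single mean squared error and using a peak value of 255 are assumptions; the text only states that color PSNR scores are computed on non-occluded pixels.

```python
import math

def masked_psnr(reference, registered, visible, peak=255.0):
    """PSNR (in dB) between two RGB images over visible pixels only.

    reference, registered: row-major grids of (r, g, b) tuples.
    visible: grid of booleans, True for non-occluded pixels.
    """
    squared_error, count = 0.0, 0
    for ref_row, reg_row, vis_row in zip(reference, registered, visible):
        for ref_px, reg_px, is_visible in zip(ref_row, reg_row, vis_row):
            if not is_visible:
                continue  # occluded pixels do not contribute to the score
            for a, b in zip(ref_px, reg_px):
                squared_error += (a - b) ** 2
                count += 1
    if count == 0:
        raise ValueError("no visible pixel to evaluate")
    mse = squared_error / count
    return math.inf if mse == 0 else 10.0 * math.log10(peak * peak / mse)
```

Excluding occluded pixels matters here because motion compensation cannot reconstruct content that is hidden in $I_n$, and such pixels would otherwise penalize every method in the same way.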

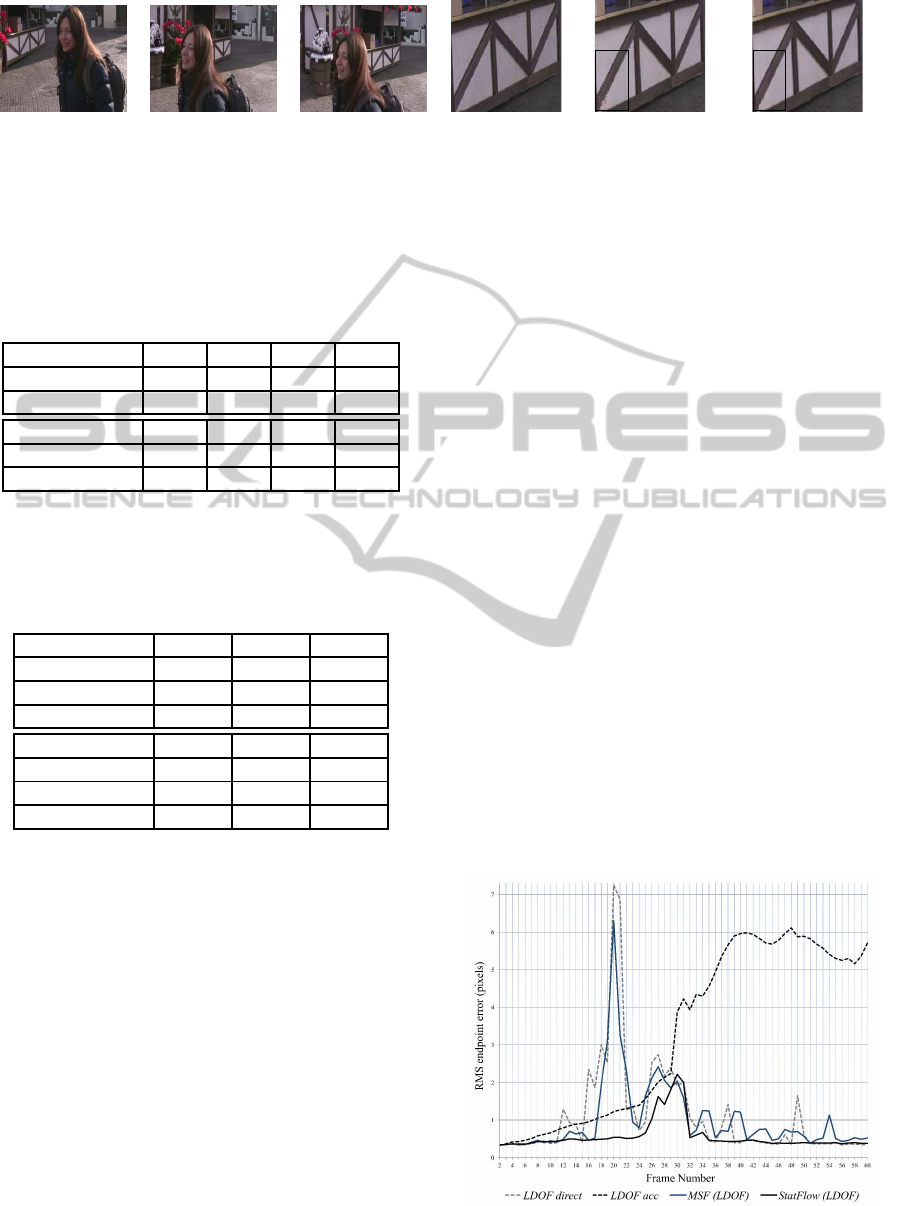

Tables 1 and 2 show the PSNR scores for various distances between $I_{ref}$ and $I_n$, respectively on the kiosk of MPI S1 (Fig. 4) and on whole images of Newspaper (Fig. 6q-t). Results on MPI S1 show that the initial phase of StatFlow outperforms the combinatorial integration and the statistical selection of (Conze et al., 2013) for all pairs. An example of registration of the kiosk for a distance of 20 frames is given in Fig. 4. Multi-step estimations deal satisfactorily with the temporary occlusion. Experiments on Newspaper lead to the same finding: the novelty in terms of inconsistency reduction improves the quality of the displacement fields. Moreover, the iterative motion refinement stage ($N_{it} = 9$) yields better PSNR scores for all pairs compared to the initial stage of StatFlow.

Figure 4: Source frames of the MPI S1 sequence (Granados et al., 2012) and reconstruction of the kiosk of $I_{25}$ from $I_{45}$ with: e) the combinatorial integration and the statistical selection introduced in (Conze et al., 2013), f) the proposed extended version described in Section 2.1 (initial phase of StatFlow). Black boxes focus on differences between both methods. Panels: (a) $I_{25}$, (b) $I_{40}$, (c) $I_{45}$, (d) $I_{25}$, (e) (Conze et al., 2013), (f) StatFlow initial phase.

Table 1: Registration and PSNR assessment with the combinatorial integration and the statistical selection introduced in (Conze et al., 2013) and the proposed extended version described in Section 2.1 (initial phase of StatFlow). PSNR scores are computed on the kiosk of MPI S1 (Fig. 4).

| Frame pairs | {25,45} | {25,46} | {25,47} | {25,48} | {25,49} | {25,50} | {25,51} | {25,52} |
|---|---|---|---|---|---|---|---|---|
| (Conze et al., 2013) | 21.83 | 24.98 | 25.56 | 25.83 | 25.04 | 24.83 | 24.48 | 24.3 |
| StatFlow initial phase | 29.02 | 28.4 | 27.27 | 27.23 | 26.84 | 26.33 | 26.1 | 25.69 |

Table 2: Registration and PSNR assessment with: 1) combinatorial integration and statistical selection introduced in (Conze et al., 2013), 2) proposed extended version (StatFlow init. phase), 3) whole StatFlow method. PSNR scores are computed on whole images of Newspaper (Fig. 6q-t).

| Frame pairs | {160,180} | {160,190} | {160,200} | {160,210} | {160,220} | {160,230} |
|---|---|---|---|---|---|---|
| (Conze et al., 2013) | 22.50 | 21.21 | 18.59 | 17.12 | 15.87 | 15.76 |
| StatFlow initial phase | 22.70 | 21.39 | 19.28 | 18.21 | 17.12 | 16.58 |
| StatFlow | 22.93 | 22.18 | 20.25 | 18.68 | 17.40 | 16.81 |

3.2 Comparisons with Flag Dataset

Quantitative results have been obtained using the dense ground-truth optical flow data provided by the Flag dataset (Garg et al., 2013) for the Flag sequence (Fig. 8). Experiments focus on:

• direct estimation between each pair $\{I_{ref}, I_n\}$ using LDOF (Brox and Malik, 2011), ITV-L1 (Wedel et al., 2009) and the keypoint-based non-rigid registration of (Pizarro and Bartoli, 2012),

• concatenation of optical flows computed between consecutive frames using LDOF (LDOF acc),

• multi-frame subspace flow (MFSF) (Garg et al., 2013) using PCA or DCT basis,

• multi-step flow fusion (MSF) (Crivelli et al., 2012a) with LDOF multi-step optical flows,

• StatFlow ($N_{it} = 3$) with LDOF optical flows.

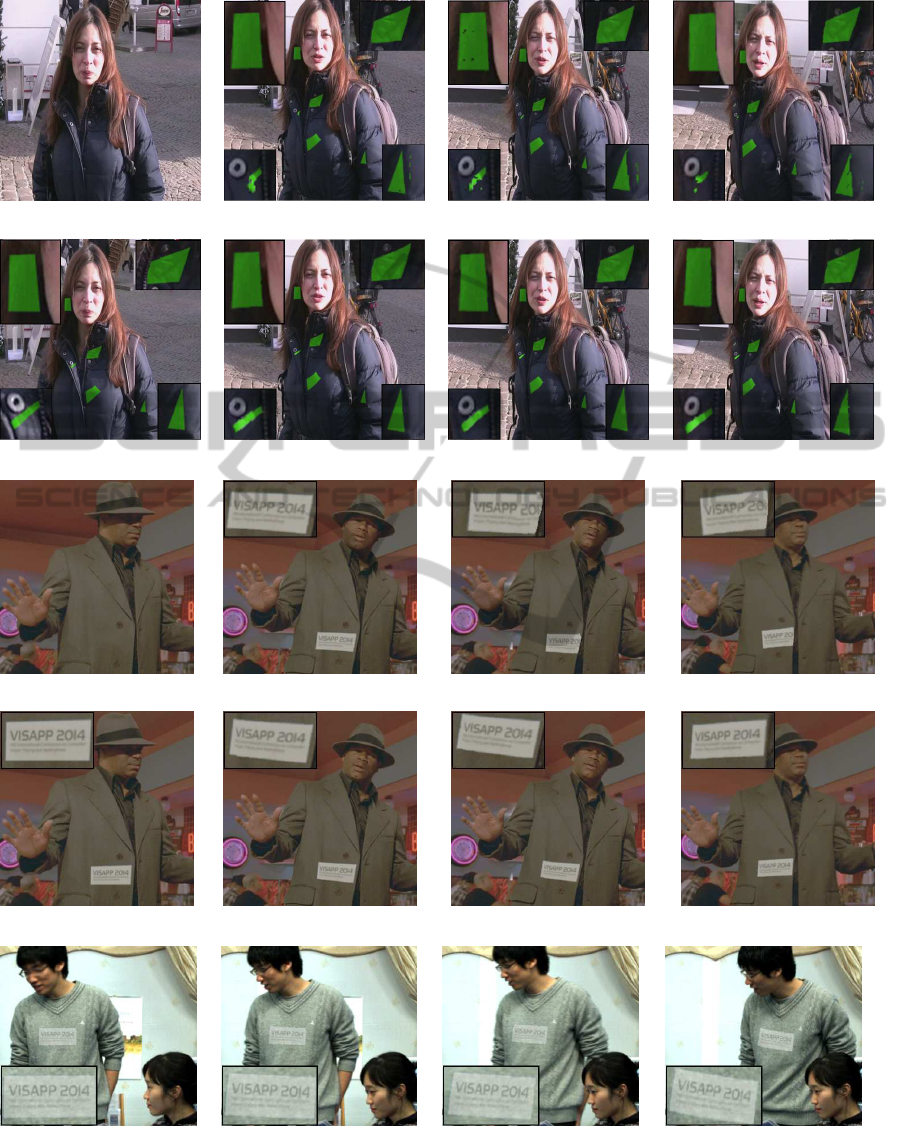

For the comparison task, Tab. 3 gives, for all the previously described methods, the RMS (root mean square) endpoint errors between the obtained displacement fields and the ground-truth data. RMS errors are computed over all the foreground pixels and all the pairs of frames $\{I_{ref}, I_n\}$ together. RMS errors computed for each pair of frames are shown in Fig. 5 for all the methods based on LDOF: LDOF direct, LDOF acc, MSF (LDOF) and StatFlow (LDOF). The last two multi-step strategies take as inputs steps 1-5, 8, 10, 15, 20, 25, 30, 40 and 50.
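The RMS endpoint error used in this comparison can be sketched as follows, assuming flat lists of displacement vectors and a boolean foreground flag per pixel (a hypothetical data layout chosen for brevity).

```python
import math

def rms_endpoint_error(estimated, ground_truth, foreground):
    """Root mean square of the endpoint errors over foreground pixels.

    estimated, ground_truth: lists of (dx, dy) displacement vectors.
    foreground: list of booleans selecting the pixels to evaluate.
    """
    squared_sum, count = 0.0, 0
    for (ex, ey), (gx, gy), is_foreground in zip(estimated, ground_truth, foreground):
        if is_foreground:
            squared_sum += (ex - gx) ** 2 + (ey - gy) ** 2
            count += 1
    if count == 0:
        raise ValueError("no foreground pixel to evaluate")
    return math.sqrt(squared_sum / count)
```

A single score for the whole sequence is obtained by concatenating the vectors of all the pairs $\{I_{ref}, I_n\}$ before calling the function, whereas per-pair curves such as those of Fig. 5 call it once per pair.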

We first observe that LDOF acc rapidly diverges. This is due to both estimation errors, which are propagated along trajectories, and accumulation errors inherent to the interpolation process. Moreover, the results obtained through direct motion estimation are reasonably good, especially for (Pizarro and Bartoli, 2012). LDOF direct gives a lower RMS endpoint error than LDOF acc (1.74 against 4). However, no general conclusion can be drawn from the Flag sequence because the flag comes back approximately to its initial position at the end of the sequence (Fig. 8a,g). Motion estimation for complex scenes cannot generally rely only on direct estimation, and combining optical flow accumulations and direct matching is clearly a more suitable strategy.

Tab. 3 and Fig. 5 prove that with the same optical

flows as inputs, StatFlow shows a clear improvement

compared to MSF (0.69 against 1.41). Although both

methods achieve the same quality for first pairs or for

some pairs which coincide with existing steps, other

displacement fields are computed with a better ac-

curacy using StatFlow. Moreover, StatFlow(LDOF)

Figure 5: RMS endpoint errors for each pair {I

ref

,I

n

} along

Flag sequence (Fig. 8) with different methods.

VISAPP2014-InternationalConferenceonComputerVisionTheoryandApplications

550

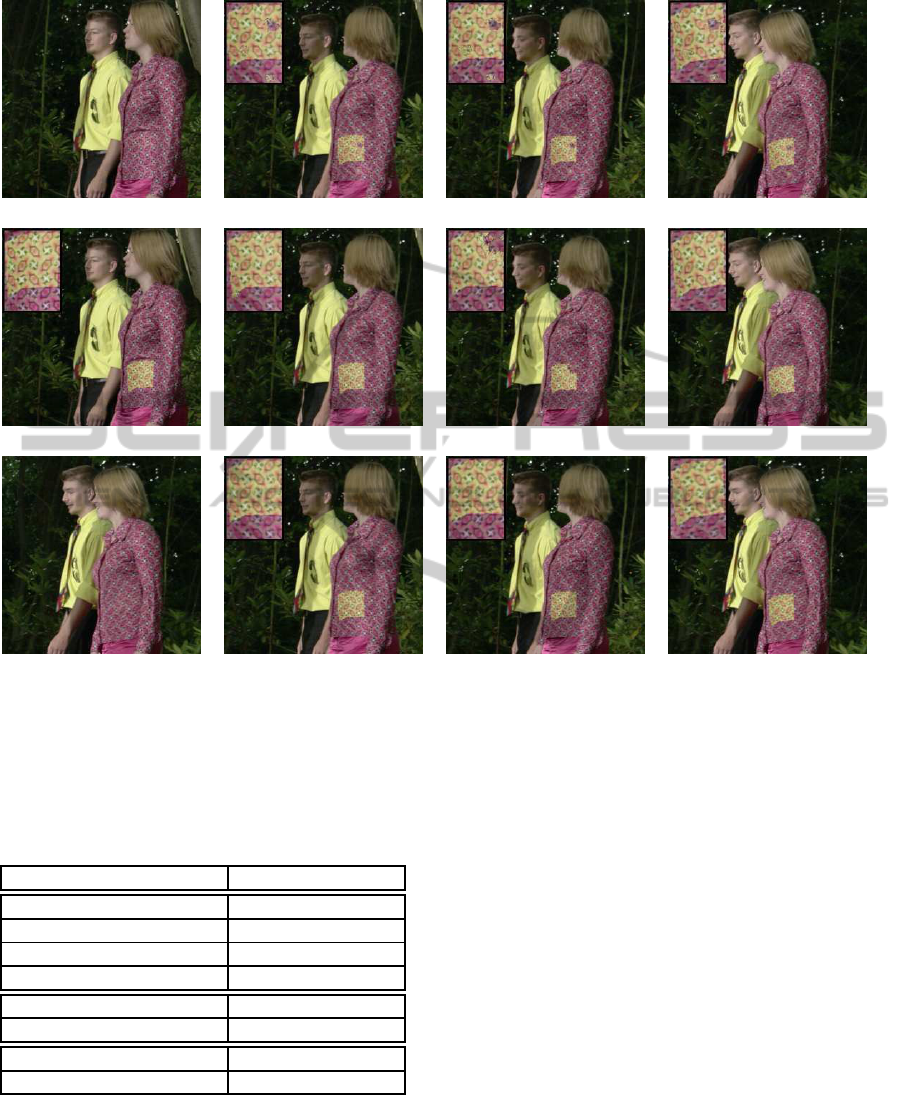

(a) Original image I

115

(c) Prop. to I

125

, MSF (2D-DE) (d) Prop. to I

130

, MSF (2D-DE) (e) Prop. to I

137

, MSF (2D-DE)

(b) Texture insertion in I

115

(f) Prop. to I

125

, StatFlow (2D-DE) (g) Prop. to I

130

, StatFlow (2D-DE) (h) Prop. to I

137

, StatFlow (2D-DE)

(i) Original image I

5036

(k) Prop. to I

5046

, MSF (2D-DE) (l) Prop. to I

5052

, MSF (2D-DE) (m) Prop. to I

5063

, MSF (2D-DE)

(j) Logo insertion in I

5036

(n) Prop. to I

5046

, StatFlow (2D-DE) (o) Prop. to I

5052

, StatFlow (2D-DE) (p) Prop. to I

5063

, StatFlow (2D-DE)

(q) Logo insertion in I

230

(r) Prop. to I

210

, StatFlow (2D-DE) (s) Prop. to I

196

, StatFlow (2D-DE) (t) Prop. to I

170

, StatFlow (2D-DE)

Figure 6: Texture/logo insertion in I

115

(resp. I

5036

and I

230

) and propagation along the MPI-S1 (resp. Hope and Newspaper)

sequence up to I

137

(resp. I

5063

and I

170

) using: 1) multi-step flow fusion (MSF) (Crivelli et al., 2012a) with multi-step optical

flow fields from (Robert et al., 2012) (2D-DE): MSF(2D-DE); 2) the proposed statistical multi-step flow (StatFlow) with

2D-DE multi-step optical flow fields: StatFlow (2D-DE).

DenseLong-termMotionEstimationviaStatisticalMulti-stepFlow

551

(a) Original image I

0

(d) Propagation to I

20

, LDOF acc (e) Propagation to I

25

, LDOF acc (f) Propagation to I

40

, LDOF acc

(b) Logo insertion in I

0

(g) Prop. to I

20

, MSF (2D-DE) (h) Prop. to I

25

, MSF (2D-DE) (i) Prop. to I

40

, MSF (2D-DE)

(c) Original image I

40

(j) Prop. to I

20

, StatFlow (2D-DE) (k) Prop. to I

25

, StatFlow (2D-DE) (l) Prop. to I

40

, StatFlow (2D-DE)

Figure 7: Texture insertion in I

0

and propagation up to I

40

(Walking Couple sequence). We compare: d-f) concatenation of

LDOF (Brox and Malik, 2011) optical flow fields computed between consecutive frames (LFOF acc); g-i) multi-step flow

fusion (MSF) (Crivelli et al., 2012a) using multi-step optical flow fields from (Robert et al., 2012) (2D-DE); j-l) the proposed

statistical multi-step flow (StatFlow) using 2D-DE multi-step optical flow fields.

Table 3: RMS endpoint errors for different methods on the

Flag benchmark dataset (Garg et al., 2013).

Method RMS endpoint error (pixels)

StatFlow (LDOF) 0.69

MSF (Crivelli et al., 2012a) (LDOF) 1.41

LDOF direct (Brox and Malik, 2011) 1.74

LDOF acc (Brox and Malik, 2011) 4

MFSF-PCA (Garg et al., 2013) 0.69

MFSF-DCT (Garg et al., 2013) 0.80

(Pizarro and Bartoli, 2012) direct 1.24

ITV-L1 direct (Wedel et al., 2009) 1.43

reaches the same RMS error with respect to MFSF-

PCA, the best one of the MFSF approaches, with 0.69.

This proves that StatFlow is competitive compared to

challenging state-of-the-art methods.

3.3 Texture Propagation and Tracking

We now aim at showing that our method provides satisfactory results in a wide set of complex scenes. Moreover, we focus on the comparison between StatFlow ($N_{it} = 9$) and MSF (Crivelli et al., 2012a) to show that StatFlow performs a more efficient integration and selection procedure than MSF using the same optical flows as inputs. Experiments have first been conducted in the context of video editing: we evaluate the accuracy of both methods by motion compensating in $I_n$, $\forall n$, textures/logos manually inserted in $I_{ref}$.

In Fig. 6 and 7, textures/logos have been respec-

tively inserted in I

115

of MPI S1, I

5036

of Hope, I

230

of Newspaper and I

0

of Walking Couple. To-the-

reference fields computedwith StatFlow (2D-DE) and

MSF (2D-DE) serve to propagate textures/logos up to

respectively I

137

, I

5063

, I

170

and I

40

. 2D-DE has been

chosen for its good results for video editing tasks. The

VISAPP2014-InternationalConferenceonComputerVisionTheoryandApplications

552

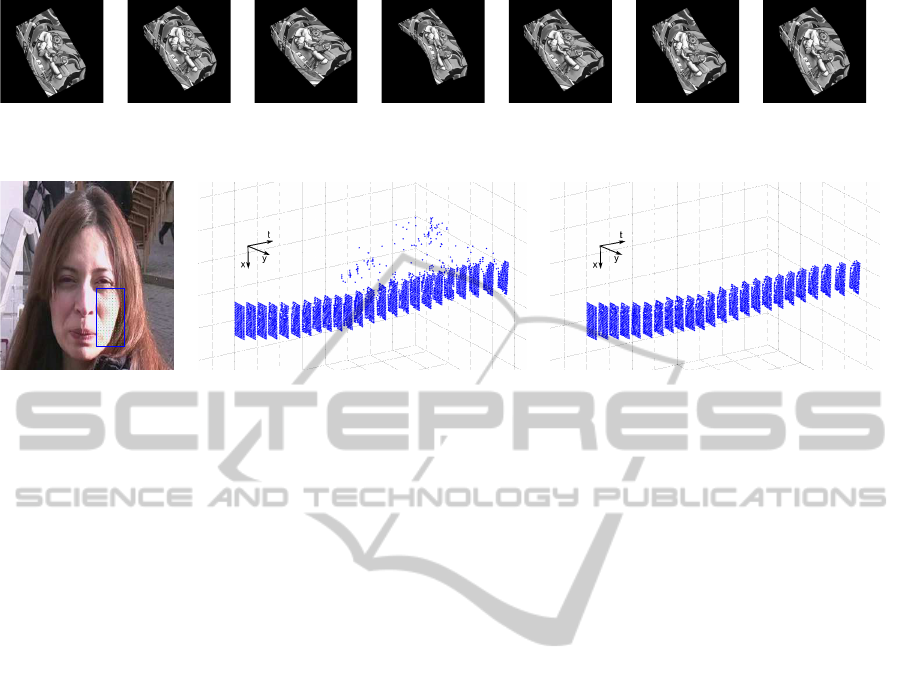

(a) I

1

(b) I

10

(c) I

20

(d) I

30

(e) I

40

(f) I

50

(g) I

60

Figure 8: Source frames of the Flag sequence (Garg et al., 2013).

(a) I

115

with tracking area (b) Point tracking from I

115

to I

138

, MSF (2D-DE) (c) Point tracking from I

115

to I

138

, StatFlow (2D-DE)

Figure 9: Point tracking from I

115

up to I

138

, MPI-S1 sequence (Granados et al., 2012). We compare: b) multi-step flow

fusion (MSF) (Crivelli et al., 2012a) using multi-step optical flow fields from (Robert et al., 2012) (2D-DE); c) the proposed

statistical multi-step flow (StatFlow) method using 2D-DE multi-step optical flow fields.

steps involved are: 1− 5, 8 (Hope), 10, 15 (except for

NP), 20 (Hope, NP), 30 (MPI S1, NP).
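To make the texture propagation step concrete, here is a minimal sketch of motion compensation with a to-the-reference field: each pixel of the target frame looks up its match in the reference frame and, if a texture was inserted there, takes its value. The function name `propagate_texture`, the grayscale `(H, W)` images, the alpha map and the nearest-neighbour sampling are assumptions made for this illustration; they are not the implementation used in the experiments.

```python
import numpy as np

def propagate_texture(texture_ref, alpha_ref, frame_n, flow_n_to_ref):
    """Composite a texture inserted in the reference frame onto frame n.

    flow_n_to_ref[y, x] = (dx, dy) maps pixel (x, y) of frame n to its match
    in the reference frame (a to-the-reference field). All images are (H, W).
    """
    h, w = frame_n.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    # Matched position in the reference frame, rounded to the nearest pixel.
    xr = np.rint(xs + flow_n_to_ref[..., 0]).astype(int)
    yr = np.rint(ys + flow_n_to_ref[..., 1]).astype(int)
    inside = (xr >= 0) & (xr < w) & (yr >= 0) & (yr < h)
    xr = np.clip(xr, 0, w - 1)
    yr = np.clip(yr, 0, h - 1)
    # No texture is drawn where the match falls outside the reference image.
    alpha = np.where(inside, alpha_ref[yr, xr], 0.0)
    warped = texture_ref[yr, xr]
    return alpha * warped + (1.0 - alpha) * frame_n

# Toy example: a one-pixel logo at (x=1, y=1) in the reference frame, and a
# scene that moved 2 pixels to the right, so frame n points back by (-2, 0).
texture = np.zeros((5, 5))
alpha = np.zeros((5, 5))
texture[1, 1] = 1.0
alpha[1, 1] = 1.0
frame = np.zeros((5, 5))
flow = np.zeros((5, 5, 2))
flow[..., 0] = -2.0
out = propagate_texture(texture, alpha, frame, flow)
print(out[1, 3])  # 1.0: the logo follows the scene to x=3
```

Sampling the reference frame from the grid of the target frame leaves no holes in the output, which is one reason to-the-reference fields suit this kind of editing task.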

Given these results, it appears that MSF sometimes distorts structures (bottom left zoom in Fig. 6c-e, Fig. 6l,m), makes shadow textures appear (bottom right zoom in Fig. 6c-e) and does not estimate motion accurately (top right zoom in Fig. 6e, Fig. 6l,m). Visual results with StatFlow reveal a better long-term propagation (see also Fig. 6r-t). Fig. 7 compares StatFlow (2D-DE) and MSF (2D-DE) with LDOF acc. We observe that LDOF acc estimates motion poorly for periodic structures. MSF also encounters matching issues (Fig. 7h), whereas StatFlow performs propagation without any visible artifacts.
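The accumulation baseline (LDOF acc) concatenates optical flows estimated between consecutive frames. The sketch below illustrates this kind of concatenation under simplifying assumptions: the function name `accumulate_flows` is hypothetical, fields are `(H, W, 2)` arrays of `(dx, dy)` vectors, and each elementary flow is read with nearest-neighbour sampling, whereas a practical implementation would typically interpolate.

```python
import numpy as np

def accumulate_flows(consecutive_flows):
    """Concatenate flows d_{n,n+1} (each (H, W, 2), (dx, dy)) into d_{0,N}."""
    h, w = consecutive_flows[0].shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    total = np.zeros((h, w, 2))
    for flow in consecutive_flows:
        # Current position of each pixel of frame 0, snapped to the grid
        # and clamped to the image borders.
        xc = np.clip(np.rint(xs + total[..., 0]).astype(int), 0, w - 1)
        yc = np.clip(np.rint(ys + total[..., 1]).astype(int), 0, h - 1)
        # Add the elementary step read at that position.
        total = total + flow[yc, xc]
    return total

# Toy example: three identical steps of one pixel to the right.
steps = [np.tile([1.0, 0.0], (4, 6, 1)) for _ in range(3)]
acc = accumulate_flows(steps)
print(acc[0, 0])  # [3. 0.]
```

An error in any elementary step is added to the total and also shifts the position at which every later step is read, so errors accumulate along the sequence.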

Finally, StatFlow and MSF are assessed through point tracking. In Fig. 9, the bottom right part of the woman's face is tracked from $I_{115}$ to $I_{138}$ (MPI-S1). The 2D+t visualization indicates that some trajectories drift to the background with MSF. This illustrates the inherent issue of MSF, which propagates estimation errors because of its sequential processing. Conversely, StatFlow provides accurate fields while limiting the temporal correlation between the displacement fields estimated for neighboring frames.
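As a rough illustration of point tracking with displacement fields estimated independently for each frame pair, the sketch below reads one trajectory sample per frame directly from the field linking the reference frame to that frame. The function name `track_points`, the integer `(x, y)` point format and the list of `(H, W, 2)` from-the-reference fields are assumptions of this example.

```python
import numpy as np

def track_points(points_ref, flows_from_ref):
    """Return (N, P, 2) positions of P reference points in N frames.

    points_ref: (P, 2) integer (x, y) coordinates in the reference frame.
    flows_from_ref: list of N fields, each (H, W, 2), where field n links
    the reference frame to frame n with (dx, dy) vectors.
    """
    pts = np.asarray(points_ref)
    x, y = pts[:, 0], pts[:, 1]
    # Each frame is handled on its own: position = start + displacement.
    return np.stack([pts + field[y, x] for field in flows_from_ref])

# Toy example: the scene shifts right by n pixels in frame n.
fields = [np.tile([float(n), 0.0], (4, 8, 1)) for n in (1, 2, 3)]
traj = track_points([(2, 1)], fields)
print(traj[:, 0])  # positions (3, 1), (4, 1), (5, 1)
```

In this setting a wrong vector in one field affects only the trajectory sample of that frame, in contrast with a sequential scheme where it would be carried over to later frames.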

4 CONCLUSIONS

We present statistical multi-step flow, a two-step framework which performs dense long-term motion estimation. Our method starts by generating initial dense correspondences with a focus on inconsistency reduction. For this task, we perform a combinatorial integration of consistent optical flows followed by an efficient statistical selection. This procedure is applied independently between a reference frame and each frame of the sequence. It guarantees a low temporal correlation between the correspondences estimated for each of these pairs. We then propose to enforce temporal smoothness through a new iterative motion refinement. It considers several motion candidates, including candidates from neighboring frames, and involves a new energy formulation with temporal smoothness constraints. Experiments evaluate the effectiveness of our approach compared to state-of-the-art methods through quantitative assessment using dense ground-truth data and qualitative assessment via texture propagation and tracking for a wide set of complex scenes.

REFERENCES

Brox, T. and Malik, J. (2010). Object segmentation by long term analysis of point trajectories. European Conference on Computer Vision, pages 282–295.

Brox, T. and Malik, J. (2011). Large displacement optical flow: descriptor matching in variational motion estimation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 33(3):500–513.

Conze, P.-H., Crivelli, T., Robert, P., and Morin, L. (2013). Dense motion estimation between distant frames: combinatorial multi-step integration and statistical selection. In IEEE International Conference on Image Processing.

Corpetti, T., Mémin, É., and Pérez, P. (2002). Dense estimation of fluid flows. IEEE Transactions on Pattern Analysis and Machine Intelligence, 24(3):365–380.

Crivelli, T., Conze, P.-H., Robert, P., Fradet, M., and Pérez, P. (2012a). Multi-Step Flow Fusion: Towards accurate and dense correspondences in long video shots. British Machine Vision Conference.

Crivelli, T., Conze, P.-H., Robert, P., and Pérez, P. (2012b). From optical flow to dense long term correspondences. In IEEE International Conference on Image Processing.

Garg, R., Roussos, A., and Agapito, L. (2013). A variational approach to video registration with subspace constraints. International Journal of Computer Vision.

Granados, M., Kim, K. I., Tompkin, J., Kautz, J., and Theobalt, C. (2012). MPI-S1. http://www.mpi-inf.mpg.de/ granados/projects/vidbginp/index.html.

Lempitsky, V., Rother, C., Roth, S., and Blake, A. (2010). Fusion moves for Markov random field optimization. IEEE Transactions on Pattern Analysis and Machine Intelligence, 32(8):1392–1405.

Papadakis, N., Corpetti, T., and Mémin, É. (2007). Dynamically consistent optical flow estimation. In IEEE International Conference on Computer Vision.

Pizarro, D. and Bartoli, A. (2012). Feature-based deformable surface detection with self-occlusion reasoning. International Journal of Computer Vision.

Robert, P., Thébault, C., Drazic, V., and Conze, P.-H. (2012). Disparity-compensated view synthesis for s3D content correction. In SPIE IS&T Electronic Imaging Stereoscopic Displays and Applications.

Salgado, A. and Sánchez, J. (2007). Temporal constraints in large optical flow estimation. In Computer Aided Systems Theory Eurocast, pages 709–716.

Sand, P. and Teller, S. J. (2008). Particle video: Long-range motion estimation using point trajectories. International Journal of Computer Vision, 80(1):72–91.

Sundaram, N., Brox, T., and Keutzer, K. (2010). Dense point trajectories by GPU-accelerated large displacement optical flow. European Conference on Computer Vision, pages 438–451.

Volz, S., Bruhn, A., Valgaerts, L., and Zimmer, H. (2011). Modeling temporal coherence for optical flow. In IEEE International Conference on Computer Vision.

Wedel, A., Pock, T., Zach, C., Bischof, H., and Cremers, D. (2009). An improved algorithm for TV-L1 optical flow. In Statistical and Geometrical Approaches to Visual Motion Analysis, pages 23–45. Springer.

Werlberger, M., Trobin, W., Pock, T., Wedel, A., Cremers, D., and Bischof, H. (2009). Anisotropic Huber-L1 optical flow. British Machine Vision Conference.
