A Novel Fusion Algorithm for Visible and Infrared Image using Non-subsampled Contourlet Transform and Pulse-coupled Neural Network

Chihiro Ikuta¹, Songjun Zhang², Yoko Uwate¹, Guoan Yang³ and Yoshifumi Nishio¹

¹ Department of Electrical and Electronic Engineering, Tokushima University, 2-1 Minami-Josanjima, Tokushima, Japan

² Department of Computing Mathematics, School of Science, Xi’an Jiaotong University, No.28 Xianning West Road, Xi’an City, Shaanxi Province, China

³ Department of Automation Science and Technology, School of Electronic and Information Engineering, Xi’an Jiaotong University, No.28 Xianning West Road, Xi’an City, Shaanxi Province, China

Keywords: Image Fusion, Visible Image, Infrared Image, Pulse Coupled Neural Network, Non-subsampled Contourlet Transform.

Abstract: Fusing visible and infrared images is an important task in computer vision applications such as multi-sensor systems. A visible image is clear to the naked eye but often suffers from noise, whereas an infrared image is less clear but is robust to noise. In this paper, we propose a novel image fusion algorithm for visible and infrared images using a non-subsampled contourlet transform (NSCT) and a pulse-coupled neural network (PCNN). First, we decompose the two original images into low and high frequency coefficients with the NSCT. Next, the low frequency coefficients of both images are duplicated at multiple scales and processed by a Laplacian filter and an average filter, respectively. The normalized coefficients are then fused by the PCNN. Finally, we reconstruct the fused image from the fused low and high frequency coefficients by using the inverse NSCT. Experimental results show that the proposed image fusion algorithm outperforms the conventional and state-of-the-art image fusion algorithms.

1 INTRODUCTION

Image fusion plays an important role in the computer vision and image processing fields. In recent years, many image fusion algorithms have been applied in computer vision, pattern recognition and image processing, for example to multi-focus and multi-sensor image fusion (Xu and Chen, 2004) (Wang et al., 2008) (Qu et al., 2008). In particular, the fusion of visible and infrared images is important for computer vision and image processing applications. The contourlet transform is a two-dimensional extension of the wavelet transform that uses multi-scale and directional filter banks (Yang et al., 2010). Building on it, the non-subsampled contourlet transform (NSCT) was developed by da Cunha, Zhou and Do (da Cunha et al., 2006). Unlike the contourlet transform, the NSCT is fully shift-invariant, which leads to better frequency selectivity, directionality and regularity (Zhou et al., 2005). On the other hand, a pulse-coupled neural network (PCNN) was presented by Eckhorn in 1990 (Eckhorn, 1990). This method was developed from experimental observations of synchronous pulse bursts in the cat cortex. It is characterized by the global coupling and pulse synchronization of neurons, and it performs very well in image edge detection applications. Recently, several image fusion algorithms based on the NSCT and the PCNN have been developed, for example the spatial frequency-motivated PCNN in the NSCT domain of Qu (Qu et al., 2008), the stationary wavelet-based NSCT and PCNN of Yang (Yang et al., 2009), the NSCT-PCNN of Ge for visible and infrared images (Ge and Li, 2010), and the simplified PCNN in the NSCT domain of Liu (Liu et al., 2012). These algorithms achieved better fusion performance in various image processing applications.

However, few studies have applied an NSCT-PCNN algorithm to visible and infrared images. Therefore, in this paper, we use the NSCT for multi-scale decomposition and the PCNN for image fusion, and we propose a novel image fusion algorithm for visible and infrared images based on the two. The quality of the fused image is improved by applying the PCNN to the low frequency coefficients in the NSCT domain. To this end, we apply two different filters, an average filter and a Laplacian filter, before fusing with the PCNN, and we demonstrate better fusion performance than with the PCNN only.

Ikuta C., Zhang S., Uwate Y., Yang G. and Nishio Y.
A Novel Fusion Algorithm for Visible and Infrared Image using Non-subsampled Contourlet Transform and Pulse-coupled Neural Network.
DOI: 10.5220/0004732601600164
In Proceedings of the 9th International Conference on Computer Vision Theory and Applications (VISAPP-2014), pages 160-164
ISBN: 978-989-758-003-1
Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

This paper is organized as follows. In Section 2, we describe an image fusion algorithm that uses the NSCT and the PCNN. In Section 3, we propose the new image fusion algorithm for visible and infrared images. In Section 4, we show the experimental results and compare them with the conventional image fusion method. Finally, in Section 5, we state the conclusions and future work.

2 IMAGE FUSION ALGORITHM

Generally, the image fusion algorithm mentioned above has three main steps. In the first step, the images are decomposed into coefficients by the NSCT decomposition algorithm. In the next step, the coefficients from the two images are compared and fused by the PCNN. Finally, the fused coefficients are reconstructed into one new image by the NSCT reconstruction algorithm.

2.1 NSCT

The NSCT is well suited to image fusion with the PCNN, because it involves no upsampling or downsampling (Zhou et al., 2005), so every coefficient band has the same size as the input image. The NSCT uses non-subsampled filter banks to implement a multi-scale decomposition and a directional decomposition, as shown in Fig. 1 (da Cunha et al., 2006). In the multi-scale decomposition, an image is decomposed into low frequency coefficients and high frequency coefficients, and this operation is repeated at different scales. After that, the coefficients in the high frequency bands are decomposed into directional components.
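The multi-scale stage described above can be illustrated with a minimal sketch. This is not the NSCT itself: it omits the directional filter bank, and the 3×3 box smoothing kernel and the names `smooth_atrous`, `decompose` and `reconstruct` are assumptions chosen for brevity. It only shows the non-subsampled idea, in which the filter taps are spread apart at each level instead of shrinking the image, so every band keeps the input size and the image is recovered by summing the bands.

```python
import numpy as np


def smooth_atrous(img, step):
    """3x3 box average whose taps are `step` pixels apart (no subsampling)."""
    padded = np.pad(img, step, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for di in (0, step, 2 * step):
        for dj in (0, step, 2 * step):
            out += padded[di:di + h, dj:dj + w]
    return out / 9.0


def decompose(img, levels):
    """Split an image into one low frequency band and `levels` high frequency bands."""
    low = img.astype(float)
    highs = []
    for j in range(levels):
        smoothed = smooth_atrous(low, 2 ** j)  # dilate the filter, not the image
        highs.append(low - smoothed)           # detail lost by smoothing at this scale
        low = smoothed
    return low, highs


def reconstruct(low, highs):
    """Invert `decompose` by adding all bands back together."""
    return low + sum(highs)


rng = np.random.default_rng(0)
image = rng.random((32, 32))
low, highs = decompose(image, levels=3)
restored = reconstruct(low, highs)
```

Because no band is resampled, a coefficient at position (i, j) in any band refers to the same pixel location, which is what allows a one-neuron-per-coefficient PCNN to compare bands from two images directly.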

2.2 PCNN

Eckhorn proposed a neural network model based on the cat’s visual cortex in 1990 (Eckhorn, 1990). The PCNN was proposed by Thomas in 1998 as an application of this mechanism (Lindblad and Kinser, 2005). This method is known to be applicable to image processing in general and to image fusion in particular (Johnson and Padgett, 1999).

Figure 1: The non-subsampled contourlet transform for the image decomposition (Image → Multi-scale Decomposition → Directional Decomposition).

When the PCNN is applied to image processing, each pixel of the image is input to one neuron. The receptive field of a neuron is composed of a feeding part and a linking part. The feeding part is described by Eq. (1).

$$F_{ij}^{lk}(n) = S_{ij}^{lk}, \tag{1}$$

where F is the output of the feeding part and S is the external stimulus. The linking part is described by Eq. (2).

$$L_{ij}^{lk}(n) = e^{-\alpha_L} L_{ij}^{lk}(n-1) + V_L \sum_{pq} W_{ij,pq}^{lk} Y_{ij,pq}^{lk}(n-1), \tag{2}$$

where L is the output of the linking part, V_L is a normalization coefficient and W is the weight of the connections to the neighboring neurons. The internal state is calculated from the outputs of the feeding and linking parts, as shown in Eq. (3).

$$U_{ij}^{lk}(n) = F_{ij}^{lk}(n)\left\{1 + \beta L_{ij}^{lk}(n)\right\}, \tag{3}$$

where U is the internal state. The output of the neuron is obtained by comparing the internal state with a threshold. The threshold is described by Eq. (4).

$$\theta_{ij}^{lk}(n) = e^{-\alpha_\theta} \theta_{ij}^{lk}(n-1) + V_\theta Y_{ij}^{lk}(n-1), \tag{4}$$

where θ is the threshold. In the PCNN, the activation function is a step function. The output of the neuron is described by Eq. (5).

$$Y_{ij}^{lk}(n) = \begin{cases} 1, & U_{ij}^{lk}(n) > \theta_{ij}^{lk}(n) \\ 0, & \text{otherwise.} \end{cases} \tag{5}$$
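As a rough illustration of the time scale set by Eqs. (4) and (5), consider a single neuron whose internal state $U$ is held constant (an assumption that ignores the linking input), with the values $\alpha_\theta = 0.2$ and $V_\theta = 20$ reported in Section 4. If the neuron fires at step $n_0$ and the small residual threshold is neglected, the threshold jumps to about $V_\theta$ and then decays geometrically:

$$\theta(n_0 + 1 + m) \approx V_\theta e^{-\alpha_\theta m}, \qquad V_\theta e^{-\alpha_\theta m} < U \iff m > \frac{1}{\alpha_\theta} \ln \frac{V_\theta}{U}.$$

For $U = 1$ this gives $m > 5 \ln 20 \approx 14.98$, so under these assumptions the neuron pulses again after roughly 15 iterations. A larger $U$ shortens this interval, which is why coefficients with stronger stimulus or stronger linking input fire more often.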

We choose these parameters heuristically. They are tuned so that the conventional method achieves its best performance. The model of the PCNN is shown in Fig. 2.
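Equations (1) to (5) can be sketched as the following update loop. It is a minimal illustration under stated assumptions rather than the authors' implementation: the default parameter values and the weight matrix are those reported in Section 4, while the initial state (L = 0, Y = 0, θ = 1), the zero padding at the image border, the non-negative stimulus scaled to [0, 1], and the choice to return the accumulated firing count are all assumptions. The names `neighbor_sum` and `pcnn_fire_counts` are hypothetical.

```python
import numpy as np

# Linking weights reported in Section 4 (roughly the inverse distance to each neighbor).
W = np.array([[0.707, 1.0, 0.707],
              [1.0,   0.0, 1.0],
              [0.707, 1.0, 0.707]])


def neighbor_sum(Y, weights):
    """Weighted sum of the 3x3 neighborhood outputs, zero-padded at the border."""
    padded = np.pad(Y, 1, mode="constant")
    h, w = Y.shape
    out = np.zeros((h, w), dtype=float)
    for di in range(3):
        for dj in range(3):
            out += weights[di, dj] * padded[di:di + h, dj:dj + w]
    return out


def pcnn_fire_counts(S, n_iter=200, alpha_L=1.0, alpha_theta=0.2,
                     beta=3.0, V_L=1.0, V_theta=20.0):
    """Run the PCNN on stimulus S and return how often each neuron fired."""
    S = np.asarray(S, dtype=float)
    L = np.zeros_like(S)
    Y = np.zeros_like(S)
    theta = np.ones_like(S)      # assumed initial threshold
    counts = np.zeros_like(S)
    for _ in range(n_iter):
        F = S                                                 # Eq. (1)
        L = np.exp(-alpha_L) * L + V_L * neighbor_sum(Y, W)   # Eq. (2), uses Y(n-1)
        U = F * (1.0 + beta * L)                              # Eq. (3)
        theta = np.exp(-alpha_theta) * theta + V_theta * Y    # Eq. (4), uses Y(n-1)
        Y = (U > theta).astype(float)                         # Eq. (5)
        counts += Y
    return counts


S = np.full((8, 8), 0.5)
counts = pcnn_fire_counts(S, n_iter=50)
```

One neuron is allocated per coefficient, so `counts` has the same shape as the stimulus. Accumulating the pulses over all iterations gives a single activity value per coefficient that can be compared between two images.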

Figure 2: Block diagram of a single neuron of the PCNN (linking part, feeding part and step function).

In the simulation, the decomposition coefficients of the two images are input to the PCNN. In image processing applications, each neuron receives one pixel of the coefficients, so the number of neurons depends on the image size. The PCNN is iterated a fixed number of times. After that, the two images processed by the PCNN are compared and fused by Eq. (6).

$$I_{ij}^{lk} = \begin{cases} A_{ij}^{lk}, & Y_{ij,A}^{lk} > Y_{ij,B}^{lk} \\ B_{ij}^{lk}, & \text{otherwise,} \end{cases} \tag{6}$$

where I is the fused image, A denotes the coefficients of image A, and B denotes the coefficients of image B.

3 PROPOSED IMAGE FUSION ALGORITHM

In this paper, we introduce a novel image fusion process for the low frequency coefficients. Generally, the high frequency region of an image contains the edge and texture information, whereas most of the image energy of natural scenes is concentrated in the low frequency region. Hence, we consider that the quality of an image can be improved by tuning its low frequency components.

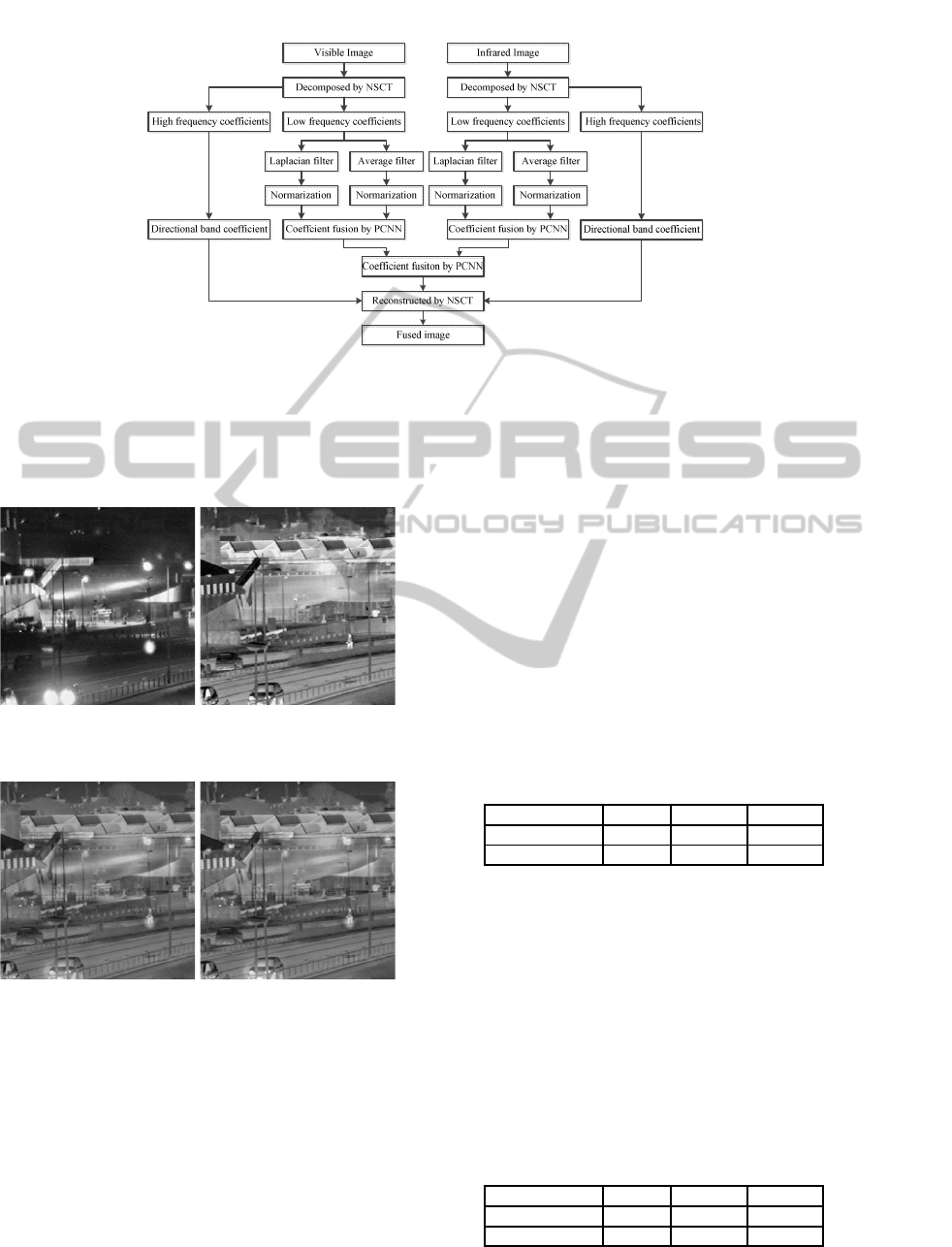

The proposed image fusion algorithm is illustrated in Fig. 3. First, we decompose the two images into low frequency coefficients and high frequency coefficients by the NSCT. The low frequency coefficients of both images are then duplicated at multiple scales. One copy is filtered by the Laplacian filter given in Eq. (7); the Laplacian filter is an edge detection operator and enhances the edges of an image. The other copy is filtered by the average filter given in Eq. (8), which smooths the low frequency coefficients. The two filtered coefficients are normalized and then fused, which increases the intensity of the image in the low frequency domain.

$$\text{Laplacian filter} = \begin{bmatrix} -1 & -1 & -1 \\ -1 & 9 & -1 \\ -1 & -1 & -1 \end{bmatrix} \tag{7}$$

$$\text{Average filter} = \begin{bmatrix} 0.11 & 0.11 & 0.11 \\ 0.11 & 0.12 & 0.11 \\ 0.11 & 0.11 & 0.11 \end{bmatrix} \tag{8}$$
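The effect of the two kernels in Eqs. (7) and (8) can be checked on a small step edge. The sketch below is illustrative only: the edge-replicating border handling and the min-max form of the normalization are assumptions (the text does not specify either), and the names `filter3x3` and `normalize` are hypothetical. Both kernels sum to 1, so flat regions pass through unchanged; the Laplacian kernel overshoots on both sides of an edge, while the average kernel blurs it.

```python
import numpy as np

LAPLACIAN = np.array([[-1.0, -1.0, -1.0],
                      [-1.0,  9.0, -1.0],
                      [-1.0, -1.0, -1.0]])   # Eq. (7)
AVERAGE = np.array([[0.11, 0.11, 0.11],
                    [0.11, 0.12, 0.11],
                    [0.11, 0.11, 0.11]])     # Eq. (8)


def filter3x3(img, kernel):
    """Apply a 3x3 kernel with edge-replicated borders (assumed border rule)."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for di in range(3):
        for dj in range(3):
            out += kernel[di, dj] * padded[di:di + h, dj:dj + w]
    return out


def normalize(x):
    """Min-max scaling to [0, 1] (assumed form of the normalization step)."""
    span = x.max() - x.min()
    if span == 0:
        return np.zeros_like(x, dtype=float)
    return (x - x.min()) / span


# Vertical step edge: columns 0-2 are 0, columns 3-5 are 1.
step = np.zeros((6, 6))
step[:, 3:] = 1.0
sharp = filter3x3(step, LAPLACIAN)
smooth = filter3x3(step, AVERAGE)
sharp_n = normalize(sharp)
smooth_n = normalize(smooth)
```

On this input the Laplacian-filtered values next to the edge move outside the original [0, 1] range, which is the edge enhancement the text describes, and the normalization brings both filtered copies back to a common range before they are passed to the PCNN.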

After filtering, we normalize the two sets of coefficients and fuse them with the PCNN image fusion algorithm. This is done separately for image A and image B, and the two resulting fused coefficients are then fused by using Eq. (6). As shown in Fig. 3, the images are decomposed into five levels by the pyramidal filter bank of the NSCT, which generates coefficients in five different frequency bands. The filtering is applied mainly to the low frequency bands, namely the two lowest frequency levels. This completes the first fusion, which is carried out for the visible image and the infrared image separately. Finally, the fused low frequency coefficients of the two images are fused by the PCNN, and the high frequency coefficients are further decomposed into directional band coefficients, which are also fused by the PCNN. We call this the second fusion of the visible and infrared images. We then reconstruct the fused visible and infrared image from all the low and high frequency coefficients by the inverse NSCT. We found that the quality of the fused image is improved by filtering the low frequency coefficients, because the Laplacian filter and the average filter increase the intensity of the image in the low frequency band.
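The two-stage fusion of the low frequency coefficients described above can be sketched as follows. This is one reading of the text under stated assumptions, not the authors' code: the first fusion combines the Laplacian-filtered and average-filtered copies within each image, and the second fusion combines the two results across images. The min-max normalization, the initial PCNN state, the use of accumulated firing counts as the quantity compared in Eq. (6), and all function names are assumptions; the NSCT decomposition itself is replaced here by random arrays standing in for low frequency bands.

```python
import numpy as np

LAPLACIAN = np.array([[-1.0, -1.0, -1.0], [-1.0, 9.0, -1.0], [-1.0, -1.0, -1.0]])  # Eq. (7)
AVERAGE = np.array([[0.11, 0.11, 0.11], [0.11, 0.12, 0.11], [0.11, 0.11, 0.11]])   # Eq. (8)
W = np.array([[0.707, 1.0, 0.707], [1.0, 0.0, 1.0], [0.707, 1.0, 0.707]])          # Section 4


def filter3x3(img, kernel, mode="edge"):
    """Apply a 3x3 kernel; `mode` selects the (assumed) border handling."""
    padded = np.pad(img, 1, mode=mode)
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for di in range(3):
        for dj in range(3):
            out += kernel[di, dj] * padded[di:di + h, dj:dj + w]
    return out


def normalize(x):
    """Min-max scaling to [0, 1] (assumed form of the normalization)."""
    span = x.max() - x.min()
    return (x - x.min()) / span if span > 0 else np.zeros_like(x, dtype=float)


def fire_counts(S, n_iter=200):
    """PCNN of Eqs. (1)-(5) with the Section 4 parameters; returns firing counts."""
    L, Y, counts = np.zeros_like(S), np.zeros_like(S), np.zeros_like(S)
    theta = np.ones_like(S)  # assumed initial threshold
    for _ in range(n_iter):
        L = np.exp(-1.0) * L + 1.0 * filter3x3(Y, W, mode="constant")  # Eq. (2)
        U = S * (1.0 + 3.0 * L)                                        # Eqs. (1), (3)
        theta = np.exp(-0.2) * theta + 20.0 * Y                        # Eq. (4)
        Y = (U > theta).astype(float)                                  # Eq. (5)
        counts += Y
    return counts


def pcnn_fuse(a, b, n_iter=200):
    """Eq. (6) with firing counts as the activity measure (an assumption)."""
    return np.where(fire_counts(a, n_iter) > fire_counts(b, n_iter), a, b)


def fuse_low(low_vis, low_ir, n_iter=200):
    """First fusion inside each image, then second fusion across the two images."""
    firsts = []
    for low in (low_vis, low_ir):
        sharp = normalize(filter3x3(low, LAPLACIAN))
        smooth = normalize(filter3x3(low, AVERAGE))
        firsts.append(pcnn_fuse(sharp, smooth, n_iter))
    return pcnn_fuse(firsts[0], firsts[1], n_iter)


rng = np.random.default_rng(0)
low_vis = rng.random((16, 16))   # stand-in for a visible low frequency band
low_ir = rng.random((16, 16))    # stand-in for an infrared low frequency band
fused_low = fuse_low(low_vis, low_ir, n_iter=50)
```

Every value in `fused_low` is copied from one of the four normalized candidates, so the result stays in [0, 1] and has the same size as the input bands.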

4 SIMULATIONS

In this section, we present computer simulations of the proposed image fusion algorithm. We use two image sets and investigate the image fusion performance. Furthermore, we analyze the fusion quality of the proposed algorithm, in which both the low frequency coefficients and the high frequency coefficients are passed to the PCNN. The parameters of the PCNN are set as $p \times q = 3 \times 3$, $\alpha_L = 1.0$, $\alpha_\theta = 0.2$, $\beta = 3$, $V_L = 1.0$, $V_\theta = 20$,

$$W = \begin{bmatrix} 0.707 & 1 & 0.707 \\ 1 & 0 & 1 \\ 0.707 & 1 & 0.707 \end{bmatrix},$$

and the maximal iteration number is $n = 200$. We adopt three measures for evaluating image fusion quality: the Mutual Information (MI), the entropy, and the Standard Deviation (St. Dev.). The MI indicates how much of the information in the two original images is retained in the fused image. The entropy indicates the average information carried by the fused image. The St. Dev. describes the spread of the statistical distribution of the fused image.

Figure 3: The proposed image fusion algorithm.

In the first simulation, we use the original images in Fig. 4, and the fused images are shown in Fig. 5. Figure 5 shows that the original images are fused well and that the visual quality of the fused images is as good as that of the original images.
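The three evaluation measures can be computed from gray-level histograms. The sketch below is a minimal illustration, not the authors' evaluation code: it assumes 8-bit gray levels with 256 histogram bins, base-2 logarithms, and the common convention of reporting the fusion MI as the sum of the MI between the fused image and each source image, none of which is specified in the text. The function names are hypothetical.

```python
import numpy as np


def entropy(img, bins=256):
    """Shannon entropy (bits) of the gray-level histogram."""
    hist, _ = np.histogram(img, bins=bins, range=(0, 256))
    p = hist[hist > 0] / hist.sum()
    return float(-np.sum(p * np.log2(p)))


def mutual_information(x, y, bins=256):
    """Mutual information (bits) estimated from the joint gray-level histogram."""
    joint, _, _ = np.histogram2d(x.ravel(), y.ravel(), bins=bins,
                                 range=[[0, 256], [0, 256]])
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)   # marginal of x, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)   # marginal of y, shape (1, bins)
    nz = pxy > 0
    return float(np.sum(pxy[nz] * np.log2(pxy[nz] / (px @ py)[nz])))


def fusion_mi(fused, src_a, src_b):
    """Assumed convention: MI(fused, A) + MI(fused, B)."""
    return mutual_information(fused, src_a) + mutual_information(fused, src_b)


# Toy check: a two-level image carries exactly one bit per pixel.
two_level = np.array([[0.0, 0.0], [255.0, 255.0]])
other = np.array([[0.0, 255.0], [0.0, 255.0]])
h = entropy(two_level)
sd = float(two_level.std())
mi_self = mutual_information(two_level, two_level)
mi_indep = mutual_information(two_level, other)
```

In the toy check, an image compared with itself has MI equal to its entropy, while two statistically independent patterns have MI of zero, which matches the reading of MI as the amount of source information retained in the fused image.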

(a) Visible image. (b) Infrared image.

Figure 4: Target images for image fusion.

(a) PCNN with NSCT. (b) Proposed algorithm.

Figure 5: Fused image by different methods.

Table 1 shows the experimental results and compares the fusion performance for the images in Fig. 4. These results show that the proposed image fusion algorithm yields a better fused image than the PCNN alone. This means that the characteristics of the original images are retained even though they are processed by the two filters, namely the Laplacian and average filters. Because the two filters improve the localization and edge enhancement of the original low frequency coefficients, the mutual information, entropy and standard deviation all improve. In particular, the proposed method achieves a high MI and a high standard deviation of the fused image. In the proposed model, the coefficients are preprocessed by the filters, so the original image is altered; nevertheless, the proposed method obtains a high MI. The standard deviation generally reflects the edge performance. The PCNN responds to edges, and the proposed method enhances the edges of the original image coefficients with the Laplacian filter. In the conventional method, the fused image is made directly from the original images, so it has only the characteristics of the original images.

Table 1: Fusion performance index of different methods.

| Method | MI | Entropy | St. Dev. |
|---|---|---|---|
| PCNN+NSCT | 2.4956 | 7.0300 | 32.713 |
| Proposed | 2.8358 | 7.1275 | 34.865 |

In the next simulation, we use the test images shown in Fig. 6 and obtain the fused images shown in Fig. 7. Figure 7 shows that the original images are fused well and that the visual quality of the fused images is as good as that of the original images.

Table 2 shows that the proposed image fusion algorithm yields a better fused image than image fusion with the PCNN alone. The mutual information and the standard deviation are both improved. This trend is similar to that in Table 1. We can therefore say that the proposed method has better image fusion performance than the conventional method.

Table 2: Fusion performance index of different methods.

| Method | MI | Entropy | St. Dev. |
|---|---|---|---|
| PCNN+NSCT | 5.5801 | 7.4862 | 53.454 |
| Proposed | 6.0821 | 7.5491 | 54.669 |
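To put the differences in Tables 1 and 2 on a common scale, the relative gain of the proposed method over PCNN+NSCT can be computed directly from the reported values. The short script below only restates the tabulated numbers; the helper name `relative_gain` is hypothetical. It gives gains of roughly 13.6%, 1.4% and 6.6% in MI, entropy and standard deviation for Table 1, and roughly 9.0%, 0.8% and 2.3% for Table 2.

```python
def relative_gain(baseline, proposed):
    """Percentage improvement of `proposed` over `baseline`."""
    return 100.0 * (proposed - baseline) / baseline


# (PCNN+NSCT, Proposed) pairs copied from Tables 1 and 2.
tables = {
    "Table 1": {"MI": (2.4956, 2.8358), "Entropy": (7.0300, 7.1275), "St. Dev.": (32.713, 34.865)},
    "Table 2": {"MI": (5.5801, 6.0821), "Entropy": (7.4862, 7.5491), "St. Dev.": (53.454, 54.669)},
}

gains = {
    name: {metric: relative_gain(base, prop) for metric, (base, prop) in rows.items()}
    for name, rows in tables.items()
}

for name, row in gains.items():
    for metric, gain in row.items():
        print(f"{name} {metric}: {gain:+.2f}%")
```

In both image sets the largest relative gain is in MI and the smallest is in entropy, consistent with the observation in the text that MI and standard deviation improve the most.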


(a) Visible image. (b) Infrared image.

Figure 6: Target images for image fusion.

(a) PCNN with NSCT. (b) Proposed algorithm.

Figure 7: Fused image by different methods.

5 CONCLUSIONS

In this paper, we have proposed a novel image fusion algorithm for visible and infrared images using the NSCT and the PCNN. The low frequency coefficients obtained by the NSCT decomposition are filtered by the Laplacian filter and the average filter, and the filtered coefficients are then fused by the PCNN. Finally, the fused image is reconstructed from the low frequency and high frequency coefficients by the inverse NSCT. The experimental results show that the proposed image fusion algorithm yields better fused image quality than image fusion using the PCNN only.

REFERENCES

da Cunha, A., Zhou, J., and Do, M. (2006). The nonsubsampled contourlet transform: Theory, design, and applications. IEEE Transactions on Image Processing, 15(10):3089–3101.

Eckhorn, R. (1990). Feature linking via synchronization among distributed assemblies: Simulations of results from cat visual cortex. Neural Computation, 2:293–307.

Ge, Y. and Li, X. (2010). Image fusion algorithm based on pulse coupled neural networks and nonsubsampled contourlet transform. Proc. Second International Workshop on Education Technology and Computer Science, 3:27–30.

Johnson, J. and Padgett, M. (1999). PCNN models and applications. IEEE Transactions on Neural Networks, 10(3):480–498.

Lindblad, T. and Kinser, J. (2005). Image Processing using Pulse-Coupled Neural Networks. Springer.

Liu, F., Li, J., and Huang, C. (2012). Image fusion algorithm based on simplified PCNN in nonsubsampled contourlet transform domain. International Workshop on Information and Electronics Engineering, 29:1434–1438.

Qu, X., Yan, J., Xiao, H., and Zhu, Z. (2008). Image fusion algorithm based on spatial frequency-motivated pulse coupled neural networks in nonsubsampled contourlet transform domain. Acta Automatica Sinica, 34(12):1508–1514.

Wang, M., Peng, D., and Yang, S. (2008). Fusion of multi-band SAR images based on nonsubsampled contourlet and PCNN. Proc. 4th International Conference on Natural Computation, pages 529–533.

Xu, B. and Chen, Z. (2004). A multisensor image fusion algorithm based on PCNN. Proc. 5th World Congress on Intelligent Control and Automation, pages 3679–3682.

Yang, G., Wetering, H. V. D., Hou, M., Ikuta, C., and Liu, Y. (2010). A novel design approach for contourlet filter banks. IEICE Transactions on Information and Systems, E93-D(7):2009–2011.

Yang, S., M. Wang, Y. L., Qi, W., and Jiao, L. (2009). Fusion of multiparametric SAR images based on SW-nonsubsampled contourlet and PCNN. Signal Processing, 89(12):2596–2608.

Zhou, J., da Cunha, A., and Do, M. (2005). Nonsubsampled contourlet transform: Construction and application in enhancement. Proc. International Conference on Image Processing, pages 469–472.
