Ego-motion Recovery and Robust Tilt Estimation for Planar Motion using Several Homographies

Mårten Wadenbäck and Anders Heyden

Centre for Mathematical Sciences, Lund University, Lund, Sweden

Keywords:

SLAM, Homography, Robotic Navigation, Planar Motion, Tilt Estimation.

Abstract:

In this paper we suggest an improvement to a recent algorithm for estimating the pose and ego-motion of a camera which is constrained to planar motion at a constant height above the floor, with a constant tilt. Such motion is common in robotics applications where a camera is mounted onto a mobile platform and directed towards the floor. Due to the planar nature of the scene, images taken with such a camera will be related by a planar homography, which may be used to extract the ego-motion and camera pose. Earlier algorithms for this particular kind of motion were not concerned with determining the tilt of the camera, focusing instead on recovering only the motion. Estimating the tilt is a necessary step in order to create a rectified map for a SLAM system. Our contribution extends the aforementioned recent method, and we demonstrate that our enhanced algorithm gives more accurate estimates of the motion parameters.

1 INTRODUCTION

One of the long-standing aims in robotics research is the development of algorithms for autonomous navigation. A popular class of such algorithms is concerned with so-called Simultaneous Localisation and Mapping (SLAM), in which a mobile platform, equipped with an array of suitable sensors (laser scanners, cameras, odometers, sonar, ...), explores and maps the surrounding environment while keeping track of its own location with respect to the map. The map created in the process should mark notable objects and landmarks in a way which allows for reliable re-identification. The type of map that can be created is highly dependent on the kinds of sensors employed and on the environment being mapped, and can range from sparsely placed points to dense and detailed textured 3D models.

Using cameras to build the map is becoming increasingly attractive, since cameras are cheap compared to many of the other sensors, and since the traditional obstacle of high computational cost becomes less limiting as computational power increases. Another advantage of using cameras is that they allow the use of the increasingly sophisticated methods and the extensive experience that the computer vision community has built up over the past few decades. Indeed, scene reconstruction from images is a classical and continually studied problem in computer vision, and various methods have been proposed both for general cases and for specialised applications.

Many of the successful general reconstruction techniques are based on epipolar geometry, and in particular on the fundamental matrix, which was introduced independently in (Faugeras, 1992) and (Hartley, 1992). Such methods make the implicit assumption that the data are not positioned in one of the so-called critical configurations, and in many practical cases such degeneracies are indeed very unlikely to occur. However, one of the more likely critical configurations occurs when the data points are coplanar; indeed, the navigation application that we describe in this paper requires the data points to lie in a plane. Since planar structures are very common in man-made environments, this is an area in which specialised algorithms that avoid this degeneracy can offer great advantages.

While invariant local features, for instance SIFT (Lowe, 2004) and similar features, are standard in Structure from Motion (SfM), their use in camera-based SLAM has been less prevalent. One of the main reasons for this is probably, as observed in (Davison et al., 2007), that although such features allow for accurate and robust re-identification, their computational cost has traditionally been prohibitive for real-time applications. Although this is essentially still a valid point, particularly on embedded systems or with high-resolution images, computational power continues to improve. In our view, it is only a matter of time before feature-based approaches become feasible for real-time operation.

Wadenbäck M. and Heyden A.

Ego-motion Recovery and Robust Tilt Estimation for Planar Motion using Several Homographies.

DOI: 10.5220/0004744706350639

In Proceedings of the 9th International Conference on Computer Vision Theory and Applications (VISAPP-2014), pages 635-639

ISBN: 978-989-758-009-3

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

2 RELATED WORK

A robot mapping application not only requires an incremental reconstruction, as data become available sequentially, but, in contrast to Structure from Motion approaches such as the popular Bundler system described in (Snavely et al., 2008), the views are also added in a more or less predetermined order. Although the views are added to the reconstruction in a fixed order, some SLAM approaches allow the robot path itself to be planned so that the images are taken from locations that improve the reconstruction (Haner and Heyden, 2011); in this paper, however, we consider the path to be decided in advance. Some very early work which respects the restriction on the order of views is (Harris and Pike, 1988), in which a Kalman filter was used to estimate the camera position based on inter-image point correspondences throughout a short image sequence. Probabilistic approaches based on extended Kalman filters (EKF) remain popular in later systems such as the vSLAM system (Karlsson et al., 2005) and the MonoSLAM system (Davison et al., 2007).

The systems mentioned above allow general 3D camera motion, but this is not always necessary or even desirable. A camera that has been mounted onto a mobile platform will typically perform two-dimensional motion, since it remains at a fixed height above the ground, and with this knowledge one can eliminate some of the uncertainty that general 3D motion allows. Our work continues in the spirit of (Liang and Pears, 2002), (Hajjdiab and Laganière, 2004) and others, in that we intend to navigate using images of the floor. Since the scene is planar, the images will be related by planar homographies.

Liang and Pears find the robot rotation angle $\phi$ by noting that the eigenvalues of the inter-image homography are (up to scale) $1$ and $e^{\pm i\phi}$, and they derive an expression for the translation from the eigenvectors. One drawback of this method is that it does not determine the tilt. Determining the tilt allows a rectified map to be created, and is therefore highly desirable.
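The eigenvalue observation can be checked numerically. The sketch below is our own illustration, not Liang and Pears' implementation: it assumes NumPy, builds a homography $H = \lambda R_{\psi\theta} R_\phi T R_{\psi\theta}^T$ with hypothetical helper functions, and assumes that $R_\psi$ and $R_\theta$ are rotations about the $x$- and $y$-axes respectively. Since the tilt only acts by similarity, the eigenvalues of $H$ are $\lambda$ and $\lambda e^{\pm i\phi}$; because they form a conjugate pair, only $|\phi|$ can be read off this way.

```python
import numpy as np


def rot_x(a):  # assumed form of R_psi
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):  # assumed form of R_theta
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):  # R_phi, rotation about the floor normal
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def planar_homography(psi, theta, phi, tx, ty, lam=1.0):
    """Hypothetical helper: H = lam * R_psitheta R_phi T R_psitheta^T."""
    tilt = rot_x(psi) @ rot_y(theta)
    T = np.array([[1.0, 0.0, -tx], [0.0, 1.0, -ty], [0.0, 0.0, 1.0]])
    return lam * tilt @ rot_z(phi) @ T @ tilt.T


def rotation_angle_from_eigenvalues(H):
    """Return |phi| from the eigenvalues {lam, lam*e^{i phi}, lam*e^{-i phi}} of H."""
    eig = np.linalg.eigvals(H)
    k = np.argmin(np.abs(eig.imag))      # the real eigenvalue equals the scale lam
    pair = np.delete(eig, k) / eig[k].real
    return abs(np.angle(pair[0]))        # the sign of phi is not recoverable here


H = planar_homography(psi=-0.03, theta=0.09, phi=0.5, tx=0.1, ty=-0.2, lam=2.0)
print(rotation_angle_from_eigenvalues(H))  # close to 0.5
```

Dividing by the real eigenvalue removes the unknown scale, including its sign, so the sketch also works for $\lambda < 0$.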

A more recent method described in (Wadenbäck and Heyden, 2013) starts by estimating the tilt $R_{\psi\theta}$, and then performs a QR decomposition of $R_{\psi\theta}^T H R_{\psi\theta}$ to determine $\phi$ and the translation $(t_x, t_y)$.
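A minimal NumPy sketch of this second step, assuming the tilt is already known exactly. It is our illustration rather than the authors' code; the helper names and the choice of $x$- and $y$-axes for $R_\psi$ and $R_\theta$ are assumptions. Since $R_{\psi\theta}^T H R_{\psi\theta} = \lambda R_\phi T$ is an orthogonal matrix times an upper-triangular matrix, a QR decomposition separates the two factors once the sign ambiguity of QR (and of $\lambda$) has been fixed.

```python
import numpy as np


def rot_x(a):  # assumed form of R_psi
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):  # assumed form of R_theta
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):  # R_phi
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def motion_from_tilt(H, tilt):
    """Recover (phi, tx, ty) from H, given the tilt rotation R_psitheta."""
    G = tilt.T @ H @ tilt                  # equals lam * R_phi @ T
    Q, U = np.linalg.qr(G)                 # G = Q @ U with U upper triangular
    D = np.diag(np.sign(np.diag(U)))       # QR is only unique up to column signs
    Q, U = Q @ D, D @ U                    # now diag(U) > 0
    R_phi = Q * np.sign(Q[2, 2])           # Q = sign(lam) * R_phi, and R_phi[2, 2] = 1
    T = U / U[2, 2]                        # T has a unit diagonal
    phi = np.arctan2(R_phi[1, 0], R_phi[0, 0])
    return phi, -T[0, 2], -T[1, 2]


# Usage with a synthetic homography (hypothetical values, lam < 0 on purpose).
tilt = rot_x(-0.03) @ rot_y(0.09)
T = np.array([[1.0, 0.0, -0.12], [0.0, 1.0, 0.25], [0.0, 0.0, 1.0]])
H = -1.8 * tilt @ rot_z(0.7) @ T @ tilt.T
print(motion_from_tilt(H, tilt))  # approximately (0.7, 0.12, -0.25)
```

In this sketch any error in the tilt estimate propagates directly into $\phi$ and $(t_x, t_y)$, which is why an accurate tilt matters.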

In this paper we show how to extend this estimation algorithm to use more than one homography when estimating the tilt. This improves robustness to noise and to erroneous measurements.

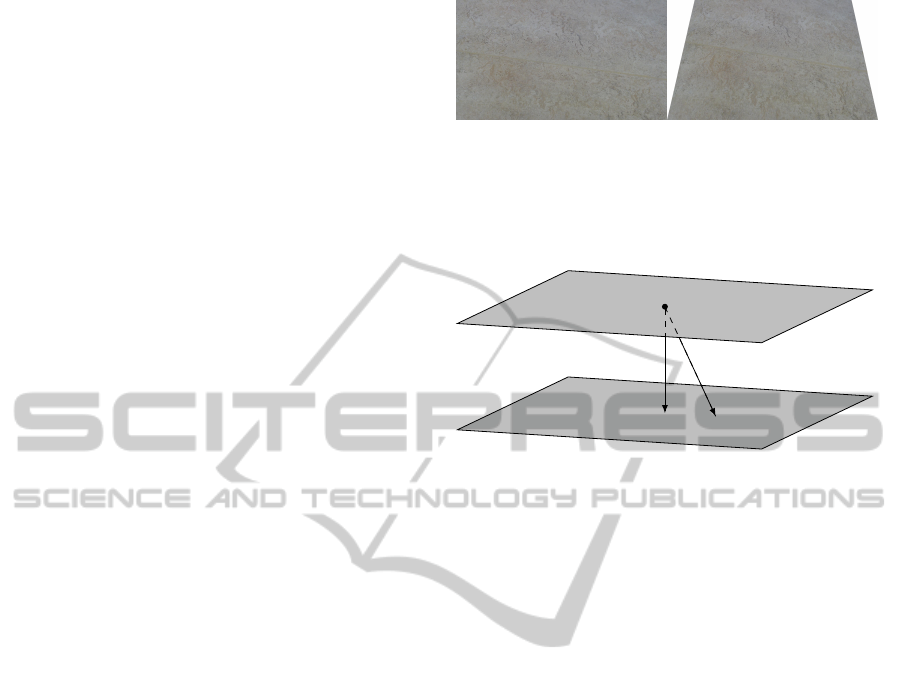

(a) Original image. (b) Rectified image.

Figure 1: A typical image taken by a camera under the conditions described in this paper is shown in Figure 1(a). A rectified version, as if seen straight from above, is shown in Figure 1(b). Rectifying such images requires an estimate of the camera tilt.

[Figure 2 diagram: the camera centre lies in the plane $z = 0$ and the floor plane is $z = 1$; the plane normal and the camera vector are indicated.]

Figure 2: The camera moves freely in the plane $z = 0$ and can rotate about the normal of the plane, but the angle to the plane normal (tilt) is held constant.

3 PROBLEM GEOMETRY

We shall consider the navigation of a mobile platform equipped with a single camera that has been mounted rigidly onto the platform and directed towards the floor. This setup means that the camera will move at a constant height in a plane parallel to the floor, and will have a constant angle to the plane normal (tilt). Figure 1 shows a typical image from one of our datasets, taken under the conditions described here. Figure 2 illustrates the geometry. We further assume zero skew and square pixels, and that the camera parameters remain constant during the motion (no zooming or refocusing). It will be convenient to work with a global coordinate system in which the camera moves in the plane $z = 0$ and the ground plane is represented by the plane $z = 1$.

As already noted, two images will be related by a planar homography $H$. We model the camera motion by a translation $t = (t_x, t_y)$ and a rotation $R_\phi$ by an angle $\phi$ about the normal of the floor plane (the $z$-axis). Using homogeneous coordinates in the plane, the motion of the camera is represented by the transformation $R_\phi T$, with

$$T = \begin{pmatrix} 1 & 0 & -t_x \\ 0 & 1 & -t_y \\ 0 & 0 & 1 \end{pmatrix}. \tag{1}$$

If the camera is tilted, the camera coordinate system and the world coordinate system are related by a rotation $R_{\psi\theta} = R_\psi R_\theta$. This means that the inter-image homographies will be of the form

$$H = \lambda R_{\psi\theta} R_\phi T R_{\psi\theta}^T, \tag{2}$$

where $\lambda \neq 0$ is an unknown scale parameter.
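The model in (1) and (2) translates directly into code, which is convenient for generating synthetic test data. In this NumPy sketch the function names are hypothetical, and since the text does not state the rotation axes, we assume that $R_\psi$ is a rotation about the $x$-axis and $R_\theta$ a rotation about the $y$-axis; this choice appears consistent with the matrices used for parameter recovery in the next section.

```python
import numpy as np


def rot_x(a):  # assumed form of R_psi
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):  # assumed form of R_theta
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):  # R_phi, rotation about the floor normal (the z-axis)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def translation(tx, ty):  # T from equation (1)
    return np.array([[1.0, 0.0, -tx], [0.0, 1.0, -ty], [0.0, 0.0, 1.0]])


def planar_homography(psi, theta, phi, tx, ty, lam=1.0):
    """H = lam * R_psitheta @ R_phi @ T @ R_psitheta^T, as in equation (2)."""
    tilt = rot_x(psi) @ rot_y(theta)  # R_psitheta = R_psi R_theta
    return lam * tilt @ rot_z(phi) @ translation(tx, ty) @ tilt.T


H = planar_homography(psi=np.radians(-1.5), theta=np.radians(5.3),
                      phi=np.radians(30.0), tx=0.1, ty=0.05, lam=0.8)
# Every factor except lam has determinant 1, so det(H) = lam**3.
print(np.linalg.det(H))  # approximately 0.512
```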

Estimating the homographies from the images can be done using point correspondences and a robust method such as RANSAC. This is not the focus of our work, and we will henceforth assume that well-estimated homographies are available, without concerning ourselves with how they were obtained.

4 PARAMETER RECOVERY

Suppose we have a number of homographies of the form in (2), that is,

$$H_j = \lambda_j R_{\psi\theta} R_{\phi_j} T_j R_{\psi\theta}^T, \quad j = 1, \ldots, N, \tag{3}$$

and that we want to recover the motion parameters. As observed in (Wadenbäck and Heyden, 2013), the products

$$M_j = \begin{pmatrix} m^j_{11} & m^j_{12} & m^j_{13} \\ m^j_{12} & m^j_{22} & m^j_{23} \\ m^j_{13} & m^j_{23} & m^j_{33} \end{pmatrix} = H_j^T H_j \tag{4}$$

are all independent of $\phi_j$.
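The independence of $\phi_j$ follows in one line from (3), using only $R^T R = I$ for the rotation matrices; expanding $T_j^T T_j$ (with $t_x, t_y$ the translation of the $j$-th motion) also shows the structure of $M_j$ that remains once the tilt is undone:

```latex
\begin{aligned}
M_j = H_j^T H_j
  &= \lambda_j^2\, R_{\psi\theta} T_j^T R_{\phi_j}^T R_{\psi\theta}^T
     R_{\psi\theta} R_{\phi_j} T_j R_{\psi\theta}^T
   = \lambda_j^2\, R_{\psi\theta}\, T_j^T T_j\, R_{\psi\theta}^T, \\
T_j^T T_j &= \begin{pmatrix} 1 & 0 & -t_x \\ 0 & 1 & -t_y \\ -t_x & -t_y & 1 + t_x^2 + t_y^2 \end{pmatrix}.
\end{aligned}
```

The $(1,1)$ and $(2,2)$ entries of $T_j^T T_j$ are equal and its $(1,2)$ entry vanishes; as far as we can tell, these are exactly the conditions encoded by the rows of the null-space equations that follow.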

That paper also presents an iterative scheme which alternates between solving for $\psi$ and $\theta$, keeping the other one fixed, and demonstrates that each step can be accomplished by finding the null space of the matrix

$$\Psi_j = \begin{pmatrix} \hat{m}^j_{11} - \hat{m}^j_{22} & -2\hat{m}^j_{23} & \hat{m}^j_{11} - \hat{m}^j_{33} \\ \hat{m}^j_{12} & \hat{m}^j_{13} & 0 \\ 0 & \hat{m}^j_{12} & \hat{m}^j_{13} \end{pmatrix} \tag{5}$$

in the $\psi$ case (where $\hat{M} = R_\theta^T M R_\theta$), and of the matrix

$$\Theta_j = \begin{pmatrix} \hat{m}^j_{11} - \hat{m}^j_{22} & -2\hat{m}^j_{13} & \hat{m}^j_{33} - \hat{m}^j_{22} \\ \hat{m}^j_{12} & -\hat{m}^j_{23} & 0 \\ 0 & \hat{m}^j_{12} & -\hat{m}^j_{23} \end{pmatrix} \tag{6}$$

in the $\theta$ case (with $\hat{M} = R_\psi^T M R_\psi$). It can clearly be seen that these matrices have rank at least two, except in the case where the bottom two rows are identically zero, so a one-dimensional null space is expected. Due to measurement errors the matrices will in practice have full rank (a trivial null space), and a one-dimensional approximation is computed as the right singular vector $v = (v_1, v_2, v_3)$ corresponding to the smallest singular value. In the $\psi$ case, any vector $v$ in the null space should be a scalar multiple $k\,(c_\psi^2, c_\psi s_\psi, s_\psi^2)$, where $c_\psi = \cos\psi$ and $s_\psi = \sin\psi$. Since then $v_1 + v_3 = k$ and $2v_2 = k \sin 2\psi$, this gives

$$\psi = \frac{1}{2} \arcsin \frac{2 v_2}{v_1 + v_3}, \tag{7}$$

while in the same way, the solution in the $\theta$ case is a scalar multiple of $(c_\theta^2, c_\theta s_\theta, s_\theta^2)$, and

$$\theta = \frac{1}{2} \arcsin \frac{2 v_2}{v_1 + v_3}. \tag{8}$$
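A single step of this procedure is short in NumPy. The sketch below is an illustration under stated assumptions, not the authors' implementation: it takes $\hat{M}$ as given (the other tilt angle already compensated), builds $\Psi_j$ and $\Theta_j$ exactly as in (5) and (6), and applies (7) or (8) to the right singular vector of the smallest singular value. Function names are hypothetical, and clipping the arcsine argument to $[-1, 1]$ is our addition as a guard against noise. For the usage example we assume that $R_\psi$ and $R_\theta$ rotate about the $x$- and $y$-axes, and we set the other angle to zero so that $\hat{M} = M$ holds exactly.

```python
import numpy as np


def psi_matrix(Mh):
    """Psi_j from equation (5); Mh is the symmetric 3x3 matrix M-hat."""
    m = Mh
    return np.array([[m[0, 0] - m[1, 1], -2.0 * m[1, 2], m[0, 0] - m[2, 2]],
                     [m[0, 1], m[0, 2], 0.0],
                     [0.0, m[0, 1], m[0, 2]]])


def theta_matrix(Mh):
    """Theta_j from equation (6)."""
    m = Mh
    return np.array([[m[0, 0] - m[1, 1], -2.0 * m[0, 2], m[2, 2] - m[1, 1]],
                     [m[0, 1], -m[1, 2], 0.0],
                     [0.0, m[0, 1], -m[1, 2]]])


def angle_from_nullspace(A):
    """Equations (7)/(8): v ~ (c^2, c*s, s^2) spans the (approximate) null space."""
    _, _, Vt = np.linalg.svd(A)
    v1, v2, v3 = Vt[-1]                   # singular vector of the smallest singular value
    ratio = np.clip(2.0 * v2 / (v1 + v3), -1.0, 1.0)
    return 0.5 * np.arcsin(ratio)


def rot_x(a):  # assumed R_psi
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):  # assumed R_theta
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):  # R_phi
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


T = np.array([[1.0, 0.0, -0.2], [0.0, 1.0, -0.3], [0.0, 0.0, 1.0]])

# theta step with psi = 0, so that M-hat = M = H^T H.
H = 1.3 * rot_y(0.09) @ rot_z(0.6) @ T @ rot_y(0.09).T
print(angle_from_nullspace(theta_matrix(H.T @ H)))  # close to 0.09

# psi step with theta = 0.
H = 1.3 * rot_x(-0.04) @ rot_z(0.6) @ T @ rot_x(-0.04).T
print(angle_from_nullspace(psi_matrix(H.T @ H)))    # close to -0.04
```

The overall sign of the singular vector does not matter, since (7) and (8) only use the ratio $2v_2/(v_1 + v_3)$.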

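The key insight of this paper is that if the tilt $R_{\psi\theta}$ remains constant, then the matrices $\Psi_j$ should all have the same null space. Instead of considering each $\Psi_j$ separately, we can therefore solve

$$\Psi v = \begin{pmatrix} \Psi_1 \\ \vdots \\ \Psi_N \end{pmatrix} \begin{pmatrix} c_\psi^2 \\ c_\psi s_\psi \\ s_\psi^2 \end{pmatrix} = 0. \tag{9}$$

In the same way, we may combine the equations for $\theta$ into

$$\Theta v = \begin{pmatrix} \Theta_1 \\ \vdots \\ \Theta_N \end{pmatrix} \begin{pmatrix} c_\theta^2 \\ c_\theta s_\theta \\ s_\theta^2 \end{pmatrix} = 0. \tag{10}$$

As in the single-homography case, $v$ is taken as the right singular vector of the stacked $3N \times 3$ matrix corresponding to its smallest singular value, and the angles are computed from it using (7) and (8).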
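The stacked systems (9) and (10) only change how the matrix handed to the SVD is assembled. The NumPy sketch below uses hypothetical function names and the assumed convention that $R_\theta$ rotates about the $y$-axis, and sets $\psi = 0$ so that $\hat{M}_j = M_j$. It stacks the $\Theta_j$ of several noise-free homographies sharing one tilt; the first of them is a pure $x$-translation, which on its own does not determine $\theta$.

```python
import numpy as np


def rot_y(a):  # assumed R_theta
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):  # R_phi
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def theta_matrix(Mh):
    """Theta_j from equation (6)."""
    m = Mh
    return np.array([[m[0, 0] - m[1, 1], -2.0 * m[0, 2], m[2, 2] - m[1, 1]],
                     [m[0, 1], -m[1, 2], 0.0],
                     [0.0, m[0, 1], -m[1, 2]]])


def stacked_angle(blocks):
    """Solve (9)/(10): stack the 3x3 blocks and take the smallest singular vector."""
    A = np.vstack(blocks)                 # shape (3N, 3)
    _, _, Vt = np.linalg.svd(A)
    v1, v2, v3 = Vt[-1]
    return 0.5 * np.arcsin(np.clip(2.0 * v2 / (v1 + v3), -1.0, 1.0))


theta = 0.09
motions = [(0.6, 0.3, 0.0), (-0.2, 0.1, 0.25), (1.1, -0.15, -0.1)]  # (phi, tx, ty)
blocks = []
for phi, tx, ty in motions:
    T = np.array([[1.0, 0.0, -tx], [0.0, 1.0, -ty], [0.0, 0.0, 1.0]])
    H = rot_y(theta) @ rot_z(phi) @ T @ rot_y(theta).T   # psi = 0, lam = 1
    blocks.append(theta_matrix(H.T @ H))
print(stacked_angle(blocks))  # close to 0.09
```

With noisy homographies the stacked matrix generally has full rank, and the smallest right singular vector then minimises $\|\Theta v\|$ over unit vectors, giving a least-squares compromise over all $N$ homographies rather than relying on a single one.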

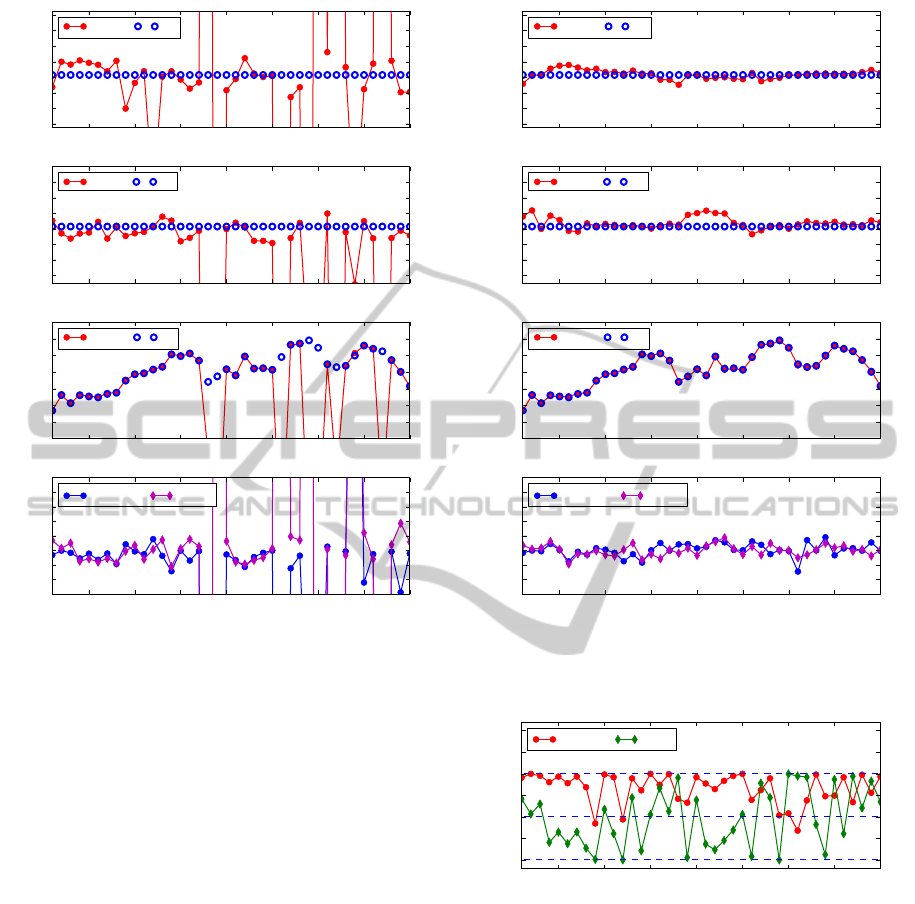

5 EXPERIMENTS

For the purpose of comparing the unmodified algorithm outlined in (Wadenbäck and Heyden, 2013) with our enhanced version, we randomly generated a large number of homographies of the form in (3). Gaussian noise with a standard deviation of 0.5° was added to each of the angles to simulate measurement noise. Figure 3 shows the estimation results obtained using only one homography at a time, and Figure 4 shows the results using our proposed method with five homographies at each step. The same number of iterations was used for both methods. Note that the axes in both figures use the same scale, to make comparison easier. It is readily seen that the proposed method drastically decreases the number of cases where the algorithm fails to converge.

It should be pointed out that while the results from the unmodified method can be much improved using filtering techniques, the same is true for our enhanced method.

[Figure 3: four panels plotted against homography number (5 to 40), showing the true and estimated (starred) values of $\psi$ (degrees), $\theta$ (degrees) and $\phi$ (degrees), and the translation errors $t^*_x - t_x$ and $t^*_y - t_y$.]

Figure 3: Tilt and motion parameters estimated from one homography at a time using the unmodified method. The starred parameters are the estimates.

The unmodified algorithm was reported to have difficulties when the translation was close to a pure $x$-translation or a pure $y$-translation. In the case of an $x$-translation, $\theta$ would be poorly estimated, and conversely $\psi$ would be poorly estimated for a $y$-translation. Figure 5 shows the $x$- and $y$-components of the translation used to generate the homographies, normalised by the length of the translation in that step. Some of the translations are indeed close to pure $x$-translations or $y$-translations, and some of them do coincide with bad estimates in Figure 3. The proposed method, on the other hand, handles these translations without significant difficulties, as Figure 4 confirms.
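The difficulty with pure translations can be made concrete by checking the rank of $\Theta_j$. In the NumPy sketch below (hypothetical names; $R_\theta$ assumed to rotate about the $y$-axis, $\psi = 0$ and noise-free data), a pure $x$-translation makes the bottom two rows of $\Theta_j$ vanish, so $\Theta_j$ has rank one and a two-dimensional null space, which leaves $\theta$ undetermined. Stacking it with a homography whose translation has a non-zero $y$-component restores rank two.

```python
import numpy as np


def rot_y(a):  # assumed R_theta
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):  # R_phi
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def theta_matrix(Mh):
    """Theta_j from equation (6)."""
    m = Mh
    return np.array([[m[0, 0] - m[1, 1], -2.0 * m[0, 2], m[2, 2] - m[1, 1]],
                     [m[0, 1], -m[1, 2], 0.0],
                     [0.0, m[0, 1], -m[1, 2]]])


def theta_block(theta, phi, tx, ty):
    """Theta_j for a noise-free homography with psi = 0 and lam = 1."""
    T = np.array([[1.0, 0.0, -tx], [0.0, 1.0, -ty], [0.0, 0.0, 1.0]])
    H = rot_y(theta) @ rot_z(phi) @ T @ rot_y(theta).T
    return theta_matrix(H.T @ H)


pure_x = theta_block(0.09, 0.4, tx=0.3, ty=0.0)
general = theta_block(0.09, -0.7, tx=0.1, ty=0.2)
tol = 1e-9  # absolute threshold below which a singular value counts as zero
print(np.linalg.matrix_rank(pure_x, tol=tol))                        # 1
print(np.linalg.matrix_rank(np.vstack([pure_x, general]), tol=tol))  # 2
```

Under the same axis assumptions, the analogous check with $\Psi_j$ and a pure $y$-translation shows the corresponding loss of rank for $\psi$.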

6 CONCLUSIONS

In this paper we have extended the estimation method in (Wadenbäck and Heyden, 2013) to use more than one homography to estimate the tilt. This enhancement produces a more robust and more accurate estimate, which demonstrably allows the other motion parameters to be recovered with higher precision. The problems with ill-conditioned motion patterns that were reported for the original algorithm have also been remedied by using more than one homography at a time.

ACKNOWLEDGEMENTS

This work has been funded by the Swedish Foundation for Strategic Research through the SSF project ENGROSS, http://www.engross.lth.se.

[Figure 4: four panels plotted against homography number (5 to 40), showing the true and estimated (starred) values of $\psi$ (degrees), $\theta$ (degrees) and $\phi$ (degrees), and the translation errors $t^*_x - t_x$ and $t^*_y - t_y$.]

Figure 4: Tilt and motion parameters estimated from five homographies at a time using our proposed method. The starred parameters are the estimates.

[Figure 5: $t_x/|t|$ and $t_y/|t|$ plotted against homography number (5 to 40).]

Figure 5: The $x$- and $y$-components of the translation that was used to generate the homographies, normalised by the length of the translation in that step. Some of the translations used are evidently close to pure $x$-translations or pure $y$-translations, which were reported to be problematic for the original algorithm.

REFERENCES

Davison, A. J., Reid, I. D., Molton, N. D., and Stasse, O. (2007). MonoSLAM: Real-Time Single Camera SLAM. IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(6):1052–1067.

Faugeras, O. D. (1992). What can be seen in three dimensions with an uncalibrated stereo rig? In Proceedings of the Second European Conference on Computer Vision, volume 588 of ECCV ’92, pages 563–578, Santa Margherita Ligure, Italy. Springer-Verlag.

Hajjdiab, H. and Laganière, R. (2004). Vision-Based Multi-Robot Simultaneous Localization and Mapping. In CRV ’04: Proceedings of the 1st Canadian Conference on Computer and Robot Vision, pages 155–162, Washington, DC, USA. IEEE Computer Society.

Haner, S. and Heyden, A. (2011). Optimal View Path Planning for Visual SLAM. In Proceedings of the 17th Scandinavian Conference on Image Analysis (SCIA), volume 6688 of Lecture Notes in Computer Science, pages 370–380. Springer Berlin Heidelberg.

Harris, C. G. and Pike, J. M. (1988). 3D Positional Integration from Image Sequences. Image and Vision Computing, 6(2):87–90.

Hartley, R. I. (1992). Estimation of Relative Camera Positions for Uncalibrated Cameras. In Proceedings of the Second European Conference on Computer Vision, volume 588, pages 579–587, Santa Margherita Ligure, Italy. Springer-Verlag.

Karlsson, N., Bernardo, E. D., Ostrowski, J. P., Goncalves, L., Pirjanian, P., and Munich, M. E. (2005). The vSLAM Algorithm for Robust Localization and Mapping. In ICRA ’05: Proceedings of the 2005 IEEE International Conference on Robotics and Automation, pages 24–29, Barcelona, Spain. IEEE.

Liang, B. and Pears, N. (2002). Visual Navigation using Planar Homographies. In ICRA ’02: Proceedings of the 2002 IEEE International Conference on Robotics and Automation, pages 205–210, Washington, DC, USA.

Lowe, D. G. (2004). Distinctive Image Features from Scale-Invariant Keypoints. International Journal of Computer Vision, 60(2):91–110.

Snavely, N., Seitz, S. M., and Szeliski, R. (2008). Modeling the World from Internet Photo Collections. International Journal of Computer Vision, 80(2):189–210.

Wadenbäck, M. and Heyden, A. (2013). Planar Motion and Hand-Eye Calibration Using Inter-Image Homographies from a Planar Scene. In Proceedings of VISIGRAPP 2013, pages 164–168, Barcelona, Spain. SCITEPRESS.
