Asynchronous Argumentation with Pervasive Personal Communication Tools

Yuki Katsura¹, Hajime Sawamura², Takeshi Hagiwara² and Jacques Riche³

¹ Graduate School of Science and Technology, Niigata University, Niigata, Japan

² Institute of Science and Technology, Niigata University, Niigata, Japan

³ Department of Computer Science, Katholieke Universiteit Leuven, Leuven, Belgium

Keywords:

Asynchronous Argumentation, Multiple-valued Argumentation, iPad, PIRIKA.

Abstract:

In this paper, we propose an argument-based communication tool for humans and agents that supplements, and offers an alternative to, current communication systems such as Twitter and Line, in order to support more deliberate and logical human communication. For this purpose, we devised asynchronous argumentation based on our logic of multiple-valued argumentation. It may equally be described as asymptotic or incremental argumentation, since agents can move closer to truth or justification each time an agent puts forward an argument. We have implemented the asynchronous argumentation system, named PIRIKA (pilot of the right knowledge and argument), on a pervasive personal tool, the iPad. Finally, we report some lessons learned from experimental uses of PIRIKA.

1 INTRODUCTION AND MOTIVATION

In this paper, we propose an argument-based communication tool for humans and agents that supplements, and offers an alternative to, current communication systems such as Twitter¹ and Line², in order to support more deliberate and logical human communication. This is an attempt to make a clear departure from surface communication in a few words toward deep communication through argumentation, which always emphasizes the relationship between a conclusion and its reasons.

Social networking software is reshaping the world we live in. These days, communication tools on the Internet that link people and organizations, rather than documents only, have become very popular. To mention a few: Skype³ (for direct communication), Line and Mail (for asynchronous communication), and Twitter and niconico⁴ (for asynchronous communication with the general public).

¹ Twitter is a registered trademark of Twitter, Inc.

² Line is a trademark of Line company.

³ Skype is a registered trademark or a trademark of Skype Limited.

⁴ niconico is a registered trademark or a trademark of Dwango company.

Among these, Line is an instant messaging application for smartphones and PCs. Launched in Japan in 2011, it has reached 300 million users worldwide in a short time. The main features of Line seem to be twofold. One is the so-called mere-exposure effect, a psychological phenomenon by which people tend to develop a preference for things merely because they are familiar with them. In social psychology, this effect is sometimes called the familiarity principle. In studies of interpersonal attraction, the more often a person is seen by someone, the more pleasing and likeable that person appears to be. The other is the so-called elevator pitch, a short summary used to quickly and simply define a person, profession, product, service, organization, event and so on. With Line, people nowadays tend to communicate with each other very often in short messages. Short and Quick are the keys there, revealing a light or surface communication.

On the other hand, electronic mail, which is a relatively surface-level communication tool, is a method of exchanging digital messages from an author to one or more recipients. It has become the most widely used medium of communication not only within the business world but also in our daily lives, although it has some disadvantages such as loss of context, information overload, speed of correspondence and so on. From the viewpoint of communication style, however, email solves two basic problems of communication: logistics and synchronization.

Katsura Y., Sawamura H., Hagiwara T. and Riche J. Asynchronous Argumentation with Pervasive Personal Communication Tools. DOI: 10.5220/0004751101050114. In Proceedings of the 6th International Conference on Agents and Artificial Intelligence (ICAART-2014), pages 105-114. ISBN: 978-989-758-016-1. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

The problem of logistics: Much of the business world relies upon communication between people who are not physically in the same building, area or even country; setting up and attending an in-person meeting or a telephone call can be inconvenient, time-consuming, and costly. Email provides a way to exchange information between two or more people with no set-up costs.

The problem of synchronization: With real-time communication by meetings or phone calls, participants have to work on the same schedule, and each participant must spend the same amount of time in the meeting or call. Email allows asynchrony; each participant may control their own schedule independently.

In contrast with email, argumentation can be used in two ways: synchronously and asynchronously. Argumentation is usually conducted synchronously, with participants gathering at the same time and place. The asynchronous argumentation we advocate in this paper solves the same synchronization problem that email does, while always maintaining deep communication.

The asynchronous argumentation we intend can be seen in the flow of argumentation. Let us consider a look-and-feel scenario of the pervasive arguing agents that we aim to realize on top of pervasive personal tools such as iPad and iPhone⁵. Suppose that there are agents who have routinely gathered knowledge on an issue of concern and have conceived their own arguments on it (asynchronous preparation for argumentation). The knowledge gathering may be done by humans or helped by e-secretaries who might reside in pervasive personal tools as avatars.

Someday, an agent may wish to know a collective view: what the present voices of the people around it are like and how they can converge to a popular opinion. For example, an amendment to the constitution or an increase in consumption tax would be of keen interest to people in any country. The agent can then start argumentation on an issue it has been concerned about, using the arguing agent on its pervasive personal tool, in order to learn the result.

The argument participants will be members of the general public who are connected to the argument server at that time. They can obtain argument results that are not mere assertions of opinion but lines of reasoning leading from some premises to a conclusion. It should be noted that this is a kind of non-monotonic reasoning realized by argumentation: conclusions, once drawn, may later be withdrawn after a new agent comes onto the argumentation scene with additional information. In this manner, arguing agents on pervasive personal tools can produce a well-reasoned judgment (warranted assertion), construed as a form of inquiry conducted jointly and asynchronously.

⁵ iPad and iPhone are trademarks of Apple Inc.

In this paper, we describe a realization of asynchronous argumentation that allows such a scenario of futuristic communication on pervasive personal tools. The paper is organized as follows. In Sections 2 and 3, we briefly introduce part of EALP (Extended Annotated Logic Programming) and LMA (Logic of Multiple-valued Argumentation) (Takahashi and Sawamura, 2004) to make the paper self-contained. They form the underlying logic for practicing asynchronous argumentation on iPad. In Section 4, we illustrate a series of uses of PIRIKA on iPad that allow for asynchronous argumentation, using typical screenshots from the argument process. Section 5 summarizes, as an evaluation, the advantages of our work that participants confirmed in experimental and daily uses. The final section presents conclusions and future work.

2 OVERVIEW OF EALP

EALP is the underlying knowledge representation language that we formalized for our logic of multiple-valued argumentation, LMA. EALP has two kinds of explicit negation, the epistemic explicit negation ‘¬’ and the ontological explicit negation ‘∼’, as well as the default negation ‘not’. Intuitively, ∼ is almost the same as classical negation, ‘not’ is negation as failure as in Prolog, and ¬ is a negation based on our epistemology. They are supposed to provide a momentum or driving force for argumentation or dialogue in LMA. In what follows, we outline EALP.

2.1 Language

Definition 1 (Annotation as Truth-values and Annotated Atoms (Kifer and Subrahmanian, 1992)). We assume a complete lattice $(\mathcal{T}, \leq)$ of truth values, and denote its least and greatest elements by $\bot$ and $\top$ respectively. The least upper bound operator is denoted by $\sqcup$. An annotation is either an element of $\mathcal{T}$ (constant annotation) or an annotation variable on $\mathcal{T}$. If $A$ is an atomic formula and $\mu$ is an annotation, then $A{:}\mu$ is an annotated atom. We assume an annotation function $\neg : \mathcal{T} \to \mathcal{T}$, and define $\neg(A{:}\mu) = A{:}(\neg\mu)$. $\neg A{:}\mu$ is called the epistemic explicit negation (e-explicit negation) of $A{:}\mu$.
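As an illustration of Definition 1, the following minimal Python sketch encodes the four-valued lattice $\{\bot, \mathbf{t}, \mathbf{f}, \top\}$ with its ordering and least upper bound. The names `leq`, `join`, `NEG` and `e_neg` are hypothetical, and the choice of an annotation function that swaps t and f is an assumption made only for this example; the definition itself leaves $\neg$ unspecified.

```python
# Four truth values ordered as bot <= t, f <= top (t and f are incomparable).
ELEMS = ("bot", "t", "f", "top")
LEQ = (
    {(a, a) for a in ELEMS}
    | {("bot", x) for x in ELEMS}
    | {(x, "top") for x in ELEMS}
)


def leq(a, b):
    """Lattice ordering a <= b."""
    return (a, b) in LEQ


def join(a, b):
    """Least upper bound of a and b."""
    uppers = [u for u in ELEMS if leq(a, u) and leq(b, u)]
    # The least upper bound lies below every other upper bound.
    return next(u for u in uppers if all(leq(u, v) for v in uppers))


# Assumed annotation function: swaps t and f, fixes bot and top.
NEG = {"bot": "bot", "t": "f", "f": "t", "top": "top"}


def e_neg(annotated_atom):
    """Epistemic explicit negation: not(A:mu) = A:(not mu)."""
    atom, mu = annotated_atom
    return (atom, NEG[mu])
```

For instance, `join("t", "f")` evaluates to `"top"`, and `e_neg(("p", "t"))` evaluates to `("p", "f")`.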


Definition 2 (Annotated Literals). Let $A{:}\mu$ be an annotated atom. Then ${\sim}(A{:}\mu)$ is the ontological explicit negation (o-explicit negation) of $A{:}\mu$ (we simply write ${\sim}(A{:}\mu)$ as ${\sim}A{:}\mu$ when no confusion arises). An annotated objective literal is either ${\sim}A{:}\mu$ or $A{:}\mu$. The symbol ${\sim}$ is also used to denote complementary annotated objective literals; thus ${\sim}{\sim}A{:}\mu = A{:}\mu$. If $L$ is an annotated objective literal, then $\mathbf{not}\,L$ is the default negation of $L$, and is called an annotated default literal. An annotated literal is either of the form $\mathbf{not}\,L$ or $L$.

Definition 3 (Extended Annotated Logic Programs (EALP)). An extended annotated logic program (EALP) is a set of annotated rules of the form $H \leftarrow L_1 \,\&\, \dots \,\&\, L_n$, or $H$, where $H$ is an annotated objective literal and $L_i$ ($1 \leq i \leq n$) are annotated literals.

The head of a rule is called the conclusion of the rule. Annotated objective literals and annotated default literals in the body of a rule are called antecedents of the rule and assumptions of the rule respectively. For simplicity, we assume that a rule with annotation variables or objective variables represents every ground instance of it. We identify a distributed EALP with an agent, and treat a set of EALPs as a multi-agent system.

2.2 Interpretation

Definition 4 (Extended Annotated Herbrand Base). The set of all annotated literals constructed from an EALP $P$ on a complete lattice $\mathcal{T}$ of truth values is called the extended annotated Herbrand base $H_P^{\mathcal{T}}$.

Definition 5 (Interpretation). Let $\mathcal{T}$ be a complete lattice of truth values, and $P$ be an EALP. Then an interpretation on $P$ is a subset $I \subseteq H_P^{\mathcal{T}}$ of the extended annotated Herbrand base $H_P^{\mathcal{T}}$ of $P$ such that for any annotated atom $A$,

1. If $A{:}\mu \in I$ and $\rho \leq \mu$, then $A{:}\rho \in I$ (downward heredity);

2. If $A{:}\mu \in I$ and $A{:}\rho \in I$, then $A{:}(\mu \sqcup \rho) \in I$ (tolerance of difference);

3. If ${\sim}A{:}\mu \in I$ and $\rho \geq \mu$, then ${\sim}A{:}\rho \in I$ (upward heredity).
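The three closure conditions of Definition 5 can be checked mechanically on a small finite lattice. The sketch below is only an illustration under stated assumptions: it uses a power-set lattice ordered by inclusion, encodes literals as hypothetical triples `(sign, atom, annotation)` with sign `"+"` for $A{:}\mu$ and `"~"` for ${\sim}A{:}\mu$, and ignores default literals.

```python
from itertools import combinations


def powerset(universe):
    """All subsets of a finite universe, as frozensets."""
    items = sorted(universe)
    return [
        frozenset(c)
        for r in range(len(items) + 1)
        for c in combinations(items, r)
    ]


def is_interpretation(I, universe):
    """Check conditions 1-3 of Definition 5 on a power-set lattice."""
    T = powerset(universe)
    for (sign, atom, mu) in I:
        for rho in T:
            # Condition 1: downward heredity for A:mu.
            if sign == "+" and rho <= mu and ("+", atom, rho) not in I:
                return False
            # Condition 3: upward heredity for ~A:mu.
            if sign == "~" and rho >= mu and ("~", atom, rho) not in I:
                return False
    for (s1, a1, mu) in I:
        for (s2, a2, rho) in I:
            # Condition 2: tolerance of difference (closure under join).
            if s1 == s2 == "+" and a1 == a2 and ("+", a1, mu | rho) not in I:
                return False
    return True
```

With universe `{1, 2}`, the set containing `p:{}` and `p:{1}` passes the check, whereas the set containing only `p:{1}` fails condition 1 because `p:{}` is missing.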

Conditions 1 and 2 of Definition 5 reflect the definition of an ideal of a complete lattice of truth values. The ideals-based semantics was first introduced for the interpretation of GAP by Kifer and Subrahmanian (Kifer and Subrahmanian, 1992). Our EALP for argumentation also employs it, since it was shown that the general semantics with ideals is more adequate than the restricted one based simply on a complete lattice of truth values (Takahashi and Sawamura, 2004). We define three notions of inconsistency corresponding to the three concepts of negation in EALP.

Definition 6 (Inconsistency). Let $I$ be an interpretation. Then,

1. $A{:}\mu \in I$ and $\neg A{:}\mu \in I$ $\iff$ $I$ is epistemologically inconsistent (e-inconsistent).

2. $A{:}\mu \in I$ and ${\sim}A{:}\mu \in I$ $\iff$ $I$ is ontologically inconsistent (o-inconsistent).

3. $A{:}\mu \in I$ and $\mathbf{not}\,A{:}\mu \in I$, or ${\sim}A{:}\mu \in I$ and $\mathbf{not}\,{\sim}A{:}\mu \in I$ $\iff$ $I$ is inconsistent in default (d-inconsistent).

When an interpretation $I$ is o-inconsistent or d-inconsistent, we simply say that $I$ is inconsistent. We do not regard e-inconsistency as a problematic inconsistency, since by condition 2 of Definition 5, $A{:}\mu \in I$ and $\neg A{:}\mu = A{:}\neg\mu \in I$ imply $A{:}(\mu \sqcup \neg\mu) \in I$, and we think of $A{:}\mu$ and $\neg A{:}\mu$ as an acceptable difference. Let $I$ be an interpretation such that ${\sim}A{:}\mu \in I$. By condition 1 of Definition 5, for any $\rho$ such that $\rho \geq \mu$, if $A{:}\rho \in I$ then $A{:}\mu \in I$, and hence $I$ is o-inconsistent. In other words, ${\sim}A{:}\mu$ rejects all recognitions $\rho$ about $A$ such that $\rho \geq \mu$. This is the underlying reason for adopting condition 3 of Definition 5. These notions of inconsistency provide the logical basis for the attack relations of multiple-valued argumentation described in the next section.
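The three notions of Definition 6 amount to simple membership tests. The following sketch assumes a hypothetical tuple encoding that is not part of the paper: `("+", A, mu)` for $A{:}\mu$, `("~", A, mu)` for ${\sim}A{:}\mu$, and `("not", L)` for a default literal, with the annotation function passed in as a parameter `neg`.

```python
def e_inconsistent(I, neg):
    """A:mu and A:(neg mu) are both in I."""
    return any(
        lit[0] == "+" and ("+", lit[1], neg(lit[2])) in I for lit in I
    )


def o_inconsistent(I):
    """A:mu and ~A:mu are both in I."""
    return any(
        lit[0] == "+" and ("~", lit[1], lit[2]) in I for lit in I
    )


def d_inconsistent(I):
    """An objective literal L and its default negation 'not L' are both in I."""
    return any(
        lit[0] in ("+", "~") and ("not", lit) in I for lit in I
    )


def inconsistent(I):
    """Only o- and d-inconsistency count as problematic."""
    return o_inconsistent(I) or d_inconsistent(I)
```

Here `inconsistent` deliberately leaves out `e_inconsistent`, mirroring the remark above that e-inconsistency is treated as an acceptable difference.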

Definition 7 (Satisfaction). Let $I$ be an interpretation. For any annotated objective literal $H$ and annotated literals $L$ and $L_i$, we define the satisfaction relation, denoted by ‘$\models$’, as follows.

• $I \models L \iff L \in I$

• $I \models L_1 \,\&\, \cdots \,\&\, L_n \iff I \models L_1, \dots, I \models L_n$

• $I \models H \leftarrow L_1 \,\&\, \cdots \,\&\, L_n \iff I \models H$ or $I \not\models L_1 \,\&\, \cdots \,\&\, L_n$.

3 OVERVIEW OF LMA

In formalizing a logic of argumentation, the primary concern is the rebuttal relation among arguments, since it provides a cause or momentum for argumentation. The rebuttal relation for two-valued argument models is the simplest: it appears only between contradictory propositions of the form $A$ and $\neg A$. In the case of multiple-valued argumentation based on EALP, the rebuttal relation becomes considerably more complicated because of the different concepts of negation. One of the questions arising from multiple-valuedness is, for example, how a literal with truth value $\rho$ conflicts with a literal with truth value $\mu$ in the presence of negation. In the next subsection, we outline the important notions proper to the logic of multiple-valued argumentation LMA, in which this question is given a reasonable answer.

3.1 Annotated Arguments

Definition 8 (Reductant and Minimal Reductant). Suppose $P$ is an EALP, and $C_i$ ($1 \leq i \leq k$) are annotated rules in $P$ of the form $A{:}\rho_i \leftarrow L^i_1 \,\&\, \dots \,\&\, L^i_{n_i}$, in which $A$ is an atom. Let $\rho = \sqcup\{\rho_1, \dots, \rho_k\}$. Then the following annotated rule is a reductant of $P$.

$$A{:}\rho \leftarrow L^1_1 \,\&\, \dots \,\&\, L^1_{n_1} \,\&\, \dots \,\&\, L^k_1 \,\&\, \dots \,\&\, L^k_{n_k}.$$

A reductant is called a minimal reductant when there does not exist a non-empty proper subset $S \subset \{\rho_1, \dots, \rho_k\}$ such that $\rho = \sqcup S$.
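To make Definition 8 concrete, here is a small sketch that builds a reductant and tests minimality on a power-set lattice, where the least upper bound is set union. The rule encoding `(atom, annotation, body)` and the function names are hypothetical choices for this example only.

```python
from functools import reduce
from itertools import combinations


def join_all(annotations):
    """Least upper bound of a collection of frozenset annotations (union)."""
    return reduce(frozenset.union, annotations, frozenset())


def reductant(rules):
    """Combine rules A:rho_i <- body_i that share the same atom A."""
    atom = rules[0][0]
    assert all(r[0] == atom for r in rules), "rules must share one atom"
    rho = join_all(r[1] for r in rules)
    body = [lit for r in rules for lit in r[2]]
    return (atom, rho, body)


def is_minimal(rules):
    """True if no non-empty proper subset of the annotations has the same join."""
    anns = list({r[1] for r in rules})
    rho = join_all(anns)
    for size in range(1, len(anns)):
        for subset in combinations(anns, size):
            if join_all(subset) == rho:
                return False
    return True
```

For example, the rules `visit:{3} <- a` and `visit:{4} <- b` yield the reductant `visit:{3,4} <- a & b`, which is minimal. Combining `visit:{3} <- a` with `visit:{3,4} <- b` is not minimal, since the second annotation alone already gives the join.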

Definition 9 (Annotated Arguments). Let $P$ be an EALP. An annotated argument in $P$ is a finite sequence $Arg = [r_1, \dots, r_n]$ of rules in $P$ such that for every $i$ ($1 \leq i \leq n$),

1. $r_i$ is either a rule in $P$ or a minimal reductant in $P$.

2. For every annotated atom $A{:}\mu$ in the body of $r_i$, there exists an $r_k$ ($n \geq k > i$) such that $A{:}\rho$ ($\rho \geq \mu$) is the head of $r_k$.

3. For every o-explicit negation ${\sim}A{:}\mu$ in the body of $r_i$, there exists an $r_k$ ($n \geq k > i$) such that ${\sim}A{:}\rho$ ($\rho \leq \mu$) is the head of $r_k$.

4. There exists no proper subsequence of $[r_1, \dots, r_n]$ which meets the first three conditions and includes $r_1$.

We denote the set of all arguments in $P$ by $Args_P$, and define the set of all arguments in a set of EALPs $MAS = \{KB_1, \dots, KB_n\}$ by $Args_{MAS} = Args_{KB_1} \cup \dots \cup Args_{KB_n}$ ($\subseteq Args_{KB_1 \cup \dots \cup KB_n}$). This means that each agent has its own knowledge base and does not know the other agents' knowledge bases before argumentation starts. This is a natural assumption for argument settings, unlike other argumentation models (Rahwan and Simari, 2009).

3.2 Attack Relation

The semantics of argumentation depends on what sort of attack relation is used to deal with conflicts among arguments. It is reasonable to think that conflicts among arguments occur when the interpretation satisfying a set of arguments is inconsistent.

Definition 10 (Rebut). $Arg_1$ rebuts $Arg_2$ $\iff$ there exist $A{:}\mu_1 \in concl(Arg_1)$ and ${\sim}A{:}\mu_2 \in concl(Arg_2)$ such that $\mu_1 \geq \mu_2$, or there exist ${\sim}A{:}\mu_1 \in concl(Arg_1)$ and $A{:}\mu_2 \in concl(Arg_2)$ such that $\mu_1 \leq \mu_2$.

Definition 11 (Undercut). $Arg_1$ undercuts $Arg_2$ $\iff$ there exist $A{:}\mu_1 \in concl(Arg_1)$ and $\mathbf{not}\,A{:}\mu_2 \in assm(Arg_2)$ such that $\mu_1 \geq \mu_2$, or there exist ${\sim}A{:}\mu_1 \in concl(Arg_1)$ and $\mathbf{not}\,{\sim}A{:}\mu_2 \in assm(Arg_2)$ such that $\mu_1 \leq \mu_2$.

Definition 12 (Strictly Undercut). $Arg_1$ strictly undercuts $Arg_2$ $\iff$ $Arg_1$ undercuts $Arg_2$ and $Arg_2$ does not undercut $Arg_1$.

We also define the combined attack relation associated with o-inconsistency and d-inconsistency.

Definition 13 (Defeat). $Arg_1$ defeats $Arg_2$ $\iff$ $Arg_1$ undercuts $Arg_2$, or $Arg_1$ rebuts $Arg_2$ and $Arg_2$ does not undercut $Arg_1$.
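Definitions 10 to 13 translate fairly directly into code. The sketch below assumes a power-set lattice (so $\geq$ and $\leq$ are superset and subset tests on frozensets) and a hypothetical `Arg` record that stores only the conclusions and the literals assumed under default negation; neither the class nor the function names come from PIRIKA.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Arg:
    name: str
    concl: frozenset                # objective literals (sign, atom, annotation)
    assm: frozenset = frozenset()   # literals L for which "not L" is assumed


def rebuts(a1, a2):
    """Definition 10: conflicting conclusions A:mu1 and ~A:mu2."""
    for (s1, atom1, m1) in a1.concl:
        for (s2, atom2, m2) in a2.concl:
            if atom1 != atom2:
                continue
            if s1 == "+" and s2 == "~" and m1 >= m2:
                return True
            if s1 == "~" and s2 == "+" and m1 <= m2:
                return True
    return False


def undercuts(a1, a2):
    """Definition 11: a conclusion of a1 contradicts an assumption of a2."""
    for (s1, atom1, m1) in a1.concl:
        for (s2, atom2, m2) in a2.assm:
            if atom1 != atom2 or s1 != s2:
                continue
            if s1 == "+" and m1 >= m2:
                return True
            if s1 == "~" and m1 <= m2:
                return True
    return False


def strictly_undercuts(a1, a2):
    """Definition 12."""
    return undercuts(a1, a2) and not undercuts(a2, a1)


def defeats(a1, a2):
    """Definition 13."""
    return undercuts(a1, a2) or (rebuts(a1, a2) and not undercuts(a2, a1))
```

An argument whose single conclusion `p:{1}` rests on the assumption `not p:{1}` undercuts itself, so `defeats(a, a)` holds for it.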

When an argument defeats itself, it is called a self-defeating argument. For example, $[p{:}\mathbf{t} \leftarrow \mathbf{not}\,p{:}\mathbf{t}]$ and $[q{:}\mathbf{f} \leftarrow {\sim}q{:}\mathbf{f},\ {\sim}q{:}\mathbf{f}]$ are both self-defeating. In this paper, however, we rule out self-defeating arguments from argument sets since they are in a sense abnormal, and not entitled to participate in argumentation or dialogue.

In this paper, we employ defeat and strictly undercut to specify the set of justified arguments, where $d$ stands for defeat and $su$ for strictly undercut.

Definition 14 (Acceptable and Justified Argument (Dung, 1995)). Suppose $Arg_1 \in Args$ and $S \subseteq Args$. Then $Arg_1$ is acceptable wrt. $S$ if for every $Arg_2 \in Args$ such that $(Arg_2, Arg_1) \in d$ there exists $Arg_3 \in S$ such that $(Arg_3, Arg_2) \in su$. The function $F_{Args,d/su}$ mapping from $\mathcal{P}(Args)$ to $\mathcal{P}(Args)$ is defined by $F_{Args,d/su}(S) = \{Arg \in Args \mid Arg \text{ is acceptable wrt. } S\}$. We denote the least fixpoint of $F_{Args,d/su}$ by $J_{Args,d/su}$. An argument $Arg$ is justified if $Arg \in J_{Args,d/su}$; an argument is overruled if it is attacked by a justified argument; and an argument is defensible if it is neither justified nor overruled.

Since $F_{x/y}$ is monotonic, it has a least fixpoint, which can be constructed by the iterative method (Dung, 1995).
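The iterative construction of the least fixpoint can be sketched as follows for a finite argument set. The function name `justified` and the representation of the relations $d$ and $su$ as sets of ordered pairs are assumptions made for this illustration.

```python
def justified(args, d, su):
    """Least fixpoint of F(S) = {a | a is acceptable wrt. S}.

    args: finite collection of argument identifiers
    d:    set of pairs (x, y) meaning x defeats y
    su:   set of pairs (x, y) meaning x strictly undercuts y
    """
    def F(S):
        return {
            a
            for a in args
            # Every defeater b of a must be strictly undercut by some c in S.
            if all(
                any((c, b) in su for c in S)
                for b in args
                if (b, a) in d
            )
        }

    S = set()
    while True:
        nxt = F(S)
        if nxt == S:
            return S
        S = nxt
```

Starting from the empty set, the first iteration collects the arguments that have no defeaters, and each later iteration adds the arguments defended by those already collected. Because $F$ is monotonic and the argument set is finite, the loop terminates.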

Justified arguments can be dialectically determined from a set of arguments by the dialectical proof theory.

Definition 15 (Dialogue (Prakken and Sartor, 1997)). A dialogue is a finite nonempty sequence of moves $move_i = (Player_i, Arg_i)$ ($i \geq 1$) such that

1. $Player_i = P$ (Proponent) $\iff$ $i$ is odd; and $Player_i = O$ (Opponent) $\iff$ $i$ is even.

2. If $Player_i = Player_j = P$ ($i \neq j$) then $Arg_i \neq Arg_j$.

3. If $Player_i = P$ ($i \geq 3$) then $(Arg_i, Arg_{i-1}) \in su$; and if $Player_i = O$ ($i \geq 2$) then $(Arg_i, Arg_{i-1}) \in d$.

In this definition, it is permitted that $P = O$, that is, a dialogue may be conducted by a single agent. We call such a dialogue a self-argument (monologue).

Definition 16 (Dialogue Tree (Prakken and Sartor, 1997)). A dialogue tree is a tree of moves such that every branch is a dialogue, and for all moves $move_i = (P, Arg_i)$, the children of $move_i$ are all those moves $(O, Arg_j)$ ($j \geq 1$) such that $(Arg_j, Arg_i) \in d$.

Definition 17 (Provably x/y-justified). Let $x$ be $d$ (defeat) and $y$ be $su$ (strictly undercut). An $x/y$-dialogue $D$ is a winning $x/y$-dialogue $\iff$ the termination of $D$ is a move of the proponent. An $x/y$-dialogue tree $T$ is a winning $x/y$-dialogue tree $\iff$ every branch of $T$ is a winning $x/y$-dialogue. An argument $Arg$ is a provably $x/y$-justified argument $\iff$ there exists a winning $x/y$-dialogue tree with $Arg$ as its root.

We have a sound and complete dialectical proof theory for the argumentation semantics $J_{Args,x/y}$ (Takahashi and Sawamura, 2004).
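One way to read Definitions 15 to 17 operationally is as a recursive search: the proponent's argument must survive every defeating reply of the opponent, and each such reply must be answered by a strictly undercutting argument that the proponent has not already used on that branch. The following sketch is an assumption-laden illustration of that reading, not the procedure implemented in PIRIKA; the function name and the pair-set encoding of $d$ and $su$ are hypothetical.

```python
def provably_justified(arg, args, d, su, used=frozenset()):
    """Search for a winning d/su-dialogue tree rooted at `arg`.

    args: finite collection of argument identifiers
    d:    set of pairs (x, y) meaning x defeats y
    su:   set of pairs (x, y) meaning x strictly undercuts y
    used: proponent arguments already played on the current branch
    """
    used = used | {arg}
    for opp in args:
        if (opp, arg) not in d:
            continue
        # The proponent needs some fresh reply that strictly undercuts the
        # opponent's move and itself roots a winning subtree.
        answered = any(
            (rep, opp) in su
            and rep not in used
            and provably_justified(rep, args, d, su, used)
            for rep in args
        )
        if not answered:
            return False
    return True
```

The `used` set enforces condition 2 of Definition 15 (the proponent may not repeat an argument), which also bounds the recursion depth by the number of arguments.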

4 ASYNCHRONOUS ARGUMENTATION

Our former PIRIKA (Tannai et al., 2013) supports synchronous argumentation in the sense that every agent who wants to participate in argumentation prepares its own knowledge base once, prior to argumentation. It then produces an outcome of the argumentation and displays it, as in many argumentation systems developed so far (Rahwan and Simari, 2009). In this section, we turn such synchronous argumentation into a more flexible asynchronous one by redesigning PIRIKA on top of the pervasive personal communication tool, iPad, toward a new futuristic communication tool.

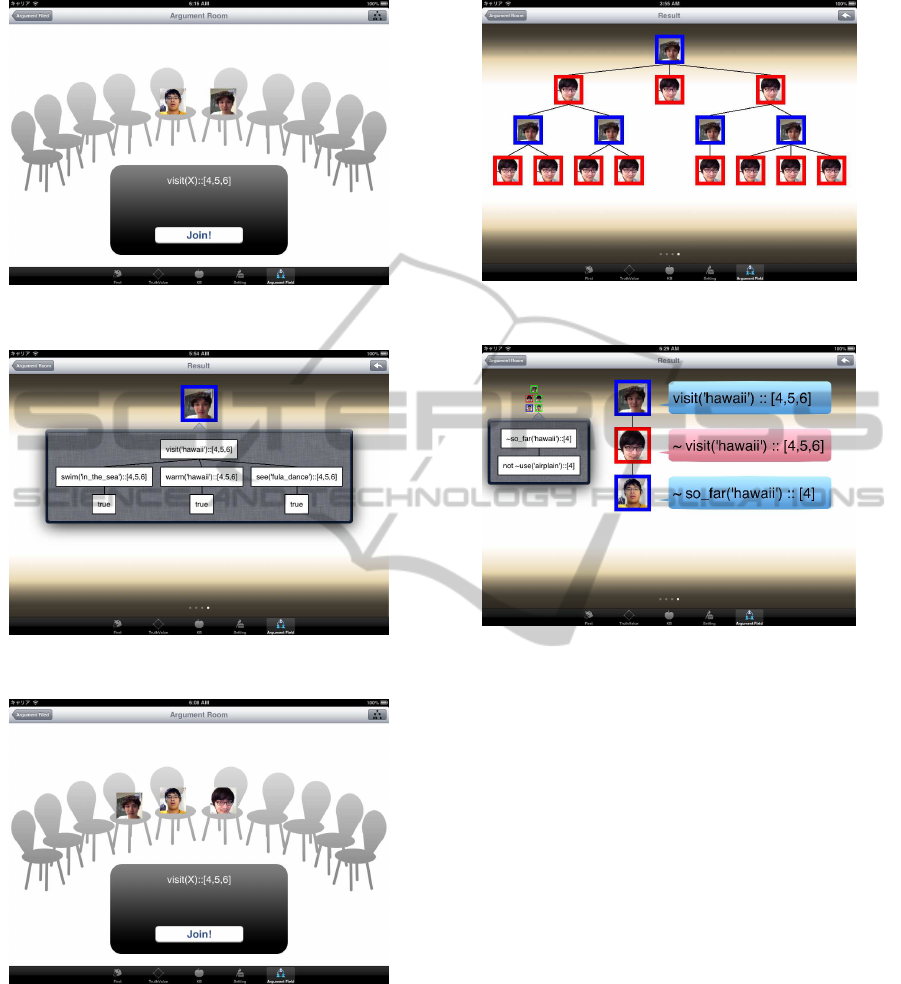

In this section, we illustrate a series of uses of PIRIKA on iPad that allow for asynchronous argumentation, in the manner of a live argument, using typical screenshots from the argument process. We do so because PIRIKA is, as far as we know, the first implementation of an argumentation system on pervasive personal information equipment, and we think that, for readers to understand both our argumentation process and its realization on iPad, it is necessary to describe the overall story of PIRIKA step by step from beginning to end without omitting any details, even where this involves a standard iPad screen.

We take up a so-called schedule management

problem which is a typical target for which agent sys-

tems have been developing their capabilities such as

interaction, negotiation, cooperation and so on. We

demonstrate a new approach to realizing the sched-

ule management system by the asynchronous argu-

mentation on iPad. Then participating agents use

not only calendar information but also preferential

knowledge base of their own in EALP. Following

Definition 1in Section2, the annotation employed is

a complete lattice of the power set of the monthly

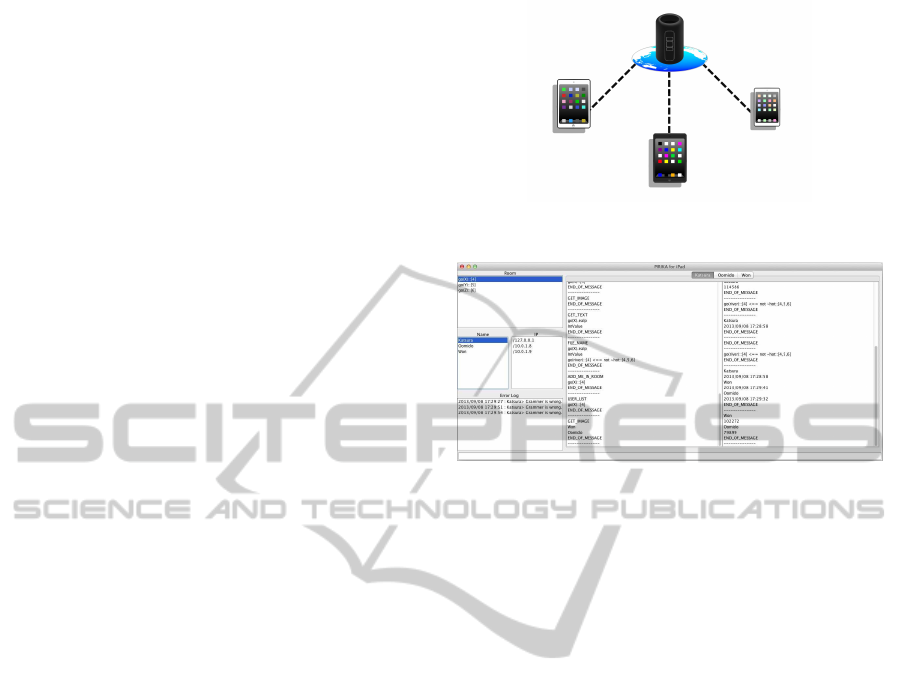

Figure 1: Asynchronous argumentation system PIRIKA on

iPad as a client-server system.

Figure 2: Screenshot of the PIRIKA server.

dates, i.e., hP ({1,...,31}),⊆i with the set inclusion

⊆ as the lattice ordering. This type of annotation

may be somewhat deviant, but allows for representing

temporal information, and hence works well conve-

niently with the schedule management problem, such

as visit(niigata) : {3,4} representing ‘we visit Niigata

on 3rd and 4th’. Furthermore, PIRKA allows for rep-

resenting and inquiring indefinite issues such as ques-

tions or problems satisfying certain conditions such

as visit(X) : Y, where X and Y are variables. Such

an expressivity is a desideratum particularly for the

schedule management system since we naturally in-

quire ‘When and where we should visit?’ for schedule

coordination (Oomidou et al., 2013)
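The date-set annotation and the indefinite query visit(X) : Y can be mimicked in a few lines. This is a hedged sketch rather than PIRIKA's query mechanism: the fact list, the `query` function and the use of `None` as a stand-in for a variable are all hypothetical. It relies on downward heredity (Definition 5), by which a fact $A{:}\rho$ supports any query $A{:}\mu$ with $\mu \subseteq \rho$.

```python
# Hypothetical knowledge: facts of the form predicate(place) : set of dates.
facts = [
    ("visit", "niigata", frozenset({3, 4})),
    ("visit", "hawaii", frozenset({4, 5, 6})),
]


def query(facts, pred, place=None, dates=None):
    """Answer pred(X) : Y; None plays the role of a variable."""
    results = []
    for (p, x, y) in facts:
        if p != pred:
            continue
        if place is not None and x != place:
            continue
        # Downward heredity: the fact supports any smaller annotation.
        if dates is not None and not dates <= y:
            continue
        results.append((x, y))
    return results
```

Calling `query(facts, "visit")` corresponds to asking ‘When and where should we visit?’ and returns both facts, while `query(facts, "visit", dates=frozenset({3}))` keeps only the Niigata entry.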

4.1 PIRIKA on iPad as a Client-server System

Figure 1 shows the overall look-and-feel of PIRIKA on iPad as a client-server system. Figure 2 is a screenshot of the PIRIKA server: the currently connected clients are listed in the leftmost pane and the communication log between the server and clients in the right pane, so that users can monitor the argument processes generated by the dialectical proof theory (Definitions 15-17) of Section 3.

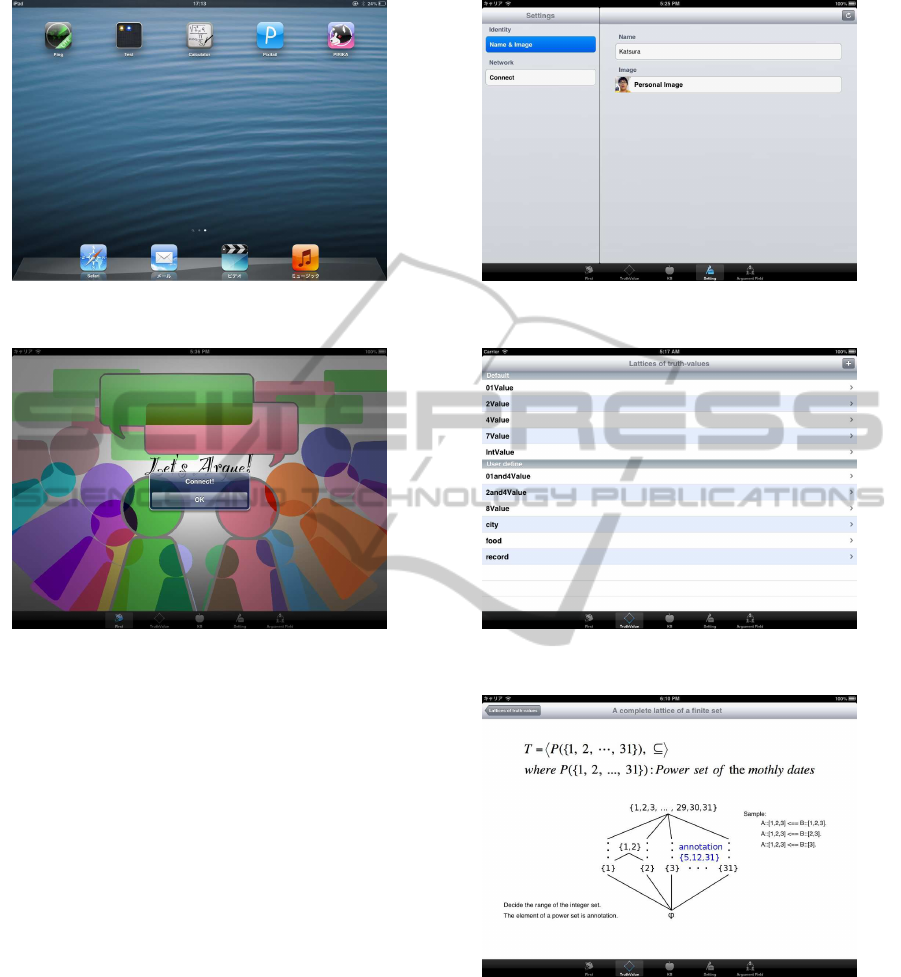

4.2 Invoking PIRIKA on iPad

Figure 3 is an initial screenshot on the standard screen

of iPad which includes the PIRIKA icon. By tapping

it, we proceed to the user/agent registration page.


Figure 3: Pirika icon on the top page of iPad.

Figure 4: Connecting to PIRIKA server.

4.3 Registering Agents with the Argument Server

An agent (as the avatar of a human) that wants to take part in argumentation has to register its name and image with the argument server, whose IP address and port number are designated in advance. Figure 4 shows a successful connection to the server, and Figure 5 shows a screenshot for registering the agent's name and image with the argument server.

4.4 Preparing a Lattice of Truth Values for Dealing with Uncertainty

At this stage, agents prepare a lattice of truth values for dealing with uncertainty, depending on the application domain, following Definition 1. An editor is provided for specifying truth values as a complete lattice. It is in fact a standard text editor in which a complete lattice of truth values is specified in Prolog.

Users can either use the built-in truth values or specify user-defined truth values with the truth values editor shown in Figure 6. The upper pane includes the built-in truth values, such as the two values $\mathcal{TWO} = \{\mathbf{t}, \mathbf{f}\}$, the four values $\mathcal{FOUR} = \{\bot, \mathbf{t}, \mathbf{f}, \top\}$, the power set of dates $\mathcal{P}(\{1, \dots, 31\})$, the unit interval of reals $\Re[0,1]$, its product $\Re[0,1]^2$, and Jaina's seven values $\mathcal{JAINA} = \{t, f, i, ft, fi, it, fit\}$; the lower pane holds user-defined ones. Figure 7 depicts the built-in lattice of the power set of the monthly dates.

Figure 5: Setting up agent information.

Figure 6: Truth values editor.

Figure 7: Lattice of the power set of the monthly dates.


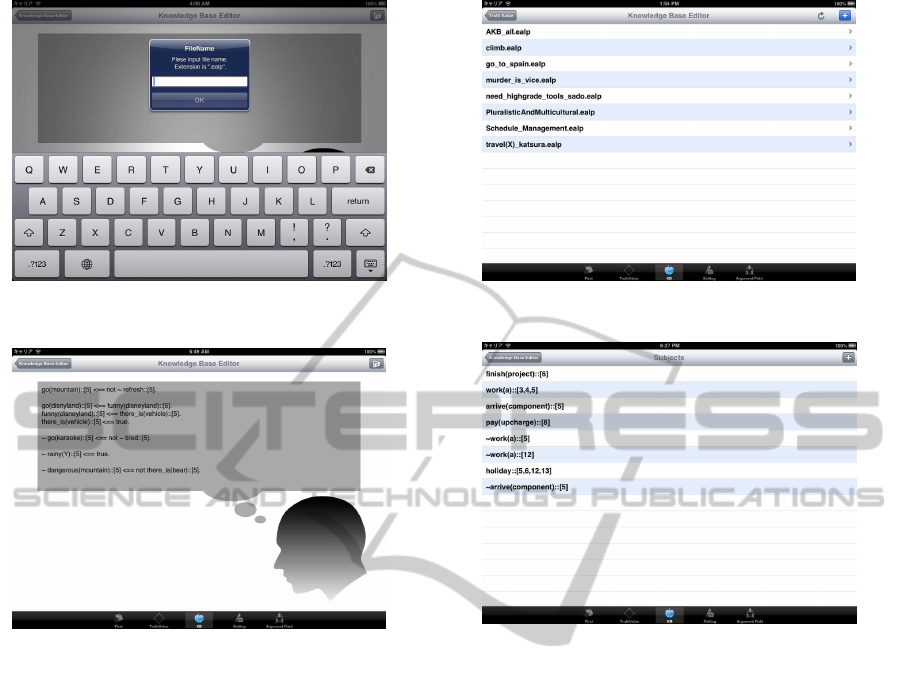

Figure 8: Knowledge base editor of PIRIKA on top of iPad.

Figure 9: Knowledge base for argumentation.

4.5 Designing Knowledge Bases under the Specified Truth Values in Terms of EALP

The annotation in EALP plays an essential role in specifying argument knowledge, since it allows agents to represent their epistemic or cognitive states for the propositions that describe the argument world. Once the annotation has been specified, the next step is to provide the argument knowledge that the agent conceives. The agent specifies its own argument knowledge in terms of EALP (Definition 3) by using the knowledge base editor with the keyboard, as in Figure 8, resulting in the body of knowledge listed in Figure 9. Figure 10 shows a list of the knowledge bases that the agent has accumulated for every argument topic it has committed to so far.

4.6 Starting Argumentation on Submitted Issues/Claims in LMA

The argumentation starts when agents submit agendas or select from the possible agendas that PIRIKA helpfully derives from the knowledge base and suggests to them. Figure 11 shows a list of agendas suggested by PIRIKA.

Figure 10: List of various knowledge bases.

Figure 11: Suggested agendas by PIRIKA.

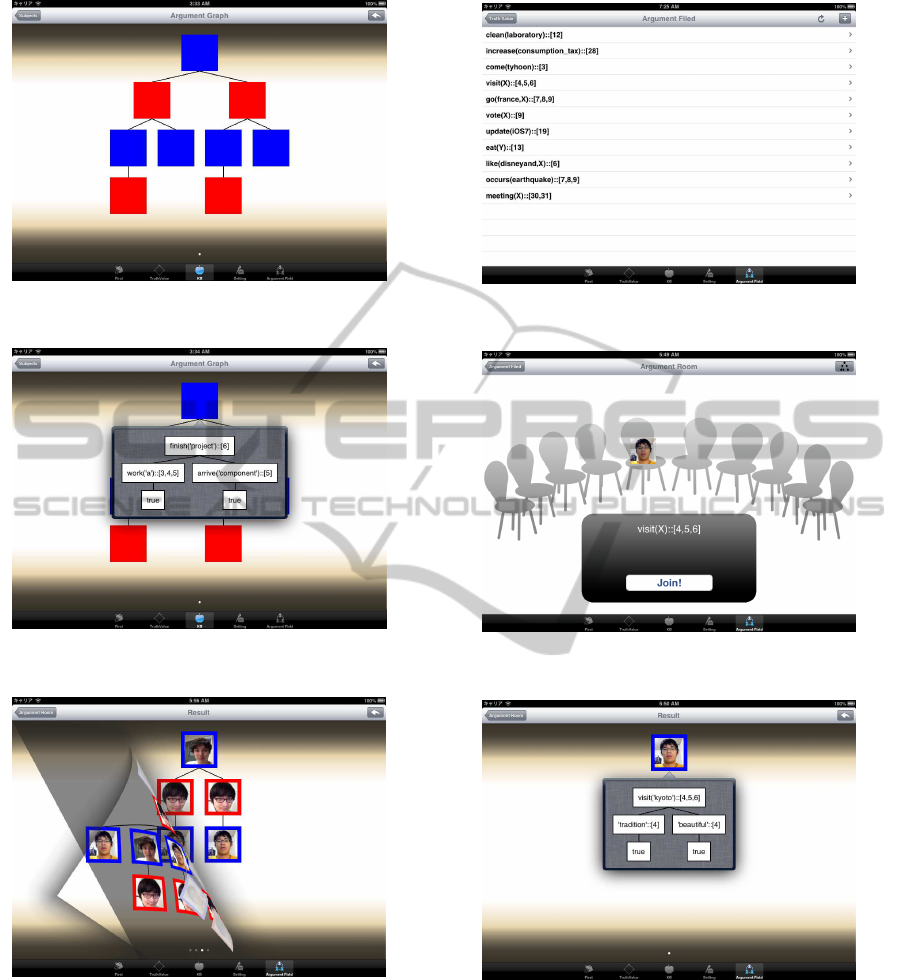

4.7 Visualizing the Live Argumentation Process and Diagramming Arguments

At this stage, PIRIKA launches an argument on an issue or claim submitted by the agent, and generates all the possible dialogue trees according to the dialectical proof theory of LMA (Definitions 15-16). Figure 12 shows a dialogue tree which contains only one winning dialogue tree (Definition 17). Such visualization or diagramming is the most important part of PIRIKA, since we are concerned not only with argument results but also with the overall structure and flow of the argument as it develops. We can further see the structure of an argument itself, in argument tree form, by long-pressing a node of the dialogue tree (Figure 13). We can of course also use iPad gestures such as pinch, swipe and tap, which further support visualization and diagramming (Figure 14).


Figure 12: Dialogue tree.

Figure 13: Argument tree at a node.

Figure 14: Swiping to view the next dialogue tree.

So far, one agent has argued about its own issue in a monological way. From here on, we illustrate how agents can enter into the argumentation asynchronously. Any agent can see which issues are currently being argued among agents by pressing the ‘argument field’ tab in the lower right corner of the screen, and can commit to one of them anytime and anywhere if it wishes. In Figure 15, many issues currently being developed on the Internet can be seen.

Figure 15: Entering into the asynchronous argumentation.

Figure 16: Asynchronous argument process (1).

Figure 17: Asynchronous argument process (2).

4.8 Asynchronous Argumentation Process

In the argumentation field (agora), there is one agent sitting on the chair (Figure 16). Its argument is justified, since for now there is no attacking argument from other parties (Figure 17), subject to Definition 17.

Then another agent comes to the agora on the Internet, wanting to join the ongoing argument with its knowledge through its own iPad. It can join by tapping the ‘Join’ button in the agora. Now the argument agora consists of two agents (Figure 18). Thus, the argument on the common issue begins between the two agents, and results in some justified arguments. Figure 19 shows one of them. Furthermore, a third agent appears in the agora, again wanting to participate in the ongoing argumentation with its knowledge through its own iPad (Figure 20).

Figure 18: Asynchronous argument process (3).

Figure 19: Asynchronous argument process (4).

Figure 20: Asynchronous argument process (5).

Figure 21: Argument graph (6).

Figure 22: Winning argument tree (7).

Likewise, the argumentation on the common issue

begins among three agents, and results in some dia-

logue trees. Figure 21 shows one of them.

4.9 Determining the Status of an Argument

Figure 22 is a winning dialogue tree (Definition 17), showing that the issue ‘visit(hawaii)::[4,5,6]’ is justified for now. It says that the travel destination Hawaii was settled on as a result of schedule coordination through the LMA-based argumentation. This, however, is not the final justification status of the given issue. The arrival of further new agents, who may join from outside asynchronously, may change the result. This phenomenon is evidence of the non-monotonicity of argumentation.
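The non-monotonic behaviour described above can be reproduced with a toy model in which the set of justified arguments is recomputed each time an agent joins. The `Agora` class, its method names and the argument labels (`hawaii`, `no_budget`, `bonus`) are hypothetical and only illustrate the idea; the status computation follows the least-fixpoint characterization of Definition 14.

```python
class Agora:
    """Toy argument field: arguments accumulate as agents join."""

    def __init__(self):
        self.args = set()  # argument identifiers
        self.d = set()     # pairs (x, y): x defeats y
        self.su = set()    # pairs (x, y): x strictly undercuts y

    def join(self, new_args, new_d=(), new_su=()):
        """An agent joins with its arguments; return the new justified set."""
        self.args |= set(new_args)
        self.d |= set(new_d)
        self.su |= set(new_su)
        return self.justified()

    def justified(self):
        """Least fixpoint of the acceptability function (Definition 14)."""
        S = set()
        while True:
            nxt = {
                a
                for a in self.args
                if all(
                    any((c, b) in self.su for c in S)
                    for b in self.args
                    if (b, a) in self.d
                )
            }
            if nxt == S:
                return S
            S = nxt
```

In this toy run, `hawaii` is justified when the first agent is alone, loses that status when a second agent brings a defeating argument `no_budget`, and regains it once a third agent contributes `bonus`, which strictly undercuts `no_budget`.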

5 LESSONS LEARNED FROM THE USES OF PIRIKA

We have attempted various experimental uses of PIRIKA on iPad over the Internet. For now, its effectiveness can be assessed only through such empirical uses, since we have no theoretical communication model for PIRIKA. The same applies to other communication tools such as Twitter, Line and email, which began as the brainchildren of engineers. Someday, however, psychologists or sociologists may be able to analyze and explain what those communication modes and styles imply for people and society.

Here we summarize the advantages identified at this stage, which participants confirmed in experimental and daily uses:

• Asynchrony in argumentation: This was our primary goal, asynchrony like that of communication by email. It was found potentially useful since it allows agents and people to make arguments whenever they wish, but still in a logically disciplined manner. Its actual usefulness for users remains to be verified through real or simulated ongoing arguments.

• Logical and critical thinking: Argumentation starting from grounds increases persuasive and critical power toward other parties. Put differently, asynchronous argumentation tends to foster an attitude of thinking more deeply at all times, even about little things.

• Visualization of argument structure: We can notice which part of the argumentative dialogue is weak or strong, since PIRIKA visualizes the resulting dialogue structures. We can therefore put forward alternative arguments in no time, taking advantage of this feature of asynchronous argumentation.

6 CONCLUSIONS AND FUTURE WORK

In this paper, we have proposed asynchronous argumentation as a new way of communicating with a pervasive personal tool, the iPad. We have also tried it out, and it was well received by the users who participated in experimental uses in daily life.

Somewhat philosophically speaking, asynchronous argumentation may equally be described as asymptotic or incremental argumentation, since agents can move closer to truth or justification each time an agent puts forward an argument. So the philosophy of asynchronous argumentation might be said to be a sort of gradualism or incrementalism. On the other hand, asynchronous argumentation obviously practices so-called non-monotonicity in its pragmatic expansion of argumentation. We would also say that it implicitly includes the law of the negation of the negation in Engels' dialectics (Sawamura et al., 2000).

The next step is to port PIRIKA on iPad to mobile phones like iPhone, a more pervasive personal tool. It is expected that such an attempt will open up a new horizon for more deliberate and logical human communication through computational argumentation research, as well as for social network services in the future.

The open source software and the video clip of PIRIKA on iPad are available at URL http://www.cs.ie.niigata-u.ac.jp/Paper/Research/aappct/visit(X).m4v, PIRIKA-ios.zip. PIRIKA will also become available from the Apple store for free.

REFERENCES

Dung, P. (1995). On the acceptability of arguments and its fundamental role in nonmonotonic reasoning, logic programming and n-person games. Artificial Intelligence, 77:321–357.

Kifer, M. and Subrahmanian, V. S. (1992). Theory of generalized annotated logic programming and its applications. J. of Logic Programming, 12:335–397.

Oomidou, Y., Sawamura, H., Hagiwara, T., and Riche, J. (2013). Non-standard uses of PIRIKA: Pilot of the right knowledge and argument. Volume 8291 of Lecture Notes in Computer Science, pages 229–244. Springer.

Prakken, H. and Sartor, G. (1997). Argument-based extended logic programming with defeasible priorities. J. of Applied Non-Classical Logics, 7(1):25–75.

Rahwan, I. and Simari, G. R. E. (2009). Argumentation in Artificial Intelligence. Springer.

Sawamura, H., Umeda, Y., and Meyer, R. K. (2000). Computational dialectics for argument-based agent systems. In 4th International Conference on Multi-Agent Systems (ICMAS 2000), pages 271–278. IEEE Computer Society.

Takahashi, T. and Sawamura, H. (2004). A logic of multiple-valued argumentation. In Proceedings of the Third International Joint Conference on Autonomous Agents and Multi Agent Systems (AAMAS'2004), pages 800–807. ACM.

Tannai, S., Ohta, S., Hagiwara, T., Sawamura, H., and Riche, J. (2013). The state of the art in the development of a versatile argumentation system based on the logic of multiple-valued argumentation. In Proc. of International Conference on Agents and Artificial Intelligence (ICAART2013), pages 217–224. INSTICC.
