The Application of Mobile Devices for the Recognition of Malignant Melanoma

Dmitriy Dubovitskiy¹, Vladimir Devyatkov² and Glenn Richer³

¹Oxford Recognition Ltd, Cambridge, U.K.

²Bauman Moscow State Technical University, Moscow, Russia

³Partner, Rising Curve LLP, Cambridge, U.K.

Keywords:

Skin Cancer, Mobile Device, Pattern Analysis, Decision Making, Object Recognition, Image Morphology, Machine Vision, Computational Geometry.

Abstract:

Robotic systems and autonomous decision-making systems are increasingly becoming a significant part of our everyday routines. Object recognition is an area of computer science in which automated algorithms work behind a graphical user interface, or a similar vehicle, for interaction with users or some other feature of the external world. From a user perspective, this interaction with the underlying algorithm may not be immediately apparent. This paper outlines a particular form of image interpretation via mobile devices as a method of skin cancer screening. The use of mobile hardware resources is intrinsically interconnected with the decision-making engine built into the processing system. A challenging fundamental problem of computational geometry is to offer a combined software-hardware solution for image recognition in a complex environment where not all aspects of that environment can be fully captured for use within the algorithm. The unique combination of hardware-software interaction described in this paper brings image processing in such an environment to the point where accurate and stable operation is possible, offering a higher level of flexibility and automation. The fuzzy logic classification method uses a set of features that include fractal parameters derived from standard Fractal Theory. The automated learning system is helping to develop the system into one capable of near-autonomous operation. The methods discussed potentially have a wide range of applications in 'machine vision'. However, in this publication we focus on the development and implementation of a skin cancer screening system that can be used by non-experts, so that in cases where cancer is suspected a patient can immediately be referred to an appropriate specialist.

1 INTRODUCTION

The wide-scale availability of mobile devices offers the public a range of hardware with built-in digital cameras, with an associated increase in the potential for digital image processing. Fast-growing CCD/CMOS image sensor development is capable of generating ever larger amounts of data for processing. The newer internet connections for such devices, with 3G and 4G data transfer rates, can deliver an image to a powerful image processing station in an acceptable period of time. Storage facilities have also developed to extremely large capacities, to the extent that a human is unable to process these volumes of data by manual or visual methods. Given this situation, technological developments in industry and science will increasingly have to rely on stable and robust robotic tools to interpret the acquired data. This applies particularly to medical diagnostics, although the application of automated approaches in this field still presents a number of challenges. Innovations in automatic image recognition will increasingly help to meet these challenges in the future.

Increased storage capacity, improved data transfer rates and faster processing now enable the development of image recognition tools for small hand-held devices. A mobile device's camera and navigational human interface create a relationship between the software and hardware built into the device; there is then a further relationship with the image processing server, which assists in evaluating the complex mathematical equations that necessarily underpin computerised image processing.

The particular concern of this paper is the use of such devices for the recognition of malignant melanoma associated with abnormal moles. A key challenge is to overcome the limited ability of computerised image processing techniques to replicate the visual techniques that a human specialist uses when making similar assessments. Consequently, computerised image recognition models have to produce a level of accuracy of assessment and diagnosis comparable to that achieved by specialists, while using approaches and algorithms that are fundamentally different from the process of human interpretation. A key factor is the need for segmentation, that is, the process of dividing a given image into a number of segments, each of which contributes towards a meaningful analysis. An initial task is to determine the boundaries of the captured image so that the analysis is applied only where appropriate. The ability to compensate for variations in features, lighting conditions and the nature of the image itself requires an altogether more complex approach.

Dubovitskiy D., Devyatkov V. and Richer G. The Application of Mobile Devices for the Recognition of Malignant Melanoma.

DOI: 10.5220/0004803701400146

In Proceedings of the International Conference on Biomedical Electronics and Devices (BIODEVICES-2014), pages 140-146

ISBN: 978-989-758-013-0

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

These challenges can be addressed in a number of ways. For example, considering colours and patterns within the image recognition algorithm contributes towards the definition of boundaries and segments, including the essential external boundary of the image. Much of this process is concerned with the identification of edges of one sort or another (Abdou and Pratt, 1979). Indeed, this identification is a prerequisite for image recognition and a fundamental step towards ensuring stability and robustness in decision making. Establishing the relevant segments of the image depends on two essential features of the image: firstly, those areas that can be considered similar to each other; and secondly, the identification of discontinuity. The task of the image recognition process is precisely to make the distinction between these features.

The algorithm used in this application includes a number of innovations. It does not depend on identifying first- and second-order gradients in the conventional manner, nor does it use thresholds for binarisation. Rather, self-organising fuzzy sets are used to optimise the Knowledge Data Base (KDB) for the application. The system includes features based on the textural properties of an image, defined in terms of fractal geometric parameters including the Fractal Dimension (FD) and Local Texture Detectors (LTD); such texture measures are an important theme in medical image analysis. However, in this paper we focus on one particular application, namely skin cancer diagnosis for screening patients through a mobile device.

2 SKIN CANCER FEATURE DETECTION AND CLASSIFICATION

Colour image processing is becoming increasingly important in object analysis and pattern recognition. There are a number of algorithms for understanding two- and three-dimensional objects in a digital image. The colour content of an image is very important for reliable automatic segmentation, object detection, recognition and classification, and it contributes significantly to the image processing operations required and the object recognition methodologies applied (Freeman, 1988). Colour processing and colour interpretation are critical to the diagnosis of many medical conditions and to the interpretation of the information content of an image by both human and machine; see, for example, (Davies, 1997), (Louis and Galbiati, 1990) and (Snyder and Qi, 2004).

A typical colour image consists of mixed RGB signals. A grey-tone image appears as a normal black-and-white photograph; on closer inspection, however, it can be seen to be composed of individual picture cells, or pixels. In fact, a digital image is a two-dimensional array of pixels indexed by $[x, y]$. Suppose we already have an image of an object that is described by the function $f(x, y)$ and has a set of features $S = \{s_1, s_2, \ldots, s_n\}$.

The key task is to examine a sample and to establish how 'close' it is to the reference image, which requires a function that measures the degree of proximity. All recognition is a process of comparing features against some pre-established template, a process that has to operate within certain conditions and tolerances. We may consider four stages in this process: (i) image acquisition and filtering, to remove noise (although even at this stage a proper understanding of what is noise and what may be pertinent information is essential); (ii) accurate location of the object through edge detection; (iii) measurement of the parameters of the object; and (iv) estimation of the class of the object. Various aspects of these stages are considered below, with a focus on those features of design and implementation that are most advantageous for developing skin cancer screening applications.
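One way to make a 'degree of proximity' within tolerances concrete is a tolerance-scaled distance mapped onto the interval (0, 1]. The sketch below is a hypothetical illustration rather than the authors' function: `proximity` and `within_tolerance` are invented names, and the Gaussian-style mapping exp(-d²/2) is one assumed choice among many.

```python
import math
from typing import Sequence


def proximity(sample: Sequence[float], reference: Sequence[float],
              tolerances: Sequence[float]) -> float:
    """Closeness in (0, 1]: 1.0 for an exact match, smaller as features drift.

    Each difference is scaled by its tolerance, so a one-tolerance deviation
    in a single feature gives exp(-0.5), roughly 0.61.
    """
    if not len(sample) == len(reference) == len(tolerances):
        raise ValueError("feature vectors and tolerances must have equal length")
    if any(t <= 0 for t in tolerances):
        raise ValueError("tolerances must be positive")
    # Squared Euclidean distance with each feature scaled by its tolerance.
    d2 = sum(((s - r) / t) ** 2 for s, r, t in zip(sample, reference, tolerances))
    return math.exp(-0.5 * d2)


def within_tolerance(sample: Sequence[float], reference: Sequence[float],
                     tolerances: Sequence[float]) -> bool:
    """True when every feature lies within its tolerance of the reference."""
    return all(abs(s - r) <= t for s, r, t in zip(sample, reference, tolerances))


reference = [1.8, 0.40]  # hypothetical features, e.g. a fractal dimension and a colour measure
print(proximity([1.8, 0.40], reference, [0.1, 0.05]))          # 1.0
print(within_tolerance([1.95, 0.42], reference, [0.1, 0.05]))  # False
```

Scoring and hard tolerance checks are kept separate so that a classifier can use the graded score while a quality gate uses the strict test.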

The acquired image has to be suitable for integration within the application. In the case of mobile devices, the camera is intrinsically bound up with the operating system of the device. Images obtained with a typical camera of this type are relatively noise-free and are digitised by the mobile device's standard CCD/CMOS sensor. Nevertheless, capturing good quality images with consistent brightness and contrast remains critical. The most important aspect is compatibility with the sample images used. The system discussed in this paper is based on an object detection technique that includes a novel mobile device segmentation method, which must be applied at the time the picture is taken. This includes those features associated with an object for which fractal models are well suited (Dubovitskiy and Blackledge, 2012), (Dubovitskiy and Blackledge, 2008), (Dubovitskiy and Blackledge, 2009). The system provides an output (i.e. a decision) using a knowledge database, which generates the result: the diagnosis. New 'expert data' from the application field is used to build the knowledge database through an automated self-learning technique; the conventional supervised training model for objects is well known (Zadeh, 1975).

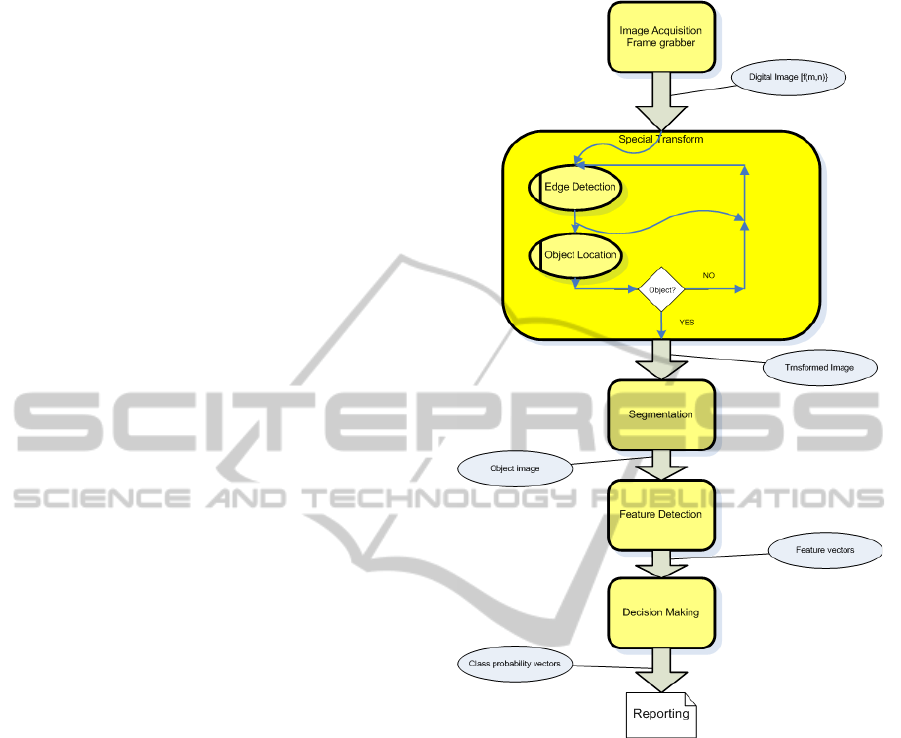

The recognition process is illustrated in Figure 1,

a process that includes the following steps:

1. Image Acquisition and Filtering.

A mobile device capture of a physical object is

digitally imaged and the data transferred to mem-

ory, e.g. using current image acquisition hard-

ware available on a mobile device. The image is

filtered to reduce noise and to remove unneces-

sary features such as light flecks. The most im-

portant of these is the Preliminary image Guide-

line Function(PGF). The PGF works recursively

to reach a stable object fixation. As soon as an

object or mole in our application is detected the

system transmits it to the server.

2. Special Transform: Edge Detection.

The digital image function f

m,n

is transformed

into

˜

f

m,n

to identify regions of interest and pro-

vide an input dataset for segmentation and feature

detection operations (Nalwa and Binford, 1986).

This transformation avoids the use of conven-

tional edge detection filters which have proved to

be highly unreliable in the application under con-

sideration here.

3. Segmentation.

The image { f

m,n

} is segmented into individ-

ual objects { f

1

m,n

}, { f

2

m,n

}, . . . to perform a sepa-

rate analysis of each region. This step includes

such operations as auto thresholding, morpholog-

ical analysis, edge or contour tracing (Dubovit-

skiy and Blackledge, 2009) and the convex hull

method (Dubovitskiy and McBride, 2013).

4. Feature Detection.

Feature vectors {x

1

k

}, {x

2

k

}, . . . are computed from

the object images { f

1

m,n

}, { f

2

m,n

}, . . . and corre-

sponding transformed images {

˜

f

1

m,n

}, {

˜

f

2

m,n

}, . . . .

The features are numeric parameters that charac-

terise the object, including its texture. The com-

puted vectors consist of Euclidean and fractal ge-

Figure 1: Recognition process.

ometric parameters together with one and two di-

mensional statistical measures. The border of

the object is described in one-dimensional terms

while the surface on and around the object is de-

lineated in two dimensions.

5. Decision Making.
   This involves assigning a probability to each of a predefined set of classes (Vadiee, 1993). Class probability vectors are estimated by applying fuzzy logic (Mamdani, 1976) and probability theory to the object feature vectors $\{x^{1}_{k}\}, \{x^{2}_{k}\}, \ldots$. Establishing a quantitative relationship between features and class probabilities,

   $$\{p_j\} \leftrightarrow \{x_k\},$$

   is a critical aspect of this process and one that has previously caused problems; here $\leftrightarrow$ indicates a transformation from class probability to feature vector space. A 'decision' is the estimated class of the object coupled with a probabilistic determination of accuracy (Sanchez, 1976).
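Step 3 lists automatic thresholding and region separation among the segmentation operations. The sketch below illustrates only that generic step, not the authors' own transform: it uses Otsu's method as the assumed automatic threshold and 4-connected flood fill to split the image into separate objects $f^1, f^2, \ldots$. It assumes an 8-bit grey-scale image given as nested lists, with objects darker than the surrounding skin; all names are hypothetical.

```python
from collections import deque


def otsu_threshold(pixels, levels=256):
    """Return the grey level t maximising Otsu's between-class variance.

    Pixels <= t form one class and pixels > t the other.
    """
    hist = [0] * levels
    for value in pixels:
        hist[value] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_low, sum_low = 0, 0
    for t in range(levels):
        w_low += hist[t]
        sum_low += t * hist[t]
        w_high = total - w_low
        if w_low == 0:
            continue
        if w_high == 0:
            break
        mean_low = sum_low / w_low
        mean_high = (sum_all - sum_low) / w_high
        between = w_low * w_high * (mean_low - mean_high) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def label_dark_objects(image, threshold):
    """Split pixels <= threshold into 4-connected objects.

    Returns a list of objects, each a list of (row, col) coordinates.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] > threshold:
                continue
            # Breadth-first flood fill from an unvisited dark pixel.
            region, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] <= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            objects.append(region)
    return objects


image = [
    [200, 200, 200, 200, 200],
    [200,  40,  50, 200, 200],
    [200,  45, 200, 200,  30],
    [200, 200, 200, 200,  35],
]
t = otsu_threshold([p for row in image for p in row])
objects = label_dark_objects(image, t)
print(t, [len(o) for o in objects])  # 50 [3, 2]
```

Each returned region can then be passed separately to feature detection, which corresponds to the separate analysis of each object described in step 3.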

Associations between the features and object pattern attributes form an automatic learning context for the KDB (Dubovitskiy and Blackledge, 2009), (Dubovitskiy and Blackledge, 2008), whereby the representation of the object is assembled into the feature vector (Grimson, 1990), (Ripley, 1996). The KDB depends on establishing equivalence relations that express how well evaluated objects fit a class with independent semantic units. The pattern recognition task is accomplished by assigning a particular class to an object. In the next section we consider the main focus of this paper, the use of mobile devices, and the loop back to the automated learning algorithm.

2.1 Mobile Device Picture Acquisition

The graphical user interface of the mobile device provides the PGF. The user is taken through an automated procedure that evaluates lighting conditions, focus, shadow and other relevant features. If some of the conditions are not suitable for image recognition, the system provides guidelines to assist with the process. The guidelines may even ask the user to move to another location where the light is sufficient to support the decision-making process. The screen message will indicate that the "best result could be achieved at that point". Using the built-in compass and gravitational sensors of mobile devices helps to produce good recognition results. The gravitational sensor, in combination with the object's position, can guide the user to capture the most suitable image perspective. The compass helps to select the best lighting condition so as to avoid the "point of evaporation" and shadows.
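The gravitational sensor can be turned into a simple perspective check. This sketch assumes a gravity vector reported in device coordinates with the z-axis perpendicular to the screen, and a skin area that faces upwards (for example a forearm resting on a table), so that holding the device flat gives a perpendicular view. The 10 degree limit, the message text and the function names are hypothetical.

```python
import math


def tilt_degrees(gx: float, gy: float, gz: float) -> float:
    """Angle in degrees between the device z-axis and the gravity vector.

    0 means the device is lying flat, so a rear camera points straight down.
    """
    norm = math.sqrt(gx * gx + gy * gy + gz * gz)
    if norm == 0.0:
        raise ValueError("gravity vector must be non-zero")
    # Clamp against rounding slightly above 1 before taking acos.
    return math.degrees(math.acos(min(1.0, abs(gz) / norm)))


def orientation_hint(gx: float, gy: float, gz: float, max_tilt_deg: float = 10.0):
    """Return None if the tilt is acceptable, otherwise a hint for the user.

    max_tilt_deg and the message wording are hypothetical choices.
    """
    tilt = tilt_degrees(gx, gy, gz)
    if tilt <= max_tilt_deg:
        return None
    # The larger in-plane gravity component shows which way the device leans.
    axis = "left/right" if abs(gx) >= abs(gy) else "forward/back"
    return f"Device tilted {tilt:.0f} degrees {axis}: hold it parallel to the skin"


print(orientation_hint(0.0, 0.0, 9.81))     # None: lying flat
print(orientation_hint(0.0, 4.905, 8.496))  # hint: about 30 degrees forward/back
```

In practice the target orientation would depend on where the mole is on the body, which is why the text combines the sensor reading with the object's position.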

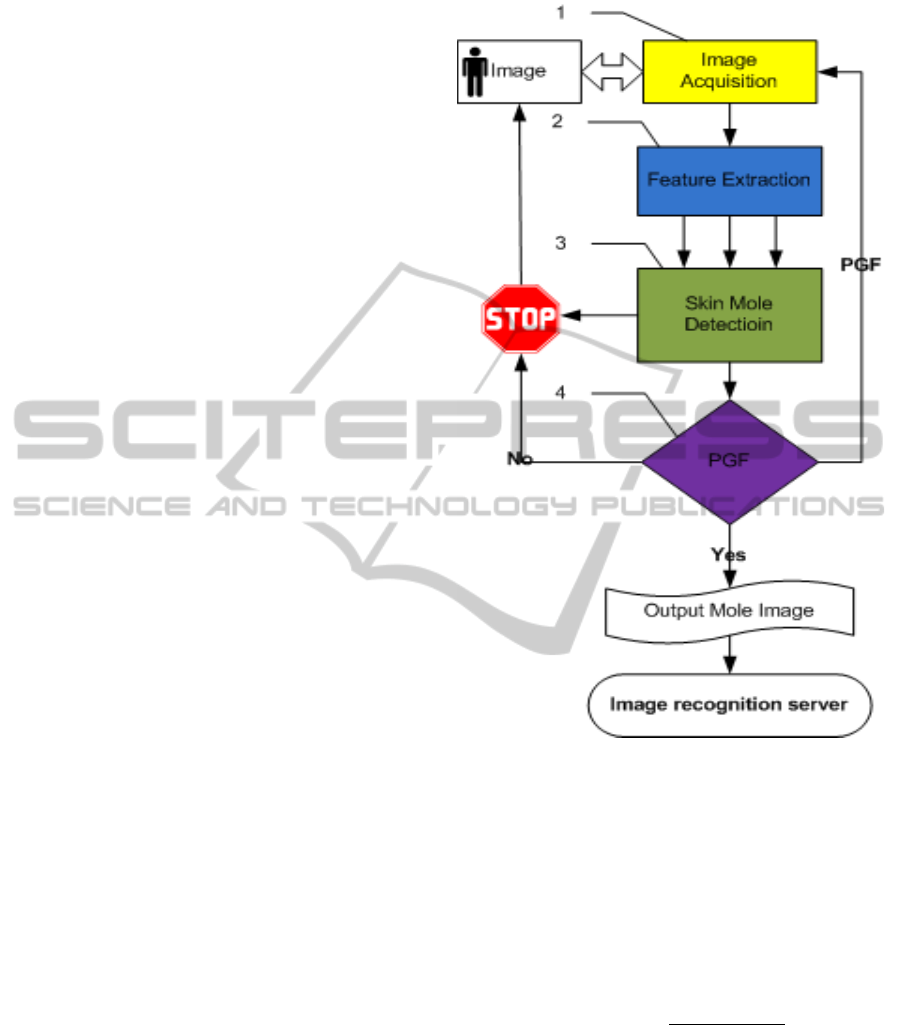

The block scheme diagram for the mobile device

segmentation for the mole and skin location is present

in Figure 2 and includes the following steps:

The Figure 2 represents a mobile device section

of the Malignant Melanoma recognition process. The

mobile device component has a loopback via PGF and

it would not accept an image with low quality, itself

applying a quality control system to the initial stages

of the process of skin cancer recognition. The main

steps of the mobile system are present in four sec-

tions of figure 2. The object acquisition is shown in

section 1 2 and it is a standard mobile device cam-

era control module. The output of section 1 is an

image function f (x, y) with set of exposure parame-

ters E = {e

1

, e

2

, ..., e

n

}. Mobile device pre-processing

software is present in section 2 and has set of parame-

ters the value between 0....1. The set of mobile device

Figure 2: Mobile device mole - skin searching process.

features is P = {p

1

, p

2

, ..., p

n

}. Each parameter rep-

resents the current environment for image capture, for

example Exposure time, Aperture, Focus section by X

and by Y , Light uniformity by X and by Y , Skin dis-

tribution, Mole positions, Mole distribution. Then the

vector P = {p

1

, p

2

, ..., p

n

} is input to section 3. Sec-

tion 3 is responsible for making decisions about the

image environment. The decision making function is:

$$S(d_{\text{exposure}}) = \frac{2\pi\,(P_{n+1} - P_n)}{D_{n+1} - D_n}$$

The output $S$ represents the environmental condition. If $S$ is closer to 0, the user is advised to point the device at the mole; if $S$ is closer to 1, the system routes via the PGF. The actual value of $S$ is device dependent and can be set during the software installation procedure. $D$ is the scalar distance for each parameter $P$ at iteration $n$. Section 4 provides guidelines for the user. The PGF is a probabilistic function of a vector of parameter values $S = \{d_i\}$. The PGF transforms this vector $S = \{d_i\}$ using the membership function $m_j(x)$ from fuzzy logic theory, and its output is the vector $G$ given by the following expression:

$$G_{i,j} = 1 - \sum_{k=1}^{N} \frac{p_{i,j}(S^{k}_{i,j}) - p_{i,j}(S_{i,j})}{p_{i,j}(x^{k}_{i,j})}.$$

The matrix of weight factors $p_{i,j}$ is formed when the software is installed on the mobile device. In other words, it is a touch pad calibration that assigns a weight coefficient to the $i$th parameter and $j$th class.
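The two expressions in this section, the exposure decision function $S$ and the guideline output $G_{i,j}$, can be transcribed directly. In this sketch the names `exposure_decision`, `route` and `guideline_output` are hypothetical, the 0.5 routing threshold is an assumed default (the text leaves the value device dependent), and the linear weight function in the example is an arbitrary stand-in for the calibrated $p_{i,j}$.

```python
import math
from typing import Callable, Sequence


def exposure_decision(p_prev: float, p_next: float,
                      d_prev: float, d_next: float) -> float:
    """S = 2*pi*(P_{n+1} - P_n) / (D_{n+1} - D_n), as written in the text."""
    if d_next == d_prev:
        raise ValueError("D_{n+1} must differ from D_n")
    return 2.0 * math.pi * (p_next - p_prev) / (d_next - d_prev)


def route(s: float, threshold: float = 0.5) -> str:
    """Closer to 0: ask the user to point at the mole; closer to 1: use the PGF.

    The 0.5 threshold is a hypothetical default; the text says the value is
    device dependent and set at installation.
    """
    return "point_to_mole" if s < threshold else "pgf"


def guideline_output(p_ij: Callable[[float], float],
                     s_k: Sequence[float], s_now: float,
                     x_k: Sequence[float]) -> float:
    """G_{i,j} = 1 - sum_k [p(S^k) - p(S)] / p(x^k) for one (i, j) pair."""
    if len(s_k) != len(x_k):
        raise ValueError("S^k and x^k must both have N entries")
    total = 0.0
    for s, x in zip(s_k, x_k):
        denominator = p_ij(x)
        if denominator == 0.0:
            raise ValueError("p_ij(x^k) must be non-zero")
        total += (p_ij(s) - p_ij(s_now)) / denominator
    return 1.0 - total


def linear_weight(v: float) -> float:
    """Hypothetical weight function, used only to exercise the formula."""
    return 2.0 * v


print(route(exposure_decision(0.0, 1.0, 0.0, 2.0 * math.pi)))  # S = 1 -> pgf
print(guideline_output(linear_weight, [1.0], 0.5, [1.0]))      # 1 - (2 - 1)/2 = 0.5
```

When every reading $S^k$ equals the current value, the sum vanishes and $G_{i,j} = 1$, which matches the convergence target described next.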

The result of the weight matching procedure is that all parameters $G_{i,j}$ have been computed. Each $G_{i,j}$ then has a semantic table of guide rules for the touch pad and the user. After several iterations the PGF parameters $G_{i,j}$ converge towards 1. At that point the image looks as shown in Figure 4 and is ready to be transferred to the main decision-making server.
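The iterate-until-convergence behaviour of the PGF can be sketched as a loop that keeps prompting the user until every $G_{i,j}$ is close to 1. The callbacks `evaluate` and `show_hint`, the 0.05 tolerance and the iteration limit are assumptions for illustration; on a device they would wrap the camera, the calculation of $G_{i,j}$ and the guide-rule table.

```python
from typing import Callable, Dict, Tuple

Key = Tuple[int, int]  # (parameter index i, class index j)


def run_pgf(evaluate: Callable[[], Dict[Key, float]],
            show_hint: Callable[[Key, float], None],
            tolerance: float = 0.05,
            max_iterations: int = 20) -> bool:
    """Iterate until every G_{i,j} is within `tolerance` of 1.

    `evaluate` stands in for capturing a frame and computing the current
    G values; `show_hint` stands in for displaying the guide rule attached
    to a lagging G_{i,j}. Returns True when the image is ready to be sent
    to the server, False if the iteration budget runs out.
    """
    for _ in range(max_iterations):
        g_values = evaluate()
        lagging = {key: g for key, g in g_values.items()
                   if abs(1.0 - g) > tolerance}
        if not lagging:
            return True
        for key, g in lagging.items():
            show_hint(key, g)
    return False


# Simulated readings: parameter 0 improves over three frames.
readings = iter([{(0, 0): 0.5}, {(0, 0): 0.8}, {(0, 0): 0.99}])
hints = []
ready = run_pgf(lambda: next(readings), lambda key, g: hints.append((key, g)))
print(ready, hints)
```

The iteration limit keeps the interface responsive when conditions cannot be improved, in which case the user could be sent to another location as the guidelines describe.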

The novel PGF procedure saves a considerable amount of computation at the usual image pre-processing stage required for an accurate decision-making function. The usual problems of low brightness, low contrast, image graininess and geometrical distortion no longer inhibit efficient edge detection and texture computation.

2.2 Fuzzy Logic Automated Learning

Object recognition is another difficult part of digital image processing. Each object has to be represented in computer memory by all its possible characteristic features, yet as compactly as possible, in order to allow real-time processing. The description of an object's area is based on its textural features, and fractal structure is particularly suitable for describing such objects from the natural world. Some Euclidean and morphological measures are also captured as properties of the object. Every object has a list of parameters; this list has been considered in previous publications (Dubovitskiy and Blackledge, 2012), (Dubovitskiy and Blackledge, 2008), (Dubovitskiy and Blackledge, 2009) and can be varied depending on the application. Using an excessive number of parameters does not impair the accuracy of recognition but can slow down the whole process. Here we present a novel approach to organising the membership functions through a special automated learning procedure. Using a fuzzy logic engine with automated membership function formation has several advantages. Its one disadvantage is that the set of parameters for an object has to be extended with extra characteristic values. However, modern CPUs and FPGAs provide ample computational resources, so this is not a significant problem, and the result is a user-friendly system. Superfluous features that do not characterise an object are made insignificant by setting their coefficients in the membership function to 0 during learning. The whole process can be divided into three stages: decision making, learning and correction.
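The paper does not detail its automated learning procedure. As a generic illustration of forming membership functions from labelled data and zeroing uninformative features, the sketch below builds a peak-normalised histogram per class and feature, then gives weight 0 to features whose class histograms overlap heavily. The histogram approach, the overlap measure, the 0.2 cut-off and all names are assumptions, not the authors' method.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def learn_membership(samples: Sequence[Sequence[float]], labels: Sequence[str],
                     bins: int = 10) -> Dict[str, List[List[float]]]:
    """Per-class, per-feature histogram membership functions.

    Feature values are assumed to be pre-scaled to [0, 1]. Each histogram
    is divided by its peak so the membership function has a maximum of 1.
    """
    n_features = len(samples[0])
    counts = defaultdict(lambda: [[0] * bins for _ in range(n_features)])
    for x, label in zip(samples, labels):
        for i, value in enumerate(x):
            b = min(bins - 1, max(0, int(value * bins)))
            counts[label][i][b] += 1
    membership = {}
    for label, histograms in counts.items():
        membership[label] = []
        for h in histograms:
            peak = max(h)
            membership[label].append([c / peak if peak else 0.0 for c in h])
    return membership


def feature_weights(membership: Dict[str, List[List[float]]],
                    min_separation: float = 0.2) -> List[float]:
    """Weight 0 for features whose class membership functions overlap too much.

    separation = 1 - (sum of bin-wise minima) / (sum of bin-wise maxima).
    The min_separation cut-off is a hypothetical choice.
    """
    classes = list(membership)
    weights = []
    for i in range(len(membership[classes[0]])):
        columns = list(zip(*(membership[c][i] for c in classes)))
        overlap = sum(min(col) for col in columns)
        union = sum(max(col) for col in columns)
        separation = 1.0 - overlap / union if union else 0.0
        weights.append(1.0 if separation >= min_separation else 0.0)
    return weights


samples = [[0.10, 0.5], [0.15, 0.5], [0.90, 0.5], [0.85, 0.5]]
labels = ["benign", "benign", "malignant", "malignant"]
print(feature_weights(learn_membership(samples, labels)))  # [1.0, 0.0]
```

Here the second feature takes the same value for both classes, so it carries no class information and its weight is set to 0, mirroring the idea of suppressing superfluous features during learning.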

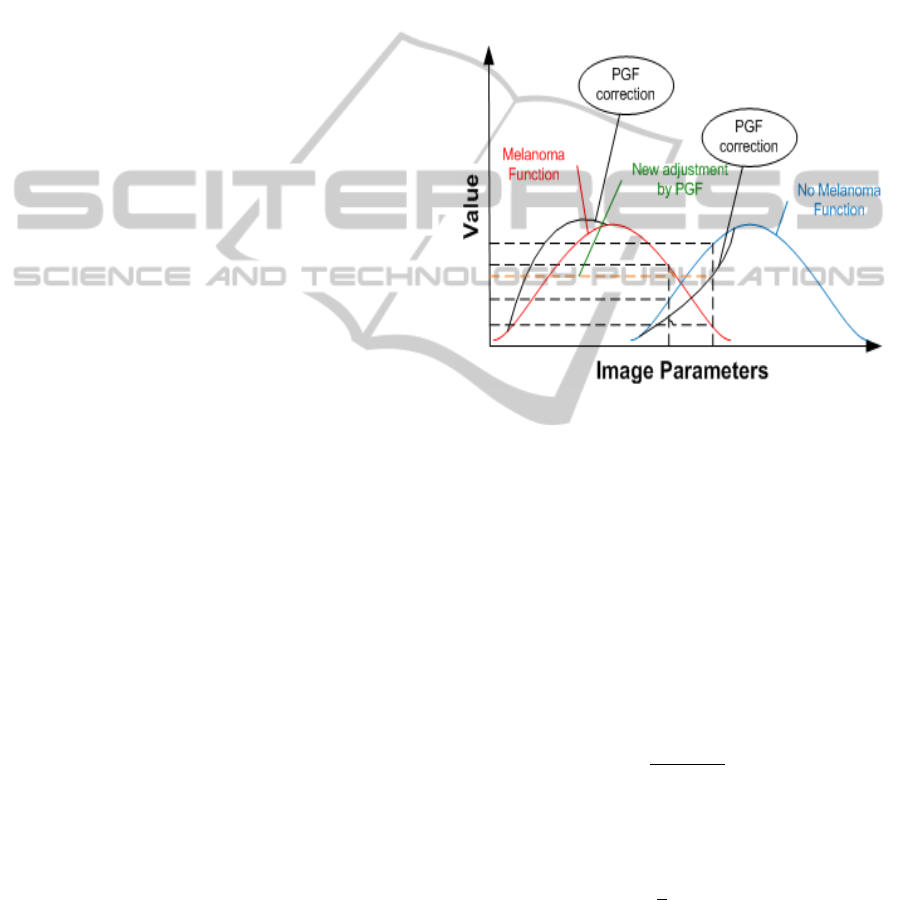

The Fuzzy Logic membership function is present

in Figure 3:

Figure 3: Fuzzy logic automated leaning.

Information about the object classes is stored in the KDB, which can be saved as a file (*.kdb) and loaded into the system depending on the application. The object information in the KDB is represented through probability coefficients for each class. The class probability is the vector $p = \{p_j\}$. It is estimated from the object feature vector $x = \{x_i\}$ and the membership functions $m_j(x)$ defined in the knowledge database, shown as the blue and green lines in Figure 3. If $m_j(x)$ is a membership function, then the probability for the $j$th class and $i$th feature is given by

$$p_j(x_i) = \max\left[\frac{\sigma_j}{x_i - x_{j,i}} \cdot m_j(x_{j,i})\right]$$

where $\sigma_j$ is the distribution density of the values $x_j$ at the point $x_i$ of the membership function. The next step is to compute the mean class probability, given by

$$\langle p \rangle = \frac{1}{J} \sum_{j=1}^{J} w_j\, p_j,$$

where $J$ is the number of classes and $w_j$ is the weight coefficient matrix. This value is used to select the class associated with

$$p(j) = \min\left[(p_j \cdot w_j - \langle p \rangle) \geq 0\right]$$

providing a result for a decision associated with the $j$th class. The weight coefficient matrix is adjusted during the learning stage of the algorithm.
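The mean class probability and the selection rule can be sketched as follows, taking the per-class probabilities $p_j$ as already computed (how the per-feature values $p_j(x_i)$ are combined into $p_j$ is not stated, so that step is left out). `select_class` is a literal reading of $p(j) = \min[(p_j w_j - \langle p \rangle) \geq 0]$; with three or more classes this rule can favour a class other than the one with the largest weighted probability, so treat it as an illustration of the formula rather than a recommended classifier. The function names are hypothetical.

```python
from typing import Mapping


def mean_class_probability(p: Mapping[str, float], w: Mapping[str, float]) -> float:
    """<p> = (1/J) * sum_j w_j * p_j over the J classes."""
    return sum(w[j] * p[j] for j in p) / len(p)


def select_class(p: Mapping[str, float], w: Mapping[str, float]) -> str:
    """Literal reading of p(j) = min[(p_j * w_j - <p>) >= 0].

    Among classes whose weighted probability is not below the mean, return
    the one with the smallest non-negative margin.
    """
    mean = mean_class_probability(p, w)
    margins = {j: p[j] * w[j] - mean for j in p}
    candidates = {j: m for j, m in margins.items() if m >= 0.0}
    if not candidates:
        # Floating-point rounding can push <p> just above every w_j * p_j
        # when they are all equal; fall back to the largest weighted value.
        return max(margins, key=margins.get)
    return min(candidates, key=candidates.get)


p = {"benign": 0.7, "malignant": 0.3}
w = {"benign": 1.0, "malignant": 1.0}
print(select_class(p, w))  # benign
```

Setting a class weight $w_j$ to 0 removes that class from contention, which is how the learning stage can reshape decisions without touching the stored membership functions.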

The automatic learning procedure uses the PGF settings from the mobile device, which provide information about image quality. The $G_{i,j}$ vector is used to compute a correction value for each recognition run. The correction value coefficient is stored separately and updates the main KDB once a day. A stored correction value is not necessarily used for membership function formation: the decision to apply automated correction is made by assessing the density distribution of the membership function for each class of objects. The use of density propagation as part of a particular class function is described in Section 2.3, and a low density is used as the indicator for correction. The actual value depends on the mobile device hardware.

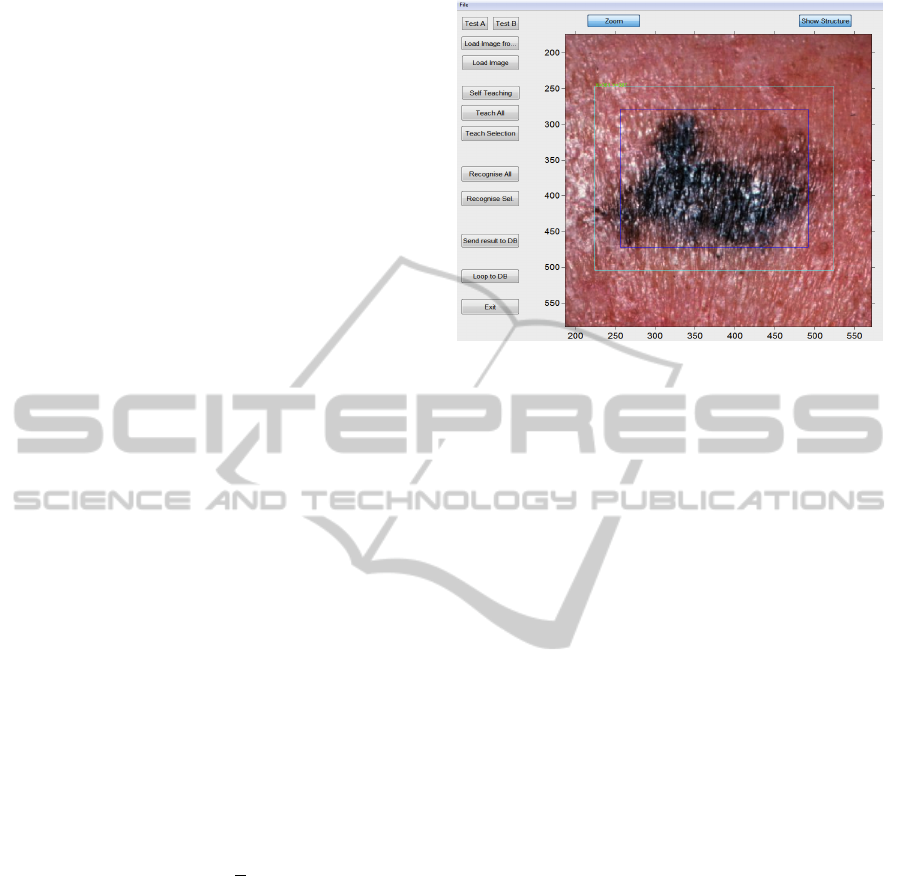

2.3 Image Recognition Server

The result of the image recognition process is presented via the GUI of the image processing server, shown in Figure 4. The device returns the diagnosis and graphical comments to the user. Depending on the country of application, the system can provide further recommendations, including contact details for a local GP or other health care provider as necessary.

The decision criterion method considered here is a weighted-density minimax expression. Decision accuracy is estimated using the density function

$$d_i = \left| x_{\sigma_{\max}} - x_i \right|^{3} + \left[ \sigma_{\max}(x_{\sigma_{\max}}) - p_j(x_i) \right]^{3}$$

with an accuracy determined by

$$P = \frac{x_j p_j - x_j p_j^{2}}{\pi \sum_{i=1}^{N} d_i}.$$

The system has been tested on 654 available images of skin conditions and produced an overall accuracy of approximately 74 percent correct responses. A response is counted as correct when the recognition system assigns the same class as a dermatologist (or a human visual estimate), with a precision within 10 percent of the dermatologist's estimate. Comparison against biopsy results does not produce better performance, since the system is in effect a copy of the dermatologist. There are some cases in which even a dermatologist would be unable to reach a definite decision without special equipment. With operational use of the automated correction function, the system can deliver its highest accuracy. The best results would be achieved if several dermatologists could agree on the estimated class separation and precision for the initial setup. However, owing to ethical confidentiality requirements and nondisclosure agreements, we are unable to publish the testing data. In difficult cases an expert can interact with the image recognition robot to adjust the settings for a particular mobile device.

Figure 4: Image recognition server.

In practice it is difficult to obtain exactly the same skin evaluation parameters from several doctors. The problem lies with precision: how certain each doctor is of the diagnosis. Mixing knowledge databases from different sources introduces inconsistency into automated decision making, so for this paper we have evaluated the system against a single doctor.
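The correctness criterion used in the evaluation can be stated precisely in code. This sketch assumes precision is expressed as a fraction between 0 and 1 and reads '10 percent deviation' as an absolute difference of 0.10; the function names and the example data are invented and do not come from the study.

```python
from typing import Sequence, Tuple

Assessment = Tuple[str, float]  # (class label, precision as a fraction in [0, 1])


def is_correct(system: Assessment, reference: Assessment,
               max_deviation: float = 0.10) -> bool:
    """Same class, and precision within max_deviation of the reference."""
    same_class = system[0] == reference[0]
    return same_class and abs(system[1] - reference[1]) <= max_deviation


def accuracy(pairs: Sequence[Tuple[Assessment, Assessment]]) -> float:
    """Fraction of (system, dermatologist) pairs counted as correct."""
    if not pairs:
        raise ValueError("no assessments to score")
    return sum(is_correct(s, r) for s, r in pairs) / len(pairs)


pairs = [
    (("melanoma", 0.80), ("melanoma", 0.75)),  # correct: same class, 5 points apart
    (("benign", 0.90), ("benign", 0.60)),      # wrong: precision 30 points apart
    (("benign", 0.70), ("melanoma", 0.70)),    # wrong: different class
    (("benign", 0.95), ("benign", 0.90)),      # correct
]
print(accuracy(pairs))  # 0.5
```

Scoring against a single reference keeps the criterion well defined; with several doctors, each pair would need an agreed reference class and precision first.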

3 CONCLUSIONS

The focus of this paper is malignant melanoma screening and, in particular, the development of a methodology for implementing such screening applications on mobile devices. The approach we have described involves two essential elements: (i) partial analysis of an image in terms of its fractal structure on the mobile device CPU, to obtain the best exposure conditions for the available camera; and (ii) an automated learning system using a fuzzy logic engine to classify an object on the basis of its Euclidean and fractal geometric properties, achieved via access to the image processing server. The combination of these two aspects defines a unique approach to image processing and image analysis. The contribution of mobile device parameters to improving vision systems such as the one considered here remains to be fully understood and will form part of future investigations.

As things currently stand, the approach to image analysis described above is not in itself sufficient as a system of image recognition and classification. However, increasing processing rates, the growing availability of relatively cheap digital storage and the capacity to transfer data to and from an image processing server at high speed all contribute towards a significant future potential for the use of hand-held devices in diagnosing skin cancer associated with moles. Future work will include improvements to the automation of the fuzzy logic engine used with current hardware and mobile devices. As reliability and validation are extended, there is considerable potential for expanding the approach we have described within medical screening and to other areas of application beyond the medical sphere.

ACKNOWLEDGEMENTS

The authors are grateful for the consultancy provided by Dr Daniela Piras.

REFERENCES

Abdou, I. and Pratt, W. K. (1979). Quantitative design and evaluation of enhancement/thresholding edge detectors. Proc. IEEE, 1(67):753–763.

Davies, E. R. (1997). Machine Vision: Theory, Algorithms, Practicalities. Academic Press, London.

Dubovitskiy, D. A. and Blackledge, J. M. (2008). Surface inspection using a computer vision system that includes fractal analysis. ISAST Transaction on Electronics and Signal Processing, 2(3):76–89.

Dubovitskiy, D. A. and Blackledge, J. M. (2009). Texture classification using fractal geometry for the diagnosis of skin cancers. EG UK Theory and Practice of Computer Graphics 2009, pages 41–48.

Dubovitskiy, D. A. and Blackledge, J. M. (2012). Targeting cell nuclei for the automation of Raman spectroscopy in cytology. British Patent No. GB1217633.5.

Dubovitskiy, D. A. and McBride, J. (2013). New 'spider' convex hull algorithm for an unknown polygon in object recognition. BIODEVICES 2013: Proceedings of the International Conference on Biomedical Electronics and Devices, page 311.

Freeman, H. (1988). Machine Vision. Algorithms, Architectures, and Systems. Academic Press, London.

Grimson, W. E. L. (1990). Object Recognition by Computers: The Role of Geometric Constraints. MIT Press.

Louis, J. and Galbiati, J. (1990). Machine Vision and Digital Image Processing Fundamentals. State University of New York, New York.

Mamdani, E. H. (1976). Advances in linguistic synthesis of fuzzy controllers. J. Man Mach., 8:669–678.

Nalwa, V. S. and Binford, T. O. (1986). On detecting edges. IEEE Trans. Pattern Analysis and Machine Intelligence, 1(PAMI-8):699–714.

Ripley, B. D. (1996). Pattern Recognition and Neural Networks. Academic Press, Oxford.

Sanchez, E. (1976). Resolution of composite fuzzy relation equations. Inf. Control, 30:38–48.

Snyder, W. E. and Qi, H. (2004). Machine Vision. Cambridge University Press, England.

Vadiee, N. (1993). Fuzzy rule based expert system-I. Prentice Hall, Englewood.

Zadeh, L. A. (1975). Fuzzy sets and their applications to cognitive and decision processes. Academic Press, New York.
