Gesture Vocabulary for Natural Interaction with Virtual Museums

Case Study: A Process Created and Tested Within a Bilingual Deaf Children School

Lucineide Rodrigues da Silva, Laura Sánchez Garcia and Luciano Silva

Department of Computer Science, Federal University of Paraná, Curitiba, Paraná, Brazil

Keywords: Gesture Vocabulary, Virtual Museum, Natural Interaction.

Abstract: The research described in this paper aimed at creating a gesture interface for a 3D virtual museum developed by an Image Processing research group. Faced with the challenge of using sound methodologies in order to create a genuinely natural interface, the group joined a Computer Human Interaction group that has worked for seven years focusing on the social inclusion and development of Deaf Communities. In this context, the research investigated the state of the art of Natural Interaction and gesture vocabulary creation in the related literature and placed the case study at a bilingual school (Brazilian Sign Language and written Portuguese) for deaf children. The paper reports the results of some especially relevant works from the literature and describes the process of developing the vocabulary together with its validation. The research makes two main contributions. The first is the addition of a preceding stage to a well-known process from the literature that has the set of expected functions as its starting point: the observation of potential users interacting with the physical scenario that motivates the innovative virtual uses, in order to investigate the actions and gestures used in the physical environment. The second is the exemplification of a more active way of bringing potential users into the stage of defining the right gesture vocabulary, which helps more than just interacting with users to get their opinion (asking them to match a feature to the gesture they would like to use to employ it, or demonstrating a gesture and seeing what feature users would expect that gesture to trigger). Finally, the paper establishes the limitations of the results and proposes future research.

1 INTRODUCTION

The great challenge in gestural interaction is the

creation of the gesture vocabulary to be used in the

application (Nielsen et al., 2004). In many cases, this

task is done arbitrarily, considering only technical

aspects for the recognition of the gestures, which is

not adequate from the point of view of the user, who

first has to learn a vocabulary and only then get to

use it.

The Natural User Interface - NUI (Wigdor and

Wixon, 2011) is a concept built in recent decades

that has gained great momentum due to new

technologies which have begun to allow interaction

through gestures, touch and voice. NUIs aim to

make the interaction between the user and the

system easier and more intuitive, and can take

advantage of various devices to reach this objective.

The research described in this paper aimed at creating a gesture interface for a 3D virtual museum project of the IMAGO research group. Faced with the challenge of using sound methodologies in order to create a genuinely natural interface, the group joined a Computer Human Interaction group that has worked for seven years focusing on the social inclusion and development of Deaf Communities. In this context, the research investigated the state of the art of Natural Interaction in the literature and placed the case study at a bilingual school (Brazilian Sign Language and written Portuguese) for deaf children.

Deaf people relate to the world in a gestural-

visual manner, and most are not fully proficient in

written Portuguese; this makes them one of the main

beneficiaries of gestural interaction, along with

illiterate people.

A virtual exhibition has several benefits. First, it

benefits the exhibitor, who keeps the objects safe

during the exhibitions, even when transport is

necessary. It also makes it possible to disseminate

the project in various physical locations. It also

allows the user to see works from different countries

and cultures in an easier and often more enabling way. Additionally, the visitors are presented with a new form of interacting with the exhibited objects that they cannot experience in the real world.

Rodrigues da Silva L., Garcia L. and Silva L.
Gesture Vocabulary for Natural Interaction with Virtual Museums - Case Study: A Process Created and Tested Within a Bilingual Deaf Children School.
DOI: 10.5220/0004886700050013
In Proceedings of the 16th International Conference on Enterprise Information Systems (ICEIS-2014), pages 5-13
ISBN: 978-989-758-029-1
Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

While developing a gestural interface, the

objective should not be to make it generic, since

gestures are not universally interpreted, but rather to

develop an interface and interaction environment specifically for a given application (Nielsen et al.,

2004). In order to reach this objective, this research

utilized a user-centered approach to define the

gesture vocabulary.

The present paper is subdivided as follows: A

bibliographic review is presented in Section 2. The

proposed methodology is presented in Section 3.

Next, Section 4 discusses the planning and execution

of the experiments. Section 5 presents the

conclusion and future work.

2 LITERATURE REVIEW

According to Nielsen (1993), “usability” refers to how easy the interface seems to the user to use and is associated with five quality components:

learning curve, efficiency in executing tasks,

memorization, few mistakes, and satisfaction.

Norman and Nielsen (2010) think that gestural

interfaces led to a step backwards in usability, and

affirm that gestural systems need to follow basic

rules of interaction design to be properly called

“natural”.

Conducting tests with users is the most basic and

useful method to assess a system’s usability

(Nielsen, 1993). The test consists of locating

representative users, asking them to execute

representative tasks with the prototype, and noticing

what they manage to execute and what difficulties

they encounter.

Defining which gestures will be used, in other

words, composing the gesture vocabulary of a

system, is considered to be one of the most difficult

stages in the development of a gestural interaction

system (Nielsen et al., 2004).

Grandhi et al. (2011) affirm that very often the

gesture vocabulary is defined arbitrarily, considering

the ease of implementation, which forces the user to

first learn the vocabulary in order to utilize the

system afterwards.

In Boulos et al. (2011) a gesture-based

navigation system was developed for Google Earth.

The gestures it used were also defined arbitrarily,

taking the existing functionalities of the application

into account to create the gesture vocabulary.

On the way to a better product, Chino et al.

(2013) developed a georeferenced gestural

interaction application that uses gestures selected

from the addressed functionality but still without the

users’ participation, and they realized that the quality

of their prototype had been partially compromised

by that methodological decision.

Nielsen et al. (2004) propose a user-based

approach in four steps to define the gesture

vocabulary. The first step consists of identifying the

application’s functions, using existing applications

as parameters.

The second step refers to finding the gestures

that represent the functions identified in the first

step. With this objective, experiments with users are

conducted in scenarios that implement the functions

necessary for the application. In these scenarios, the

users are told about the functions they must request

from the test operator through gestures. All of the

experiments in this step must be recorded.

In the third step, the videos are evaluated in order

to extract the gestures used for the interaction.

The authors emphasize that the selection of

gestures must not be restricted to those identified in

the test, but rather that they should only be an

inspiration for defining the gesture vocabulary.

The last step consists of testing the resulting

gesture vocabulary, which could even lead to

changes in the vocabulary. This stage is composed

of three tests. In this step, a score should be

calculated and attributed to each test. At the end of

the tests, the lower the score, the better. Test 1

evaluates the semantic interpretation of the gestures.

It is necessary to give a list of the functions and to

present the gestures, asking the user to identify the

corresponding functions. The score is equal to the

number of mistakes divided by the number of

gestures in the list.

Test 2 evaluates the memorization of the

gestures. The user is shown the test vocabulary in

order for him or her to try it and understand it. The

names of the functions are presented in their logical

order of application. The user must execute the

gesture related to the function shown. Upon each

mistake, the presentation must be restarted,

presenting the vocabulary to the user at every

attempt. The scoring corresponds to the number of

times the presentation had to be restarted.

Test 3 is a subjective evaluation of the

ergonomics of the gestures. The gesture vocabulary

is presented and the user is asked to execute the

sequence x times.

Between the execution of each gesture, the following list is used to score each one: 1) Easy. 2) Slightly tiring. 3) Tiring. 4) Very troublesome. 5) Impossible.
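
The scoring rules of the three tests can be sketched in Python as follows. This is only an illustrative sketch, not code from Nielsen et al. (2004): the function names and the sample data are hypothetical, and aggregating the Test 3 ratings by their mean is an assumption, since the text above only gives the five-point scale. In all three tests, a lower score is better.

```python
from statistics import mean


def semantic_score(answers, correct):
    """Test 1: number of mistakes divided by the number of gestures."""
    mistakes = sum(1 for gesture, function in correct.items()
                   if answers.get(gesture) != function)
    return mistakes / len(correct)


def memorization_score(restarts):
    """Test 2: number of times the presentation had to be restarted."""
    return restarts


def ergonomics_score(ratings):
    """Test 3: ratings from 1 (easy) to 5 (impossible).

    Taking the mean of the ratings is an assumption of this sketch.
    """
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be between 1 and 5")
    return mean(ratings)


# Hypothetical data: the function each gesture is meant to trigger,
# and the function one user assigned to each gesture in Test 1.
correct = {"g1": "pick up", "g2": "let go", "g3": "turn", "g4": "move closer"}
answers = {"g1": "pick up", "g2": "pick up", "g3": "turn", "g4": "move closer"}

print(semantic_score(answers, correct))  # one mistake out of four gestures
```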

Saffer (2009) states that “simple, basic tasks

should have equally simple, basic gestures to trigger

or complete them” and, also, that good interactive

gestures are simple and elegant.

Saffer (2009) also says that since human beings are physical creatures, they prefer to interact with physical things. In this sense, the author

characterizes interactive gestures as the style that

allows for a natural interaction with digital objects in

a physical way. He comments about the product

designer Naoto Fukasawa’s observation that the best

designs are those that “dissolve in behaviour”,

adding that the promise of interactive gestures is in

the fact that we will empower “the gestures that we

already do and give them more further influence and

meaning”. He concludes, then, that “the most natural

designs are those that match the behaviour of the

system to the gestures humans might already do to

enable that behaviour”.

In order to involve the user in the process of

determining the appropriate gestures for the system,

Saffer proposes doing it along with the users, either

by asking them to match a feature to the gesture they

would like to use to employ it or demonstrating a

gesture and seeing what feature users would expect

it to trigger.

Based on the procedure of Nielsen et al. (2004), we proposed a methodology to define the gesture vocabulary for a system for visiting a virtual museum. In this process the authors share Saffer’s characterization of natural gestural interfaces and propose an alternative way to bring users into the vocabulary creation process. The experiment was conducted with the participation of a deaf community. This methodology is presented in the next section.

3 METHODOLOGY FOR THE CONSTRUCTION OF THE GESTURE VOCABULARY

The analysis of similar work showed that, in general,

the methodologies for creating a gesture vocabulary

begin after defining the actions which the

application will use. Consequently, the process is

based only on defining the gestures according to the

desired actions. In our case, we began the process of creating the vocabulary one step earlier, starting

with the following research question: “What actions

would the users execute in the physical environment

if they were allowed to handle objects in order to

learn about them?”

Considering the context of a museum

environment, as in the case of this project, we

concluded that, since in the real world we can only observe the object from a distance, we could not

foresee the ways in which the users of the virtual

museum would take advantage of the possibilities

opened up by virtual innovative interaction.

Therefore, in this research we attempted to propose a

methodology that allows for the creation of a gesture

vocabulary even when there are no actions defined

for the application.

A three-dimensional visualization system makes

many contributions in this direction. Mendes (2010)

emphasizes that in this way, the objects can “be

accessed and explored virtually, with a high level of

detail, reducing the risk of irreversible damages due

to transport or physical handling”.

Besides the advantages obtained from the point

of view of the digitized object made available for

visualization, there are also other benefits to a user

who interacts with this system, such as more

interaction with the exhibited objects, or creating

greater interest among the public, which now has

another incentive to visit the exhibition.

Van Beurden et al. (2011) conducted research

comparing the use of gestural interaction with the

use of physical interaction devices. The result

demonstrated that, for the users, gestural interaction

is easier, more enjoyable, and natural, allowing for

intuitive and more engaging learning.

This analogy, similar to Saffer’s conception of

gestural interaction, together with the space to

provide innovative experiences in visiting virtual

museums when compared with the limited possibilities of

common visits to physical museums in general, led

us to explore the physical scenario as the one to

inform the designer of the capabilities needed.

The proposed methodology consists of three

stages with the objective of solving a given task

regarding the museums: 1) Identification of the set of actions from the analysis of videos of users’ behavior in the real scenario; 2) Creation of the

gesture vocabulary from the data collected in step 1

modulated by theoretical bases of Computer Vision

and Computer Human Interaction; 3) Validation of

the appropriateness of the vocabulary created.

The first stage defines the actions and gestures

that represent such actions from user observations in

a real environment. According to the objective of the

application to be developed, a scenario should be

prepared where the user can interact, in a natural and

unlimited manner, with physical objects similar to

those used in the application. This scenario should

be planned in such a way that the user is led to

execute a task that is sufficient to identify the necessary set of actions. The task must allow for exploration of all the possible actions envisaged for the

application. All user interactions in this scenario

should be recorded using images, sounds and/or

other relevant means according to the context of the

experiment.

The analysis of the data produced in the real

scenario will help identify the actions and the ways

in which they were executed by the user. This

analysis is critical for defining the actions and

gestures. It is necessary to evaluate, from the data, which actions are relevant to reaching the goal and, for such actions, which gestures were used most often and are therefore more likely to be recognized. After this

stage, the gesture vocabulary produced must be

validated.
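
The selection described above can be illustrated with a small Python sketch that tallies observations coded from the videos. It is a minimal illustration under stated assumptions, not the procedure actually used in the study: the tuple layout, the function name `most_used_gestures`, the `min_participants` threshold, and the sample data are all hypothetical.

```python
from collections import Counter, defaultdict


def most_used_gestures(observations, min_participants=2):
    """Pick the most frequent gesture for each recurrent action.

    observations: iterable of (participant, action, gesture) tuples
    coded from the recordings. An action is kept only if at least
    `min_participants` distinct participants performed it (the
    threshold is an assumption of this sketch).
    """
    participants = defaultdict(set)
    gestures = defaultdict(Counter)
    for participant, action, gesture in observations:
        participants[action].add(participant)
        gestures[action][gesture] += 1
    return {
        action: counts.most_common(1)[0][0]
        for action, counts in gestures.items()
        if len(participants[action]) >= min_participants
    }


# Hypothetical coded observations.
observations = [
    ("P1", "turn", "two hands"),
    ("P2", "turn", "two hands"),
    ("P3", "turn", "one hand"),
    ("P1", "pick up", "two hands"),
    ("P2", "pick up", "two hands"),
    ("P3", "throw", "one hand"),  # isolated action, seen once
]

vocabulary = most_used_gestures(observations)
print(vocabulary)
```

In this example the isolated action "throw" is dropped because only one participant performed it, while "turn" and "pick up" keep their most frequent gesture.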

The validation of the gesture vocabulary must

also take place with user participation. The steps

that make up this stage are:

1. Validation of the defined set of actions: whether it covers the actions needed to use the application, together with an evaluation of the need to insert or modify any functions and/or gestures in the application;

2. Validation of the set of gestures regarding the adequacy of their use in fulfilling the actions of the application;

3. Evaluation of user satisfaction while using the application;

4. Evaluation of the sufficiency of the information about the actions/gestures supplied by the application.

This validation stage can be carried out using

different approaches. We propose the development

of an application prototype so the user can interact

and ultimately evaluate it. This prototype does not

necessarily need to have all its gesture recognition

capabilities functional. It is possible to simulate

gesture recognition, or even use images to represent

gestures.

Additionally, we propose the use of a

questionnaire for evaluation on the part of the user,

and another to be filled out by the person in charge

of the experiment after observing users’ interaction

with the application interface.

To validate the proposed methodology, a case

study of the virtual museum was conducted with the

cooperation of a deaf community, and is described in

the following section.

4 DESCRIPTION OF THE CASE STUDY

This section presents the planning and the execution

of the physical scenario experiment, the analysis of

the information registered in that scenario that

allowed the creation of the gesture vocabulary for

the application, and the planning and the execution

of the experiment with the prototype for its final

validation.

4.1 Experiment of Interaction of Deaf Children with Physical Objects in the Real World - Planning and Execution

While planning our experiment, we came across the

following question: What type of object can,

simultaneously, motivate the participant to carry out

the proposed task and represent 3D objects common

in virtual museums?

While considering the type of object to be used,

context was identified as absolutely essential when

handling objects of personal use (and/or which are

part of people’s routine). This dependence on

context refers, on one hand, to the shape of the object, especially in the case of objects supported artificially in the museum, and, on the other hand, to the usefulness of the object, since it determines the focus of observation. The dimensions and weight may also influence the manner of handling.

In response to this issue, the following objects

were defined (see Figure 1 from left to right): a vase

made of paper, a box with decorative candles, a

sculpture with a support, a decorative ball and a

ceramic vase with a wavy shape. The sculpture and

the vases were chosen because they are objects

frequently found in museums and exhibitions.

The box and the decorative ball were selected to

verify the behavior of participants upon viewing

small objects with no clear use. It was also possible

to verify the difference, if any, in viewing a larger

object, such as the paper vase, and smaller ones,

such as the decorative ball. According to the objects

chosen, it was also possible to determine if it was

more natural for the participant to use one or two

hands during the visualization, as well as whether

the object’s size would influence this choice.

Figure 1: Objects used during the experiments.

As soon as the objects were defined, it was

necessary to determine a task for the participant that

demanded viewing of the object from all

perspectives. The potential users were asked to describe the objects precisely and allowed to manipulate them any way they wanted to.

The entire experiment was recorded for

subsequent analysis. The setting was defined to use

three cameras, which would provide top, lateral and

frontal views of all the gestures executed by each

participant.

The experiments were conducted with

students and teachers from the Prof. Ilza de Souza

Santos Municipal School of Special Education for

the Deaf. The experiment involved 15 people

divided into four groups. The groups were classified

according to each participant’s degree of proficiency

in Brazilian Sign Language (Língua Brasileira de

Sinais, LIBRAS).

Throughout the text, participants are identified by the group to which they belonged:

Group A: students proficient in LIBRAS (five participants);

Group B: students not proficient in LIBRAS (five participants);

Group C: students not proficient in LIBRAS and with motor impairment (two participants);

Group D: teachers proficient in LIBRAS (three participants).

Each participant, with the exception of those in

Group C, was individually taken to a room where

there was a table supporting the objects to be

viewed. For the participants in Group C, the

experiment had to be assembled in a separate

location due to the need for space for the

wheelchairs to maneuver, which prevented this group from accessing the original experiment room.

Immediately at the beginning of the experiment,

it was perceived that the planned instructions were

somewhat unnecessary, since most participants

entered the room and began to interact with the

objects spontaneously.

Participants freely interacted with the objects.

The participants observed, commented on the

objects, and questioned the interpreter about them.

The importance of communication was noted

during the experiment. While participants from

Groups A and D talked about the characteristics of

each object during the interaction, and gave their

opinions about them, participants from Group B

barely exchanged words. In this group, there were some cases where there was a clear lack of understanding regarding what needed to be done, as well as a certain reluctance to view and manipulate each object. In Group C, which had younger participants, the enthusiasm for participating was clear. However, due to motor difficulties, not all the signs could be interpreted, which hindered communication.

The recorded videos were analyzed for the

segmentation of the gestures and actions performed

by the participants. The following section presents

the analysis of the videos from the experiment in

detail.

4.2 Theoretically Supported Analysis of the Videos for the Creation of the Gesture Vocabulary

The videos of the experiment were personally

analyzed by the first author of this paper. Each

action performed by the participants was identified

and classified, except for the actions used for

communication between the participants and the

interpreter. Each user action was evaluated

according to the objective and the way in which each

gesture was performed, and a list with the

actions/objectives of all the participants was

produced.

From this list, the most recurrent actions and the

objectives that were applicable to the museum

context were selected to be used in the application.

The recurrent actions that emerged were the following, organized by the authors into semantic categories:

Pick up the object (from the table) – “Pegar” (Figure 2);

Let the object go (from the hands to the table) – “Soltar”;

Move the object closer (to the user) – “Aproximar”;

Move the object away (from the user) – “Afastar”;

Turn the object (in several directions, along different axes) – “Girar”;

Observe the object (corresponding to no action at all).

There were some isolated actions that were

dependent on specific characteristics of the object,

for example, throwing the decorative ball upwards.

In these cases, the actions were context-dependent and were disregarded for the application, as the objective was to identify gestures applicable to the majority of the types of objects present in museums.

From the characteristics of the gestures it was

possible to address the question raised during the

experiment planning. As for the existence of

differences between the visualization of large or

small objects, the experiment showed that bigger objects led users to automatically use two hands

to pick them up or let them go. To turn and view

occluded parts of the object, even for smaller

objects, the students preferred to use both hands.

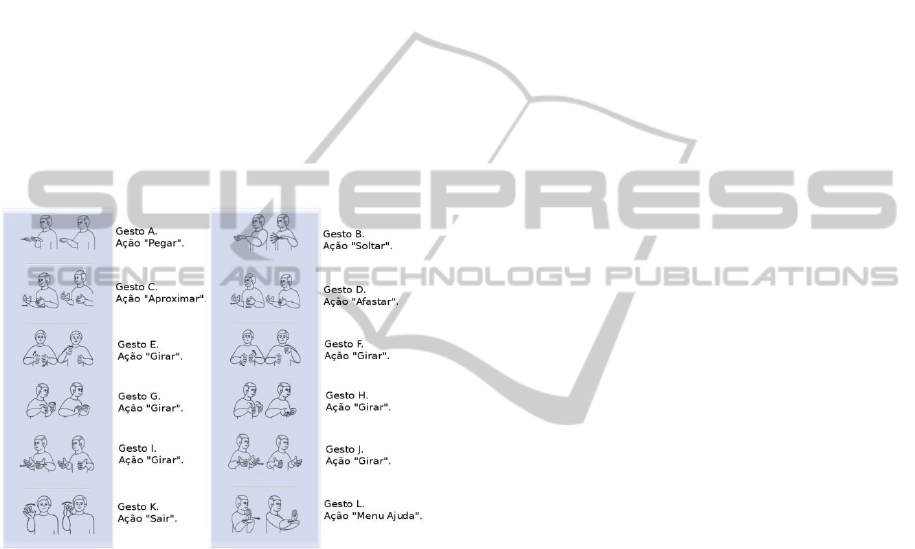

Figure 2: List of gestures and respective actions used in

the interface.

Although this stage was clearly based on data analysis, established knowledge from Image Processing and from Computer Human Interaction was also applied, respectively for segmentation and semantic completeness. In this way, the x, y and z axes were adopted for object movements to cover the views of the objects within 3D space, determining six basic turning actions. Also, gestures and their corresponding actions were organized in subsets (by opposite pairs and by semantics in general) to make their perception and interpretation clearer, and the actions of exit (“Sair”) and help menu (“Menu Ajuda”) were added.
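
As an illustration of how three axes yield six basic turning actions arranged in opposite pairs, the following Python sketch maps each action to an axis and a direction and applies a fixed rotation step to a point. It is a sketch under stated assumptions: the action names, the 15-degree default step, and the right-handed rotation convention are hypothetical choices, not details taken from the viewer used in the study.

```python
import math

# Six turning actions: one positive and one negative rotation per axis.
# The names are hypothetical; each axis contributes an opposite pair.
TURN_ACTIONS = {
    "turn_x_pos": ("x", +1), "turn_x_neg": ("x", -1),
    "turn_y_pos": ("y", +1), "turn_y_neg": ("y", -1),
    "turn_z_pos": ("z", +1), "turn_z_neg": ("z", -1),
}


def rotate(point, action, step_degrees=15.0):
    """Rotate a 3D point by one step of the given turning action."""
    axis, sign = TURN_ACTIONS[action]
    angle = math.radians(sign * step_degrees)
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = point
    if axis == "x":
        return (x, y * c - z * s, y * s + z * c)
    if axis == "y":
        return (x * c + z * s, y, -x * s + z * c)
    return (x * c - y * s, x * s + y * c, z)


# Applying an action and then its opposite restores the original view.
p = rotate((1.0, 2.0, 3.0), "turn_y_pos")
q = rotate(p, "turn_y_neg")
```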

After defining the actions and gestures which

should compose our vocabulary, we went to the

validation stage.

4.3 Experiment for Validation of the Constructed Vocabulary - Planning and Execution

The validation must be carried out with the same

target audience and objective (task) defined in the

experiment with the physical objects whose handling

generated the vocabulary.

The viewer developed by Vrubel et al. (2009) for

the visualization of 3D models used in the

experiment was developed within a project called

3D Virtual Museum (IMAGO) which encompasses

the process of digital preservation from the

acquisition of object data to their availability on the

Internet (Mendes, 2010). The 3D objects used in our prototype also came from this project.

Buttons with images representing the gestures

were inserted in the viewer. The images were

created following the standards in Capovilla and

Raphael’s LIBRAS dictionary (2001). In this

lexicon, LIBRAS signs are represented by images

composed of two states and a symbol describing

some movement. The objective was for the

participants to press the buttons that represented the

gestures they wanted to use. The buttons used and

their respective actions are shown in Figure 2. The action “turning” was subdivided, due to the necessity of expressing the rotation of an object in 3D space. It is important to note that this would have been unnecessary if the prototype’s image interpretation had been available (i.e., if it had already been implemented).

Initially the participants received instructions

about the objective of the experiment. The

instructions were composed of a video, a textual

description, and an image for each gesture. These

instructions were available in the program’s “help”

menu and could be accessed by the participant as

many times as necessary.

The interface and interaction environment

developed for the viewer initially showed a screen

for the participants to choose which object they

wanted to view (see Figure 3). On this screen, all the available gestures were shown so as not to influence the participant’s selection. For clarification, the buttons on the right side of the screen in Figure 3 correspond exactly to the set of buttons shown in Figure 2.

After selecting the object to be viewed, a screen

showed the participants the buttons they could use to

see the object from all points of view (see Figure 4, whose buttons also correspond exactly to the ones shown in Figure 2).

The validation of the chosen gestures was carried out with the same target audience. This stage followed

the same execution parameters of the previous stage.

In this stage a group of five people was formed,

composed of three participants from Group A, one

from Group B, and one from Group D.

Figure 3: Snapshot of the interface for selecting the object.

Figure 4: Snapshot of the interface for manipulating the selected object.

The first point observed during the validation was how difficult it was to understand the task to be executed. Each participant was shown the

instructions present in the “help” menu with the

mediation of the interpreter, who conducted the

interpretation in real time. In many cases, the

participant only fully understood how to use the

program after interacting with it for a few moments.

After the interaction with the objects, a written

questionnaire was utilized to evaluate the gestures

and the selected actions.

All five participants were able to carry out the

required task and considered the available gestures

adequate for the action performed. However, four of

them had some difficulty in identifying the gestures

required to execute the task. In these cases, many of the difficulties were related to understanding the interaction environment, a difficulty external to what was being evaluated, i.e., one arising from an environment designed under the working hypothesis that the prototype could not be implemented in time. After some clicks they were

able to maintain interaction normally, which was

noted by the fact that all were able to view the

objects they wanted to.

To pick up an object on the screen, the

participant first had to select it and then click the

button associated with the gesture corresponding to “pick up”. This dynamic was a complicating factor

for three of the participants, and understanding it

took them some time. Some participants thought that

the gestures defined for the actions “pick up” and

“let go” were very similar, which ended up causing

confusion in the use of the corresponding buttons.

As for the actions selected for the application,

four of the five participants judged them to be

sufficient for the interaction. Four of the five

participants thought that the selected gestures were

easy to recognize. Many users associated the gestures selected for the interaction with their LIBRAS signs. This association occurs because many LIBRAS signs are iconic, especially those related to spatial actions. In the case of the action

“pick up”, the selected gesture corresponds to the

verb “to pick up” in LIBRAS.

The videos used in the “help” menu were

considered relevant by the five participants and they

all enjoyed participating in the experiment. When

asked if there was anything that could be improved

to enable deaf people to use virtual museums on the

Internet, their only point regarded the problem of the

similarity of the gestures corresponding to “picking

up” an object and “letting it go”. After a better

evaluation of these gestures, we noticed that even in

the real world these gestures are very similar, and

what usually differentiates them is the context -

whether the person has the object in hand or not.

This is a question which needs to be studied more

deeply for gestural interaction applications in virtual

museums.
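
One possible reading of this observation is that a single grasp-like gesture could be interpreted according to context. The Python sketch below illustrates that idea only; it is a hypothetical design, not something implemented or evaluated in this study, and the function name and state representation are assumptions.

```python
def interpret_grasp(holding_object):
    """Interpret one ambiguous grasp-like gesture using context.

    Returns a pair (action, new_holding_state): if the user is not
    holding an object, the gesture means "pick up"; otherwise it
    means "let go". This rule is an assumption of the sketch.
    """
    if holding_object:
        return "let go", False
    return "pick up", True


# The same gesture performed twice in a row yields opposite actions.
holding = False
first_action, holding = interpret_grasp(holding)
second_action, holding = interpret_grasp(holding)
```

Whether users would find such a context-dependent gesture natural remains part of the open question raised above.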

5 CONCLUSIONS

This paper presented a methodology for creating

gesture vocabularies for computational systems in

general. A case of a virtual museum was used to

validate the methodology with a focus on the deaf

community.

The tests demonstrated that the proposed method

was effective for creating the gesture vocabulary, as

proved by the validation experiment. The gestures

identified from the participant observation were

easily recognizable, understandable, and were

compatible with the actions performed, essential

prerequisites for a gestural system to be truly natural

for the user.

Readers should note that the number of users

involved does not allow the results to be generalized.

However, our aim was to obtain indications of the

difficulties of the process. For this kind of

experiment, Nielsen (2000) recommends testing with

5 users. He points out that a test with one user finds

approximately 30% of the problems and that each

additional test reveals fewer new problems and more

already known ones, so that 5 users account for about

85% of the problems and give the best cost/benefit

ratio.
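The diminishing returns described above follow the model used by Nielsen (2000), in which the proportion of problems found with n users is 1 - (1 - L)^n, where L is the proportion found by a single user. The sketch below is only an illustration: it assumes L = 0.31, the average value reported in that article (rounded to 30% in the text above), and the function name is ours.

```python
def proportion_found(n_users: int, single_user_rate: float = 0.31) -> float:
    """Expected share of usability problems found after testing n_users.

    Implements 1 - (1 - L)^n, with L = single_user_rate (assumed 0.31).
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    return 1.0 - (1.0 - single_user_rate) ** n_users


if __name__ == "__main__":
    # Print the curve for 1 to 8 users to show the diminishing returns.
    for n in range(1, 9):
        print(f"{n} users: {proportion_found(n):.0%} of problems found")
```

Under this assumption, five users give roughly 84% of the problems, in line with the figure of about 85% quoted above, and each further user adds progressively less.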

A graphic interface and interaction environment

was used for the representation of the gestures to

validate the vocabulary. According to the

experiment, this was a valid strategy for the

participant to view and handle the virtual object. All

five participants managed to carry out the

required task and thought that the available gestures

were adequate for the action performed.

The experiments showed that the activity is

easier to understand when the system is shown in

use. Therefore, instead of presenting text, images,

and video as help, it would be more appropriate to

produce a video showing the system in typical

interactions. After using the program for a while,

users adapted to the environment.

The main contributions of the present work are

the following:

a) regarding the representation of movements: the

extension of the graphical 2D language to represent

some movements not present in Brazilian Sign

Language (LIBRAS), although, as we argued

before, this would be of no use in “real virtual

museums” (where image interpretation

capabilities are implemented);

b) the extension of Nielsen et al.’s (2004) process with a

preliminary stage: the observation of potential users

interacting with the physical scenario that

motivates the innovative virtual uses. This stage

is especially critical when there is room for

innovation in the transposition of physical tasks

to virtual environments, as is the case with virtual

museums;

c) the exemplification of an alternative and more

active way of bringing potential users into the

stage of defining the right gesture vocabulary

suggested by Saffer (2009), through the planning

and execution of a physical scenario that provides

insights into the innovative virtual space when

compared to the real physical one.

The use of new gesture recognition technologies

such as the Kinect can make the experience of

visiting virtual museums more pleasant. We

propose applying this gesture vocabulary

with this device used to interpret the

gestures. This would avoid the problems

introduced in the experiments by the need to

use an intermediate 2D representation of the

3D movements.

One critical planned experiment involves deaf

and non-deaf users, in order to make a

comparative analysis of results. This will confirm or

refute the hypothesis that the results extend to

non-deaf users and, additionally, allow us to see

whether deaf culture, which sees the world through

a gestural-visual prism, brings any special feature

to our scenario.

Also as future work, we propose planning and

executing the experiments for creating and

validating the gesture vocabulary in a statistical

manner, exhaustively crossing the objects’ different

variables (height, weight,…) and taking a statistical

sample.

We also propose applying the methodology for

creating gesture vocabularies proposed in this paper

to other types of natural interaction applications, to

verify its degree of generality across application

domains and to identify whether the set of gestures

and actions proposed here can be seen as the “core”

set of gestures and actions for gestural interaction

environments in general.

REFERENCES

Beurden, M. V., IJsselsteijn, W., and de Kort, Y. 2011.

User experience of gesture-based interfaces: A

comparison with traditional interaction methods on

pragmatic and hedonic qualities. In the 9th

International Gesture Workshop: Gesture in

Embodied Communication and Human-Computer

Interaction, Athens, Greece.

Boulos, M. N. K., Blanchard, B. J., Walker, C., Montero,

J., Tripathy, A., and Gutierrez-Osuna, R. 2011. Web

GIS in practice X: a Microsoft Kinect natural user

interface for Google Earth navigation. International

Journal of Health Geographics, 10.

Capovilla, F. C. and Raphael, W. D. 2001. Dicionário

Enciclopédico Ilustrado Trilíngue da Língua de Sinais

Brasileira. EdUSP - Editora da Universidade de São

Paulo, 2nd edition.

Chino, D. Y. T., Romani, L. A. S., Avalhais, L. P. S.,

Oliveira, W. D., do Valle Gonçalves, R. R., Jr., C. T.,

and Traina, A. J. M. 2013. The nina framework - using

gesture to improve interaction and collaboration in

geographical information systems. In International

Conference on Enterprise Information Systems -

ICEIS, pages 58–66.

Grandhi, S. A., Joue, G., and Mittelberg, I. 2011.

Understanding naturalness and intuitiveness in gesture

production: insights for touchless gestural interfaces.

In Proceedings of the 2011 annual conference on

Human factors in computing systems, CHI, pages 821–

824, New York, NY, USA. ACM.

Imago, G. Museu virtual 3D: Preservação digital de

acervos culturais e naturais.

http://www.imago.ufpr.br/museu3d/.

Mendes, C. M. 2010. Visualização 3D interativa aplicada

à preservação digital de acervos naturais e culturais.

Master’s thesis, Universidade Federal do Paraná.

Nielsen, J. 1993. Usability Engineering. Morgan

Kaufmann, 1st edition.

Nielsen, J. 2000. “Test with 5 Users”, Alertbox.

http://www.useit.com/alertbox/20000319.html [Accessed

May 2003].

Nielsen, J. 2003. Introduction to usability.

http://www.useit.com/alertbox/20030825.html.

Nielsen, M., Störring, M., Moeslund, T. B., and Granum,

E. 2004. A procedure for developing intuitive and

ergonomic gesture interfaces for HCI. In Camurri, A.

and Volpe, G., editors, Gesture-Based Communication

in Human-Computer Interaction, volume 2915 of

Lecture Notes in Computer Science, chapter 38, pages

105–106. Springer Berlin / Heidelberg, Berlin,

Heidelberg.

Norman, D. A. and Nielsen, J. 2010. Gestural interfaces: a

step backward in usability. Interactions, 17(5):46–49.

Saffer, D. 2009. Designing Gestural Interfaces, O’Reilly

Media, Inc. Canada.

Vrubel, A., Bellon, O. R. P., and Silva, L. 2009. A 3D

reconstruction pipeline for digital preservation. IEEE

Conference on Computer Vision and Pattern

Recognition, 0:2687–2694.

Wigdor, D. and Wixon, D. 2011. Brave NUI World:

Designing Natural User Interfaces for Touch and

Gesture. Morgan Kaufmann, 1st edition.
