Generative Modeling of Itemset Sequences Derived from Real Databases

Rui Henriques¹ and Claudia Antunes²

¹ KDBIO, Inesc-ID, Instituto Superior Técnico, University of Lisbon, Lisbon, Portugal

² Dep. Comp. Science and Eng., Instituto Superior Técnico, University of Lisbon, Lisbon, Portugal

Keywords:

Hidden Markov Models, Itemset Sequences, Real-world Databases.

Abstract:

The problem of discovering temporal and attribute dependencies from multi-sets of events derived from real-

world databases can be mapped as a sequential pattern mining task. Although generative approaches can offer

a critical compact and probabilistic view of sequential patterns, existing contributions are only prepared to deal

with sequences with a fixed multivariate order. Thus, this work targets the task of modeling itemset sequences

under a Markov assumption. Experimental results hold evidence for the ability to model sequential patterns

with acceptable completeness and precision levels, and with superior efficiency for dense or large datasets.

We show that the proposed learning setting allows: i) compact representations; ii) the probabilistic decoding

of patterns; and iii) the inclusion of user-driven constraints through simple parameterizations.

1 INTRODUCTION

Recent work in the area of data mining reveals the im-

portance of defining learning methods to simultane-

ously mine temporal and cross-attribute dependencies

in real-world data (Henriques and Antunes, 2014).

For this purpose, multi-dimensional and relational

structures have been mapped as itemset sequences,

temporally ordered sets of itemsets. This makes the mining of itemset sequences applicable not only to transactional databases, as in market basket analysis, but also to multi-dimensional databases as observed in healthcare and business domains. However, the common option to explore itemset sequences, sequential pattern mining (SPM), has not been widely adopted due to its voluminous results and parameterization needs. Additionally, although generative approaches allow for effective pattern-centered analyses of multivariate sequences of fixed order, there are not yet experiments that show whether they can be extended to consider itemsets of varying length (cross-attribute occurrences) with acceptable performance. In this work we rely on parameterized hidden Markov models (HMMs) to deliver a compact and generative representation of sequential patterns that combine frequent co-occurrences (intra-transactional analysis) and precedences (inter-transactional analysis).

With the goal of overcoming the critical problems

of traditional SPM methods, some approaches rely on

compressed representations or define a deterministic

generator of sequential patterns (Mannila and Meek,

2000). However, they can still grow exponentially.

Additionally, these methods cannot disclose the like-

lihood of a pattern to be generated when assuming

underlying noise distributions. To tackle these drawbacks, formal languages and HMMs have been applied to solve the SPM task (Chudova and Smyth, 2002;

Laxman et al., 2005; Jacquemont et al., 2009). How-

ever, these generative solutions are not able to model

itemset sequences and depend on restrictive assump-

tions regarding the size, shape and noise of patterns.

This paper answers the question: to what extent can HMMs address these challenges? To answer it, we first propose solutions based on alternative Markov-based architectures. Second, we evaluate their performance by assessing the efficiency and the output

matching against deterministic outputs for synthetic

data and real databases. To the best of our knowl-

edge, this is the first systematized work on how to use

HMMs over multivariate symbolic sequences.

This paper is structured as follows. In Section 2,

generative SPM is formalized and motivated. Section

3 describes the proposed solutions. Results are pro-

vided in Section 4 and their implications synthesized.

2 BACKGROUND

Recent research shows that real-world databases can be expressively mined by mapping them as sets of itemset sequences (Henriques et al., 2013). Here, these mappings are seen as a pre-processing step of the target methods. Sequential pattern mining (SPM), originally proposed by (Agrawal and Srikant, 1995), is still a default option to explore itemset sequences.

Henriques R. and Antunes C.

Generative Modeling of Itemset Sequences Derived from Real Databases.

DOI: 10.5220/0004898302640272

In Proceedings of the 16th International Conference on Enterprise Information Systems (ICEIS-2014), pages 264-272

ISBN: 978-989-758-027-7

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

Let an item be an element from an ordered set Σ. An itemset I is a set of non-repeated items. A sequence s is an ordered set of itemsets. A sequence $a = a_1 \ldots a_n$ is a subsequence of $b = b_1 \ldots b_m$ ($a \subseteq b$) if $\exists\, 1 \le i_1 < \ldots < i_n \le m : a_1 \subseteq b_{i_1}, \ldots, a_n \subseteq b_{i_n}$. A sequence is maximal, with respect to a set of sequences, if it is not contained in any other sequence of the set. The illustrative sequence $s_1$={a}{be}=a(be) is contained in $s_2$=(ad)c(bce) and is maximal w.r.t. S={ae, (ab)e}.

Definition 1. Given a set of sequences S and some

user-specified minimum support threshold θ, a se-

quence s ∈ S is frequent if contained in at least θ se-

quences. The sequential pattern mining task aims

for discovering the set of maximal frequent sequences

(sequential patterns) in S.

Considering a database S={(bc)a(abc)d, a(ac)c,

cad(acd)} and a support threshold θ=3, the set

of maximal sequential patterns for S under θ is

{a(ac), cc}. Traditional SPM approaches rely on pre-

fixes and suffixes, subsequences with specific mean-

ings, and on the (anti-)monotonicity property to de-

liver complete and deterministic outputs. However,

these outputs are commonly highly voluminous and

the frequency is a deterministic function (cannot flex-

ibly consider underlying noise distributions).
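The containment relation and the support count from Definition 1 can be sketched as follows. This is only an illustration: the `parse` helper, the single-character items and the function names are assumptions introduced here, not part of the original method.

```python
from typing import FrozenSet, List

ItemsetSequence = List[FrozenSet[str]]


def parse(text: str) -> ItemsetSequence:
    """Parse the compact notation, e.g. 'a(be)' -> [{a}, {b, e}].

    Assumes single-character items and no whitespace (illustrative only).
    """
    sequence, i = [], 0
    while i < len(text):
        if text[i] == "(":
            j = text.index(")", i)
            sequence.append(frozenset(text[i + 1:j]))
            i = j + 1
        else:
            sequence.append(frozenset(text[i]))
            i += 1
    return sequence


def is_subsequence(a: ItemsetSequence, b: ItemsetSequence) -> bool:
    """True if the itemsets of a map, in order, into supersets within b."""
    pos = 0
    for itemset in a:
        # Greedy leftmost matching is sufficient to decide containment.
        while pos < len(b) and not itemset <= b[pos]:
            pos += 1
        if pos == len(b):
            return False
        pos += 1
    return True


def support(pattern: ItemsetSequence, database: List[ItemsetSequence]) -> int:
    """Number of database sequences that contain the pattern."""
    return sum(is_subsequence(pattern, s) for s in database)


database = [parse(s) for s in ["(bc)a(abc)d", "a(ac)c", "cad(acd)"]]
print(support(parse("a(ac)"), database))  # supported by all three sequences
print(support(parse("cc"), database))     # supported by all three sequences
```

With the example database above, both a(ac) and cc reach the threshold θ=3, in agreement with the stated set of maximal patterns.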

Alternatives have been proposed, with a first class

focused on formal languages and on the construction

of acyclic graphs that define partial orders and constraints between items (Guralnik et al., 1998; Laxman et al., 2005). Probabilistic generative models such as neural networks, hidden Markov models (HMMs) and stochastic grammars hold the promise to deliver compact representations given by the underlying lattices (Ge and Smyth, 2000). The expressive power, simplicity and propensity towards sequential data of HMMs make them an attractive candidate.

Definition 2. Consider a discrete alphabet Σ. A first-order discrete HMM is a pair (T, E) that defines a stochastic finite automaton where a set of connected hidden states $X=\{x_1, \ldots, x_k\}$ is expressed by a probability transition matrix $T=(t_{ij})$, with observable emissions described by a probability emission matrix $E=(e_i(\sigma))=(e_{i\sigma})$, where $1 \le i \le k$, $1 \le j \le k$ and $\sigma \in \Sigma$.

Under a first-order Markov assumption, emissions depend on the current state only. Let the system be in state $x_i$: it has a probability $t_{ij}=P(x_j|x_i)$ of moving to state $x_j$ and probability $e_{i\sigma}=P(\sigma|x_i)$ of emitting item $\sigma$. (T, E) defines the HMM architecture.
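As a minimal sketch of how a pair (T, E) assigns a probability to a generated sequence, the snippet below multiplies transitions and emissions along a given state path. The two-state model, its probability values and the function name are hypothetical and serve only to illustrate the definition; the probability is conditioned on starting in the first state of the path.

```python
from typing import Dict, Sequence

# Hypothetical two-state model over the alphabet {a, b}; values are illustrative.
T: Dict[str, Dict[str, float]] = {
    "x1": {"x1": 0.7, "x2": 0.3},
    "x2": {"x1": 0.4, "x2": 0.6},
}
E: Dict[str, Dict[str, float]] = {
    "x1": {"a": 0.9, "b": 0.1},
    "x2": {"a": 0.2, "b": 0.8},
}


def path_probability(states: Sequence[str], symbols: Sequence[str]) -> float:
    """Joint probability of emitting `symbols` along `states`,
    given that the system starts in states[0]."""
    prob = E[states[0]][symbols[0]]
    for prev, cur, sym in zip(states, states[1:], symbols[1:]):
        # One step: move prev -> cur (t_ij), then emit sym from cur (e_j(sym)).
        prob *= T[prev][cur] * E[cur][sym]
    return prob


print(path_probability(["x1", "x1", "x2"], "aab"))
```

Paths with high values under such a product are the "preferred emissions and transitions" from which patterns are later decoded.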

Preferred emissions and transitions (paths with

higher generation probability) are usually associated

with regions that may have structural and functional

significance. For specific architectures, different pat-

terns such as periodicities or gap-based patterns can

be revealed by analyzing the learned (T, E) parame-

ters (Baldi and Brunak, 2001). Based on this observa-

tion, alternative Markov-based approaches have been

proposed for the mining of patterns using different:

i) task formulations, ii) assumptions, and iii) learning

settings (Chudova and Smyth, 2002; Ge and Smyth,

2000; Laxman et al., 2005; Murphy, 2002).

The commonly targeted tasks include the discovery of generative strings¹ as consensus patterns and

profiles (across a set of sequences) or motifs (within

one sequence). These tasks have been mainly applied

to univariate sequences (Chudova and Smyth, 2002;

Ge and Smyth, 2000; Fujiwara et al., 1994; Mur-

phy, 2002), with some exceptions allowing numeric

sequences with a fixed multivariate order (Bishop,

2006) and graph structures (Xiang et al., 2010). Ad-

ditionally, the majority is centered on the discovery

of contiguous items, not accounting for items’ prece-

dences of arbitrary distance.

Previous work by (Laxman et al., 2005; Jacquemont et al., 2009; Cao et al., 2010) provides important principles for the decoding of sequential patterns, but all fail to model co-occurrences.

What makes the problem difficult is that little is known a priori about what these patterns may look like. Typically, the number and arrangement of precedences and co-occurrences can significantly vary

across patterns. State-of-the-art approaches (Chudova

and Smyth, 2002; Murphy, 2002) place assumptions

regarding the type, length and number of patterns, and

commonly assume that patterns do not overlap. These

restricted formulations require background knowledge that may not be available. Even so, traditional

learning settings of HMMs may still present signifi-

cant additional challenges to pattern-based tasks. One

of them is the convergence of emission probabilities.

The spurious background matches in long sequences

can lead to false detections, making pattern discov-

ery difficult. The Viterbi algorithm alleviates this

problem (Bishop, 2006) but does not guarantee the

convergence of emission probabilities. In literature,

three learning settings have been proposed. (Murphy,

2002) requires emission distributions to be (nearly)

deterministic, i.e., each state should only emit a single

symbol, although this symbol is not specified. This

is achieved using the minimum entropy prior (Brand,

1

Given an alphabet Σ, a generative string is a distribu-

tion over Σ allowing substitutions with noise probability ε

GenerativeModelingofItemsetSequencesDerivedfromRealDatabases

265

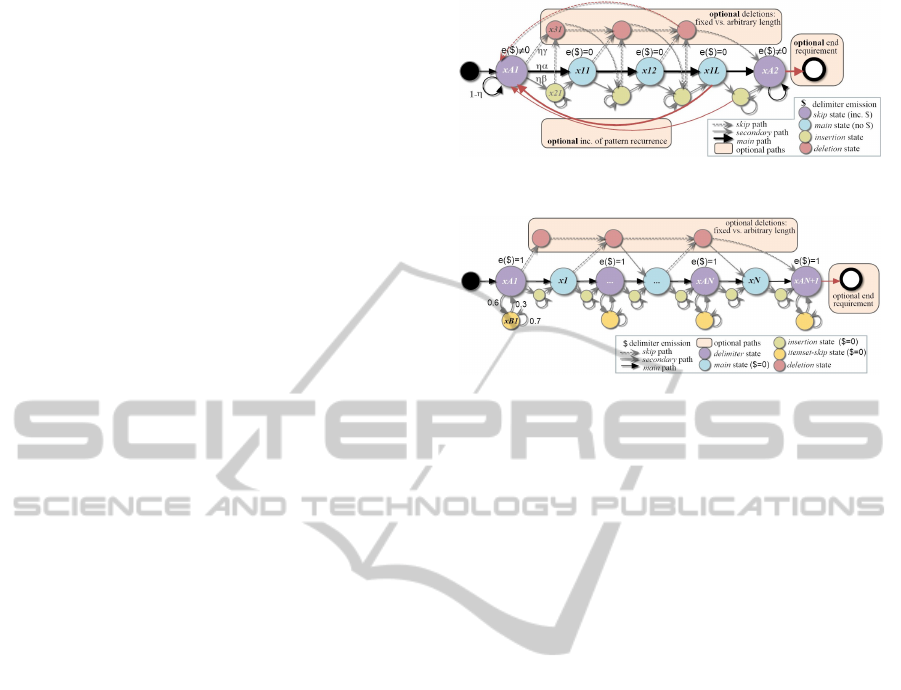

Figure 1: Pattern mining with fully inter-connected arch.

1999)

2

. An alternative is to use a mixture of Dirichlets

(Brown et al., 1993). Finally, Chudova (Chudova and

Smyth, 2002) introduces a Bayes error framework.

3 TECHNICAL SOLUTIONS

In this section we propose solutions to mine sequential patterns using HMMs without relying on restrictive assumptions about the patterns. We overview existing architectures,

propose a simple data mapping for their application

over itemset sequences, define initialization, decod-

ing and learning principles, and, finally, incrementally

propose more expressive architectures.

3.1 Existing HMM Architectures

The paradigmatic case of pattern mining over uni-

variate sequences is to use fully inter-connected ar-

chitectures (Baldi and Brunak, 2001), illustrated in

Fig.1, plus an efficient method (based on propagation

on graphs or dynamic programming) to exploit the

model. Such a method can retrieve patterns of arbitrary length by exploiting the most probable transitions and emissions. The criterion for whether a pattern is frequent may depend either on the ranking position of the pattern or on its overall generation probability.

Although the fully inter-connected architecture can always be used as a default option, alternative HMM architectures with sparser connectivity exist to minimize the previously introduced problem of emission convergence. A basic architecture

adopted for motif discovery in univariate sequences is

one that explicitly models the pattern shape and uses

a distribution that either guarantees the convergence

of emission probabilities, such as in (Murphy, 2002),

or allows for a fixed error threshold, such as in (Chudova and Smyth, 2002). An important assumption for these architectures, illustrated in Fig.2, is whether the target pattern emerges from the supporting sequences and/or from the recurrence of the pattern within each sequence. For this case, a new state and transitions $\{t_{45}, t_{55}\}$ need to be included, and the $x_4 \to x_1$ transition deleted ($t_{41}=0$). Additionally, transition probabilities, T, need to be carefully initialized. For instance, the self-loop transitions can encode the expected length of the inter-pattern segments through a geometric distribution.

² Assuming $e_j$ to be multinomial emissions for a state $x_j$, entropy is given by: $H(e_j) = -\sum_{\sigma} e_{j\sigma} \log(e_{j\sigma})$

Figure 2: Motif discovery with shape-specific architecture.

Figure 3: LRA: precedences of arbitrary length.
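The geometric encoding of inter-pattern segment lengths mentioned above can be illustrated with a short sketch. It relies on the standard property that a state with self-loop probability p is occupied for 1/(1-p) steps on average; the function names are assumptions introduced here, and the paper does not state the exact formula it uses.

```python
def self_loop_for_expected_length(expected_length: float) -> float:
    """Self-loop probability p such that the expected number of
    consecutive steps spent in the state equals expected_length."""
    if expected_length < 1:
        raise ValueError("a visited state is occupied for at least one step")
    return 1.0 - 1.0 / expected_length


def expected_length_of(self_loop: float) -> float:
    """Mean of the geometric duration implied by a self-loop probability."""
    if not 0.0 <= self_loop < 1.0:
        raise ValueError("self-loop probability must lie in [0, 1)")
    return 1.0 / (1.0 - self_loop)


# Example: an expected gap of 9 inter-pattern items gives p = 8/9.
p = self_loop_for_expected_length(9)
print(p, expected_length_of(p))
```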

However, this architecture does not support pat-

terns with non-contiguous items. For the discovery

of more relaxed patterns, Left-to-Right Architectures

(LRAs) (Baldi and Brunak, 2001; Liu et al., 1995)

are commonly adopted in biology and speech recog-

nition. LRAs consider both insertions (to allow spar-

sity) between pattern items and deletions (skip states)

characterized by void emissions. Deletions can be used either to skip noisy occurrences or to discover patterns with reduced length. With LRAs, a large number of precedences can be retrieved through the analysis of emissions along the main path. These emissions can be thought of as the set of symbols for aligning sequences. Fig.3 illustrates this architecture. Uniform

initialization of transition probabilities without a prior

that favors transitions toward the main states should

be avoided in order to guarantee that main states are

selected (instead of only insert-delete combinations).

3.2 Proposed Solutions

To be able to process itemset sequences under a

Markov assumption, we propose a simple mapping of

each sequence of itemsets that introduces a special symbol for delimiters, Σ ∪ {$}. To illustrate, the sequence of itemsets (ab)ca(ac) is mapped into the univariate

sequence $ab$c$a$ac$, where $ is the symbol that

delimits co-occurrences.
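A minimal sketch of this mapping is given below. The function name `encode` and the choice to emit items of an itemset in sorted order are assumptions made for the illustration (the text only states that Σ is ordered).

```python
from typing import Iterable, List, Set


def encode(sequence: Iterable[Set[str]], delimiter: str = "$") -> List[str]:
    """Flatten an itemset sequence into a univariate symbol sequence,
    placing the delimiter before, between and after itemsets."""
    symbols = [delimiter]
    for itemset in sequence:
        symbols.extend(sorted(itemset))  # items follow the order of the alphabet
        symbols.append(delimiter)
    return symbols


# (ab)ca(ac) from the text
sequence = [{"a", "b"}, {"c"}, {"a"}, {"a", "c"}]
print("".join(encode(sequence)))
```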

Under this mapping, we can apply existing HMM

architectures prepared to deal with univariate se-

quences. The retrieval of patterns from the underlying

lattices results in combined sequences of both regular

items and delimiters. Empty itemsets (consecutive delimiters) are removed from the decoded patterns.
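The inverse step, turning a decoded symbol sequence back into an itemset sequence while dropping empty itemsets, could look like the following sketch. The function name `decode_symbols` is hypothetical.

```python
from typing import FrozenSet, Iterable, List


def decode_symbols(symbols: Iterable[str], delimiter: str = "$") -> List[FrozenSet[str]]:
    """Group items between delimiters into itemsets and skip empty
    itemsets produced by consecutive delimiters."""
    itemsets: List[FrozenSet[str]] = []
    current = set()
    for symbol in symbols:
        if symbol == delimiter:
            if current:  # consecutive delimiters yield nothing
                itemsets.append(frozenset(current))
            current = set()
        else:
            current.add(symbol)
    if current:  # tolerate a missing trailing delimiter
        itemsets.append(frozenset(current))
    return itemsets


print(decode_symbols("$ab$$c$"))
```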


3.2.1 Structural Principles

Initialization Principles. To define the initialization

of transition probabilities, T, we propose the use of

metrics based on simple statistics over the dataset S

and on pattern expectations. Fig.2 shows the initializations for a motif-oriented architecture where the average length of sequences is 20 items, and where the probability of visiting an item belonging to a pattern is α=33.(3)% based on the expected average

pattern size (3 items) and recurrence (9 inter-pattern

items). When alternative paths are available (as in

fully-interconnected architectures), a random weight

(ε>0) can be added to each transition in order to fa-

cilitate the learning convergence.

Finally, the emission probabilities, E, should be equal for all items so as not to bias the learning. The emission probabilities of delimiters should: i) slightly increase for fully-interconnected architectures in order to guarantee the distinction between precedences and co-occurrences, and ii) slightly decrease for LRAs to prevent all of the main path emissions from converging to the delimiter symbol.

Learning Settings. The distribution underlying the

learning of emissions probabilities must guarantee

strong convergence of emissions for the accurate de-

coding of patterns, but simultaneously avoid a too

strong convergence that limits the coverage of interesting patterns. The use of entropy or Dirichlet distributions offers too strong a convergence, which restricts the

decoding of multiple patterns along the model paths,

while the use of the Bayes error rate (Chudova and

Smyth, 2002) was observed to be difficult for pat-

terns with significant autocorrelation and with a peri-

odic structure (as bcbcbc or aaaa). The use of Viterbi

(Bishop, 2006) is a good compromise since it guaran-

tees the learning convergence without requiring a too

restrictive number of eligible emissions per state.

Decoding Principles. Beyond the definition, initial-

ization and learning of the generative model, there is

the need to define principles for a robust and efficient

decoding of patterns from lattices. First, the anti-monotonic property is used to prune paths. Second, probability thresholds are used to express the criterion that defines whether a pattern is frequent. This threshold should be weighted by the length of the pattern to avoid a bias towards small patterns.
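One possible way to weight the threshold by pattern length is to compare the geometric mean of the per-step probabilities against the cut-off, so that long patterns are not penalized merely for having more factors. This is an assumed normalization chosen for illustration; the text does not specify which weighting is used, and the function names are hypothetical.

```python
import math
from typing import Sequence


def length_normalized_score(step_probabilities: Sequence[float]) -> float:
    """Geometric mean of the probabilities along a decoded path."""
    if not step_probabilities:
        raise ValueError("empty pattern")
    if any(p <= 0.0 for p in step_probabilities):
        return 0.0
    log_sum = sum(math.log(p) for p in step_probabilities)
    return math.exp(log_sum / len(step_probabilities))


def is_frequent(step_probabilities: Sequence[float], threshold: float) -> bool:
    """Length-weighted frequency criterion (assumed form)."""
    return length_normalized_score(step_probabilities) >= threshold


# The raw product 0.8**5 is about 0.33 and would fail a 0.5 cut-off,
# whereas the length-normalized score stays at 0.8.
print(is_frequent([0.8] * 5, 0.5))
```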

3.2.2 Extending the Existing Architectures

Although the existing architectures can be applied as-is using the proposed data mapping and parameter initializations, they are prone to decoding errors. Note the case where a state emits a reduced number of items with high probability, but one of these items is the itemset delimiter. For this common case, the distinction between precedences and co-occurrences becomes blurry, in particular if the state has a self-loop transition (as in fully-interconnected architectures). To improve accuracy, we propose new architectures with dedicated states to emit delimiters.

Figure 4: CoIA: discovery of co-occurring items.

Figure 5: IPA: discovery of inter-transactional patterns.

Before introducing them, consider an extension of

LRAs where main and insert states only emit regular

items, delimited by two states that can only emit the

delimiter item with transitions to self-looping states.

This architecture, referred to as CoIA (Co-occurring Items Architecture) and illustrated in Fig.4, captures

intra-transactional patterns by seeing each itemset as

a univariate sequence. A transition to the initial state

can be used to consider the pattern recurrence within

a sequence.

Two variations can be considered over this archi-

tecture. First, an end state can be linked to the ar-

chitecture. This guarantees that, at least, one intra-

transactional pattern per sequence is used to learn the

left-to-right emissions. The end state can be imple-

mented by adding an ending symbol at the end of

each input sequence. Second, deletion states can be

removed. This fixes the length of the intra-transactional patterns, which simplifies the learning, although it

restricts the original potential for decoding patterns of

arbitrary length along the main path.

Now consider the proposed Itemset-Precedences

Architecture (IPA) illustrated in Fig.5. With this ar-

chitecture we can model inter-transactional patterns

by decoding learned emissions along the main path.

Two aspects of IPA should be noticed. First, insert

states are used to remove non-frequent items. Second,

each state dedicated to emit delimiters has a transi-

tion to a self-looping state in order to allow for gaps

between itemsets. In this way, we move from contiguous items to item precedences.

Figure 6: SPA: discovery of sequential patterns.

Beyond not capturing intra-transactional patterns,

IPA suffers from another drawback. Since there is no

guarantee that most of the input sequences will reach

state x

p

, the significance of precedences decoded from

the first portions of the path is greater than from the

last portions. This makes the pattern decoding algorithms more complex, as they need to reduce the tolerance (cut-off thresholds) along the path in order to

avoid the output of patterns prone to errors.

Similarly to the CoIA architecture, we can con-

sider a variation of IPA that includes deletion states,

which allows for a well-distributed level of signifi-

cance for the learned probabilities across regions.

Finally, for the learning of generative models that

combine precedences and co-occurrences, we propose the integration of the previous CoIA and IPA architectures. A CoIA architecture is applied between each consecutive pair of delimiter states from the IPA architecture. A simplification of the resulting architecture, the sequential patterns architecture (SPA), is illustrated in Fig.6. SPA is suited to deliver shapes such as the one described by the (ab)(bcd)a pattern.

The number of discovered precedences can be ar-

bitrary by introducing deletion states across delim-

iter states. The size of the intra-transactional pat-

terns can be also arbitrary (unless the user is inter-

ested in specific pattern shapes) by considering dele-

tion states within each CoIA component. Finally,

the end requirement or the recurrence within each se-

quence (loop to initial state) are possible variations.

Beyond reducing the probability of decoding pat-

terns that are not frequent, SPA has a very efficient

decoding step. There is only the need to analyze com-

binations of emissions along the main path.

3.2.3 Convergence of Emissions

A critical drawback of previous SPA, IPA and CoIA

architectures is that they cannot model a large number

of patterns. These architectures rely on one main path

only, where each state commonly emits a reduced

number of items with significant probability, which

commonly results in a compact set of patterns. This makes the method uncompetitive with deterministic peers and, therefore, of limited utility. Although

one can reduce the threshold probabilities of the de-

coding phase or decrease the convergence threshold

of the adopted HMM learning algorithm (to relax the

convergence of emissions), this significantly degrades

the quality of the output patterns.

A simple solution to avoid this problem is to adopt

an iterative scheme, where each iteration is composed

of three phases – learning, decoding, and masking of

patterns – until patterns cannot be further decoded.
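The iterative scheme can be outlined as a simple loop. In the sketch below, `learn`, `decode` and `mask` are placeholders supplied by the caller; the toy stand-ins (symbol counting instead of HMM training) are purely hypothetical and only make the control flow runnable.

```python
from collections import Counter
from typing import Callable, List


def iterative_mining(sequences, learn: Callable, decode: Callable,
                     mask: Callable, max_iterations: int = 50) -> List:
    """Repeat learning, decoding and masking until no new pattern is decoded."""
    patterns: List = []
    for _ in range(max_iterations):
        model = learn(sequences)
        new = [p for p in decode(model) if p not in patterns]
        if not new:
            break
        patterns.extend(new)
        sequences = mask(sequences, new)  # hide what was already explained
    return patterns


# Toy stand-ins (NOT an HMM): the "model" counts in how many sequences each
# symbol occurs, "decoding" returns the most supported symbol, and
# "masking" removes the decoded symbols from the data.
def toy_learn(sequences):
    return Counter(symbol for s in sequences for symbol in set(s))


def toy_decode(model, min_count: int = 2):
    if not model:
        return []
    symbol, count = max(sorted(model.items()), key=lambda kv: kv[1])
    return [symbol] if count >= min_count else []


def toy_mask(sequences, patterns):
    return [[x for x in s if x not in patterns] for s in sequences]


print(iterative_mining(["abc", "abd", "ae"], toy_learn, toy_decode, toy_mask))
```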

We propose an alternative solution that relies on

multiple paths, so the sum of the compact sets of pat-

terns from each path approximates the true number of

frequent patterns. The number of paths can be defined

by dividing the expected number of frequent patterns

by the average number of patterns that can be decoded from each SPA component.
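The path-count rule above amounts to a rounded-up division. A minimal sketch, with a hypothetical function name and illustrative numbers that are not taken from the experiments:

```python
import math


def number_of_paths(expected_frequent_patterns: int,
                    avg_patterns_per_path: float) -> int:
    """Paths needed so that the per-path pattern sets add up to
    (approximately) the expected number of frequent patterns."""
    if avg_patterns_per_path <= 0:
        raise ValueError("average patterns per path must be positive")
    return max(1, math.ceil(expected_frequent_patterns / avg_patterns_per_path))


# Illustrative values only: 400 expected patterns, 150 decodable per path.
print(number_of_paths(400, 150))
```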

4 RESULTS

The target hidden Markov models³ were adapted from

the HMM-WEKA extension prepared for classifica-

tion (implemented according to (Bishop, 2006; Mur-

phy, 2002) sources). The extensions were imple-

mented using Java (JVM version 1.6.0-24) and the

following experiments were computed using an Intel

Core i5 2.80GHz with 6GB of RAM.

We adopted synthesized datasets based on the IBM Generator tool⁴ by fixing values for all, except one, of the parameters, and by varying the value for the remaining parameter. The default dataset contains m=2,500 sequences, with an average of n=10 transactions each, each transaction with l=4 items on average. The alphabet has 1,000 items. The average length of maximal patterns is set to 4 and that of maximal frequent transactions to 2. The values for different sequential patterns and transactional patterns were set to 1,000 and 2,000, respectively. This default setting generates nearly 10,000 sequential patterns for a support of 1% (with the majority of them having more than 5 items), and more than 400 sequential patterns for a support of 4%. The varied parameters include the number of items per itemset, the number of itemsets per sequence, and the number of available items (density). This combinatorial set of datasets was tested for the architectures introduced in the previous section, whose properties are listed in Table 1.

In order to validate if the proposed solutions have

an acceptable performance, it is critical to assess ef-

ficiency of the learning-and-decoding stages against

3

Software: http://web.ist.utl.pt/rmch/software/hmmevoc

4

http://www.cs.loyola.edu/∼cgiannel/assoc gen.html

ICEIS2014-16thInternationalConferenceonEnterpriseInformationSystems

268

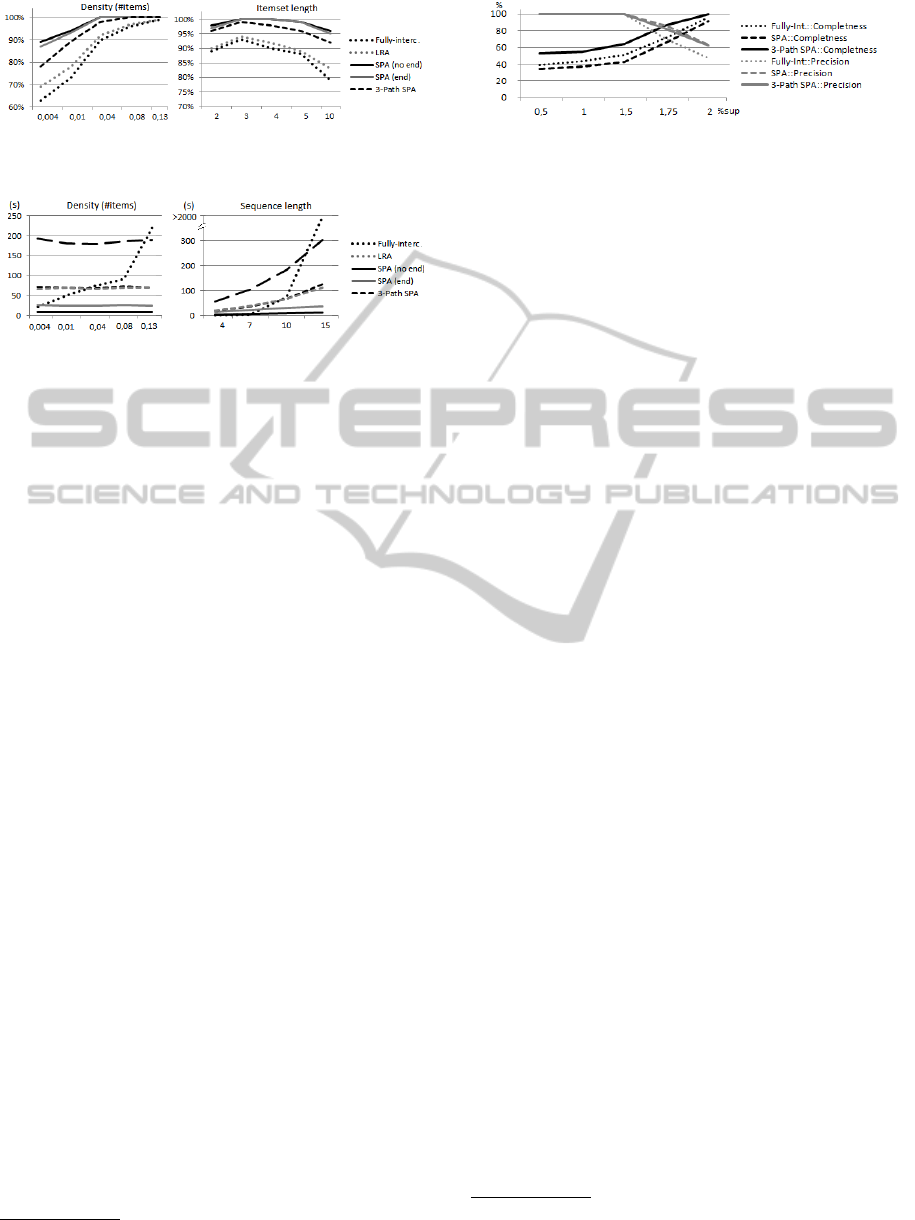

Figure 7: Completeness and precision of alternative archi-

tectures for varying minimum support.

traditional SPM approaches and their ability to extract

all frequent patterns (completeness) and only those

ones (precision) (Jacquemont et al., 2009). These

metrics provide an understanding on whether is it

possible to decode patterns from compact generative

models that match the output of deterministic ap-

proaches. Completeness is the fraction of frequent se-

quential patterns that were decoded by our approach.

Correctness or precision is the fraction of decoded se-

quential patterns that are also retrieved by determin-

istic miners.

$$\text{Completeness} = \frac{|\text{GenerativeOutput} \cap \text{DeterministicOutput}|}{|\text{DeterministicOutput}|}$$

$$\text{Precision} = \frac{|\text{GenerativeOutput} \cap \text{DeterministicOutput}|}{|\text{GenerativeOutput}|}$$
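Both metrics reduce to set operations over the two pattern collections. The sketch below represents patterns as strings for simplicity; the function names and the example sets are assumptions made for illustration.

```python
from typing import Set


def completeness(generative: Set[str], deterministic: Set[str]) -> float:
    """Fraction of deterministic (frequent) patterns that were decoded."""
    if not deterministic:
        raise ValueError("deterministic output is empty")
    return len(generative & deterministic) / len(deterministic)


def precision(generative: Set[str], deterministic: Set[str]) -> float:
    """Fraction of decoded patterns that are deterministically frequent."""
    if not generative:
        raise ValueError("generative output is empty")
    return len(generative & deterministic) / len(generative)


# Hypothetical outputs, not taken from the experiments.
generative_output = {"a(ac)", "cc", "ad"}
deterministic_output = {"a(ac)", "cc", "(ab)c", "ca"}
print(completeness(generative_output, deterministic_output))
print(precision(generative_output, deterministic_output))
```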

The provided results for these metrics are an average over 10 datasets per parameterization. Additionally, we statistically tested the significance of the observed differences by using a paired two-sample two-tailed Student's t-test with 9 degrees of freedom.
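For reference, the paired t statistic over 10 matched measurements (hence 9 degrees of freedom) can be computed with the standard library alone, as sketched below. The function name and the input values are hypothetical; the resulting |t| would be compared against the two-tailed critical value for 9 degrees of freedom (about 2.262 at the 5% level).

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def paired_t_statistic(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int]:
    """Return (t, degrees_of_freedom) for a paired t-test on x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    # stdev uses the sample (n - 1) denominator, as the t-test requires.
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical per-dataset scores of two architectures over 10 datasets.
scores_a = [2, 4, 6, 8, 10, 12, 14, 16, 18, 20]
scores_b = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
t, df = paired_t_statistic(scores_a, scores_b)
print(t, df)
```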

4.1 Completeness-Precision

An initial view on how the generative outputs compare against deterministic outputs for varying levels of support is depicted in Fig.7. When assessing these results against the number of frequent patterns of the dataset, two major observations can be derived. First, our generative approach is able to cover all frequent itemsets with support above ∼5%, and the completeness level degrades for lower supports (2% support delivers >3000 frequent patterns), although the larger architectures can still cover a large number of frequent patterns (>1500). Second, precision levels are 100% above 1% of support, which means that our approach is able to only deliver patterns whose frequency is >1%. This is important since generative approaches allow for noisy occurrences. Multi-path SPA is significantly superior to the remaining options in terms of completeness, while all the proposed architectures were significantly better than traditional fully-interconnected and LRA architectures in terms of precision.

Table 1: Tested HMM architectures.

| Architecture | Properties |
|---|---|
| Fully Interc. | 10 states. Delimiter emissions allowed on every state. |
| LRA | Main path with 14 states (max number of items and delimiters in pattern using expectations). Delimiter emissions allowed on main and insertion states. |
| SPA (no end requirement) | Max N precedences with max L co-occurrences each (N=8 and L=4 are the default data expectations). |
| SPA (end requirement) | Max N precedences with max L co-occurrences each (N=8 and L=4 are the default data expectations). |
| Multi-path SPA | Three SPA (end requirement) paths. |

Figure 8: Completeness of alternative architectures against parameterizable datasets (θ=4%).

Completeness. Fig.8 illustrates the completeness of

the proposed architectures to capture patterns with

support above 4%. Note that an increase of support to 6% results in completeness levels of approximately 100% for all the architectures across datasets.

Two major observations result from the analy-

sis. First, multi-path and fully-interconnected archi-

tectures achieve a good completeness since they can

focus on different subsets of probable emissions along

the alternative architectural paths. Second, the lev-

els of completeness degrade for higher densities and

itemset length. This is a natural result of the explosion

of patterns discovered by deterministic approaches

under such hard settings. Note that increasing multi-path SPA to six paths holds completeness levels above 96% for all the adopted settings.

Precision. Fig.9 illustrates the precision of the proposed architectures, that is, the fraction of decoded patterns that are deterministically frequent (support higher than 1.5%). Note that a decrease of support to 0.8% results in precision levels of approximately 100% for all the architectures across datasets. Generative approaches hold high levels of precision (>90%) across the majority of dataset settings. There is a slight decrease of precision for short itemsets, since the number of deterministic patterns is smaller than the average number of decoded patterns, and for large itemsets, due to a cumulative decoding error associated with larger patterns and the intra-transactional size constraints adopted for SPA architectures. Additionally, the observed decrease in precision for high levels of sparsity is not only explained by a reduced set of deterministic patterns (potentially smaller than the decoded set) but also by an intrinsic difficulty to guarantee the convergence towards a reduced set of emissions that can block larger decoded outputs. Finally, fully-interconnected and LRA architectures are not as competitive as SPA-based architectures due to the additional error propagation associated with not constraining delimiter emissions to dedicated states.

Figure 9: Precision of alternative architectures against parameterizable datasets (θ=1.5%).

Figure 10: Efficiency against parameterizable datasets.

4.2 Efficiency

The comparison of efficiency for the alternative architectures against PrefixSPAN⁵ (Pei et al., 2001), one of the most efficient deterministic SPM algorithms, applied with a support threshold of 1% is illustrated in Fig.10. Under this threshold, the number of deterministic frequent patterns varies from 1,000 patterns (sparser and smaller datasets) to nearly 100,000 patterns (denser and larger datasets).

Generative approaches are particularly suitable

over dense datasets against deterministic approaches,

whose performance rapidly deteriorates for densities

above 10%. Datasets with densities beyond 20%

are very common across a large number of domains.

Interestingly, the performance of the generative ap-

proaches does not significantly change with varying

densities. This is explained by a double-effect: learn-

ing convergence deteriorates with an increased den-

sity, but this additional complexity is compensated by

a higher efficiency per iteration since there is a sig-

nificantly lower number of emission probabilities to

learn per state.

Additionally, generative approaches scale better

with an increased number of itemsets per sequence

than PrefixSPAN. Under the default settings, PrefixS-

PAN is only efficient for sequences with less than 15

itemsets. Understandably, fully-interconnected and LRA are the most efficient solutions due to their inherent structural simplicity.

⁵ http://www.philippe-fournier-viger.com/spmf/

Figure 11: Completeness-precision for Foodmart dataset.

4.3 Real-world Databases

Our approach was applied in sparse and dense real-

world databases. Fig.11 illustrates the performance

of our approach over itemset sequences derived from

the Foodmart data-warehouse

6

pentaho/mondrian/mysql-foodmart-database.

Each itemset sequences is composed of temporally

ordered basket sales from a specific customer be-

tween 1997-98 (average of 6 items per basket and 6

baskets). Two important observations result from this

analysis. First, the best Markov-based architectures

are able to achieve 100% precision levels while still

being able to recover more than half of the frequent

patterns for very low levels of support (θ=0.5%).

Second, for medium levels of support (2%), our

generative approach is able to cover all the frequent

patterns, although it additionally delivers patterns

with a lower support (1.5-2%) that can penalize the

precision.

We also applied our approach to the dense Plan dataset⁷, which is not tractable for deterministic SPM approaches even when considering a constrained number of instances (<1000). In line with previous efficiency observations, our generative alternatives were able to learn emission and transition probabilities in reasonable time. In fact, our approach is

critical for similar dense cases as it is able to decode

patterns based on the most accentuated probability

differences across the learned lattices.

4.4 Discussion

Generative SPM approaches provide more scalable

principles than deterministic peers to deal with dense

datasets and with very long sequences. Even when considering complex architectures, generative approaches tend to perform better in terms of efficiency for datasets with these properties. Additionally, the analyzed precision-completeness levels are over 90% for the most expressive architectures across settings, which guarantees the deterministic significance of the decoded patterns. These are particularly attractive

levels knowing that the probabilistic learning of patterns accounts for noisy occurrences that can lead to a substantially different (but similarly interesting) output. The observed performance shows that compact representations of large outputs are possible for SPM over itemset sequences using generative models.

6 https://sites.google.com/a/dlpage.phi-integration.com/pentaho/mondrian/mysql-foodmart-database

7 http://www.cs.rpi.edu/~zaki/software/plandata.gz

ICEIS 2014 - 16th International Conference on Enterprise Information Systems

Additionally, these models present three intrinsic

properties of interest. First, they provide a probabilistic view of the sequential patterns' support that accounts for occurrences under noise distributions. Second, the probability of generating any sequential pattern can be assessed on a per-query basis. Note that deterministic approaches only disclose support for the frequent patterns. Third, background knowledge and constraints, such as the selection of specific pattern shapes in accordance with domain expectations, can be easily incorporated in the mining process by parameterizing the target architecture.
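The second and third properties can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the emission model or decoding procedure used in this work: each hidden state is assumed to emit items independently with a per-item probability, the query probability is taken as the likelihood of generating exactly the queried itemset sequence (computed with the standard forward recursion), and a left-to-right constraint is imposed by fixing backward transitions to zero. All names and numbers are hypothetical.

```python
from typing import Dict, FrozenSet, List, Sequence

Itemset = FrozenSet[str]


def emission_prob(item_probs: Dict[str, float], itemset: Itemset) -> float:
    """P(itemset | state), assuming independent per-item emissions (an assumption)."""
    p = 1.0
    for item, q in item_probs.items():
        p *= q if item in itemset else (1.0 - q)
    return p


def left_to_right(n_states: int, stay: float) -> List[List[float]]:
    """Transition matrix that only allows staying in a state or moving to the next one."""
    trans = [[0.0] * n_states for _ in range(n_states)]
    for i in range(n_states):
        if i + 1 < n_states:
            trans[i][i] = stay
            trans[i][i + 1] = 1.0 - stay
        else:
            trans[i][i] = 1.0  # the last state is absorbing
    return trans


def sequence_likelihood(
    start: List[float],
    trans: List[List[float]],
    emissions: List[Dict[str, float]],
    query: Sequence[Itemset],
) -> float:
    """Forward algorithm: probability that the model generates exactly `query`."""
    n = len(start)
    alpha = [start[s] * emission_prob(emissions[s], query[0]) for s in range(n)]
    for itemset in query[1:]:
        alpha = [
            sum(alpha[r] * trans[r][s] for r in range(n)) * emission_prob(emissions[s], itemset)
            for s in range(n)
        ]
    return sum(alpha)


# Hypothetical two-state model: state 0 favours item "a", state 1 favours item "b".
emissions = [{"a": 0.9, "b": 0.1}, {"a": 0.1, "b": 0.9}]
start = [1.0, 0.0]
trans = left_to_right(2, stay=0.2)

p_ab = sequence_likelihood(start, trans, emissions, [frozenset("a"), frozenset("b")])
p_ba = sequence_likelihood(start, trans, emissions, [frozenset("b"), frozenset("a")])
print(p_ab > p_ba)  # True: the constrained architecture favours <{a} {b}>
```

Any query, frequent or not, receives a probability in this setting, and changing the zero pattern of the transition matrix is enough to express a different shape constraint.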

5 CONCLUSION

This article proposes a methodology for the defini-

tion of generative models under a Markov assump-

tion to explore itemset sequences, an increasingly

adopted data format to capture temporal and cross-

attribute dependencies in real-world data. This allows

a compact and probabilistic view of sequential pat-

terns that tackles the problems of existing generative

approaches, which can only deal with constrained for-

mulations of the task. The methodology covers multi-

ple architectures and learning settings that guarantee

the relevance of the decoded patterns.

We show that the efficiency of the proposed SPM

generative approaches is competitive with traditional

SPM deterministic approaches on synthetic and real

data. Additionally, the proposed approaches hold

good levels of output-matching across a wide variety

of synthetic datasets. This is particularly attractive since generative approaches offer a probabilistic view of patterns in which the notion of pattern relevance differs from traditional support counting, as it allows for noise distributions underlying pattern occurrences. This opens a new door for the generative formulation of SPM. This formulation holds the potential to deliver: compact representations

of commonly large outputs; a probabilistic view of

patterns (allowing for noise distributions, an alterna-

tive view of the traditional support); pruned searches

under user-driven constraints; and a basis for query-

driven decoding of patterns of interest.

Relevant future directions include: the assessment of changes in classifier performance when adopting pattern-sensitive generative models; the study of the potential to dynamically self-learn expressive architectures from data; and the analysis of the impact of these generative models for a broader range of real-world databases.

ACKNOWLEDGEMENTS

This work was supported by Fundação para a Ciência e Tecnologia under the project D2PM, PTDC/EIA-EIA/110074/2009, and the PhD grant SFRH/BD/75924/2011.

REFERENCES

Agrawal, R. and Srikant, R. (1995). Mining sequential pat-

terns. In ICDE, pages 3–14. IEEE CS.

Baldi, P. and Brunak, S. (2001). Bioinformatics: The Ma-

chine Learning Approach. Adaptive Comp. and Mach.

Learning. MIT Press, 2nd edition.

Bishop, C. (2006). Pattern Recognition and Machine

Learning. Info. Science and Stat. Springer.

Brand, M. (1999). Structure learning in conditional proba-

bility models via an entropic prior and parameter ex-

tinction. Neural Comput., 11(5):1155–1182.

Brown, M., Hughey, R., Krogh, A., Mian, I. S., Sjölander,

K., and Haussler, D. (1993). Using dirichlet mix-

ture priors to derive hidden markov models for pro-

tein families. In 1st IC on Int. Sys. for Molecular Bio.,

pages 47–55. AAAI Press.

Cao, L., Ou, Y., Yu, P. S., and Wei, G. (2010). Detecting

abnormal coupled sequences and sequence changes in

group-based manipulative trading behaviors. In ACM

SIGKDD, pages 85–94. ACM.

Chudova, D. and Smyth, P. (2002). Pattern discovery

in sequences under a markov assumption. In ACM

SIGKDD, pages 153–162. ACM.

Fujiwara, Y., Asogawa, M., and Konagaya, A. (1994).

Stochastic motif extraction using hidden markov

model. In ISMB, pages 121–129. AAAI.

Ge, X. and Smyth, P. (2000). Deformable markov model

templates for time-series pattern matching. In ACM

SIGKDD, pages 81–90. ACM.

Guralnik, V., Wijesekera, D., and Srivastava, J. (1998).

Pattern directed mining of sequence data. In ACM

SIGKDD, pages 51–57.

Henriques, R. and Antunes, C. (2014). Learning predictive

models from integrated healthcare data: Capturing

temporal and cross-attribute dependencies. In HICSS.

IEEE.

Henriques, R., Pina, S. M., and Antunes, C. (2013). Tem-

poral mining of integrated healthcare data: Methods,

revealings and implications. In SDM: 2nd IW on Data

Mining for Medicine and Healthcare. SIAM Pub.

Jacquemont, S., Jacquenet, F., and Sebban, M. (2009). Min-

ing probabilistic automata: a statistical view of se-

quential pattern mining. Mach. Learn., 75(1):91–127.


Laxman, S., Sastry, P., and Unnikrishnan, K. (2005).

Discovering frequent episodes and learning hidden

markov models: A formal connection. IEEE TKDE,

17:1505–1517.

Liu, J., Neuwald, A., and Lawrence, C. (1995). Bayesian

models for multiple local sequence alignment and

gibbs sampling strategies. American Stat. Ass.,

90(432):1156–1170.

Mannila, H. and Meek, C. (2000). Global partial orders

from sequential data. In ACM SIGKDD, pages 161–

168. ACM.

Murphy, K. (2002). Dynamic Bayesian Networks: Repre-

sentation, Inference and Learning. PhD thesis, UC

Berkeley, CS.

Pei, J., Han, J., Mortazavi-Asl, B., Pinto, H., Chen, Q.,

Dayal, U., and Hsu, M. (2001). Prefixspan: Min-

ing sequential patterns by prefix-projected growth. In

ICDE, pages 215–224. IEEE CS.

Xiang, R., Neville, J., and Rogati, M. (2010). Modeling

relationship strength in online social networks. In IC

on World wide web, WWW, pages 981–990. ACM.
