Descriptive Models of Emotion

Learning Useful Abstractions from Physiological Responses during Affective Interactions

Rui Henriques¹ and Ana Paiva²

¹ KDBIO, Inesc-ID, Instituto Superior Técnico, University of Lisbon, Lisbon, Portugal

² GAIPS, Inesc-ID, Instituto Superior Técnico, University of Lisbon, Lisbon, Portugal

Keywords:

Descriptive Models, Mining Physiological Signals, Measuring Affective Interactions.

Abstract:

Supervised recognition of emotions from physiological signals has been widely accomplished to measure

affective interactions. Less attention is, however, placed upon learning descriptive models to characterize

physiological responses. In this work we delve into why and how to learn discriminative, complete and usable

descriptive models based on physiological signals from emotion-evocative stimuli. By satisfying these three

properties, we guarantee that the target descriptors can be expressively adopted to understand the physiological

behavior underlying multiple emotions. In particular, we explain why classification and unsupervised learning

models do not address these properties, and point to new directions on how to adapt existing learners to meet them

based on theoretical and empirical evidence.

1 INTRODUCTION

Monitoring the physiological responses to multiple

emotion-evocative stimuli has been widely accom-

plished in order to understand and recognize emo-

tions. The use of physiological signals to measure,

describe and affect human-robot interactions is crit-

ical since they track subtle affective changes that are

hard to perceive, and are neither prone to social mask-

ing nor have the heightened context-sensitivity of im-

age, audio and survey-based analysis. A large stream

of literature has been dedicated either to study how

to learn classification models to recognize emotions

from labeled signals (Jerritta et al., 2011; Wagner

et al., 2005) or to convey emotion-dependent physio-

logical aspects from scientific experiments (Cacioppo

et al., 2007; Andreassi, 2007). However, there is a

clear research gap on how to learn descriptive mod-

els from labeled signals. In fact, learning descrip-

tive models is increasingly relevant to dynamically

derive informative and usable abstractions from ex-

periments, to monitor sensor-based data, and to take

the study of emotion-evocative stimuli up to a wider

range of affective states.

This paper makes two major contributions to the

field. First, we see why the existing models learned

from physiological responses are poorly descriptive.

Second, we go further on how to adapt them in or-

der to guarantee that they are flexible, discrimina-

tive, complete and usable. We focus our contributions

along three major sets of descriptors: feature-based

learners, generative sequential learners, and pattern-

centric learners. For each set, we discuss and present

critical strategies for the learning of robust descriptive

models from emotion-centered physiological data.

This paper is structured as follows. Section 2 de-

fines the major requirements that guarantee the utility

of the target descriptors, and covers major contribu-

tions and limitations from existing work. Section 3 re-

lies on theoretical and empirical evidence to propose

three major types of descriptive models that are able

to address the surveyed limitations of existing mod-

els. An integrative view on the complementarity of

the proposals is also discussed. Finally, the major im-

plications of our work are synthesized.

2 BACKGROUND

Physiological responses are increasingly measured to

derive accurate analysis from affective interactions.

Although there are numerous principles on how to

recognize affective states from (streaming) signals,

less attention is being paid to the task of characterizing affective states.
This task is referred to as emotion description from physiological responses.
Although we use the term emotion throughout this work, our contributions are
generalizable to non-affective responses to stimuli, such as subject responses
to non-empathic robot motion and speech.

Henriques R. and Paiva A.
Descriptive Models of Emotion - Learning Useful Abstractions from Physiological Responses during Affective Interactions.
DOI: 10.5220/0004902703930400
In Proceedings of the International Conference on Physiological Computing Systems (OASIS-2014), pages 393-400
ISBN: 978-989-758-006-2
Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

Illustrative applications of emotion description in-

clude: measuring human interaction with artificial

agents, assisting clinical research (emotion-centered

understanding of addiction, affect dysregulation, al-

coholism, anxiety, autism, attention deficit, depres-

sion, drug reaction, epilepsy, menopause, locked-in

syndrome, pain management, phobias and desensiti-

zation therapy, psychiatric counseling, schizophrenia,

sleep disorders, and sociopathy), studying the effect

of body posture and exercises in well-being, disclos-

ing responses to marketing and suggestive interfaces,

reducing conflict in schools and prisons through the

early detection of hampering behavior, fostering edu-

cation by relying on emotion-centered feedback to es-

calate behavior and increase motivation, development

of (pedagogic) games, and self-awareness enhance-

ment.

Def.1 Consider a set of annotated signals $D=(x_1,\ldots,x_m)$, where each
instance is a tuple $x_i=(\vec{y},a_1,\ldots,a_n,c)$, where $\vec{y}$ is a
(multivariate) signal, each $a_j$ is an annotation related to the subject or
experimental setting, and $c$ is the labeled emotion, stimulus, task or
environmental condition. Given $D$, the emotion description task aims to learn
a model $M$ that characterizes the discriminative properties of $\vec{y}$ for
each emotion $c$ in a complete and usable way.
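As a reading aid, the dataset of Def.1 can be sketched as a small data structure. The class and field names below (`AnnotatedSignal`, `signal`, `annotations`, `emotion`) are illustrative assumptions and do not come from the paper; the sketch only mirrors the tuple structure of each instance and groups instances by label, which is the usual starting point for learning one description per emotion.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence


@dataclass
class AnnotatedSignal:
    """One instance x_i = (y, a_1..a_n, c) of Def.1 (names are hypothetical)."""
    signal: List[Sequence[float]]                                  # y: one sequence per channel
    annotations: Dict[str, object] = field(default_factory=dict)  # a_1..a_n
    emotion: str = ""                                              # label c


def group_by_emotion(dataset):
    """Split D into per-emotion subsets of instances."""
    groups = {}
    for instance in dataset:
        groups.setdefault(instance.emotion, []).append(instance)
    return groups
```

A descriptive learner would then be applied to each group, while still having access to the other groups in order to stay discriminative.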

This definition implies that the learning of de-

scriptive models of emotions from labeled signals

should satisfy four major requirements:

• flexibility: descriptive models cope with the com-

plex and variable physiological expression of

emotions within and among individuals;

• discriminative power: descriptive models capture

and enhance the different physiological responses

among emotions. Discriminatory ability can be

seen at the level of a single emotion or at the level

of a group of emotions from the target set (e.g.

isolation of emotions with positive valence);

• completeness: descriptive models contain all of

the discriminative properties and, when the recon-

stitution of the signal behavior is relevant, of flex-

ible sequential abstractions;

• usability: descriptive models are compact and the

abstractions of physiological responses are easily

interpretable.

Multiple physiological modalities have been adopted

to monitor emotions, including electrodermal activity

to identify engagement and excitement states, respira-

tory volume and rate to recognize negative-valenced

emotions, and heart contractile activity to separate

positive-valenced emotions (Wu et al., 2011; Hen-

riques et al., 2012; Cacioppo et al., 2007). Other

modalities with studied emotion-driven behavior in-

clude multiple forms of brain activity, cardiovascular

activity and muscular activity (Cacioppo et al., 2007).

Descriptive models can either capture one or multi-

ple physiological modalities under Def.1 by compos-

ing multivariate signals from the (either univariate or

multivariate) physiological signals from each modal-

ity. When this is the case, we assume that proper dedi-

cated pre-processing techniques are applied over each

modality, such as smoothing, low-pass filtering and

neutralization of cyclic behavior for respiratory and

cardiac signals (Lessard, 2006).

Emotion description can be applied to experiments with different types of
stimuli (discrete vs. continuous, high-agreement vs. self-report) and
multiplicity of users (user-dependent vs. user-independent studies).
Recovering Def.1, subjective stimuli commonly resort to the optional $a_j$
annotations to infer $c$, and the $x_i$ instances can be obtained either for
one or for multiple subjects. Additionally, not only discrete models of a
high-agreement set of emotions can be targeted (Ekman and W., 1988), but also
more flexible models, such as recent work focused on recognizing states that
are a complex mix of emotions ("the state of finding annoying usability
problems") (Picard, 2003). Finally, the $a_j$ annotations can be combined to
capture dimensional valence-arousal axes (Lang, 1995), Weiner's attributions,
and Ellsworth's dimensions and agency (Oatley et al., 2006).

Emotion description as it is defined has been seen

as an optional byproduct of emotion recognition from

physiological signals. In particular, when measur-

ing affective interactions with humans, solid contribu-

tions on recognizing emotions have been provided for

interactions in social contexts (Wagner et al., 2005),

with robots (Kulic and Croft, 2007; Leite et al.,

2013), with computer interfaces (Picard et al., 2001),

and with multi-modal adaptive virtual scenarios (Rani

et al., 2006). Despite the large attention dedicated to

classification and unsupervised learning models, they

are not able to answer the previously introduced four

requirements as they were developed for a different

goal. Below we describe and enumerate the major

limitations associated with the two major groups of

classification models to perform emotion recognition

(feature-based and generative models) and with unsu-

pervised models.

PhyCS 2014 - International Conference on Physiological Computing Systems

Limitations of Feature-based Learning Models

Feature-based models are a function of features ex-

tracted from the observed signals for each class

(Povinelli et al., 2004; Nanopoulos et al., 2001). They

can either be deterministic or probabilistic and are

the typical choice for emotion recognition. Some of

the most common learning functions include: ran-

dom forests, k-nearest neighbors, Bayesian networks,

support vector machines, canonical correlation analy-

sis, neural networks, linear discriminant analysis, and

Marquardt-back propagation (Maaoui et al., 2008;

Jerritta et al., 2011; Mitsa, 2009). These extracted

features are statistical (mean, deviation), temporal

(rise and recovery time), or more complex (multi-

scale sample entropy, sub-band spectra) (Haag et al.,

2004). Methods for their extraction include rect-

angular tonic-phasic and moving-sliding windows;

transformations (Fourier, wavelet, Hilbert, singular-

spectrum); component analysis; projection pursuit;

auto-associative networks; multidimensional scaling;

and self-organizing maps (Haag et al., 2004; Lessard,

2006; Jerritta et al., 2011). The features that might

not have significant correlation with the emotion un-

der assessment are removed using sequential for-

ward/backward/floating selection, branch-and-bound

search, principal component analysis, Fisher projec-

tion, Davies-Bouldin index, and analysis of variance

methods (Jerritta et al., 2011; Bos, 2006). This im-

proves the simplicity and the discriminative power of

feature-based models.

Let us see why feature-based models, as-is, are not suited to emotion
description. First, features hardly capture flexible behavior (e.g. motifs
underlying complex rising and decaying responses) and are strongly dependent
on directive thresholds (e.g. peak amplitude to compute frequency-based
features). Additionally, the multiplicity of physiological expression per
emotion is hardly modeled since the majority of methods rely on prototype
features for each emotion. Second, although a wide range of complementary
features can be extracted to provide a complete description of each emotion,
they cannot be adopted to retrieve abstractions of the sequential behavior of
the signal. Third, discriminative power is highly dependent on the chosen
methods and is mainly considered at the level of individual emotions.
Discriminative power is achieved through: i) feature selection, ii) weighting
of features, iii) entropy-based functions, among others. Note, however, that
these strategies are only prepared to assess the ability to differentiate
individual emotions. If a particular feature is able to differentiate two
sets of emotions but does not isolate a single emotion, it is commonly
discarded. Finally, usability is highly dependent on the chosen classifiers.

Limitations of Generative Learning Models

Generally, generative sequential models learn lattices from (multivariate)
physiological signals. Although they are the common option for speech and
video recognition, they have only recently become more prominently adopted
for emotion recognition (Henriques et al., 2013; Kulic and Croft, 2007;
Henriques et al., 2012). Common sequential models include dynamic Bayesian
networks, such as hidden Markov models (HMMs) (Murphy, 2002), neural networks
(NNs), such as time-sensitive NNs (Guimarães, 2000) or time-delay NNs
(Berthold and Hand, 1999), (temporal) support vector machines (SVMs) (Burges,
1998), and logistic regressions. In particular, we use HMMs as the
illustrative model due to their maturity, expressive power, inherent
simplicity and flexible parameter-control (Rabiner and Juang, 2003). Lattices
are commonly defined by the underlying automaton (characterized by transition
and value emission probabilities) according to a specific architecture.

Similarly to feature-based models, these models have properties that
deteriorate their ability to be used as descriptive models. First, although
the use of large interconnected lattices can capture the multiplicity of
physiological expression due to the large number of paths, it becomes hard to
abstract such multiplicity of expressions per emotion from the analysis of
the lattices. This hampers their flexibility as a descriptive model. Second,
although the most probable sequences characterizing the physiological
responses can be retrieved from the lattices, specific behavior can be lost
during the learning process (e.g. frequency features), deteriorating the
completeness of the learned models. Third, one lattice is commonly learned
independently for each emotion (classification is then performed by
evaluating the generative probability of a new signal on each one of the
learned lattices) and, thus, the models do not accentuate discriminative
behavior. In fact, the differences among lattices can be very subtle as they
are typically observed for a small subset of transition and emission
probabilities. Finally, although generative models offer a compact view of
physiological responses per emotion, they tend to be highly complex and,
therefore, hardly usable. This is particularly problematic if there is no
clear convergence towards a specific subset of transitions and emissions.

Limitations of Unsupervised Learning Models

The properties of unsupervised learners, such as (bi)clustering models
(Madeira and Oliveira, 2004) and collections of temporal patterns (Mörchen,
2006; Han et al., 2007), deserve closer attention in the context of emotion
description from physiological responses. Although we refer to these models
as unsupervised, we assume that the target local regularities are learned in
the context of a specific emotion. For instance, biclusters can disclose
strong correlations between a specific subset of features that are only
observed for a subset of signals. Similarly, temporal patterns, such as
sequential patterns or motifs extracted directly from the physiological
signal, can be discovered and used to enrich the target descriptive models.

These local models are flexible, as they can isolate multiple responses per
emotion both within and across individuals. However, they do not meet the
remaining desirable properties of a descriptor. First, similarly to
generative models, unsupervised local models are typically not prepared to
discover discriminative patterns or biclusters. Second, mining local
regularities does not guarantee completeness since unsupervised methods are
not exhaustive, i.e., they easily fail to consider specific features or
sequential aspects of the signal of interest. Finally, although listing local
regularities promotes simplicity, it is necessary to guarantee that this set
is compact and navigable. In the absence of an organized structure for the
presentation of these regularities, these models are hardly usable.

3 DESCRIPTIVE MODELS

In the previous section, we explored the limitations of

relying on widely-adopted classification models and

on unsupervised models to perform emotion descrip-

tion from physiological data. Although these mod-

els show multiple properties of interest that can be

seized within descriptive models, they fail to satisfy

the introduced four requirements. In this section, we

propose a set of principles for each class of models

in order to guarantee their compliance with these re-

quirements.

3.1 Feature-based Classification Models

To guarantee that feature-based models are flexible, it is necessary to
choose a classification model that is able to group distinctive physiological
responses per emotion. This need is derived from the observation that a
single emotion-evocative stimulus can elicit small-to-large groups of
significantly different physiological responses. Lazy learners and random
forests are implicitly able to deal with this aspect. However, many of the
remaining classification models are not prepared to deal with such flexible
paths of expression per class. In order to adapt them, it is crucial to
understand how to refine these models. This can be done by analyzing the
variances of features per emotion or by clustering responses per emotion with
a non-fixed number of clusters, as proposed in (Henriques and Paiva, 2014).
In these cases, new labels associated with the major physiological responses
per emotion are considered during the learning process, so that the learned
models properly capture these ramifications of expression per emotion.
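The label-refinement step can be sketched as follows. This is a minimal illustration and not the procedure of (Henriques and Paiva, 2014): it assumes that each response is already summarized as a numeric feature vector, and it swaps in simple leader clustering (a new cluster is opened whenever no existing leader lies within a hypothetical `radius`), so that the number of clusters per emotion is not fixed in advance.

```python
import math


def leader_clusters(vectors, radius):
    """Assign each vector to the first leader within `radius`, else open a new cluster."""
    leaders, assignment = [], []
    for vector in vectors:
        for k, leader in enumerate(leaders):
            if math.dist(vector, leader) <= radius:
                assignment.append(k)
                break
        else:  # no leader was close enough
            leaders.append(vector)
            assignment.append(len(leaders) - 1)
    return assignment


def refine_labels(features, emotions, radius):
    """Return refined labels such as 'fear#0' and 'fear#1', one per instance."""
    refined = [None] * len(features)
    for emotion in set(emotions):
        indexes = [i for i, e in enumerate(emotions) if e == emotion]
        clusters = leader_clusters([features[i] for i in indexes], radius)
        for i, k in zip(indexes, clusters):
            refined[i] = f"{emotion}#{k}"
    return refined
```

The refined labels can replace the original classes when training any feature-based classifier; the choice of `radius` controls how many distinct responses per emotion are exposed and would need to be tuned per modality.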

To guarantee the completeness of feature-based models, two strategies are
suggested. The first option is to combine these models with the output of
sequential generative models, as they provide different but complementary
views (Henriques et al., 2013). However, this option does not solve the fact
that disclosing prototype values for the features of each emotion can be
misleading. For example, mean or median values are inconclusive when variance
is high. Moreover, simultaneously outputting the feature and a deviation
metric can still be misleading, as the observed values can often hardly be
approximated by Gaussian distributions (Lessard, 2006). Therefore, we propose
a second option, which is to rely on an approximated distribution for the
most significant and discriminative sets of features. At least, Gaussian and
Poisson tests should be considered for a more correct interpretation of how a
particular feature characterizes a particular emotion.
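A hedged sketch of the second option is given below. The paper does not name a specific Gaussian test, so the Jarque-Bera statistic is used here purely as an assumption; the function names and the 0.05 significance level are also illustrative. The sketch reports mean and deviation only when normality is not rejected and falls back to quartiles otherwise. A Poisson check for count-valued features is not covered.

```python
import math
import statistics


def jarque_bera_pvalue(values):
    """Asymptotic p-value of the Jarque-Bera normality test (stdlib only)."""
    n = len(values)
    mean = statistics.fmean(values)
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    skewness = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0
    jb = n / 6.0 * (skewness ** 2 + excess_kurtosis ** 2 / 4.0)
    # Survival function of a chi-square distribution with 2 degrees of freedom.
    return math.exp(-jb / 2.0)


def describe_feature(values, alpha=0.05):
    """Summarize one feature for one emotion with a distribution-aware descriptor."""
    if jarque_bera_pvalue(values) >= alpha:
        return {"shape": "gaussian-like",
                "mean": statistics.fmean(values),
                "stdev": statistics.stdev(values)}
    q1, median, q3 = statistics.quantiles(values, n=4)
    return {"shape": "non-gaussian", "median": median, "iqr": (q1, q3)}
```

The Jarque-Bera p-value is only asymptotically valid, so for the small samples typical of emotion-elicitation experiments the outcome should be read as a rough indication rather than a formal test result. The sketch also assumes the feature is not constant (non-zero variance).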

To guarantee the discriminative power of the se-

lected features, not only feature selection should

be considered, but also feature weighting methods

should be adopted in order to rank the features ac-

cording to their ability to separate emotions (Liu and

Motoda, 1998). However, the majority of these meth-

ods rely on metrics, such as entropy ratios, that are

only prepared to deal with differentiation at an indi-

vidual level. Thus, we advise the adoption of feature

weighting methods that are additionally able to sep-

arate sets of emotions (e.g. isolate intense emotions

or separate positive from negative valence), such as

some methods for the analysis of the variance of sta-

tistical models (Surendiran and Vadivel, 2011).
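To illustrate the difference between weighting at the level of individual emotions and at the level of sets of emotions, the sketch below computes a between-group over within-group variance ratio for one feature under an arbitrary grouping of the labels. This ANOVA-style ratio is an assumption chosen for illustration (it omits the degrees-of-freedom normalization of the F statistic) and is not the specific method of (Surendiran and Vadivel, 2011); `separation_score` and the `grouping` argument are hypothetical names.

```python
import statistics


def separation_score(values, labels, grouping=None):
    """Between-group over within-group variation of a single feature.

    `grouping` maps each emotion label to a group name; when omitted,
    every emotion forms its own group.
    """
    groups = {}
    for value, label in zip(values, labels):
        key = grouping[label] if grouping else label
        groups.setdefault(key, []).append(value)
    overall = statistics.fmean(values)
    between = sum(len(g) * (statistics.fmean(g) - overall) ** 2
                  for g in groups.values())
    within = sum((v - statistics.fmean(g)) ** 2
                 for g in groups.values() for v in g)
    return between / within if within > 0 else float("inf")
```

Scoring the same feature under a one-vs-rest grouping for each emotion and under candidate groupings (for instance positive vs. negative valence) makes it possible to retain features that separate sets of emotions even when they do not isolate any single emotion.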

Finally, substantial research has been dedicated to guaranteeing the
usability of feature-based models, with particular incidence on their
understandability, reproducibility and ability to retrieve embedded
knowledge. Rule-based models, such as decision trees, and Bayesian networks
are illustrative usable models as-is. In order to foster the usability of
more complex models, such as support vector machines in high-dimensional
spaces and neural networks, visualization techniques and rule-extraction
methods have been largely proposed (Mörchen, 2006).

3.2 Generative Classification Models

For generative models, the physiological responses for a specific emotion are
characterized by the learned lattice. However, lattices are commonly complex
and, therefore, providing them as-is as descriptors is not a good option.
Specific states can have a large number of equi-probable emissions, and there
is a heightened complexity associated with the identification of the most
probable paths (Murphy, 2002). Therefore, we propose two strategies in order
to guarantee a more delineated convergence and the retrieval of the most
probable prototype patterns. The combined application of these strategies
guarantees the flexibility of the target generative models.

The first strategy relies on an adapted learning set-

ting that guarantees acceptable levels of convergence

in combination with an algorithmic search that is able

to decode the most probable sequences from the lat-

tices. In the context of HMMs, Viterbi is a learning

setting that guarantees acceptable levels of conver-

gence for both the transition and emission probabil-

ities (Murphy, 2002). Other more restrictive learning

settings can be adopted either recurring to minimum

entropy prior (Brand, 1999) or mixtures of Dirichlets

(Brown et al., 1993). The goal here is to guarantee

that a only a compact set of symbols can be emitted

per state. Retrieving the most probable sequential re-

sponses per emotion is simply a matter of defining

efficient methods to explore the most probable transi-

tions and emissions (Henriques and Antunes, 2014).

For this goal, the specification of the minimum prob-

ability threshold for the path and for individual emis-

sions and transitions are the adopted criteria to decode

the abstractions of the signal for a particular emotion.

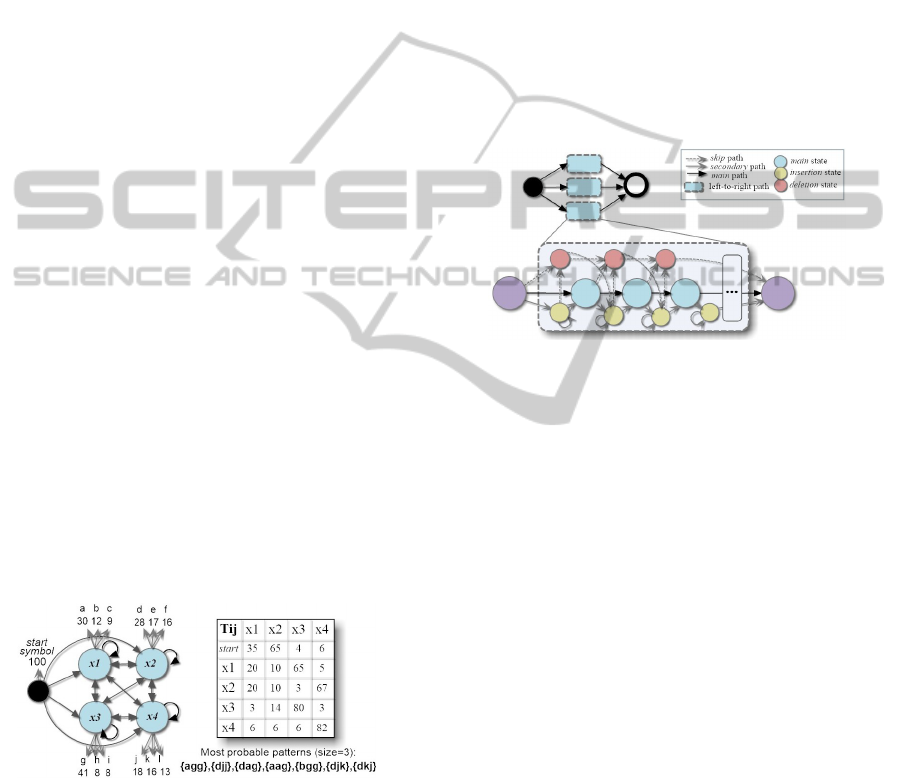

This step is illustrated in Fig.1.

Figure 1: Decoding of most probable signal responses for

an emotion-centered fully-interconnected Markov model.
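The threshold-based decoding described above can be sketched as a pruned depth-first search. This is only an illustration under stated assumptions: the lattice is given as plain dictionaries (`initial`, `transitions`, `emissions`), sequences of a fixed `length` are decoded, and the threshold names `min_step` and `min_path` are hypothetical. It is not the decoding method of (Henriques and Antunes, 2014).

```python
from collections import defaultdict


def decode_probable_sequences(initial, transitions, emissions, length,
                              min_step=0.2, min_path=0.05):
    """List symbol sequences reachable through sufficiently probable paths.

    A path is kept only if each emission and transition is >= min_step and
    its running joint probability stays >= min_path.
    """
    totals = defaultdict(float)

    def extend(state, symbols, prob):
        for symbol, p_emit in emissions[state].items():
            p = prob * p_emit
            if p_emit < min_step or p < min_path:
                continue  # prune improbable emissions
            sequence = symbols + (symbol,)
            if len(sequence) == length:
                totals[sequence] += p
                continue
            for nxt, p_trans in transitions[state].items():
                if p_trans >= min_step and p * p_trans >= min_path:
                    extend(nxt, sequence, p * p_trans)

    for state, p_init in initial.items():
        if p_init >= min_path:
            extend(state, (), p_init)
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)
```

Because pruned paths are ignored, the value attached to each sequence is a lower bound on its generative probability. Relaxing the thresholds discloses progressively less probable behavior at the cost of a longer, less compact list.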

The second strategy enhances flexibility through the use of more expressive
architectures for the lattices. The commonly adopted fully-interconnected
lattices are complex. Additionally, the more restrictive left-to-right
architectures are only prepared to abstract one major physiological response
per emotion (Murphy, 2002), degrading the flexibility of the model. In a
left-to-right architecture, lattices have a set of main states from which
abstracted sequential responses can be derived. To tackle the problems of
these commonly adopted architectures, we propose the use of multi-path
architectures (Henriques and Antunes, 2014). A multi-path architecture is the
sound parallel composition of left-to-right architectures. The number of
paths must exceed the expected number of distinct physiological responses per
emotion. When such knowledge is not available, the number of paths can be
increased until a specific convergence criterion is satisfied. An
illustrative multi-path architecture is presented in Fig.2. Under this
architecture, the retrieval of sequential prototype behavior is just a matter
of retrieving the most probable sequences from the set of main states from
each one of these paths.

Figure 2: Multi-path architecture: composition of left-to-

right architectures to model distinct responses per emotion.
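The structure of a multi-path architecture can be sketched as a table of allowed transitions. The details below are assumptions made for illustration, since the paper only states that paths are parallel left-to-right compositions: a shared `start` and `end` state, a self-loop on every main state, and uniform initial probabilities. State names such as `p0s1` are hypothetical.

```python
def multipath_topology(n_paths, states_per_path):
    """Return {state: [allowed next states]} for parallel left-to-right paths."""
    topology = {"start": [f"p{p}s0" for p in range(n_paths)], "end": []}
    for p in range(n_paths):
        for s in range(states_per_path):
            name = f"p{p}s{s}"
            is_last = s == states_per_path - 1
            successor = "end" if is_last else f"p{p}s{s + 1}"
            # Stay in the state or advance along the same path; never switch path.
            topology[name] = [name, successor]
    return topology


def uniform_transitions(topology):
    """Initial transition probabilities, uniform over the allowed successors."""
    return {state: {nxt: 1.0 / len(successors) for nxt in successors}
            for state, successors in topology.items() if successors}
```

Transitions absent from the table stay at zero probability during training, so each path can only converge towards one sequential response. Increasing `n_paths` until a convergence criterion is met follows the procedure described in the text.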

To promote completeness, the most probable physiological responses can be
synthesized according to regular expressions. Additionally, two further
options can be adopted. First, a hierarchical disclosure of less probable
behaviors can be obtained by discovering probable sequences in lattices under
more relaxed thresholds. Second, these regular expressions can be integrated
with the complementary feature-based classification models.

Since each lattice is learned independently for

each emotion, differences among lattices can be very

subtle, which deteriorates the ability to retrieve dis-

criminative probable sequences. In order to guarantee

the discriminative power of generative models, two

strategies can be adopted. First, after learning the lat-

tices, a method based on graph differences can be ap-

plied in order to accentuate the points of divergence

among a set of lattices by adapting their transition

and emission probabilities (Murphy, 2002). Second,

methods for the mining of discriminative frequent se-

quences can be adopted in order to retrieve sequential

behavior that is highly specific for one or few of the

overall lattices (emotions) (Han et al., 2007).

The usability of generative models can be achieved by resorting to two major
strategies. The first is to list the most probable sequential behavior for
each emotion by decoding the lattices. Similarly to feature-based models,
weights or data structures can be used to prioritize and organize the most
probable sequential behaviors per emotion, promoting usability. Second,
multi-path architectures can be visually displayed due to their highly
constrained transition paths. In this setting, only the main states per path
need to be displayed, and only the most probable emissions per state should
be included.

3.3 Unsupervised Local Models

Feature-based and generative classification models

can be extended and combined for the delivery of ade-

quate descriptive models. In this section, we see how

unsupervised models can be adopted to enhance the

properties of the target descriptive models. In partic-

ular, we focus our discussion on two major types of

local models: (bi)clustering models and temporal pat-

tern mining models.

Biclusters can be either used over feature-based

datasets to find subsets of instances with strong cor-

relation among a subset of features (Madeira and

Oliveira, 2004) or over signal-based datasets to find

subsets of instances correlated with local properties

of the signal (Madeira et al., 2010). In particular, ex-

tensions are available in literature to allow for scaling

and shifting biclusters for both options. Although a bicluster can be seen as
a simple feature, it is of interest to capture it within descriptive models
not as a simple boolean variable (indicating whether or not it is discovered
for a specific emotion) but in a way that further discloses its properties.
It is therefore important to guarantee that this disclosure is compliant
with the target requirements. Models of biclusters

are flexible and, by nature, not complete. To guaran-

tee the discriminative power of these models, mean-

ing that only discriminative biclusters are discovered,

several strategies have been proposed (Wang et al.,

2010). These same strategies can be used to compose

models where biclusters are ranked by discriminatory

relevance, which fosters their usability.

Multiple temporal patterns have been proposed, such as sequential patterns,
calendric rules, temporal association rules, motifs, episodes, containers and
partially-ordered tones (Mueen et al., 2009; Mörchen, 2006). Each one holds
different properties that have been largely considered to be of interest for
the analysis of signal-based data (Geurts, 2001). For instance, in (Leite et
al., 2013), sequential patterns have been retrieved to visually display the
differences among multiple affective states. In fact, and similarly to
biclusters, temporal patterns can be captured as a boolean feature by feature
extraction methods. However, in order to zoom in on their characteristics for
emotion description, more detailed models can be targeted. In particular,
when discriminative temporal patterns are considered (Exarchos et al., 2008;
Tseng and Lee, 2009), these models are commonly a ranking of temporal
patterns according to their confidence in relation to a particular emotion
(percentage of instances supporting this pattern for a particular emotion
from the overall dataset) (Li et al., 2001). Again, although completeness
cannot be achieved (unless feature-based or generative models are also
present), the remaining properties can be satisfied by resorting to
principles from methods designed to find structured models of compact
discriminative patterns (Tseng and Lee, 2009).
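A ranking of temporal patterns by confidence can be sketched as follows. The sketch assumes that signals have already been discretized into symbol sequences and that candidate patterns are supplied by a separate mining step; it interprets confidence as the fraction of instances containing the pattern that carry the target emotion, which is one reading of the definition above. The function names are illustrative.

```python
def contains(sequence, pattern):
    """True if `pattern` occurs in `sequence` as a (possibly gapped) subsequence."""
    remaining = iter(sequence)
    return all(symbol in remaining for symbol in pattern)


def rank_patterns(sequences, emotions, patterns, target):
    """Rank patterns by confidence, then support, with respect to `target`."""
    ranked = []
    for pattern in patterns:
        covered = [emotion for sequence, emotion in zip(sequences, emotions)
                   if contains(sequence, pattern)]
        if covered:
            hits = covered.count(target)
            confidence = hits / len(covered)  # share of matches labeled `target`
            support = hits / len(sequences)   # share of the whole dataset
            ranked.append((pattern, confidence, support))
    ranked.sort(key=lambda row: (row[1], row[2]), reverse=True)
    return ranked
```

Keeping only the top-ranked patterns per emotion yields a compact list, and reporting support next to confidence helps avoid promoting patterns that are specific to an emotion but observed in very few instances.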

4 CONCLUSIONS

In this work we introduce the task of emotion de-

scription from physiological signals and motivate its

relevance for measuring affective interactions. Four

structural properties for the definition of useful de-

scriptive models are synthesized. They are: flexibil-

ity, discriminative power, completeness and usability.

The limitations from considering emotion description

as a byproduct of emotion recognition are covered. In

particular, we explore why feature-based and gener-

ative classification models are not able to satisfy the

introduced properties.

In order to overcome the problems associated with

existing models, this work proposes multiple strate-

gies to extend existing models in order to guarantee

the delivery of robust descriptive models. This set of

strategies, derived from theoretical and empirical evi-

dence, is the central contribution of our work. In par-

ticular, we show how to improve the flexibility, dis-

criminative power, completeness and usability of su-

pervised models (either deterministic or generative)

and unsupervised models by resorting to state-of-the-art

methods from the fields of data mining, signal pro-

cessing and multivariate analysis.

Promoting the quality of descriptive physiological

models of emotions opens a new door for the psycho-

physiological study and real-time monitoring of af-

fective interactions. Therefore, in a context where the

use of non-intrusive wearable sensors is rapidly increasing, this paper
establishes solid foundations for upcoming contributions to this novel and
critical field of research.

ACKNOWLEDGEMENTS

This work was supported by FCT (Fundação para a Ciência e a Tecnologia) under
the project PEst-OE/EEI/LA0021/2013, by the project EMOTE from the EU 7th
Framework Program (FP7/2007-2013) under grant agreement n.317923, and under
the PhD grant SFRH/BD/75924/2011.

REFERENCES

Andreassi, J. (2007). Psychophysiology: Human Behavior

And Physiological Response. Lawrence Erlbaum.

Berthold, M. and Hand, D. J., editors (1999). Intelligent

Data Analysis: An Introduction. Springer-Verlag New

York, Inc., Secaucus, NJ, USA, 1st edition.

Bos, D. O. (2006). EEG-based emotion recognition: the

influence of visual and auditory stimuli. Emotion,

57(7):1798–806.

Brand, M. (1999). Structure learning in conditional proba-

bility models via an entropic prior and parameter ex-

tinction. Neural Comput., 11(5):1155–1182.

Brown, M., Hughey, R., Krogh, A., Mian, I. S., Sjölander,
K., and Haussler, D. (1993). Using Dirichlet mixture
priors to derive hidden Markov models for protein
families. In Int. Sys. for Molecular Bio., pages 47–55.
AAAI Press.

Burges, C. J. C. (1998). A tutorial on support vector

machines for pattern recognition. Data Mining and

Knowledge Discovery, 2:121–167.

Cacioppo, J., Tassinary, L., and Berntson, G. (2007). Hand-

book of psychophysiology. Cambridge University

Press.

Ekman, P. and W., F. (1988). Universals and cultural differ-

ences in the judgments of facial expressions of emo-

tion. Personality and Social Psychology, 53:712–717.

Exarchos, T., Tsipouras, M., Papaloukas, C., and Fotiadis,

D. (2008). A two-stage methodology for sequence

classification based on sequential pattern mining and

optimization. Data Knowl. Eng., 66(3):467–487.

Geurts, P. (2001). Pattern extraction for time series clas-

sification. In PKDD, pages 115–127, London, UK.

Springer-Verlag.

Guimar

˜

aes, G. (2000). The induction of temporal grammat-

ical rules from multivariate time series. In Colloquium

on Grammatical Inference: Alg. and App., pages 127–

140, London, UK. Springer-Verlag.

Haag, A., Goronzy, S., Schaich, P., and Williams, J. (2004).

Emotion recognition using bio-sensors: First steps to-

wards an automatic system. In Affective D.Sys., vol-

ume 3068 of LNCS, pages 36–48. Springer.

Han, J., Cheng, H., Xin, D., and Yan, X. (2007). Frequent

pattern mining: current status and future directions.

Data Min. Knowl. Discov., 15(1):55–86.

Henriques, R. and Antunes, C. (2014). Learning predictive

models from integrated healthcare data: Capturing

temporal and cross-attribute dependencies. In HICSS.

IEEE.

Henriques, R. and Paiva, A. (2014). Seven principles to

mine flexible behavior from physiological signals for

effective emotion recognition and description in affec-

tive interactions. In PhyCS.

Henriques, R., Paiva, A., and Antunes, C. (2012). On the

need of new methods to mine electrodermal activity

in emotion-centered studies. In AAMAS’12, 8th IW on

ADMI. Springer-Verlag LNAI series.

Henriques, R., Paiva, A., and Antunes, C. (2013). Access-

ing emotion patterns from affective interactions using

electrodermal activity. In Affective Comp. and Intel.

Interaction (ACII). IEEE Computer Society.

Jerritta, S., Murugappan, M., Nagarajan, R., and Wan, K.

(2011). Physiological signals based human emotion

recognition: a review. In CSPA, 2011 IEEE 7th Inter-

national Colloquium on, pages 410 –415.

Kulic, D. and Croft, E. A. (2007). Affective state estimation

for human-robot interaction. Trans. Rob., 23(5):991–

1000.

Lang, P. (1995). The emotion probe: Studies of motivation

and attention. American psychologist, 50:372–372.

Leite, I., Henriques, R., Martinho, C., and Paiva, A. (2013).

Sensors in the wild: Exploring electrodermal activ-

ity in child-robot interaction. In HRI, pages 41–48.

ACM/IEEE.

Lessard, C. S. (2006). Signal Processing of Random Physi-

ological Signals. S.Lectures on Biomedical Eng. Mor-

gan and Claypool Publishers.

Li, W., Han, J., and Pei, J. (2001). Cmar: Accurate and effi-

cient classification based on multiple class-association

rules. In ICDM, pages 369–376. IEEE CS.

Liu, H. and Motoda, H. (1998). Feature Selection for

Knowledge Discovery and Data Mining. Kluwer Aca-

demic Pub.

Maaoui, C., Pruski, A., and Abdat, F. (2008). Emotion

recognition for human-machine communication. In

IROS, pages 1210 –1215. IEEE/RSJ.

Madeira, S., Teixeira, M. N. P. C., S

´

a-Correia, I., and

Oliveira, A. (2010). Identification of regulatory mod-

ules in time series gene expression data using a lin-

ear time biclustering algorithm. IEEE/ACM TCBB,

1:153–165.

Madeira, S. C. and Oliveira, A. L. (2004). Bicluster-

ing algorithms for biological data analysis: A sur-

vey. IEEE/ACM Trans. Comput. Biol. Bioinformatics,

1(1):24–45.

Mitsa, T. (2009). Temporal Data Mining. DMKD. Chapman

& Hall/CRC.

M

¨

orchen, F. (2006). Time series knowledge mining. Wis-

senschaft in Dissertationen. G

¨

orich & Weiersh

¨

auser.

Mueen, A., Keogh, E. J., Zhu, Q., Cash, S., and Westover,

M. B. (2009). Exact discovery of time series motifs.

In SDM, pages 473–484.

Murphy, K. (2002). Dynamic Bayesian Networks: Repre-

sentation, Inference and Learning. PhD thesis, UC

Berkeley, CS Division.

Nanopoulos, A., Alcock, R., and Manolopoulos, Y. (2001).

Information processing and technology. chapter

Feature-based classification of time-series data, pages

49–61. Nova Science Pub.

Oatley, K., Keltner, and Jenkins (2006). Understanding

Emotions. Blackwell P.

Picard, R. W. (2003). Affective computing: challenges.

International Journal of Human-Computer Studies,

59(1-2):55–64.

DescriptiveModelsofEmotion-LearningUsefulAbstractionsfromPhysiologicalResponsesduringAffectiveInteractions

399

Picard, R. W., Vyzas, E., and Healey, J. (2001). Toward

machine emotional intelligence: Analysis of affective

physiological state. IEEE Trans. Pattern Anal. Mach.

Intell., 23(10):1175–1191.

Povinelli, R. J., Johnson, M. T., Lindgren, A. C., and Ye,

J. (2004). Time series classification using gaussian

mixture models of reconstructed phase spaces. IEEE

Trans. on Knowl. and Data Eng., 16(6):779–783.

Rabiner, L. and Juang, B. (2003). An introduction to hidden

Markov models. ASSP Magazine, 3(1):4–16.

Rani, P., Liu, C., Sarkar, N., and Vanman, E. (2006). An em-

pirical study of machine learning techniques for affect

recognition in human-robot interaction. Pattern Anal.

Appl., 9(1):58–69.

Surendiran, B. and Vadivel, A. (2011). Feature selection

using stepwise anova discriminant analysis for mam-

mogram mass classification. IJ on Signal Image Proc.,

2(1):4.

Tseng, V. and Lee, C.-H. (2009). Effective temporal data

classification by integrating sequential pattern min-

ing and probabilistic induction. Expert Sys.App.,

36(5):9524–9532.

Wagner, J., Kim, J., and Andre, E. (2005). From physiologi-

cal signals to emotions: Implementing and comparing

selected methods for feature extraction and classifica-

tion. In ICME, pages 940 –943. IEEE.

Wang, M., Shang, X., Zhang, S., and Li, Z. (2010). Fdclus-

ter: Mining frequent closed discriminative bicluster

without candidate maintenance in multiple microarray

datasets. In ICDM Workshops, pages 779–786. IEEE

CS.

Wu, C.-K., Chung, P.-C., and Wang, C.-J. (2011). Extract-

ing coherent emotion elicited segments from physio-

logical signals. In WACI, pages 1–6. IEEE.

PhyCS 2014 - International Conference on Physiological Computing Systems