Domain-dependent and Observer-dependent Follow-up of Human Activity

A Normative Multi-agent Approach

Benoît Vettier and Catherine Garbay

Laboratoire d'Informatique de Grenoble (LIG), AMA team, Université Joseph Fourier / CNRS, Grenoble, France

Keywords: Cognitive Systems, Ambient Intelligence, Agent Models and Architectures, Normative Multi-agent Systems.

Abstract: We propose in this paper a novel approach for human activity follow-up that draws on a distinction between

domain-dependent and observer-dependent viewpoints. While the domain-dependent (or intrinsic)

viewpoint calls for the follow-up and interpretation of human activity per se, the observer-dependent (or

extrinsic) viewpoint calls for a more subjective approach, which may involve an evaluative dimension,

regarding the human activity or the interpretation process itself. Of interest are the mutual dependencies that

tie both processes over time: the observer viewpoint is known to shape domain-dependent interpretation,

while domain-dependent interpretation is core to the evolution of the observer viewpoint. We make a case

for using a normative multi-agent approach to design monitoring systems articulating both viewpoints. We

illustrate the potential of the proposed approach with examples from daily-life scenarios.

1 INTRODUCTION

We propose in this paper a novel architecture for

human activity monitoring, which draws on a

distinction between two complementing universes of

discourse: the intrinsic universe of the activity

domain, and the extrinsic universe of the observer(s)

following this activity. While the domain-dependent

(or intrinsic) viewpoint calls for the follow-up and

interpretation of human activity per se, the observer-

dependent (or extrinsic) viewpoint calls for a more

subjective approach, which may involve an

evaluative dimension, regarding the human activity

or the interpretation process itself. Of interest are the

mutual dependencies that tie both processes over

time: the observer viewpoint is known to shape

domain-dependent interpretation, while domain-

dependent interpretation is core to the evolution of

the observer viewpoint. We make a case for using a

normative multi-agent approach to design monitoring

systems articulating both viewpoints. In this

approach, we distinguish between intrinsic agents,

whose role is to build interpretation hypotheses from

the data at hand, and extrinsic agents, whose role is

to observe, adapt and frame the former process. Both

kinds of agents are launched by norms that express

in a declarative way the intrinsic and extrinsic

constraints that shape their activity. They share a

common multidimensional trace and evolve in

mutual dependency: processing by intrinsic agents may

result in the launching of extrinsic agents, which

may in turn frame the activities of intrinsic agents.

We illustrate the potential of the proposed approach with

examples from daily-life scenarios.

2 STATE OF THE ART

We consider interpretation as a matter of generating,

selecting and testing hypotheses in the face of evolving

data, contexts and requirements. Context

representation is a major issue in both Monitoring

and Ambient Intelligence (Brémond and Thonnat,

1998). In monitoring, human activity is captured in

the form of multi-sensor temporal data, which are

redundant, incomplete and ambiguous: the

physiological profile of a person may vary according

to various factors like the time of the day or the

geographical location; conversely, a given set of

data may correspond to several different patterns of

activity. Context sensitivity, personalization and pro-

activeness are important properties of the system to

be designed; their embodiment within broader social

contexts calls for considering other factors like


Vettier B. and Garbay C.

Domain-dependent and Observer-dependent Follow-up of Human Activity - A Normative Multi-agent Approach.

DOI: 10.5220/0004918106730678

In Proceedings of the 6th International Conference on Agents and Artificial Intelligence (ICAART-2014), pages 673-678

ISBN: 978-989-758-015-4

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

compliance with social conventions, awareness of

the inner state of emotion or motives, or the ability

to act and interact in a consistent and transparent

way (Aarts and de Ruyter, 2009). The analysis

process must therefore involve several levels of

abstraction, from the local level of the data to a more

global level of norms, functional requirements and

goals (Weber and Glynn, 2006). Although some

contextual situations are fairly stable, discernible,

and predictable, there are many others that are not:

according to (Greenberg, 2001), context must be seen as a

dynamic construct evolving with time, episodes of

use, social interaction, internal goals, and local

influences. Following (Klein et al., 2006), we will

approach interpretation not as a state of knowledge,

but rather as “a process of fitting data into a frame

that is continuously replaced and adapted to fit the

data”. As a consequence, we may not reduce the

understanding process to the description and

handling of contextual elements, nor to the mere

application of data-driven or goal-driven methods.

On the contrary, this process must be seen as a

constant perception-action loop, which consists of

focus, perception, interpretation, context modelling

and anticipation. The paradigm of Multi-Agent

Systems allows for multiple, heterogeneous entities

to be handled through a unified communication /

cooperation frame (Isern et al., 2010). The

heterogeneity of agents is considered as a

requirement to encompass the variety of states a

person can find oneself in; a large knowledge base

of interconnected interpretation models can thus be

explored, as a dynamic population of multiple

hypotheses on several levels of abstraction. A law

enforcement approach has been proposed (Carvalho

et al., 2005) to support dependable open software

development in the context of ambient intelligence

environments. Following these lines of approach, we

propose using the normative agent paradigm as a

way to design flexible context-aware norm-driven

systems. Normative MAS are a class of Multi-Agent

Systems in which agent behaviour is not only guided

by their mere individual objectives but also

regulated by norms specifying in a declarative way

which actions are considered as legal or not by the

group (Castelfranchi, 2006). Agents acting in such a

system may be seen as “socially autonomous”: they

do not only pursue their own goals but are also able

to adopt goals from the outside, and act in the best

interest of the society. An additional control over the

adoption of goals is therefore needed, in the form of

norms, which operate as external incentives for

action (Dignum et al., 2000). These norms are

designed as condition-action rules, triggered by a

dedicated engine, and result in agent notifications.

Agents subscribe to norms and may or may not adopt

them; non-adoption may result in penalties. Norms may

finally be adapted to cope with the evolution of

context (Boissier et al., 2011). The system dynamics

therefore depends not only on the agent dynamics

but also on the dynamics of the norms.

3 PROPOSED ARCHITECTURE

3.1 Proposed View

Following (Hébert, 2002), we propose to

distinguish between intrinsic and extrinsic

perspectives on interpretation. Intrinsic interpretation

is domain-dependent: it is based on attributes and

classes that are inherent to the activity domain.

Intrinsic interpretation drives the construction of

hypotheses, which may be concurrent and

contradictory, as to whether a person is running,

walking, or staying quiet. Extrinsic interpretation is

observer-dependent: it relies on attributes and

classes that are inherent to the observer domain.

Extrinsic interpretation drives the construction of an

evaluative view over human activity, regarding

whether this activity is normal or alarming. It may

further apply to the way intrinsic interpretation is

conducted and provides a view as to whether this

process is efficient, informative, or for example open

or restricted to few hypotheses. These two universes

of discourse are mutually dependent. Indeed, the

evolution of intrinsic activity properties may yield

the evolution of extrinsic evaluation models, e.g. a

skiing or walking activity calls for different evaluative

models. Conversely, the observer’s extrinsic

evaluation provides a perspective view that drives

what is looked at, e.g. focus on speed if there is a

risk of fall for a skiing activity, focus on location if

there is a risk of getting lost for a walking activity.

Interpretation is modelled as a dynamic

exploration process driven in a context-sensitive

way. As proposed in a previous paper (Vettier and

Garbay, 2014), it is abductive in nature and

modelled as a perception-action loop combining

prediction and verification stances. This process is

situated with respect to a set of intrinsic and

extrinsic requirements. Intrinsic requirements are

domain-dependent: interpretation hypotheses are to

be grounded into the evidence of data and the realm

of the activity at hand. Their confidence has to be

high enough for the hypothesis to be maintained:

otherwise other hypotheses must be evaluated.


Extrinsic requirements are domain-independent but

observer-dependent (focus on a given range of

activity, drive the interpretation process toward a

given efficiency). These heterogeneous frames of

interpretation are subject to evolution, to cope with

the evolving contexts or requirements from the

observer: the current focus or expected performance

may be modified in the face of unexpected critical

states, for example. These features of monitoring

systems were identified early by (Hayes-Roth, 1995).

We propose normative, rule-governed agents as a

way to design declarative, flexible context-aware

policy-driven systems, embedding sense-making

within constraints from various domains. As

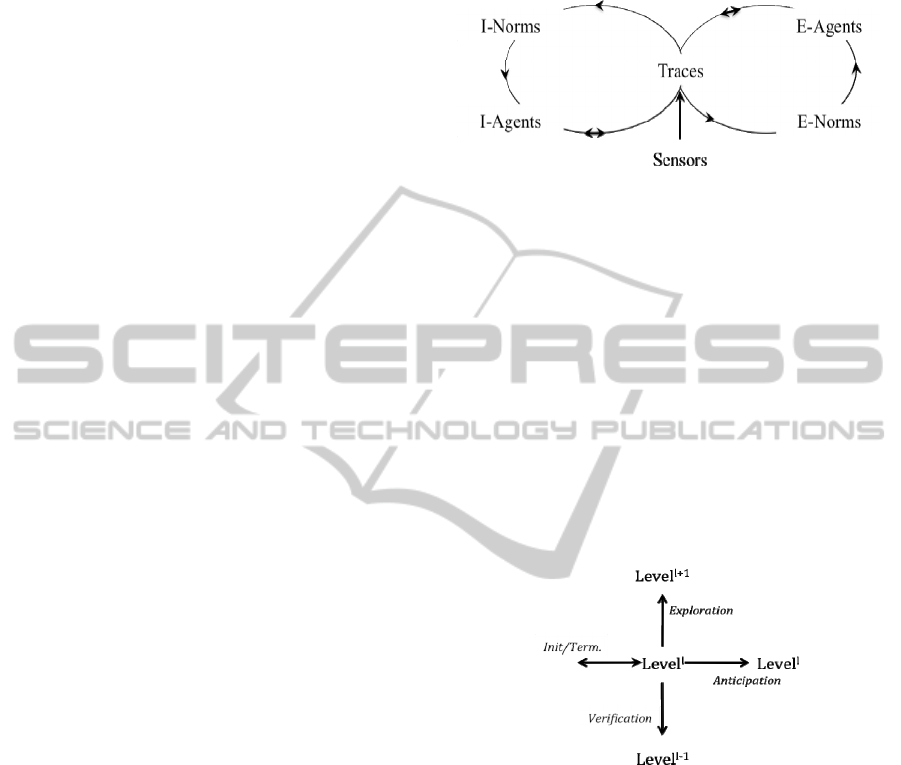

illustrated in Figure 1, there are two main types of

agents, intrinsic and extrinsic. These agents are

formalized as a tuple: A = ⟨Role, Range, Regular,

Corrective⟩. Role denotes the role of the agent

(interpretation hypothesis followed by the intrinsic

agent, requirement checked by the extrinsic agent).

Range denotes the level of achievement of the

agent’s role (confidence of the interpretation process

or fitness of requirement). Regular defines regular

methods for data interpretation (intrinsic agent) or

requirement checking (extrinsic agent), depending

on the type of the agent. Corrective

defines how to regulate the interpretation process

(deposit new interpretation hypotheses, modify

intrinsic or extrinsic requirements). The agent is

situated with respect to a shared, multidimensional

trace. This trace is formalized as a tuple: T =

⟨IntState, IntPast, Intrinsic, Extrinsic⟩. It involves

information about the current and past state of

interpretation (IntState, IntPast): current hypotheses

with their confidence degree, running agents, current

interpretation efficiency… as well as information

about current Intrinsic and Extrinsic requirements.

The agent activity is framed by a set of norms

expressing intrinsic or extrinsic requirements. The

norms are triggered depending on patterns from the

trace and launch the agents. Any norm, intrinsic or

extrinsic, is formalized as a tuple: N = ⟨Type,

Weight, Context, Flag, Bearer, Object⟩. Type

defines the type of the norm. Weight allows the norms

to be prioritized. Context represents an overall

evaluation condition (such as the overall system state or

human situation). Flag allows the requirement to be

bypassed when necessary. Bearer denotes the

targeted element (agent or trace). Object is a

compound field, typically written as “launch

(conditions actions)”, characterizing the conditional

action attached to the norm (launching of agent

behaviour). The role of intrinsic norms and agents is

to evolve the state of interpretation while the role of

extrinsic norms and agents is to evolve the frame of

interpretation.

Figure 1: A dual perspective on human activity follow-up.

I-agents perform data interpretation, governed by intrinsic

norms. This domain-dependent interpretation process is

framed by E-Agents, working under extrinsic or observer-

dependent requirements.
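The three tuples introduced above can be sketched as plain data structures. The following is only a minimal illustration under stated assumptions, not the authors' implementation: the field types, the reading of Flag as a boolean that bypasses the norm when true, the "heaviest norm first" ordering, the split of Object into a condition and an action name, and the function `fire_norms` are all hypothetical choices made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Trace:
    """Shared trace T = <IntState, IntPast, Intrinsic, Extrinsic>."""
    int_state: Dict[str, float] = field(default_factory=dict)  # hypothesis -> confidence
    int_past: List[Dict[str, float]] = field(default_factory=list)
    intrinsic: Dict[str, float] = field(default_factory=dict)  # e.g. thresholds
    extrinsic: Dict[str, float] = field(default_factory=dict)  # e.g. expected efficiency


@dataclass
class Agent:
    """A = <Role, Range, Regular, Corrective>."""
    role: str
    range: str                              # "low", "medium" or "high"
    regular: Callable[[Trace], object]
    corrective: Callable[[Trace], object]


@dataclass
class Norm:
    """N = <Type, Weight, Context, Flag, Bearer, Object>."""
    type: str                               # "intrinsic" or "extrinsic"
    weight: float
    context: Callable[[Trace], bool]
    flag: bool                              # assumption: True means "bypass this norm"
    bearer: str                             # role of the targeted agent
    condition: Callable[[Trace], bool]      # Object, conditions part
    action: str                             # Object, actions part: "regular" or "corrective"


def fire_norms(norms: List[Norm], agents: Dict[str, Agent],
               trace: Trace) -> List[Tuple[str, str]]:
    """Launch the behaviour of every applicable norm, heaviest norm first."""
    launched = []
    for norm in sorted(norms, key=lambda n: n.weight, reverse=True):
        if norm.flag or norm.bearer not in agents:
            continue
        if norm.context(trace) and norm.condition(trace):
            behaviour = getattr(agents[norm.bearer], norm.action)
            behaviour(trace)
            launched.append((norm.bearer, norm.action))
    return launched


# Usage: a low-confidence "Walking" hypothesis triggers a corrective behaviour
# that deposits a competing "Running" hypothesis with a null confidence.
trace = Trace(int_state={"Walking": 0.2})
walking = Agent(
    role="Walking",
    range="low",
    regular=lambda t: None,
    corrective=lambda t: t.int_state.setdefault("Running", 0.0),
)
norm = Norm(
    type="intrinsic", weight=1.0, context=lambda t: True, flag=False,
    bearer="Walking", condition=lambda t: t.int_state["Walking"] < 0.5,
    action="corrective",
)
print(fire_norms([norm], {"Walking": walking}, trace))
print(trace.int_state)
```

Keeping the norm declarative (a condition plus the name of a behaviour) rather than embedding the behaviour itself mirrors the separation the text draws between norms, which trigger, and agents, which act.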

3.2 I-Agents Life Cycle

The role of an I-Agent is to interpret incoming data

at given abstraction levels, according to a provided

field of perception and models. Reasoning is of an

abductive nature and the agents navigate among

several behaviours (Initialization, Exploration,

Anticipation, Termination), according to a life cycle

governed by intrinsic norms (Figure 2).

Figure 2: Agents life cycle as governed by intrinsic filters.

An intrinsic agent is a tuple: I-Agent = ⟨Hyp,

Conf, Verif, Pred⟩. Hyp denotes the hypothesis

followed by the agent and Conf a confidence range

(low, medium, high) for this hypothesis: this field is

time stamped. Verif denotes the Regular agent

behaviour: its role is to compute the confidence

from the provided components and

model. This confidence value will be deposited in

the trace and processed by norms. Pred denotes the

Corrective agent behaviour: its role is to propose

further interpretation hypotheses. We distinguish

between three prediction mechanisms: Anticipation,

Exploration and Termination. Anticipation is raised

by the corresponding norms in case of a lowered

confidence that persists over time: a transition


toward another hypothesis at the same abstraction

level has to be proposed. Conversely, Exploration is

raised by the corresponding norms in case of a high

confidence level that persists over time: a

transition toward a higher-level hypothesis may be

proposed, to open the current interpretation toward

new hypothesis spaces. The agents deposit the new

hypotheses in the Trace, with a null confidence.

They will be processed by dedicated norms, whose

role is to launch Intrinsic agents (Initialization

behaviour) for further processing (Verification).

Termination is launched in case of a low confidence

range persistent over some additional time, to stop

processing the corresponding hypothesis.

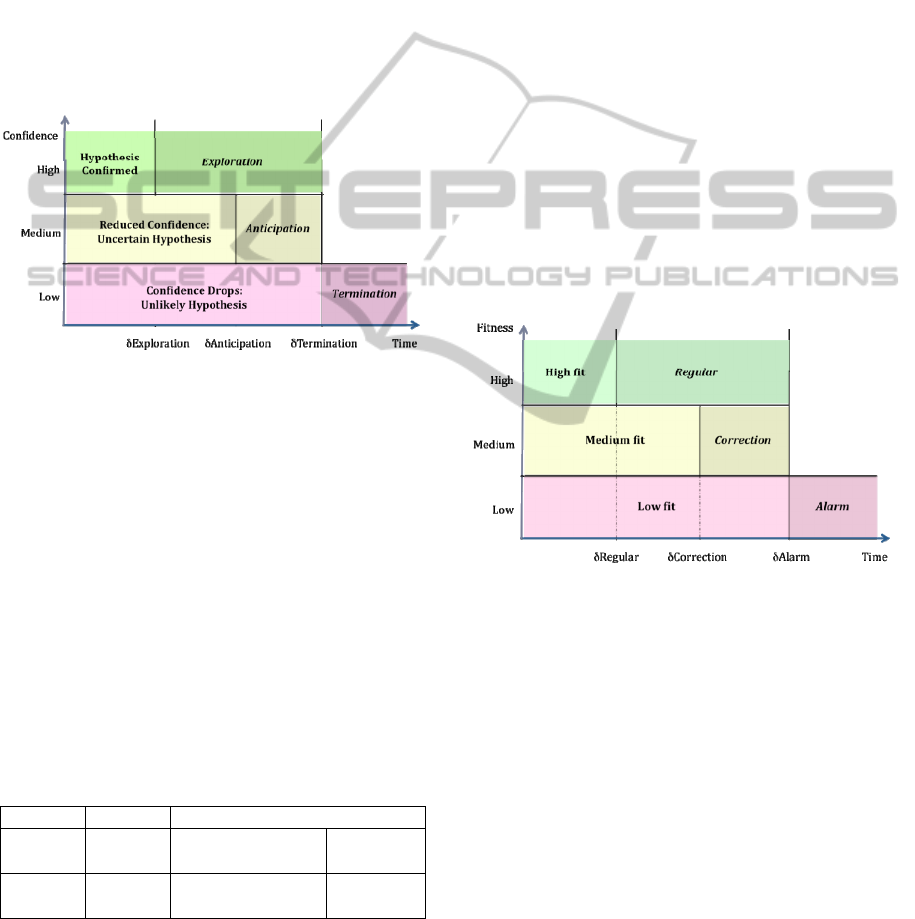

Figure 3: Correspondence between confidence degree and

I-Agents behaviours.

Figure 3 illustrates the correspondence between

Confidence level evolution and behaviour launch.

As may be seen, this process is regulated by several

parameters: two confidence thresholds, $C_{low}$ and $C_{medium}$, to assess whether a hypothesis has a low,

medium or high confidence, and three duration thresholds, $\delta_{Term}$, $\delta_{Ant}$ and $\delta_{Expl}$, to assess whether the

agent must be launched within an Exploration,

Anticipation or Termination behaviour. These

thresholds are part of the current agent Intrinsic

requirements and are shared through the Trace. They

may be subject to evolution, depending on the action

of Extrinsic agents.
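The correspondence between confidence evolution and behaviour launch can be sketched as a small decision function. This is a hedged reading of the description above, not the authors' code: the numeric threshold values, the strict comparisons, the names `IntrinsicRequirements` and `select_behaviour`, and the assumption that the Termination delay is longer than the Anticipation delay are all illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class IntrinsicRequirements:
    """Thresholds shared through the trace (all values are hypothetical)."""
    c_low: float = 0.3        # below: low confidence
    c_medium: float = 0.6     # below: medium confidence, otherwise high
    delta_term: float = 60.0  # seconds in the low range before Termination
    delta_ant: float = 20.0   # seconds below high before Anticipation
    delta_expl: float = 30.0  # seconds in the high range before Exploration


def confidence_range(conf: float, req: IntrinsicRequirements) -> str:
    """Map a numeric confidence to the low / medium / high range."""
    if conf < req.c_low:
        return "low"
    if conf < req.c_medium:
        return "medium"
    return "high"


def select_behaviour(conf: float, time_in_range: float,
                     req: IntrinsicRequirements) -> Optional[str]:
    """Return the behaviour to launch, or None if nothing is triggered yet.

    time_in_range is the time spent so far in the current confidence range.
    """
    rng = confidence_range(conf, req)
    if rng == "high":
        return "Exploration" if time_in_range > req.delta_expl else None
    if rng == "low" and time_in_range > req.delta_term:
        return "Termination"
    if time_in_range > req.delta_ant:
        return "Anticipation"
    return None


req = IntrinsicRequirements()
print(select_behaviour(0.9, 31.0, req))  # persistent high confidence
print(select_behaviour(0.5, 25.0, req))  # persistent medium confidence
print(select_behaviour(0.1, 61.0, req))  # low confidence for an extended time
```

Because the thresholds live in a separate object, an extrinsic agent could modify them at run time without touching the selection logic, which is the kind of regulation the text describes.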

Table 1: I-Norms examples (type, weights and flags omitted).

| Context | Bearer | Object: conditions | Object: actions |
|---|---|---|---|
| Conf Null | Physio. State | Conf < high & T > Anticipation | Anticipation |
| Conf Null | Activity State | Conf = high & T > Exploration | Exploration |

The role of I-Norms is to frame the interpretation

process according to intrinsic requirements. To this

end, they launch their targeted I-Agents into one of

the four behaviours of their life cycle, depending on their

current hypothesis confidence degree and on the

time spent within the same confidence range. Some

I-Norm examples are provided in Table 1.

3.3 E-Agents Life Cycle

The role of an E-Agent is to ensure the follow-up of the

interpretation process according to observer-

dependent requirements. An extrinsic agent is a

tuple: E-Agent = ⟨Requirt, Fit, Verif, Corr⟩. Requirt

denotes the requirement that the agent follows (e.g.

stay in a Basal physiological state, keep

interpretation Parsimonious or Readable…). Fit

denotes a fitness range (low, medium, high) for this

requirement: this field is time stamped. Verif denotes

the regular E-agent behaviour: check the extent to

which the requirement is followed. Corr denotes the

corrective agent behaviour. It is launched by E-

Norms in case of a lack of compliance with the

considered requirement. Its role is to ensure the

“fitness” between the interpretation process and the

observer’s requirements.

Figure 4: Correspondence between fitness level and E-

Agents behaviours.

We distinguish between three corrective

mechanisms: I-Corr, E-Corr and Alarm. I-Corr holds

when a modification of intrinsic requirements will

ensure a better fit of the interpretation process to

existing extrinsic requirements. On the contrary, E-

Corr holds when the extrinsic requirements themselves

have to evolve to fit some transitions in the

observed situation. Alarm will be raised in case of a

failure of the corrective attempts, which results in a

persistent lack of fit between the observer and the

interpretative process. Figure 4 illustrates the

correspondence between Fitness level and launching

of behaviours. As in Figure 3, this process is

regulated by several parameters. The role of the E-

Norm is to launch the proper regular or corrective

measures, depending on context. I-Corr (resp. E-


Corr) corrective measures will modify some intrinsic

(resp. extrinsic) parameters in the trace: e.g. rate of

anticipation, level of confidence required (resp.

expected level of performance, expected activity).

Examples of such norms are provided in Table 2.

Table 2: E-Norms examples.

| Context | Bearer | Object: conditions | Object: actions |
|---|---|---|---|
| Parsimony | I-Agent | NHyp > Parsimony | I-Corr ($T_{high}$) |
| Basal | E-Agent | Winded | E-Corr (Heart disease) |

The Parsimony norm in Table 2 relates to how

well the hypothesis generation works: its role is to

control the current number of hypotheses to be

actively processed. Both a lack and a plethora of likely

hypotheses bring ambiguity and uncertainty, and

therefore low effectiveness. A solution to correct

these deviations is to modify the confidence and

duration thresholds: when hypotheses are lacking,

reducing the Anticipation duration threshold will push more agents into the

Anticipation policy and thus increase resampling;

conversely, when there are too many hypotheses,

increasing the $T_{high}$ threshold will discriminate

more so that only the most likely hypotheses are

validated. Regulation at the extrinsic level may

occur in case of deviation from basal state: this may

require the verification of some further pathological

state. This is the role of the “Basal” E-Norm

example in Table 2: the occurrence of a Winded

Hypothesis, when the person is supposed to be in a

basal state, during a daily life scenario calls for

checking specific non-basal physiological states and

therefore for a modification of the observer’s

expectations (extrinsic requirement: Heart disease)

as regards the current state of the person.
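The Parsimony regulation described above can be sketched as a corrective function acting on the intrinsic thresholds. This is an illustration under assumptions rather than the authors' method: the bounds `min_hyp` and `max_hyp`, the step sizes, the default threshold values and the name `parsimony_i_corr` are hypothetical; only the direction of each adjustment comes from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    """Intrinsic parameters touched by I-Corr (default values are hypothetical)."""
    t_high: float = 0.6      # confidence needed for a hypothesis to be validated
    delta_ant: float = 20.0  # time below high confidence before Anticipation


def parsimony_i_corr(n_hyp: int, th: Thresholds,
                     min_hyp: int = 1, max_hyp: int = 5,
                     conf_step: float = 0.05, time_step: float = 5.0) -> Thresholds:
    """Return corrected thresholds given the number of active hypotheses."""
    if n_hyp > max_hyp:
        # Too many hypotheses: raise the high threshold so that only the
        # most likely hypotheses are validated.
        return Thresholds(min(1.0, th.t_high + conf_step), th.delta_ant)
    if n_hyp < min_hyp:
        # Too few hypotheses: shorten the Anticipation delay so that agents
        # propose alternative hypotheses sooner.
        return Thresholds(th.t_high, max(0.0, th.delta_ant - time_step))
    return th  # within bounds: leave the intrinsic requirements unchanged


current = Thresholds()
print(parsimony_i_corr(8, current))  # plethora of hypotheses
print(parsimony_i_corr(0, current))  # lack of hypotheses
print(parsimony_i_corr(3, current))  # acceptable number
```

Returning a new `Thresholds` value instead of mutating the old one makes it easy to log each correction in the trace, which an Alarm mechanism would need in order to detect that repeated corrections have failed.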

4 APPLICATION

We present in this section an application to human

monitoring. The person is wearing a combination of

physiological (heart rate, breath rate...) and

actimetric (acceleration, position) unobtrusive

sensors capturing its physiology and activity. He/she

is following an outdoor scenario (hiking).

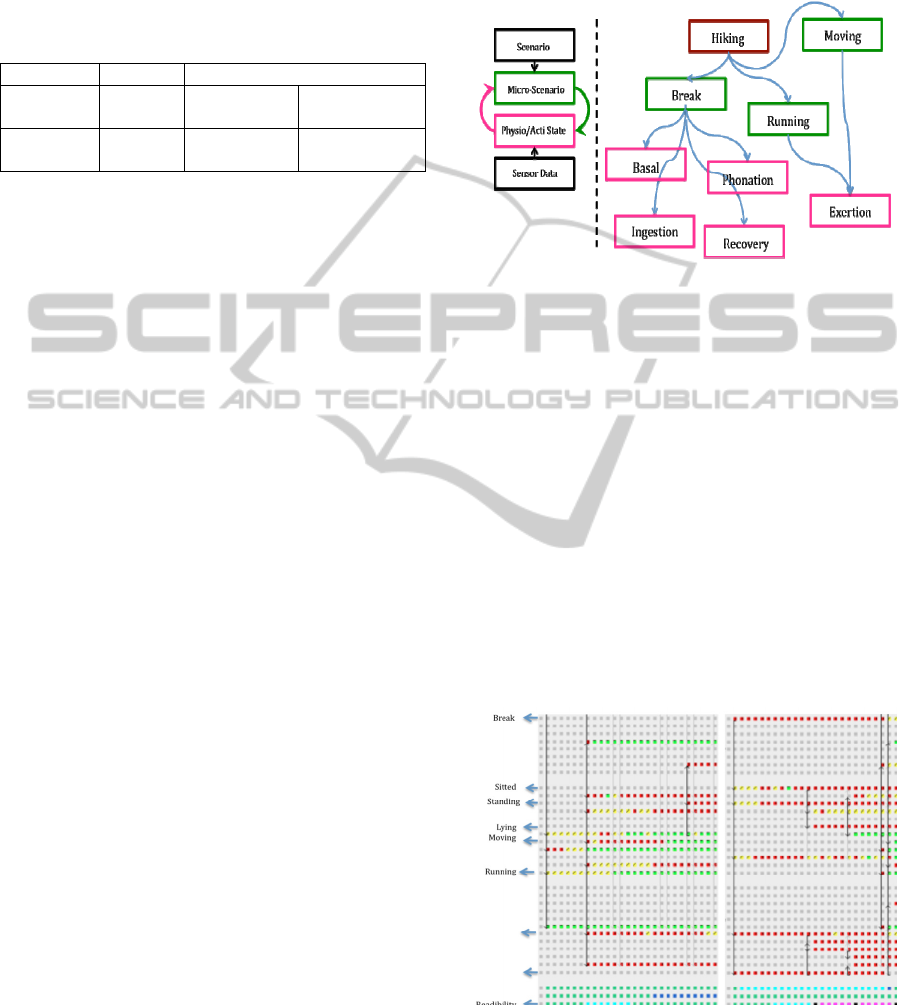

4.1 Knowledge Elements

A priori knowledge is provided to the agents, in the

form of an ontology (Figure 5), together with models

to interpret incoming data and norms to regulate

interpretation. We distinguish between 4 abstraction

levels: the one of the overall scenario, the one of the

micro-scenario (conjunction of states), the one of the

states (describing physiology or activity), and the one

of the data.

Figure 5: An example view of a hypothesis network

corresponding to a hiking scenario.

For state agents, confidence is computed from raw

data elements. For example, computation of the

Basal state confidence will involve:

Basal.K == {HeartRate, BreathRate, SkinTemp}.

For micro-scenario agents, it is computed from state

components. For example, a Break micro-scenario

will involve the following states:

Break.K == {Recovery, Phonation, Basal}.
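The layered computation of confidence from components can be sketched as a recursive walk over the hypothesis network. The paper does not give the combination formula, so the arithmetic mean used here is purely an assumption for illustration, as are the names `COMPONENTS` and `confidence` and the score values; the Recovery and Phonation states are treated as leaves because their components are not listed in the text.

```python
from statistics import mean
from typing import Dict, List

# Components K of each hypothesis, as given in the text.
COMPONENTS: Dict[str, List[str]] = {
    "Basal": ["HeartRate", "BreathRate", "SkinTemp"],
    "Break": ["Recovery", "Phonation", "Basal"],
}


def confidence(hyp: str, scores: Dict[str, float]) -> float:
    """Confidence of a hypothesis from its components.

    Assumption: a hypothesis without listed components is a leaf whose score
    is read directly from `scores` (0.0 if absent), and a compound hypothesis
    takes the mean of its components' confidences.
    """
    parts = COMPONENTS.get(hyp)
    if parts is None:
        return scores.get(hyp, 0.0)
    return mean(confidence(p, scores) for p in parts)


# Hypothetical per-signal match scores in [0, 1].
scores = {
    "HeartRate": 1.0, "BreathRate": 0.5, "SkinTemp": 0.0,
    "Recovery": 0.5, "Phonation": 0.5,
}
print(confidence("Basal", scores))
print(confidence("Break", scores))
```

Any other aggregation (weighted sum, minimum, probabilistic model) could be substituted for the mean without changing the structure of the traversal.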

4.2 Experiments

Figure 6 shows the follow-up of a hiking activity.

We show in this experiment that modifying an

extrinsic requirement as regards a micro-scenario to

be verified modifies the way interpretation develops.

(a) (b)

Figure 6: Influence of extrinsic requirement on

interpretation: (a) hike; (b) break.

The dots’ colours indicate the level of confidence in

the considered hypothesis (red for Low, yellow for


Medium, green for High). In case (a), the

interpretation is oriented toward a “hiking” micro-

scenario, which is consistent with the data, while it

is oriented toward a “break” in (b), which is not

consistent. As may be seen, readability in case

(b) is poor: the system is lost in a collection of

wrong hypotheses. We show in Figure 7a the result

of modifying the confidence thresholds so that

hypothesis verification becomes more difficult. This

was performed on the experiment of Figure 6a. A

better readability is obtained. A dynamic modification

of the verification rate, for the example of

Figure 6b, is illustrated in Figure 7b. We observe a

reduced number of low-confidence hypotheses, due to a higher

termination rate (with some latency).

(a) (b)

Figure 7: Influence of intrinsic requirement on

interpretation: (a) increase of high threshold on case 6a;

(b) increase of verification rate on case 6b, while the

system is running (indicated by the vertical arrow).

5 CONCLUSIONS

We have proposed in this paper a novel approach to

handle both domain-dependent (or intrinsic) and

observer-dependent (or extrinsic) viewpoints on

human activity follow-up. A normative multi-agent

architecture is proposed, which draws on a distinction

between I-Agents and E-Agents, whose role is

respectively to ensure the follow-up of the observed

activity and the running interpretation. Their

processing results in the modification of a common

trace where results from both follow-ups are stored.

Our design further draws on a distinction between I-

Norms and E-Norms, which regulate the behaviour

of the corresponding agents, based on information

from the trace, and express domain-dependent as

well as observer-dependent requirements. Further

work is needed to better formalize the approach (in

particular the deontic dimension of norm

application) and increase the experimental

background.

REFERENCES

Aarts, E. and de Ruyter, B. (2009), New Research

Perspectives on Ambient Intelligence. Journal of Ambient

Intelligence and Smart Environments, 1(1):5-14.

Boissier, O., Balbo, F. and Badeig, F. (2011), Controlling

multi-party interaction within normative multi-agent

organizations. In M. De Vos, N. Fornara, J. Pitt and G.

Vouros (Eds) Coordination, Organization, Institutions

and Norms in Agent Systems VI, LNAI 6541,

Springer-Verlag, pages 653-680.

Brémond F. and Thonnat M. (1998), Issues of representing

context illustrated by video-surveillance applications.

International Journal on Human-Computer Studies,

48(3): 375-391.

Carvalho, G. R., Paes, R. B., Choren, R., Alencar, P. S. C.

and Pereira de Lucena, C. J. (2005), Increasing

Software Infrastructure Dependability through a Law

Enforcement Approach. In Proceedings of the 1st

International Symposium on Normative Multiagent

Systems, NORMAS 2005, Hatfield, U.K. pages 65-72.

Castelfranchi, C. (2006), The architecture of a social

mind: the social structure of cognitive agents. In Sun,

R. (Ed.) Cognition and Multi-Agent Interaction: from

Cognitive Modeling to Social Simulation, Cambridge

University Press: Cambridge.

Dignum, F., Morley, D., Sonnenberg, E. & Cavedon, L.

(2000), Towards Socially Sophisticated BDI Agents.

In Proceedings of the 4th International Conference on

Multi-Agent Systems, ICMAS 2000, Boston, MA,

USA, pages 111-118.

Greenberg, S. (2001), Context as a dynamic construct.

Human-Computer Interaction Journal, 16(2): 257-268.

Hayes-Roth, B. (1995), An architecture for adaptive

intelligent systems. Artificial Intelligence, 72(1-

2):329–365.

Hébert, L. (2002), La Sémantique interprétative en

résumé. Texto ! (http://www.revue-texto.net/Inedits/

Inedits.html).

Isern, D., Sanchez, D., Moreno, A. (2010), Agents applied

in health care: A review. International Journal of

Medical Informatics, 79(3):145-166.

Klein, G., Phillips, J., Rall, E. and Peluso, D. (2006), A

data/frame theory of sensemaking. In R. R. Hoffman

(Ed.), Expertise out of context: Proceedings of the 6th

International Conference on Naturalistic Decision

Making. Mahwah, NJ: Lawrence Erlbaum &

Associates.

Vettier, B., Garbay, C. (2014), Abductive Agents for

Human Activity Monitoring, International Journal on

Artificial Intelligence Tools, Special Issue on Agents

Applied in Health Care, 23(1), to appear.

Weber K. and Glynn, M. (2006), Making sense with

institutions: Context, thought and action in Weick’s

theory. Organization Studies, 27(11):1639-1660.
