Development and Evaluation of Human-Computer Interface based on Facial Motor Unit Activity

Carlos M. M. Queiroz¹, Slawomir J. Nasuto² and Adriano O. Andrade¹

¹Faculty of Electrical Engineering, Federal University of Uberlândia, Av. João Naves de Ávila, 2121, Uberlândia, Brazil

²School of Systems Engineering, University of Reading, Reading, U.K.

1 STAGE OF THE RESEARCH

Interfaces that enable human-computer interaction have progressed significantly. In the past decade, much effort has been directed to the development and improvement of perceptual interfaces, i.e., interfaces that support interaction with the computer without a conventional keyboard or mouse. This type of interface combines an understanding of natural human capabilities (e.g., communication, motor, cognitive and perceptual skills) with their use for interacting with the computer, taking into account the ways in which people naturally interact with each other and with the world. The search for more natural forms of interaction has led recent research toward the study of biological signals that may encode the control strategies adopted by the central nervous system (CNS). In this context, information obtained from the activity of motor units, such as firing rate, action potential waveform and recruitment strategy, can be used in the development of human-computer interfaces.
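As a concrete illustration of one such feature, the sketch below computes the mean firing rate and the instantaneous firing rate (the reciprocal of each inter-spike interval) of a single MU from its discharge times. It is a minimal sketch that assumes the discharge times have already been identified, for example by decomposition of the EMG. The function names and the discharge times are hypothetical and do not belong to any existing toolbox.

```python
import numpy as np

def instantaneous_rates(spike_times_s):
    """Instantaneous firing rate (Hz): reciprocal of each inter-spike interval."""
    t = np.sort(np.asarray(spike_times_s, dtype=float))
    isi = np.diff(t)  # inter-spike intervals in seconds
    return 1.0 / isi

def mean_firing_rate(spike_times_s):
    """Mean firing rate (Hz): number of intervals divided by the time they span."""
    t = np.sort(np.asarray(spike_times_s, dtype=float))
    if t.size < 2:
        return 0.0  # a rate is undefined with fewer than two discharges
    return (t.size - 1) / (t[-1] - t[0])

# Hypothetical discharge times (s) of one MU firing at roughly 10 Hz
times = [0.00, 0.10, 0.21, 0.30, 0.40]
print(instantaneous_rates(times))
print(mean_firing_rate(times))
```

The instantaneous rate shows how the discharge varies from one interval to the next. The mean rate condenses the whole train into one number that a controller could use.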

Therefore, this research proposes the development and evaluation of a novel human-computer interface based on information extracted from motor units (MUs). Developing the interface will involve two steps: i) preparation of a flexible sensor array capable of detecting the activity of MUs of facial muscles; ii) implementation of signal processing tools capable of extracting information from MUs and translating it into control signals. The evaluation of the interface will consider: i) the quantification of learning related to the use of the interface; ii) the analysis of the correlation between learning and the dynamics of neural oscillations obtained from electroencephalographic (EEG) signals; iii) the comparison of the newly proposed interface with the Muscle Academy (Andrade et al., 2012), a myoelectric interface recently developed by our research group. The current stage of this study is described below.

1.1 The Choice of the Biosignal Acquisition System

The experiments to be carried out in this research require a large number of input channels. Since we will collect information simultaneously from the EMG sensor array and from brain activity (EEG), we needed commercial equipment flexible enough to handle the particularities of distinct biosignals as well as a large number of channels.

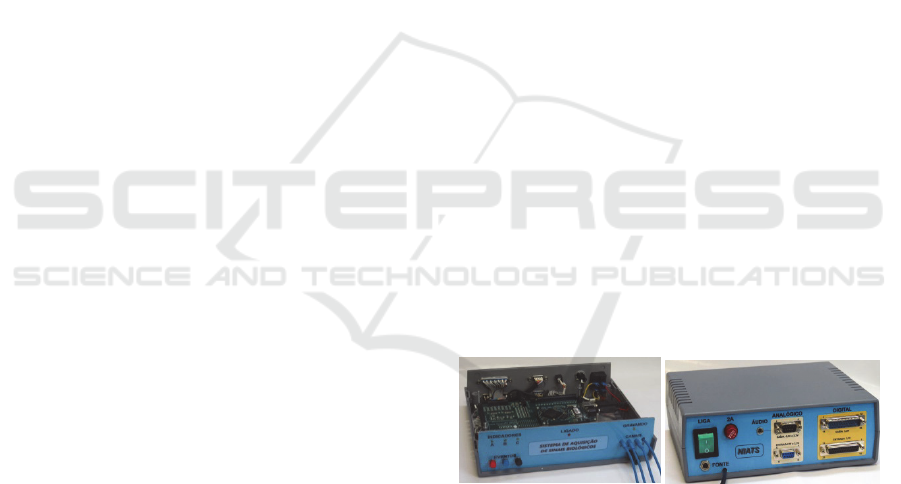

Figure 1: The designed box to accommodate the acquisition system board. a) Front view with cover open; b) Back view.

Based on an analysis of a number of available commercial systems, we verified that the RHD2000-series amplifier (Intan Technologies, USA) would be suitable for the research. The main features of this signal conditioner are: a 16-bit A/D converter; support for up to 256 input channels (configurable for distinct types of biopotential according to their inherent characteristics); sampling rates ranging from 1 kS/s to 30 kS/s; and, finally, customizable multi-platform software with a C++/Qt graphical user interface. Figure 1 shows a box designed to house the printed circuit board of the acquisition system. Its connectors give access to some input and output signals (analog and digital). Figure 2 shows an example of the main screen of the graphical user interface during the acquisition of several EMG signals.
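The following sketch estimates the raw data rate these specifications imply for storage during long recording sessions. It assumes that the quoted sampling rate applies per channel and that each 16-bit sample is stored in two bytes. Actual file sizes also depend on the file format of the acquisition software (headers, timestamps, auxiliary channels), which is not described here.

```python
def raw_data_rate_bytes_per_s(n_channels: int, fs_hz: float, bits_per_sample: int = 16) -> float:
    """Raw sample payload in bytes per second.

    Assumes fs_hz is the per-channel sampling rate and that samples are
    packed into whole bytes (16 bits -> 2 bytes); format overhead is ignored.
    """
    bytes_per_sample = bits_per_sample // 8
    return n_channels * fs_hz * bytes_per_sample

# Upper end of the figures quoted above: 256 channels at 30 kS/s
rate = raw_data_rate_bytes_per_s(256, 30_000)
print(f"{rate / 1e6:.2f} MB/s")            # 15.36 MB/s
print(f"{rate * 3600 / 1e9:.1f} GB/hour")  # 55.3 GB/hour
```

In practice, only the channels and sampling rates actually needed for EMG and EEG would be enabled, which lowers these figures accordingly.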


M. Queiroz C., J. Nasuto S. and O. Andrade A. Development and Evaluation of Human-Computer Interface based on Facial Motor Unit Activity. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

Figure 2: Screenshot of the main screen of the graphical user interface of the control software (Intan Technologies, USA).

1.2 EMG Sensor Array

A human-computer interface based on the activity of MUs requires sensors whose contact areas are sized to provide enough selectivity to detect isolated MU action potentials. However, this selectivity should not depend on repositioning the sensor very accurately near the MU of interest, since that would prevent non-technical people from using the interface every day. Taking these aspects into account, we developed sensor arrays with two electrode geometries: circular and concentric surface electrodes. The current design was built on a rigid substrate and is illustrated in Figure 3.

Figure 3: The three sensor arrays designed. a) Circular sensor array (electrode diameter of 2 mm and distance between electrodes (DE) of 4 mm); b) Circular sensor array (diameter of 3 mm and DE of 4 mm); c) Concentric sensor array (internal diameter of 2 mm, external diameter of 6 mm and DE of 7 mm).

Circular arrays are difficult to reposition between usage sessions. To avoid this problem, the pairs of bipolar sensors (electrodes) in the arrays were spatially distributed so that at least one pair is easily aligned with the direction of the muscle fibers. Figure 4 shows the two adopted distributions of the bipolar channels. In both settings, the electrode pairs were oriented at 45° intervals, but with different distances between electrodes.
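A short calculation shows the benefit of orienting electrode pairs at 45° intervals. The sketch assumes four pair orientations (0°, 45°, 90° and 135°; the exact layout is shown in Figure 4). A bipolar pair's orientation is only defined modulo 180°. Under these assumptions, the code finds the worst-case angle between the fiber direction and the best-aligned pair. The helper name is hypothetical.

```python
import numpy as np

def misalignment_deg(fiber_deg, pair_orientations_deg):
    """Smallest angle (deg) between the fiber direction and any electrode pair.

    Pair orientations are axial (a pair at 0 deg is the same as one at 180 deg),
    so angular differences are wrapped into the range [0, 90].
    """
    d = np.abs(np.asarray(pair_orientations_deg, dtype=float) - fiber_deg) % 180.0
    d = np.minimum(d, 180.0 - d)
    return float(d.min())

pairs = [0.0, 45.0, 90.0, 135.0]  # assumed orientations of the bipolar pairs
# Sweep every possible fiber direction in 0.1 deg steps and keep the worst case
sweep = np.arange(0.0, 180.0, 0.1)
worst = max(misalignment_deg(a, pairs) for a in sweep)
print(round(worst, 1))  # 22.5
```

With a single bipolar pair, the worst-case misalignment would be 90°. Four orientations bound it at 22.5°, whatever the direction of the fibers under the array.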

Figure 4: Distribution of the electrode pairs (bipolar) oriented every 45° with different distances between electrodes. a) Short distance; b) Large distance.

The input stage of the proposed human-computer interface consists of three arrays, one for the frontal muscle and two for the temporal muscles. In designing this set of arrays, we exploited the fact that the conditioning circuit and the analog-to-digital converter are miniaturized. They can therefore be placed close to the detection region to capture data with a better signal-to-noise ratio. Figure 5a shows a set of sensor arrays and Figure 5b shows it in use by an individual. The signal conditioner and digital converter circuit (1) and the connector (2) are highlighted in the figure.
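A common way to quantify the signal-to-noise ratio mentioned above is to compare the power of the EMG during a contraction with the power of a baseline segment recorded at rest. The sketch below is a minimal version that assumes the rest segment contains only additive, uncorrelated noise. The function name and the synthetic signals are illustrative and are not measurements from the arrays.

```python
import numpy as np

def snr_db(active, rest):
    """SNR in dB from an active (contraction) segment and a rest segment.

    Signal power is estimated as active power minus rest (noise) power,
    assuming the noise is additive and uncorrelated with the EMG.
    """
    p_active = np.mean(np.square(active))
    p_noise = np.mean(np.square(rest))
    p_signal = max(p_active - p_noise, np.finfo(float).tiny)  # avoid log of <= 0
    return 10.0 * np.log10(p_signal / p_noise)

# Synthetic illustration: 50 uV RMS "EMG" plus 5 uV RMS noise
rng = np.random.default_rng(0)
noise_std = 5e-6
rest = rng.normal(0.0, noise_std, 20_000)
active = rng.normal(0.0, 50e-6, 20_000) + rng.normal(0.0, noise_std, 20_000)
print(f"{snr_db(active, rest):.1f} dB")  # close to 20 dB for these settings
```

Comparing such estimates for sensors with the electronics placed near the skin versus at the end of longer leads is one way to check the benefit claimed above.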

Figure 5: a) Set of sensor arrays used to capture EMG signals from facial muscles. b) Set of sensor arrays in use by an individual. The signal conditioner and digital converter circuit (1) and the connector (2) are highlighted.

2 OUTLINE OF OBJECTIVES

The general objective of this research is to develop and evaluate a human-computer interface based on facial motor unit activity.

The specific objectives to achieve this goal are: i) to develop and evaluate a flexible sensor array fabricated with a silver ink of high-purity silver nanoparticles, developed by researchers at the Institute of Chemistry, Federal University of Uberlândia; ii) to evaluate and implement multidimensional signal processing techniques capable of mapping the MU activity of facial muscles into the commands needed for human-computer interaction; and iii) to evaluate users' learning as they operate the human-computer interface through facial movements.

BIOSTEC2014-DoctoralConsortium

Figure 6: Human-computer interface based on electromyography of facial muscles. Source: extracted with permission from (Andrade et al., 2012).

3 RESEARCH PROBLEM

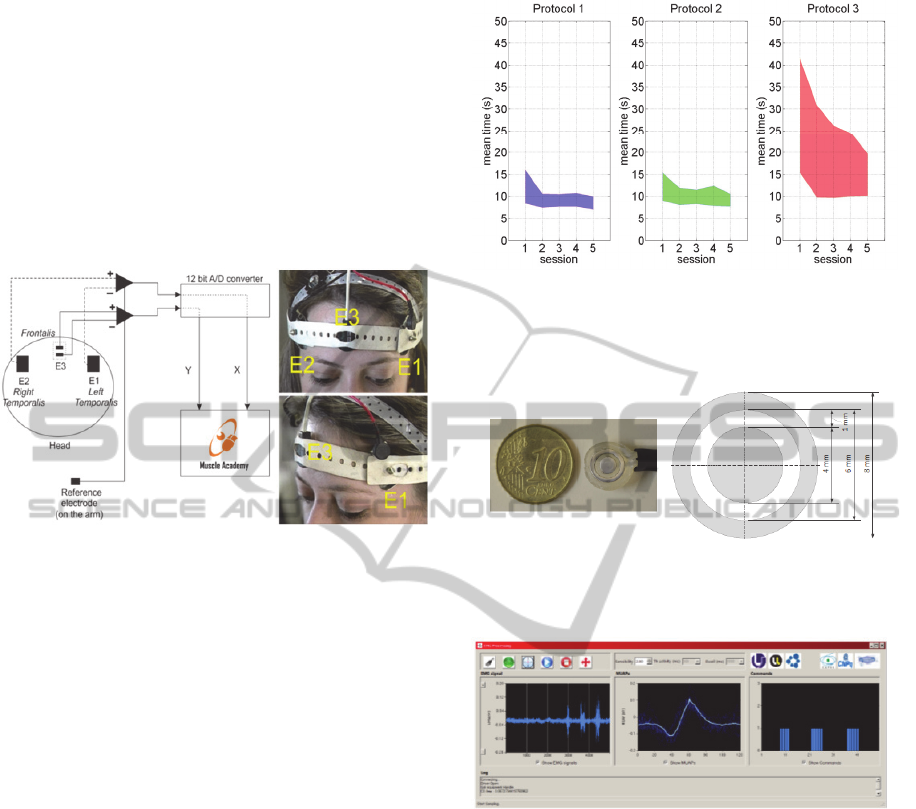

Recently, our group developed and evaluated a human-computer interface (Andrade et al., 2012), called Muscle Academy, that allows complete control of a computer cursor through the activation of the frontal and temporal muscles.

The interface has already been evaluated by healthy individuals and by people with upper-limb motor disabilities. Figure 6 presents a basic schematic of how the sensors are positioned on the facial muscles. The system was evaluated with three different protocols of progressively increasing difficulty.

The evaluation results showed that users learned to operate the interface over five experimental sessions, for all protocol types (see Figure 7). However, the learning curve of protocol 3 (the most difficult) differs significantly from those of the other protocols. This reflects users' difficulty in reaching smaller objects in a computer interface, and also the difficulty of fine motor control while performing this task.
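Learning curves such as those in Figure 7 are often summarized by fitting a power law of practice, T(n) = a·n^(-b). Here T is the mean task time in session n, a is the time in the first session and b expresses the learning rate. This is not the analysis reported by Andrade et al. (2012). It is a hedged sketch of one way the protocols could be compared. The data below are synthetic, generated from known parameters, and are not the published results.

```python
import numpy as np

def fit_power_law(sessions, mean_times):
    """Fit T = a * n**(-b) by linear least squares in log-log space.

    Returns (a, b); a larger b means faster improvement across sessions.
    """
    slope, intercept = np.polyfit(np.log(sessions), np.log(mean_times), 1)
    return float(np.exp(intercept)), float(-slope)

# Synthetic example (NOT experimental data): five sessions, a = 60 s, b = 0.3
sessions = np.arange(1, 6)
times = 60.0 * sessions ** -0.3
a, b = fit_power_law(sessions, times)
print(round(a, 2), round(b, 2))  # 60.0 0.3
```

Fitting each protocol separately would express the discrepancy noted for protocol 3 as a difference in the fitted a and b. That is easier to compare across interfaces than whole curves.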

Figure 7: Results related to learning to use the "Muscle Academy". The mean task time in seconds is the measure used to quantify learning. Source: extracted with permission from (Andrade et al., 2012).

Figure 8: Concentric sensor developed by our research group for the detection of motor unit action potentials (Júnior, 2013).

Figure 9: Graphical interface illustrating sequences of action potentials extracted in real time and their translation into commands (Júnior, 2013).

To solve this problem and give the user finer control of the interface, we developed a second control strategy based on detecting the MU activity of a single facial muscle. For this purpose, we designed a concentric sensor (see Figure 8) able to detect MU activity, together with a strategy to translate this information into commands similar to those reported in (Andrade et al., 2012). Examples of MU activity detected by the concentric sensor are shown in Figure 9.
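The detection and translation strategy of Júnior (2013) is not detailed here. As a hedged illustration of the general idea, the sketch below detects MU action potentials with an amplitude threshold. The threshold is set from a robust noise estimate (median absolute value divided by 0.6745, a common choice in spike detection). Each detected potential is then mapped to a command according to its peak amplitude. The threshold factor, refractory period, amplitude bands and command names are all hypothetical.

```python
import numpy as np

def detect_spikes(x, fs, k=5.0, refractory_s=0.003):
    """Return sample indices of detected potentials (aligned to their positive peak).

    The noise standard deviation is estimated robustly as median(|x|)/0.6745;
    upward crossings of k*sigma closer than the refractory period are merged.
    """
    sigma = np.median(np.abs(x)) / 0.6745
    thr = k * sigma
    crossings = np.flatnonzero((x[1:] > thr) & (x[:-1] <= thr)) + 1
    win = int(refractory_s * fs)
    spikes, last = [], -np.inf
    for i in crossings:
        if i - last >= win:
            end = min(i + win, len(x))
            spikes.append(i + int(np.argmax(x[i:end])))  # align to local peak
            last = i
    return np.array(spikes, dtype=int)

def to_commands(x, spikes, bands):
    """Map each potential to a command by its peak amplitude (hypothetical bands)."""
    commands = []
    for s in spikes:
        for lo, hi, name in bands:
            if lo <= x[s] < hi:
                commands.append(name)
                break
    return commands

# Synthetic signal: unit-variance noise plus two MU-like potentials of different size
rng = np.random.default_rng(1)
fs = 10_000
x = rng.normal(0.0, 1.0, 8_000)
x[1000:1003] += [6.0, 12.0, 6.0]
x[5000:5003] += [12.0, 25.0, 12.0]
spikes = detect_spikes(x, fs, k=6.0)
bands = [(0.0, 18.0, "LEFT"), (18.0, np.inf, "RIGHT")]
print(spikes, to_commands(x, spikes, bands))
```

Using amplitude alone assumes that different MUs produce clearly separable peak amplitudes at the electrode. Real systems usually need waveform-based classification when this does not hold.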

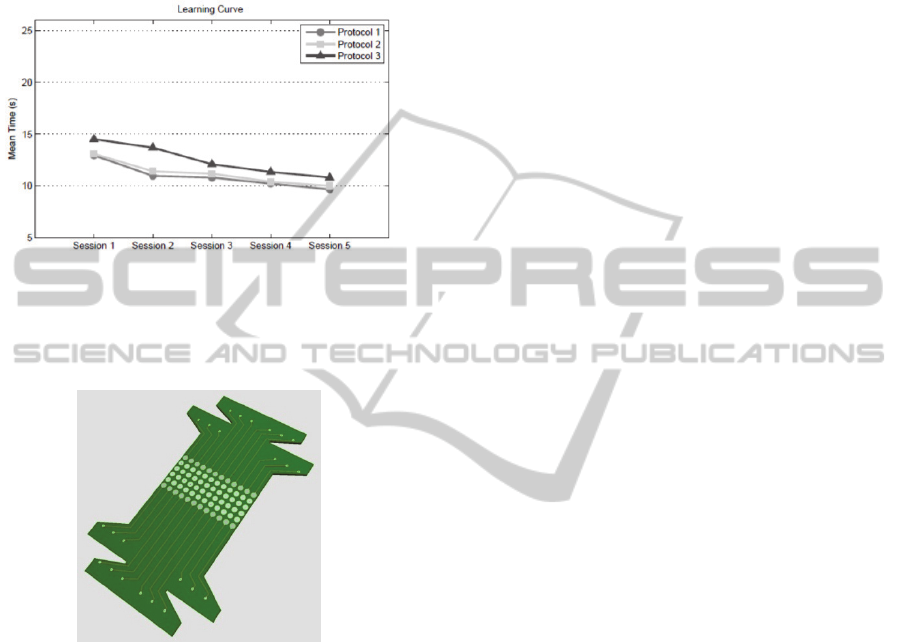

The evaluation results of this new interface, illustrated in Figure 10, show incremental learning over the experimental sessions. They also show a smaller learning discrepancy among the three protocols than the results in Figure 7. Thus, the fine-control problem detected in the Muscle Academy was largely solved. However, from a practical standpoint, the use of this interface is limited by the great difficulty of positioning the sensor close to the MUs of interest.

Figure 10: Results concerning learning to use the system based on analysis of motor unit activity detected by the concentric electrode (Júnior, 2013). The mean task time in seconds is the measure used to quantify learning.

Figure 11: Prototype of flexible sensor array for detection of motor unit activity.

Given this context, the main purpose of this research is to propose, implement and evaluate a new control strategy. The strategy will process the myoelectric activity of facial MUs detected by a sensor array (see the prototype flexible sensor array in Figure 11). We expect this strategy to solve the sensor placement problem by expanding the contact area of the sensor, and also to make the interface easier to learn.

4 STATE OF THE ART

With the advancement of perceptual interfaces, i.e., interfaces that promote interaction with the computer without a conventional keyboard or mouse, more and more research and technologies have emerged. Their aim is to understand natural human capabilities (e.g., communication, motor, cognitive and perceptual skills) and to take them into account in human-computer interaction (Oviatt and Cohen, 2000).

Perceptual interfaces are of particular, though not exclusive, interest to the fields of rehabilitation and assistive technology. Patients with motor or cognitive limitations can benefit from this technology, which facilitates and encourages interaction with the environment and especially with computers. Such interaction is increasingly present in our lives; for example, television sets and video games can now be controlled by body movements.

Currently, there are many strategies for obtaining user information through a perceptual interface. The basic idea is to convert information from user input into commands that can be interpreted by an application (Oviatt and Cohen, 2000); (Turk and Robertson, 2000).

These strategies can be broadly divided into the following categories according to the type of sensor used to detect the input signal (Higginbotham et al., 2007): (i) pressure/touch (Bourhis et al., 2002), (ii) motion and gesture recognition (Javanovic and MacKenzie, 2010), (iii) speech recognition (Majewski and Kacalak, 2006) and (iv) biopotentials (Chin et al., 2008).

The main motivation for using biopotentials is that, unlike on-off approaches, they allow a more natural and proportional control of the human-computer interface (Higginbotham et al., 2007); (Ahsan et al., 2009). Recently published reviews (Andrade et al., 2011); (Tai et al., 2008) cover the use of different biopotentials (e.g., electroencephalogram, electromyogram, electrooculogram) in human-computer interaction. Evaluating them suggests that electromyographic (EMG) signals are probably the most commonly used. A likely reason is their great success as input signals for interfaces that control prosthetic devices (Englehart et al., 2001); (Hargrove et al., 2007); (Huang et al., 2005); (Jiang et al., 2009).
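A simple EMG envelope illustrates the difference between on-off and proportional control. The sketch below computes a moving RMS envelope and maps it in two ways. On-off control produces a binary command. Proportional control produces a cursor speed that grows linearly with effort above a rest level. The window length, thresholds, maximum speed and the amplitude-modulated test signal are hypothetical values chosen for illustration, not parameters of the Muscle Academy.

```python
import numpy as np

def moving_rms(x, win):
    """Moving RMS envelope with a rectangular window of `win` samples."""
    kernel = np.ones(win) / win
    return np.sqrt(np.convolve(np.square(x), kernel, mode="same"))

def on_off(envelope, threshold):
    """On-off control: the command is either fully active (1) or inactive (0)."""
    return (envelope > threshold).astype(float)

def proportional(envelope, rest_level, max_level, max_speed):
    """Proportional control: speed scales linearly between rest and maximum effort."""
    level = (envelope - rest_level) / (max_level - rest_level)
    return max_speed * np.clip(level, 0.0, 1.0)

# Illustrative effort ramp: a 100 Hz carrier whose amplitude grows from 0 to 1
fs = 1000
t = np.arange(0, 2.0, 1 / fs)
emg = np.linspace(0.0, 1.0, t.size) * np.sin(2 * np.pi * 100 * t)
env = moving_rms(emg, win=100)
speed = proportional(env, rest_level=0.1, max_level=0.6, max_speed=200.0)  # e.g. px/s
switch = on_off(env, threshold=0.3)
```

With on-off control, the cursor either stays still or moves at a fixed speed once the threshold is crossed. With proportional control, a weak contraction produces slow, fine movements and a strong one produces fast movements.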

5 METHODOLOGY

To develop and evaluate the human-computer interface, we propose an experimental scheme with the resources needed for two distinct groups to use the interface and for data from the central and peripheral nervous systems to be recorded.

5.1 Definition of Experimental Groups and Criteria for Inclusion and Exclusion

In total, 20 individuals of both genders and different age groups, divided into two groups, will be recruited to participate in the experiments proposed in this research.

Experimental group 1 (G1): composed of 10 healthy subjects (i.e., without upper-limb disabilities) of both genders, aged over 18 years.

Experimental group 2 (G2): composed of individuals over 18 years of age, of both genders, with upper-limb motor disorders (e.g., paralysis, amputations, congenital malformations, motoneuron changes) that prevent them from moving the mouse with their hands. Individuals should not present neurological disorders that impair concentration, or physical limitations that prevent contraction of the temporal and frontal muscles. Subjects who are unable to contract these muscles will be excluded from the experimental group.

Subjects of experimental group G1 will be recruited randomly from the population, whereas subjects of experimental group G2 will be recruited from institutions that serve people with neuromotor disabilities. All individuals will participate voluntarily in this study. The research procedures will be explained to the subjects beforehand so that they are fully aware of what will be done. Each individual and/or his or her legal guardian will fill in and sign an Informed Consent Form. The form confirms that they are aware of the protocols and the research and agree to take part in the experiment without receiving any payment. The confidentiality of the participants' personal information will be maintained.

5.2 Definition of Training Protocols and Data Collection

The training and evaluation protocol for the human-computer interface in this research is similar to the one used to evaluate the Muscle Academy (Andrade et al., 2012). The main difference is that brain activity (electroencephalogram, recorded according to the 10-20 standard) will be recorded simultaneously with MU activity (detected by flexible sensor arrays placed on the facial muscles). This will provide a more detailed evaluation of the learning process that results from using the interface. This analysis will be performed offline and is detailed in Section 5.3.

The system will be evaluated in an acclimatized room. Only the evaluator, the subject (with a companion, if necessary) and the research equipment will be present.

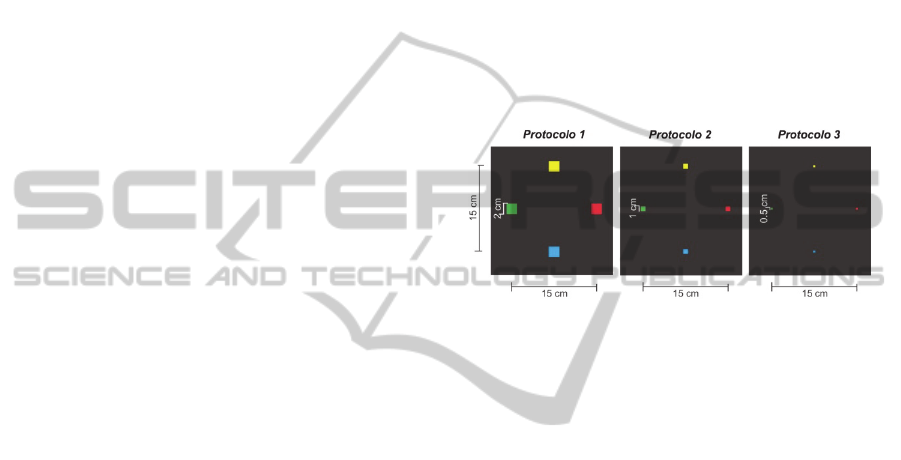

The study comprises three protocols that differ in the size of the buttons to be clicked (protocol 1: 2 cm × 2 cm buttons; protocol 2: 1 cm × 1 cm buttons; protocol 3: 0.5 cm × 0.5 cm buttons). Each button has a different colour (GREEN, YELLOW, RED and BLUE), and the buttons are arranged in a cross shape (Figure 12).

Figure 12: Interface of experimental protocols with different difficulty levels. Source: (Andrade et al., 2012).

The distance between the centres of the buttons is the same in all three protocols, while the button area varies from one protocol to another. Difficulty therefore increases as the button area decreases.
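One way to quantify how shrinking the buttons at a constant centre-to-centre distance raises difficulty is the Shannon formulation of Fitts' index of difficulty, ID = log2(D/W + 1). Here D is the movement distance and W the target width (taken here as the side of the square button). Fitts' law is not part of the original protocol description. The distance D = 5 cm used below is a hypothetical value, because the actual distance is not given. The sketch only shows how difficulty grows as the button width is halved.

```python
import math

def fitts_id(distance_cm: float, width_cm: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance_cm / width_cm + 1.0)

D = 5.0  # hypothetical centre-to-centre distance (cm); not stated in the protocol
for protocol, width in [(1, 2.0), (2, 1.0), (3, 0.5)]:
    print(f"Protocol {protocol}: W = {width} cm, ID = {fitts_id(D, width):.2f} bits")
# Protocol 1: 1.81 bits, protocol 2: 2.58 bits, protocol 3: 3.46 bits
```

Under this model, the movement time of a well-practised user is expected to grow roughly linearly with ID. This is consistent with protocol 3 being the hardest.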

The goal of this interface is to let the subject control the cursor. Learning can then be quantified using the time taken to perform specific tasks as a measure of learning progress. Subjects will be asked to perform the following tasks:

1. Clockwise: move the cursor to the green button and click, move the cursor to the yellow button and click, move the cursor to the red button and click, move the cursor to the blue button and click, and finally move the cursor to the green button and click;

2. Counterclockwise: move the cursor to the green button and click, move the cursor to the blue button and click, move the cursor to the red button and click, move the cursor to the yellow button and click, and finally move the cursor to the green button and click.
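The two tasks can be represented as ordered sequences of target buttons, and the task time can then be computed from a log of clicks. The sketch below assumes a hypothetical log format of (timestamp in seconds, button colour) pairs. It measures the time from the first to the last correct click. The original software's click timestamping and error scoring are not described here, so this sketch simply ignores wrong clicks.

```python
from typing import List, Optional, Tuple

# Target sequences of the two tasks described above
CLOCKWISE = ["GREEN", "YELLOW", "RED", "BLUE", "GREEN"]
COUNTERCLOCKWISE = ["GREEN", "BLUE", "RED", "YELLOW", "GREEN"]

def task_time(clicks: List[Tuple[float, str]], targets: List[str]) -> Optional[float]:
    """Seconds from the first to the last correct click, or None if unfinished.

    Clicks on any button other than the next expected target are ignored
    (hypothetical rule; the original scoring of errors is not specified).
    """
    step, start = 0, None
    for t, button in clicks:
        if step < len(targets) and button == targets[step]:
            if step == 0:
                start = t
            step += 1
            if step == len(targets):
                return t - start
    return None

# Hypothetical click log: (timestamp in s, button clicked)
log = [(0.0, "GREEN"), (2.5, "YELLOW"), (3.1, "BLUE"), (5.0, "RED"),
       (7.2, "BLUE"), (9.8, "GREEN")]
print(task_time(log, CLOCKWISE))         # 9.8
print(task_time(log, COUNTERCLOCKWISE))  # None (sequence not completed)
```

Averaging these times per session and protocol yields the mean task time used in Figures 7 and 10.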

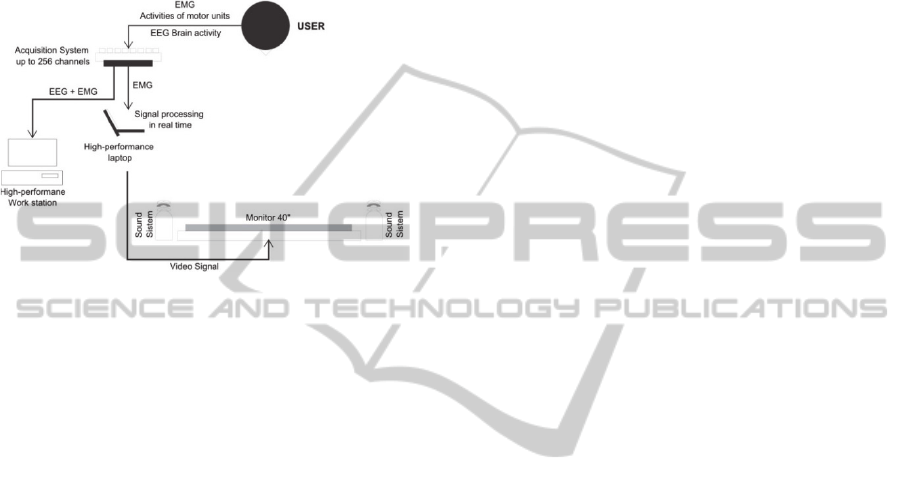

Figure 13 shows a schematic of the main elements involved in data collection. Muscle and brain activities are recorded simultaneously and stored on a high-performance workstation for offline analysis. The 10-20 standard will be used to position the EEG sensors. Software running on a high-performance laptop converts the MU activity detected by the sensor arrays on the facial muscles into commands in real time. These commands control a cursor for interacting with the graphical interface shown in Figure 12. The user will receive continuous audible and visual feedback on the interaction.

Figure 13: Main components involved in data collection and analysis.

5.3 Analysis of Learning through the Recording of Muscle and Brain Activities

During interaction with the graphical interface shown in Figure 12, MU and brain (EEG) activities will be recorded simultaneously. The purpose of this recording is to perform an offline analysis of the correlation between motor learning and the brain dynamics that arise from using the interface. This analysis will enable the development of alternative indices that can quantify and characterize learning in human-computer interaction. These indices will be compared with the traditional measure of task execution time illustrated in Figures 7 and 10.
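Indices based on brain dynamics typically start from the power of EEG oscillations in standard frequency bands. The sketch below is minimal. It assumes a single EEG channel and uses a Hann-windowed periodogram computed with NumPy's FFT, although a Welch estimate would usually be preferred for noisy recordings. It returns band power in arbitrary units (window normalization is omitted), which is adequate for relative comparisons. The band limits follow common conventions and are assumptions, not choices stated in this proposal.

```python
import numpy as np

BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}

def band_powers(x, fs, bands=BANDS):
    """Power per frequency band (arbitrary units) from a one-sided periodogram."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                        # remove DC offset
    n = x.size
    spectrum = np.fft.rfft(x * np.hanning(n))
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    psd = np.abs(spectrum) ** 2
    df = freqs[1] - freqs[0]
    return {name: float(psd[(freqs >= lo) & (freqs < hi)].sum() * df)
            for name, (lo, hi) in bands.items()}

# Synthetic 4 s "EEG": strong 10 Hz (alpha) rhythm plus weaker 20 Hz (beta) activity
fs = 250.0
t = np.arange(0, 4.0, 1 / fs)
eeg = np.sin(2 * np.pi * 10 * t) + 0.2 * np.sin(2 * np.pi * 20 * t)
bp = band_powers(eeg, fs)
rel_alpha = bp["alpha"] / sum(bp.values())  # relative alpha power
```

Tracking such band powers across sessions, alongside task times, gives the brain-side variables that the analysis described next would correlate with behaviour.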

The correlation between muscle activity, brain activity and learning will be studied using Partial Least Squares (PLS). PLS is a multivariate statistical technique widely used to verify correlations between brain activity and behaviour (Martínez-Montes et al., 2004); (Krishnan et al., 2011).
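The PLS correlation approach reviewed by Krishnan et al. (2011) works on brain (or muscle) features X and behavioural measures Y recorded from the same observations. Both are standardized, and their cross-correlation matrix R = Y^T X / (n - 1) is computed and decomposed by singular value decomposition. The singular vectors give the saliences, and projecting the data onto them gives the latent variables. The sketch below is a minimal NumPy version of that idea. It omits the permutation tests and bootstrap used in practice to assess significance and reliability. The variable names and synthetic data are illustrative only.

```python
import numpy as np

def zscore(a):
    """Column-wise standardization (zero mean, unit sample standard deviation)."""
    a = np.asarray(a, dtype=float)
    return (a - a.mean(axis=0)) / a.std(axis=0, ddof=1)

def pls_correlation(X, Y):
    """PLS correlation between feature blocks X (n x p) and Y (n x q).

    Returns the singular values, saliences for Y (U) and X (V), and the
    latent variables (scores) of each block.
    """
    Xz, Yz = zscore(X), zscore(Y)
    n = Xz.shape[0]
    R = Yz.T @ Xz / (n - 1)                 # q x p cross-correlation matrix
    U, s, Vt = np.linalg.svd(R, full_matrices=False)
    V = Vt.T
    return s, U, V, Xz @ V, Yz @ U

# Synthetic illustration: 40 observations, 6 EEG/EMG features, 2 behavioural measures
rng = np.random.default_rng(42)
latent = rng.normal(size=40)
X = np.outer(latent, [1.0, 0.8, 0.0, 0.0, 0.5, 0.0]) + rng.normal(scale=0.5, size=(40, 6))
Y = np.outer(latent, [-1.0, 0.6]) + rng.normal(scale=0.5, size=(40, 2))
s, U, V, Lx, Ly = pls_correlation(X, Y)
print(s[0] ** 2 / np.sum(s ** 2))  # share of cross-correlation captured by the first LV
```

The X saliences in V indicate which brain or muscle features co-vary with the behavioural measures. The paired latent variables Lx and Ly show how strongly each observation expresses that pattern.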

6 EXPECTED OUTCOME

The main objective of this doctoral work is to develop and evaluate a human-computer interface based on the MU activity of facial muscles. Given this objective and the methodology adopted, the following outcomes are expected.

The first expected practical outcome is a flexible sensor array, made with an ink of pure silver nanoparticles, that can detect biopotentials. Such an array has numerous applications in rehabilitation, neurology, assistive technology and other fields. This type of technology can be integrated into tools used to assess the neuromuscular system in order to diagnose diseases that affect nerves and muscles. Its great advantage is low cost and ease of application. The approach eliminates the need for sophisticated and expensive technologies to deposit silver on surfaces, and it allows sensors to be produced in different shapes to fit various muscles.

Once the desired biopotentials can be captured reliably, another important achievement will be a computer program that implements a human-computer interface capable of interpreting MU activity. Compared with other existing technologies, this interface is expected to give the user more precise control through subtler and more natural movements, thus reducing muscle fatigue and discomfort. Since the interface is independent of the system or device to be controlled, it has applications in serious games for rehabilitation, environmental control (e.g., smart homes), automated wheelchairs, and biofeedback systems to control stress or emotions.

Finally, because the interface must be evaluated, we expect to develop a neuromotor learning index capable of quantifying and evaluating the learning of individuals who use the human-computer interface. The main innovation of this index is that it takes into account components of the central (brain, EEG) and peripheral (muscle, EMG) nervous systems, and not only the user's response time. From a practical perspective, this index can measure the relative contribution of the central and peripheral nervous systems to learning. Furthermore, it can be used to assess human-computer interfaces, and it may help diagnose learning disabilities for which no standardized tests exist.

ACKNOWLEDGEMENTS

The authors would like to thank the Brazilian government for financial support through the following agencies: CAPES (Coordination for the Improvement of Higher Level Personnel), CNPq (National Council for Research and Development), FAPEMIG (Research Support Foundation of Minas Gerais) and IFTM (Federal Institute of Triângulo Mineiro).

REFERENCES

Ahsan, M. R., Ibrahimy, M. I. & Khalifa, O. O. 2009. EMG Signal Classification For Human Computer Interaction: A Review. European Journal Of Scientific Research, 33, 480-501.

Andrade, A. O., Bourhis, G., Losson, E., Naves, E. L. M., Pinheiro, C. G., Jr. & Pino, P. 2011. Alternative Communication Systems For People With Severe Motor Disabilities: A Survey. Biomedical Engineering Online, 10, 31.

Andrade, A. O., Pereira, A. A. & Kyberd, P. J. 2012. Mouse Emulation Based On Facial Electromyogram. Biomedical Signal Processing And Control.

Bourhis, G., Pino, P. & Leal-Olmedo, A. 2002. Communication Devices For Persons With Motor Disabilities: Human-Machine Interaction Modeling. Systems, Man And Cybernetics, 2002 IEEE International Conference On. IEEE, Vol. 3, 6 pp.

Chin, C. A., Barreto, A., Cremades, J. G. & Adjouadi, M. 2008. Integrated Electromyogram And Eye-Gaze Tracking Cursor Control System For Computer Users With Motor Disabilities. Journal Of Rehabilitation Research And Development, 45, 161.

Englehart, K., Hudgins, B. & Parker, P. A. 2001. A Wavelet-Based Continuous Classification Scheme For Multifunction Myoelectric Control. IEEE Transactions On Biomedical Engineering, 48, 302-311.

Hargrove, L. J., Englehart, K. & Hudgins, B. 2007. A Comparison Of Surface And Intramuscular Myoelectric Signal Classification. IEEE Transactions On Biomedical Engineering, 54, 847-853.

Higginbotham, D. J., Shane, H., Russell, S. & Caves, K. 2007. Access To AAC: Present, Past, And Future. Augmentative And Alternative Communication, 23, 243-257.

Huang, Y., Englehart, K. B., Hudgins, B. & Chan, A. D. 2005. A Gaussian Mixture Model Based Classification Scheme For Myoelectric Control Of Powered Upper Limb Prostheses. IEEE Transactions On Biomedical Engineering, 52, 1801-1811.

Javanovic, R. & MacKenzie, I. S. 2010. MarkerMouse: Mouse Cursor Control Using A Head-Mounted Marker. Computers Helping People With Special Needs. Springer.

Jiang, N., Englehart, K. B. & Parker, P. A. 2009. Extracting Simultaneous And Proportional Neural Control Information For Multiple-DoF Prostheses From The Surface Electromyographic Signal. IEEE Transactions On Biomedical Engineering, 56, 1070-1080.

Júnior, C. G. P. 2013. Assistive Technology For The Severe Motor Impaired By Using Online Processing Of Motor Unit Action Potentials Of Facial Muscles. PhD Thesis, Federal University Of Uberlândia.

Krishnan, A., Williams, L. J., McIntosh, A. R. & Abdi, H. 2011. Partial Least Squares (PLS) Methods For Neuroimaging: A Tutorial And Review. NeuroImage, 56, 455-475.

Majewski, M. & Kacalak, W. 2006. Natural Language Human-Machine Interface Using Artificial Neural Networks. Advances In Neural Networks - ISNN 2006. Springer.

Martínez-Montes, E., Valdés-Sosa, P. A., Miwakeichi, F., Goldman, R. I. & Cohen, M. S. 2004. Concurrent EEG/fMRI Analysis By Multiway Partial Least Squares. NeuroImage, 22, 1023-1034.

Oviatt, S. & Cohen, P. 2000. Perceptual User Interfaces: Multimodal Interfaces That Process What Comes Naturally. Communications Of The ACM, 43, 45-53.

Tai, K., Blain, S. & Chau, T. 2008. A Review Of Emerging Access Technologies For Individuals With Severe Motor Impairments. Assistive Technology, 20, 204-221.

Turk, M. & Robertson, G. 2000. Perceptual User Interfaces. Communications Of The ACM, 43.
