A Cloud Accountability Policy Representation Framework

Walid Benghabrit¹, Hervé Grall¹, Jean-Claude Royer¹, Mohamed Sellami¹, Monir Azraoui², Kaoutar Elkhiyaoui², Melek Önen², Anderson Santana De Oliveira³ and Karin Bernsmed⁴

¹ Mines Nantes, 5 rue A. Kastler, F-44307 Nantes, France

² EURECOM, Les Templiers, 450 Route des Chappes F - 06410 Biot Sophia Antipolis, France

³ SAP Labs France, 805 avenue du Dr Donat Font de l’Orme F - 06250 Mougins Sophia Antipolis, France

⁴ SINTEF ICT, Strindveien 4, NO-7465 Trondheim, Norway

Keywords:

Accountability, Data Protection, Framework, Policy Language, Policy Enforcement.

Abstract:

Nowadays we are witnessing the democratization of cloud services. As a result, more and more end-users (individuals and businesses) are using these services to carry out their electronic transactions (shopping, administrative procedures, B2B transactions, etc.). In such scenarios, personal data generally flows between several entities, and end-users need (i) to be aware of the management, processing, storage and retention of their personal data, and (ii) to have the necessary means to hold service providers accountable for the usage of their data. Indeed, dealing with personal data raises several privacy and accountability issues that must be considered before promoting the use of cloud services. In this paper, we propose a framework for the representation of cloud accountability policies. Such policies offer end-users a clear view of the privacy and accountability obligations asserted by the entities they interact with, as well as means to represent their preferences. This framework comes with two novel accountability policy languages: an abstract one, devoted to the representation of preferences/obligations in a human-readable fashion, and a concrete one for the mapping to concrete enforceable policies. We motivate our solution with concrete use case scenarios.

1 INTRODUCTION

According to (Pearson et al., 2012), accountability concerns the data stewardship regime in which organizations that are entrusted with personal and business confidential data are responsible and liable for processing, sharing, storing and otherwise using the data according to contractual and legal constraints, from the time it is collected until it is destroyed (including onward transfers to third parties). Obligations associated with such responsibilities can be expressed in an accountability policy, which is a set of rules that define the conditions under which an accountable entity must operate.

Today, there is neither an established standard for expressing accountability policies nor a well-defined way to enforce these policies. Since cloud services often combine infrastructure, platform and software applications to aggregate value and propose new cloud applications to individuals and organizations, it is fundamental for an accountability policy framework to enable “chains of accountability” across cloud services, addressing regulatory, contractual, security and privacy concerns.

In the context of the EU FP7 A4Cloud project¹, we are currently working on defining a framework where accountability policies will be enforceable across the cloud service provision chain by means of accountability services and tools. Accountable organizations will make use of these services to ensure that obligations to protect personal data and data subjects’ rights² are observed by all who store and process the data, irrespective of where that processing occurs. From the perspective of accountability, we have elicited the following types of accountability obligations that must be considered while designing our policy framework:

• Access and Usage Control rules - express which rights should be granted or revoked regarding the use and the distribution of data in cloud infrastructures, and support the definition of roles as specified in the Data Protection Directive, e.g. data controller and data processor.

• Capturing privacy preferences and consent - to express user preferences about the usage of their personal data, to whom data can be released, and under which conditions.

• Data Retention Periods - to express time constraints about personal data collection.

• Controlling Data Location and Transfer - the location of data must be made clear, depending on the type of data stored and on the industry sector processing the data (subject to specific regulations). Accountability policies for cloud services need to be able to express rules about data localization, such that accountable services can signal where the data centers hosting them are located. Here we consider strong policy binding mechanisms to attach policies to data.

• Auditability - policies must describe the clauses in a way that actions taken upon enforcing the policy can be audited in order to ensure that the policy was adhered to. The accountability policy language must specify which events have to be audited and what information related to the audited event has to be considered.

• Reporting and notifications - to allow cloud providers to notify end-users and cloud customers, for instance in case of policy violations or incidents.

• Redress - to express recommendations for redress in the policy, in order to set right what was wrong and what made a failure occur.

¹ The Cloud Accountability Project: http://www.a4cloud.eu/.

² This work mainly focuses on the European Data Protection Directive (Directive, E. U., 1995).

Benghabrit W., Grall H., Royer J., Sellami M., Azraoui M., Elkhiyaoui K., Önen M., Santana De Oliveira A. and Bernsmed K.

A Cloud Accountability Policy Representation Framework.

DOI: 10.5220/0004949104890498

In Proceedings of the 4th International Conference on Cloud Computing and Services Science (CLOSER-2014), pages 489-498

ISBN: 978-989-758-019-2

Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

In this paper we provide a cloud accountability policy representation framework designed with the aforementioned requirements in mind. We define an abstract yet readable language, called AAL, for representing accountability obligations in a human-readable fashion. We also define a concrete policy enforcement language, called A-PPL, as an extension of the PPL (Ardagna et al., 2009) language. The proposed framework offers the means to translate abstract obligations expressed in AAL into concrete policies in A-PPL.

The rest of this paper is organized as follows. Section 2 describes related work. Section 3 gives an overview of the main components of our policy representation framework. We present the abstract accountability policy language we propose in Section 4 and the concrete one in Section 5. Section 6 describes a realistic use case as a proof of concept for our work. Section 7 discusses our work and presents directions for future work.

2 RELATED WORK

In the following, we provide an overview of related work in the field. We organize this section along the following categories that relate to our contribution in this paper: accountability in computer science, obligations in legal texts and directives, and enforcement and policy languages.

2.1 Accountability

There is recent interest and active research in accountability, which overlaps several domains such as security (Weitzner et al., 2008; Zhifeng Xiao, 2012; Pearson and Wainwright, 2013), language representation (Métayer, 2009; DeYoung et al., 2010), auditing systems (Feigenbaum et al., 2012; Jagadeesan et al., 2009), evidence collection (Sundareswaran et al., 2012; Haeberlen et al., 2010) and so on. However, only a few of these works consider an interdisciplinary view of accountability that takes legal and business aspects into account. We particularly emphasize the work of (Feigenbaum et al., 2012) and (Pearson and Wainwright, 2013), since they provide a general yet concrete view, as well as an operational approach.

Regarding tool support and frameworks, we can find several proposals (Wei et al., 2009; Haeberlen et al., 2010; Zou et al., 2010), but none of them provides a holistic approach for accountability in the cloud, from end-user understandable sentences to concrete machine-readable representations. In (Sundareswaran et al., 2012), the authors propose an end-to-end decentralized accountability framework to keep track of the usage of data in the cloud. They suggest an object-centered approach that packs the logging mechanism together with users’ data and policies.

2.2 Obligations in Regulations

There is an international trend towards protecting data, for instance in Europe with Directive 95/46/EC (Directive, E. U., 1995), in the USA with the HIPAA rules (US Congress, 2002) and in Canada with the FIPPA act (Legislative Assembly of Ontario, 1988). As an example, Directive 95/46/EC states rules to protect personal data in case of processing or of transferring data to other countries. There exist some attempts to formalize or to give rigorous analyses of such rules. In (Métayer, 2009) the authors present a restricted natural language, SIMPL (SIMple Privacy Language), to express privacy requirements and commitments. In (Breaux and Anton, 2005) the authors describe a general process for developing semantic models from privacy policy goals mined from policy documents. In (Kerrigan and Law, 2003), the authors develop an approach where contracts are represented by XML documents enriched with logic metadata, with assistance from a theorem prover. In (DeYoung et al., 2010) the authors provide a formal language to express privacy laws and validate it on real regulations, namely HIPAA and GLBA (US Congress, 1999). These works either are not end-to-end proposals, cover only data privacy rather than accountability, or are purely formal proposals without an enforcement layer.

CLOSER 2014 - 4th International Conference on Cloud Computing and Services Science

2.3 Enforcement and Policies

A number of policy languages have been proposed in recent years for machine-readable policy representation. We reviewed several existing policy languages (see (Garaga et al., 2013) for details), defined either as standards or as academic/industrial proposals.

Existing policy languages have focused on specific obligations. The eXtensible Access Control Markup Language (XACML) (OASIS Standard, 2013) aims at providing a declarative language for access control. The XML-based languages P3P (Platform for Privacy Preferences Project) (Marchiori, 2002), PPL (PrimeLife Policy Language) (Ardagna et al., 2009) and SecPal4P (Becker et al., 2010) are used to describe privacy policies and data collection policies. SLAng (Lamanna et al., 2003) and ConSpec (Aktug and Naliuka, 2008) are designed to automate contract negotiations and to monitor the agreed contract statements. However, none of these proposals provides elements for specifying accountability-specific obligations such as logging, reporting, audits, evidence collection and redress.

Having identified the limitations of existing languages, we analyzed their extensibility and their suitability for expressing accountability obligations. Extensible languages such as XACML and PPL appear to be the most suitable. In our work we consider PPL, since it provides the elements that best capture accountability obligations (see Section 5). In a nutshell, PPL extends XACML, an XML-based language aimed at access control, to provide automatic means to define and manage privacy policies. PPL allows expressing data handling policies (on the data controller side) and data handling preferences (on the data subject side) that are evaluated and matched to output a sticky policy that travels with the data downstream. PPL thus specifies statements on access control, authorizations and obligations. In addition, the language provides a way to declare some accountability-specific obligations such as logging and notifications. However, these constructs fail to capture such obligations accurately and may be impractical when used directly within an accountability policy. Besides, auditing is not part of PPL, since its focus is on privacy and not accountability.
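To make the matching step more concrete, the following Python sketch models a heavily simplified version of it. The three fields (permitted purposes, a retention bound in days and a downstream-sharing flag) and the function `match` are hypothetical illustrations, not PPL's actual schema or matching algorithm: the controller's policy is accepted only when it stays within every preference, and the accepted terms become the sticky policy.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Preference:
    """Data subject side (hypothetical, simplified fields)."""
    allowed_purposes: FrozenSet[str]
    max_retention_days: int
    allow_downstream: bool


@dataclass(frozen=True)
class HandlingPolicy:
    """Data controller side (hypothetical, simplified fields)."""
    purposes: FrozenSet[str]
    retention_days: int
    downstream: bool


@dataclass(frozen=True)
class StickyPolicy:
    """Agreed terms meant to travel with the data downstream."""
    purposes: FrozenSet[str]
    retention_days: int
    downstream: bool


def match(pref: Preference, pol: HandlingPolicy) -> Optional[StickyPolicy]:
    """Return the sticky policy if the controller's policy respects every
    preference, or None on a mismatch."""
    if not pol.purposes <= pref.allowed_purposes:  # every purpose must be allowed
        return None
    if pol.retention_days > pref.max_retention_days:
        return None
    if pol.downstream and not pref.allow_downstream:
        return None
    return StickyPolicy(pol.purposes, pol.retention_days, pol.downstream)
```

For example, a preference allowing the purposes "diagnosis" and "statistics" for up to 365 days without downstream sharing accepts a 180-day, diagnosis-only policy, while a marketing policy yields no sticky policy at all.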

3 THE CLOUD ACCOUNTABILITY FRAMEWORK

In this section, we provide an overview of our proposed policy representation framework. Such a framework must allow end-users to easily express their accountability obligations and preferences, while being complete and rigorous enough to be run by a policy execution engine. Hence we are faced with the following dilemma: the policy must be written by an end-user, who does not necessarily have skills in any particular policy language, and at the same time the policy must be machine understandable. Machine understandable means that sentences can be read, understood and executed by a computer.

In this context, we propose a policy representation framework (see Figure 1) that (1) allows a user to express his accountability needs in a human-readable fashion and (2) offers the necessary means to translate them (semi-)automatically³ into a machine understandable format.

[Figure 1 diagram, labels: A4Cloud Policy Representation Framework; Cloud Actor (M); Human Readable Accountability obligations; (1) Human/Machine Readable Representation (AAL); (2) Machine Understandable Representation (A-PPL).]

Figure 1: Overview of the accountability policy representation framework.

Accountability, as it appears in legal, contractual and normative texts about data privacy, makes explicit four important roles that we consider in our proposal:

• Data subject: this role represents any end-user who has data privacy concerns, mainly because he outsourced some of his data to a cloud provider.

• Data processor: this role is attributed to any computational agent which processes some personal data. It should act under the control of a data controller.

• Data controller: this role is legally responsible to the data subject for any violation of his privacy, and to the data protection authority in case of misconduct.

• Auditor: this role represents the data protection authorities in charge of the application of laws and directives.

³ Here “semi” means that human assistance may sometimes be needed.

A Cloud Accountability Policy Representation Framework

3.1 Step (1). Human/Machine Readable Representation

To express accountability obligations, we define an Abstract Accountability Language (AAL), which is devoted to expressing accountability obligations in an unambiguous style that is close to what the end-user needs and understands. As this is the human readable level, this language should be simple, akin to a natural logic, that is, a logic expressed in a subset of a natural language.

For instance, a simple access control obligation stating that “the data d cannot be read by any agent” will be formulated in a human/machine readable fashion using our accountability language as “MUSTNOT ANY:Agent.READ(d:Data)”. Details on the AAL syntax are provided in Section 4.
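The atomic sentence above has a regular shape: a modality, an agent (possibly quantified with ANY), an operation and a parameter. A minimal Python sketch of a recognizer for that shape follows; the regular expression, the `Modal` record and `parse_atomic` are hypothetical and cover only this atomic form, not the full AAL grammar given in Section 4.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical pattern for an atomic modal sentence such as
# "MUSTNOT ANY:Agent.READ(d:Data)". MUSTNOT is listed before MUST so the
# longer keyword is tried first.
ATOMIC = re.compile(
    r"^(?P<modality>MUSTNOT|MUST|MAY)\s+"
    r"(?:(?P<quant>ANY):)?(?P<agent>\w+)\."
    r"(?P<oper>\w+)\((?P<param>[^)]*)\)$"
)


@dataclass(frozen=True)
class Modal:
    modality: str     # MAY, MUST or MUSTNOT
    quantified: bool  # True for "ANY:<type>", i.e. every agent of that type
    agent: str        # agent name, or agent type when quantified
    oper: str         # operation, e.g. READ
    param: str        # raw parameter text, e.g. "d:Data"


def parse_atomic(text: str) -> Optional[Modal]:
    """Return the parts of an atomic AAL sentence, or None if it does not fit."""
    m = ATOMIC.match(text.strip())
    if m is None:
        return None
    return Modal(m["modality"], m["quant"] is not None,
                 m["agent"], m["oper"], m["param"])
```

Applied to the example, `parse_atomic("MUSTNOT ANY:Agent.READ(d:Data)")` returns `Modal(modality='MUSTNOT', quantified=True, agent='Agent', oper='READ', param='d:Data')`.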

3.2 Step (2). Machine Understandable Representation

In this step (called the mapping), the accountability obligations expressed in AAL are (semi-)automatically translated into a machine understandable policy. We target a policy language that is able to enforce classic security mechanisms (like access or usage control) but also accountability obligations. Due to the high level of abstraction of AAL, such an automatic translation may need several passes.

As analyzed in Section 2, the PrimeLife Policy Language (PPL) (Ardagna et al., 2009) seems the most convenient language for representing privacy policies. It can be extended to address specific accountability obligations such as auditability, notification or logging obligations. Hence, we propose an extension to PPL, A-PPL (for accountable PPL), which supports such obligations. The details of this extension are described in Section 5.

4 ABSTRACT LANGUAGE

In this section we introduce AAL (Abstract Accountability Language), which is devoted to expressing accountability obligations in an unambiguous, human-readable style. The AAL concepts are presented in Section 4.1 and its syntax in Section 4.2, and we give an outlook on our approach for a machine understandable representation of AAL policies in Section 4.3.

4.1 AAL Concepts

As explained in (Feigenbaum et al., 2012), an accountable system can be defined with five steps: prevention, detection, evidence collection, judgment and punishment. We follow this line for the foundation of our accountability language. In AAL, usage control expressions represent the preventive description part. Audit expressions encompass the detection, evidence collection and judgment parts. Finally, rectification expressions represent the punishment description part. We use the term rectification since these expressions cover not only punishment, but also remediation, compensation, sanction and penalty.

Thereby, an AAL sentence is a property (more formally, a distributed system invariant) expressing usage control, auditing and rectification. The general form of an AAL sentence is: UsageControl Auditing Rectification, and its informal meaning is: try to ensure the usage control; in case of an audit, if a violation is observed, then the rectification applies. The reader should also note that there are two flavors of AAL sentences:

• User preferences: expressing the obligations a data subject wants to be satisfied; for instance, he does not want his data to be distributed over the network, or wants it to be used only for statistics by a given data processor, and so on.

• Processor obligations (sometimes called obligations or policies): these are the obligations the data processor declares it ensures regarding data management and processing.
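The informal reading of the general form (try to ensure the usage control; upon audit, if a violation is observed, apply the rectification) can be sketched as follows. Here a usage-control property is assumed to be a Python predicate over a finite trace of event strings, and the audit and rectification parts are plain action labels; these representations and the `Clause`/`audit` names are illustrative assumptions, not the formal semantics of AAL.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence

# Assumption: a trace is a finite sequence of observed event strings.
Trace = Sequence[str]


@dataclass
class Clause:
    usage: Callable[[Trace], bool]       # usage-control property over a trace
    auditing: Optional[str] = None       # audit action label, e.g. "B.AUDIT(C.logs)"
    rectification: Optional[str] = None  # action label applied on violation


def audit(clause: Clause, trace: Trace) -> List[str]:
    """Actions triggered when the clause is audited against `trace`:
    the audit itself, plus the rectification if a violation is observed."""
    if clause.auditing is None:
        return []  # no audit part: nothing detects a violation
    actions = [clause.auditing]
    if not clause.usage(trace) and clause.rectification is not None:
        actions.append(clause.rectification)
    return actions
```

Keeping the usage property separate from the audit makes the point of the general form visible: a violation only has consequences once an audit observes it.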

Finally, like many policy representation languages, AAL considers permission, obligation and prohibition. These notions occur in various approaches, for instance in PPL or in the ENDORSE project⁴. Permission, obligation and prohibition are respectively expressed in AAL sentences with the keywords MAY, MUST and MUSTNOT, as advocated by IETF RFC 2119 (Bradner, 1997).

⁴ http://ict-endorse.eu/
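One simple way to read the three keywords over a finite trace of observed actions is sketched below, under the assumption that an action is identified by a string such as "A.READ(D)". This is an illustrative reading only, not a definition taken from AAL: a permission (MAY) places no constraint on the trace by itself and only becomes meaningful against a default-deny access model.

```python
from enum import Enum
from typing import Sequence


class Modality(Enum):
    MAY = "MAY"          # permission
    MUST = "MUST"        # obligation
    MUSTNOT = "MUSTNOT"  # prohibition


def satisfied(modality: Modality, action: str, trace: Sequence[str]) -> bool:
    """Illustrative finite-trace reading of the three AAL keywords."""
    if modality is Modality.MUST:
        return action in trace      # the action occurs at least once
    if modality is Modality.MUSTNOT:
        return action not in trace  # the action never occurs
    return True                     # MAY: no constraint on the trace itself
```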


4.2 AAL Syntax

Figure 2 shows the syntax of AAL using a Backus-Naur Form (BNF)-like notation (Knuth, 1964). AAL allows the expression of Clauses representing obligations that have to be met in either an accountability policy or a preference. A Clause has one usage expression and, optionally⁵, an audit and a rectification expression: Exp ('AUDITING' Exp)? ('IF_VIOLATED_THEN' Exp)?. The expression Exp of a clause can be either atomic or composite. Composite Exps are written in the form Exp ('OR' | 'AND' | 'ONLYWHEN' | 'THEN') Exp.

As an example, consider the user preference of a data subject who grants read access on his data D to an agent A. This usage control is a permission, which can be expressed as follows.

MAY A.READ(D:Data)

The full accountability sentence, however, also implies that an auditor B will audit the system and, in case of violations, will sanction the data controller C.

MAY A.READ(D:Data)
AUDITING MUST B.AUDIT(C.logs)
IF_VIOLATED_THEN MUST B.SANCTION(C)

Further examples of user preferences and processor obligations expressed in AAL are provided in Section 6.
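Rule (1) of the AAL syntax splits a clause into a usage part, an optional AUDITING part and an optional IF_VIOLATED_THEN part. A hedged Python sketch that performs only this top-level split on a single clause (ignoring the optional LOGGING prefix and the repetition of clauses) is shown below; `split_clause` is a hypothetical helper, and it assumes the keywords AUDITING and IF_VIOLATED_THEN do not occur inside the sub-expressions.

```python
import re
from typing import Dict, Optional

# Top-level split following Exp ('AUDITING' Exp)? ('IF_VIOLATED_THEN' Exp)?
# Lazy groups let each keyword end the preceding part.
CLAUSE = re.compile(
    r"^\s*(?P<usage>.+?)"
    r"(?:\s+AUDITING\s+(?P<audit>.+?))?"
    r"(?:\s+IF_VIOLATED_THEN\s+(?P<rect>.+?))?\s*$",
    re.DOTALL,
)


def split_clause(text: str) -> Dict[str, Optional[str]]:
    """Split one AAL clause into usage, audit and rectification parts."""
    m = CLAUSE.match(text)
    if m is None:
        raise ValueError("not an AAL clause")
    return {"usage": m["usage"], "audit": m["audit"], "rectification": m["rect"]}
```

On the three-line example of this section, the sketch returns the usage part MAY A.READ(D:Data), the audit part MUST B.AUDIT(C.logs) and the rectification part MUST B.SANCTION(C); a clause without the optional parts yields None for them.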

4.3 Machine Understandability

Generating machine understandable policies from accountability preferences and obligations written in AAL can be easily done when dealing with usage control clauses. However, this mapping is less obvious for clauses with temporal modalities and with auditing. The main issue for such a mapping is the gap between the AAL language, which is property-oriented, and the machine understandable language, which is operational. To fill this gap we need more artifacts; Figure 3 provides an overview of our proposed mapping process.

[Figure 3 diagram, labels: A4Cloud Policy Representation Framework; Pivot Model; Technology Model; Human Readable Accountability obligations; Human/Machine Readable Representation (AAL); Temporal Logic for Accountability; Policy Calculus; Machine Understandable Representation (A-PPL); arrows (1), (2), (2'.1), (2'.2), (2'.3).]

Figure 3: Overview of the machine understandable translation of AAL.

⁵ Items followed by ? are optional expressions.

According to this figure, going from a human/machine readable representation in AAL to a machine understandable representation of the accountability preferences/obligations (the arrow numbered (2) in Figure 3) is done in three steps:

• (2’.1). First, a temporal logic is used to make AAL sentences more concrete, as temporal logic properties. Indeed, in an accountability policy we should represent the notions of permission, obligation and prohibition. In addition, there is a need to express conditions and various logical combinations. Furthermore, it is important to have time, at least logical discrete time, for instance to write: “X writes some data and then stores some logs”. Our target is a temporal logic with time; one concrete candidate is mCRL2 (Cranen et al., 2013).

• (2’.2). Second, a policy calculus is used to describe the operational semantics associated with the concrete properties defined in (2’.1). This calculus is based on the concept of reference monitor (Schneider, 2000) for both the agents and the data resources. It relies on previous work on distributed agents communicating via messages (Allam et al., 2012). This operational semantics provides means for abstractly executing the temporal logic expressions. This process is known as “program synthesis”: starting from a property, it generates a program ensuring/enforcing the property.

• (2’.3). Finally, the policy generated using our policy calculus is (semi-)automatically translated into a machine understandable policy based on predefined transformation rules. Our target is the A-PPL extension of PPL, which is described in the next section.
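As an illustration of step (2’.1), the example property “X writes some data and then stores some logs” can be read, in linear temporal logic notation, as □(X.WRITE → ◇ X.LOG). The Python sketch below checks this property on a finite trace in discrete time; it does not use mCRL2, and the event encoding (strings such as "X.WRITE") and the function name are assumptions. Its single pass with one flag is the kind of state an operational monitor, in the spirit of step (2’.2), would keep at run time.

```python
from typing import Sequence


def write_then_log(trace: Sequence[str], agent: str = "X") -> bool:
    """Check, on a finite discrete-time trace, that every WRITE by `agent`
    is followed later by a LOG by the same agent. Event strings such as
    "X.WRITE" and "X.LOG" are an assumed encoding."""
    pending = False  # True while some WRITE has not yet been followed by a LOG
    for event in trace:
        if event == f"{agent}.WRITE":
            pending = True
        elif event == f"{agent}.LOG":
            pending = False
    return not pending
```

A single LOG discharges all WRITEs that precede it, which matches the "eventually" reading; a LOG that occurs before the WRITE does not count.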

5 CONCRETE LANGUAGE

In this section, we present an enhanced version of PPL with extensions that address the identified limitations of the language, so as to accurately map accountability obligations. We name this accountability policy language A-PPL.

Our accountability policy representation framework maps AAL clauses to concrete and operational machine understandable policies. As already mentioned in Section 2.3, in order not to define yet another completely new language to map accountability obligations to machine-understandable policies, we conducted a preliminary study on existing languages and, among all the possible candidates, PPL seems to be the one that best captures the accountability concepts. Therefore, in this section, we present how A-PPL extends PPL to address accountability obligations.

(1) Clause ::= (LOGGING? Exp (AUDITING Exp)? (IF_VIOLATED_THEN Exp)?)+
(2) Exp ::= Modal | Exp (OR | AND | ONLYWHEN | THEN) Exp
(3) Modal ::= (MAY | MUST | MUSTNOT) Action
(4) Action ::= Agent.Oper(Param) ((BEFORE | AFTER) Time)?
(5) Agent ::= uri
(6) Oper ::= READ | WRITE | LOG | SEND | NOTIFY | service[Agent_provider]
(7) Time ::= date | duration
(8) Param ::= Constant | Variable
(9) Constant ::= stringLiteral
(10) Variable ::= Literal ?

Figure 2: Excerpt of the AAL Syntax.

PPL implicitly identifies three roles: the data subject, data controller and data processor roles. Besides, PPL defines an obligation as a set of triggers and actions. Triggers are events related to the obligation that are filtered by a condition and that trigger the execution of actions. Accordingly, PPL defines markup to declare an obligation: inside the obligation environment, one can specify a set of triggers and their related actions.
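The trigger/condition/action structure of a PPL obligation can be sketched in Python as follows. The event representation (a dictionary with a "type" key), the event type "data_accessed" used in the example and the class names are hypothetical and do not reproduce PPL's XML schema; the sketch only shows how a trigger filtered by a condition causes the associated actions to execute.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Event = Dict[str, str]  # assumption: an event is a dict with a "type" key


@dataclass
class Trigger:
    event_type: str
    # Condition filtering the matching events; accepts everything by default.
    condition: Callable[[Event], bool] = lambda e: True


@dataclass
class Obligation:
    triggers: List[Trigger]
    actions: List[Callable[[Event], str]] = field(default_factory=list)

    def on_event(self, event: Event) -> List[str]:
        """Execute all actions if at least one trigger fires for `event`."""
        fired = any(t.event_type == event["type"] and t.condition(event)
                    for t in self.triggers)
        return [action(event) for action in self.actions] if fired else []
```

For instance, an obligation whose trigger fires on "data_accessed" events with purpose "marketing" and whose action notifies the subject produces one notification for such an access and nothing for an access with purpose "diagnosis".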

5.1 Extension of Roles

To address accountability concerns in a cloud environment, it might be necessary to include in the policy a reference to the role of the subject to which the policy applies. For instance, in PPL it is not possible to identify the Data Controller. We therefore suggest adding a new attribute, attribute:role, to the PPL <Subject> element for this purpose. Furthermore, in addition to the four roles PPL inherently considers (Data Subject, Data Controller, Downstream Data Controller, Data Processor), A-PPL extends PPL with one additional role: the auditor, which is considered as a trusted third party that can conduct independent assessments of cloud services, information system operations, performance and security of the cloud implementation. This new role is important to capture accountability-specific obligations such as auditability, reporting, notification and remediation.

5.2 Extension of Actions and Triggers

We add to PPL a set of new A-PPL actions and triggers in order to map accountability obligations. In particular, we enhance the logging (ActionLog) and notification actions that already exist in PPL. For instance, while PPL currently enables notification through ActionNotifyDataSubject, A-PPL defines a new and more general ActionNotify action in which one can define the recipient of the notification thanks to a newly defined parameter recipient; moreover, the additional Notificationtype parameter defines the purpose of the notification, which can be, for example, policy violation, evidence or redress. On the other hand, the current ActionLog action in PPL fails to capture accountability obligations. The new ActionLog action in A-PPL introduces many additional parameters to provide more explicit information on the logged event: for example, timestamp defines the time of the event, and Resource location identifies the resource the action was taken on.
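The extra parameters that A-PPL adds to ActionNotify and ActionLog can be pictured as typed records. In the Python sketch below, the fields recipient, notification_type, timestamp and resource_location stand for the recipient, Notificationtype, timestamp and Resource location parameters named above; the enumeration values, the `event` field, the example location and the `log_entry` text format are assumptions made for illustration, not the A-PPL serialization.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class NotificationType(Enum):
    """Purposes of a notification named in the text (values assumed)."""
    POLICY_VIOLATION = "policy violation"
    EVIDENCE = "evidence"
    REDRESS = "redress"


@dataclass(frozen=True)
class ActionNotify:
    recipient: str                       # any party, not only the data subject
    notification_type: NotificationType  # stands for Notificationtype


@dataclass(frozen=True)
class ActionLog:
    event: str              # assumed field: what happened
    timestamp: datetime     # time of the logged event
    resource_location: str  # resource the action was taken on


def log_entry(action: ActionLog) -> str:
    """Hypothetical one-line text form of a log record."""
    return f"{action.timestamp.isoformat()} {action.event} {action.resource_location}"


if __name__ == "__main__":
    entry = ActionLog("READ", datetime(2014, 4, 3, 12, 0, tzinfo=timezone.utc),
                      "storage://Y/records/42")
    print(log_entry(entry))  # 2014-04-03T12:00:00+00:00 READ storage://Y/records/42
```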

We also create two actions related to auditability: ActionAudit, which creates an evidence request, and ActionEvidenceCollection, which collects the requested evidence. In addition, auditability requires the definition of two new triggers related to evidence: TriggerOnEvidenceRequestReceived, which occurs when an audited party receives an evidence request, and TriggerOnEvidenceReceived, which occurs when an auditor receives the requested evidence. Similarly, when an update occurs in a policy or in a user preference, the update may trigger a set of actions to be performed. Thus, we create two additional triggers: TriggerOnPolicyUpdate and TriggerOnPreferenceUpdate. Finally, to handle complaints that a data subject may file in the context of remediability, we define the trigger TriggerOnComplaint, which triggers a set of specific actions to be undertaken by an auditor and/or a data controller.
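The evidence exchange enabled by these actions and triggers can be simulated in a few lines. In this hedged Python sketch, ActionAudit, TriggerOnEvidenceRequestReceived with ActionEvidenceCollection, and TriggerOnEvidenceReceived are modeled as plain functions; evidence is assumed to be a tuple of log records looked up in an in-memory store, and the auditor's judgment is an arbitrary predicate. All names and data formats are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Sequence, Tuple


@dataclass(frozen=True)
class EvidenceRequest:
    auditor: str
    audited: str
    scope: str  # assumed: key of the records being requested, e.g. "hospital.logs"


@dataclass(frozen=True)
class Evidence:
    request: EvidenceRequest
    records: Tuple[str, ...]


def action_audit(auditor: str, audited: str, scope: str) -> EvidenceRequest:
    """Auditor side (ActionAudit): create an evidence request."""
    return EvidenceRequest(auditor, audited, scope)


def on_evidence_request_received(req: EvidenceRequest,
                                 store: Dict[str, Sequence[str]]) -> Evidence:
    """Audited side (TriggerOnEvidenceRequestReceived firing
    ActionEvidenceCollection): gather the requested records."""
    return Evidence(req, tuple(store.get(req.scope, ())))


def on_evidence_received(ev: Evidence,
                         compliant: Callable[[Tuple[str, ...]], bool]) -> str:
    """Auditor side (TriggerOnEvidenceReceived): judge the evidence."""
    return "no violation" if compliant(ev.records) else "violation"
```

Separating the three steps mirrors the split between the audited party, which only collects evidence, and the auditor, which alone decides whether a violation occurred.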

6 VALIDATION

In this section we validate the accountability policy representation framework by extracting obligations from one of the use cases documented in the A4Cloud public deliverable DB3.1 (Bernsmed et al., 2013) and illustrating their representation in AAL and A-PPL.


6.1 The Health Care Use Case

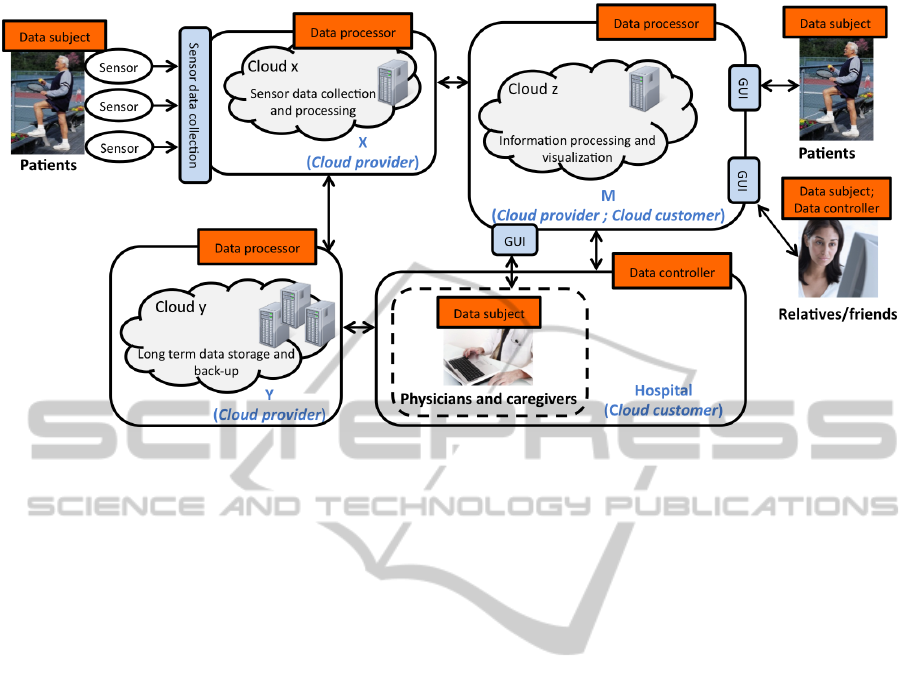

This use case concerns the flow of health care information generated by medical sensors in the cloud. The system, which is illustrated in Figure 4, is used to support the diagnosis of patients through the collection and processing of data from wearable sensors. Here, we investigate the case where medical data from the sensors will be exchanged between patients, their families and friends, and the hospital, as well as between the different cloud providers involved in the final service delivery.

In this use case the patients are the data subjects from whom (sensitive) personal data is collected. The hospital is ultimately responsible for the health care services and will hence act as one of the data controllers for the personal data that will be collected. The patients’ relatives may also upload personal data about the patients and can therefore be seen as data controllers (as well as data subjects, when personal data about their usage of the system is collected from them). As can be seen in Figure 4, the use case will involve cloud services for sensor data collection and processing (denoted cloud provider “X”), cloud services for data storage (denoted cloud provider “Y”) and cloud services for information sharing (denoted cloud provider “M”), which will be operated by a collaboration of different providers. Since the primary service provider M, with which the users will interface, employs two sub-providers, a chain of service delivery will be created. In this particular case, the M platform provider will be the primary service provider and will act as a data processor with respect to the personal data collected from the patients. The sub-providers X and Y will also act as data processors. The details of the use case are further described in DB3.1 (Bernsmed et al., 2013).

6.2 Obligations for the Use Case

We have identified a number of obligations for this use case, which need to be handled by the accountability policy framework. Here we list three examples and explain how they will be expressed in AAL and mapped into A-PPL. Note that the complete list of obligations is much longer, but we have chosen to outline those that illustrate the most important relationships between the involved actors. Due to space limitations we do not include the complete A-PPL policies here; the reader is referred to the project documentation (Garaga et al., 2013) for the full policy expressions.

Obligation 1: The Data Subject’s Right to Access, Correct and Delete Personal Data. According to the Data Protection Directive (Directive, E. U., 1995), data subjects have (among others) the right to access, correct and delete personal data that have been collected about them. In this use case, this means that the hospital must grant read and write access to patients as well as relatives with regard to their personal data that have been collected and stored in the cloud. There must also be means to enforce the deletion of such data.

The AAL expression capturing the obligations associated with the patient is:

(MAY Patient.READ(D:Data) OR
 MAY Patient.WRITE(D:Data) OR
 MAY Patient.DELETE(D:Data))
AUDITING
MUST Auditor.AUDIT(hospital.logs)
IF_VIOLATED_THEN
MUST Auditor.SANCTION(hospital)

The policy includes the ALWAYS operator since this property is expected to be true at any instant. The policy also includes the condition D.subject=Patient to express that this expression only concerns the personal data of the Patient. This policy also expresses the audit and rectification obligations that have to be ensured by an external Auditor.
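The usage-control part of this clause, together with the condition D.subject=Patient, amounts to a simple authorization check. The Python sketch below is a hypothetical illustration (the `Data` record, `PATIENT_OPS` and `patient_may` are not part of AAL or A-PPL); evaluating it on every request is one way to honour the ALWAYS operator.

```python
from dataclasses import dataclass

PATIENT_OPS = frozenset({"READ", "WRITE", "DELETE"})


@dataclass(frozen=True)
class Data:
    ident: str
    subject: str  # the data subject this record is about


def patient_may(requester: str, oper: str, d: Data) -> bool:
    """MAY Patient.READ/WRITE/DELETE(D:Data), restricted by D.subject=Patient."""
    return oper in PATIENT_OPS and d.subject == requester
```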

Using the accountability policy representation framework, the AAL expression will be mapped into two different A-PPL expressions: one granting read and write access to the patients, and another obliging the data controller to delete the personal data whenever requested. Read and write access control is achieved through XACML rules. Regarding the deletion of data, a patient can express data handling preferences that specify the obligation the data controller has to enforce to delete the personal data. This obligation can be expressed using the A-PPL obligation action ActionDeletePersonalData, which the patient will use to delete personal data that have been collected about him.
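To make this split concrete, the following Python sketch shows one possible shape of the mapping. It is a simplified illustration under stated assumptions, not the framework's actual translation: the classes AccessRule and Obligation, the trigger name OnDeletionRequest and the function names are hypothetical, and only ActionDeletePersonalData is taken from the text. READ and WRITE become a permit rule guarded by the D.subject=Patient condition, while DELETE becomes an obligation on the data controller.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Tuple

@dataclass(frozen=True)
class AccessRule:
    """Hypothetical XACML-style rule: `effect` applies to `actions`
    when the requester is the subject of the data (D.subject = Patient)."""
    actions: FrozenSet[str]
    effect: str = "Permit"

@dataclass(frozen=True)
class Obligation:
    """Hypothetical A-PPL-style obligation: an action the controller must run."""
    trigger: str
    action: str

def map_patient_clause(permissions: Iterable[str]) -> Tuple[AccessRule, List[Obligation]]:
    """Split MAY Patient.X(D) permissions into an access rule (READ/WRITE)
    and a deletion obligation (DELETE)."""
    perms = {p.upper() for p in permissions}
    rule = AccessRule(actions=frozenset(perms & {"READ", "WRITE"}))
    obligations: List[Obligation] = []
    if "DELETE" in perms:
        # Deletion is not granted as an access right; it becomes an obligation
        # the data controller enforces when the patient requests it.
        obligations.append(Obligation(trigger="OnDeletionRequest",  # hypothetical name
                                      action="ActionDeletePersonalData"))
    return rule, obligations

def decide(rule: AccessRule, requester: str, action: str, data_subject: str) -> str:
    """Permit only the listed actions, and only on the requester's own data;
    everything else is denied (deny-by-default)."""
    if action in rule.actions and requester == data_subject:
        return rule.effect
    return "Deny"
```

Requests that the rule does not cover are simply denied here, mimicking a deny-by-default combining policy; an actual XACML engine would first report NotApplicable for a non-matching rule and leave the final decision to the policy's combining algorithm.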

An explicit audit clause implies that information related to the usage control property is logged (the amount and nature of this information are not discussed here). Thus the audit clause is translated into an AuditAction, which is responsible for managing the interaction with the auditor. This action runs an exchange protocol with the auditor that ends with one of two responses: either no violation of the usage has been detected, or a violation exists. In the latter case, rectification clauses should be specified.
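The two possible outcomes of the audit exchange can be sketched as follows. This is a hypothetical, local simplification: the AuditAction described above runs a protocol with an external auditor, whereas here the auditor's check is a plain predicate over log entries, and the rectification clause (the IF_VIOLATED_THEN part of the AAL expression) is a callback. The names audit_action and AuditOutcome and the log-entry fields are assumptions.

```python
from enum import Enum
from typing import Callable, Iterable, List

class AuditOutcome(Enum):
    NO_VIOLATION = "no-violation"
    VIOLATION = "violation"

def audit_action(logs: Iterable[dict],
                 is_violation: Callable[[dict], bool],
                 rectification: Callable[[List[dict]], None]) -> AuditOutcome:
    """Hypothetical AuditAction: apply the auditor's check to every log entry;
    if any entry violates the usage-control property, run the rectification
    clause on the offending entries and report a violation."""
    violations = [entry for entry in logs if is_violation(entry)]
    if not violations:
        return AuditOutcome.NO_VIOLATION
    rectification(violations)
    return AuditOutcome.VIOLATION
```

For instance, with log entries of the form {"actor": ..., "action": "READ", "subject": ...} and a check flagging reads by anyone other than the data subject, a rectification callback could record a sanction against the hospital.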

In the following we only consider usage control clauses, since the translation process for audit and rectification is similar to the previous example.


Figure 4: An overview of the main actors involved in the health care use case.

Obligation 2: The Data Controller Must Notify

the Data Subjects of Security or Personal Data

Breaches. This obligation defines what will happen

in case of a security or privacy incident. In AAL it

will be expressed by the hospital as:

MAY hospital.VIOLATEPOLICY() THEN
(MUST hospital.NOTIFY[Patient]("incident") AND
 (ANY:Agent in hospital.relatives[Patient] THEN
  MUST hospital.NOTIFY[ANY]("incident")))

In A-PPL, such a notification is expressed through the obligation action ActionNotify. It takes as parameters the recipient of the notification (here, the data subject) and the type of the notification (here, security breach).
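A minimal sketch of how the AAL clause above could produce ActionNotify instances, assuming the relatives relation is available as a dictionary from each patient to a list of agents. The ActionNotify dataclass only mirrors the two parameters named in the text (recipient and notification type); its field names and the function on_policy_violation are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class ActionNotify:
    """Stand-in for the A-PPL obligation action: who is notified, and of what."""
    recipient: str
    notification_type: str

def on_policy_violation(patient: str,
                        relatives: Dict[str, List[str]],
                        notification_type: str = "security breach") -> List[ActionNotify]:
    """Mirror the AAL clause: notify the patient, then every agent in
    hospital.relatives[patient] (the ANY quantifier)."""
    recipients = [patient] + relatives.get(patient, [])
    return [ActionNotify(r, notification_type) for r in recipients]
```

A patient without registered relatives yields a single notification, addressed to the patient alone.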

Obligation 3: The Data Processor Must, upon Request, Provide Evidence to the Data Controller on the Correct and Timely Deletion of Personal Data. To express the timely deletion of personal data, which will additionally be logged for use as evidence, the provider M can use the following AAL expression:

MUST M.DELETE(D:Data) THEN
MUST M.LOGS("deleted", D, currentDate)
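Read as a property over an execution trace, the AAL expression above requires every deletion of a datum D to be followed by a log entry recording it. The sketch below checks this on a finite trace. The tuple encoding of events and the interpretation of THEN as "followed later in the trace" are assumptions made for illustration, not the formal semantics of AAL, and the logged date is not checked.

```python
from typing import List, Tuple

# Assumed event encoding: ("DELETE", data_id, date) or ("LOG", "deleted", data_id, date)
Event = Tuple[str, ...]

def deletions_logged(trace: List[Event]) -> bool:
    """Return True if every DELETE of a datum is followed, later in the trace,
    by a LOG("deleted", datum, date) entry."""
    pending = set()  # data deleted but not yet logged
    for event in trace:
        if event[0] == "DELETE":
            pending.add(event[1])
        elif event[0] == "LOG" and event[1] == "deleted":
            # A log entry only discharges deletions that happened before it.
            pending.discard(event[2])
    return not pending
```

A log entry written before the deletion does not count, so the check also rejects traces where the evidence precedes the event it is supposed to document.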

In A-PPL, the obligation trigger TriggerPersonalDataDeleted combined with the obligation action ActionNotify will notify the data subject of the deletion of his or her data. In addition, if necessary, the obligation action ActionLog will allow the provider M to log when personal data have been deleted.
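The trigger/action pattern of A-PPL obligations can be sketched with a toy dispatcher in which a trigger name maps to the obligation actions it fires. Only the names TriggerPersonalDataDeleted, ActionNotify and ActionLog come from the text; the ObligationEngine class, the event fields and the Python functions standing in for the two actions are hypothetical.

```python
from datetime import date
from typing import Callable, Dict, List

Handler = Callable[[dict], None]

class ObligationEngine:
    """Toy dispatcher: each trigger maps to the obligation actions that must
    run when the corresponding event occurs."""
    def __init__(self) -> None:
        self._obligations: Dict[str, List[Handler]] = {}

    def register(self, trigger: str, *actions: Handler) -> None:
        self._obligations.setdefault(trigger, []).extend(actions)

    def fire(self, trigger: str, event: dict) -> None:
        # Unknown triggers have no obligations attached, so nothing runs.
        for action in self._obligations.get(trigger, []):
            action(event)

notifications: List[tuple] = []
audit_log: List[tuple] = []

def action_notify(event: dict) -> None:
    # Stand-in for ActionNotify: tell the data subject the data were deleted.
    notifications.append((event["subject"], "personal data deleted"))

def action_log(event: dict) -> None:
    # Stand-in for ActionLog: record when the deletion happened, as evidence.
    audit_log.append(("deleted", event["data_id"], event["date"]))

engine = ObligationEngine()
engine.register("TriggerPersonalDataDeleted", action_notify, action_log)
engine.fire("TriggerPersonalDataDeleted",
            {"subject": "alice", "data_id": "record-42", "date": date(2014, 4, 3)})
```

Registering ActionLog only when logging is required corresponds to the "if necessary" in the text: the same trigger can carry one or several actions.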

The three examples that we have provided in this section give only a glimpse of the expressive power of AAL and A-PPL. In (Bernsmed et al., 2013) we outline more examples of obligations for the health care use case, which, among other things, demonstrate how informed consent can be gathered from the patients before their data are processed, how the purpose of personal data collection can be specified and controlled, how the data processor M can inform the hospital of the use of sub-processors, and how the data processors can make it easier for regulators to review evidence on their data processing practices.

7 CONCLUSION

Dealing with personal data in the cloud raises several accountability and privacy issues that must be addressed to promote the safe use of cloud services. In this paper we tackle the issue of representing accountability obligations and preferences. We propose a cloud accountability policy representation framework. This framework enables accountability policies to be expressed in a human readable fashion using our abstract accountability language (AAL). It also offers the means to map them to concrete enforceable policies written in our accountability policy language (A-PPL). Our framework applies the separation of concerns principle by separating the abstract language from the concrete one. This choice makes both contributions, i.e. AAL and A-PPL, self-contained and allows them to be used independently. The ability of our framework to represent accountability obligations was validated through a realistic health care use case.

CLOSER 2014 - 4th International Conference on Cloud Computing and Services Science

Our future research work will focus on the mapping from AAL to A-PPL. As part of our implementation perspectives, we are currently working on two prototypes. The first is an AAL editor that assists end-users in writing their preferences/obligations and implements the required artifacts to map them to concrete policies in A-PPL. The second is an A-PPL policy execution engine, whose development has already started, that will be in charge of interpreting and matching A-PPL policies and preferences.

ACKNOWLEDGEMENTS

This work was funded by the EU’s 7th framework

A4Cloud project.

REFERENCES

Aktug, I. and Naliuka, K. (2008). ConSpec – a formal language for policy specification. In Electronic Notes in Theoretical Computer Science, volume 197, pages 45–58.

Allam, D., Douence, R., Grall, H., Royer, J.-C., and Südholt, M. (2012). Well-Typed Services Cannot Go Wrong. Research Report RR-7899, INRIA.

Ardagna, C. A., Bussard, L., De Capitani Di Vimercati, S., Neven, G., Paraboschi, S., Pedrini, E., Preiss, S., Raggett, D., Samarati, P., Trabelsi, S., and Verdicchio, M. (2009). PrimeLife policy language. http://www.w3.org/2009/policy-ws/papers/Trabelisi.pdf.

Becker, M. Y., Malkis, A., and Bussard, L. (2010). S4P: A generic language for specifying privacy preferences and policies. Microsoft Research.

Bernsmed, K., Felici, M., Oliveira, A. S. D., Sendor, J., Moe, N. B., Rübsamen, T., Tountopoulos, V., and Hasnain, B. (2013). Use case descriptions. Deliverable, Cloud Accountability (A4Cloud) Project.

Bradner, S. (1997). IETF RFC 2119: Key words for use in RFCs to Indicate Requirement Levels. Technical report.

Breaux, T. D. and Anton, A. I. (2005). Deriving semantic models from privacy policies. In Sixth IEEE International Workshop on Policies for Distributed Systems and Networks (POLICY '05), pages 67–76.

Cranen, S., Groote, J. F., Keiren, J. J. A., Stappers, F. P. M., de Vink, E. P., Wesselink, W., and Willemse, T. A. C. (2013). An overview of the mCRL2 toolset and its recent advances. TACAS'13, pages 199–213, Berlin, Heidelberg. Springer-Verlag.

DeYoung, H., Garg, D., Jia, L., Kaynar, D., and Datta, A. (2010). Experiences in the logical specification of the HIPAA and GLBA privacy laws. In 9th Annual ACM Workshop on Privacy in the Electronic Society (WPES '10), pages 73–82.

Directive, E. U. (1995). Directive 95/46/EC of the European Parliament and of the Council of 24 October 1995 on the protection of individuals with regard to the processing of personal data and on the free movement of such data. http://ec.europa.eu/justice/policies/privacy/docs/95-46-ce/dir1995-46_part1_en.pdf.

Feigenbaum, J., Jaggard, A. D., Wright, R. N., and Xiao, H. (2012). Systematizing “accountability” in computer science. Technical Report YALEU/DCS/TR-1452, Yale University.

Garaga, A., de Oliveira, A. S., Sendor, J., Azraoui, M., Elkhiyaoui, K., Molva, R., Önen, M., Cherrueau, R.-A., Douence, R., Grall, H., Royer, J.-C., Sellami, M., Südholt, M., and Bernsmed, K. (2013). Policy Representation Framework. Technical Report D:C-4.1, Accountability for Cloud and Future Internet Services - A4Cloud Project.

Haeberlen, A., Aditya, P., Rodrigues, R., and Druschel, P. (2010). Accountable virtual machines. In OSDI, pages 119–134.

Jagadeesan, R., Jeffrey, A., Pitcher, C., and Riely, J. (2009). Towards a theory of accountability and audit. In Proceedings of the 14th European conference on Research in computer security, ESORICS'09, pages 152–167, Berlin, Heidelberg. Springer-Verlag.

Kerrigan, S. and Law, K. H. (2003). Logic-based regulation compliance-assistance. In International Conference on Artificial Intelligence and Law, pages 126–135.

Knuth, D. E. (1964). Backus Normal Form vs. Backus Naur Form. Commun. ACM, 7(12):735–736.

Lamanna, D. D., Skene, J., and Emmerich, W. (2003). SLAng: A Language for Defining Service Level Agreements. In Proceedings of the Ninth IEEE Workshop on Future Trends of Distributed Computing Systems, pages 100–, Washington, DC, USA. IEEE Computer Society.

Legislative Assembly of Ontario (1988). Freedom of Information and Protection of Privacy Act (R.S.O. 1990, c. F.31).

Marchiori, M. (2002). The platform for privacy preferences 1.0 (P3P1.0) specification. W3C recommendation, W3C. http://www.w3.org/TR/2002/REC-P3P-20020416/.

Métayer, D. L. (2009). A formal privacy management framework. Formal Aspects in Security and Trust, pages 1–15.

OASIS Standard (2013). eXtensible Access Control Markup Language (XACML) Version 3.0. 22 January 2013. http://docs.oasis-open.org/xacml/3.0/xacml-3.0-core-spec-os-en.html.

Pearson, S., Tountopoulos, V., Catteddu, D., Südholt, M., Molva, R., Reich, C., Fischer-Hübner, S., Millard, C., Lotz, V., Jaatun, M. G., Leenes, R., Rong, C., and Lopez, J. (2012). Accountability for cloud and other future internet services. In CloudCom, pages 629–632. IEEE.


Pearson, S. and Wainwright, N. (2013). An interdisciplinary approach to accountability for future internet service provision. International Journal of Trust Management in Computing and Communications, 1(1):52–72.

Schneider, F. B. (2000). Enforceable security policies. ACM Transactions on Information and System Security, 3(1):30–50.

Sundareswaran, S., Squicciarini, A., and Lin, D. (2012). Ensuring distributed accountability for data sharing in the cloud. IEEE Transactions on Dependable and Secure Computing, 9(4):556–568.

US Congress (1999). Gramm-Leach-Bliley Act, Financial Privacy Rule. 15 USC 6801–6809. http://www.law.cornell.edu/uscode/usc_sup_01_15_10_94_20_I.html.

US Congress (2002). Health Insurance Portability and Accountability Act of 1996, Privacy Rule. 45 CFR 164. http://www.access.gpo.gov/nara/cfr/waisidx_07/45cfr164_07.html.

Wei, W., Du, J., Yu, T., and Gu, X. (2009). SecureMR: A service integrity assurance framework for MapReduce. In Proceedings of the 2009 Annual Computer Security Applications Conference, pages 73–82, Washington, DC, USA. IEEE Computer Society.

Weitzner, D. J., Abelson, H., Berners-Lee, T., Feigenbaum, J., Hendler, J., and Sussman, G. J. (2008). Information accountability. Commun. ACM, 51(6):82–87.

Xiao, Z., Kathiresshan, N., and Xiao, Y. (2012). A survey of accountability in computer networks and distributed systems. Security and Communication Networks, 5(10):1083–1085.

Zou, J., Wang, Y., and Lin, K.-J. (2010). A formal service contract model for accountable SaaS and cloud services. In International Conference on Services Computing, pages 73–80. IEEE.
