Multi-dimensional Resource Allocation for Data-intensive Large-scale Cloud Applications

Foued Jrad¹, Jie Tao¹, Ivona Brandic² and Achim Streit¹

¹ Steinbuch Centre for Computing, Karlsruhe Institute of Technology, 76128 Karlsruhe, Germany

² Information Systems Institute, Vienna University of Technology, 1040 Vienna, Austria

Keywords:

Cloud Computing, Multi-Cloud, Resource Allocation, Scientific Workflow, Data Locality.

Abstract:

Large-scale applications have emerged as one of the important application classes in distributed computing. Today, the economic and technical benefits offered by Cloud computing technology encourage many users to migrate their applications to the Cloud. On the other hand, the variety of existing Clouds requires these users to decide which providers to choose in order to achieve the expected performance and service quality while keeping the payment low. In this paper, we present a multi-dimensional resource allocation scheme to automate the deployment of data-intensive large-scale applications in Multi-Cloud environments. The scheme applies a two-level approach: first, the target Clouds are matched with respect to the Service Level Agreement (SLA) requirements and user payment; then, the application workloads are distributed to the selected Clouds using a data locality driven scheduling policy. Using an implemented Multi-Cloud simulation environment, we evaluated our approach with a real data-intensive workflow application in different scenarios. The experimental results demonstrate the effectiveness of the implemented matching and scheduling policies in improving the workflow execution performance and reducing the amount and costs of Intercloud data transfers.

1 INTRODUCTION

With the advantages of pay-per-use, ease of use and on-demand resource customization, the Cloud computing concept was quickly adopted by both industry and academia. Over the last decade, many Cloud infrastructures have been built, which differ in the type of offered service, access interface, billing and SLA. Hence, today Cloud users have to decide manually which Cloud to choose in order to meet their functional and non-functional service requirements while keeping the payment low. This task is clearly a burden for the users because they have to go through the Web pages of Cloud providers to compare their services and billing policies. Furthermore, it is hard for them to collect and maintain all the information needed from current commercial Clouds to make accurate decisions.

The emerging topic of Multi-Cloud addresses interoperability as well as resource allocation across heterogeneous Clouds. In this paper we focus, from a user perspective, on the latter problem, which has been proved to be NP-hard. A challenging task for the resource allocation is how to optimize several user objectives, like minimizing costs and makespan, while fulfilling the functional and non-functional SLAs required by the user. Since in Multi-Cloud environments data transfers are performed through the Internet between datacenters distributed in different geographical locations, another challenge is how to distribute the workloads on these Clouds in order to reduce the amount and cost of Cloud-to-Cloud (Intercloud) data transfers.

Today, related work on Cloud resource allocation is typically restricted to optimizing up to three objectives, namely cost, makespan and data locality (Deelman et al., 2008). Attempts to support other objectives (e.g. reliability, energy efficiency) (Fard et al., 2012) cannot be applied directly to Multi-Cloud environments. In particular, support for further SLA constraints like the Cloud-to-Cloud latency and Client-to-Cloud throughput, which are both highly important for data-intensive Multi-Cloud applications, is still missing.

Motivated by the above considerations, we propose a multi-dimensional resource allocation scheme to automate the deployment of large scale Multi-Cloud applications. We facilitate the support of multiple generic parameters by applying a two stage allocation approach, in which the target Clouds are first selected using an SLA-based matching algorithm and the application workloads are then distributed to the selected Clouds using a data locality driven scheduling policy. For the SLA-based matching, we adopted from economic theory a utility-based algorithm which scores each non-functional SLA attribute (e.g. availability, throughput and latency) and then calculates the user utility based on the user's willingness to pay and the measured Cloud provider SLA metrics. Overall, our optimization is done with four objectives: makespan, cost, data locality and the satisfaction level against the user requested non-functional SLA.

Jrad F., Tao J., Brandic I. and Streit A. Multi-dimensional Resource Allocation for Data-intensive Large-scale Cloud Applications. DOI: 10.5220/0004971906910702. In Proceedings of the 4th International Conference on Cloud Computing and Services Science (MultiCloud-2014), pages 691-702. ISBN: 978-989-758-019-2. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

In order to validate our resource allocation scheme, we investigate in this paper the deployment of data-intensive Multi-Cloud workflow applications. Our idea of supporting Multi-Cloud workflows comes from the observation of the following scenario: a customer works on several Clouds and stores data on them, and there is a demand to jointly process all of the data to form a final result. This scenario is similar to collaborative work on the Grid. For example, the Worldwide LHC Computing Grid (WLCG)¹ involves more than 170 computing centers, where the community members often work on a combined project and store their data locally on their own sites. A workflow application within such a project must cover several Grid resource centers.

We evaluated our allocation scheme using a Multi-Cloud workflow framework that we developed based on the CloudSim (Calheiros et al., 2011) simulation toolkit. The reason for applying a simulator rather than real Clouds is that our evaluation requires different Cloud platforms with various properties in infrastructure Quality of Service (QoS). In addition, the simulator allows us to fully validate the proposed concept with various scenarios and hence to study the developed resource matching and data locality optimization schemes.

The experimental results show that our multi-dimensional allocation scheme offers benefits to users in terms of performance and cost compared to other policies. Overall, this work makes the following contributions:

1. A multi-dimensional resource allocation scheme for large scale Multi-Cloud applications.

2. An efficient utility-based matching policy to select Cloud resources with respect to user SLA requirements.

3. A data locality driven scheduling policy to minimize the data movement and traffic cost.

4. An extensive simulation-based evaluation with a real workflow application.

¹ http://lcg.web.cern.ch

The remainder of the paper is organized as fol-

lows: Section 2 presents the related work on data

locality driven workflow scheduling. Section 3 de-

scribes the Multi-Cloud workflow framework used to

validate our approach. Section 4 and Section 5 de-

scribe the functionality of the matching and schedul-

ing algorithms implemented in this work. Section 6

presents the simulation environment and the evalua-

tion results gathered from the simulation experiments.

Finally, Section 7 concludes the paper and outlines future work.

2 RELATED WORK

Over the past decade, the scheduling of data-intensive workflows has emerged as an important research topic in distributed computing. Although the support of data locality has been heavily investigated on Grid and HPC, only a few approaches apply data locality to Cloud workflows. A survey of these approaches is provided in (Mian et al., 2011). In this section we focus on works dealing with data locality driven workflow scheduling in Multi-Cloud environments.

The authors in (Fard et al., 2013) adopted from game theory a dynamic auction-based scheduling strategy called BOSS to schedule workflows in Multi-Cloud environments. Although their experiments show the effectiveness of their approach in reducing the cost and makespan compared to traditional multi-objective evolutionary algorithms, the support for data locality is completely missing in their work. Szabo et al. (Szabo et al., 2013) implemented a multi-objective evolutionary workflow scheduling algorithm to optimize the task allocation and ordering using the data transfer size and execution time as fitness functions. Their experimental results prove the performance benefits of their approach but not yet the cost effectiveness. Although the authors claim support for Multi-Cloud, in their evaluation they used only Amazon EC2-based² Clouds. Yuan et al. (Yuan et al., 2010) proposed a k-means clustering strategy for data placement of scientific Cloud workflows. Their strategy clusters datasets based on their dependencies and supports reallocation at runtime. Although their simulation results showed the benefits of the k-means algorithm in reducing the number of data movements, their work lacks an evaluation of the execution time and cost effectiveness. In (Pandey et al., 2010) the authors use particle swarm optimization (PSO) techniques to minimize the computation and traffic cost when executing workflows on the Cloud. Their approach is able to reduce execution cost while balancing the load among the datacenters. The authors in (Jin et al., 2011) introduced an efficient data locality driven task scheduling algorithm called BAR (Balance and reduce). The algorithm adjusts data locality dynamically according to the network state and load on datacenters. It is able to reduce the completion time. However, it has been tested only with MapReduce (Dean and Ghemawat, 2008)-based workflow applications.

² http://aws.amazon.com/ec2

The examination of the previously mentioned works shows that the support of data locality in the scheduling improves the performance and minimizes the cost of workflow execution on the Cloud. A more flexible solution, allowing the support of more generic workflow applications, is to implement task scheduling as part of a middleware on top of the Cloud infrastructures. In this way, it would be possible to support more user-defined SLA requirements, like latency and availability, in the task scheduling policies. Since a proper SLA-based resource selection can also have a significant impact on the performance and cost, we investigate in this work the effect of different resource matching policies on the scheduling performance of data-intensive workflows. The cost effectiveness of such matching policies in Multi-Cloud environments has been investigated in (Dastjerdi et al., 2011), but they have not been evaluated with scientific workflows.

In contrast to the previous works, the resource

allocation scheme introduced in this paper allows

the execution of workflows on top of heterogeneous

Clouds by supporting an SLA-based resource match-

making combined with a data locality driven task

scheduling. In addition to a cost evaluation, we study

the impact of the matching and scheduling on data

movement and makespan using a real scientific appli-

cation.

3 BACKGROUND

In order to validate and evaluate our multi-dimensional resource allocation scheme, we use a broker-based workflow framework that we implemented in a previous work (Jrad et al., 2013b) to support the deployment of workflows in Multi-Cloud environments. In this section we briefly describe the main components of the framework.

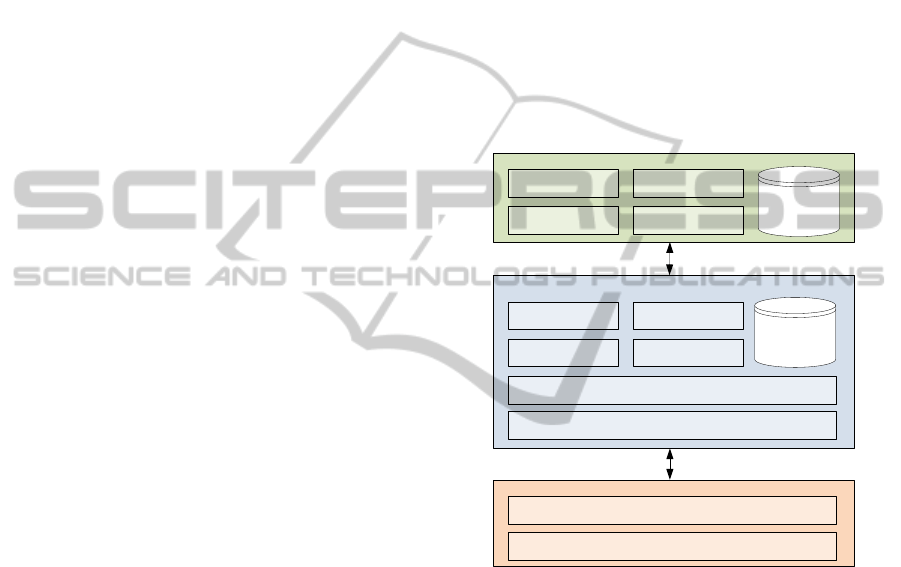

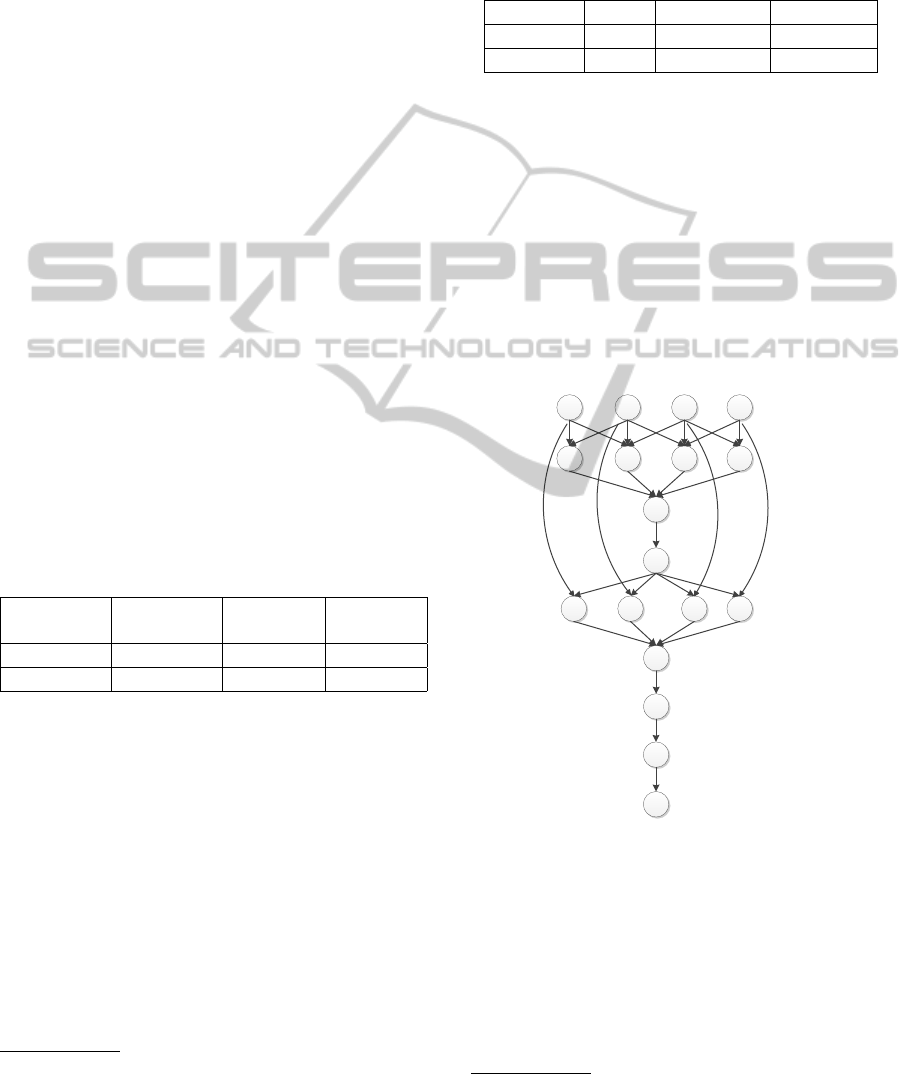

Figure 1 depicts the architecture of the Multi-

Cloud workflow framework developed on top of

CloudSim. The Cloud Service Broker, as shown in

the middle of the architecture, assists users in find-

ing the suitable Cloud services to deploy and execute

their workflow applications. Its main component is

a Match-Maker that performs a matching process to

select the target Clouds for the deployment. A sched-

uler assigns the workflow tasks to the selected Cloud

resources. The architecture includes also a Data Man-

ager to manage the data transfers during the work-

flow execution. The entire communication with the

underlying Cloud providers is realized through stan-

dard interfaces offered by provider hosted Intercloud

Gateways. A Workflow Engine deployed on the client

side delivers the workflow tasks to the Cloud Service

Broker with respect to their execution order and data

flow dependencies.

Figure 1: Multi-Cloud workflow framework architecture. (Labels in the figure: Client, UI, Workflow Parser, Workflow Engine, Clustering Engine, Replica Catalog, Cloud Service Broker, Match Maker, Scheduler, Data Manager, Deployment Manager, Monitoring and Discovery Manager, Service and Provider Repository, Abstract Cloud API, Intercloud Gateway, IaaS Clouds, CloudSim.)

In order to execute workflows using the frame-

work, the Workflow Engine receives in a first step a

workflow description and the SLA requirements from

the user. After parsing the description, the Work-

flow Engine applies different clustering techniques

to reduce the number of workflow tasks. The re-

duced workflow and the user requirements are then

forwarded to the Broker. Next, the Match-Maker selects the Cloud resources that fit the user given requirements by applying different matching policies. After all the requested virtual machines (VMs) and Cloud storage have been deployed on the selected Clouds, the Workflow Engine transfers the input data from the client to the Cloud storage and then starts to release the workflow tasks with respect to their execution order. During execution, the scheduler assigns each task to a target requested VM according to different scheduling policies while the Data Manager manages the Cloud-to-Cloud data transfers. A

Replica Catalog stores the list of data replicas by map-

ping workflow files to their current datacenter loca-

tions. Finally, the execution results are transferred to

the Cloud storage and can be retrieved via the user

interface. The requested VMs and Cloud Storage are

provisioned to the user until the workflow execution

is finished. However, if the same workflow should be

executed many times (e.g. with different input data),

a long-term lease period can be specified by the user.

4 SLA-BASED MULTI-CLOUD MATCHING POLICIES

Figure 2: Multi-dimensional resource allocation scheme. (Labels in the figure: steps (1) to (5); non-functional SLA requirements: min latency, min throughput, min availability; budget; Matching: SLA satisfaction, cost, utility; Scheduling: makespan, data locality, performance; Compute Cloud A (Europe), Storage Cloud B (Europe), Compute Cloud C (US); annotations: VM cost, storage cost, traffic cost, availability, throughput, latency, bandwidth.)

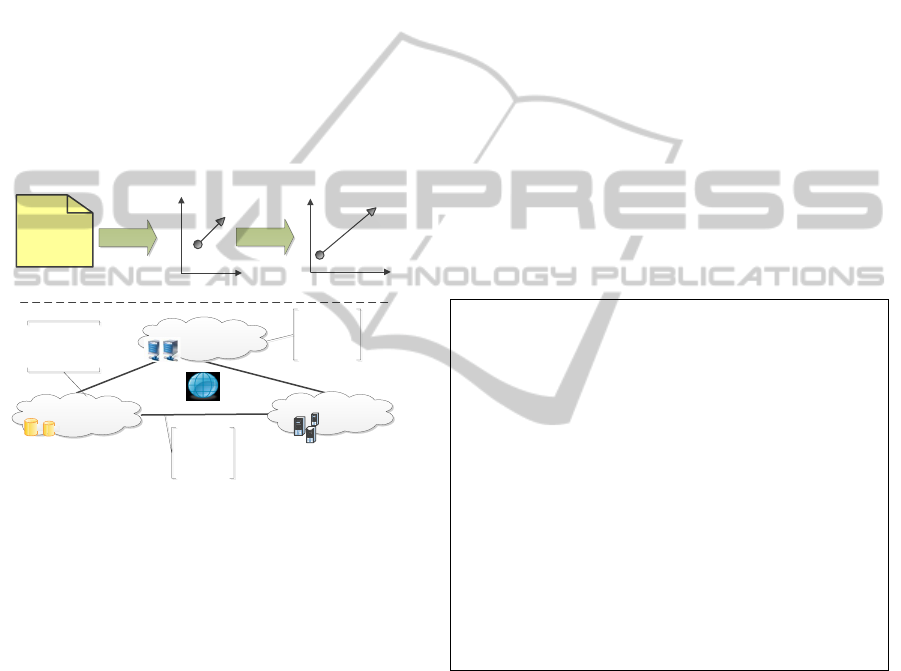

As illustrated in Figure 2, our multi-dimensional resource allocation is performed in five steps. After the user has given his SLA requirements and budget (step 1), a matching process is started (step 2), in which the functional and non-functional SLA requirements are compared to the measured Cloud SLA metrics as well as their service usage costs. The selection of the optimal Clouds (step 3) is then performed using a utility-based matching algorithm with the goal of maximizing the user utility for the provided QoS. In the following step, the application workloads are distributed to the requested compute resources using a scheduling policy (step 4). For this purpose, a data-locality scheduling scheme is used to achieve a minimal data movement and improve the overall application performance (step 5). In the following subsections we describe in detail the utility-based matching algorithm. In addition, we describe another simple matching algorithm, which we used for a comparative study with the utility-based algorithm. The scheduling policies are described in the next section.

4.1 Sieving Matching Algorithm

In the search for efficient matching algorithms, we implemented a simple matching policy called Sieving. Given a service list forming the requested Multi-Cloud service composition and a list of candidate datacenters, the Sieving matching algorithm iterates through the service list and randomly selects for each service a candidate datacenter that satisfies all functional and non-functional SLA requirements. Therefore, for each selected datacenter the measured SLA metrics and its usage price must be within the ranges specified by the user in his SLA requirements and budget, respectively. In addition, the algorithm checks whether the current datacenter capacity load allows the deployment of the requested service type. However, the result set may be empty if none of the Cloud providers fulfills the requested criteria. The following pseudo code describes in detail the functionality of the Sieving algorithm:

Algorithm 1: Sieving matching.

    Input: requiredServiceList, DatacenterList
    For each service S in requiredServiceList Do
        CandidatesList = empty list
        For each datacenter D in DatacenterList Do
            If S in offers(D) and isDeployableIn(D) Then
                If price(D) <= budget(S) &
                   availability(D) >= requiredAvailability(S) &
                   throughput(D) >= requiredThroughput(S) Then
                    add D to CandidatesList
                Endif
            Endif
        Endfor
        If sizeof(CandidatesList) > 0 Then
            Choose random datacenter D from CandidatesList
            CloudCompositionMap.add(S, D)
        Else return null
        Endif
    Endfor
    Output: CloudCompositionMap
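The Sieving procedure can be illustrated with a minimal Python sketch. The `Datacenter` and `Service` record types, their field names and the `sieving_match` function are hypothetical names introduced here for illustration; they are not part of the framework described in the paper.

```python
import random
from dataclasses import dataclass


@dataclass
class Datacenter:          # hypothetical record type, for illustration only
    name: str
    offers: set            # service types offered (functional match)
    price: float
    availability: float    # percent
    throughput: float      # Mbit/s
    has_capacity: bool = True


@dataclass
class Service:             # hypothetical record type, for illustration only
    name: str
    kind: str              # requested service type
    budget: float
    min_availability: float
    min_throughput: float


def sieving_match(services, datacenters, rng=random):
    """Pick a random feasible datacenter per service; None if any service has none."""
    composition = {}
    for s in services:
        # Keep only datacenters passing the functional and non-functional checks.
        candidates = [
            d for d in datacenters
            if s.kind in d.offers and d.has_capacity
            and d.price <= s.budget
            and d.availability >= s.min_availability
            and d.throughput >= s.min_throughput
        ]
        if not candidates:
            return None
        composition[s.name] = rng.choice(candidates)
    return composition
```

Because the choice among feasible candidates is random, two runs with the same input may return different compositions, which is the inflexibility addressed by the utility-based algorithm of Section 4.2.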

4.2 Utility-based Matching Algorithm

A major issue of the Sieving algorithm described above is its lack of flexibility in matching non-functional SLA attributes. Hence, it cannot handle use cases such as "availability is more important than throughput" or "select well qualified providers while keeping the total costs low". In addition, the network connectivity between the Clouds and the traffic costs are ignored in the matching. Therefore, we adopted from the Attribute Auction Theory (Asker and Cantillon, 2008) a new economic utility-based matching algorithm, which takes the payment of customers as the focus. In a previous work (Jrad et al., 2013a), we compared the utility algorithm with Sieving and validated its cost benefits in matching single Cloud services. This work is our first attempt at using the utility algorithm for matching Multi-Cloud service compositions.

The main strategy of the utility algorithm is to maximize the user profit for the requested service quality by using a quasi-linear utility function (Lamparter et al., 2007). The user preferences for the non-functional SLA attributes are modeled by weighted scoring functions, whereas all functional requirements must be fulfilled, as in Sieving. Let $Q$ be the set of $m$ required non-functional SLA attributes $q$ with $q \in Q = \{1, \dots, m\}$. The utility of a customer $i$ for a required service quality $Q$ with a candidate Cloud composition $j$ is computed as follows:

$$U_{ij}(Q) = \alpha_i F_i(Q) - P_j, \tag{1}$$

where $\alpha_i$ represents the maximum willingness to pay of consumer $i$ for an "ideal" service quality, and $F_i(Q)$ the customer's scoring function translating the aggregated service quality attribute levels into a relative fulfillment level of consumer requirements. $P_j$ denotes the total price that has to be paid for using all Cloud services in the Cloud composition $j$ with:

$$P_j = T \cdot C^{j}_{VM} + D_{st} \cdot C^{j}_{st} + D_{tr} \cdot C^{j}_{tr}, \tag{2}$$

where $C^{j}_{VM}$, $C^{j}_{st}$ and $C^{j}_{tr}$ denote respectively the charged compute, storage and traffic cost with composition $j$, whereas $D_{st}$ and $D_{tr}$ denote respectively the amount of data to be stored and transferred. The lease period $T$ is defined as the time period in which the Cloud services are provisioned to the user. The scoring function $F_i(Q)$ is defined as follows:

$$F_i(Q) = \sum_{q=1}^{m} \lambda_i(q) f_i(q) \to [0,1], \tag{3}$$

where $\lambda_i(q)$ and $f_i(q)$ denote respectively the relative assessed weight and the fitting function for consumer $i$ regarding the SLA attribute $q$, where $\sum_{q=1}^{m} \lambda_i(q) = 1$. The fitting function maps each measured SLA attribute, in accordance with the user behavior, to a normalized real value in the interval $[0,1]$, with 1 representing an ideal expected SLA value. An example of three non-linear fitting functions is provided in Section 6. The aggregated SLA values for the Cloud composition are calculated from the SLA values of the component services by applying commonly used aggregation functions as in (Zeng et al., 2003), presented in Table 1.
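Equations (1) to (3) and the aggregation step can be combined into a short Python sketch. All function and parameter names below are hypothetical. Since Table 1 lists the pairwise latency $Lat(S_{ij})$ without an explicit aggregation operator, the sketch assumes that the worst (maximum) pairwise latency represents the composition; this is an assumption made here, not a statement from the paper.

```python
from itertools import combinations
from math import prod


def aggregate_sla(throughputs, availabilities, pair_latency):
    """Aggregate component SLA values in the spirit of Table 1.

    throughputs, availabilities: one value per component service
    (availability given as a fraction in [0, 1]).
    pair_latency: dict mapping an index pair (i, j), i < j, to a latency.
    Assumption: the maximum pairwise latency stands for the composition.
    """
    pairs = combinations(range(len(throughputs)), 2)
    return {
        "th": min(throughputs),
        "av": prod(availabilities),
        "lat": max((pair_latency[p] for p in pairs), default=0.0),
    }


def score(weights, fitting, sla):
    """Eq. (3): weighted sum of fitting values; the weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(w * fitting[q](sla[q]) for q, w in weights.items())


def price(lease, c_vm, d_st, c_st, d_tr, c_tr):
    """Eq. (2): compute, storage and traffic cost of one composition."""
    return lease * c_vm + d_st * c_st + d_tr * c_tr


def utility(alpha, f_score, p):
    """Eq. (1): willingness to pay scaled by the score, minus the price."""
    return alpha * f_score - p
```

A composition with a high score can still yield a low or negative utility when its price approaches the user's maximum willingness to pay, which is how the function trades service quality against cost.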

A candidate Cloud service composition $j$ is optimal if it is feasible and if it leads to the maximum utility value with:

$$U_{i,\mathrm{optimal}}(Q) = \max_{j=1}^{n} U_{ij}(Q), \tag{4}$$

where $n$ is the number of possible Cloud service compositions. Hence, the match-making problem can be formulated as a search for the Cloud composition with the highest utility value for the user. As this kind of multi-attribute selection problem is NP-hard, we apply a single objective genetic algorithm combined with crossover and mutation operations to search for the optimal candidates. To evaluate each candidate we use the utility function in equation 1 as the objective function. Additionally, we use a death penalty function to penalize candidates that do not satisfy the service constraints and to discard Cloud compositions with a negative utility. In order to include the Cloud-to-Cloud latency and the traffic cost in the utility calculation, we model each candidate service composition as a fully connected undirected graph (see Figure 2), where the nodes represent the component services and the edges the network connectivity between them.

Table 1: Aggregated SLA attributes of N component services S.

| SLA attribute | Aggregation function |
|---|---|
| Throughput | $\min_{i=1}^{N} Th(S_i)$ |
| Latency | $Lat(S_{ij})$, $i \in \{1, \dots, N-1\}$, $j \in \{i+1, \dots, N\}$ |
| Availability | $\prod_{i=1}^{N} Av(S_i)$ |
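The selection rule of equation (4) together with the death penalty can be sketched as follows. Note that this sketch swaps the genetic algorithm for a plain exhaustive search, which is only practical for very small candidate sets; the function names and the use of negative infinity as the penalty value are assumptions made for illustration.

```python
from itertools import product

# Assumed penalty value: a penalized candidate can never be selected.
DEATH_PENALTY = float("-inf")


def fitness(composition, utility_fn, is_feasible):
    """Utility of a composition, or the death penalty if it must be discarded."""
    if not is_feasible(composition):
        return DEATH_PENALTY
    u = utility_fn(composition)
    # Compositions with a negative utility are discarded as well.
    return u if u >= 0 else DEATH_PENALTY


def best_composition(candidates_per_service, utility_fn, is_feasible):
    """Exhaustive stand-in for the genetic search: enumerate all n compositions."""
    best, best_u = None, DEATH_PENALTY
    for composition in product(*candidates_per_service):
        u = fitness(composition, utility_fn, is_feasible)
        if u > best_u:
            best, best_u = composition, u
    return best, best_u
```

With 20 compute and 12 storage Clouds and several requested services, the number of compositions grows multiplicatively, which is why a heuristic search such as a genetic algorithm is used instead of enumeration.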

5 DATA LOCALITY DRIVEN WORKFLOW SCHEDULING

To support data locality during the workflow execution, we implemented two dynamic greedy-based scheduling heuristics, which distribute the workflow tasks to all the user requested VMs allocated by the previously described matching policies (see Section 4). In the following subsections we describe in detail the functionality of the implemented policies.

5.1 DAS Scheduler

We implemented a Data-Aware Size-based scheduler (DAS) capable of scheduling tasks to the provisioned VMs running in different Cloud datacenters with respect to the location of the required input data. In a first step, the algorithm iterates through the workflow tasks and calculates for each task the total size of the required input files found in each matched datacenter and stores the result in a map data structure called task data size affinity $T_{sizeaff}$. If we assume that task $T$ has $m$ required input files $\mathit{freq}_i$, $i \in \{1, \dots, m\}$, and there are $k$ matched datacenters, the task affinity of the datacenter $dc_j$, $j \in \{1, \dots, k\}$, is calculated using the following equation:

$$T_{sizeaff}(dc_j) = \sum_{\mathit{freq}_i \in dc_j} size(\mathit{freq}_i), \tag{5}$$

After sorting the task affinity map by the data size values in descending order, the policy assigns the task to the first free provisioned VM running on the datacenter $dc_{cand}$ containing the maximum size of located input files, where:

$$T_{sizeaff}(dc_{cand}) = \max_{j=1}^{k} T_{sizeaff}(dc_j) \tag{6}$$

If all the provisioned VMs in the selected datacenter are busy, the algorithm tries the next candidate datacenters in the sorted map to find a free VM. In this way, load balancing between the datacenters is assured and unnecessary waiting time for free VMs can be avoided. The implemented data-aware scheduling policy is described using the following pseudo-code:

Algorithm 2: DAS/DAT Scheduler.

    Input: requestedVMList, TaskList, schedulingPolicy
    For each task T in TaskList Do
        processAffinity(T)
        If schedulingPolicy = DAS Then
            Taff = sizeAffinityMap
            sort Taff by size in descending order
        Else if schedulingPolicy = DAT Then
            Taff = timeAffinityMap
            sort Taff by time in ascending order
        Endif
        For each entry in Taff Do
            site = entry.getKey()
            For each vm in requestedVMList Do
                If vm.getStatus() = idle
                   & vm.getDatacenter() = site Then
                    schedule T to vm
                    scheduledTaskList.add(T)
                    Break (continue with the next task)
                Endif
            Endfor
        Endfor
    Endfor
    Output: scheduledTaskList

Algorithm 3: Function processAffinity(Task T).

    Input: matchedDatacenterList, Replica catalog R
    For each datacenter D in matchedDatacenterList Do
        time = 0; size = 0
        inputFileList = T.getInputFileList()
        For each file in inputFileList Do
            maxBwth = 0
            siteList = R.get(file)
            If siteList.contains(D) Then
                size = size + file.getSize()
            Else
                For each site in siteList Do
                    If region(site) = region(D) Then
                        bwth = Cloud-to-Cloud intra-continental bandwidth
                    Else
                        bwth = Cloud-to-Cloud inter-continental bandwidth
                    Endif
                    If bwth > maxBwth Then
                        maxBwth = bwth
                    Endif
                Endfor
                time = time + file.getSize() / maxBwth
            Endif
        Endfor
        sizeAffinityMap.add(D, size)
        timeAffinityMap.add(D, time)
    Endfor
    Output: sizeAffinityMap, timeAffinityMap
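The DAS policy (equations 5 and 6 plus the fallback to the next datacenter) can be sketched in Python as follows. The data layout is an assumption made for illustration: input files are a dict from file name to size, the replica catalog maps a file name to the set of datacenters holding it, and each VM is a dict with the hypothetical keys `id`, `datacenter` and `idle`.

```python
def size_affinity(input_files, replica_catalog, datacenters):
    """Eq. (5): total size of a task's input files already stored per datacenter."""
    return {
        dc: sum(size for name, size in input_files.items()
                if dc in replica_catalog.get(name, ()))
        for dc in datacenters
    }


def das_select_vm(input_files, replica_catalog, vms):
    """Return the id of an idle VM in the datacenter with the most local input data.

    Falls back to the next-best datacenter when all VMs of the best one are busy;
    returns None if no VM is idle at all.
    """
    # Datacenters in order of first appearance, so ties are resolved deterministically.
    datacenters = list(dict.fromkeys(vm["datacenter"] for vm in vms))
    affinity = size_affinity(input_files, replica_catalog, datacenters)
    for dc in sorted(datacenters, key=affinity.get, reverse=True):
        for vm in vms:
            if vm["idle"] and vm["datacenter"] == dc:
                return vm["id"]
    return None
```

Returning as soon as a VM is found corresponds to leaving both loops of Algorithm 2 once the task has been scheduled.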

5.2 DAT Scheduler

The second scheduling policy, called Data-Aware Time-based (DAT) scheduler, is very similar to the previously described DAS policy except in the method of calculating the task affinity. Instead of calculating the maximum size of existing input files per datacenter, the algorithm computes the time needed to transfer the missing input files to each datacenter and stores the transfer time values in a map data structure called $T_{timeaff}$, which is calculated using the following equation:

$$T_{timeaff}(dc_j) = \sum_{\mathit{freq}_i \notin dc_j} transfertime(\mathit{freq}_i), \tag{7}$$

As the target datacenter, the algorithm chooses the datacenter that assures the minimum transfer time by sorting the affinity map in ascending order:

$$T_{timeaff}(dc_{cand}) = \min_{j=1}^{k} T_{timeaff}(dc_j) \tag{8}$$

For each workflow task the algorithm (see function processAffinity() above) iterates through the matched datacenters and checks the existence of local input files. For each missing input file, it calculates the time needed to transfer the file from a remote location to that datacenter. For this, it queries the replica catalog and selects a source location that assures the maximum bandwidth and consequently the minimal transfer time to that datacenter.
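The time affinity of equations (7) and (8) can be sketched in Python as follows. The bandwidth constants are the intra-continental and inter-continental values of Table 2; the function name, the data layout (file sizes in Mbit, a `region` dict from datacenter to region) and the assumption that every file has at least one replica are introduced here for illustration.

```python
# Cloud-to-Cloud bandwidth in Mbit/s, taken from Table 2.
BANDWIDTH_MBIT_S = {"intra": 30.0, "inter": 10.0}


def time_affinity(input_files, replica_catalog, datacenters, region):
    """Eq. (7): estimated time (s) to fetch the missing input files per datacenter.

    input_files: dict file name -> size in Mbit.
    replica_catalog: dict file name -> non-empty set of datacenters holding it.
    region: dict datacenter -> region label.
    """
    affinity = {}
    for dc in datacenters:
        total = 0.0
        for name, size_mbit in input_files.items():
            sources = replica_catalog[name]
            if dc in sources:
                continue  # file is already local, no transfer needed
            # Choose the source replica offering the highest bandwidth.
            best_bw = max(
                BANDWIDTH_MBIT_S["intra" if region[s] == region[dc] else "inter"]
                for s in sources
            )
            total += size_mbit / best_bw
        affinity[dc] = total
    return affinity
```

The candidate datacenter of equation (8) is then `min(affinity, key=affinity.get)`; for a 300 Mbit file held in Europe, a European target costs 300 / 30 = 10 s while a US target costs 300 / 10 = 30 s.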

6 EVALUATION

In order to evaluate our proposed multi-dimensional

resource allocation scheme with a real large scale

workflow, we conducted a series of simulation experi-

ments. The simulation setup and results are presented

in the following subsections.


6.1 Simulation Setup

We implemented the matching and scheduling policies presented in Sections 4 and 5 as part of the Multi-Cloud simulation framework. As workflow engine, we use WorkflowSim (Weiwei and Ewa, 2012), a CloudSim-based version of the Pegasus WfMS³. In addition, we use the opt4j (Lukasiewycz et al., 2011) genetic framework to perform the utility-based matching. For all the conducted simulation experiments, we configured 20 compute and 12 storage Clouds located in four world regions (Europe, USA, Asia and Australia). Each compute Cloud is made up of 50 physical hosts, which are equally divided between two different host types with 8 and 16 CPU cores, respectively. In order to make the simulation more realistic, we use real pay-as-you-go prices for computation, storage and network traffic for each modeled Cloud. Traffic inside the same datacenter is free. The real Cloud SLA metric values (average over the last 3 months) for availability and Client-to-Cloud throughput were acquired through CloudHarmony⁴ network tests from the same client host. In order to consider the network traffic and latency in the matching and scheduling, we defined, based on the location of the datacenters, three constant bandwidth and latency values, which are presented in Table 2. The use of synthetic values is justified by the lack of freely accessible Cloud-to-Cloud network metrics from current Cloud benchmarking tools.

Table 2: Defined Cloud-to-Cloud latency and bandwidth values.

| Cloud-to-Cloud transfer | local | intra-continental | inter-continental |
|---|---|---|---|
| Bandwidth | 100 Mbit/s | 30 Mbit/s | 10 Mbit/s |
| Latency | 10 ms | 25 ms | 150 ms |

With the help of the framework, we modeled the following use case for a workflow deployment on Multi-Cloud. A user located in Europe requests 10 VMs of the type small, 10 VMs of the type medium and one storage Cloud to store the workflow input and output data. The configuration of each VM type is reported in Table 3. We assume that all the VMs located in the same datacenter are connected to a shared storage. The Workflow Engine transfers the input data from the Client to the Cloud storage at the start of the execution. The output data is stored in the Cloud storage when the execution is finished. Before each task execution, the Data Manager queries the Replica Catalog and transfers all the missing input files from their source datacenters with the maximal possible bandwidth. We configured the Workflow Engine to release at most 5 tasks to the broker in each scheduling interval (default value used in Pegasus).

³ http://pegasus.isi.edu/

⁴ http://www.cloudharmony.com

Table 3: VM setup; 1 CPU core: 1 GHz Xeon 2007 processor of 1000 MIPS; OS: Linux 64 bits.

| VM Type | Cores | RAM (GB) | Disk (GB) |
|---|---|---|---|
| small | 1 | 1.7 | 75 |
| medium | 2 | 3.75 | 150 |

It is worth mentioning that if the user executes the same workflow multiple times, our implemented data locality scheduling scheme reuses the existing replicated input data in order to save on data transfers and costs. For simplicity, we consider in our evaluation only the first run of the workflow. To collect the simulation results, we repeated each of the experiments ten times from the same host and then computed the average values.

6.2 Montage Workflow Application

Figure 3: A sample 9-level Montage workflow. (Task types shown in the figure: mProjectPP, mDiffFit, mConcatFit, mBgModel, mBackground, mImgTbl, mAdd, mShrink, mJPEG; the nodes are labeled with their level numbers 1 to 9.)

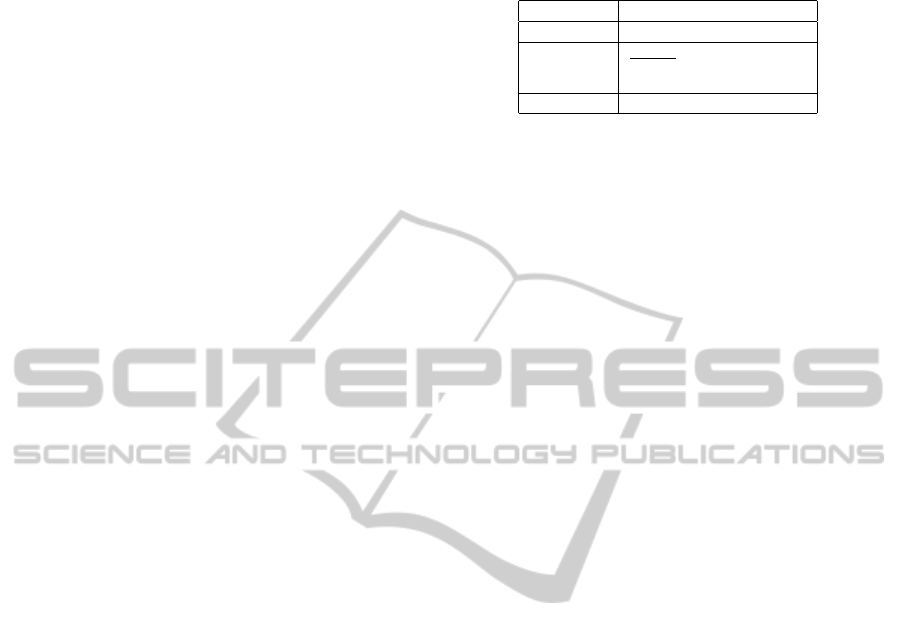

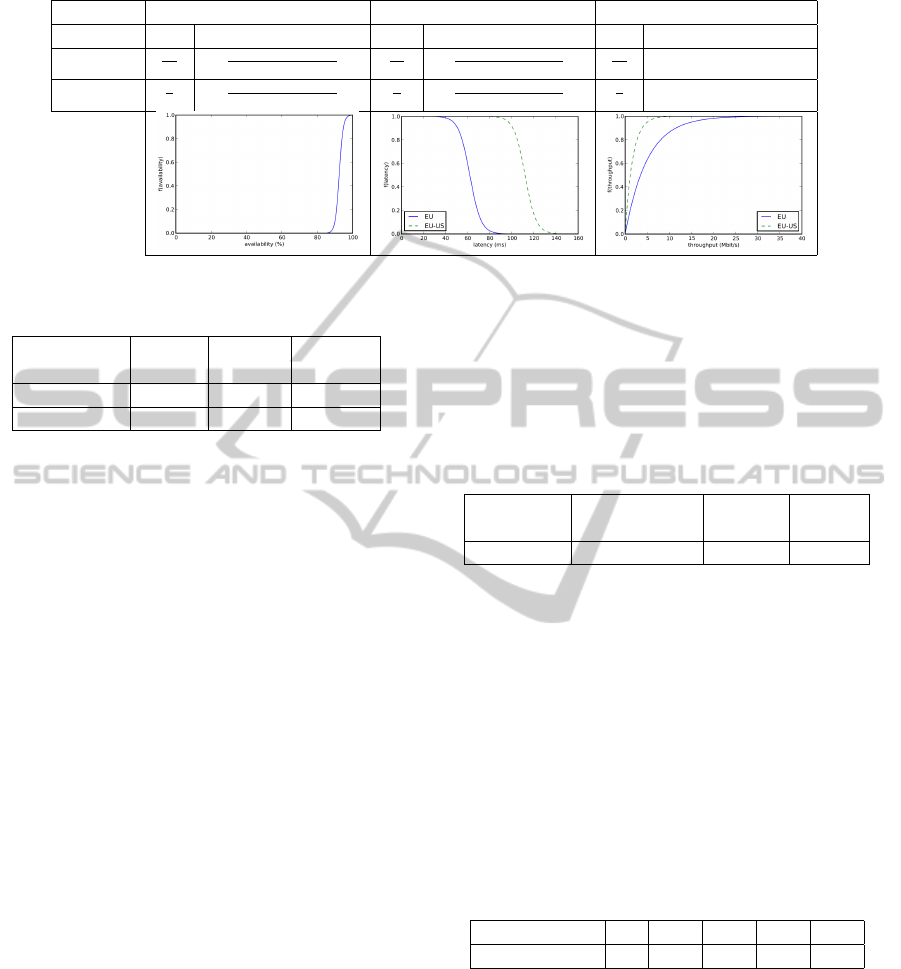

Montage (Berriman et al., 2004) is a data-intensive (over 80% I/O operations) workflow application used to construct large image mosaics of the sky obtained from the 2MASS observatory at IPAC⁵. A sample directed acyclic graph (DAG) of a 9-level Montage workflow is illustrated in Figure 3. The tasks at the same horizontal level execute the same executable code (see right side of the figure) with different input data. For all our conducted experiments, we imported with the help of the WorkflowSim Parser a real XML-formatted Montage trace executed on the FutureGrid testbed using the Pegasus WfMS. The task runtimes and file sizes are imported from separate text files. The imported trace contains 7463 tasks within 11 horizontal levels, has 3 GB of input data and generates about 31 GB of intermediate output data and 84 GB of traffic data.

5 http://www.ipac.caltech.edu/2mass/

Table 4: User non-functional SLA requirements; Availability (av); Latency (lat); Throughput (th).

| Deployment scenario | min av (%) | max lat (ms) | min th (Mbit/s) |
| --- | --- | --- | --- |
| EU | 95 | 50 | 12 |
| EU-US | 95 | 100 | 2 |

Table 5: User preferences expressed using fitting functions f and relative weights λ; γ = 0.0005; β = 1 − γ.

| Scenario | $\lambda_{av}$ | $f(av)$ | $\lambda_{lat}$ | $f(lat)$ | $\lambda_{th}$ | $f(th)$ |
| --- | --- | --- | --- | --- | --- | --- |
| EU | $\frac{3}{10}$ | $\frac{\gamma}{\gamma+\beta e^{-0.9(av-84)}}$ | $\frac{6}{10}$ | $\frac{\gamma}{\gamma+\beta e^{0.2(lat-100)}}$ | $\frac{1}{10}$ | $1-\beta e^{-0.2\,th}$ |
| EU-US | $\frac{2}{5}$ | $\frac{\gamma}{\gamma+\beta e^{-0.9(av-84)}}$ | $\frac{2}{5}$ | $\frac{\gamma}{\gamma+\beta e^{0.2(lat-150)}}$ | $\frac{1}{5}$ | $1-\beta e^{-0.6\,th}$ |

6.3 Simulation Scenarios

For the purpose of evaluation, we modeled two simulation scenarios. In the first scenario, named “EU-deployment”, all the requested VMs and the storage Cloud are deployed in the user's region (Europe). In the second scenario, named “EU-US deployment”, all the 10 VMs of type small are deployed on Clouds located in the US region, while the 10 VMs of type medium and the storage Cloud are located in Europe. The non-functional SLA requirements for both scenarios, given in Table 4, express the user's desired ranges for availability, latency and throughput in order to deploy the workflow with an acceptable quality. These values are consumed by the Sieving matching algorithm described in Section 4 to select the target Clouds for the workflow deployment.
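To illustrate how the thresholds of Table 4 can act as a filter, the following minimal Python sketch keeps only the Clouds that satisfy all three bounds. It assumes that the selection step reduces to such a threshold test; the actual Sieving algorithm is the one described in Section 4. The `Cloud` record, the function name `sieve` and the candidate values are hypothetical and serve only as illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cloud:
    # Hypothetical record of measured SLA metrics for one provider
    name: str
    availability: float  # percent
    latency: float       # ms
    throughput: float    # Mbit/s


# Thresholds from Table 4: (min availability, max latency, min throughput)
SLA = {"EU": (95.0, 50.0, 12.0), "EU-US": (95.0, 100.0, 2.0)}


def sieve(clouds, scenario):
    """Keep the Clouds that meet every SLA bound of the scenario."""
    min_av, max_lat, min_th = SLA[scenario]
    return [
        c for c in clouds
        if c.availability >= min_av
        and c.latency <= max_lat
        and c.throughput >= min_th
    ]


# Hypothetical candidates, not taken from the evaluation
candidates = [
    Cloud("cloud-a", 99.9, 20.0, 15.0),
    Cloud("cloud-b", 99.5, 80.0, 5.0),
    Cloud("cloud-c", 94.0, 10.0, 50.0),
]
eu_matches = [c.name for c in sieve(candidates, "EU")]
eu_us_matches = [c.name for c in sieve(candidates, "EU-US")]
```

With these made-up candidates, the stricter latency and throughput bounds of the EU scenario exclude `cloud-b`, while the availability bound excludes `cloud-c` in both scenarios.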

The fitting functions and the relative weight for each SLA parameter, both required for the utility-based matching, are given in Table 5. As the table shows, for the first scenario the user prefers Clouds with low Cloud-to-Cloud latency values in order to reduce the data transfer time, while for the second scenario availability is weighted equally with latency and the Client-to-Cloud throughput has more significance.
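The fitting functions and weights of Table 5 can be evaluated numerically as sketched below. The sketch assumes that the per-parameter scores are combined as a weighted sum, which is one common form of an additive utility; the exact utility function of the matching policy is the one defined in Section 4. The function and variable names are hypothetical, and the sample metric values are made up.

```python
import math

GAMMA = 0.0005
BETA = 1.0 - GAMMA


def f_av(av):
    # Logistic curve that rises with availability (percent)
    return GAMMA / (GAMMA + BETA * math.exp(-0.9 * (av - 84.0)))


def f_lat(lat, offset):
    # Logistic curve that falls with latency (ms); offset is 100 or 150
    return GAMMA / (GAMMA + BETA * math.exp(0.2 * (lat - offset)))


def f_th(th, rate):
    # Saturating curve that rises with throughput (Mbit/s)
    return 1.0 - BETA * math.exp(-rate * th)


# (weight av, weight lat, weight th, latency offset, throughput rate), Table 5
PREFS = {
    "EU": (0.3, 0.6, 0.1, 100.0, 0.2),
    "EU-US": (0.4, 0.4, 0.2, 150.0, 0.6),
}


def utility(scenario, av, lat, th):
    """Weighted sum of the fitted scores (assumed aggregation)."""
    w_av, w_lat, w_th, offset, rate = PREFS[scenario]
    return w_av * f_av(av) + w_lat * f_lat(lat, offset) + w_th * f_th(th, rate)


# Two hypothetical Clouds that differ only in latency
near = utility("EU", av=99.9, lat=20.0, th=15.0)
far = utility("EU", av=99.9, lat=120.0, th=15.0)
```

Because latency carries the largest weight in the EU scenario, the hypothetical low-latency Cloud scores clearly higher than the one at 120 ms.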

The maximum price the user is willing to pay for each VM type as well as for storage and network traffic is given in Table 6.

Table 6: Maximal payment for VMs, storage and traffic.

| VM small ($/hour) | VM medium ($/hour) | storage ($/GB) | traffic ($/GB) |
| --- | --- | --- | --- |
| 0.09 | 0.18 | 0.1 | 0.12 |

6.4 Impact of Clustering

As mentioned before, we use clustering to reduce the scheduling overhead of large scale workflows on Multi-Cloud. A well suited clustering technique for the Montage workflow structure is horizontal clustering. With this technique, tasks at the same horizontal level are merged together into a predefined number k of clustered jobs. Table 7 shows the resulting total number of clustered jobs for each value of k used.

Table 7: Total number of clustered jobs with different cluster numbers k.

| k | 20 | 40 | 60 | 80 | 100 |
| --- | --- | --- | --- | --- | --- |
| Jobs number | 92 | 152 | 212 | 272 | 332 |
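Horizontal clustering can be sketched as follows, assuming that a level with n tasks yields min(k, n) clustered jobs and that tasks are spread over the jobs in round-robin order. This is an illustrative reading, not the WorkflowSim implementation, and the toy workflow is hypothetical. Under this assumption the values in Table 7 fit 3k + 32, which would be consistent with three levels holding at least 100 tasks each and the remaining levels contributing 32 jobs in total.

```python
from collections import defaultdict


def horizontal_clustering(task_levels, k):
    """Merge the tasks of each level into at most k clustered jobs.

    task_levels maps a task id to its horizontal level.
    Returns a list of jobs, each job being a list of task ids.
    """
    by_level = defaultdict(list)
    for task, level in task_levels.items():
        by_level[level].append(task)

    jobs = []
    for level in sorted(by_level):
        tasks = by_level[level]
        n_jobs = min(k, len(tasks))  # small levels are not split further
        buckets = [[] for _ in range(n_jobs)]
        for i, task in enumerate(tasks):
            buckets[i % n_jobs].append(task)  # round-robin assignment
        jobs.extend(buckets)
    return jobs


# Hypothetical toy workflow: 50 tasks on level 1, 3 on level 2, 1 on level 3
toy = {f"t1_{i}": 1 for i in range(50)}
toy.update({f"t2_{i}": 2 for i in range(3)})
toy["t3_0"] = 3

jobs = horizontal_clustering(toy, k=20)
```

For the toy workflow, only the first level is actually merged; the two small levels keep one job per task.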

In order to evaluate the impact of clustering with respect to an increasing cluster number k, we measured in a first experiment the total time needed to execute a single run of the sample Montage workflow and the total time consumed for data transfer, both in minutes, for the “EU-deployment” scenario. For this experiment, we configured the Cloud Service Broker to use utility-based matching together with DAS as scheduling policy. For an accurate calculation of the execution time, we extracted from the workflow trace the real delay overhead resulting from clustering, post-scripting and queuing. Figure 4 illustrates the results achieved.

Figure 4: Workflow makespan and total data transfer time with utility+DAS EU for different cluster numbers k. (x-axis: k=20, 40, 60, 80, 100; y-axis: time in minutes; series: makespan, data transfer time.)

As depicted in the figure, continually increasing k results in a steady increase of the workflow execution time. This demonstrates that our allocation scheme scales well with an increasing number of clustered workflow jobs. The transfer time is nearly constant, as file transfers are performed with high throughput and low latency because the matched Clouds are close to the user; nevertheless, we observed a slow decrease of the transfer time for small values of k. In the latter case more files are merged in a clustered job, so that the amount of Intercloud transfers is heavily reduced. For all the following experiments, we fixed the cluster number to k=20, as it gives the best results in terms of makespan and consequently execution cost.

6.5 Makespan Evaluation

We repeated the previous experiment with the “EU” and “EU-US” scenarios with the different matching and scheduling policies presented in Sections 4 and 5. For a comparative study, we also executed the workflow using a simple Round Robin scheduler, which schedules tasks to the first free available VMs regardless of their datacenter location, and the well-established “Min-Min” scheduler from the literature (Freund et al., 1998), which prioritizes tasks with minimum runtime and schedules them on the medium VM types. The results with k=20 for all the possible combinations are shown in Figure 5.

It can be seen from the figure that for both scenarios the use of utility-based matching combined with the DAS or DAT scheduler gives a lower execution time than Sieving. This result confirms that the efficiency of the utility matching affects the scheduling performance. For the EU scenario, the utility algorithm tends to deploy all the requested VMs on the cheapest datacenter to save cost and minimize latency. Consequently, the user is charged only for the costs to transfer input/output data from/to the storage Cloud. This explains why the same makespan and Intercloud transfer are obtained with the different scheduling policies. We also observed that our implemented DAS and DAT schedulers outperform Round Robin and MinMin, especially for the EU-US scenario.

Figure 5: Workflow makespan with different scheduling and matching policies for k=20. (x-axis: makespan in minutes; bar labels: utility+All EU, sieving+DAS EU, sieving+DAT EU, sieving+RR EU, utility+DAS EU-US, utility+DAT EU-US, sieving+DAS EU-US, utility+RR EU-US, sieving+DAT EU-US, utility+MinMin EU-US, sieving+RR EU-US; bar values as extracted: 73, 90, 91, 92, 93, 93, 94, 96, 97, 100, 107.)
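The difference between a location-agnostic and a data-aware VM choice can be sketched as follows. The sketch assumes a size-based locality rule, namely picking the free VM whose datacenter already holds the largest amount of the job's input data. DAS and DAT themselves are defined in Section 5, so this is an illustration of the idea rather than the implemented schedulers. All identifiers, file names and sizes are hypothetical.

```python
def round_robin_pick(free_vms):
    """Take the first free VM, regardless of its datacenter location."""
    return free_vms[0]


def locality_pick(free_vms, input_files, replicas):
    """Pick the free VM whose datacenter holds the most input data.

    free_vms: list of (vm_id, datacenter) tuples
    input_files: dict mapping file name to size in MB
    replicas: dict mapping file name to the set of datacenters with a copy
    """
    def local_mb(datacenter):
        return sum(size for name, size in input_files.items()
                   if datacenter in replicas.get(name, set()))

    return max(free_vms, key=lambda vm: local_mb(vm[1]))


def missing_mb(vm, input_files, replicas):
    """Amount of input data that must be transferred to the VM's datacenter."""
    datacenter = vm[1]
    return sum(size for name, size in input_files.items()
               if datacenter not in replicas.get(name, set()))


# Hypothetical job with two input files replicated in different datacenters
free_vms = [("vm-us-1", "us-dc"), ("vm-eu-1", "eu-dc")]
inputs = {"a.fits": 400, "b.fits": 100}
replicas = {"a.fits": {"eu-dc"}, "b.fits": {"us-dc"}}

rr_choice = round_robin_pick(free_vms)
local_choice = locality_pick(free_vms, inputs, replicas)
```

In this toy case the location-agnostic choice has to move 400 MB between datacenters, whereas the data-aware choice moves only 100 MB.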

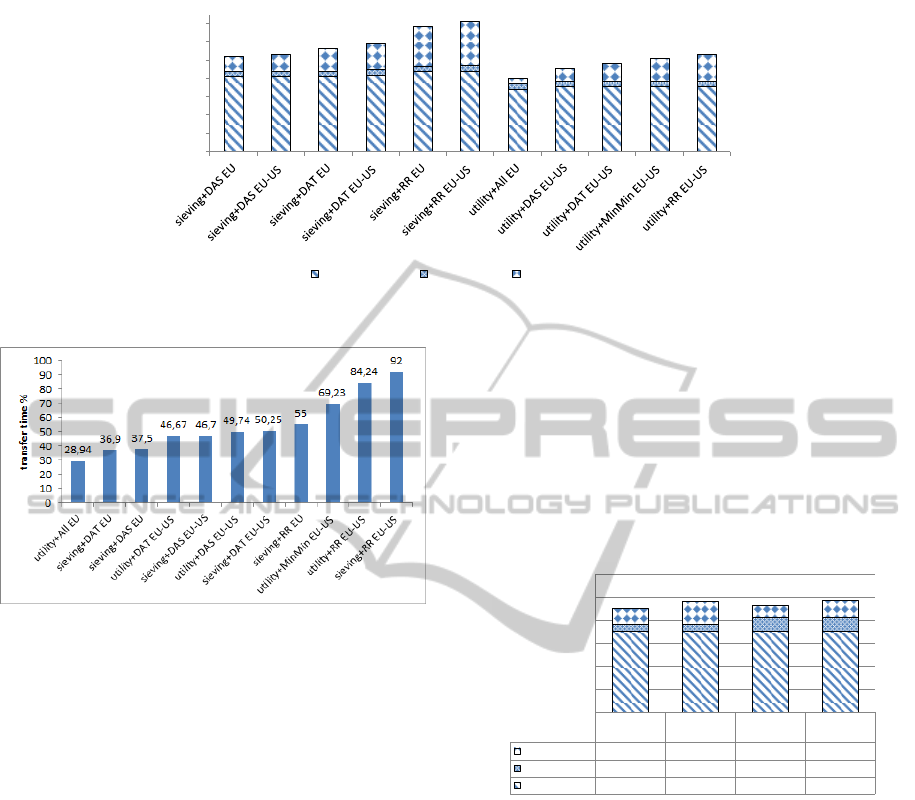

6.6 Intercloud Data Transfer

In order to assess the impact of our multi-dimensional scheme on reducing the Intercloud data transfers, we measured for the previous simulation experiment the size of Intercloud transfers and the ratio of transfer time over the total consumed processing time. The results for different combinations of matching and scheduling policies with k=20 are depicted in Figure 6 and Figure 7, respectively. Note that for the Intercloud transfer time calculation, we use the previously defined “Inter-Continental” and “Intra-Continental” bandwidth values from Table 2.
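As a rough illustration of how the Table 2 values translate into transfer times, the sketch below uses a simple model in which the time for one file is the link latency plus the file size divided by the bandwidth. This model and the conversion of 1 MB to 8 Mbit are assumptions made for the example; the network model of the simulator may differ. The function name is hypothetical.

```python
# (bandwidth in Mbit/s, latency in ms) taken from Table 2
LINKS = {
    "local": (100.0, 10.0),
    "intra-continental": (30.0, 25.0),
    "inter-continental": (10.0, 150.0),
}


def transfer_time_s(size_mb, link):
    """Estimated time in seconds to move size_mb megabytes over a link."""
    bandwidth_mbit_s, latency_ms = LINKS[link]
    # Assumed model: one latency delay plus the serialization time
    return latency_ms / 1000.0 + (size_mb * 8.0) / bandwidth_mbit_s


# Time to move a 100 MB file over each link class
times = {link: transfer_time_s(100.0, link) for link in LINKS}
```

Under this model a 100 MB file takes roughly ten times longer over an inter-continental link than within the same Cloud, since the bandwidth term dominates the latency term for files of this size.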

Figure 6: Total amount of Intercloud transfers with different

scheduling and matching policies for k=20.

It can be seen from Figure 6 that the DAS scheduler keeps the Intercloud transfer size under 10 GB for both scenarios with the utility and Sieving policies. DAT comes next in saving Cloud-to-Cloud data movements, whereas MinMin and Round Robin occupy the last places. The evaluation results for the transfer time ratio also confirm that DAS and DAT are able to reduce the transfer time ratio up to 37% and 50% for the EU and EU-US scenario, respectively. In contrast, the use of Round Robin and MinMin scheduling, regardless of the matching policy, results in very high transfer ratios, in particular for the EU-US scenario, which consequently penalizes the workflow makespan. Therefore, data locality has more impact when Clouds are not close to the user, as in this case latency and throughput have a stronger effect on the transfer time.

Figure 7: Percentage of data transfers with different scheduling and matching policies for k=20.

Figure 8: Total execution cost with Sieving (left) and utility (right) matching for k=20. (y-axis: cost in $; series: computing cost, storage cost, traffic cost.)

6.7 Cost Evaluation

In this subsection, we evaluate the impact of the used matching and scheduling policies on the traffic costs for both simulation scenarios. The previous experimental results show that the use of utility-based matching and data-aware scheduling heavily reduces the amount of Intercloud transfers and consequently the data traffic costs. This result is confirmed in Figure 8, which illustrates the compute, storage and traffic costs for the different use cases. Please note that for the cost calculation the VMs are charged on an hourly basis. Therefore, the makespan of each scenario is rounded up to the next full hour. Also, we do not consider the additional license and VM image costs which can be charged by some Cloud providers. We found that utility-based matching combined with the DAS scheduler yields lower compute and traffic costs than the other use cases. For example, comparing utility and Sieving with DAS, cost savings of up to 25% and 15% can be achieved with the EU and EU-US deployment, respectively.
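The hourly charging rule can be made concrete with a small sketch. It uses the maximal prices of Table 6 as stand-ins for the actual provider prices, which are not listed here, so the resulting figures are upper bounds for illustration only. The function names and the traffic volume in the example are hypothetical.

```python
import math

# Maximal prices from Table 6, used here as stand-ins for provider prices
PRICE_VM_HOUR = {"small": 0.09, "medium": 0.18}  # $/hour
PRICE_STORAGE_GB = 0.10                          # $/GB
PRICE_TRAFFIC_GB = 0.12                          # $/GB


def billed_hours(makespan_min):
    """Round the makespan up to the next full hour."""
    return math.ceil(makespan_min / 60.0)


def total_cost(makespan_min, vm_counts, stored_gb, traffic_gb):
    """Split the execution cost into compute, storage and traffic parts."""
    hours = billed_hours(makespan_min)
    compute = hours * sum(PRICE_VM_HOUR[t] * n for t, n in vm_counts.items())
    storage = stored_gb * PRICE_STORAGE_GB
    traffic = traffic_gb * PRICE_TRAFFIC_GB
    return {
        "compute": compute,
        "storage": storage,
        "traffic": traffic,
        "total": compute + storage + traffic,
    }


# 73-minute run on 10 small and 10 medium VMs; the 10 GB of traffic is made up
example = total_cost(73, {"small": 10, "medium": 10},
                     stored_gb=3.0, traffic_gb=10.0)
```

A 73-minute makespan is billed as two full hours, so shortening a run only reduces the compute cost when it crosses an hour boundary.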

Figure 9: Total execution cost with one and two storage Clouds (ST) for k=20. Plotted values (cost in $):

| Cost | utility+DAS EU-US | utility+DAT EU-US | utility+DAS EU-US (2 ST) | utility+DAT EU-US (2 ST) |
| --- | --- | --- | --- | --- |
| Traffic cost | 0.68 | 0.97 | 0.53 | 0.75 |
| Storage cost | 0.3 | 0.3 | 0.585 | 0.585 |
| Computing cost | 3.54 | 3.54 | 3.54 | 3.54 |

We conducted another experiment by adding a second storage Cloud located in the US region for the EU-US scenario. The gathered cost results are depicted in Figure 9. For the purpose of comparison, we do not consider the time needed to transfer the input files from the Client to the US-located storage Cloud. It can be seen from the figure that the total costs for the use case with two storage Clouds are very close to those of the use case with one storage Cloud, even with the doubled storage cost. This is due to the tendency of the utility algorithm to deploy the US-located VMs and the added storage Cloud on the same provider to save on traffic costs.


7 CONCLUSIONS

This work presented a two-stage multi-dimensional

resource allocation approach for running data-

intensive workflow applications on a Multi-Cloud en-

vironment. In a first phase, a utility-based matching

policy selects the suitable Clouds for users with re-

spect to their SLA requirements and payment willing-

ness. In a second phase, a data locality driven sched-

uler brings the computation to its data to reduce the

Intercloud data transfer.

We evaluated our approach using an implemented simulation environment with a real data-intensive workflow application in different usage scenarios. The experimental results show the benefits of utility-based matching and data locality driven scheduling in reducing the amount of Intercloud transfers and the total execution costs as well as improving the workflow makespan.

In the next step of this research work, we will evaluate our multi-dimensional approach with other real large scale Multi-Cloud applications like MapReduce. In addition, we will automate the collection of the newest SLA metrics from real public Clouds by extending the simulation framework to fetch them from third-party Cloud monitoring services. Also, we will include more accurate network models to make the simulation more realistic. Furthermore, we will investigate the use of adaptive matching and scheduling policies, as in (Oliveira et al., 2012), in order to deal with resource and network failures on Clouds.

REFERENCES

Asker, J. and Cantillon, E. (2008). Properties of scoring

auctions. The RAND Journal of Economics, 39(1):69–

85.

Berriman, G. B., Deelman, E., Good, J. C., Jacob, J. C.,

Katz, D. S., Kesselman, C., Laity, A. C., Prince, T. A.,

Singh, G., and Su, M.-H. (2004). Montage: a grid-

enabled engine for delivering custom science-grade

mosaics on demand. In Quinn, P. J. and Bridger,

A., editors, Society of Photo-Optical Instrumentation

Engineers (SPIE) Conference Series, volume 5493 of

Society of Photo-Optical Instrumentation Engineers

(SPIE) Conference Series, pages 221–232.

Calheiros, R. N., Ranjan, R., Beloglazov, A., Rose, C. A.

F. D., and Buyya, R. (2011). Cloudsim: A toolkit for

modeling and simulation of cloud computing environ-

ments and evaluation of resource provisioning algo-

rithms. Software: Practice and Experience, 41(1):23–

50.

Dastjerdi, A. V., Garg, S. K., and Buyya, R. (2011). QoS-

aware Deployment of Network of Virtual Appliances

Across Multiple Clouds. 2011 IEEE Third Interna-

tional Conference on Cloud Computing Technology

and Science, pages 415–423.

Dean, J. and Ghemawat, S. (2008). Mapreduce: simpli-

fied data processing on large clusters. Commun. ACM,

51(1):107–113.

Deelman, E., Singh, G., Livny, M., Berriman, B., and Good,

J. (2008). The cost of doing science on the cloud:

The montage example. In Proceedings of the 2008

ACM/IEEE Conference on Supercomputing, SC ’08,

pages 50:1–50:12, Piscataway, NJ, USA. IEEE Press.

Fard, H. M., Prodan, R., Barrionuevo, J. J. D., and

Fahringer, T. (2012). A Multi-objective Approach

for Workflow Scheduling in Heterogeneous Environ-

ments. 2012 12th IEEE/ACM International Sympo-

sium on Cluster, Cloud and Grid Computing (ccgrid

2012), pages 300–309.

Fard, H. M., Prodan, R., and Fahringer, T. (2013). A

Truthful Dynamic Workflow Scheduling Mechanism

for Commercial Multicloud Environments. IEEE

Transactions on Parallel and Distributed Systems,

24(6):1203–1212.

Freund, R., Gherrity, M., Ambrosius, S., Campbell, M.,

Halderman, M., Hensgen, D., Keith, E., Kidd, T.,

Kussow, M., Lima, J., Mirabile, F., Moore, L., Rust,

B., and Siegel, H. (1998). Scheduling resources in

multi-user, heterogeneous, computing environments

with smartnet. In Heterogeneous Computing Work-

shop, 1998. (HCW 98) Proceedings. 1998 Seventh,

pages 184–199.

Jin, J., Luo, J., Song, A., Dong, F., and Xiong, R. (2011).

Bar: An efficient data locality driven task scheduling

algorithm for cloud computing. In Cluster, Cloud and

Grid Computing (CCGrid), 2011 11th IEEE/ACM In-

ternational Symposium on, pages 295–304.

Jrad, F., Tao, J., Knapper, R., Flath, C. M., and Streit,

A. (2013a). A utility-based approach for customised

cloud service selection. Int. J. Computational Science

and Engineering, in press.

Jrad, F., Tao, J., and Streit, A. (2013b). A broker-based

framework for multi-cloud workflows. In Proceedings

of the 2013 international workshop on Multi-cloud

applications and federated clouds, MultiCloud ’13,

pages 61–68, New York, NY, USA. ACM.

Lamparter, S., Ankolekar, S., Grimm, S., and Studer, R.

(2007). Preference-based Selection of Highly Config-

urable Web Services. In Proc. of the 16th Int. World

Wide Web Conference (WWW’07), pages 1013–1022,

Banff, Canada.

Lukasiewycz, M., Glaß, M., Reimann, F., and Teich, J.

(2011). Opt4J - A Modular Framework for Meta-

heuristic Optimization. In Proceedings of the Genetic

and Evolutionary Computing Conference (GECCO

2011), pages 1723–1730, Dublin, Ireland.

Mian, R., Martin, P., Brown, A., and Zhang, M. (2011).

Managing data-intensive workloads in a cloud. In

Fiore, S. and Aloisio, G., editors, Grid and Cloud

Database Management, pages 235–258. Springer

Berlin Heidelberg.

Oliveira, D., Ocaña, K. A. C. S., Baião, F., and Mattoso, M. (2012). A Provenance-based Adaptive Scheduling Heuristic for Parallel Scientific Workflows in Clouds. Journal of Grid Computing, 10(3):521–552.

Pandey, S., Wu, L., Guru, S., and Buyya, R. (2010). A par-

ticle swarm optimization-based heuristic for schedul-

ing workflow applications in cloud computing envi-

ronments. In Advanced Information Networking and

Applications (AINA), 2010 24th IEEE International

Conference on, pages 400–407.

Szabo, C., Sheng, Q. Z., Kroeger, T., Zhang, Y., and Yu, J.

(2013). Science in the Cloud: Allocation and Execu-

tion of Data-Intensive Scientific Workflows. Journal

of Grid Computing.

Weiwei, C. and Ewa, D. (2012). Workflowsim: A toolkit for

simulating scientific workflows in distributed environ-

ments. In The 8th IEEE International Conference on

eScience, Chicago. IEEE, IEEE.

Yuan, D., Yang, Y., Liu, X., and Chen, J. (2010). A data

placement strategy in scientific cloud workflows. Fu-

ture Gener. Comput. Syst., 26(8):1200–1214.

Zeng, L., Benatallah, B., Dumas, M., Kalagnanam, J., and

Sheng, Q. Z. (2003). Quality driven web services

composition. In Proceedings of the 12th International

Conference on World Wide Web, WWW ’03, pages

411–421, New York, NY, USA. ACM.

CLOSER 2014 - 4th International Conference on Cloud Computing and Services Science