Finding You on the Internet

An Approach for Finding On-line Presences of People for Fraud Risk Analysis

Henry Been¹ and Maurice van Keulen²

¹ Snelstart Software, Harkebuurt 3, 1794HM Enschede, The Netherlands

² Faculty of EEMCS, University of Twente, POBox 217, 7500AE Enschede, The Netherlands

Keywords:

Fraud Detection, Web Crawling, Twitter, Entity Resolution.

Abstract:

Fraud risk analysis on data from formal information sources, being a ‘paper reality’, suffers from blindness to

false information. Moreover, the very act of providing false information is a strong indicator for fraud. The

technology presented in this paper provides one step towards the vision of harnessing real-world data from

social media and internet for fraud risk analysis. We introduce a novel iterative search, monitor, and match

approach for finding on-line presences of people. A real-world experiment showed that Twitter accounts can

be effectively found given only limited name and address data. We also present an analysis of the ethical

considerations surrounding the application of such technology for fraud risk analysis.

1 INTRODUCTION

In the Netherlands, the governmental organization

Inspectie Sociale Zaken en Werkgelegenheid (ISZW)

(Inspectorate for the Ministry of Social Affairs and

Employment) is responsible for “detection of fraud,

exploitation and organised crime within the chain of

work and income (labour exploitation, human trafficking and large scale fraud in the area of social security)”.¹ The ISZW is facing both budget cuts and demand for more fraud detection.² To accommodate this,

they have been changing their work process to be risk-

driven, which is believed to increase efficiency. This

means that analysts of the ISZW first classify subjects

into categories of low through high risk of fraud. Re-

sources are then distributed accordingly.

Although in theory a sound approach, results in

practice are not as good as the ISZW believes they

can be. One major cause is that the ISZW uses only

formal information sources available within the Min-

istry and other ministries. Especially in fraudulent

cases, people are inclined to provide false informa-

tion, hence the formal information sources can be

considered to reflect a ‘paper reality’. A risk analysis based on this data assesses risk in the paper reality, not in the real world. Hence it will miss concealed fraudulent cases.

¹ http://www.inspectieszw.nl/english

² http://www.rijksoverheid.nl/documenten-en-publicaties/persberichten/2011/03/14/kleinere-en-efficientere-overheid-bij-szw-uwv-en-svb-bespaart-410-miljoen.html

To overcome problems like these, the ISZW is

looking for other sources for predictive characteris-

tics of subjects that it can use to discover fraud. One

successful experiment involved water usage (Inspec-

tie SZW, 2012). It is known that water usage at a

certain address correlates strongly with the number of

persons living at that address. By gathering water us-

age data, discrepancies can be detected between the

number of people registered to be living at an address

and the predicted number of people based on the water usage. Such a discrepancy may indicate, for example, that someone has falsified his/her address to meet the requirements for receiving welfare support.

Unfortunately, water usage can only be used to

predict certain types of fraud, like welfare or unemployment benefit fraud, which involve household characteristics. Other types of fraud, like undeclared capital or income, cannot be related to falsified addresses.

Nevertheless, the experiment showed that involving

‘data from the real world’ allows for indicators for fal-

sification and attempts at concealment, and that such

indicators are strong predictors for high risk of fraud.

One direction the ISZW is exploring is the use of social media. People voluntarily share a lot of information about themselves online. Furthermore, research has shown that, contrary to popular belief, online profiles do not depict who we want to be, but who we are (Back et al., 2010). For example, someone who tweets about bikes he repairs, buys or sells might have undeclared income. Or someone who is posting Facebook updates from all over Europe apparently has sufficient funds for traveling, and hence likely has undeclared capital or income.

Been H. and van Keulen M. Finding You on the Internet - An Approach for Finding On-line Presences of People for Fraud Risk Analysis. DOI: 10.5220/0004985006970706. In Proceedings of the 16th International Conference on Enterprise Information Systems (ISS-2014), pages 697-706. ISBN: 978-989-758-028-4. Copyright © 2014 SCITEPRESS (Science and Technology Publications, Lda.)

Note that possibly incriminating information is

not only disclosed because of carelessness, but also

because many on-line activities unavoidably leave

traces. For example, if someone raises a substan-

tial income by buying and selling items on an on-

line auction website, the website unavoidably con-

tains all data necessary to calculate this income, hence

for checking whether or not that income has been de-

clared and whether or not that income violates a re-

quirement for receiving welfare support.

If data from social media and other websites could

be harnessed, many real-world fraud predictors might

be derived. However, before the ISZW is able to

harness information from social media, they need to

be able to identify which online presences belong

to a certain person. With online presence we mean

any account on some social media or other website,

such as Twitter, Facebook, eBay or some blog. The

ISZW does not have much information in its informa-

tion sources usable for this identification: typically

only name, address, phone number(s), and possibly

an email address.

This paper focuses on this particular problem: Given only limited details about a person, can one automatically determine his/her online presences with sufficient certainty? In essence, this is an entity resolution problem (Brizan and Tansel, 2006) on internet scale. In our experiments, we use a Twitter account as a representative example of an online presence. Two important characteristics of working with internet data make such a task difficult. First, the vast number of Twitter accounts (around 900 million at the time of the experiment) makes a side-by-side comparison of the details of a person with every Twitter account infeasible. Secondly, noise, i.e., nicknames, duplicates, and false and incomplete accounts, inflicts a significant performance penalty.

Contributions. This paper presents (a) a novel iterative search, monitor, and match approach for finding on-line presences of people given only limited name and address data; (b) a real-world experiment with two subject groups of 22 (voluntary sign-up) and 85 subjects (from ISZW), respectively, where our IMatcher prototype gathered candidate accounts for extended periods of time; although our initial attempt at pinpointing the correct account for each subject proved ineffective, we showed that in almost all cases the correct account was among the candidates; and (c) an analysis of the ethical considerations surrounding the application of such technology for fraud risk analysis. In this way, the paper provides one step towards the vision of harnessing real-world data from social media and internet for fraud risk analysis.

Outlook. The rest of this paper is organized as fol-

lows. Section 2 discusses related work. Section 3 de-

scribes the approach on a conceptual level. Section 4

presents some technical aspects of realizing the ap-

proach in practice. Section 5 describes the performed

experiments, which are discussed in Section 6. Sec-

tion 7 discusses some of the ethical concerns sur-

rounding both the experiments and the application of

this approach for fraud detection. Finally Section 8

concludes the paper.

2 RELATED WORK

The task of finding a person’s Twitter account can

be seen as an entity resolution problem (Brizan and

Tansel, 2006). Many approaches from this field can-

not be applied, however, because one of the sources

is not directly accessible: the database behind twit-

ter.com. The task can also be described as an entity

extraction and disambiguation problem. Often, ap-

proaches in this field use a knowledge base, such as

Aida (Yosef et al., 2011) which uses Yago (Suchanek

et al., 2007). These are also not applicable here, be-

cause the sought entities are not famous, hence do not

appear in any knowledge base. An open world ap-

proach such as (Habib and van Keulen, 2013) comes

closer, but as opposed to this work, in the end we are

not interested in any homepage for the entity, but in

a specific one: the person’s Twitter account. Another

difference of this work with many others, is that our

approach is incremental: new candidates and/or at-

tributes are discovered and evaluated continuously.

Linking social media profiles is a related problem.

Veldman (Veldman, 2009) investigated the value of

the connection network for linking the Dutch social

network site Hyves with LinkedIn. Narayanan and

Shmatikov (Narayanan and Shmatikov, 2009) used

a known, labeled network surrounding a user to de-

anonymize an anonymous network surrounding the

same user. Both confirm that the network alone is

not discriminative enough, but including the network

in an attribute-based matching improves performance.

Nemo (Jain and Kumaraguru, 2012) uses three di-

mensions: profile details, user generated content, and

connection network. Starting with a social media pro-

file provides more information for search than in our

application. Therefore, one clue they found most effective cannot be exploited: self-mentioning, where someone tweets a reference to his/her Facebook page.

Other approaches towards using online profiles

to gather data about individuals have surfaced. An

example is RIOT.³ This system finds people online, tracks their past activities and makes predictions

about future movements. RIOT is semi-automatic in

its disambiguation.

Other forms of input have been investigated. (Perito et al., 2011) use usernames from Google and eBay services to find accounts belonging to the same user.

Face recognition can also be used as input (Minder

and Bernstein, 2011). Although in itself it does not

perform better than text-based approaches, they do

show that combining both dimensions increases ac-

curacy.

Note that many approaches mentioned here work

with pre-fetched databases, not directly on internet-

scale data as we did.

3 CONCEPTUAL APPROACH

When looking for something, humans often take a

longlist/shortlist approach: using a set of heuristic

queries they quickly gather a set of possible answers

to their search: the longlist. Each possible answer un-

dergoes a brief examination to select the few answers

that (s)he deems most likely to be correct: the short-

list. The answers on the shortlist undergo a more thor-

ough examination for determining the final answer.

To automatically find online presences of a per-

son, we take a similar, but automated approach. First,

a set of possibly matching online presences are gath-

ered. Together they form the longlist. The challenge

is to ensure that the longlist includes the correct ones

while at the same time keeping the length of the list

within practical bounds. Every entry of the longlist

is examined to gather some characteristics about it.

Comparing these characteristics with the subject of

the initial search yields a similarity score. Select-

ing only the entries with the highest similarity scores

yields the shortlist, a list of likely correct results.

We deviate from the human longlist/shortlist ap-

proach in two ways:

• Besides a shortlist of likely correct results, also a

list of highly likely incorrect results is determined

(the exclusion list).

• The process is iterative: the longlist, shortlist and

exclusion list are updated and improved at each

iteration with the aim that the shortlist converges

to the correct result.

³ http://www.guardian.co.uk/world/2013/feb/10/software-tracks-social-media-defence

3.1 Formalization of Approach

We assume that a set of persons P is given. For each

person p ∈ P, data on a number of attributes a ∈ A is

also given, denoted as p.a. In our experiments, A =

{firstname, lastname, address, email, telephone}.

Let $T$ be the set of all Twitter accounts. The goal of our approach is to identify, for all $p \in P$, the Twitter account of this person, denoted as $\tilde{t}_p$.

Note that it is infeasible to iterate over all accounts $t \in T$ as there were around 900 million at the time of the experiment. Therefore, we follow a three-step strategy. Executing the three steps once is called an iteration, denoted with $I$. A set of consecutive iterations is called a run, denoted with $r = \{I_1, \ldots, I_N\}$. Each subsequent iteration $I_n$ produces, for each $p \in P$, an improved candidate set or longlist $C^n_p$ of accounts possibly belonging to $p$ and an exclusion set $E^n_p$ of accounts that have been considered but found, with sufficient certainty, not to belong to $p$.

Step 1. The aim of the first step is to construct a longlist of candidates while ensuring that it includes the correct one if there is one. The first step starts with a search for possibly matching Twitter accounts using a number of queries $Q$. Each query $q \in Q$ results in a set of Twitter accounts $q(p) = T'$.

Queries we used in our experiments were, for ex-

ample, Google queries like ‘p.firstname p.lastname

twitter’ from which we extracted Twitter accounts

from the top-8 (later top-20) results, or spatial queries

on Twitter producing all tweets sent within a 200 me-

ter radius around the coordinates of p.address from

which we extracted the accounts of the users who sent

them.

The union of all query results is the candidate set of that iteration: $c^n_p = \bigcup_{q \in Q} q(p)$. The found candidates are accumulated. Let $C^n_p = (C^{n-1}_p \cup c^n_p) \setminus E^{n-1}_p$ be the set of candidates after iteration $I_n$. Obviously, the start situation is $C^0_p = \emptyset$ and $E^0_p = \emptyset$.

The set of queries is constructed in such a way that they achieve a high likelihood that the correct result is in the candidate set, i.e., $0 \ll P(\tilde{t}_p \in c^n_p) \le 1$, even though the queries can only produce a very limited result set, $|q(p)| \ll |T|$. Sections 4 and 5.1 provide more details on the exact construction of the queries.
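The accumulation rule of Step 1 can be illustrated with a minimal sketch. It assumes that each query is a plain function from a person record to a set of account names; the function `run_iteration`, the toy queries, and all account names are hypothetical and only stand in for the Google and spatial searches described above.

```python
from typing import Callable, Dict, Iterable, Set

# Hypothetical query type: maps person data to a set of account names.
Query = Callable[[Dict[str, str]], Set[str]]


def run_iteration(person: Dict[str, str],
                  queries: Iterable[Query],
                  prev_candidates: Set[str],
                  prev_excluded: Set[str]) -> Set[str]:
    """Step 1 update: previous candidates plus new finds, minus exclusions."""
    found: Set[str] = set()
    for query in queries:
        found |= query(person)  # union of all query results of this iteration
    return (prev_candidates | found) - prev_excluded


# Toy queries with made-up results (assumptions for illustration only).
def by_name(person: Dict[str, str]) -> Set[str]:
    return {"jansen_j", "jjansen"}


def by_location(person: Dict[str, str]) -> Set[str]:
    return {"jjansen", "neighbour42"}


person = {"firstname": "Jan", "lastname": "Jansen"}
c1 = run_iteration(person, [by_name, by_location], set(), set())
# In the second iteration, "neighbour42" is assumed to have been excluded.
c2 = run_iteration(person, [by_name, by_location], c1, {"neighbour42"})
```

Because excluded accounts are subtracted after the union, an account that a query keeps returning does not re-enter the longlist once it has been excluded.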

Step 2. The goal of the second step is to gather more

details about the candidates on the longlist, including

by assumption, about the person who owns the ac-

count. Then, for each candidate of a person, we de-

termine how similar it is to the data we have on that

person.


We use a set of similarity functions $S$. Each $s \in S$ determines a similarity score $s(p, t) \in [0, 1]$ based on certain criteria. The overall similarity for all $p \in P$ and for all $t \in C^n_p$ is computed as $os(p, t) = \frac{1}{W} \sum_{s \in S} w_s \, s(p, t)$, where $w_s$ is the weight of similarity function $s$ and $W = \sum_{s \in S} w_s$.
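As a minimal sketch of the weighted overall similarity of Step 2: the two similarity functions and the weights below are hypothetical examples, not the criteria or weights used by IMatcher.

```python
from typing import Callable, Dict

# Hypothetical similarity type: (person, account) -> score in [0, 1].
Similarity = Callable[[dict, dict], float]


def overall_similarity(person: dict, account: dict,
                       functions: Dict[str, Similarity],
                       weights: Dict[str, float]) -> float:
    """Weighted average of the individual similarity scores."""
    total_weight = sum(weights[name] for name in functions)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted = sum(weights[name] * fn(person, account)
                   for name, fn in functions.items())
    return weighted / total_weight


def name_similarity(person: dict, account: dict) -> float:
    # Toy criterion: does the lastname occur in the display name?
    hit = person["lastname"].lower() in account["display_name"].lower()
    return 1.0 if hit else 0.0


def email_similarity(person: dict, account: dict) -> float:
    # Toy criterion: was the known email address seen for this account?
    return 1.0 if person.get("email") in account.get("emails", []) else 0.0


person = {"lastname": "Jansen", "email": "jan@example.org"}
account = {"display_name": "Jan Jansen", "emails": []}
score = overall_similarity(
    person, account,
    functions={"name": name_similarity, "email": email_similarity},
    weights={"name": 2.0, "email": 1.0},
)
```

Dividing by the sum of the weights keeps the overall score in [0, 1] whatever weights are chosen, so the thresholds of Step 3 stay comparable when a similarity function is added or re-weighted.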

Step 3. Along with (hopefully) the correct result $\tilde{t}_p$, there are obviously many incorrect results in $C^n_p$, called false positives: $F^n_p = C^n_p \setminus \{\tilde{t}_p\}$.

The goal of the third step is to mark those accounts as false positives for which sufficient certainty has been obtained that they do not belong to $p$, i.e., to decide which accounts to add to the exclusion set. Three thresholds are in play here:

• Let $\beta$ be the number of iterations during which no accounts are excluded.

• Let $\alpha_1$ be the similarity score below which accounts are excluded.

• Let $\alpha_2$ be the similarity score above which accounts are considered plausible.

Therefore,

$$E^n_p = \begin{cases} \emptyset & \text{if } n \le \beta \\ \{t \in C^n_p \mid os(p, t) \le \alpha_1\} & \text{otherwise} \end{cases}$$

From the remaining candidates, we determine a set of most plausible candidates or shortlist $\tilde{C}^n_p = \{t \in C^n_p \mid os(p, t) \ge \alpha_2\}$.
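The thresholding of Step 3 can be sketched as follows. The function name `classify` and the threshold values are assumptions chosen for illustration; the overall similarity scores are taken as given.

```python
from typing import Dict, Set, Tuple


def classify(scores: Dict[str, float], n: int, beta: int,
             alpha1: float, alpha2: float) -> Tuple[Set[str], Set[str]]:
    """Return (exclusion set, shortlist) for one person in iteration n.

    scores maps each candidate account to its overall similarity.
    """
    if n <= beta:
        excluded: Set[str] = set()  # grace period: nothing is excluded yet
    else:
        excluded = {t for t, s in scores.items() if s <= alpha1}
    # The shortlist is drawn from the candidates that remain.
    shortlist = {t for t, s in scores.items()
                 if s >= alpha2 and t not in excluded}
    return excluded, shortlist


scores = {"acct_a": 0.1, "acct_b": 0.5, "acct_c": 0.9}
early = classify(scores, n=1, beta=2, alpha1=0.2, alpha2=0.8)
later = classify(scores, n=3, beta=2, alpha1=0.2, alpha2=0.8)
```

Accounts scoring between the two thresholds, such as `acct_b` here, stay on the longlist without being shortlisted, which gives later iterations the chance to gather more evidence about them.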

Convergence. The expectation is that with a growing number of iterations, the steps converge to the following two desirable situations: $\tilde{C}^n_p = \{\tilde{t}_p\}$ in case $p$ has a Twitter account in reality, or $\tilde{C}^n_p = \emptyset$ in case (s)he hasn’t. There are two important reasons that strengthen this expectation.

• Query results may sometimes include $\tilde{t}_p$, but often they will not. Repeating the queries over time increases the likelihood that $\tilde{t}_p$ is encountered eventually. A person needs only once to write a tweet sufficiently close to the known address, or one sufficiently popular to appear in the top-20 Google results, for the system to pick it up.

• By accumulating the data gathered in step 2, the similarity functions have more data to go on, hence can be expected to gain in accuracy.

For these two reasons, it is expected that the correct

Twitter account will ‘surface’ eventually.
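The first reason can be made concrete with a small worked calculation. As a simplifying assumption that the approach itself does not make, suppose that each iteration independently retrieves the correct account with a fixed probability $q$ (a hypothetical symbol), and ignore exclusions. The probability that the account has been retrieved at least once after $n$ iterations is then:

```latex
P\bigl(\tilde{t}_p \text{ retrieved within } n \text{ iterations}\bigr) \;=\; 1 - (1 - q)^n
% Example with q = 0.2:
%   n = 5  gives 1 - 0.8^{5}  \approx 0.67
%   n = 15 gives 1 - 0.8^{15} \approx 0.96
```

In practice, query results of consecutive iterations are unlikely to be independent, so this should be read as an optimistic illustration of the intuition rather than as a prediction.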

Note that for our application, it is not abso-

lutely necessary that the desirable situation is actually

achieved. A shortlist with several possible candidates

is usable as input to the risk analysis process. The lat-

ter is statistical in nature, hence it is straightforward to

deal with more than one candidate as long as each has

an adequate probability estimate (van Keulen, 2012).

Persons

On-line

presences

Matches

Input

Results

Finder

Attributes

Extractor

Crawler

pipelines

Matcher

pipeline

Internet

IMatcher

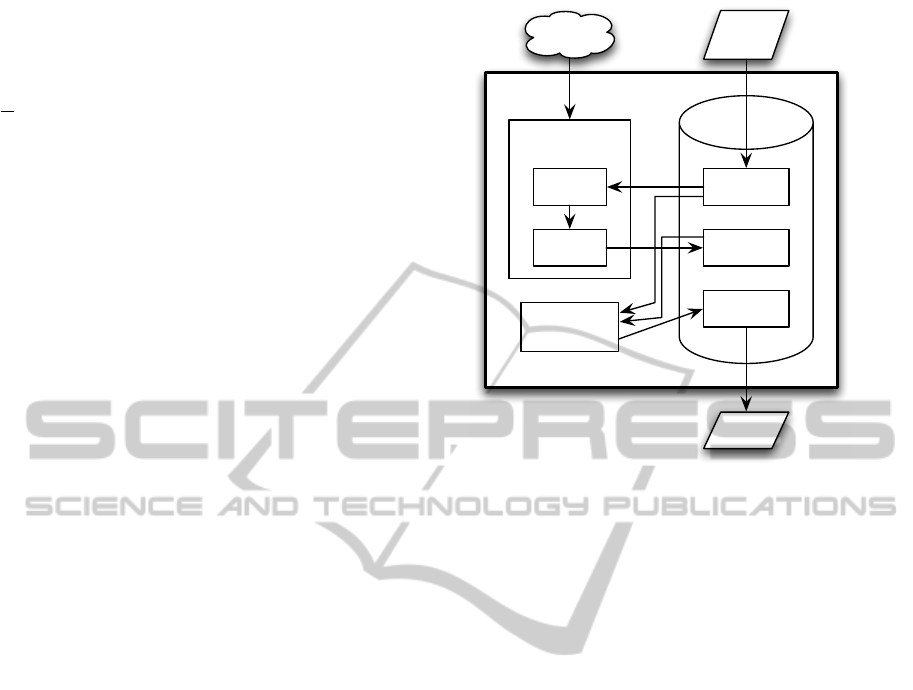

Figure 1: Overview of the IMatcher system.

4 TECHNICAL ASPECTS

Overview Architecture. The system implement-

ing the conceptual approach of Section 3 is called

IMatcher. Figure 1 presents the architectural

overview. The core of IMatcher is an XML database

storing all data: input data on the persons to be found

as well as data on the candidate Twitter accounts in-

cluding user profile data, photo, collected tweets, and

any email addresses and phone numbers found in the

tweets.

The two other main components are the crawler

and the matcher. The crawler is responsible for all

Internet access: executing the queries, retrieving resulting web pages, and extracting attributes and accounts from those pages, as well as Twitter access: for all found accounts, retrieving profile data, collecting all tweets, and extracting information from the tweet texts.

The matcher is responsible for a multi-criteria com-

parison between the input person data with the data

collected on the found accounts. Both components

use a pipeline architecture (also known as pipe-and-

filter) (Hofmann et al., 1996) for easy scheduling and

monitoring.

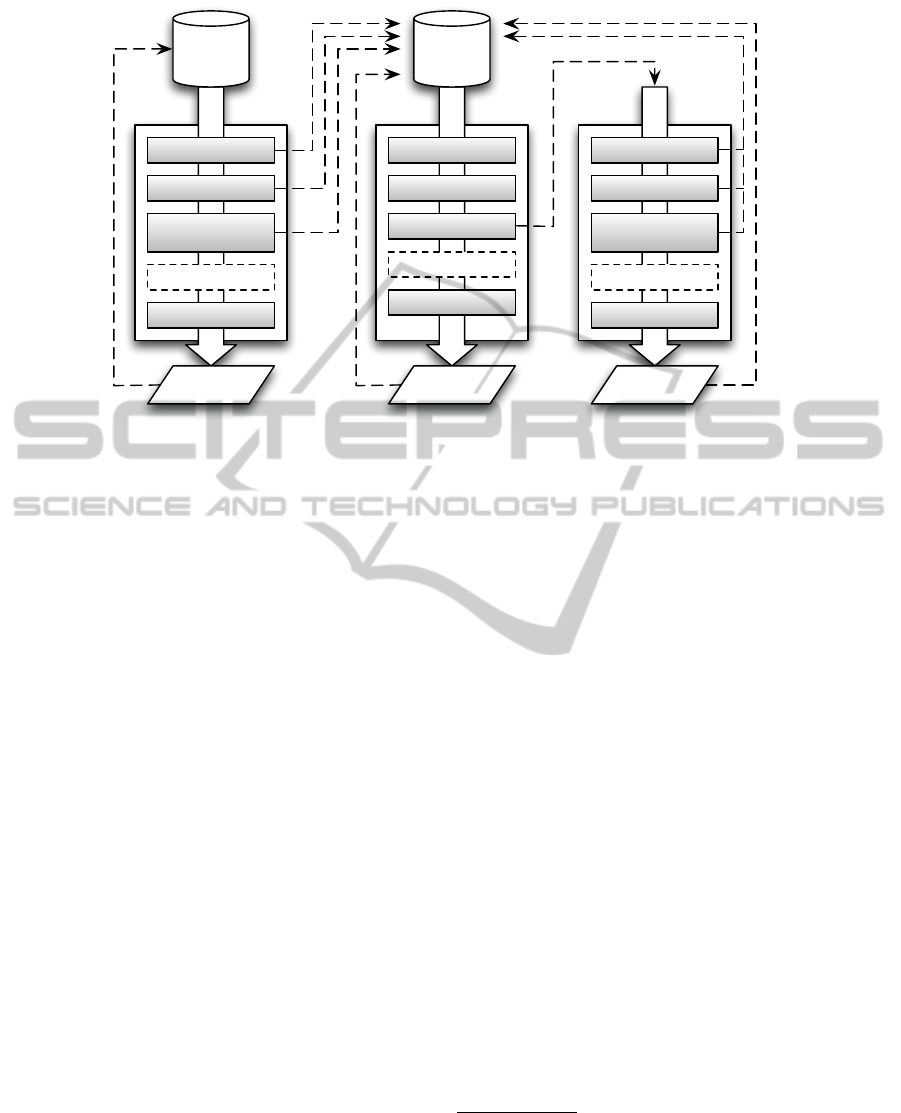

Crawler Pipelines. The pipelines for the crawler

are presented in Figure 2.

The inflow of the first pipeline consists of the persons. The first few sinks execute the queries in an attempt to find Twitter accounts. Thus found accounts provide the inflow for the second pipeline. To complete the inflow, the ‘KnownTwitterEnumerator’ also sends the candidate accounts from previous iterations. Any newly found accounts are stored with the person by the ‘PersonUpdater’.

Figure 2: The IMatcher crawler pipelines. Diagram labels: Person Pipeline (Persons, ByNameFinder, ByLocationFinder, KnownAccountEnumerator, other, PersonUpdater, Person data); Account Pipeline (Twitter Accounts, ProfileExtractor, PhotoExtractor, MsgExtractor, AccountPersister, other, Account data); Message Pipeline (EmailExtractor, PhoneExtractor, LanguageExtractor, other, MsgPersister, Message data); attributes.

The second pipeline is responsible for extracting

information about the accounts. Profile information

and a photo are extracted at each iteration to ensure

that changes are caught. The pipeline also contains

a ‘TwitterAccountPruner’ (not shown) that filters ac-

counts in the exclusion list thereby avoiding that they

get processed. Furthermore, from the moment an ac-

count becomes a candidate for some person, its tweets

are collected.

Those tweets are the inflow for the third pipeline

responsible for extracting information from the

tweets, such as the language, email addresses, phone

numbers, etc. This information is added to the stored

account data as it provides valuable clues for match-

ing.
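A message pipeline of this kind can be sketched as a chain of small stages that each enrich a message record. The sketch below is only an illustration: the stage names mirror Figure 2, but the record layout and both regular expressions (a simple email pattern and a Dutch-style phone pattern) are assumptions, not IMatcher's actual extractors.

```python
import re
from typing import Callable, Dict, Iterable, Iterator, List

Message = Dict[str, object]
Stage = Callable[[Message], Message]

# Illustrative patterns only (assumptions, not taken from IMatcher).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"(?:\+31|0)[1-9](?:[ -]?\d){8}")


def email_extractor(msg: Message) -> Message:
    """Add all email addresses found in the tweet text."""
    return {**msg, "emails": EMAIL_RE.findall(str(msg["text"]))}


def phone_extractor(msg: Message) -> Message:
    """Add all phone numbers found in the tweet text."""
    return {**msg, "phones": PHONE_RE.findall(str(msg["text"]))}


def run_pipeline(messages: Iterable[Message],
                 stages: List[Stage]) -> Iterator[Message]:
    """Pass every message through all stages in order (pipe-and-filter)."""
    for msg in messages:
        for stage in stages:
            msg = stage(msg)
        yield msg


tweets = [{"text": "Bike for sale, mail jan@example.org or call 06-12345678"}]
enriched = list(run_pipeline(tweets, [email_extractor, phone_extractor]))
```

Since every stage has the same signature, a further extractor (for example one for language) can be added to the list without touching the others.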

Note that although only Twitter-related sinks are

shown, the architecture is extensible with more sinks

and pipelines exploring other data sources, such as

Facebook or eBay.

Matcher Pipeline. The matcher pipeline is rather

straightforward. Its inflow consists of all combina-

tions of a person and one of its candidate accounts.

Each sink computes a different similarity score based

on some specific criterion. A final sink aggregates the

individual scores to one overall score. All scores are

stored.

Input Data. The IMatcher requires that for each

person a unique number, e.g., the social security number, is provided. This number is not used in

searching or matching and only serves to uniquely

identify the person. Furthermore, the following can

be optionally provided for better search and match-

ing:

• Firstname(s)

• Lastname

• ‘Tussenvoegsel’ (if any)⁴

• Any addresses known for the person, such as

home and work address. If unknown or not known

precisely, the information can be provided on

street, city, or country level.

• Any phone numbers known for the person.

• Any email addresses known for the person.

• Any aliases known for the person, such as nick-

names, often used online pseudonyms or avatar

names in online games.

Request Limits and Proxies. Many on-line ser-

vices impose strict limits on the usage of their

APIs. IMatcher uses Google search and the Twitter

API. Twitter limits the number of requests per hour.

Google search not only has a limit on the number of requests, but also requires that requests are not sent in quick succession in an attempt to only allow search by humans.

⁴ A ‘tussenvoegsel’ is a typical Dutch phenomenon. It is a prefix in the lastname. For example, in “Jan van der Sloot”, “Jan” is the first name, “van der Sloot” the lastname and “van der” the ‘tussenvoegsel’. The name would be alphabetically ordered under ‘S’ of “Sloot”. In other countries, the ‘tussenvoegsels’ are often contracted, e.g., “Jan vanderSloot”.

In the envisioned production setting, IMatcher is expected to run within the Dutch iRN infrastructure,⁵ which is already available to the Dutch national police for research and investigation of the internet in a safe and forensically secure way. Since iRN already provides mechanisms for dealing with request limits, we chose to use a simple intermediary solution based on proxies for carrying out the experiments. A proxy is a service that forwards requests, hence mimicking a different origin of the request with its own limit. In the implementation, we re-used code from the “Neogeo” project,⁶ which is a research prototype from the University of Twente that allows for (almost) automatic crawling. Its automated crawling and information extraction functionality is not used; only its lower-level functionality for handling proxies and requesting, parsing and storing web pages.

For more details on IMatcher, we refer to (Been,

2013).

5 EXPERIMENTS

We identify four goals in our experiments investigat-

ing how certain factors would influence the resulting

longlist. After these experiments, the gathered data

was analyzed to see how well it could be used to pin-

point the correct account or at least identify a shortlist

of most likely matches.

The first goal is to establish a relation between the number of iterations $n$ and the average setsize $L_n = \frac{1}{|P|} \sum_{p \in P} |C^n_p|$. This investigates the feasibility of the chosen approach. The average setsize was expected to increase with each iteration, but also to stabilize and, over time, even decrease with a growing exclusion list $E^n_p$.

The second goal is to investigate the influence of the input attributes $A$ on the inclusion of correct accounts $I_n = \left|\{t \in C^n_p \mid \exists p \in P : t = \tilde{t}_p\}\right|$. It was expected that not using all the input attributes available, but leaving out, for example, the lastname, would negatively influence inclusion. Reversing this result would show how inclusion would benefit from adding a specific input parameter.
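Both metrics are straightforward to compute from a snapshot of the candidate sets. The sketch below assumes, hypothetically, that a snapshot is a dictionary from person identifiers to candidate account names and that the ground truth is a dictionary from person identifiers to their known account.

```python
from typing import Dict, Set


def average_setsize(candidates: Dict[str, Set[str]]) -> float:
    """Mean longlist length over all persons in the snapshot."""
    return sum(len(c) for c in candidates.values()) / len(candidates)


def inclusion(candidates: Dict[str, Set[str]],
              ground_truth: Dict[str, str]) -> int:
    """Number of persons whose known account is in their own candidate set."""
    return sum(1 for person, account in ground_truth.items()
               if account in candidates.get(person, set()))


# Made-up snapshot: p3 has no candidates and no known account.
snapshot = {"p1": {"acct_a", "acct_b"}, "p2": {"acct_c"}, "p3": set()}
truth = {"p1": "acct_a", "p2": "acct_x"}

setsize = average_setsize(snapshot)
included = inclusion(snapshot, truth)
```

Note that this sketch counts a correct account only when it appears in the candidate set of the person it belongs to; an account that surfaces for a different person is not counted.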

The third goal is to investigate the relation between the number of iterations $n$ and inclusion $I_n$. It was expected that inclusion, i.e., the number of discovered correct accounts, would rise during the first few iterations, and then stabilize at some number with only an incidental increment.

⁵ http://columbo.nl

⁶ https://github.com/utwente-db/neogeo

Finally, the fourth goal is to study the influence of broader search criteria on the setsize $L_n$. Broader search queries produce longer longlists, but are also expected to influence inclusion favorably.

5.1 Experimental Setup

Subject Selection. Two different datasets were

available for experimentation. The first dataset, called

‘sign-up’, consisted of 22 subjects, 16 male and 6 fe-

male. All subjects were self-selected and signed up

under full informed consent. It was explained that

the goal of the experiment was to discover their Twit-

ter account using an undirected exploratory approach

and that it was difficult to predict the results and what

kind of form they would take. Of these subjects, 12

had a Twitter account and provided the name of that

account. Therefore, for this dataset the ground truth

is known, hence inclusion could be measured.

The second dataset, called ‘ISZW’, was provided

by the ISZW and consisted of 85 subjects that were

picked from a real risk-analysis project. It was

claimed by the ISZW that the subjects were a repre-

sentative sample of the group currently under inves-

tigation by the ISZW. These subjects did not sign up

voluntarily and were (and are) not informed that they

were part of an experiment. A contract was signed

that defined the authors as responsible for carrying

out a pilot for the ISZW and specified the conditions

they had to uphold. This made it both legal as well as

moral to make this data available to the authors.

Execution. A number of runs were done with the

IMatcher to gather all data needed. Due to the depen-

dence on external services and data-intensive tasks,

the runs could take a lot of time (48 hours for the

longest). Therefore, great care was taken to select a

minimum number of runs to facilitate all goals. The

first four runs were done on the subjects of the sign-up dataset. One run, including all information available about the subjects, had 15 iterations. The other three runs, where some input attributes were left out, were meant to run for five iterations each. Only the last iteration of run 4 failed, because a request limit was reached. A fifth run was performed on the subjects from the ISZW dataset.

All runs were repeated with an altered version of

the IMatcher that had a broader search for building

the longlist (see ‘Queries’ below). Due to result sizes

and data volumes involved, each run consisted of only

one iteration. Again, there is a missing iteration for

the final run, run 9, with the sign-up dataset.


Table 1: Runs performed with the IMatcher.

| Run | Data set | n | Input attributes A |
|-----|----------|----|--------------------|
| 1 | sign-up | 15 | all |
| 2 | sign-up | 5 | all except lastname |
| 3 | sign-up | 5 | all except address |
| 4 | sign-up | 5 | all except e-mail & telephone |
| 5 | ISZW | 3 | all |
| 6 | sign-up | 1 | all |
| 7 | sign-up | 1 | all except lastname |
| 8 | sign-up | 1 | all except address |
| 9 | sign-up | 1 | all except e-mail & telephone |
| 10 | ISZW | 1 | all |

Table 1 describes all runs, the dataset they used,

the number of iterations, and which input attributes

were used.

The reasons for choosing these 10 runs are as fol-

lows. The results of the first four runs were used for

the first three goals. Comparing the average setsize

and inclusion of the different runs, the influence of in-

put attributes can be established. Also, each run pro-

vides data related to the relation between the number

of iterations and setsize and inclusion. These mea-

surements are meant to be interpreted in relation to

each other, to see the value of each input attribute.

Run 1, which had the most input available and was

thus expected to perform best, was continued longer

than the other runs to establish a better absolute pro-

jection of the results. Runs 6 to 10 were run for the

fourth goal to discover the influence of search queries

on inclusion.

To make the results of certain iterations as compa-

rable as possible, they should run against an Internet

in more-or-less the same state. Therefore, all itera-

tions i of a run were started as close to each other

in time as possible. As a consequence, there was

a gap of about 16–25 hours between iterations of a

run, depending on the moment of completion of other

runs. After each iteration a snapshot was made of the

database, so metrics could be calculated afterwards.

Queries. For all input attributes except address,

keyword sets were constructed: each attribute indi-

vidually as well as all combinations of firstname(s)

and lastname(s). With each such keyword set, Google

search queries were constructed in two ways.

• direct: the query is equal to the keyword set.

• guided: two queries are constructed as “Twitter

keyword set” and “keyword set site:twitter.com”.

Twitter accounts were extracted from a top-k of Google results: either directly if a result refers to a Twitter profile, or from the page the result refers to by means of the regular expressions @[a-zA-Z ]* and (http(s)?://)?(www.)?twitter.com/[a-zA-Z ]. For address, a geographical search was performed with a radius of r meters using the now retired Twitter API V1.⁷

For runs 1–5, IMatcher was configured with

search criteria direct, k = 8, and r = 200. For runs

6–10, search criteria were guided, k = 20, r = 200.
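The query construction and account extraction described above can be sketched as follows. The function names are hypothetical. The two patterns are also an assumption: they accept letters, digits and underscores up to 15 characters, following Twitter's username rules, and are therefore not identical to the expressions quoted in the text.

```python
import re
from itertools import product
from typing import List, Sequence, Set


def keyword_sets(firstnames: Sequence[str], lastnames: Sequence[str],
                 other_values: Sequence[str]) -> List[List[str]]:
    """Each attribute value on its own, plus all firstname/lastname pairs."""
    singles = [[v] for v in [*firstnames, *lastnames, *other_values]]
    pairs = [[f, l] for f, l in product(firstnames, lastnames)]
    return singles + pairs


def build_queries(keywords: Sequence[str], mode: str) -> List[str]:
    """Turn one keyword set into search strings for the given criteria."""
    phrase = " ".join(keywords)
    if mode == "direct":
        return [phrase]
    if mode == "guided":
        return [f"Twitter {phrase}", f"{phrase} site:twitter.com"]
    raise ValueError(f"unknown mode: {mode}")


# Assumed patterns; note that MENTION_RE also fires on email addresses.
MENTION_RE = re.compile(r"@([A-Za-z0-9_]{1,15})")
PROFILE_RE = re.compile(
    r"(?:https?://)?(?:www\.)?twitter\.com/([A-Za-z0-9_]{1,15})")


def extract_accounts(page_text: str) -> Set[str]:
    """Collect account names from @mentions and profile links."""
    names = MENTION_RE.findall(page_text) + PROFILE_RE.findall(page_text)
    return {name.lower() for name in names}


sets = keyword_sets(["Jan"], ["Jansen"], ["jan@example.org"])
guided = build_queries(["Jan", "Jansen"], "guided")
accounts = extract_accounts(
    "Follow @jan_jansen or visit https://twitter.com/JJansen42 today")
```

Names are lower-cased before they are stored because Twitter usernames are case-insensitive, which avoids duplicate candidates that differ only in capitalisation.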

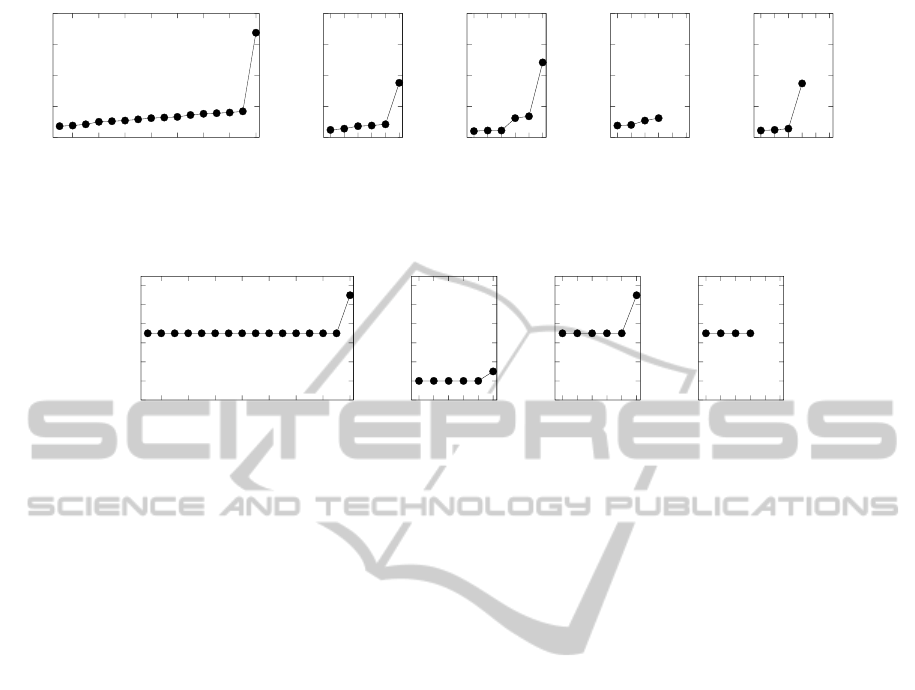

5.2 Results

Experimental results for average setsize $L_n$ and inclusion $I_n$ are shown in Figures 3 and 4, respectively. Each subfigure shows a pair of runs that use the same input parameters.

It can be seen that specific input attributes do in-

fluence the results. Leaving out lastname shrinks the

average setsize by roughly 50%, but also reduces in-

clusion 7 → 2 and 11 → 3, respectively. Leaving out

address reduces the average setsize by roughly 33%,

but has no influence on inclusion. Leaving out phone numbers and e-mail addresses does not seem to influence either average setsize or inclusion.

The convergence expectations are not confirmed

by our experiments. The average setsize did not sta-

bilize and inclusion did not increase with more itera-

tions.

Pinpointing $\tilde{t}_p$ among $C^n_p$. Close to 37k rows of raw feature data were extracted from the gathered data. Each row consists of the person, the Twitter candidate, a number of similarity scores, the run, and the iteration number.

An attempt was made to predict whether the person

has a Twitter account, as well as whether a candidate

is the correct one. This relates to step 3 of our ap-

proach. Unfortunately, this attempt proved too inef-

fective for it to be useful to present here.

Note that this analysis could only be performed

for the sign-up subject group, since no ground truth

was known for the ISZW dataset.

6 DISCUSSION

From the experimental results, we can conclude a number of things. First of all, the convergence expectations were not confirmed. Therefore, the amount of data to be managed is larger than expected and will keep growing beyond the lengths of the runs we experimented with (15 being the longest). However, we still expect that, after even more iterations, the pruner will prune enough accounts to keep the candidate set size constant or even decrease it over time.

7 https://blog.twitter.com/2013/api-v1-retirement-final-dates


[Figure 3, panels: (a) Run 1 & 6, (b) Run 2 & 7, (c) Run 3 & 8, (d) Run 4 & 9, (e) Run 5 & 10; x-axis: Iteration; y-axis: Average setsize]

Figure 3: Average setsize $L_n$ after n iterations. Runs 6–10 are depicted in the graphs as the last iteration (n = 16, 6, 6, 6, and 4, respectively). Last iteration of run 4 and 9 failed.

[Figure 4, panels: (a) Runs 1 & 6, (b) Runs 2 & 7, (c) Runs 3 & 8, (d) Runs 4 & 9; x-axis: Iteration; y-axis: Inclusion]

Figure 4: Inclusion $I_n$, i.e., the number of correct Twitter accounts included (out of 12) after n iterations. Since there is no ground truth known for the ISZW dataset, there is no graph for runs 5 & 10. Again, runs 6–9 are depicted in the graphs as the last iteration (n = 16, 6, 6, and 6, respectively). Last iteration of run 4 and 9 failed.

Secondly, the approach is sensitive to certain in-

put attributes. The full name needs to be available,

since leaving it out will decrease inclusion dramati-

cally. E-mail addresses and phone numbers do not

seem to influence effectiveness.

Thirdly, there is no rise in inclusion after the first

iteration. This is unexpected and most likely due to

the small scale of our experiments and the open nature

of our volunteers. Fraudulent people are expected to

be less open, hence their account is not expected to be

found with a first search. We still believe that increas-

ing the number of subjects and the number of itera-

tions will show that inclusion will slowly converge to

the number of subjects with a Twitter account.

Finally, broadening the search queries Q did have an impact on both setsize and inclusion. Research is needed to investigate this relation in more detail. In practice, an iterative deepening approach might prove powerful in addressing the fact that candidate accounts can be ranked at any position in query results. Increasing the top-k of query results that is explored with each iteration, as long as no definite candidate has been found, might prove a good strategy.
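The iterative deepening strategy suggested above could look roughly like the sketch below. It is an assumption-laden illustration rather than part of IMatcher: `search` and `is_definite` are hypothetical callables supplied by the caller, and the default values for the starting depth, step and maximum depth are illustrative only.

```python
def iterative_deepening(search, is_definite, k_start=8, k_step=4, k_max=20):
    """Widen the explored top-k until a definite candidate is found.

    search(k) is a hypothetical callable returning the candidate accounts
    found in the top-k query results; is_definite(candidate) is a
    hypothetical predicate deciding whether a candidate is a definite match.
    Returns (candidate, k), or (None, k) if k_max was reached without a match.
    """
    k = k_start
    seen = set()
    while True:
        for candidate in search(k):
            if candidate in seen:
                continue  # already judged in a shallower iteration
            seen.add(candidate)
            if is_definite(candidate):
                return candidate, k
        if k >= k_max:
            return None, k
        k = min(k + k_step, k_max)


# Toy usage: the correct account is ranked sixth in the query results.
ranked = ["a", "b", "c", "d", "e", "target", "g"]
found = iterative_deepening(
    lambda k: ranked[:k], lambda c: c == "target", k_start=2, k_step=2, k_max=6
)
```

Keeping track of candidates that were already judged avoids re-evaluating the shallow results each time the depth is increased.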

Future Work. As stated above, we were not able to

perform step 3 of our approach satisfactorily. We sus-

pect that the problem lies in the chosen features we

extracted from the accounts and our similarity func-

tions. As we have shown the viability of a search,

monitor and match approach on internet-scale, we

aim to improve the second and third step in future

work. We intend to focus on retrieving more char-

acteristics of the online presences we find and com-

paring them to known characteristics. We expect that

including more characteristics like usernames, pho-

tographs, language usage and locations mentioned in

text will improve our matching algorithms and thus

allow us to pinpoint the one correct account or at least

a group of 2 or 3 most likely candidates.

We are also interested in extending our research beyond Twitter to other online presences, like Facebook, eBay and even homepages in general. Gathering more online presences will allow for better extraction of characteristics and thus for better fraud prediction. It will also enable the exploitation of self-mentioning.

7 ETHICAL CONSIDERATIONS

When undertaking research such as ours, it is impor-

tant to consider its morality. We co-operated with an

ethicist to see if our work is ethically justifiable. We

were confident that our experiment was, since we fol-

lowed university guidelines for subject selection and

experiment design. However, the context necessitates

considering its (possible) uses to assess the desirabil-

ity of pursuing the research.

Aimee van Wynsberghe (Wynsberghe et al., 2013) used our work to develop a set of generic guidelines for working with data from social network sites. Together we published a paper describing the guidelines and applying them to our research.

Using these guidelines and elements from value sensitive design (Friedman et al., 2006), we concluded that our research was ethically justifiable as a value trade-off taking into account the interests of the people investigated, people whose account is included in a candidate set as a false positive, the ISZW, and all Dutch citizens. One important factor was also that, although requesting welfare support is not really by choice, the receiver is not obliged to do so. By requesting welfare support, someone voluntarily gives up some privacy to allow the government to investigate whether the request is rightful. This aspect also shows that using our design to investigate other groups has to be considered on its own merits. For more details, we refer to (Been, 2013).

8 CONCLUSIONS

Fraud risk analysis on data from formal information

sources, being a paper reality, suffers from blindness

to false information. Moreover, the very act of provid-

ing false information is a strong indicator for fraud.

As a step towards the vision of harnessing real-world

data from social media and internet for fraud risk

analysis, we present a novel iterative search, mon-

itor, and match approach for finding on-line pres-

ences of people. The approach needs only limited

name/address input data available to governmental or-

ganizations responsible for fraud detection. A real-

world experiment showed that Twitter accounts can

be effectively found: from a voluntary sign-up subject

group of 22 subjects, the correct account was almost

always captured. Our initial attempt at pinpointing

the correct account for each subject proved ineffec-

tive, but we expect this to be a matter of choosing

other features and classification techniques, since the

correct account is included and rich data is gathered.

We also experimented with a larger subject group of

85 subjects from the ISZW. Finally, an analysis is

given of the ethics surrounding the application of such

technology for fraud risk analysis. We aim to extend

IMatcher to search for more kinds of on-line pres-

ences such as other social networks, extract and mon-

itor more characteristics, and improve the person vs.

on-line presence matching.

ACKNOWLEDGMENTS

This publication was supported by the Dutch national program COMMIT/. We thank Victor de Graaff for neogeo support and ISZW for providing the data.

REFERENCES

Back, M. D., Stopfer, J. M., Vazire, S., Gaddis, S., Schmukle, S. C., Egloff, B., and Gosling, S. D. (2010). Facebook profiles reflect actual personality, not self-idealization. Psychological science, 21(3):372–374.

Been, H. (2013). Finding you on the internet: Entity resolution on twitter accounts and real world people. Master’s thesis, Univ. Twente, Netherlands.

Brizan, D. and Tansel, A. (2006). A survey of entity resolution and record linkage methodologies. Communications of the IIMA, 6(3):41–50.

Friedman, B., Kahn Jr, P. H., and Borning, A. (2006). Value sensitive design and information systems. Human-computer interaction in management information systems: Foundations, 5:348–372.

Habib, M. B. and van Keulen, M. (2013). A generic open world named entity disambiguation approach for tweets. In KDIR 2013, Vilamoura, Portugal. SciTePress.

Hofmann, C., Horn, E., Keller, W., Renzel, K., and Schmidt, M. (1996). The field of software architecture. Technical Report TUM-I9641, TU Munich, Germany.

Inspectie SZW (2012). Bestandskoppeling bij fraudebestrijding. Technical Report Nvb-Info 12/062, Ministerie van Sociale Zaken en Werkgelegenheid.

Jain, P. and Kumaraguru, P. (2012). Finding nemo: Searching and resolving identities of users across online social networks. Technical Report arXiv:1212.6147, arXiv.

Minder, P. and Bernstein, A. (2011). Social network aggregation using face-recognition. In SDoW 2011, Bonn, Germany, volume 830. CEUR. ISSN 1613-0073.

Narayanan, A. and Shmatikov, V. (2009). De-anonymizing social networks. In 30th IEEE Symposium on Security and Privacy, pages 173–187.

Perito, D., Castelluccia, C., Kaafar, M. A., and Manils, P. (2011). How unique and traceable are usernames? In Privacy Enhancing Technologies, pages 1–17. Springer.

Suchanek, F., Kasneci, G., and Weikum, G. (2007). Yago: a core of semantic knowledge. In WWW 2007, Banff, Canada, pages 697–706.

van Keulen, M. (2012). Managing uncertainty: The road towards better data interoperability. IT - Information Technology, 54(3):138–146.

Veldman, I. (2009). Matching profiles from social network sites. Master’s thesis, Univ. Twente, Netherlands.


Wynsberghe, A., Been, H., and Keulen, M. (2013). To use or not to use: guidelines for researchers using data from online social networking sites. Responsible Research and Innovation Observatory.

Yosef, M. A., Hoffart, J., Bordino, I., Spaniol, M., and Weikum, G. (2011). AIDA: an online tool for accurate disambiguation of named entities in text and tables. PVLDB, 4(12):1450–1453.

ICEIS 2014 - 16th International Conference on Enterprise Information Systems