Markov Chain Monte Carlo for Risk Measures

Yuya Suzuki

1,2

and Thorbj¨orn Gudmundsson

3

1

Double Degree Program between School of Engineering Science KTH Royal Institute of Technology,

SE-100 44 Stockholm, Sweden

2

School of Science for Open and Environmental Systems Keio University, Yokohama, Japan

3

Department of Mathematics Royal Institute of Technology, SE-100 44 Stockholm, Sweden

Keywords:

Markov Chain Monte Carlo, Risk Measures,Heavy Tails, Rare-event Simulation.

Abstract:

In this paper, we consider random sums with heavy-tailed increments. By the term random sum, we mean

a sum of random variables where the number of summands is also random. Our interest is to construct

an efficient method to calculate tail-based risk measures such as quantiles and conditional expectation (ex-

pected shortfalls). When assuming extreme quantiles and heavy-tailed increments, using standard Monte

Carlo method can be inefficient. In previous works, there exists an efficient method to sample rare-events

(tail-events) using a Markov chain Monte Carlo (MCMC) with a given threshold. We apply the sampling

method to estimate statistics based on tail-information, with a given rare-event probability. The performance

is compared with other methods by some numerical results in the case increments follow Pareto distributions .

We also show numerical results with Weibull, and Log-Normal distributions. Our proposed method is shown

to be efficient especially in cases of extreme tails.

1 INTRODUCTION

Simulation methods with intent to estimate extreme

tails of distributions are of practical importance in var-

ious areas such as telecommunication systems, risk

management for insurance and operational risk, etc.

For instance, to assess the risk of rare-events, it helps

an insurance company to avoid bankruptcy, also helps

a server to avoid being down.

Suppose the problem to estimate the probability

p = P(S

n

> a

n

) where a

n

is some large constant, S

n

=

X

1

+ X

2

+ ···+ X

n

and X

i

’s are non-negative,indepen-

dent and identically distributed (iid). Here we assume

that p → 0 as a

n

→ ∞ so that we consider events are

rare. When n = N is not constant but follows a Pois-

son distribution, then the setting becomes equivalent

to the waiting time of an M/G/1 queue system. In the

context of queuing theory, especially for the applica-

tion with high service time X

i

, especially the case X

i

following a Pareto distribution, has been studied sub-

stantially, see (Sees Jr and Shortle, 2002; Gross et al.,

2002). On the other hand, S

N

is sometimes mentioned

as compound sum, this term is mainly used in the

context of insurance risk. In the Cram´er-Lundberg

model, X

i

’s are interpreted as amount of claim and N

is number of claims, see (McNeil et al., 2010). This

type of statistical model assuming heavy-tail is also

applied to the quantification of operational risk in fi-

nance, (Embrechts et al., 2003).

The simplest solution for this problem is to use the

standard Monte Carlo method that makes T iid copies

{S

t

n

} of S

n

and estimates p by ˆp =

∑

T

t=1

I

{S

t

n

>a

n

}

/T.

However, this method is inefficient to compute small

probabilities with high threshold a

n

. Let us see the

relative error of the estimate:

Std( ˆp)

ˆp

=

s

1− ˆp

T ˆp

→ ∞,

as a

n

→ ∞. This shows the difficulty of computation

when using the standard Monte Carlo.

There are alternatively proposed methods mainly

based on importance sampling. In importance sam-

pling, {

˜

S

t

n

} instead of {S

t

n

} is sampled from a new

probability measure

e

P, see (Rubino et al., 2009;

Blanchet and Liu, 2008).

However, we use the method based on MCMC

proposed by (Gudmundsson and Hult, 2012). The

reason is two-fold: one is the ease of use, importance

sampling methods require to compute an appropriate

change of measure. For the MCMC method, imple-

mentation itself does not require us to do complex

computation.The other reason is that (Gudmundsson

330

Suzuki Y. and Gudmundsson T..

Markov Chain Monte Carlo for Risk Measures.

DOI: 10.5220/0005035303300338

In Proceedings of the 4th International Conference on Simulation and Modeling Methodologies, Technologies and Applications (SIMULTECH-2014),

pages 330-338

ISBN: 978-989-758-038-3

Copyright

c

2014 SCITEPRESS (Science and Technology Publications, Lda.)

and Hult, 2012) showed the MCMC based method is

compatible or rather better than existing importance

sampling methods. The MCMC estimator is con-

structed as non-biased, and having vanishing normal-

ized variance. They showed the efficiency of the pro-

posed method with the setting that X

i

’s have Pareto

distribution and the number of sum n = N has Geo-

metric distribution.

MCMC, stands for Markov chain Monte Carlo,

is initially proposed by (Metropolis et al., 1953) to

simulate the energy levels of atoms, and generalized

by (Hastings, 1970) . Suppose that we are interested

in sampling from a distribution π(x), but we can not

sample from the distribution directly. MCMC is one

of the solutions for the problem, by constructing a

Markov chain whose stationary (equilibrium) distri-

bution is π(x). States of the Markov chain after suffi-

cient steps of transition are used for sampling.

To estimate quantile of S

n

is an important prob-

lem. For instance, quantile is especially called ”Value

at Risk” in finance, and this risk measure is used in

the regulatory framework of Basel. In the context of

queuing theory there exist some papers on estimating

quantiles, for example (Fischer et al., 2001) assum-

ing Pareto service times in simulating Internet queues.

We propose a new method to estimate high quantiles

and it is shown that our proposed method is remark-

ably accurate.

The rest of this paper is organized as follows. In

Section 2, the MCMC-based method to estimate tail-

based statistics is provided. In Section 3, we assess

the convergence of the MCMC.In Section 4, numer-

ical results are shown. In Section 5, conclusions are

given.

2 ESTIMATION METHOD

In this section, the proposed method in (Gudmunds-

son and Hult, 2012) for sampling rare-events and es-

timating small probabilities using the MCMC is pre-

sented.

2.1 Estimator Construction

Throughout this paper, all stochastic variables are de-

fined on a complete probability space (Ω, F ,P). Con-

sider a sequence of iid non-negative random vari-

ables {X

i

,i ∈ N} with common cumulative distribu-

tion function (CDF) F and the density f with respect

to the Lesbegues measure F . Set X = (X

1

,X

2

,...,X

n

).

Notice that we first consider the case n being fixed.

Our problem is to estimate

p = P(X ∈A) =

Z

A

dF. (1)

Let F

A

be the conditional distribution of X given X ∈

A, and the density is given by

dF

A

dx

=

f(x)I(x ∈ A)

p

. (2)

Suppose that samples are from the target distribu-

tion F

A

, and consider a Markov chain {X

t

}

T

t=1

. For

any non-negative function v,

R

A

v(x)dx = 1 it follows.

E

F

A

v(X)I{X ∈ A}

f(X)

=

Z

Ω

v(x)I{x ∈ A}

f(x)

dF

A

(x)

=

Z

A

v(x) f(x)

f(x)p

dx

=

1

p

Z

A

v(x)dx =

1

p

.

(3)

Therefore an unbiased estimator cq

T

for q = 1/p is

calculated as

1

T

T

∑

t=1

v(X

t

)I{X

t

∈ A}

f(X

t

)

. (4)

Note that the estimation above is assuming stationar-

ity of the Markov chain, having its invariant distribu-

tion F

A

. It means also the burn-in period with suffi-

cient length should be discarded.

The function v(x) determines a variance of the es-

timator. For the sake of efficient estimation, the vari-

ance is required to be small. (Gudmundsson and Hult,

2012) showed that when X

i

’s are heavy-tailed in the

sense that there exists a sequence {a

n

},

lim

n→∞

P(max(X

1

,X

2

,...,X

n

) > a

n

)

P(X

1

+ X

2

+ ···+ X

n

> a

n

)

= lim

n→∞

P(M

n

> a

n

)

P(S

n

> a

n

)

= 1,

(5)

and choosing v(·) = P(X ∈ ·|M

n

> a

n

), then the fol-

lowing estimator has vanishing normalized variance,

cq

T

(n)

=

1

T

T

∑

t=1

I{M

n

> a

n

}

1−F

X

(a

n

)

n

, (6)

meaning that:

lim

n→∞

(p)

2

Var

F

(n)

A

n

(q

(n)

T

) = 0. (7)

Note that the condition (5) holds for large class of

distributions, including subexponential class. For the

case when n = N is non-negative integer valued ran-

dom variable, the estimator becomes

cq

T

(N)

=

1

T

T

∑

t=1

I{M

N

> a

n

}

1−g

N

(F

X

(a

n

))

, (8)

where g

N

is the probability generating function of N,

defined by g

N

(z) = E[z

N

].

MarkovChainMonteCarloforRiskMeasures

331

2.2 Description of the MCMC

Algorithm

To sample from the conditional distribution

P(X ∈ A) = P(S

N

= X

1

+ ···+ X

N

> a

n

), (9)

by the MCMC. We use Gibbs sampler for the MCMC

kernel. Description of the algorithm is as follows.

1. Sample N

0

from P(N ∈ ·), and set the initial state

X

0

= (X

0,1

,...,X

N

0

,1

).

so that satisfies

S

0,N

0

=

N

0

∑

i=1

X

0,i

> a

n

.

2. Suppose that

X

t

= (k

t

,x

t,1

,...,x

t,k

t

),

holding the condition

x

t,1

+ ... + x

t,k

t

> a

n

,

and let

k

∗

t

= min{j|x

t,1

+ ... + x

t, j

> a

n

}.

3. Iterate (a)-(c) until the sufficient length of Markov

Chain is constructed.

(a) Sample the number of sum N

t+1

from the con-

ditional distribution

p(k

t+1

|k

t+1

> k

∗

t

) =

P(N = k

t+1

)I{k

t+1

> k

∗

t

}

P(k

t+1

> k

∗

t

)

.

If N

t+1

> N

t

, then sample

X

t+1,N

t

+1

,...,X

t+1,N

t+1

from F

X

independently and set

X

t

′

= (X

t,1

,...,X

t,N

t

,X

t+1,N

t

+1

,...,X

t+1,N

t+1

)

(b) Make an order of updating step

{j

1

, j

2

,..., j

N

t+1

}, it is equivalent as a group of

{1,...,N

t+1

}

(c) Update X

t

′

for each k = 1,...,N

t+1

as follows

i. Let j = j

k

and

X

′

t,−j

= (X

′

t,1

,...,X

′

t, j−1

,X

′

t, j+1

,...,X

′

t,N

t+1

).

Sample Z

j

from the conditional distribution

P(Z

j

∈ ·|X

′

t,−j

) = P(X ∈ ·|X +

∑

k6= j

X

′

t,k

> a

n

).

ii. Set X

t, j

= Z

j

and

X

t

′

= (X

t,1

,...,X

t, j−1

,X

t, j

,X

t+1,k

t

+1

,...,X

t+1,N

t+1

),

then return to i step.

(d) Draw an uniform random permutation π of the

numbers {1,...,N

t+1

} and put

X

t+1

= (N

t+1

,X

t+1,π(1)

,...,X

t+1,π(N

t+1

)

).

2.3 Procedure of the Estimation

In previous sections, the method to estimate proba-

bilities has been shown. That method is assuming a

given arbitrary threshold a

n

and then compute the cor-

responding probability. Now our problem is opposite,

to compute a quantile given a probability. For this

solution, a trivial property is used :

M

n

= max(X

1

,...,X

n

) < S

n

= X

1

+ ... + X

n

⇒ P(M

n

< x) > P(S

n

< x)

⇒ G

−1

(y) < H

−1

(y),

(10)

where

G(x) = P(M

n

< x),H(x) = P(S

n

< x).

Let {S

j

N

}

T

j=1

be samples from the conditional dis-

tributions, P(S

N

|S

N

> b) and define the following

given a

n

> b:

p := P(S

N

> a

n

) = P(S

N

> a

N

|S

N

> b)P(S

N

> b).

(11)

We also define the empirical survival distribution,

¯

F

T

(x) =

1

T

T

∑

i=1

I{S

j

> x}. (12)

Then our motivation of quantile estimation method is

derived from the following:

F

−1

S

N

(1− p) = inf{x : F

S

N

(x) ≥ 1− p}

= inf{x :

¯

F

S

N

(x) ≤ p}

≈ inf{x :

¯

F

T

(x) ˆp

b

≤ p}

= inf{x :

¯

F

T

(x) ≤

p

ˆp

b

}

=

¯

F

−1

T

(1−

p

ˆp

b

) = S

⌊T

p

ˆp

b

⌋+1,T

N

(13)

where ˆp

b

is the estimated value of P(S

N

> b), ⌊·⌋ de-

notes the floor function and S

k,T

N

denotes the kth order

statistics of {S

j

N

}.

However,how to select the pre-determined thresh-

old b smaller than a

n

is a problem, because we do not

know a

n

. In addition, there are two hopeful require-

ments for selecting b:

• b should be generated endogenously using the in-

put p. Otherwise the method becomes unstable

and depends on user’s experience.

• b must be less than a

n

but also needed to be close

to a

n

, for the sake of efficiency of the estimation.

To solve these requirements, we use the property (10).

The procedure of estimation is as follows.

SIMULTECH2014-4thInternationalConferenceonSimulationandModelingMethodologies,Technologiesand

Applications

332

1. Set the interest small probability p, and calculate

b = G

−1

(1− p) = F

−1

X

(g

−1

N

(1− p)).

2. Sample {S

j

|j = 1,..., T} from P(·|S

n

> b) and

calculate ˆp

b

, the estimate of P(S

n

> b) by using

MCMC described in the previous section.

3. Let {

˜

S

j

} be the order statistics of {S

j

} and take

ˆa

n

=

˜

S

j

∗

where, j

∗

= ⌊T

p

ˆp

b

⌋+ 1.

It is obvious that the way of selecting b satisfies

that b is smaller than a

n

and that b is generated en-

dogenously. The property that b is close to a

n

, is

shown by the subexponential property :

lim

x→∞

P(max(X

1

,X

2

,..., X

N

) > x)

P(X

1

+ X

2

+ ···+ X

N

> x)

= lim

x→∞

P(M

N

> x)

P(S

N

> x)

= 1.

This means that:

H

−1

(y) → G

−1

(y), as y → 1. (14)

Therefore this way of selecting b is efficient for esti-

mating high-level quantiles.

Extensively, we construct an estimator for condi-

tional expectations, mentioned as expected shortfall:

ES

α

= E[S

N

|S

N

> F

−1

S

N

(1− p)] =

1

p

Z

1

1−p

F

−1

S

N

(u)du.

(15)

The estimator for this statistics is

c

ES

α

=

1

#{

˜

S

j

>

˜

S

j

∗

}

∑

˜

S

j

>

˜

S

j

∗

˜

S

j

, (16)

where #{

˜

S

j

>

˜

S

j

∗

} represents the number of compo-

nents {

˜

S

j

} that satisfies

˜

S

j

>

˜

S

j

∗

.

3 ASSESSING CONVERGENCE

To determine the length of the burn-in period is a

difficult but also important matter when using the

MCMC. We try to determine burn-in period by using

the method, proposed by (Gelman and Rubin, 1992)

in this section.

The method in (Gelman and Rubin, 1992) is con-

structed as follows.

1. First simulate m Markov chains, each chain has

the length of 2n and each starting point

{X

i

0

|i = 1,...,m} should be over-dispersed.

2. For any scalar function of interest θ, calculate

√

R =

s

n−1

n

+

m+ 1

mn

B

W

d f + 1

d f + 3

, (17)

where,

W =

1

m(n−1)

m

∑

i=1

2n

∑

t=n+1

(θ

t

i

−

¯

θ

·

i

)

2

,

B =

1

m−1

m

∑

i=1

(

¯

θ

·

i

−

¯

θ

·

·

)

2

,

¯

θ

·

i

=

2n

∑

t=n+1

θ

t

i

/n,

¯

θ

·

·

=

m

∑

i=1

¯

θ

·

i

/m,

and θ

t

i

is t th observation from chain i. Also

d f =

2

b

V

2

\

Var(

b

V)

,

b

V =

n−1

n

W +

m+ 1

mn

B

.

They insist that when

√

R is close to 1, then we can

conclude that each chain approaches the target distri-

bution. For the purpose of determining the burn-in

period which we shall use in following numerical ex-

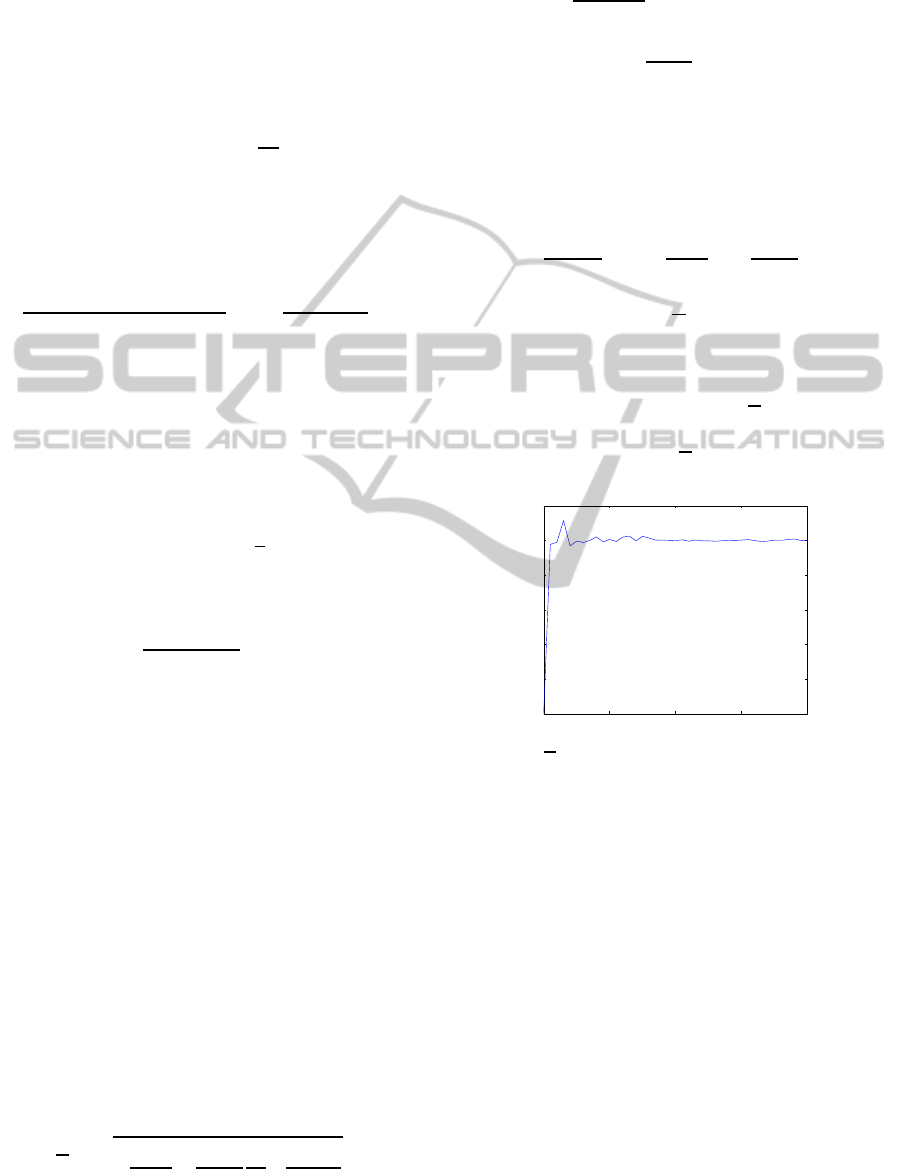

periments, we describe the plot of

√

R with respect to

iteration number in figure 1. In our case, the statis-

tics θ is chosen to be S

N

.

√

R converges to 1. 1000 is

0 500 1000 1500 2000

0.975

0.98

0.985

0.99

0.995

1

1.005

Figure 1:

√

R with respect to the number of iteration steps.

sufficient for the iteration number.

4 NUMERICAL RESULTS

In this section, some numerical results are shown. To

validate and compare the efficiency with other exist-

ing methods, we refer to (Hult and Svensson, 2009).

They consider a problem of fixed sum, meaning the

number of summands is not stochastic but constant.

We first apply our method to the fixed-sum prob-

lem and show the performance, labeled as MCMC.

MC represents standard Monte Carlo, DLW repre-

sents the conditional mixture algorithm (Dupuis et al.,

2007) and SM represents the scaling mixture algo-

rithm (Hult and Svensson, 2009). All figures of MC,

DLW, and SM in (Hult and Svensson, 2009) are used

MarkovChainMonteCarloforRiskMeasures

333

as a reference. For each estimation, all statistics are

calculated based on 10

4

samples and it is repeated 100

times to calculate the mean and standard deviation of

the estimates. For the MCMC method, we set 1000

times of iteration for the burn-in period.

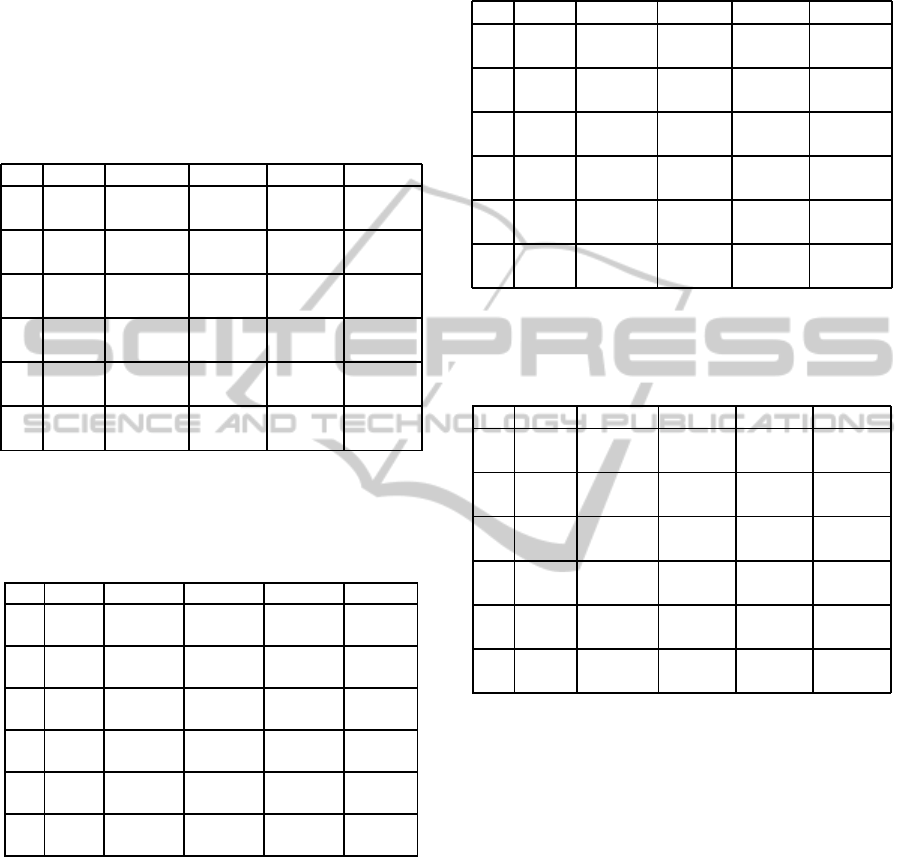

Table 1: Means (standard deviations). Simulating quantiles

q such that 1 − p = F

S

n

(q) where F

S

n

(·) is a cumulative

distribution function of S

n

, S

n

=

∑

n

i=1

X

i

and P(X

i

> x) =

(1+ x)

−2

.

n 1− p MCMC SM DLW MC

10 1e-2 40.179 41.007 40.166 40.038

(0.130) (0.246) (0.459) (1.78)

1e-3 108.587 109.33 108.29 84.821

(0.197) (0.847) (1.081) (47.23)

1e-5 1008.1 1003.1 1007.5 609.42

(0.495) (18.5) (1.51) (1594)

30 1e-2 84.585 85.841 84.681 84.362

(0.324) (0.395) (1.237) (2.739)

1e-3 202.557 203.56 202.29 171.16

(0.373) (1.53) (2.40) (71.26)

1e-5 1760.3 1753.7 1759.0 114.23

(0.903) (41.12) (1.487) (443.5)

Table 2: Means (standard deviations). Simulating quantiles

q such that 1 − p = F

S

n

(q) where F

S

n

(·) is a cumulative

distribution function of S

n

, S

n

=

∑

n

i=1

X

i

and P(X

i

> x) =

(1+ x)

−3

.

n 1 − p MCMC SM DLW MC

10 1e-2 14.205 14.853 14.195 14.182

(0.069) (0.090) (0.154) (0.305)

1e-3 25.651 26.125 25.588 24.965

(0.062) (0.171) (0.412) (2.212)

1e-5 103.64 104.23 103.40 5.283

(0.091) (0.799) (0.553) (16.03)

30 1e-2 29.845 31.054 29.943 29.949

(0.297) (0.287) (0.519) (0.500)

1e-3 46.016 46.725 46.277 44.608

(0.184) (0.286) (1.041) (2.688)

1e-5 158.057 158.460 157.620 13.847

(0.152) (1.080) (0.273) (28.53)

From the table 1 and the table 2, the MCMC based

method perform well comparing with other methods.

For simulating conditional expectations in the table 3

and the table 4, we see MCMC based method can per-

form a little bit less than other methods in some pa-

rameter settings. However, when we see the extreme

tail, our proposed method works the best.

Next we consider random sums, meaning the

number of summands N is random. For numerical

experiments, we calculate the case N is geometrically

distributed:

P(N = n) = ρ(1 −ρ)

(n−1)

, (18)

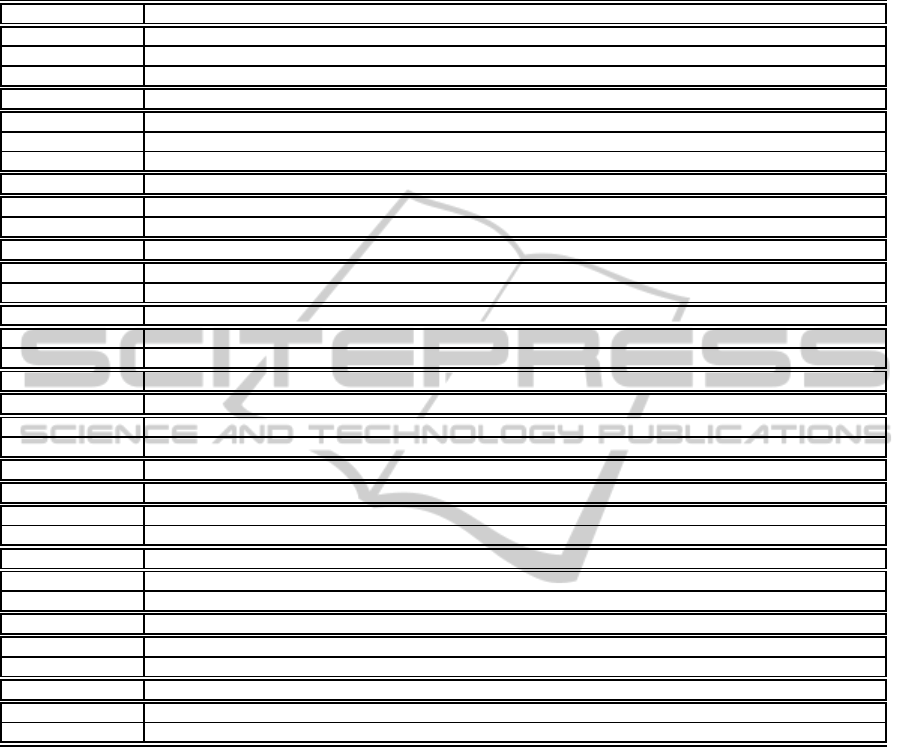

Table 3: Means (standard deviations). Simulating con-

ditional expectations E(S

n

|S

n

> a

n

) where P(S

n

> a

n

) =

1− p, S

n

=

∑

n

i=1

X

i

and P(X

i

> x) = (1+ x)

−2

.

n 1− p MCMC SM DLW MC

10 1e-2 71.694 73.065 71.831 72.252

(1.44) (1.06) (1.22) (8.75)

1e-3 208.67 209.37 209.30 213.42

(4.16) (3.60) (4.99) (65.8)

1e-5 2005.4 2009.8 2009.3 4787.8

(35.5) (37.1) (30.9) (23168)

30 1e-2 139.03 140.55 139.14 140.76

(3.74) (2.22) (3.09) (17.34)

1e-3 374.25 375.76 378.24 391.06

(6.45) (5.00) (11.49) (96.36)

1e-5 3496.7 3500.0 3496.9 745.7

(58.7) (65.2) (59.8) (3671)

Table 4: Means (standard deviations). Simulating con-

ditional expectations E(S

n

|S

n

> a

n

) where P(S

n

> a

n

) =

1− p, S

n

=

∑

n

i=1

X

i

and P(X

i

> x) = (1+ x)

−3

.

n 1− p MCMC SM DLW MC

10 1e-2 19.206 20.044 19.257 19.495

(0.218) (0.167) (0.395) (0.905)

1e-3 36.321 36.911 36.463 41.032

(0.282) (0.327) (0.776) (5.53)

1e-5 153.50 154.39 153.83 132.74

(0.901) (1.326) (2.705) (491.7)

30 1e-2 36.922 38.603 37.200 37.744

(0.930) (0.902) (1.169) (1.581)

1e-3 61.125 62.013 62.066 69.369

(0.728) (0.416) (1.814) (7.973)

1e-5 230.22 230.27 230.00 225.14

(1.40) (1.92) (1.47) (932)

with some different parameter settings. This time we

simulate 5000 number of iteration and 1000 number

of burn-in period with 25 batches to calculate each

means and standard deviations of estimates. ES (ex-

pected shortfall) represents the conditional expecta-

tion. Note that we calculate relative errors instead of

standard deviations in order to see how the accuracy

changes. Table 7 shows that, when calculating quan-

tiles, the more high quantiles we estimate, the more

estimates become accurate. For expected short falls,

relative errors do not change remarkably but the es-

timates are sufficiently accurate. For other settings,

meaning X

i

’s follow Weibull and Log-normal distri-

butions, see the Appendix.

SIMULTECH2014-4thInternationalConferenceonSimulationandModelingMethodologies,Technologiesand

Applications

334

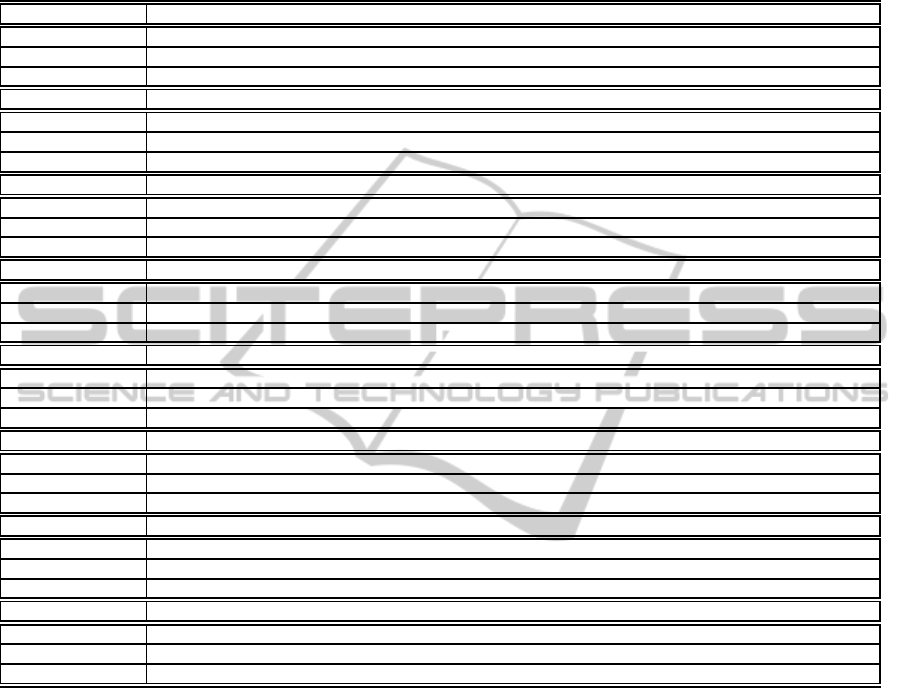

Table 5: Means and relative errors. Simulating quantiles and expected shortfalls of S

N

=

∑

N

i=1

X

i

where P(X

i

> x) = (1+x)

−β

, P(N = n) = ρ(1−ρ)

(n−1)

.

β = 3, ρ = 0.2

Pareto/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated quantile 6.87441 9.33847 15.3579 25.8747 42.9086 84.0273

Relative error 0.01508 0.01176 0.01354 0.01151 0.004778 0.002684

β = 2, ρ = 0.2

Pareto/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated quantile 13.61134 19.1129 35.4151 82.1348 233.466 715.999

Relative error 0.01223 0.01233 0.01058 0.006867 0.002183 0.0008949

β = 1.5,ρ = 0.2

Estimated quantile 24.3839 36.6780 82.9503 311.665 1375.97 6317.46

Relative error 0.01163 0.0096183 0.007067 0.002843 0.001107 0.0007270

β = 2, ρ = 0.05

Estimated quantile 48.8967 65.5929 109.858 202.007 490.835 1454.22

Relative error 0.01356 0.01625 0.01592 0.009312 0.0021054 0.001494

β = 1.5,ρ = 0.05

Estimated quantile 93.962 132.302 258.466 821.700 3500.29 15948.4

Relative error 0.01541 0.01283 0.01099 0.003944 0.001679 0.00050244

β = 3, ρ = 0.2

Pareto/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated ES 10.3295 12.9366 19.8514 33.6573 60.7505 123.348

Relative error 0.01183 0.01346 0.01430 0.01447 0.01332 0.009787

β = 2, ρ = 0.2

Pareto/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated ES 23.2326 31.1116 57.5991 150.307 457.184 1419.47

Relative error 0.02130 0.03127 0.03323 0.01582 0.02033 0.01836

β = 1.5,ρ = 0.2

Estimated ES 54.8321 83.7899 203.878 892.355 4006.29 18232.4

Relative error 0.06381 0.2062 0.07136 0.06701 0.06100 0.04304

β = 2, ρ = 0.05

Estimated ES 75.8177 95.6647 153.790 334.255 938.896 2867.00

Relative error 0.02580 0.03028 0.05590 0.02852 0.04177 0.02154

β = 1.5,ρ = 0.05

Estimated ES 179.384 249.374 572.885 2286.532 10194.5 46380.5

Relative error 0.05329 0.09100 0.1432 0.05691 0.07483 0.03651

5 CONCLUSIONS

The MCMC-based method for estimating tail-based

statistics, such as quantiles and conditional expecta-

tions, was shown. Comparing with other methods,

our method outperformed when estimating quantiles

in the parameter settings.

Our proposed method to estimate quantiles has

two distinctive features. First, the estimates are accu-

rate. The levelof quantile becomes higher,the relative

errors becomes smaller. This property is significant

because other methods such as standard Monte Carlo

have the opposite property. Second, the ease of use,

the algorithm does not require complicated analytical

calculations. We also calculated the case X

i

’s follow

Weibull and Log-normal distributions that results are

shown in the Appendix. Relative errors do not change

remarkably, or perform better for heavy and extreme

tails.

REFERENCES

Blanchet, J. H. and Liu, J. (2008). State-dependent impor-

tance sampling for regularly varying random walks.

Advances in Applied Probability, pages 1104–1128.

Dupuis, P., Leder, K., and Wang, H. (2007). Importance

sampling for sums of random variables with regularly

varying tails. ACM T. Model Comput. S., 17(3).

Embrechts, P., Furrer, H., and Kaufmann, R. (2003). Quan-

tifying regulatory capital for operational risk. Deriva-

tives Use, Trading & Regulation, 9(3):217–233.

MarkovChainMonteCarloforRiskMeasures

335

Fischer, M. J., Bevilacqua Masi, D. M., Gross, D., Shortle,

J., and Brill, P. H. (2001). Using quantile estimates in

simulating internet queues with pareto service times.

In Proceedings of the 33nd conference on Winter sim-

ulation, pages 477–485. IEEE Computer Society.

Gelman, A. and Rubin, D. B. (1992). Inference from iter-

ative simulation using multiple sequences. Statistical

science, pages 457–472.

Gross, D., Shortle, J. F., Fischer, M. J., and Masi, D. M. B.

(2002). Difficulties in simulating queues with pareto

service. In Simulation Conference, 2002. Proceedings

of the Winter, volume 1, pages 407–415. IEEE.

Gudmundsson, T. and Hult, H. (2012). Markov chain

monte carlo for computing rare-event probabilities for

a heavy-tailed random walk. arXiv preprint.

Hastings, W. K. (1970). Monte carlo sampling methods us-

ing markov chains and their applications. Biometrika,

57(1):97–109.

Hult, H. and Svensson, J. (2009). Efficient calculation

of risk measures by importance sampling–the heavy

tailed case. Preprint, KTH.

McNeil, A. J., Frey, R., and Embrechts, P. (2010). Quan-

titative risk management: concepts, techniques, and

tools. Princeton university press.

Metropolis, N., Rosenbluth, A. W., Rosenbluth, M. N.,

Teller, A. H., and Teller, E. (1953). Equation of state

calculations by fast computing machines. The journal

of chemical physics, 21:1087.

Rubino, G., Tuffin, B., et al. (2009). Rare event simulation

using Monte Carlo methods. Wiley Online Library.

Sees Jr, J. C. and Shortle, J. F. (2002). Simulating m/g/1

queues with heavy-tailed service. In Simulation Con-

ference, 2002. Proceedings of the Winter, volume 1,

pages 433–438. IEEE.

SIMULTECH2014-4thInternationalConferenceonSimulationandModelingMethodologies,Technologiesand

Applications

336

Table 6: Means and relative errors. Simulating quantiles and expected shortfalls of S

N

=

∑

N

i=1

X

i

where P(X

i

> x) = exp(x

−β

)

, P(N = n) = ρ(1−ρ)

(n−1)

.

β = 0.8,ρ = 0.2

Weibull/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated quantile 15.5716 20.1657 31.2012 46.3961 62.3213 75.3965

Relative error 0.01313 0.01381 0.01707 0.02813 0.06063 0.08026

β = 0.6,ρ = 0.2

Weibull/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated quantile 21.6363 29.11683 46.9427 73.2428 99.7388 125.817

Relative error 0.009764 0.01169 0.01553 0.02094 0.01930 0.03121

β = 0.4,ρ = 0.2

Weibull/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated quantile 49.2906 73.5994 140.271 264.780 437.100 670.432

Relative error 0.009739 0.009350 0.01088 0.005121 0.003460 0.003296

β = 0.2,ρ = 0.2

Weibull/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated quantile 1022.07 2344.92 9999.33 46018.6 149669.9 390358.1

Relative error 0.01131 0.007263 0.004710 0.001813 0.001379 0.0006157

β = 0.8,ρ = 0.2

Weibull/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated ES 21.7579 26.4005 37.2303 52.4836 67.0727 77.8810

Relative error 0.01005 0.01249 0.01726 0.02707 0.05173 0.07376

β = 0.6,ρ = 0.2

Weibull/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated ES 31.9828 39.4908 57.4457 83.9030 110.503 136.550

Relative error 0.008739 0.008870 0.01721 0.02071 0.01772 0.02988

β = 0.4,ρ = 0.2

Weibull/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated ES 88.0119 116.721 193.811 338.062 536.740 802.705

Relative error 0.008471 0.01070 0.01110 0.0060 0.004726 0.003834

β = 0.2,ρ = 0.2

Weibull/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated ES 5193.00 8860.84 25693.4 90051.7 252252.9 595906.0

Relative error 0.03858 0.02782 0.01659 0.01266 0.007925 0.007637

APPENDIX

MarkovChainMonteCarloforRiskMeasures

337

Table 7: Means and relative errors. Simulating quantiles and expected shortfalls of S

N

=

∑

N

i=1

X

i

where P(X

i

> x) =

R

x

0

1

zσ

√

2π

exp(−

(lnz)

2

2σ

2

)dz , P(N = n) = ρ(1−ρ)

(n−1)

.

σ = 1.5,ρ = 0.2

Log-normal/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated quantile 43.0787 61.9818 116.712 244.512 511.005 1043.09

Relative error 0.01081 0.01143 0.01144 0.003841 0.003958 0.001575

σ = 2.0,ρ = 0.2

Log-normal/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated quantile 94.6375 153.475 389.479 1271.15 3775.13 10203.1

Relative error 0.01229 0.007735 0.007387 0.003748 0.001743 0.0007950

σ = 2.5,ρ = 0.2

Log-normal/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated quantile 225.102 426.242 1495.87 7191.37 29060.8 101773.9

Relative error 0.01195 0.006451 0.005317 0.002870 0.001553 0.00064034

σ = 3.0,ρ = 0.2

Log-normal/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated quantile 563.363 1250.45 6032.53 41582.1 225487.2 1019519.9

Relative error 0.008669 0.008767 0.005775 0.002417 0.0008147 0.0004598

σ = 1.5,ρ = 0.2

Log-normal/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated ES 75.0995 99.0854 172.281 357.807 736.980 1469.82

Relative error 0.01496 0.01084 0.01375 0.009311 0.006701 0.007204

σ = 2.0,ρ = 0.2

Log-normal/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated ES 234.143 341.323 780.272 2344.29 6563.31 16763.9

Relative error 0.07241 0.02705 0.01559 0.01516 0.009385 0.01131

σ = 2.5,ρ = 0.2

Log-normal/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated ES 889.343 1428.11 4176.62 17149.0 62259.2 197743.9

Relative error 0.08343 0.05737 0.02405 0.01527 0.02784 0.02122

σ = 3.0,ρ = 0.2

Log-normal/Geometric 90%-quantile 95%-quantile 99%-quantile 99.9%-quantile 99.99%-quantile 99.999%-quantile

Estimated ES 3928.26 7097.71 25372.2 131697.1 611342.3 2391876.4

Relative error 0.1106 0.07361 0.1296 0.06921 0.043466 0.02005

SIMULTECH2014-4thInternationalConferenceonSimulationandModelingMethodologies,Technologiesand

Applications

338